End-to-end Multi-Object Tracking Algorithm Integrating Global Local Feature Interaction and Angular Momentum Mechanism

-

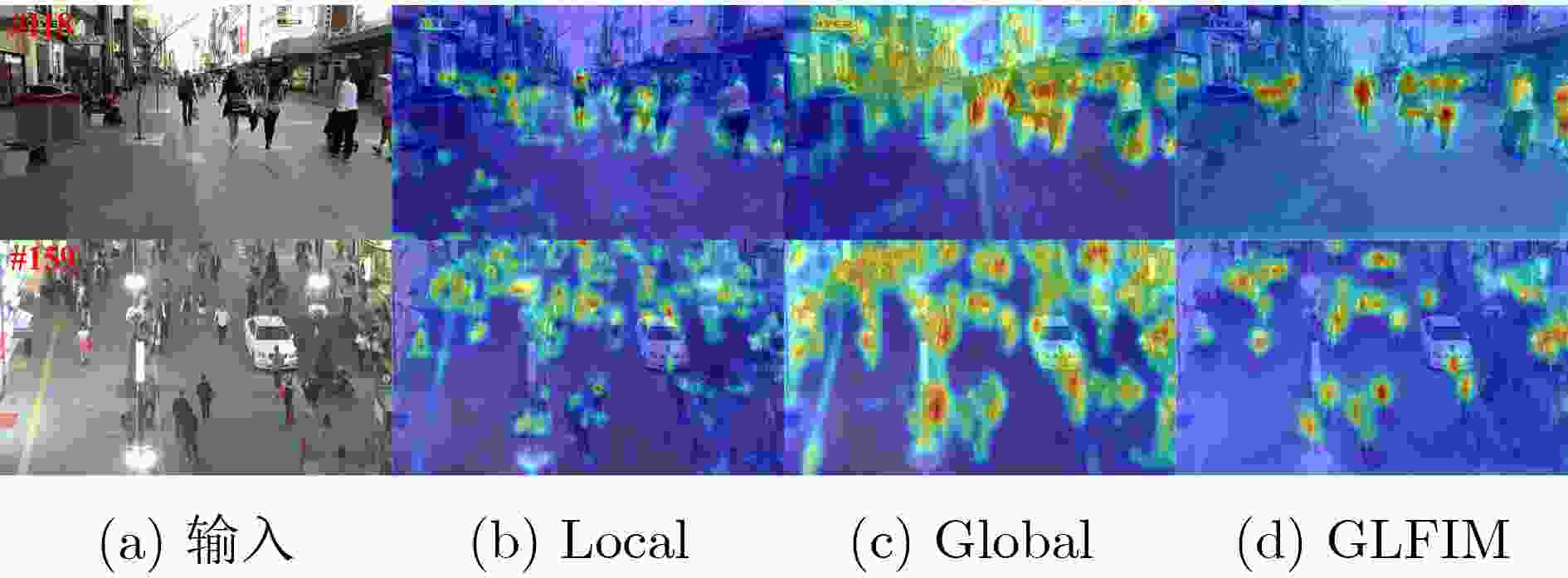

摘要: 针对多目标跟踪(MOT)算法性能对于检测准确度和数据关联策略的依赖性问题,该文提出一种新的端到端算法。在检测方面,首先基于特征金字塔网络,提出空间残差特征金字塔模块(SRFPN),以提升特征融合和信息传递的效率。随后,引入全局局部特征交互模块(GLFIM)来平衡局部细节和全局上下文信息,增强多尺度特征的专注度,提高模型对目标尺度变化的适应性。在关联方面,引入角动量机制(AMM),充分考虑目标运动方向,以提升连续帧之间目标匹配的精确性。在MOT17和UAVDT数据集上进行实验验证,所提跟踪器的检测性能和关联性能均显著提升,并且在目标遮挡、尺度变化和杂乱背景等复杂场景下表现出良好的鲁棒性。Abstract: A novel end-to-end algorithm is proposed to tackle the dependency of Multi-Object Tracking (MOT) algorithm performance on detection accuracy and data association strategies. Concerning detection, the Spatial Residual Feature Pyramid Network (SRFPN) is introduced based on feature pyramid networks to enhance feature fusion and information propagation efficiency. Subsequently, a Global Local Feature Interaction Module (GLFIM) is introduced to balance local details and global contextual information, thereby improving the focus of multi-scale feature outputs and the model’s adaptability to target scale variations. Regarding the association, an Angular Momentum Mechanism (AMM) is introduced to consider target motion direction, thereby enhancing the accuracy of target matching between consecutive frames. Experimental validation on MOT17 and UAVDT datasets demonstrates significant enhancements in both detection and association performance of the proposed tracker, showcasing robustness in complex scenarios such as target occlusion, scale variation, and cluttered backgrounds.

-

表 1 MOT17数据集上的实验结果对比

算法 MOTA↑(%) IDF1↑(%) MOTP↑ MT↑(%) ML↓(%) IDs↓ FN↓ FP↓ Hz↑ MPNTrack[19] 58.8 61.7 – 28.8 33.5 1 185 213 594 17 413 6.5 CRF-RNN[20] 53.1 53.7 76.1 24.2 30.7 2 518 234 991 27 194 1.4 DSORT[5] 60.3 61.2 79.1 31.5 20.3 2 442 185 301 36 111 20.0 Tracktor++[21] 53.5 52.3 78.0 19.5 36.6 4 611 248 047 12 201 1.5 CSE-FF[22] 67.7 56.1 77.9 33.7 22.8 5 706 – – 30.0 CTracker[11] 66.6 57.4 78.2 32.2 24.2 5 529 160 491 22 284 34.4 本文 68.1 58.7 78.6 34.0 21.2 5 535 159 474 15 104 29.8 注:粗体为每列最优,斜体为每列次优 表 2 UAVDT数据集上的实验结果对比

算法 MOTA↑(%) IDF1↑(%) MOTP↑ MT↑(%) ML↓(%) IDs↓ FN↓ FP↓ IOUT[23] 36.6 23.7 72.1 534 357 9 938 163 881 42 245 SORT[3] 39 43.7 74.3 484 400 2 350 172 628 33 037 DAN[4] 41.6 29.7 72.5 648 367 12 902 – – ByteTrack[24] 39.1 44.7 74.3 – – 2 341 – – CTracker[11] 40.3 49.2 78.3 296 449 4 280 152 568 50 292 本文 41.4 50.3 78.4 301 441 3 841 154 938 44 330 表 3 SRFPN不同感受野扩展方法的性能对比

表 4 不同算法在上下文信息利用上的性能对比

表 5 MOT17数据集上的消融实验效果

算法模块 评价指标 SRFPN GLFIM AMM MOTA↑(%) IDF1↑(%) MOTP↑ MT↑(%) ML↓(%) IDs↓ FN↓ FP↓ Hz↑ 66.6 57.4 78.2 32.2 24.2 5 529 160 491 22 284 34.4 √ 67.5 57.2 78.7 33.9 20.7 5 489 152 579 22 169 31.7 √ 67.1 58.3 78.3 33.3 21.5 5 360 154 915 15 118 32.9 √ 66.6 57.7 78.1 32.5 24.0 5 093 160 497 22 298 33.5 √ √ 67.3 58.3 78.5 33.6 21.5 5 499 154 426 21 452 30.9 √ √ 67.1 58.5 78.4 33.5 21.5 5 365 154 936 21 610 32.4 √ √ 67.3 57.8 78.5 33.7 21.5 5 415 153 268 23 473 30.6 √ √ √ 68.1 58.7 78.6 34.0 21.2 5 535 159 474 15 104 29.8 表 6 GLFIM引入位置消融实验结果

层级 MOTA↑(%) IDF1↑(%) Para↓ Hz↑ Baseline 66.6 57.4 43.6 34.4 P7 66.8 57.6 44.0 34.1 P7,P6 66.8 57.9 44.4 33.9 P7,P6,P5 67.2 57.0 44.9 33.6 P7,P6,P5,P4 67.0 57.8 45.3 33.4 All(本文) 67.1 58.3 45.7 32.9 -

[1] 张红颖, 贺鹏艺. 基于卷积注意力模块和无锚框检测网络的行人跟踪算法[J]. 电子与信息学报, 2022, 44(9): 3299–3307. doi: 10.11999/JEIT210634.ZHANG Hongying and HE Pengyi. Pedestrian tracking algorithm based on convolutional block attention module and anchor-free detection network[J]. Journal of Electronics & Information Technology, 2022, 44(9): 3299–3307. doi: 10.11999/JEIT210634. [2] 伍瀚, 聂佳浩, 张照娓, 等. 基于深度学习的视觉多目标跟踪研究综述[J]. 计算机科学, 2023, 50(4): 77–87. doi: 10.11896/jsjkx.220300173.WU Han, NIE Jiahao, ZHANG Zhaowei, et al. Deep learning-based visual multiple object tracking: A review[J]. Computer Science, 2023, 50(4): 77–87. doi: 10.11896/jsjkx.220300173. [3] BEWLEY A, GE Zongyuan, OTT L, et al. Simple online and realtime tracking[C]. 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, USA, 2016: 3464–3468. doi: 10.1109/ICIP.2016.7533003. [4] SUN Shijie, AKHTAR N, SONG Huansheng, et al. Deep affinity network for multiple object tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(1): 104–119. doi: 10.1109/TPAMI.2019.2929520. [5] WOJKE N, BEWLEY A, and PAULUS D. Simple online and realtime tracking with a deep association metric[C]. 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 2017: 3645–3649. doi: 10.1109/ICIP.2017.8296962. [6] WANG Zhongdao, ZHENG Liang, LIU Yixuan, et al. Towards real-time multi-object tracking[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 107–122. doi: 10.1007/978-3-030-58621-8_7. [7] ZHANG Yifu, WANG Chunyu, WANG Xinggang, et al. FairMOT: On the fairness of detection and re-identification in multiple object tracking[J]. International Journal of Computer Vision, 2021, 129(11): 3069–3087. doi: 10.1007/s11263-021-01513-4. [8] YU En, LI Zhuoling, HAN Shoudong, et al. RelationTrack: Relation-aware multiple object tracking with decoupled representation[J]. IEEE Transactions on Multimedia, 2023, 25: 2686–2697. doi: 10.1109/TMM.2022.3150169. [9] CHU Peng, WANG Jiang, YOU Quanzeng, et al. TransMOT: Spatial-temporal graph transformer for multiple object tracking[C]. Proceedings of 2023 IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2023: 4859–4869. doi: 10.1109/WACV56688.2023.00485. [10] XU Yihong, BAN Yutong, DELORME G, et al. TransCenter: Transformers with dense representations for multiple-object tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(6): 7820–7835. doi: 10.1109/TPAMI.2022.3225078. [11] PENG Jinlong, WANG Changan, WAN Fangbin, et al. Chained-tracker: Chaining paired attentive regression results for end-to-end joint multiple-object detection and tracking[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 145–161. doi: 10.1007/978-3-030-58548-8_9. [12] PANG Bo, LI Yizhuo, ZHANG Yifan, et al. TubeTK: Adopting tubes to track multi-object in a one-step training model[C]. Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 6307–6317. doi: 10.1109/CVPR42600.2020.00634. [13] ZHANG Chuang, ZHENG Sifa, WU Haoran, et al. AttentionTrack: Multiple object tracking in traffic scenarios using features attention[J]. IEEE Transactions on Intelligent Transportation Systems, 2024, 25(2): 1661–1674. doi: 10.1109/TITS.2023.3315222. [14] OGAWA T, SHIBATA T, and HOSOI T. FRoG-MOT: Fast and robust generic multiple-object tracking by IoU and motion-state associations[C]. 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, USA, 2024: 6549–6558. doi: 10.1109/WACV57701.2024.00643. [15] HUANG Huimin, XIE Shiao, LIN Lanfen, et al. ScaleFormer: Revisiting the transformer-based backbones from a scale-wise perspective for medical image segmentation[C]. Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 2022: 964–971. doi: 10.24963/ijcai.2022/135. [16] MILAN A, LEAL-TAIXÉ L, REID I, et al. MOT16: A benchmark for multi-object tracking[EB/OL]. https://arxiv.org/abs/1603.00831, 2016. [17] DU Dawei, QI Yuankai, YU Hongyang, et al. The unmanned aerial vehicle benchmark: Object detection and tracking[C]. Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 2018: 375–391. doi: 10.1007/978-3-030-01249-6_23. [18] LUO Yutong, ZHONG Xinyue, ZENG Minchen, et al. CGLF-Net: Image emotion recognition network by combining global self-attention features and local multiscale features[J]. IEEE Transactions on Multimedia, 2024, 26: 1894–1908. doi: 10.1109/TMM.2023.3289762. [19] BRASÓ G and LEAL-TAIXÉ L. Learning a neural solver for multiple object tracking[C]. Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 6246–6256. doi: 10.1109/CVPR42600.2020.00628. [20] XIANG Jun, XU Guohan, MA Chao, et al. End-to-end learning deep CRF models for multi-object tracking deep CRF models[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2021, 31(1): 275–288. doi: 10.1109/TCSVT.2020.2975842. [21] BERGMANN P, MEINHARDT T, and LEAL-TAIXÉ L. Tracking without bells and whistles[C]. Proceedings of 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 941–951. doi: 10.1109/ICCV.2019.00103. [22] ZHOU Yan, CHEN Junyu, WANG Dongli, et al. Multi-object tracking using context-sensitive enhancement via feature fusion[J]. Multimedia Tools and Applications, 2024, 83(7): 19465–19484. doi: 10.1007/s11042-023-16027-z. [23] BOCHINSKI E, EISELEIN V, and SIKORA T. High-speed tracking-by-detection without using image information[C]. 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 2017: 1–6. doi: 10.1109/AVSS.2017.8078516. [24] ZHANG Yifu, SUN Peize, JIANG Yi, et al. ByteTrack: Multi-object tracking by associating every detection box[C]. 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 1–21. doi: 10.1007/978-3-031-20047-2_1. [25] LIU Songtao, HUANG Di, and WANG Yunhong. Receptive field block net for accurate and fast object detection[C]. Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 2018: 404–419. doi: 10.1007/978-3-030-01252-6_24. [26] PAN Huihui, HONG Yuanduo, SUN Weichao, et al. Deep dual-resolution networks for real-time and accurate semantic segmentation of traffic scenes[J]. IEEE Transactions on Intelligent Transportation Systems, 2023, 24(3): 3448–3460. doi: 10.1109/TITS.2022.3228042. [27] FU Jun, LIU Jing, TIAN Haijie, et al. Dual attention network for scene segmentation[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 3141–3149. doi: 10.1109/CVPR.2019.00326. [28] XIE Yakun, ZHU Jun, LAI Jianbo, et al. An enhanced relation-aware global-local attention network for escaping human detection in indoor smoke scenarios[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 186: 140–156. doi: 10.1016/j.isprsjprs.2022.02.006. -

下载:

下载:

下载:

下载: