Remote Sensing Semi-supervised Feature Extraction Framework And Lightweight Method Integrated With Distribution-aligned Sampling


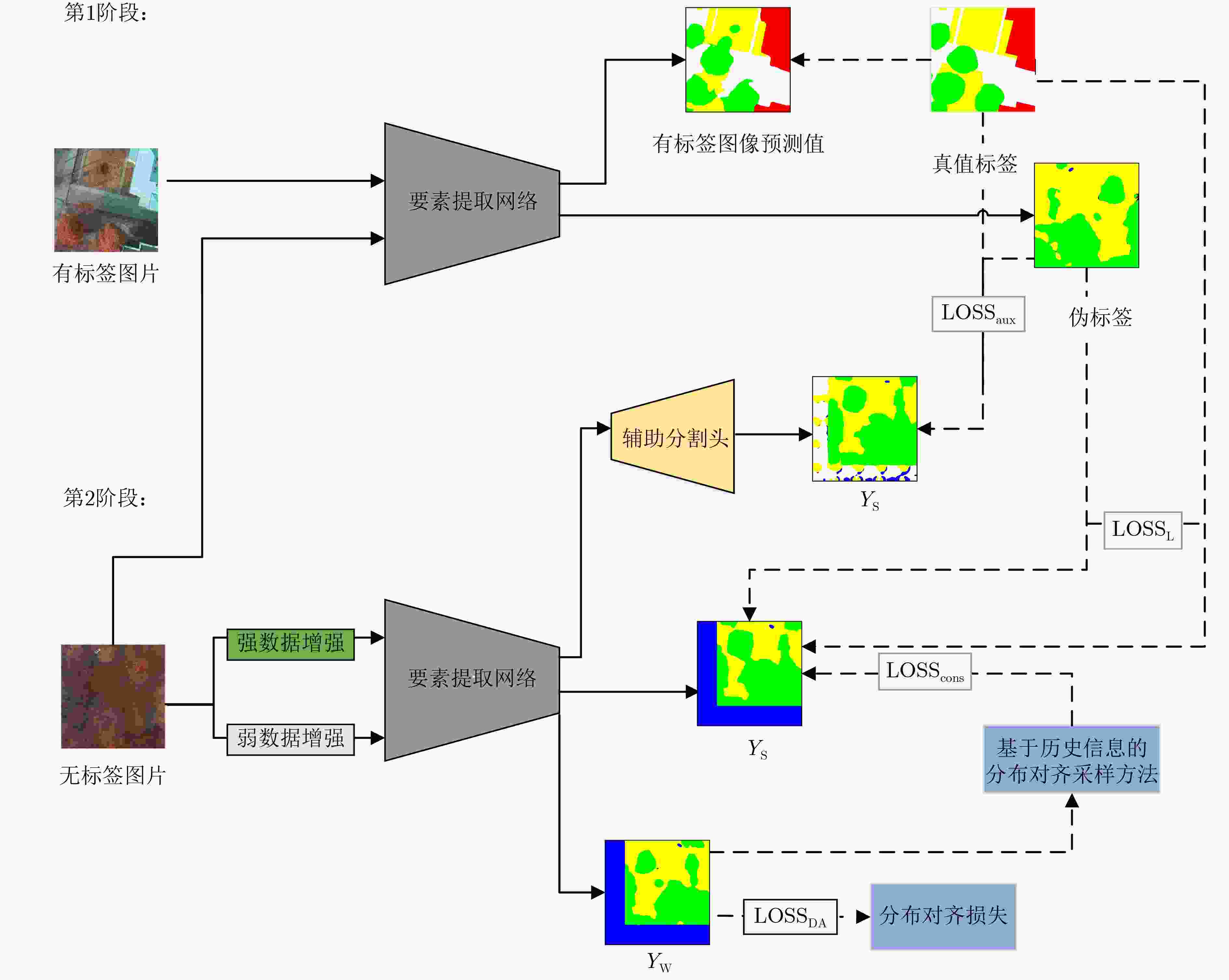

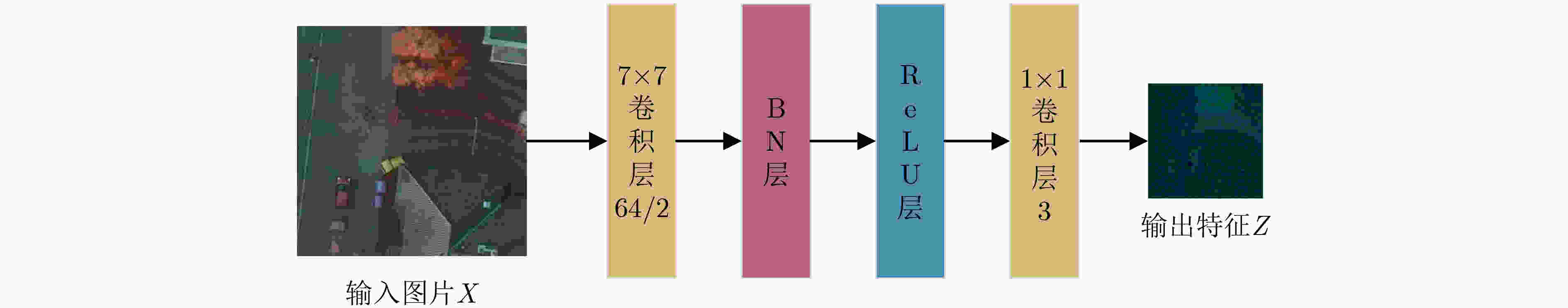

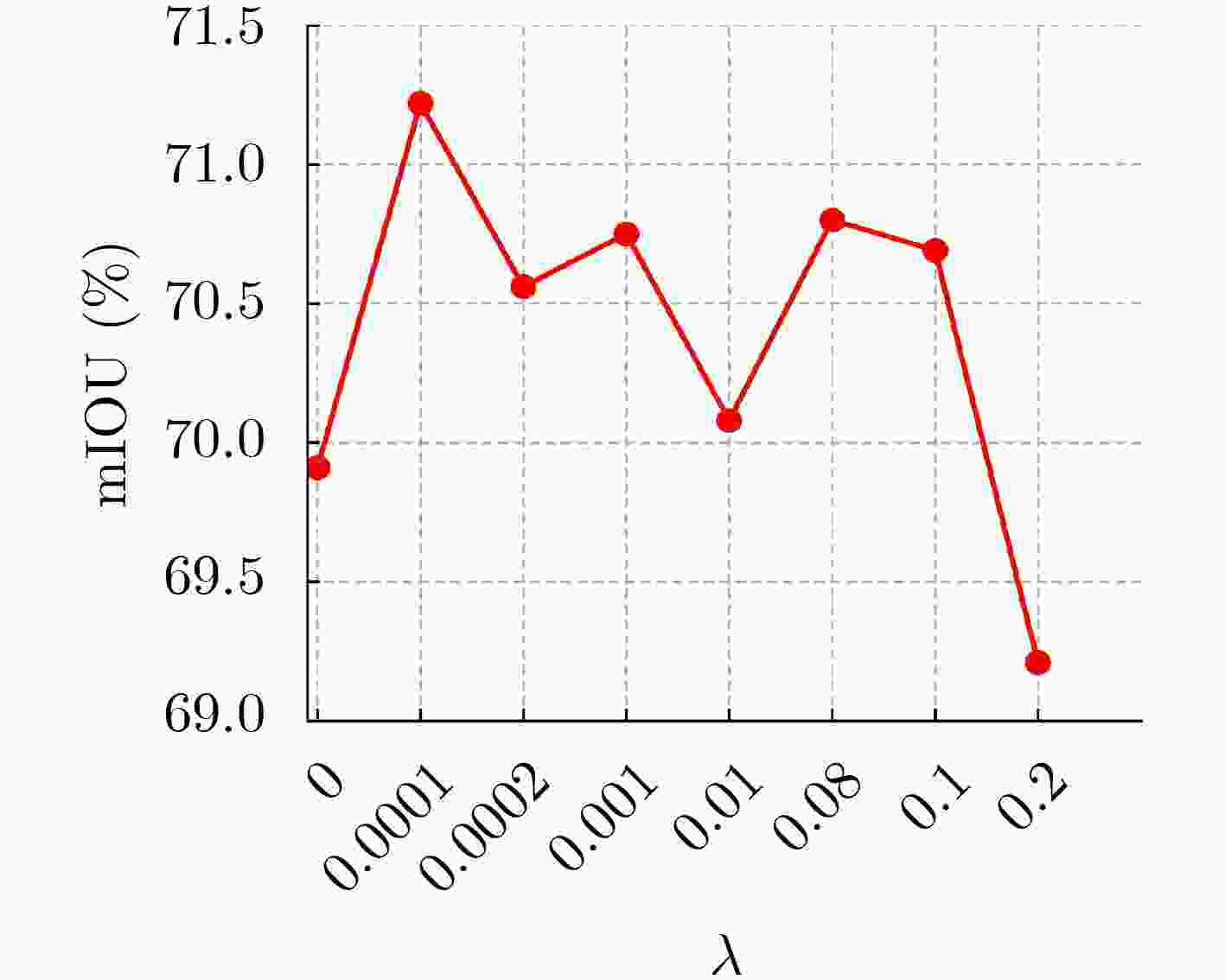

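Abstract: In recent years, semi-supervised element extraction from remote sensing images has been widely studied. In this task, unlabeled data assist training on a small amount of labeled data. Most work adopts self-training or consistency regularization to improve extraction performance. However, the imbalanced class distribution of the data still leaves large accuracy differences between categories.

This paper proposes a remote sensing semi-supervised element extraction framework with distribution-aligned sampling (FIDAS). It uses the class distribution obtained from historical data to adjust the training difficulty of each category while guiding the model to learn the true data distribution. Specifically, each category is sampled using the historical distribution information. This increases the probability that hard categories pass the threshold, so the model sees more of their features. Second, a distribution alignment loss is designed to better align the class distribution learned by the model with the true class distribution, which improves robustness. In addition, an image feature block adaptive aggregation network is proposed to reduce the computational cost introduced by the Transformer model. It aggregates redundant input image features and speeds up training.

In experiments on the Potsdam remote sensing element extraction dataset under the 1/32 semi-supervised data ratio, the method improves mean Intersection over Union (mIoU) by 4.64 percentage points over leading international methods. It also shortens training time by about 30% while largely maintaining extraction accuracy. These results verify its efficiency and performance advantages for semi-supervised remote sensing element extraction.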
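The abstract does not spell out the sampling rule itself, so the following is only a hedged illustration. It assumes two things, neither confirmed by the source:

- The "historical class distribution" is an exponential moving average (EMA) of pseudo-label class frequencies.
- Pixels whose confidence falls below the threshold are still kept with a class-dependent probability that grows as the class becomes rarer.

The functions `update_class_history`, `rescue_probabilities` and `distribution_aligned_mask` and the parameters `momentum`, `tau` and `tau_low` are hypothetical names, not the FIDAS implementation.

```python
import random
from typing import List, Optional, Sequence


def update_class_history(history: Sequence[float], pseudo_labels: Sequence[int],
                         momentum: float = 0.99) -> List[float]:
    """EMA of per-class pseudo-label frequencies (assumed 'historical distribution')."""
    counts = [0] * len(history)
    for c in pseudo_labels:
        counts[c] += 1
    n = max(len(pseudo_labels), 1)
    return [momentum * h + (1.0 - momentum) * k / n for h, k in zip(history, counts)]


def rescue_probabilities(history: Sequence[float]) -> List[float]:
    """Chance that a below-threshold pixel of each class is still kept.

    The most frequent class gets 0; rarer (harder) classes get more.
    """
    p_max = max(history)
    return [1.0 - p / p_max for p in history]


def distribution_aligned_mask(confidences: Sequence[float], pseudo_labels: Sequence[int],
                              history: Sequence[float], tau: float = 0.95,
                              tau_low: float = 0.5,
                              rng: Optional[random.Random] = None) -> List[bool]:
    """Decide which pseudo-labelled pixels contribute to the unsupervised loss."""
    if rng is None:
        rng = random.Random(0)  # fixed seed so the sketch is reproducible
    rescue = rescue_probabilities(history)
    mask = []
    for conf, c in zip(confidences, pseudo_labels):
        if conf >= tau:
            mask.append(True)                      # confident: always kept
        elif conf >= tau_low:
            mask.append(rng.random() < rescue[c])  # sampled: rarer classes pass more often
        else:
            mask.append(False)                     # too uncertain: always dropped
    return mask


# Hypothetical class frequencies for the six Potsdam classes.
history = [0.30, 0.25, 0.20, 0.15, 0.05, 0.05]
print(rescue_probabilities(history))
print(distribution_aligned_mask([0.99, 0.80, 0.80, 0.30], [0, 0, 4, 4], history))
```

The design choice here is that the threshold still governs the frequent classes, while rare classes get a stochastic "second chance". This is one way to read "increasing the probability that hard categories pass the threshold".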


Keywords:

- Semi-supervised learning

- Dense element extraction from remote sensing images

- Distribution-aligned sampling

- Lightweight

Abstract: In recent years, semi-supervised element extraction in remote sensing has been widely explored. In this task, unlabeled data assist training with a small amount of labeled data. Most existing approaches adopt self-training or consistency regularization to improve extraction performance. However, the imbalanced class distribution of the data still causes large accuracy gaps between categories.

This paper therefore proposes a remote sensing semi-supervised Feature extraction framework Integrated with Distribution-Aligned Sampling (FIDAS). By leveraging the class distribution of historical data, the framework adjusts the training difficulty of each category while guiding the model to learn the true data distribution. Specifically, each category is sampled according to the historical distribution. This raises the probability that samples of difficult categories pass the threshold and lets the model capture more of their features. Furthermore, a distribution alignment loss is designed to align the category distribution learned by the model with the true category distribution, which enhances model robustness. Additionally, an image feature block adaptive aggregation network is proposed to reduce the computational overhead introduced by the Transformer model. It aggregates redundant input image features to accelerate training.

Experiments are conducted on the Potsdam remote sensing element extraction dataset. Under the 1/32 semi-supervised data ratio, the proposed approach improves mean Intersection over Union (mIoU) by 4.64 percentage points over state-of-the-art methods. Moreover, the lightweight variant reduces training time by approximately 30% while largely maintaining extraction accuracy. These results demonstrate the effectiveness and performance advantages of the proposed method for semi-supervised remote sensing element extraction.
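The exact form of the distribution alignment loss is not stated in the abstract. One plausible form, assumed here purely for illustration, is the Kullback-Leibler divergence KL(target || predicted). The target is the historical class distribution, and the prediction is the model's mean softmax output over a batch of pixels. The helper names are hypothetical. The sketch is in plain Python; a training implementation would compute the same quantity on tensors so that gradients can flow through it.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one pixel's class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_predicted_distribution(pixel_logits: Sequence[Sequence[float]]) -> List[float]:
    """Average the per-pixel softmax outputs into a single class distribution."""
    probs = [softmax(logits) for logits in pixel_logits]
    num_classes = len(probs[0])
    return [sum(p[c] for p in probs) / len(probs) for c in range(num_classes)]


def distribution_alignment_loss(target: Sequence[float], predicted: Sequence[float],
                                eps: float = 1e-8) -> float:
    """KL(target || predicted); zero when the two distributions coincide.

    Terms with target probability 0 are skipped (0 * log 0 is taken as 0).
    """
    return sum(t * math.log((t + eps) / (q + eps))
               for t, q in zip(target, predicted) if t > 0)


target = [0.30, 0.25, 0.20, 0.15, 0.05, 0.05]   # hypothetical historical distribution
logits = [[2.0, 1.0, 0.5, 0.0, -1.0, -1.0],      # two hypothetical pixels
          [0.5, 2.0, 0.0, 0.5, -0.5, -1.0]]
predicted = mean_predicted_distribution(logits)
print(distribution_alignment_loss(target, predicted))
```

Because this loss vanishes only when the batch-level prediction matches the target, minimizing it nudges the model's average class proportions toward the historical ones rather than toward the dominant classes.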

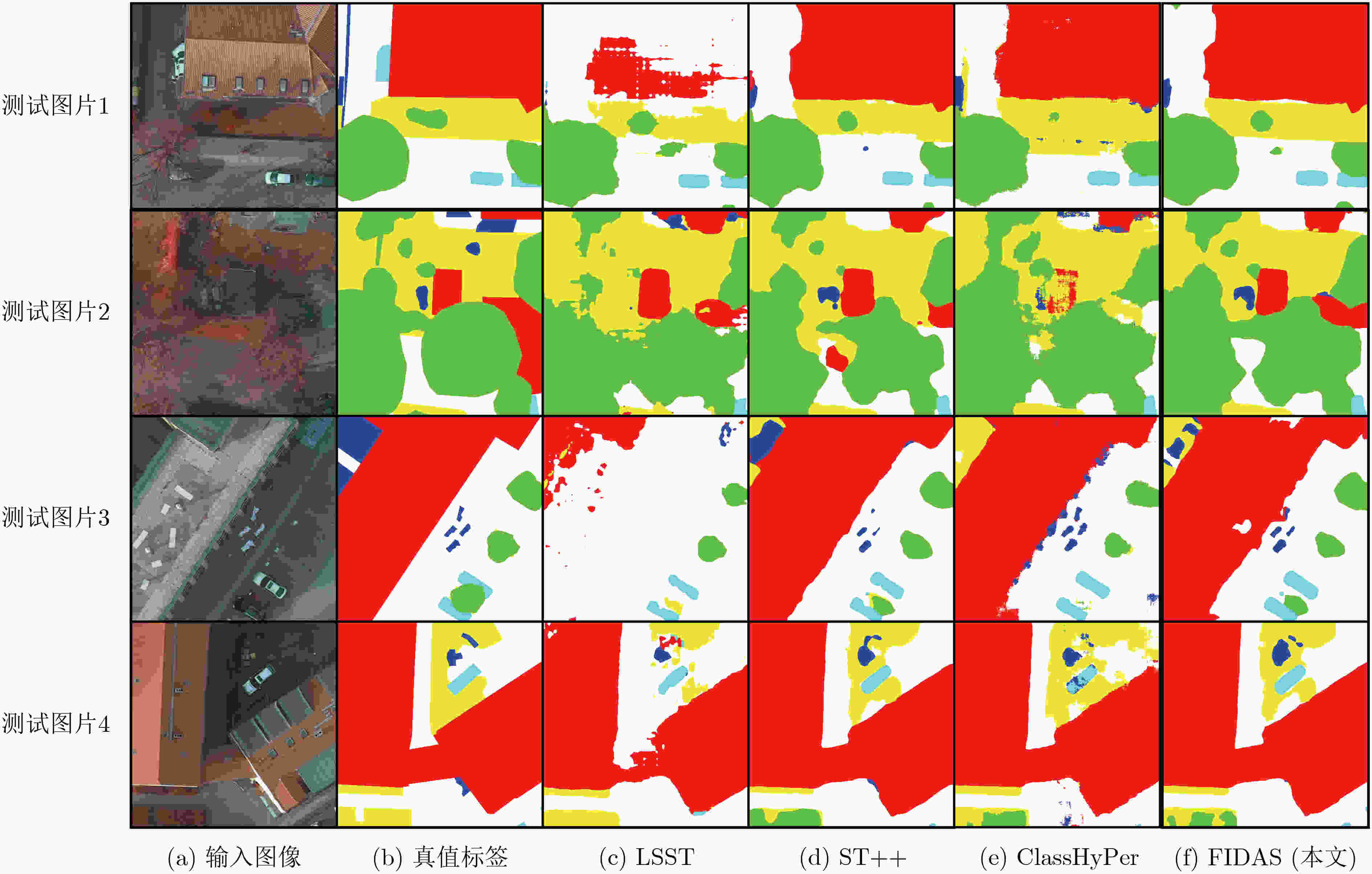

Table 1 Experimental results on the Potsdam dataset under the 1/32 setting

| Method | OA (%) | mIoU (%) | mF1 (%) | Time (s) | IoU (%): Impervious surfaces | IoU (%): Building | IoU (%): Low vegetation | IoU (%): Tree | IoU (%): Car | IoU (%): Background |
|---|---|---|---|---|---|---|---|---|---|---|
| ST++ | 83.92 | 64.63 | 74.46 | – | 77.02 | 81.57 | 69.10 | 69.87 | 81.42 | 8.77 |
| LSST | 80.57 | 60.82 | 74.15 | – | 71.00 | 77.53 | 62.88 | 64.46 | 62.43 | 26.62 |
| ClassHyPer | 83.62 | 66.58 | 78.23 | – | 76.06 | 82.85 | 67.25 | 70.49 | 75.00 | 27.84 |
| FIDAS (ours) | 86.61 | 71.22 | 81.67 | 10716 | 80.75 | 87.93 | 71.12 | 73.55 | 80.64 | 33.31 |
| FIDAS (lightweight) | 85.06 | 68.70 | 79.88 | 7242 | 78.78 | 85.39 | 68.66 | 72.00 | 76.38 | 30.96 |

Table 2 Experimental results on the Potsdam dataset under the 1/16, 1/8 and 1/4 settings (%)

| Ratio | Method | OA | mIoU | mF1 |
|---|---|---|---|---|
| 1/16 | ST++ | 85.18 | 66.76 | 77.24 |
| 1/16 | LSST | 81.97 | 62.16 | 75.00 |
| 1/16 | ClassHyPer | 85.48 | 68.90 | 80.05 |
| 1/16 | FIDAS (ours) | 86.95 | 71.97 | 82.52 |
| 1/8 | ST++ | 83.96 | 67.10 | 78.24 |
| 1/8 | LSST | 82.35 | 64.00 | 76.85 |
| 1/8 | ClassHyPer | 85.93 | 69.41 | 80.27 |
| 1/8 | FIDAS (ours) | 87.51 | 73.06 | 83.29 |
| 1/4 | ST++ | 85.55 | 67.99 | 78.18 |
| 1/4 | LSST | 83.24 | 64.83 | 77.52 |
| 1/4 | ClassHyPer | 86.26 | 69.89 | 80.65 |
| 1/4 | FIDAS (ours) | 88.10 | 73.79 | 83.83 |

Table 3 Comparison of lightweight-model metrics

| Method | OA (%) | mIoU (%) | mF1 (%) | Time (s) | FLOPs | Parameters |
|---|---|---|---|---|---|---|
| FIDAS (ours) | 86.61 | 71.22 | 81.67 | 10716 | $75.5\times10^{11}$ | 59.79M |
| FIDAS (lightweight) | 85.06 | 68.70 | 79.88 | 7242 | $19.4\times10^{11}$ | 59.80M |

Table 4 Ablation of distribution-aligned sampling and the alignment loss on the Potsdam dataset under the 1/32 setting (%)

| Exp. | Distribution-aligned sampling | Alignment loss | Auxiliary head | OA | mIoU | mF1 |
|---|---|---|---|---|---|---|
| 1 | × | × | √ | 84.39 | 67.72 | 78.89 |
| 2 | √ | × | √ | 86.28 | 69.91 | 80.44 |
| 3 | √ | √ | √ | 86.61 | 71.22 | 81.67 |

Table 5 Ablation of the image feature block adaptive aggregation network on the Potsdam dataset under the 1/32 setting (%)

| Exp. | Batch normalization (BN) layer | Max pooling layer | ReLU | mIoU |
|---|---|---|---|---|
| 1 | √ | √ | √ | 67.48 |
| 2 | √ | × | √ | 68.51 |
| 3 | × | × | √ | 68.70 |
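The summary figures in the tables can be cross-checked with a few lines of arithmetic:

- mIoU is the unweighted mean of the six per-class IoUs in Table 1.
- The 4.64 gain is the difference between the FIDAS and ClassHyPer mIoU values, ClassHyPer being the strongest baseline in Table 1.
- The time and FLOPs savings follow from Table 3.

All numbers below are copied from the tables; the variable names are illustrative only.

```python
# Per-class IoU (%) from Table 1 (Potsdam, 1/32 setting), in the order:
# impervious surfaces, building, low vegetation, tree, car, background.
per_class_iou = {
    "FIDAS": [80.75, 87.93, 71.12, 73.55, 80.64, 33.31],
    "FIDAS (lightweight)": [78.78, 85.39, 68.66, 72.00, 76.38, 30.96],
    "ClassHyPer": [76.06, 82.85, 67.25, 70.49, 75.00, 27.84],
}
# Reported mIoU (%) from the same table.
reported_miou = {"FIDAS": 71.22, "FIDAS (lightweight)": 68.70, "ClassHyPer": 66.58}


def mean_iou(ious):
    """mIoU is the unweighted mean of the per-class IoU values."""
    return sum(ious) / len(ious)


for name, ious in per_class_iou.items():
    print(f"{name}: recomputed mIoU = {mean_iou(ious):.3f}, reported = {reported_miou[name]:.2f}")

# Gain of FIDAS over the strongest baseline in Table 1, in percentage points.
gain_vs_classhyper = round(reported_miou["FIDAS"] - reported_miou["ClassHyPer"], 2)

# Relative savings of the lightweight variant (Table 3).
time_reduction = 1 - 7242 / 10716   # training time, seconds
flops_reduction = 1 - 19.4 / 75.5   # FLOPs, both in units of 10^11

print(f"mIoU gain: {gain_vs_classhyper} points")
print(f"training time reduction: {time_reduction:.1%}")   # 32.4%
print(f"FLOPs reduction: {flops_reduction:.1%}")          # 74.3%
```

The recomputed mIoU values agree with the reported ones to within rounding. The training-time reduction of about 32.4% is consistent with the "approximately 30%" stated in the abstract. FLOPs fall by about 74% while the parameter count stays essentially unchanged (59.79M vs. 59.80M). That is what one would expect from aggregating input feature blocks rather than removing model weights.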

