EvolveNet: Adaptive Self-Supervised Continual Learning without Prior Knowledge


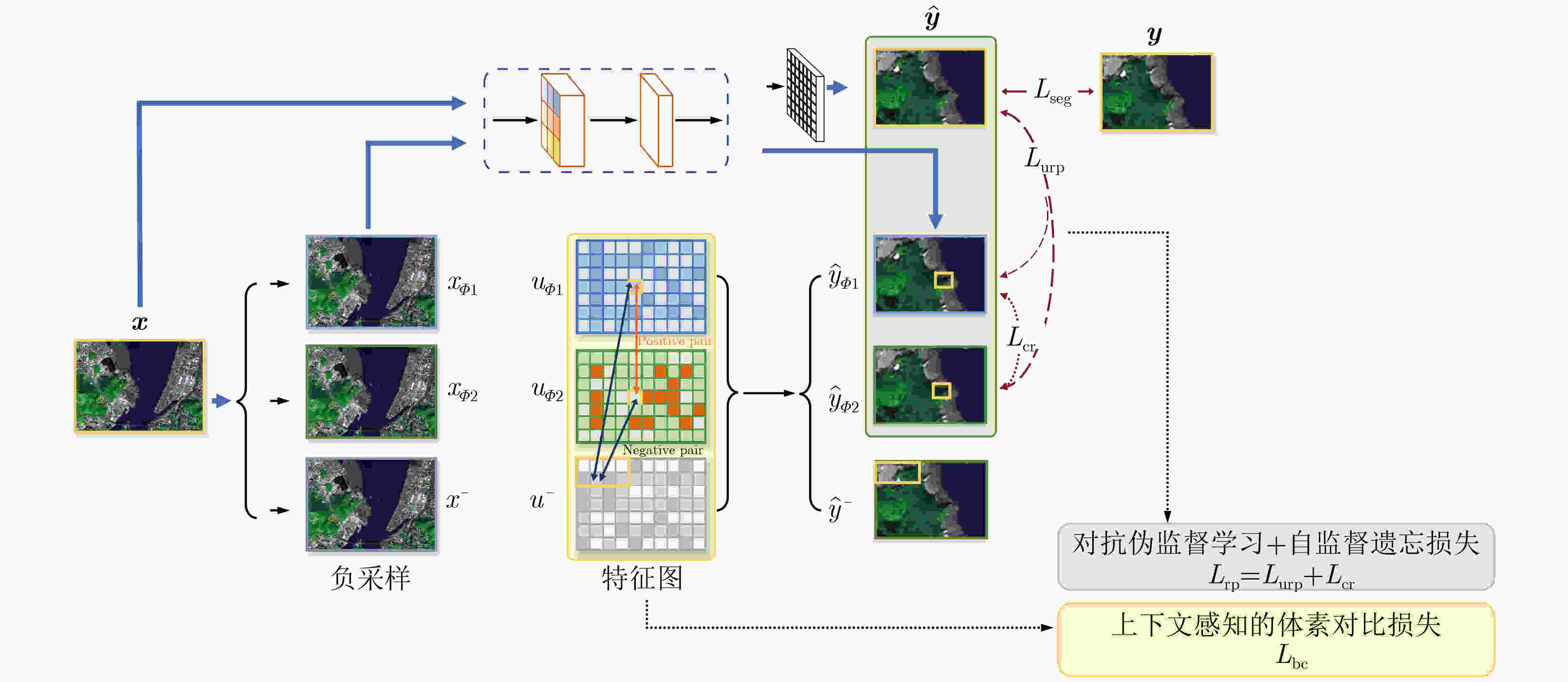

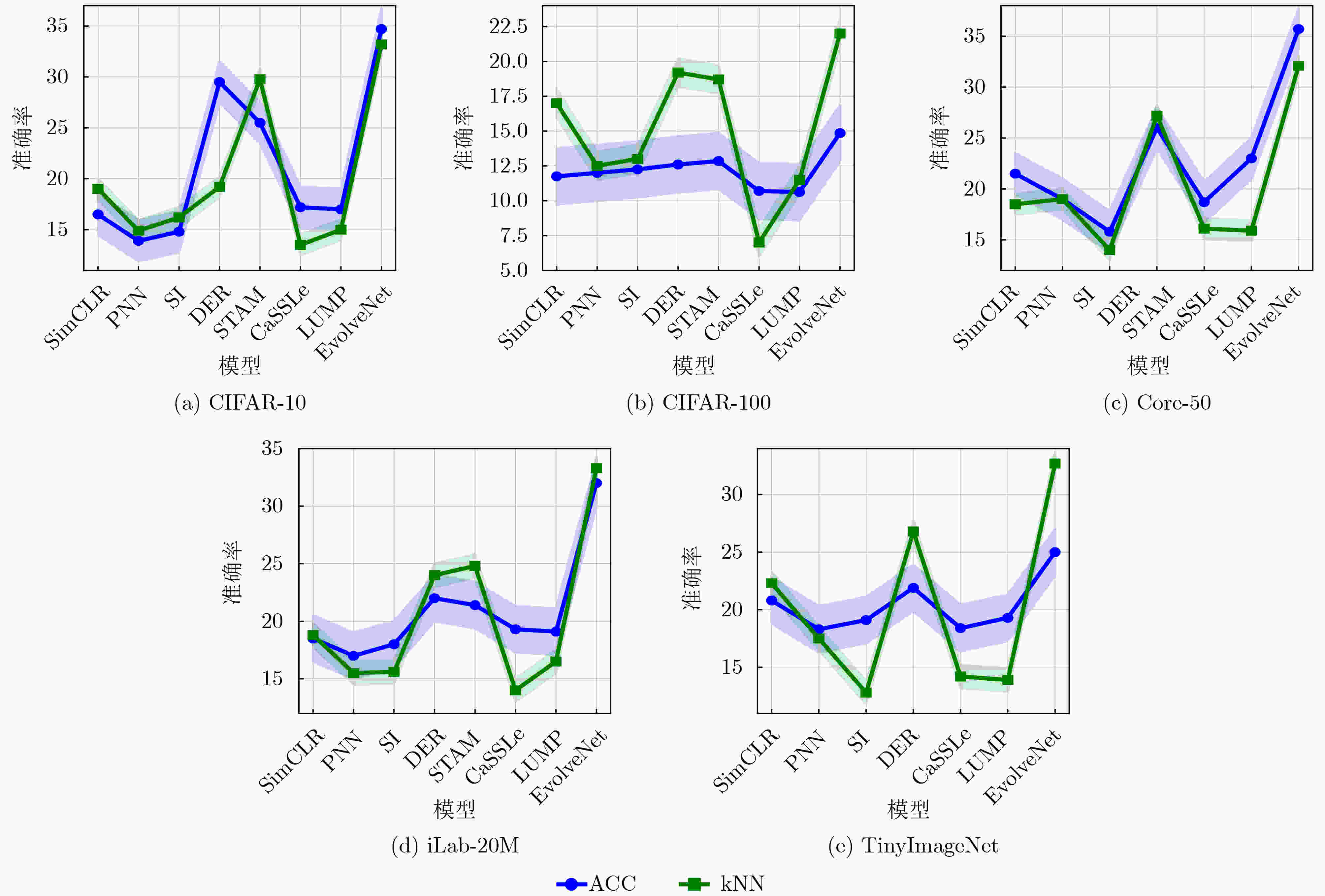

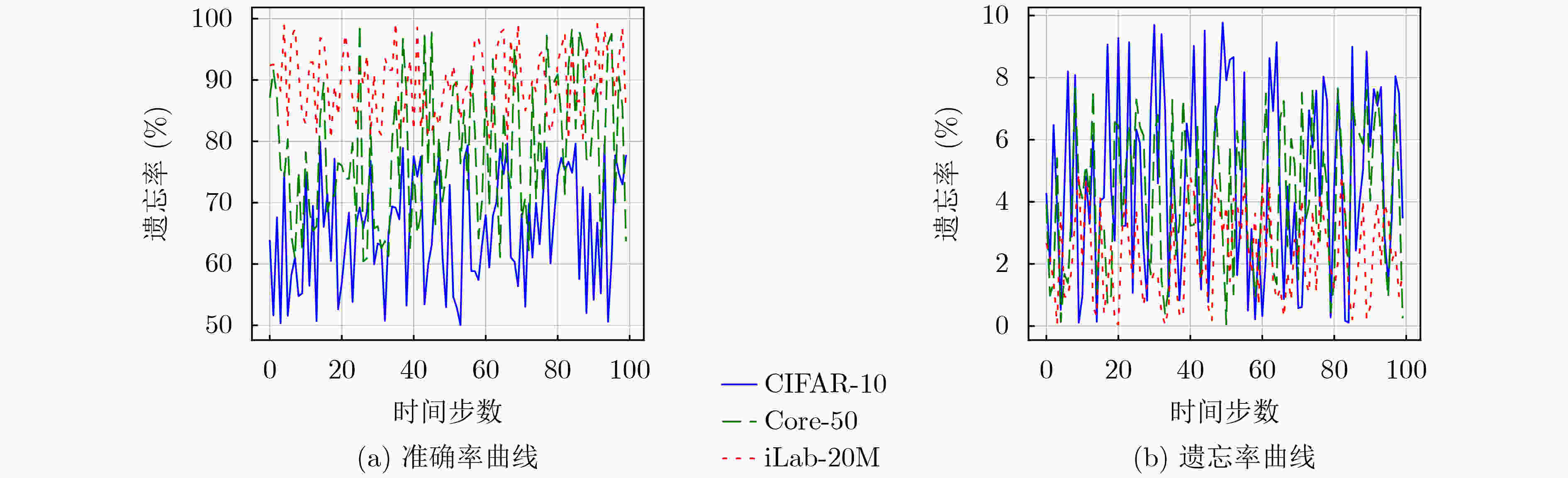

Abstract: Unsupervised Continual Learning (UCL) refers to the ability to learn over time while remembering previous patterns without supervision. Although significant progress has been made in this direction, existing works often assume strong prior knowledge about forthcoming data (e.g., knowing class boundaries), which may not be obtainable in complex and unpredictable open environments. Inspired by real-world scenarios, this paper proposes a more practical problem setting called online self-supervised continual learning without prior knowledge. The setting is challenging because the data are non-i.i.d. and come with neither external supervision nor prior knowledge. To address these challenges, a method called EvolveNet is introduced: an adaptive self-supervised continual learning approach without prior knowledge that extracts and memorizes representations purely from the data stream. EvolveNet is designed around three main components: an adversarial pseudo-supervised learning loss, a self-supervised forgetting loss, and an online memory update for uniform subset selection. The three components are designed to work together to maximize learning performance. Comprehensive experiments are conducted on five public datasets. The results show that EvolveNet outperforms existing algorithms in all settings, achieving significantly improved accuracy on CIFAR-10, CIFAR-100, and TinyImageNet, and performing best on Core-50 and iLab-20M, the multimodal datasets for incremental learning. Cross-dataset generalization experiments are also conducted and show that EvolveNet generalizes more robustly. Finally, the EvolveNet model and core code are open-sourced on GitHub to support progress in unsupervised continual learning and to provide a useful tool and platform for the research community.
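The abstract names an online memory update for uniform subset selection but does not give the update rule. As a minimal sketch only, the code below uses reservoir sampling (Algorithm R), a standard technique swapped in here for illustration, to keep a fixed-size buffer that is a uniform random subset of everything seen so far in a stream. The class name `ReservoirMemory` and its parameters are hypothetical and are not taken from the EvolveNet code.

```python
import random
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class ReservoirMemory(Generic[T]):
    """Fixed-size buffer holding a uniform random subset of a stream.

    Illustrative stand-in (reservoir sampling, Algorithm R); the actual
    EvolveNet memory update is not specified in the abstract.
    """

    def __init__(self, capacity: int, seed: Optional[int] = None) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items: List[T] = []
        self.seen = 0  # number of stream items observed so far
        self._rng = random.Random(seed)

    def update(self, item: T) -> None:
        """Observe one stream item and possibly store it."""
        self.seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always store the item.
            self.items.append(item)
            return
        # Buffer full: the new item survives with probability capacity / seen,
        # overwriting a uniformly chosen slot.
        j = self._rng.randrange(self.seen)
        if j < self.capacity:
            self.items[j] = item


# Usage: stream 1000 sample ids through a 5-slot memory.
memory = ReservoirMemory(capacity=5, seed=0)
for sample_id in range(1000):
    memory.update(sample_id)
print(len(memory.items), memory.seen)
```

No class labels or task boundaries are needed for this update, which is why a scheme of this kind fits a setting without prior knowledge; whether EvolveNet uses it or a different selection rule is not stated here.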


Table 1 Average final KNN accuracy on sequential data streams under different combinations of contrastive and forgetting losses
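The tables report KNN accuracy, a common way to score learned representations without training a classifier head. The sketch below shows one way such a score could be computed: frozen feature vectors are classified by majority vote among their k most cosine-similar training features. The function names, the choice of cosine similarity, and k = 3 are assumptions for illustration; the page does not state the evaluation protocol or how the average is taken.

```python
import math
from collections import Counter
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def knn_accuracy(
    train_feats: List[Sequence[float]],
    train_labels: List[int],
    test_feats: List[Sequence[float]],
    test_labels: List[int],
    k: int = 3,
) -> float:
    """Percentage of test features whose k-nearest-neighbour vote is correct."""
    correct = 0
    for feat, label in zip(test_feats, test_labels):
        # Rank all training features by similarity to the test feature.
        ranked = sorted(
            ((cosine_similarity(feat, t), y) for t, y in zip(train_feats, train_labels)),
            key=lambda pair: pair[0],
            reverse=True,
        )
        # Majority vote over the labels of the k most similar neighbours.
        votes = Counter(y for _, y in ranked[:k])
        predicted = votes.most_common(1)[0][0]
        correct += int(predicted == label)
    return 100.0 * correct / len(test_labels)


# Toy features standing in for encoder outputs (hypothetical data).
train_feats = [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9], [0.2, 0.8]]
train_labels = [0, 0, 0, 1, 1, 1]
test_feats = [[0.95, 0.05], [0.05, 0.95]]
test_labels = [0, 1]
print(knn_accuracy(train_feats, train_labels, test_feats, test_labels))
```

Labels are used only at evaluation time in this sketch; the encoder that produces the features would be trained without them.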

Table 2 Average KNN accuracy (%) on iLab-20M for sequential data streams under different loss function combinations

| Loss function combination | Average final KNN accuracy (%) |
|---|---|
| $L_{rp}$ | 85.2 |
| $L_{bc}$ | 82.6 |
| $L_{rp}$ + $L_{bc}$ | 89.5 |
| $L_{rp}$ + U | 87.3 |
| $L_{rp}$ + C | 88.1 |
| $L_{rp}$ + U + C | 91.2 |
| $L_{rp}$ + $L_{bc}$ + U | 90.4 |
| $L_{rp}$ + $L_{bc}$ + C | 91.0 |
| $L_{rp}$ + $L_{bc}$ + U + C | 92.7 |

Table 3 Cross-dataset generalization on Core-50 and iLab-20M

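| Model | Train iLab-20M ⇒ Test Core-50: Acc (↑) | Train iLab-20M ⇒ Test Core-50: $\Delta$ (%) (↓) | Train Core-50 ⇒ Test iLab-20M: Acc (↑) | Train Core-50 ⇒ Test iLab-20M: $\Delta$ (%) (↓) |
|---|---|---|---|---|
| SimCLR[17] | 53.5 | 17 | 49.3 | 26 |
| EvolveNet | 71.6 | 8 | 79.9 | 15 |

Table 4 Performance comparison of EvolveNet with continual learning algorithms that use class labels on CIFAR-10, Core-50, and iLab-20M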


[1] MEHTA S V, PATIL D, CHANDAR S, et al. An empirical investigation of the role of pre-training in lifelong learning[J]. The Journal of Machine Learning Research, 2023, 24(1): 214.
[2] BAKER M M, NEW A, AGUILAR-SIMON M, et al. A domain-agnostic approach for characterization of lifelong learning systems[J]. Neural Networks, 2023, 160: 274–296. doi: 10.1016/j.neunet.2023.01.007.
[3] PURUSHWALKAM S, MORGADO P, and GUPTA A. The challenges of continuous self-supervised learning[C]. The 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022: 702–721. doi: 10.1007/978-3-031-19809-0_40.
[4] GRAUMAN K, WESTBURY A, BYRNE E, et al. Ego4D: Around the world in 3,000 hours of egocentric video[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 18995–19012. doi: 10.1109/CVPR52688.2022.01842.
[5] DE LANGE M and TUYTELAARS T. Continual prototype evolution: Learning online from non-stationary data streams[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 8250–8259. doi: 10.1109/ICCV48922.2021.00814.
[6] VERWIMP E, YANG Kuo, PARISOT S, et al. Re-examining distillation for continual object detection[J]. arXiv: 2204.01407, 2022.
[7] SUN Yu, WANG Xiaolong, LIU Zhuang, et al. Test-time training with self-supervision for generalization under distribution shifts[C]. The 37th International Conference on Machine Learning, 2020: 9229–9248.
[8] CARION N, MASSA F, SYNNAEVE G, et al. End-to-end object detection with transformers[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 213–229. doi: 10.1007/978-3-030-58452-8_13.
[9] WANG Xin, HUANG T E, DARRELL T, et al. Frustratingly simple few-shot object detection[J]. arXiv: 2003.06957, 2020.
[10] WU Jiaxi, LIU Songtao, HUANG Di, et al. Multi-scale positive sample refinement for few-shot object detection[C]. The 16th European Conference on Computer Vision, Glasgow, UK, 2020: 456–472. doi: 10.1007/978-3-030-58517-4_27.
[11] BRAHMA D and RAI P. A probabilistic framework for lifelong test-time adaptation[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 3582–3591. doi: 10.1109/CVPR52729.2023.00349.
[12] YAN Xiaopeng, CHEN Ziliang, XU Anni, et al. Meta R-CNN: Towards general solver for instance-level low-shot learning[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 9577–9586. doi: 10.1109/ICCV.2019.00967.
[13] DENG Jia, DONG Wei, SOCHER R, et al. ImageNet: A large-scale hierarchical image database[C]. 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, USA, 2009: 5351–5359. doi: 10.1109/CVPR.2009.5206848.
[14] MENEZES A G, DE MOURA G, ALVES C, et al. Continual object detection: A review of definitions, strategies, and challenges[J]. Neural Networks, 2023, 161: 476–493. doi: 10.1016/j.neunet.2023.01.041.
[15] LOMONACO V, PELLEGRINI L, RODRIGUEZ P, et al. CVPR 2020 continual learning in computer vision competition: Approaches, results, current challenges and future directions[J]. Artificial Intelligence, 2022, 303: 103635. doi: 10.1016/j.artint.2021.103635.
[16] GRAFFIETI G, BORGHI G, and MALTONI D. Continual learning in real-life applications[J]. IEEE Robotics and Automation Letters, 2022, 7(3): 6195–6202. doi: 10.1109/LRA.2022.3167736.
[17] BAE H, BROPHY E, CHAN R H M, et al. IROS 2019 lifelong robotic vision: Object recognition challenge [competitions][J]. IEEE Robotics & Automation Magazine, 2020, 27(2): 11–16. doi: 10.1109/MRA.2020.2987186.
[18] VERWIMP E, YANG Kuo, PARISOT S, et al. CLAD: A realistic continual learning benchmark for autonomous driving[J]. Neural Networks, 2023, 161: 659–669. doi: 10.1016/j.neunet.2023.02.001.
[19] SARFRAZ F, ARANI E, and ZONOOZ B. Sparse coding in a dual memory system for lifelong learning[C]. The 37th AAAI Conference on Artificial Intelligence, Washington, USA, 2023: 9714–9722. doi: 10.1609/aaai.v37i8.26161.
[20] MISHRA R and SURI M. A survey and perspective on neuromorphic continual learning systems[J]. Frontiers in Neuroscience, 2023, 17: 1149410. doi: 10.3389/fnins.2023.1149410.
[21] WANG Yuxiong, RAMANAN D, and HEBERT M. Meta-learning to detect rare objects[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 9925–9934. doi: 10.1109/ICCV.2019.01002.
[22] ZHU Chenchen, CHEN Fangyi, AHMED U, et al. Semantic relation reasoning for shot-stable few-shot object detection[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 8782–8791. doi: 10.1109/CVPR46437.2021.00867.
[23] YPSILANTIS N A, GARCIA N, HAN Guangxing, et al. The met dataset: Instance-level recognition for artworks[C]. The 1st Neural Information Processing Systems Track on Datasets and Benchmarks, 2021.
[24] QIAO Limeng, ZHAO Yuxuan, LI Zhiyuan, et al. DeFRCN: Decoupled faster R-CNN for few-shot object detection[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 8681–8690. doi: 10.1109/ICCV48922.2021.00856.
[25] CHU Zhixuan, LI Ruopeng, RATHBUN S, et al. Continual causal inference with incremental observational data[C]. 2023 IEEE 39th International Conference on Data Engineering, Anaheim, USA, 2023: 3430–3439. doi: 10.1109/ICDE55515.2023.00263.
[26] DAMEN D, DOUGHTY H, FARINELLA G M, et al. The EPIC-KITCHENS dataset: Collection, challenges and baselines[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(11): 4125–4141. doi: 10.1109/TPAMI.2020.2991965.
[27] YANG Jing, HE Yao, LI Bin, et al. A continual semantic segmentation method based on gating mechanism and replay strategy[J]. Journal of Electronics & Information Technology, 2022. doi: 10.11999/JEIT230803.
[28] JEONG J, LEE S, KIM J, et al. Consistency-based semi-supervised learning for object detection[C]. The 33rd International Conference on Neural Information Processing Systems, Vancouver, Canada, 2019: 965.
[29] ZHANG Limin, TAN Kaiwen, YAN Wenjun, et al. Specific emitter identification based on continuous learning and joint feature extraction[J]. Journal of Electronics & Information Technology, 2023, 45(1): 308–316. doi: 10.11999/JEIT211176.
[30] OSTAPENKO O. Continual learning via local module composition[C]. The 35th International Conference on Neural Information Processing Systems, 2021: 30298–30312.
[31] XU Mengde, ZHANG Zheng, HU Han, et al. End-to-end semi-supervised object detection with soft teacher[C]. 2021 IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 3060–3069. doi: 10.1109/ICCV48922.2021.00305.
[32] SHE Qi, FENG Fan, HAO Xinyue, et al. OpenLORIS-Object: A robotic vision dataset and benchmark for lifelong deep learning[C]. 2020 IEEE International Conference on Robotics and Automation, Paris, France, 2020: 4767–4773. doi: 10.1109/ICRA40945.2020.9196887.
[33] KANG Bingyi, LIU Zhuang, WANG Xin, et al. Few-shot object detection via feature reweighting[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 8420–8429. doi: 10.1109/ICCV.2019.00851.
