Statistical Feature-based Search for Multivariate Time Series Forecasting


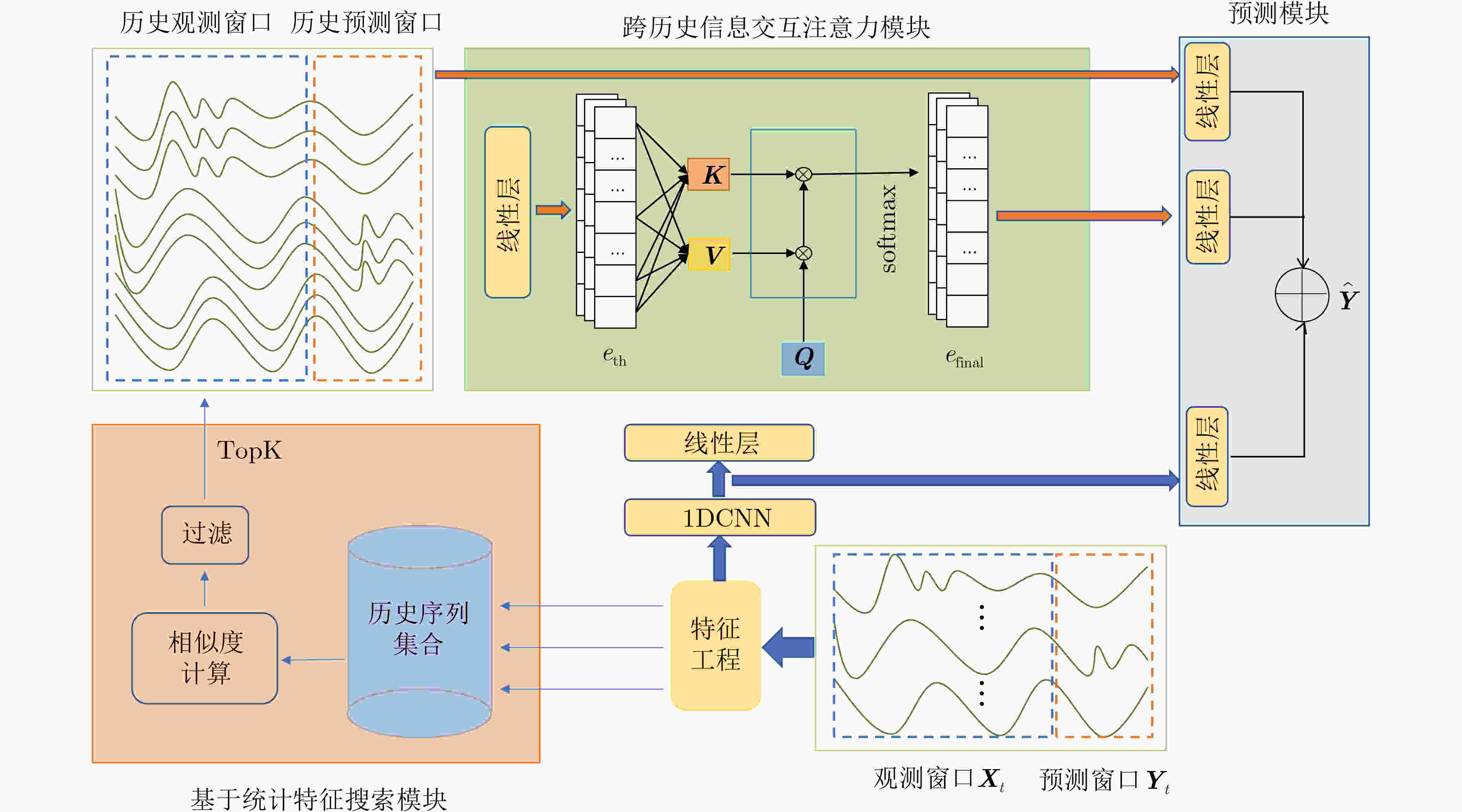

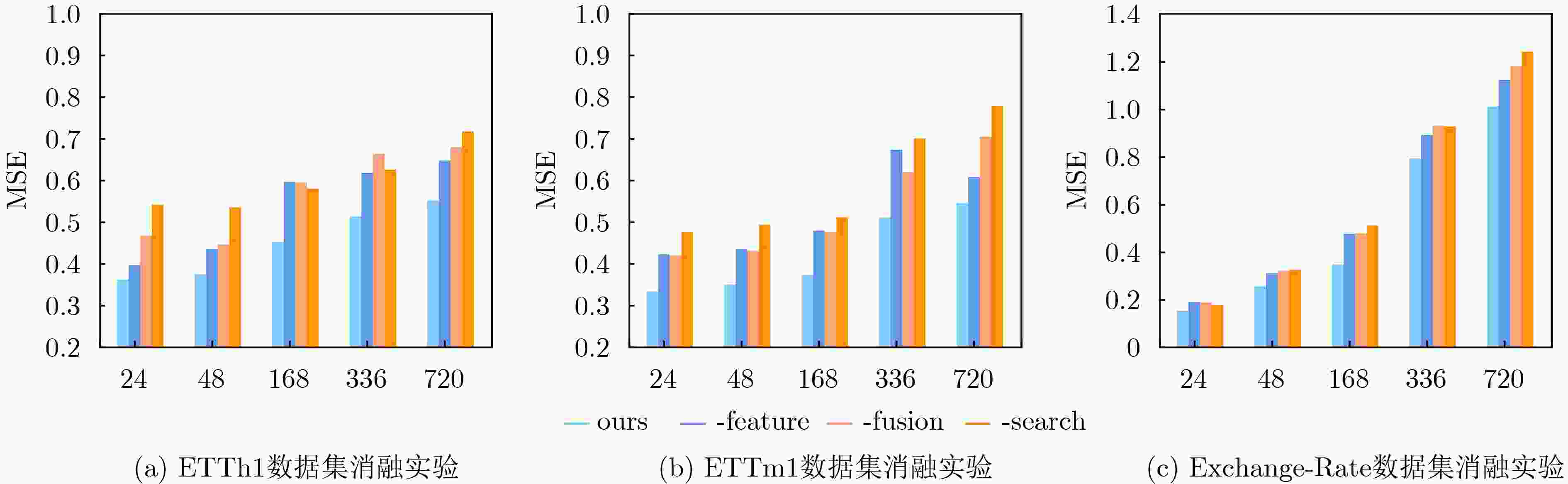

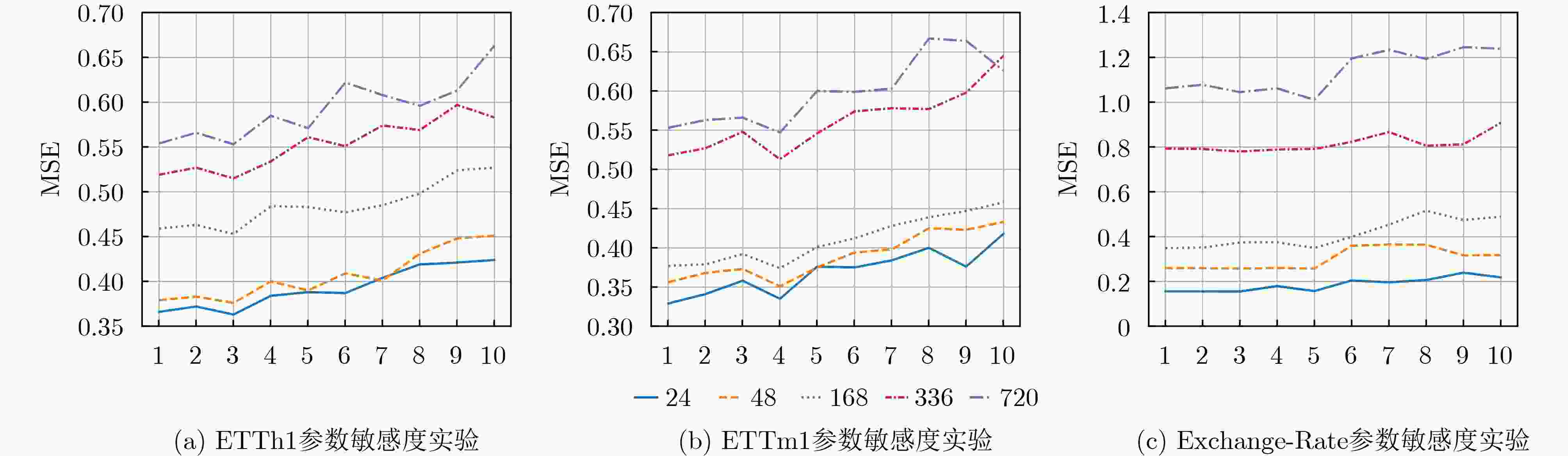

Abstract: Time series contain long-term dependencies such as long-term trends, seasonality, and periodicity, and these dependencies may span months. Directly applying existing methods cannot explicitly model such very long-term dependencies. To address this issue, this paper proposes a Statistical Feature-based Search for multivariate time series Forecasting (SFSF) method. First, statistical features, including smoothing, variance, and interval standardization features, are extracted from the multivariate time series so that the time series search is more sensitive to trends, periodicity, and seasonality. Next, the statistical features are used to search the historical sequences for series similar to the current one. An attention mechanism then fuses the current and historical sequence information to produce reliable predictions. Experiments on five real-world datasets show that SFSF outperforms six state-of-the-art methods.
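The three steps described in the abstract (feature extraction, similarity search, attention-based fusion) can be illustrated with a minimal sketch. This is not the authors' implementation: it handles a single variable, uses a trailing window for the smoothing and variance features, standardizes within each candidate segment, ranks candidates by Euclidean distance, and fuses them with plain scaled dot-product attention without learned projections. All function names, the window size `w`, and the toy data are assumptions made for illustration.

```python
import math


def _trailing_windows(x, w):
    """Yield the trailing window of at most w values ending at each index."""
    for i in range(len(x)):
        yield x[max(0, i - w + 1):i + 1]


def smoothing_feature(x, w):
    """Trailing moving average (assumed form of the smoothing feature)."""
    return [sum(win) / len(win) for win in _trailing_windows(x, w)]


def variance_feature(x, w):
    """Trailing rolling variance (assumed form of the variance feature)."""
    out = []
    for win in _trailing_windows(x, w):
        mean = sum(win) / len(win)
        out.append(sum((v - mean) ** 2 for v in win) / len(win))
    return out


def interval_standardize(x, eps=1e-8):
    """Z-score the values inside one segment (assumed interval standardization)."""
    mean = sum(x) / len(x)
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))
    return [(v - mean) / (std + eps) for v in x]


def statistical_features(segment, w=3):
    """Concatenate the three feature types into one feature vector."""
    z = interval_standardize(segment)
    return z + smoothing_feature(z, w) + variance_feature(z, w)


def search_similar(history, query, k=2, w=3):
    """Return start indices of the k historical segments closest to the query."""
    length = len(query)
    fq = statistical_features(query, w)
    scored = []
    for start in range(len(history) - length + 1):
        fs = statistical_features(history[start:start + length], w)
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(fs, fq)))
        scored.append((dist, start))
    scored.sort()
    return [start for _, start in scored[:k]]


def attention_fuse(query, candidates):
    """Scaled dot-product attention of the query over the retrieved segments."""
    d = len(query)
    scores = [sum(q * c for q, c in zip(query, cand)) / math.sqrt(d)
              for cand in candidates]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    fused = [sum(wt * cand[i] for wt, cand in zip(weights, candidates))
             for i in range(d)]
    return weights, fused


# Toy data: a pattern with period 8; the last period plays the current series.
pattern = [0, 1, 2, 3, 2, 1, 0, -1]
series = pattern * 8
history, query = series[:56], series[56:]

idx = search_similar(history, query, k=2)
candidates = [history[s:s + len(query)] for s in idx]
weights, fused = attention_fuse(query, candidates)
```

On this toy series the search returns segments aligned with the period, which is the behaviour the abstract attributes to feature-based search on periodic data. A real model would operate on all variables jointly and learn the attention projections.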


Key words:

- Multivariate time series

- Forecasting

- Attention mechanism

- Long-term dependency


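Table 1. Basic information of the datasets

| Dataset | Number of variables | Length | Time granularity |
| --- | --- | --- | --- |
| ETTh1 & ETTh2 | 7 | 17420 | 1 h |
| ETTm1 & ETTm2 | 7 | 69680 | 15 min |
| Exchange-Rate | 8 | 7588 | 1 d |

Table 2. Comparison of the main techniques used by each model

Technique columns, in source order: convolutional neural network, recurrent neural network, self-attention mechanism, self-supervised learning, feature decomposition. The extracted text preserves how many techniques are marked for each model, but not which columns the marks fall in.

| Model | Marks |
| --- | --- |
| TCN | √ |
| LSTNet | √ √ |
| LogTrans | √ √ |
| Informer | √ |
| TS2Vec | √ |
| Autoformer | √ √ |
| SFSF | √ √ |

Table 3. Results on the ETTh1 dataset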

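| Model | 24 MSE | 24 MAE | 48 MSE | 48 MAE | 168 MSE | 168 MAE | 336 MSE | 336 MAE | 720 MSE | 720 MAE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TCN | 0.763 | 0.742 | 0.848 | 0.948 | 1.128 | 1.227 | 1.383 | 1.294 | 1.645 | 1.783 |
| LSTNet | 1.181 | 1.134 | 1.177 | 1.390 | 1.540 | 1.568 | 2.178 | 1.904 | 2.593 | 1.985 |
| LogTrans | 0.637 | 0.612 | 0.823 | 0.724 | 0.952 | 0.917 | 1.356 | 0.988 | 1.335 | 1.315 |
| Informer | 0.565 | 0.532 | 0.675 | 0.643 | 0.821 | 0.747 | 1.096 | 0.837 | 1.184 | 0.873 |
| TS2Vec | 0.576 | 0.462 | 0.698 | 0.654 | 0.766 | 0.747 | 1.068 | 0.791 | 1.153 | 0.917 |
| Autoformer | 0.427 | 0.415 | 0.437 | 0.474 | 0.493 | 0.531 | 0.522 | 0.536 | 0.548 | 0.563 |
| SFSF-ED | 0.363 | 0.351 | 0.376 | 0.412 | 0.453 | 0.524 | 0.515 | 0.531 | 0.553 | 0.541 |

Table 4. Results on the ETTh2 dataset

| Model | 24 MSE | 24 MAE | 48 MSE | 48 MAE | 168 MSE | 168 MAE | 336 MSE | 336 MAE | 720 MSE | 720 MAE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TCN | 1.328 | 0.894 | 1.357 | 0.999 | 1.895 | 1.509 | 2.207 | 1.496 | 3.498 | 1.539 |
| LSTNet | 1.403 | 1.461 | 1.610 | 1.644 | 2.260 | 1.813 | 2.592 | 2.628 | 3.610 | 3.784 |
| LogTrans | 0.865 | 0.766 | 1.842 | 1.031 | 4.124 | 1.697 | 3.901 | 1.714 | 3.882 | 1.594 |
| Informer | 0.636 | 0.628 | 1.475 | 1.002 | 3.509 | 1.528 | 2.745 | 1.372 | 3.517 | 1.434 |
| TS2Vec | 0.450 | 0.534 | 0.625 | 0.558 | 1.940 | 1.093 | 2.329 | 1.257 | 2.690 | 1.326 |
| Autoformer | 0.338 | 0.366 | 0.374 | 0.373 | 0.481 | 0.463 | 0.525 | 0.472 | 0.536 | 0.489 |
| SFSF-ED | 0.318 | 0.322 | 0.347 | 0.332 | 0.378 | 0.413 | 0.474 | 0.572 | 0.570 | 0.449 |

Table 5. Results on the ETTm1 dataset

| Model | 24 MSE | 24 MAE | 48 MSE | 48 MAE | 168 MSE | 168 MAE | 336 MSE | 336 MAE | 720 MSE | 720 MAE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TCN | 0.350 | 0.419 | 0.531 | 0.390 | 0.661 | 0.692 | 1.306 | 1.307 | 1.426 | 1.424 |
| LSTNet | 1.973 | 1.212 | 2.067 | 1.218 | 2.793 | 1.618 | 1.295 | 2.098 | 1.890 | 2.920 |
| LogTrans | 0.446 | 0.385 | 0.554 | 0.684 | 0.701 | 0.820 | 1.422 | 1.263 | 1.679 | 1.439 |
| Informer | 0.319 | 0.318 | 0.361 | 0.454 | 0.564 | 0.537 | 1.345 | 0.852 | 3.396 | 1.323 |
| TS2Vec | 0.402 | 0.437 | 0.567 | 0.481 | 0.556 | 0.564 | 0.745 | 0.675 | 0.795 | 0.633 |
| Autoformer | 0.377 | 0.412 | 0.484 | 0.446 | 0.528 | 0.482 | 0.619 | 0.532 | 0.653 | 0.617 |
| SFSF-ED | 0.335 | 0.348 | 0.351 | 0.314 | 0.374 | 0.413 | 0.513 | 0.496 | 0.547 | 0.506 |

Table 6. Results on the ETTm2 dataset

| Model | 24 MSE | 24 MAE | 48 MSE | 48 MAE | 168 MSE | 168 MAE | 336 MSE | 336 MAE | 720 MSE | 720 MAE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TCN | 1.271 | 3.110 | 3.034 | 1.323 | 3.069 | 1.391 | 3.120 | 1.314 | 3.106 | 1.405 |
| LSTNet | 1.280 | 3.086 | 3.175 | 1.324 | 3.125 | 1.374 | 3.118 | 1.434 | 3.212 | 1.344 |
| LogTrans | 0.693 | 0.497 | 0.757 | 0.587 | 0.980 | 0.753 | 1.344 | 0.898 | 3.073 | 1.308 |
| Informer | 0.288 | 0.361 | 0.362 | 0.415 | 0.602 | 0.558 | 1.322 | 0.861 | 3.375 | 1.364 |
| TS2Vec | 0.260 | 0.225 | 0.371 | 0.279 | 0.375 | 0.387 | 0.569 | 0.425 | 0.648 | 0.436 |
| Autoformer | 0.228 | 0.271 | 0.243 | 0.347 | 0.291 | 0.369 | 0.334 | 0.381 | 0.447 | 0.441 |
| SFSF-ED | 0.214 | 0.256 | 0.234 | 0.293 | 0.252 | 0.319 | 0.308 | 0.374 | 0.371 | 0.468 |

Table 7. Results on the Exchange-Rate dataset

| Model | 24 MSE | 24 MAE | 48 MSE | 48 MAE | 168 MSE | 168 MAE | 336 MSE | 336 MAE | 720 MSE | 720 MAE |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| TCN | 0.323 | 0.432 | 2.968 | 1.473 | 2.981 | 1.401 | 3.089 | 1.476 | 3.139 | 1.472 |
| LSTNet | 0.384 | 0.439 | 1.575 | 1.065 | 1.521 | 1.003 | 1.513 | 1.085 | 2.250 | 1.234 |
| LogTrans | 0.253 | 0.300 | 0.967 | 0.811 | 1.084 | 0.890 | 1.614 | 1.126 | 1.920 | 1.128 |
| Informer | 0.387 | 0.375 | 0.880 | 0.723 | 1.173 | 0.872 | 1.727 | 1.077 | 2.475 | 1.361 |
| TS2Vec | 0.315 | 0.277 | 0.301 | 0.369 | 0.759 | 0.717 | 1.199 | 0.914 | 1.596 | 1.043 |
| Autoformer | 0.158 | 0.273 | 0.195 | 0.464 | 0.333 | 0.456 | 0.869 | 0.890 | 1.298 | 0.927 |
| SFSF-ED | 0.189 | 0.204 | 0.353 | 0.490 | 0.618 | 0.592 | 0.780 | 0.870 | 1.235 | 0.873 |
| SFSF-DTW | 0.155 | 0.237 | 0.257 | 0.319 | 0.349 | 0.396 | 0.795 | 0.758 | 1.011 | 0.824 |

Table 8. Time complexity of self-attention-based models

| Method | Time complexity |
| --- | --- |
| LogTrans | O(Llog2L) |
| Informer | O(Llog2L) |
| Autoformer | O(Llog2L) |
| SFSF | O(KL2M) |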
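The result tables list two SFSF variants, SFSF-ED and SFSF-DTW. The suffixes are not defined in this excerpt; assuming they denote Euclidean distance and dynamic time warping as the similarity measure used in the search, the sketch below contrasts the two on a pair of short series that differ only by a one-step shift. The function names and toy series are illustrative, and the DTW shown is the textbook dynamic-programming form with an absolute-difference local cost, not necessarily the variant used in the paper.

```python
import math


def euclidean_distance(a, b):
    """Point-by-point distance; requires equal-length series."""
    if len(a) != len(b):
        raise ValueError("series must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) dynamic time warping distance."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(cost[i - 1][j],      # a[i-1] repeats a match with b[j-1]
                                     cost[i][j - 1],      # b[j-1] repeats a match with a[i-1]
                                     cost[i - 1][j - 1])  # advance both
    return cost[n][m]


# The same bump, shifted by one time step.
a = [0, 0, 1, 2, 1, 0]
b = [0, 1, 2, 1, 0, 0]

ed = euclidean_distance(a, b)
dtw = dtw_distance(a, b)
```

Because DTW may stretch the time axis, the shifted bump aligns at zero cost, whereas the Euclidean distance penalizes every misaligned point. The price is quadratic time in the segment length for each comparison.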

