Super-Resolution Inpainting of Low-resolution Randomly Occluded Face Images


Abstract: This paper proposes an end-to-end 4× Super-Resolution Inpainting Generative Adversarial Network (SRIGAN) for low-resolution, randomly occluded face images. The generator consists of an encoder, a feature compensation subnetwork, and a decoder containing a pyramid attention module; the discriminator is an improved Patch discriminator. The feature compensation subnetwork and a two-stage training strategy let the network learn the features missing from occluded regions, while the pyramid attention module in the decoder and a multi-scale reconstruction loss strengthen information reconstruction. Together these map a low-resolution, randomly occluded image to a complete image at 4× resolution. In addition, the loss-function design and the improved Patch discriminator keep network training stable and improve generator performance. Comparison experiments and module verification experiments confirm the effectiveness of the proposed algorithm.


1 Training procedure
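As a rough orientation for the two-stage schedule set out in this section, the sketch below shows the order in which modules are updated and how the generator loss is formed as a weighted sum of its four terms. It is a framework-free illustration, not the authors' implementation: `generator_loss`, `two_stage_schedule`, and the string labels are hypothetical names, and the actual weight values are not given in this section.

```python
from typing import Dict, List

LOSS_KEYS = ("mul", "adv", "per", "style")


def generator_loss(terms: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum L_G = sum_k lambda_k * L_k over mul, adv, per, style."""
    return sum(weights[k] * terms[k] for k in LOSS_KEYS)


def two_stage_schedule(n1: int, n2: int) -> List[str]:
    """Return the sequence of module updates for N1 + N2 iterations.

    Stage 1 alternates a discriminator update (generator frozen) with a
    generator update (discriminator frozen). Stage 2 updates only Fc
    while the encoder stays frozen.
    """
    updates: List[str] = []
    for _ in range(n1):
        updates.append("D")       # optimise D with L_D, En-De frozen
        updates.append("En+De")   # optimise En-De with L_G, D frozen
    for _ in range(n2):
        updates.append("Fc")      # optimise Fc with L_Fc, En frozen
    return updates
```

In a real training script each label would correspond to an optimiser step on the named parameters; here the labels only record which module changes at each point.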

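Loop 1 (training stage 1): train the encoder En, the decoder De, and the discriminator D for iterations $n = 1, 2, \cdots, N_1$:

Step 1 Preprocess the data to obtain $\boldsymbol{I}_{\text{gt}}$, $\boldsymbol{M}$, $\boldsymbol{I}_{\text{LR}}$, and $\boldsymbol{I}'$ (the LR occluded face image).

Step 2 Feed $\boldsymbol{I}_{\text{LR}}$ into En-De to obtain the generated image $\boldsymbol{I}_{\text{gen}}$.

Step 3 Freeze the generator and optimize the discriminator with the loss $L_D$.

Step 4 Freeze the discriminator and optimize the generator with the loss $L_G = \lambda_{\text{mul}} L_{\text{mul}} + \lambda_{\text{adv}} L_{\text{adv}} + \lambda_{\text{per}} L_{\text{per}} + \lambda_{\text{style}} L_{\text{style}}$.

Step 5 When $n = N_1$, Loop 1 ends.

Loop 2 (training stage 2): train the feature compensation network Fc. The encoder En is frozen and only Fc is optimized, for iterations $n = 1, 2, \cdots, N_2$:

Step 1 Preprocess the data to obtain $\boldsymbol{I}_{\text{gt}}$, $\boldsymbol{M}$, $\boldsymbol{I}_{\text{LR}}$, and $\boldsymbol{I}'$.

Step 2 Feed $\boldsymbol{I}_{\text{LR}}$ into En to obtain the feature $p$.

Step 3 Feed $\boldsymbol{I}'$ into En-Fc to obtain the feature $q$.

Step 4 Optimize Fc with the loss $L_{Fc}$.

Step 5 When $n = N_2$, Loop 2 ends.

2 Data preprocessing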
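As a rough illustration of the mask generation and occlusion steps of this procedure (Steps 3 and 4), here is a minimal NumPy sketch. Several points are assumptions rather than details from the text: masks use 1 for visible pixels and 0 for occluded ones, the image multiplied by the mask is the LR image, irregular masks are passed in as a pre-loaded list, and the random rotation and cropping is replaced by a simple rotation in multiples of 90°. All function names are hypothetical, and the bilinear downsampling of Step 2 is omitted.

```python
import numpy as np


def random_rect_mask(h, w, rng):
    """Rectangle with diagonal corners (a, b) and (a + c, b + d); 0 = occluded."""
    a = int(rng.integers(0, h))
    b = int(rng.integers(0, w))
    c = int(rng.integers(1, h - a + 1))  # side lengths keep the box inside the image
    d = int(rng.integers(1, w - b + 1))
    mask = np.ones((h, w), dtype=np.float32)
    mask[a:a + c, b:b + d] = 0.0
    return mask


def sample_mask(h, w, rng, irregular_masks=None):
    """Draw j in {1, 2}: j = 1 picks an irregular mask, j = 2 builds a rectangle."""
    j = int(rng.integers(1, 3))
    if j == 1 and irregular_masks:
        mask = irregular_masks[int(rng.integers(0, len(irregular_masks)))]
    else:
        # also the fallback when no irregular masks were supplied
        mask = random_rect_mask(h, w, rng)
    # Simplified stand-in for random rotation/cropping: quarter turns that
    # preserve the mask shape (only half turns for non-square masks).
    k = int(rng.integers(0, 4)) if h == w else 2 * int(rng.integers(0, 2))
    return np.rot90(mask, k).copy()


def occlude_batch(lr_batch, rng, irregular_masks=None):
    """lr_batch has shape (m, H, W, C); returns (occluded images, masks)."""
    m, h, w, _ = lr_batch.shape
    masks = np.stack([sample_mask(h, w, rng, irregular_masks) for _ in range(m)])
    occluded = lr_batch * masks[..., None]  # I' = I x M, broadcast over channels
    return occluded, masks
```

A call such as `occlude_batch(batch, np.random.default_rng(0))` returns one mask per image in the batch. Supplying `irregular_masks` as a list of `(H, W)` arrays enables the `j = 1` branch.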

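Step 1 Select the HR ground-truth face images $\boldsymbol{I}_{\text{gt}}$: randomly draw $m$ HR images $\boldsymbol{I}_{\text{gt}}^{(1)}, \boldsymbol{I}_{\text{gt}}^{(2)}, \cdots, \boldsymbol{I}_{\text{gt}}^{(m)}$ from the training set to form a batch.

Step 2 Downsample $\boldsymbol{I}_{\text{gt}}$ with bilinear interpolation to obtain the LR ground-truth images $\boldsymbol{I}_{\text{LR}}$.

Step 3 Generate the random masks $\boldsymbol{M}$. For $i = 1, 2, \cdots, m$: draw a random positive integer $j$ from the interval $[1, 2]$; if $j = 1$, draw a mask at random from the irregular-mask dataset; if $j = 2$, randomly generate the mask position $(a, b)$ and the side lengths $c$ and $d$, and create a rectangular mask with diagonal corners $(a, b)$ and $(a+c, b+d)$; then randomly rotate and crop the mask to obtain $\boldsymbol{M}^{(i)}$.

Step 4 Generate the LR occluded face image $\boldsymbol{I}'^{(i)} = \boldsymbol{I}^{(i)} \times \boldsymbol{M}^{(i)}$.

Table 1 Performance comparison of different training methods and stages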

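| Metric | Direct training for inpainting | Two-stage SR inpainting | Stage-1 SR reconstruction |
| --- | --- | --- | --- |
| PSNR (dB) ↑ | 21.2070 | 24.8608 | 25.2959 |
| SSIM ↑ | 0.7247 | 0.8903 | 0.9064 |
| MAE ↓ | 0.0606 | 0.0361 | 0.0345 |

Table 2 Quantitative comparison of the modules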

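| Metric | Decoder | Multi-scale decoder | Full network |
| --- | --- | --- | --- |
| PSNR (dB) ↑ | 20.3460 | 22.9861 | 24.8608 |
| SSIM ↑ | 0.6855 | 0.8218 | 0.8903 |
| MAE ↓ | 0.0685 | 0.0479 | 0.0361 |

Table 3 Comparison with SR-Inpainting cascaded methods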

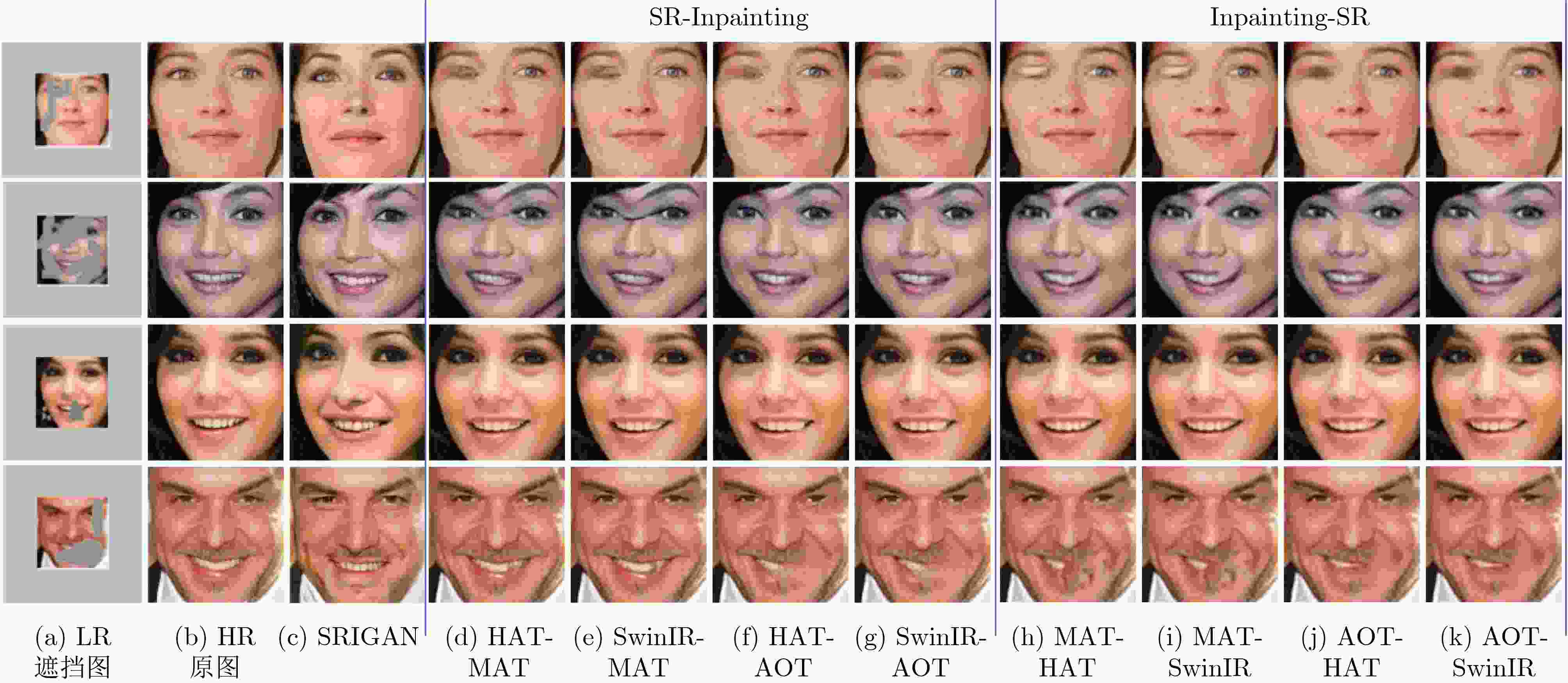

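| Metric | DRN-CA | DRN-EC | PAN-CA | PAN-EC | HAT-MAT | HAT-AOT | SwinIR-MAT | SwinIR-AOT | Proposed method |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PSNR (dB) ↑ | 24.2962 | 25.3198 | 24.5715 | 25.6237 | 25.5742 | 26.6459 | 25.5483 | 25.8942 | 24.8608 |
| SSIM ↑ | 0.8101 | 0.8649 | 0.8174 | 0.8725 | 0.8616 | 0.8861 | 0.8596 | 0.8773 | 0.8903 |
| MAE ↓ | 0.0399 | 0.0361 | 0.0388 | 0.0349 | 0.0353 | 0.0327 | 0.0364 | 0.0334 | 0.0361 |

Table 4 Comparison with Inpainting-SR cascaded methods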

| Metric | CA-DRN | EC-DRN | CA-PAN | EC-PAN | MAT-HAT | AOT-HAT | MAT-SwinIR | AOT-SwinIR | Proposed method |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PSNR (dB) ↑ | 24.3939 | 22.8383 | 22.2123 | 24.4875 | 25.8072 | 26.5986 | 23.8924 | 24.6235 | 24.8608 |
| SSIM ↑ | 0.8413 | 0.8106 | 0.7903 | 0.8449 | 0.8191 | 0.8315 | 0.7710 | 0.7827 | 0.8903 |
| MAE ↓ | 0.0457 | 0.0539 | 0.0578 | 0.0453 | 0.0367 | 0.0342 | 0.0531 | 0.0475 | 0.0361 |
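For readers who want to reproduce the kind of figures reported in the tables, the sketch below computes two of the three metrics, PSNR and MAE, using their standard definitions. It assumes images are floating-point arrays scaled to $[0, 1]$, which is a guess consistent with the magnitude of the MAE values but is not stated in this section. The function names `mae` and `psnr` are hypothetical, and SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np


def mae(pred, target):
    """Mean absolute error between two images of the same shape."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(pred - target)))


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = float(np.mean((pred - target) ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

Higher PSNR and lower MAE indicate a closer match to the ground truth, which matches the ↑ and ↓ arrows in the table headers.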

