Local Adaptive Federated Learning with Channel Personalized Normalization


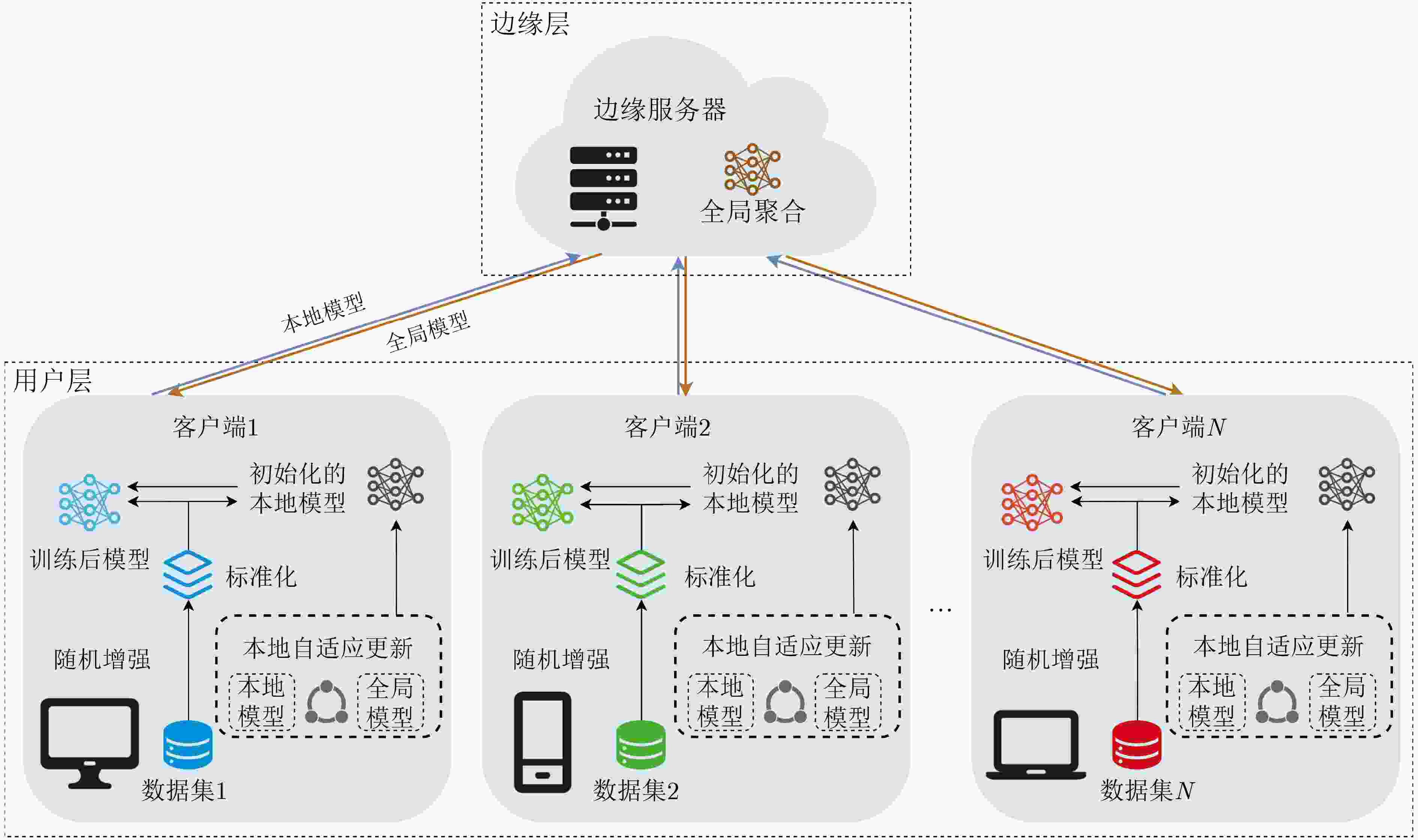

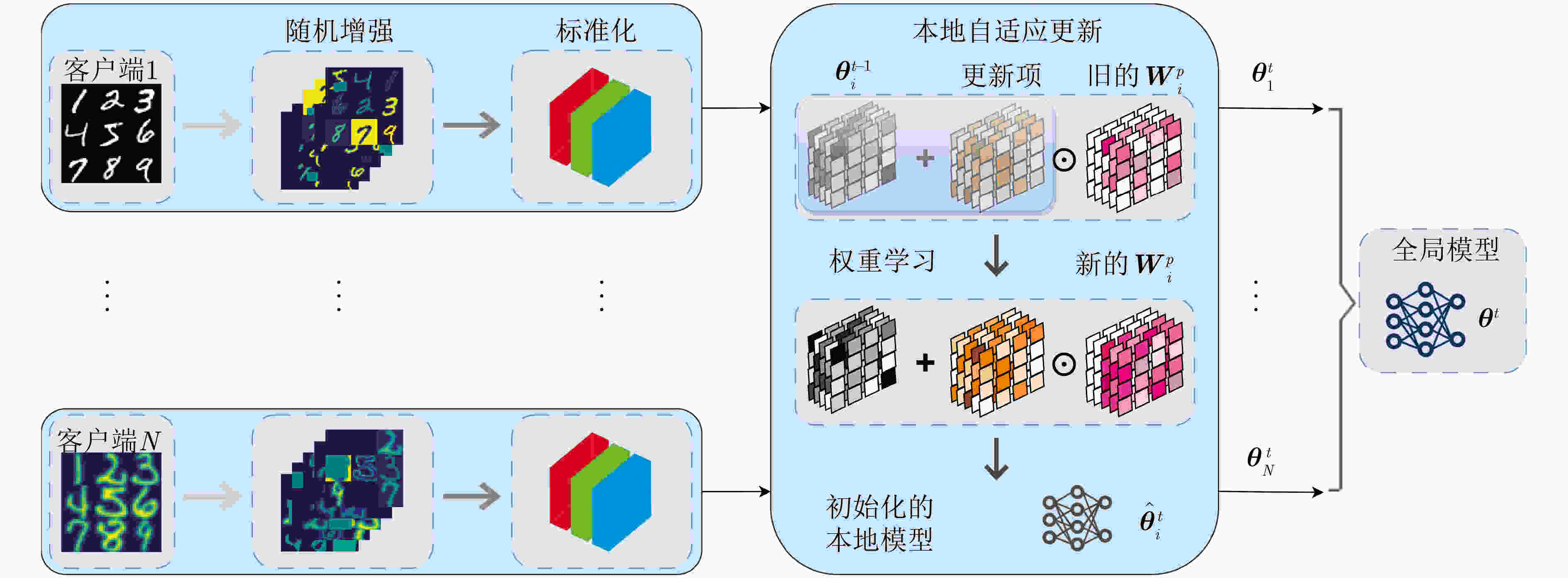

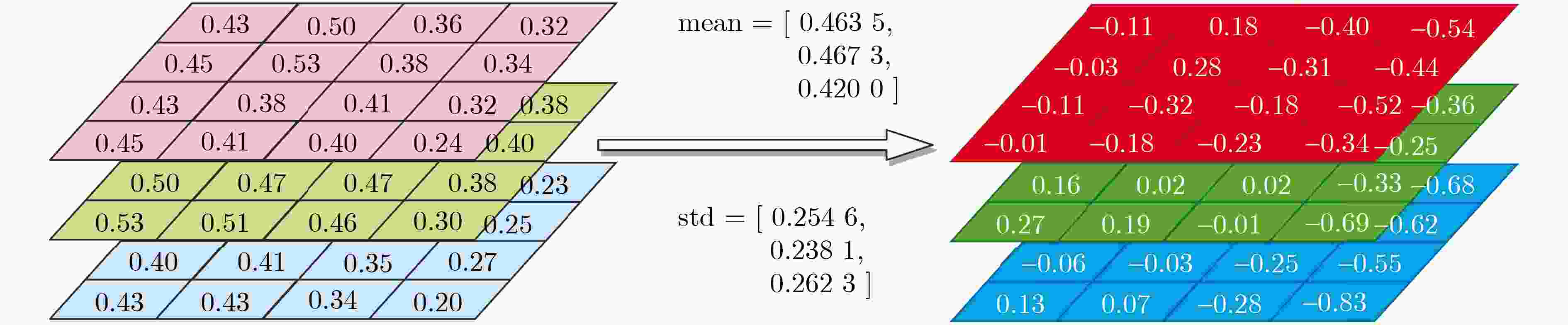

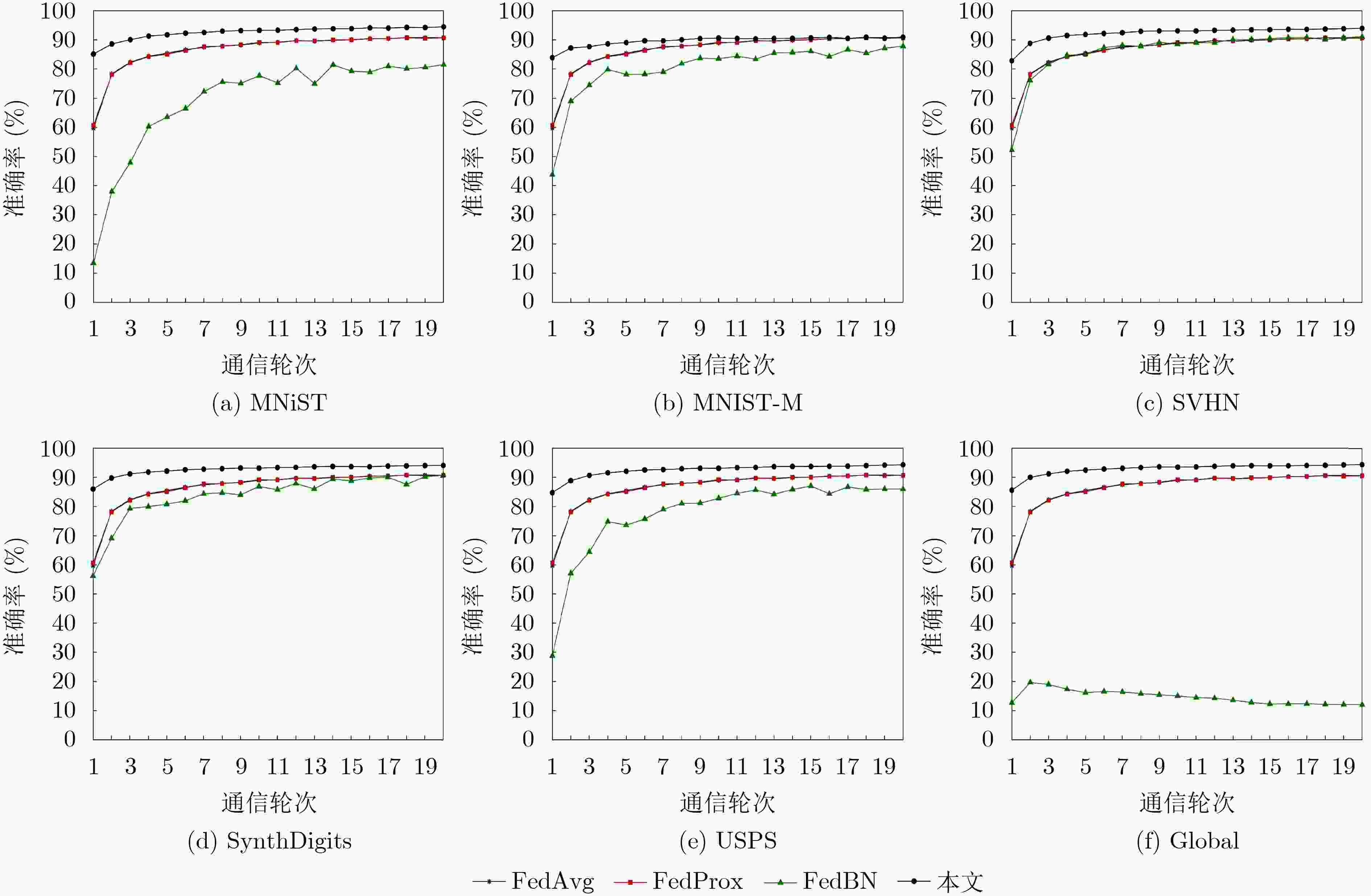

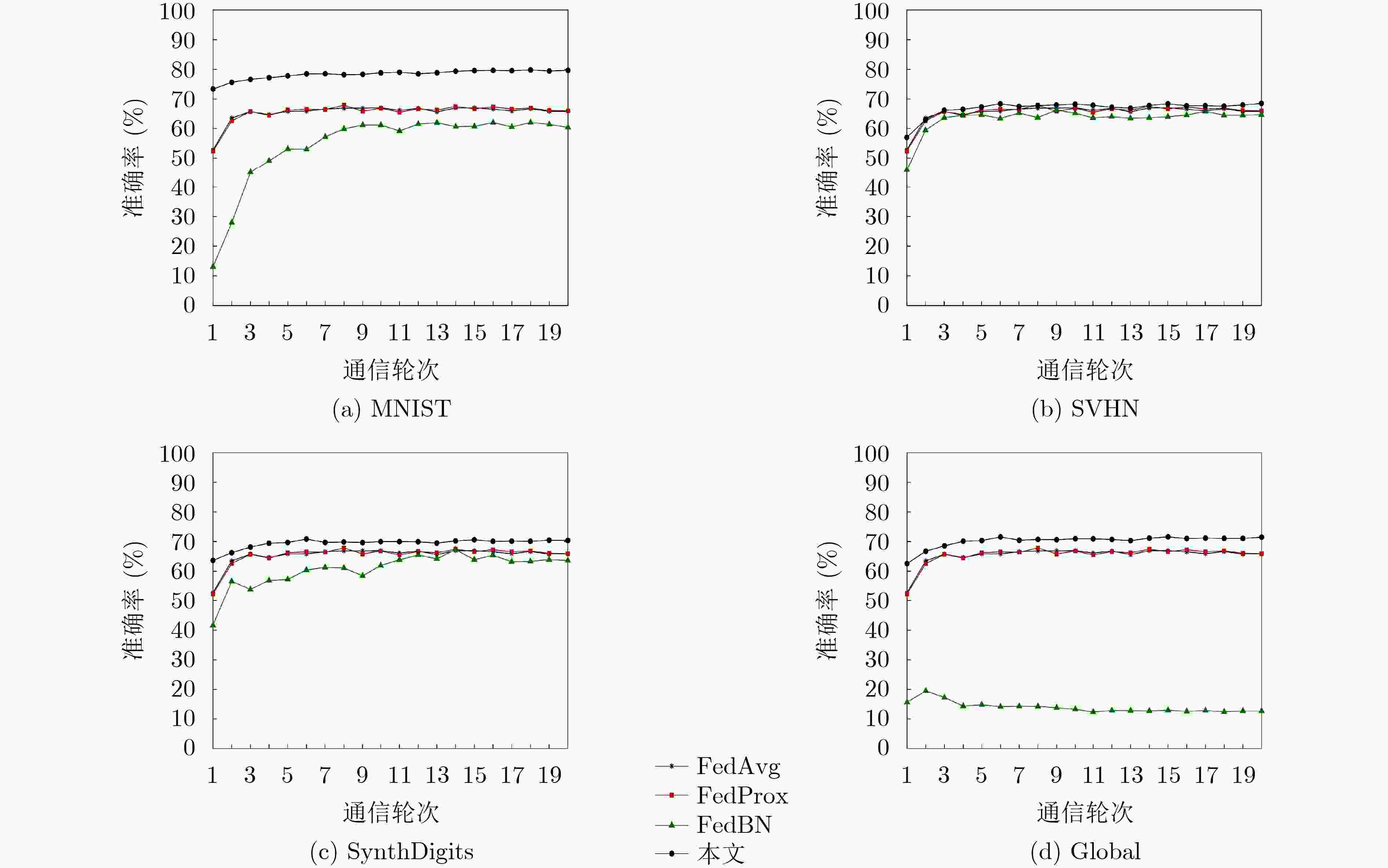

Abstract: To mitigate the data heterogeneity caused by fully overlapping attribute skew among clients in Federated Learning (FL), this paper proposes a local adaptive FL algorithm that incorporates channel personalized normalization. Specifically, an FL model oriented to data attribute skew is constructed, and a series of random augmentation operations is applied to each client's image dataset before training begins. Next, each client computes the mean and standard deviation of its dataset separately for each color channel to achieve channel personalized normalization. Furthermore, a local adaptive update FL algorithm is designed in which the global model and the local model are adaptively aggregated for local initialization. This aggregation retains the personalized characteristics of the client model while capturing the necessary information from the global model, which improves the generalization performance of the model. Finally, experimental results show that, compared with existing related algorithms, the proposed algorithm converges faster and improves accuracy by 3%~19%.


Key words:

- Edge computing

- Federated Learning (FL)

- Normalization

- Model aggregation


Algorithm 1 Local adaptive federated learning algorithm with channel personalized normalization

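Input: number of clients $N$; loss function $L$; data-volume weight of each client's local samples $r$; initial global model ${\boldsymbol{\theta}}^0$; local model learning rate $\alpha$; aggregation-weight learning rate $\eta$; client data sampling rate $s\%$; number of adaptively aggregated layers $p$.

Output: trained local models ${\boldsymbol{\theta}}_1^t, {\boldsymbol{\theta}}_2^t, \cdots, {\boldsymbol{\theta}}_N^t$ and global model ${\boldsymbol{\theta}}^t$.

(1) BEGIN

(2) The edge server sends ${\boldsymbol{\theta}}^0$ to all clients to initialize the local models;

(3) All clients initialize the aggregation weights ${\boldsymbol{W}}_i^p, \forall i \in \left[ N \right]$ to 1;

(4) FOR $t$ IN communication rounds $T$ DO

(5) The server sends ${\boldsymbol{\theta}}^{t - 1}$ to all clients;

(6) FOR all clients in parallel DO

(7) Client $i$ samples $s\%$ of its local data;

(8) Client $i$ applies random augmentation to the local data;

(9) Client $i$ applies channel personalized normalization to the local data based on Eq. (3);

(10) IF $t = 2$ THEN

(11) WHILE ${\boldsymbol{W}}_i^p$ has not converged DO

(12) Client $i$ trains ${\boldsymbol{W}}_i^p$ based on Eq. (8);

(13) ELSE IF $t > 2$ THEN

(14) Client $i$ trains ${\boldsymbol{W}}_i^p$ based on Eq. (8);

(15) Client $i$ aggregates $\hat{\boldsymbol{\theta}}_i^t$ based on Eq. (7) for local training;

(16) Client $i$ obtains ${\boldsymbol{\theta}}_i^t \leftarrow \hat{\boldsymbol{\theta}}_i^t - \alpha \nabla_{\hat{\boldsymbol{\theta}}_i^t} L\left( \hat{\boldsymbol{\theta}}_i^t, D_i; {\boldsymbol{\theta}}^{t - 1} \right)$ through local training;

(17) Client $i$ uploads ${\boldsymbol{\theta}}_i^t$ to the edge server for aggregation;

(18) END FOR

(19) The server aggregates the global model ${\boldsymbol{\theta}}^t$ based on Eq. (9);

(20) END FOR

(21) END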
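Eqs. (3), (7), (8), and (9) referenced in the algorithm above are not reproduced on this page, so the following is only a minimal NumPy sketch of steps (9), (15), (16), and (19) under stated assumptions, not the authors' implementation. All function names are hypothetical. The per-channel statistics follow the abstract's description; the element-wise interpolation used for local initialization and the data-volume-weighted average used on the server are assumed forms. The training of the aggregation weights (steps (10) to (14)) is omitted.

```python
import numpy as np


def channel_normalize(images):
    """Step (9): standardize a client's images with its own per-channel statistics.

    images: float array of shape (num_images, height, width, channels).
    """
    mean = images.mean(axis=(0, 1, 2))        # one mean per color channel
    std = images.std(axis=(0, 1, 2)) + 1e-8   # small constant avoids division by zero
    return (images - mean) / std, mean, std


def adaptive_init(local_theta, global_theta, weights):
    """Step (15), ASSUMED form: element-wise interpolation between the models.

    weights == 1 yields the global model, weights == 0 keeps the local model.
    """
    return local_theta + (global_theta - local_theta) * weights


def local_step(theta_hat, grad, alpha):
    """Step (16): one gradient-descent update with learning rate alpha."""
    return theta_hat - alpha * grad


def server_aggregate(local_thetas, r):
    """Step (19), ASSUMED form: average weighted by data-volume weights r."""
    r = np.asarray(r, dtype=float)
    r = r / r.sum()                           # normalize weights to sum to 1
    return sum(w * theta for w, theta in zip(r, local_thetas))


# Toy usage: 16 random RGB images of size 8x8 for one client.
rng = np.random.default_rng(0)
images = rng.uniform(0.0, 1.0, size=(16, 8, 8, 3))
normalized, channel_mean, channel_std = channel_normalize(images)
```

Under the assumed interpolation, initializing the aggregation weights to 1, as in step (3), makes the first local initialization equal to the received global model.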

