Intelligent Weighted Energy Consumption and Delay Optimization for UAV-Assisted MEC Under Malicious Jamming


Abstract: In recent years, mounting Mobile Edge Computing (MEC) servers on Unmanned Aerial Vehicles (UAVs) to serve mobile ground users has attracted wide attention in academia and industry. In a malicious jamming environment, however, scheduling resources effectively to reduce system delay and energy consumption becomes a key challenge. This paper therefore considers a UAV-assisted MEC system under the influence of a jammer and establishes a model that minimizes the weighted energy consumption and delay by jointly optimizing the UAV flight trajectory, resource scheduling, and task allocation. Because the optimization problem is difficult to solve and the malicious jamming behavior is dynamic, a resource scheduling algorithm based on the Twin Delayed Deep Deterministic policy gradient (TD3) is proposed. Prioritized Experience Replay (PER) is incorporated to improve the convergence speed and stability of the algorithm, so that it counters malicious jamming attacks efficiently. Simulation results show that, compared with other algorithms, the proposed algorithm effectively reduces system delay and energy consumption and exhibits good convergence and stability.


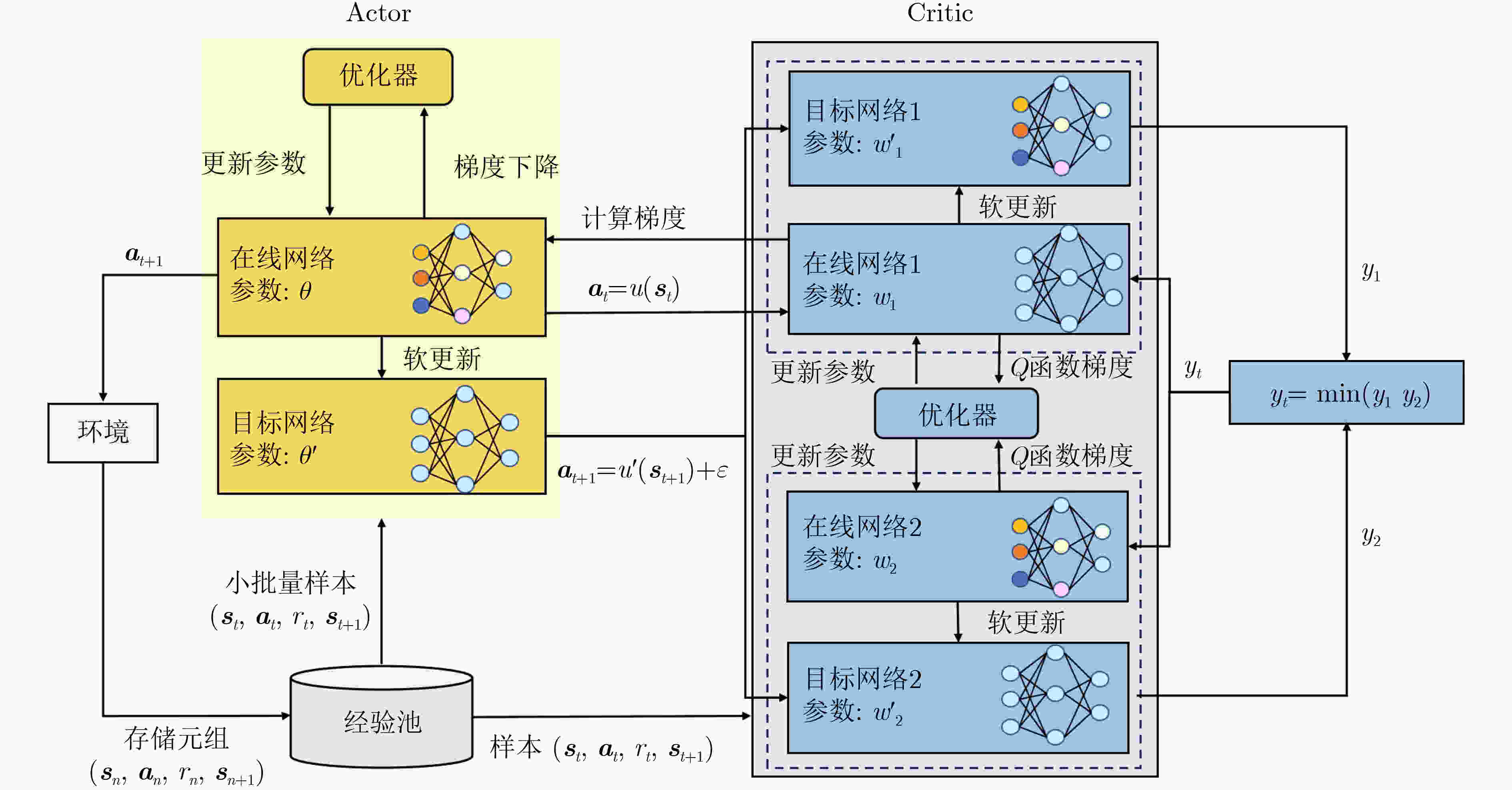

1 Procedure of the PER-TD3-based algorithm for minimizing weighted delay and energy consumption

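(1) Set up the UAV-assisted edge computing environment; initialize the online network parameters ${w_1},{w_2},\theta $ and the target network parameters: $w_1^{'} \leftarrow {w_1},w_2^{'} \leftarrow {w_2},{\theta ^{'}} \leftarrow \theta $.
(2) Initialize the experience replay pool.
(3) Loop over training episodes ${\text{Episode}} = 1,2, \cdots ,E$:
(4) Reset the parameters and obtain the initial state ${{\boldsymbol{s}}_1}$;
(5) Loop over training steps ${\text{Step}} = 1,2, \cdots ,N$:
(6) Select an action with added noise through the Actor: ${{\boldsymbol{a}}_n}$;
(7) The UAV executes action ${{\boldsymbol{a}}_n}$, moves to the next state ${{\boldsymbol{s}}_{n + 1}}$, receives the reward ${r_n}$ from the environment, and the priority ${p_n}$ of the current experience is computed;
(8) If the experience pool is not full, store the tuple $\left( {{{\boldsymbol{s}}_n},{{\boldsymbol{a}}_n},{r_n},{{\boldsymbol{s}}_{n + 1}}} \right)$ together with its priority in the pool;
(9) If the experience pool is full, sample a mini-batch $\left( {{{\boldsymbol{s}}_t},{{\boldsymbol{a}}_t},{r_t},{{\boldsymbol{s}}_{t + 1}}} \right)$ from the pool according to priority and feed it to the networks;
(10) Output the action from the Actor target network with policy smoothing: $ {{\boldsymbol{a}}_{t + 1}} = {u^{'}}\left( {{{\boldsymbol{s}}_{t + 1}}\left| {{\theta ^{'}}} \right.} \right) + \varepsilon $;
(11) Compute the target value: ${y_t} = {r_t} + \gamma \min \left( {Q_1^{'}\left( {{{\boldsymbol{s}}_{t + 1}},{{\boldsymbol{a}}_{t + 1}}\left| {w_1^{'}} \right.} \right),Q_2^{'}\left( {{{\boldsymbol{s}}_{t + 1}},{{\boldsymbol{a}}_{t + 1}}\left| {w_2^{'}} \right.} \right)} \right)$;
(12) Update the priorities according to the TD error, compute the importance-sampling weights, update the loss function, and update the network parameters;
(13) Until ${\text{Step}} = N$;
(14) Until ${\text{Episode}} = E$;
(15) Compute the UAV flight trajectory, resource scheduling, and task allocation policy, and output the system energy consumption and delay.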
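Steps (9) to (12) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`per_sample`, `td3_target`, `update_priorities`), the proportional-priority form with exponents `alpha` and `beta`, the noise clipping, the action bounds, and all default values are assumptions chosen for illustration. The target and critic networks are passed in as plain callables so that the example stays self-contained.

```python
import numpy as np


def per_sample(priorities, batch_size, alpha=0.6, beta=0.4, rng=None):
    """Step (9): sample indices in proportion to priority**alpha (assumed form).

    Returns the sampled indices and importance-sampling weights,
    normalized so that the largest weight in the batch is 1.
    """
    rng = np.random.default_rng() if rng is None else rng
    scaled = np.asarray(priorities, dtype=float) ** alpha
    probs = scaled / scaled.sum()
    idx = rng.choice(len(probs), size=batch_size, p=probs)
    weights = (len(probs) * probs[idx]) ** (-beta)
    return idx, weights / weights.max()


def td3_target(r, s_next, actor_target, q1_target, q2_target,
               gamma=0.99, sigma=0.2, noise_clip=0.5,
               a_low=-1.0, a_high=1.0, rng=None):
    """Steps (10)-(11): smoothed target action and clipped double-Q target.

    actor_target, q1_target and q2_target are hypothetical callables that
    stand in for the target networks u', Q1' and Q2'.
    """
    rng = np.random.default_rng() if rng is None else rng
    a_next = actor_target(s_next)
    # Policy smoothing noise epsilon; the clipping range is an assumption.
    eps = np.clip(rng.normal(0.0, sigma, size=a_next.shape),
                  -noise_clip, noise_clip)
    a_next = np.clip(a_next + eps, a_low, a_high)
    # y_t = r_t + gamma * min(Q1', Q2')
    q_min = np.minimum(q1_target(s_next, a_next), q2_target(s_next, a_next))
    return r + gamma * q_min


def update_priorities(priorities, idx, td_error, eps=1e-6):
    """Step (12): set the new priority to |TD error| plus a small constant."""
    priorities[idx] = np.abs(td_error) + eps
    return priorities


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    priorities = np.ones(16)                      # toy replay pool of 16 items
    idx, w = per_sample(priorities, batch_size=4, rng=rng)
    s_next = rng.normal(size=(4, 3))              # toy next states
    r = rng.normal(size=4)                        # toy rewards
    actor = lambda s: np.tanh(s[:, :2])           # toy target actor
    q1 = lambda s, a: s.sum(axis=1) + a.sum(axis=1)
    q2 = lambda s, a: s.sum(axis=1) - a.sum(axis=1)
    y = td3_target(r, s_next, actor, q1, q2, rng=rng)
    td_error = y - q1(s_next, actor(s_next))      # stand-in for y - Q1(s_t, a_t)
    priorities = update_priorities(priorities, idx, td_error)
    print(idx, w, y)
```

In a full training loop the weights returned by `per_sample` would scale each sample's squared TD error in the critic loss, which offsets the bias that non-uniform sampling introduces.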

