A Secure Gradient Aggregation Scheme Based on Local Differential Privacy in Asynchronous Horizontal Federated Learning

-

摘要: 联邦学习作为一种新兴的分布式机器学习框架,通过在用户私有数据不出域的情况下进行联合建模训练,有效地解决了传统机器学习中的数据孤岛和隐私泄露问题。然而,联邦学习存在着训练滞后的客户端拖累全局训练速度的问题,异步联邦学习允许用户在本地完成模型更新后立即上传到服务端并参与到聚合任务中,而无需等待其他用户训练完成。然而,异步联邦学习也存在着无法识别恶意用户上传的错误模型,以及泄露用户隐私的问题。针对这些问题,该文设计一种面向隐私保护的异步联邦的安全梯度聚合方案(SAFL)。用户采用本地差分隐私策略,对本地训练的模型添加扰动并上传到服务端,服务端通过投毒检测算法剔除恶意用户,以实现安全聚合(SA)。最后,理论分析和实验表明在异步联邦学习的场景下,提出的方案能够有效识别出恶意用户,保护用户的本地模型隐私,减少隐私泄露风险,并相对于其他方案在模型的准确率上有较大的提升。Abstract: Federated learning is an emerging distributed machine learning framework that effectively solves the problems of data silos and privacy leakage in traditional machine learning by performing joint modeling training without leaving the user’s private data out of the domain. However, federated learning suffers from the problem of training-lagged clients dragging down the global training speed. Related research has proposed asynchronous federated learning, which allows the users to upload to the server and participate in the aggregation task as soon as they finish updating their models locally, without waiting for the other users. However, asynchronous federated learning also suffers from the inability to recognize malicious models uploaded by malicious users and the problem of leaking user’s privacy. To address these issues, a privacy-preserving Secure Aggregation scheme for asynchronous Federated Learning(SAFL) is designed. The users add perturbations to locally trained models and upload the perturbed models to the server. The server detects and rejects the malicious users through a poisoning detection algorithm to achieve Secure Aggregation(SA). Finally, theoretical analysis and experiments show that in the scenario of asynchronous federated learning, the proposed scheme can effectively detect malicious users while protecting the privacy of users’ local models and reducing the risk of privacy leakage. The proposed scheme has also a significant improvement in the accuracy of the model compared with other schemes.

-

表 1 异步联邦学习方案对比

算法1 SAFL-Server算法 输入:数据集$\left\{ {{D_1},{D_2}, \cdots ,{D_N}} \right\}$,学习率$\eta $,全局训练轮数$T$,

缓冲区大小为K,客户端的数量为N,每轮选中的客户端占比为 F。输出:收敛后的全局模型${\omega _t}$ (1) for t = 1 to T do (2) $k \leftarrow 0$ (3) $ m \leftarrow {\text{max}}\left( {F \cdot N,1} \right) $ (4) ${S_t} \leftarrow {\text{random}}\left\{ {{C_1},{C_1}, \cdots ,{C_m}} \right\} - {\text{poisonerList}}$ (5) for each client $i \in {S_t}$ in parallel do (6) $ {\text{ClientUpdate}}\left( {i,{w_t},t,{\sigma _i}} \right) $ (7) if receive client update from client $i$ then (8) $ \left( {w_t^i,{t_i}} \right) \leftarrow $received update from client $i$ (9) $k \leftarrow k + 1$ (10) if $k = = K$ then (11) $ w_t^{{\text{set}}} \leftarrow \left\{ {w_t^1,w_t^2, \cdots ,w_t^i} \right\} $ (12) $ {\text{cid}} \leftarrow {\text{max}}\left( {{\text{cos}}\left( {w_t^{{\text{set}}},{w_t}} \right)} \right) $ // 计算最大余弦相似度 (13) $ {w_{t + 1}} \leftarrow {\text{SA}}\left( {{\text{cid}},w_t^{{\text{set}}}} \right) $ // 安全聚合模型 (14) $k \leftarrow 0$ (15) return ${w_t}$ 算法2 SAFL-Client Thread-1 ${\rm{ClientUpdate}}$: 输入:本地迭代轮次E,梯度裁剪阈值C,第t 轮的全局模型${w_t}$,

本地差分隐私参数${\varepsilon _i},{\delta _i}$。输出:更新模型$ {w_i} $和训练轮次$t$ (1) for k = 1 to E do (2) for batch $b \subseteq {D_i}$ do (3) ${\boldsymbol{g}}_{i,b}^k\left( {{\mathcal{D}_{i,b}}} \right) \leftarrow \nabla \ell \left( {w,b} \right)$ // 计算梯度 (4) ${\boldsymbol{g}}_{i,b}^k\left( {{\mathcal{D}_{i,b}}} \right) \leftarrow {\boldsymbol{g}}_{i,b}^k\left( {{\mathcal{D}_{i,b}}} \right)/\max \left( {1,\frac{{\parallel {\boldsymbol{g}}_{i,b}^k\left( {{\mathcal{D}_{i,b}}} \right){\parallel _2}}}{C}} \right)$ //

裁剪梯度(5) $ w_i^{k + 1} \leftarrow w_i^k - \dfrac{1}{{\left| {{D_i}} \right|}}\displaystyle\sum\nolimits_{b = 1}^{\left| {{D_i}} \right|} {\eta {\boldsymbol{g}}_{i,b}^k\left( {{D_{i,b}}} \right)} $ (6) $ w_i^{k + 1} \leftarrow w_i^{k + 1} + N\left( {0,\Delta {s^{{D_i}}}/{\varepsilon _i}} \right) $ // 添加高斯噪声 (7) send $\left( {{w_i},t} \right)$ to the server Thread-2 ${\rm{ClientEvaluate}}$ 输入:诚实客户端${\text{cid}}$,混淆模型集合${\text{garbleSe}}{{\text{t}}_t}$ 输出:每个模型的预测准确率记录为列表${\text{scoreLis}}{{\text{t}}_t}$ (8) local variables$ l = 0,{\text{scoreLis}}{{\text{t}}_t} = [\;] $ (9) for each model $ m \in {\text{garbleLis}}{{\text{t}}_t} $ do (10) ${\text{gcc}} \leftarrow {\text{Evaluate}}\left( {m,{D_{{\text{cid}}}}} \right)$ // 计算模型对数据集${D_{{\text{cid}}}}$的

预测准确率(11) ${\text{scoreLis}}{{\text{t}}_t}\left[ l \right] \leftarrow {\text{gcc}}$ (12) $l \leftarrow l + 1$ (13) return ${\text{scoreLis}}{{\text{t}}_t}$ 算法3 SAFL-Detect 输入:第t轮缓冲区中的模型集合 $ w_t^{{\text{set}}} $ 输出:投毒用户集合$ {\text{posionerSe}}{{\text{t}}_t} $ (1) $ {\text{randomModelSe}}{{\text{t}}_t} \leftarrow \left\{ {{w_1},{w_2}, \cdots ,{w_i}} \right\} $ (2) $ {\text{garbelModelSe}}{{\text{t}}_t} \leftarrow {{\mathrm{shuffle}}} (w_t^{{\text{set}}} + {\text{randomModelSe}}{{\text{t}}_t}) $ (3) $ {\text{socreLis}}{{\text{t}}_t} \leftarrow {\text{ClientEvaluate}}({\text{garbelModelSe}}{{\text{t}}_t}) $ (4) ${\mathrm{cluster1}},\;{\text{cluster}}2 \leftarrow {{\mathrm{K}}} {\text{-}} {\text{Means}}({\text{socreLis}}{{\text{t}}_t})$ (5) $ {\text{posionerSet}}_{t}\leftarrow \mathrm{min}(\mathrm{avg}(\text{cluster1}),\text{avg(cluster2})) $ (6) return $ {\text{posionerSe}}{{\text{t}}_t} $ 算法4 安全聚合算法${\text{SA}}$ 输入:第t轮接收到的客户端模型$w_t^{{\text{set}}}$,投毒次数集合

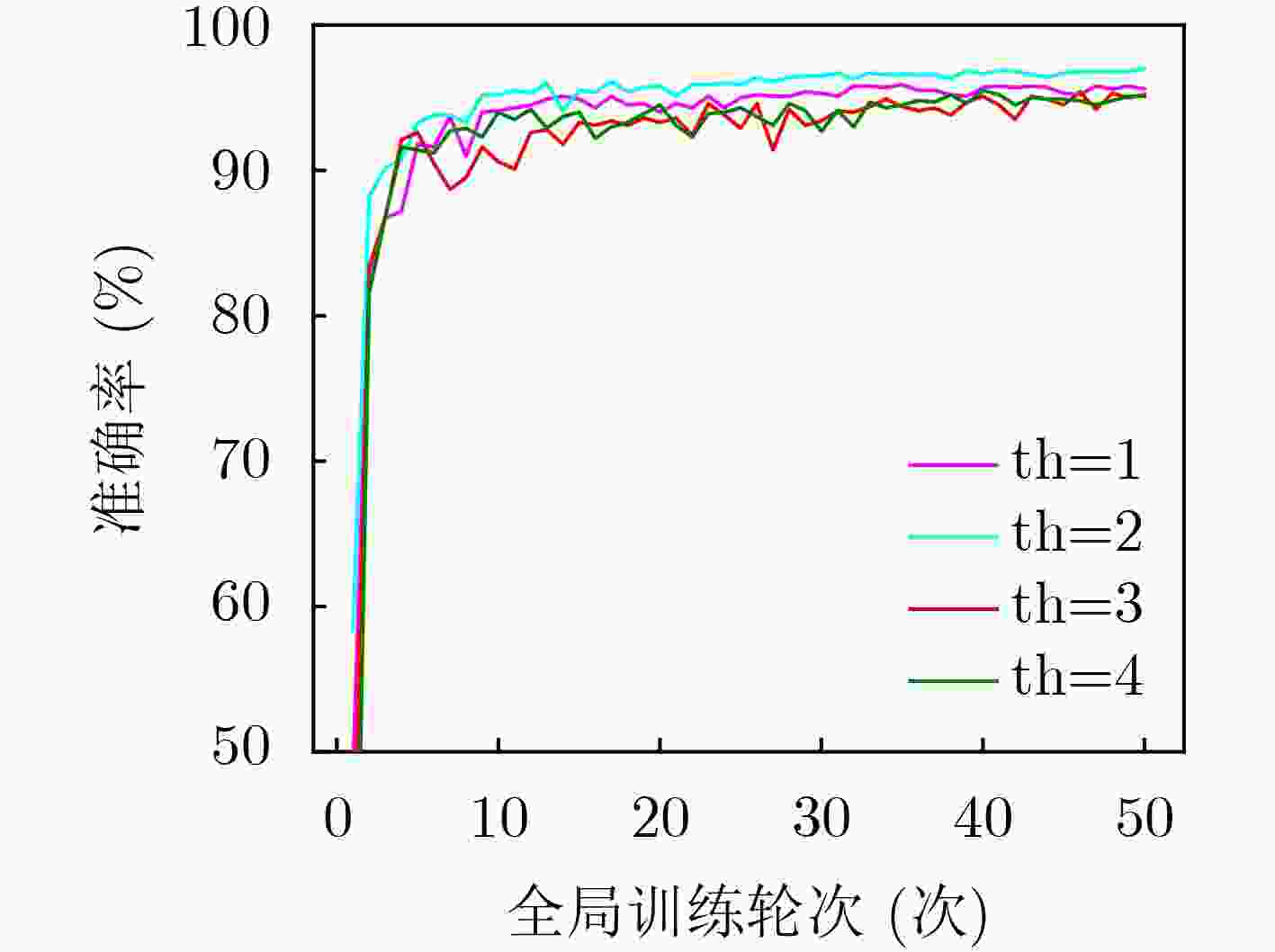

${\text{clientsAttack}}$。投毒次数的阈值${\text{th}}$。客户端$i$的数据集样本数量

$\left| {{D_i}} \right|$。${t_i}$是客户端$i$接收模型时的全局轮次输出:第t轮的全局模型${w_t}$ (1) $ {\text{poisonerSe}}{{\text{t}}_t} \leftarrow {\text{Detect}}(w_t^{{\text{set}}}) $ (2) for each client id $ {\text{id}} \in {\text{poisonerSe}}{{\text{t}}_t} $ do (3) ${\text{clientsAttack}}\left[ {{\text{id}}} \right] \leftarrow {\text{clientsAttack}}\left[ {{\text{id}}} \right] + 1$ (4) for each client model ${w_i} \in w_t^{{\text{set}}}$ do (5) if ${\text{clientsAttack}}\left[ i \right] < {\text{th}}$ then (6) $s \leftarrow {(1 + t - {t_i})^{ - 0.5}}$ (7) ${w_t} \leftarrow {w_{t - 1}} + s \cdot \left( {\left| {{D_i}} \right|/M} \right){w_i}$ (8) return ${w_t}$ -

[1] MCMAHAN B, MOORE E, RAMAGE D, et al. Communication-efficient learning of deep networks from decentralized data[C]. The 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, USA, 2017: 1273–82. [2] YANG Qiang, LIU Yang, CHEN Tianjian, et al. Federated machine learning: Concept and applications[J]. ACM Transactions on Intelligent Systems and Technology, 2019, 10(2): 12. doi: 10.1145/3298981. [3] BONAWITZ K, EICHNER H, GRIESKAMP W, et al. Towards federated learning at scale: System design[C]. Machine Learning and Systems, Stanford, USA, 2019: 374–88. [4] XIE Cong, KOYEJO S, and GUPTA I. Asynchronous federated optimization[EB/OL]. https://arxiv.org/abs/1903.03934, 2019. [5] LIU Jianchun, XU Hongli, WANG Lun, et al. Adaptive asynchronous federated learning in resource-constrained edge computing[J]. IEEE Transactions on Mobile Computing, 2023, 22(2): 674–690. doi: 10.1109/TMC.2021.3096846. [6] HUBA D, NGUYEN J, MALIK K, et al. Papaya: Practical, private, and scalable federated learning[C]. Machine Learning and Systems, Santa Clara, USA, 2022: 814–32. [7] BAGDASARYAN E, VEIT A, HUA Yiqing, et al. How to backdoor federated learning[C]. The Twenty Third International Conference on Artificial Intelligence and Statistics, Palermo, Italy, 2020: 2938–2948. [8] 高莹, 陈晓峰, 张一余, 等. 联邦学习系统攻击与防御技术研究综述[J]. 计算机学报, 2023, 46(9): 1781–1805. doi: 10.11897/SP.J.1016.2023.01781.GAO Ying, CHEN Xiaofeng, ZHANG Yiyu, et al. A survey of attack and defense techniques for federated learning systems[J]. Chinese Journal of Computers, 2023, 46(9): 1781–1805. doi: 10.11897/SP.J.1016.2023.01781. [9] 汤凌韬, 陈左宁, 张鲁飞, 等. 联邦学习中的隐私问题研究进展[J]. 软件学报, 2023, 34(1): 197–229. doi: 10.13328/j.cnki.jos.006411.TANG Lingtao, CHEN Zuoning, ZHANG Lufei, et al. Research progress of privacy issues in federated learning[J]. Journal of Software, 2023, 34(1): 197–229. doi: 10.13328/j.cnki.jos.006411. [10] SO J, NOLET C J, YANG C S, et al. Lightsecagg: A lightweight and versatile design for secure aggregation in federated learning[C]. Machine Learning and Systems, Santa Clara, USA, 2022: 694–720. [11] FANG Minghong, LIU Jia, GONG N Z, et al. AFLGuard: Byzantine-robust asynchronous federated learning[C]. The 38th Annual Computer Security Applications Conference, Austin, USA, 2022: 632–646. doi: 10.1145/3564625.3567991. [12] WANG Rong and TSAI W T. Asynchronous federated learning system based on permissioned blockchains[J]. Sensors, 2022, 22(4): 1672. doi: 10.3390/s22041672. [13] LU Yunlong, HUANG Xiaohong, DAI Yueyue, et al. Differentially private asynchronous federated learning for mobile edge computing in urban informatics[J]. IEEE Transactions on Industrial Informatics, 2020, 16(3): 2134–2143. doi: 10.1109/TII.2019.2942179. [14] DAMASKINOS G, EL MHAMDI E M, GUERRAOUI R, et al. Asynchronous Byzantine machine learning (the case of SGD)[C]. The 35th International Conference on Machine Learning, Stockholmsmässan, Sweden, 2018: 1153–1162. [15] 刘艺璇, 陈红, 刘宇涵, 等. 联邦学习中的隐私保护技术[J]. 软件学报, 2022, 33(3): 1057–1092. doi: 10.13328/j.cnki.jos.006446.LIU Yixuan, CHEN Hong, LIU Yuhan, et al. Privacy-preserving techniques in federated learning[J]. Journal of Software, 2022, 33(3): 1057–1092. doi: 10.13328/j.cnki.jos.006446. [16] WANG Bo, LI Hongtao, GUO Yina, et al. PPFLHE: A privacy-preserving federated learning scheme with homomorphic encryption for healthcare data[J]. Applied Soft Computing, 2023, 146: 110677. doi: 10.1016/j.asoc.2023.110677. [17] FENG Jun, YANG L T, ZHU Qing, et al. Privacy-preserving tensor decomposition over encrypted data in a federated cloud environment[J]. IEEE Transactions on Dependable and Secure Computing, 2020, 17(4): 857–868. doi: 10.1109/TDSC.2018.2881452. [18] 李腾, 方保坤, 马卓, 等. 基于同态加密的医疗数据密文异常检测方法[J]. 中国科学:信息科学, 2023, 53(7): 1368–1391. doi: 10.1360/ssi-2022-0214.LI Teng, FANG Baokun, MA Zhuo, et al. Homomorphic encryption-based ciphertext anomaly detection method for e-health records[J]. Scientia Sinica (Informationis), 2023, 53(7): 1368–1391. doi: 10.1360/ssi-2022-0214. [19] GEHLHAR T, MARX F, SCHNEIDER T, et al. SafeFL: MPC-friendly framework for private and robust federated learning[C]. 2023 IEEE Security and Privacy Workshops (SPW), San Francisco, USA, 2023: 69–76. doi: 10.1109/SPW59333.2023.00012. [20] MANSOURI M, ÖNEN M, JABALLAH W B, et al. Sok: Secure aggregation based on cryptographic schemes for federated learning[J]. Proceedings on Privacy Enhancing Technologies, 2023, 2023(1): 140–157. doi: 10.56553/popets-2023-0009. doi: 10.56553/popets-2023-0009. doi: 10.56553/popets-2023-0009.doi:10.56553/popets-2023-0009. [21] FENG Jun, YANG L T, NIE Xin, et al. Edge–cloud-aided differentially private tucker decomposition for cyber–physical–social systems[J]. IEEE Internet of Things Journal, 2022, 9(11): 8387–8396. doi: 10.1109/JIOT.2020.3004826. [22] CAO Di, CHANG Shan, LIN Zhijian, et al. Understanding distributed poisoning attack in federated learning[C]. IEEE 25th International Conference on Parallel and Distributed Systems (ICPADS), Tianjin, China, 2019: 233–239. doi: 10.1109/ICPADS47876.2019.00042. [23] ABADI M, CHU A, GOODFELLOW I, et al. Deep learning with differential privacy[C]. The 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 2016: 308–318. doi: 10.1145/2976749.2978318. [24] KONEČNÝ J, MCMAHAN H B, XU F X, et al. Federated learning: Strategies for improving communication efficiency[C]. 6th International Conference on Learning Representations, Vancouver, BC, Canada, 2016. [25] BLANCHARD P, EL MHAMDI E M, GUERRAOUI R, et al. Machine learning with adversaries: Byzantine tolerant gradient descent[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 118–128. [26] YIN Dong, CHEN Yudong, KANNAN R, et al. Byzantine-robust distributed learning: Towards optimal statistical rates[C]. The 35th International Conference on Machine Learning, Stockholmsmässan, Sweden, 2018: 5650–5659. -

下载:

下载:

下载:

下载: