Damaged Inscription Recognition Based on Hierarchical Decomposition Embedding and Bipartite Graph


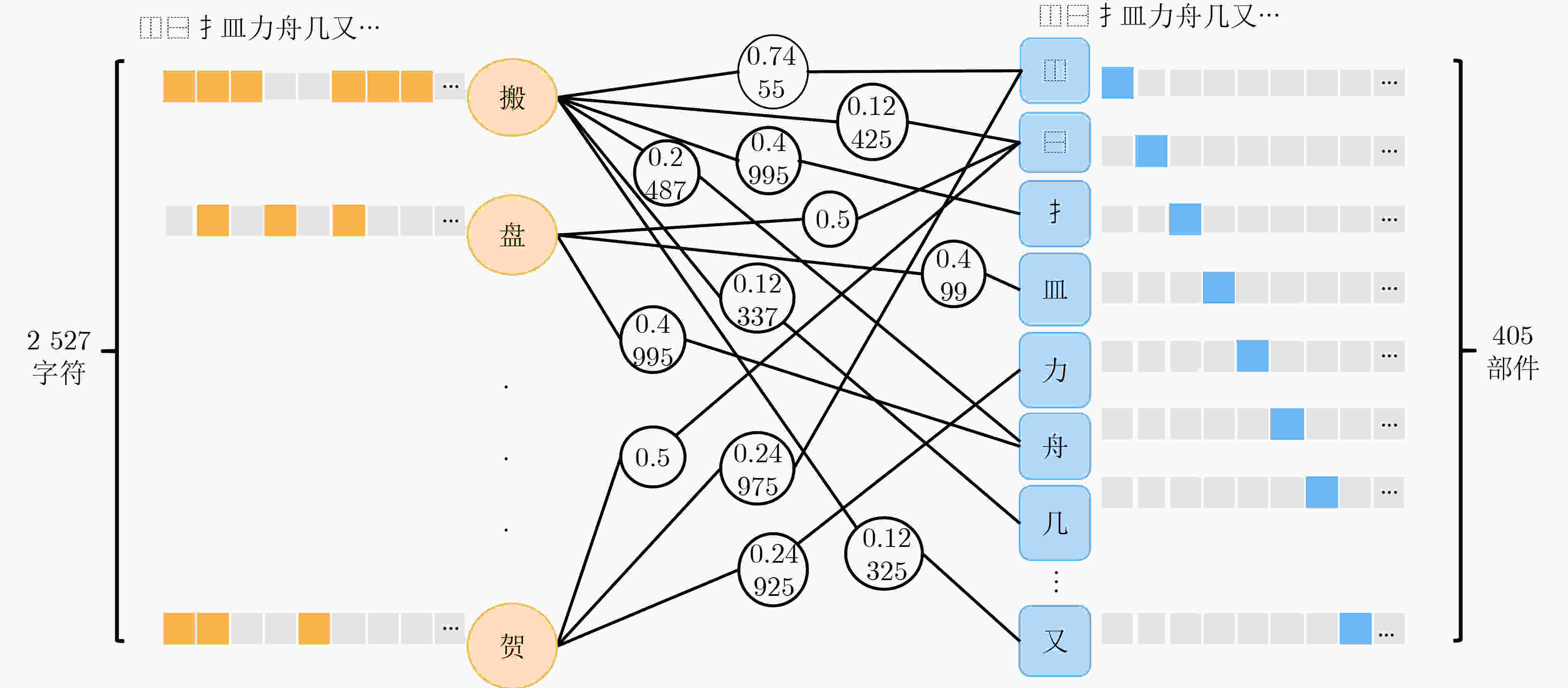

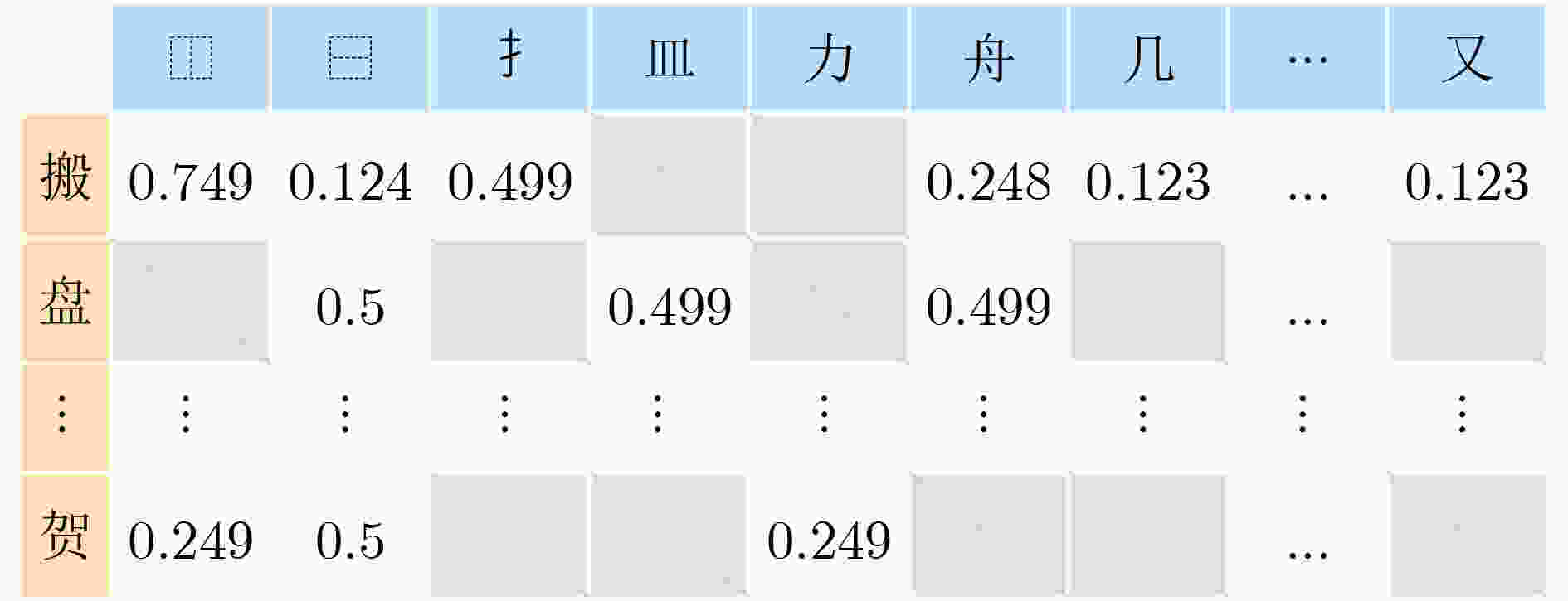

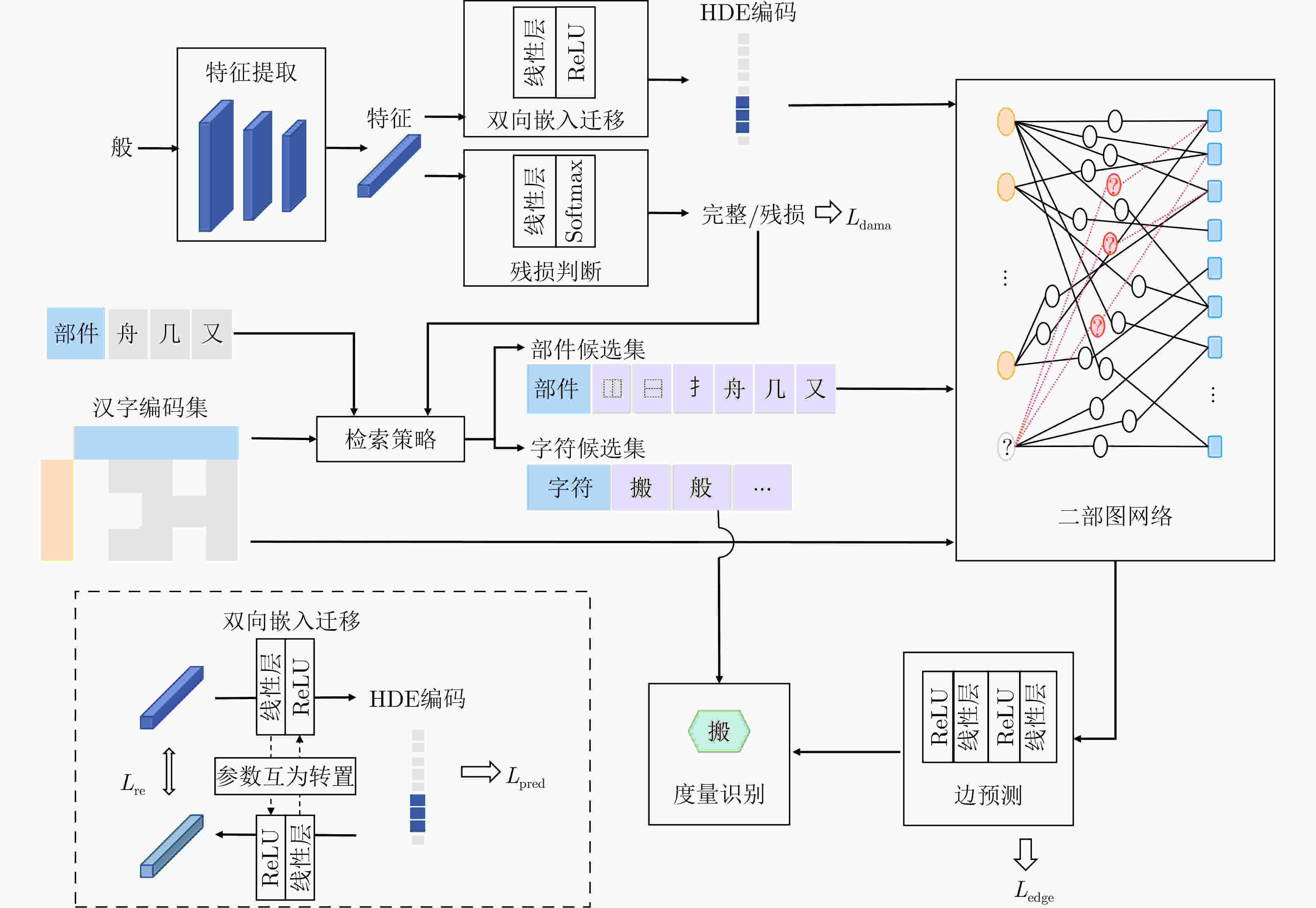

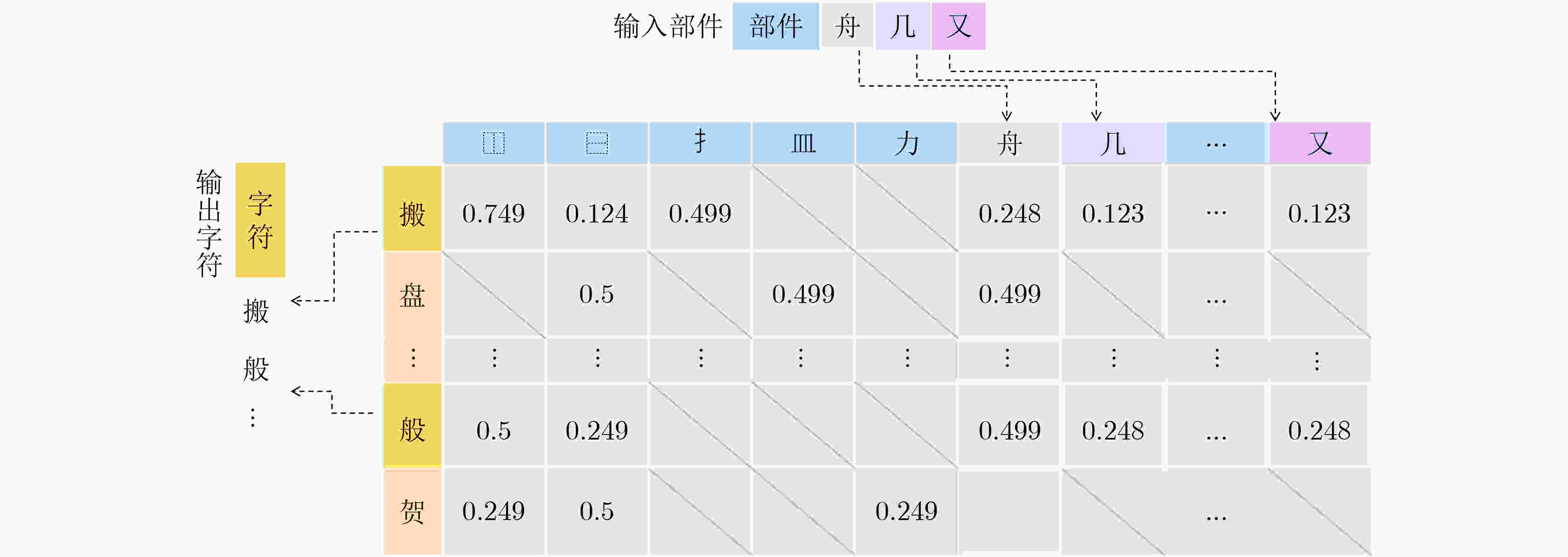

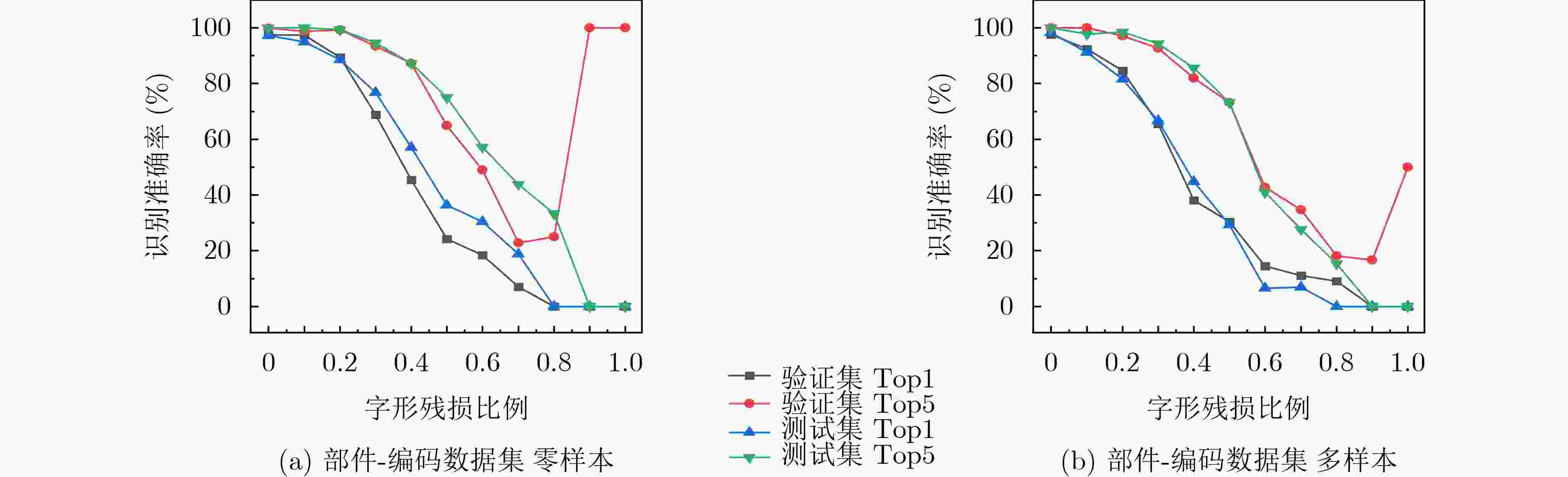

Abstract: Ancient inscriptions carry rich historical and cultural information, but natural weathering and human destruction have left the text on many steles incomplete. Because the semantics of ancient inscriptions are diverse and examples are scarce, it is very difficult to learn contextual semantics well enough to complete and recognize damaged characters. This paper approaches the challenging task of recognizing and understanding damaged Chinese characters by modeling the spatial semantics of character glyphs. Building on Hierarchical Decomposition Embedding (HDE), the proposed DynamicGrape maps the image of the character to be recognized into features and determines whether the character is damaged. If the character is not damaged, its image is converted directly into a hierarchical decomposition embedding and fed to a bipartite graph, which infers the edge weights from character nodes to component nodes; the result is then compared against the encodings in the character library for recognition. If the character is damaged, possible characters and components are retrieved from the character library, the feature dimensions of the character encoding are selected, and the bipartite graph infers the likely character. Experiments on a self-built dataset and on the Chinese Text in the Wild (CTW) dataset show that the bipartite graph network can effectively transfer and infer the glyph information of damaged characters, and that the proposed method can effectively recognize and understand damaged Chinese characters. The method opens up a new approach to processing damaged structural information.
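The two branches described in the abstract (complete versus damaged characters) can be illustrated with a deliberately simplified sketch. It is not DynamicGrape: the bipartite-graph inference is swapped for plain cosine matching against a toy codebook, and the codebook vectors, the `reliable` mask, and the function names are all hypothetical. It only shows why restricting the comparison to trusted feature dimensions turns recognition of a damaged character into a ranking over candidate characters.

```python
from math import sqrt

# Hypothetical toy codebook: character -> embedding vector.
# Real HDE vectors come from a character's hierarchical decomposition
# into components; these values are invented for illustration.
CODEBOOK = {
    "明": [1.0, 0.0, 1.0, 0.0],
    "朋": [0.0, 1.0, 1.0, 0.0],
    "林": [0.0, 0.0, 0.0, 1.0],
}


def masked_cosine(a, b, mask):
    """Cosine similarity computed only over dimensions where mask is True."""
    pairs = [(x, y) for x, y, keep in zip(a, b, mask) if keep]
    dot = sum(x * y for x, y in pairs)
    norm_a = sqrt(sum(x * x for x, _ in pairs))
    norm_b = sqrt(sum(y * y for _, y in pairs))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recognize(embedding, damaged, reliable=None, top_k=1):
    """Rank codebook characters by similarity to a predicted embedding.

    Complete characters are compared on every dimension. Damaged
    characters are compared only on the dimensions marked reliable
    (an assumed stand-in for the paper's feature-dimension selection).
    """
    if damaged and reliable is not None:
        mask = reliable
    else:
        mask = [True] * len(embedding)
    scored = [
        (masked_cosine(embedding, code, mask), ch)
        for ch, code in CODEBOOK.items()
    ]
    scored.sort(reverse=True)
    return [ch for _, ch in scored[:top_k]]


# Complete character: all dimensions are used.
complete_result = recognize([0.9, 0.1, 0.8, 0.0], damaged=False)

# Damaged character: the first two dimensions are treated as unreliable,
# so two codebook entries remain equally plausible candidates.
damaged_candidates = recognize(
    [0.0, 0.0, 0.9, 0.0],
    damaged=True,
    reliable=[False, False, True, True],
    top_k=2,
)
```

In this toy setting the damaged query cannot distinguish between the two characters that share the surviving component, which is the kind of ambiguity the retrieval of possible characters and components is meant to narrow down.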


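Table 1 Comparison with state-of-the-art methods: recognition accuracy (%)

| Dataset | Model | Complete characters | Damaged characters | All characters |
| --- | --- | --- | --- | --- |
| Component-encoding dataset, zero-shot | CRNN[1] | 0 | 0 | 0 |
| Component-encoding dataset, zero-shot | DenseNet[34] | 0 | 0 | 0 |
| Component-encoding dataset, zero-shot | ResNet[35] | 0 | 0 | 0 |
| Component-encoding dataset, zero-shot | RCN[15] | 0 | 0 | 0 |
| Component-encoding dataset, zero-shot | ZCTRN[21] | 0.4016 | 0.2019 | 0.2520 |
| Component-encoding dataset, zero-shot | CCDF[14] | 0 | 0 | 0 |
| Component-encoding dataset, zero-shot | DynamicGrape | 99.4071 | 55.6069 | 66.5678 |
| Component-encoding dataset, multi-sample | CRNN[1] | 0.2694 | 0 | 0.1978 |
| Component-encoding dataset, multi-sample | DenseNet[34] | 76.3501 | 54.8148 | 60.5341 |
| Component-encoding dataset, multi-sample | ResNet[35] | 81.7505 | 64.9158 | 69.3867 |
| Component-encoding dataset, multi-sample | RCN[15] | 0 | 0 | 0 |
| Component-encoding dataset, multi-sample | ZCTRN[21] | 0.1898 | 0.1373 | 0.1512 |
| Component-encoding dataset, multi-sample | CCDF[14] | 0 | 0 | 0 |
| Component-encoding dataset, multi-sample | DynamicGrape | 97.5791 | 50.9091 | 63.3037 |
| CTW dataset | CRNN[1] | 66.0573 | / | 66.0573 |
| CTW dataset | DenseNet[34] | 79.3754 | / | 79.3754 |
| CTW dataset | ResNet[35] | 80.4294 | / | 80.4294 |
| CTW dataset | RCN[15] | 0 | / | 0 |
| CTW dataset | ZCTRN[21] | 0.1427 | / | 0.1427 |
| CTW dataset | CCDF[14] | 82.4854 | / | 82.4854 |
| CTW dataset | DynamicGrape | 97.2544 | / | 97.2544 |

Table 2 Ablation experiments on the component-encoding zero-shot dataset

| Split | Model | MSE | MAE | Damage-detection accuracy (%) | Recognition accuracy, complete characters (%) | Recognition accuracy, damaged characters (%) | Recognition accuracy, all characters (%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Validation set | DynamicGrape w/o damaged & BiTrans | 0.0176 | 0.1055 | / | 99.4071 | 56.0686 | 66.9139 |
| Validation set | DynamicGrape w/o damaged | 0.0178 | 0.1156 | / | 99.4071 | 55.6069 | 66.5678 |
| Validation set | DynamicGrape w/o BiTrans | 0.0197 | 0.0852 | 95.7962 | 98.4190 | 59.1029 | 68.9416 |
| Validation set | DynamicGrape | 0.0209 | 0.1042 | 74.9753 | 82.6087 | 66.1609 | 70.2770 |
| Test set | DynamicGrape w/o damaged & BiTrans | 0.0160 | 0.0962 | / | 98.8095 | 63.5891 | 72.2821 |
| Test set | DynamicGrape w/o damaged | 0.0168 | 0.1090 | / | 98.8095 | 63.1990 | 72.1841 |
| Test set | DynamicGrape w/o BiTrans | 0.0218 | 0.0934 | 93.5357 | 98.0159 | 63.9792 | 72.3800 |
| Test set | DynamicGrape | 0.0210 | 0.1053 | 75.3183 | 80.1587 | 71.0013 | 73.2615 |

Table 3 Ablation experiments on the component-encoding multi-sample dataset

| Split | Model | MSE | MAE | Damage-detection accuracy (%) | Recognition accuracy, complete characters (%) | Recognition accuracy, damaged characters (%) | Recognition accuracy, all characters (%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Validation set | DynamicGrape w/o damaged & BiTrans | 0.0155 | 0.1039 | / | 98.1378 | 51.3805 | 63.7982 |
| Validation set | DynamicGrape w/o damaged | 0.0184 | 0.1159 | / | 97.7654 | 51.4478 | 63.7488 |
| Validation set | DynamicGrape w/o BiTrans | 0.0138 | 0.0680 | 94.9060 | 97.5791 | 51.4478 | 63.6993 |
| Validation set | DynamicGrape | 0.0118 | 0.0615 | 26.5084 | 97.5791 | 50.7071 | 63.1553 |
| Test set | DynamicGrape w/o damaged & BiTrans | 0.0168 | 0.1078 | / | 98.5507 | 51.2752 | 64.0548 |
| Test set | DynamicGrape w/o damaged | 0.0196 | 0.1190 | / | 98.5507 | 51.6779 | 64.3487 |
| Test set | DynamicGrape w/o BiTrans | 0.0147 | 0.0706 | 92.7522 | 97.8261 | 50.3356 | 63.1734 |
| Test set | DynamicGrape | 0.0134 | 0.0649 | 26.9344 | 98.1884 | 51.2752 | 63.9569 |

Table 4 Ablation experiments on the CTW dataset

| Model | MSE | MAE | Damage-detection accuracy (%) | Recognition accuracy, complete characters (%) | Recognition accuracy, damaged characters (%) | Recognition accuracy, all characters (%) |
| --- | --- | --- | --- | --- | --- | --- |
| DynamicGrape w/o damaged & BiTrans | 0.0022 | 0.0249 | / | 97.2479 | / | 97.2479 |
| DynamicGrape w/o damaged | 0.0011 | 0.0141 | / | 97.3520 | / | 97.3520 |
| DynamicGrape w/o BiTrans | 0.0011 | 0.0103 | / | 97.0332 | / | 97.0332 |
| DynamicGrape | 0.0016 | 0.0133 | / | 97.2544 | / | 97.2544 |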
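The tables report accuracy separately for complete, damaged, and all characters. The source does not state how the "all characters" figure is computed; the sketch below assumes it is the sample-weighted mean of the two subset accuracies and solves for the share of complete characters that this assumption would imply. The function name is hypothetical, and the result is a consistency check on the reported numbers, not a dataset statistic given by the authors.

```python
def implied_complete_fraction(acc_complete, acc_damaged, acc_all):
    """Solve acc_all = p * acc_complete + (1 - p) * acc_damaged for p.

    p is the share of complete characters in the evaluation set under
    the ASSUMPTION that overall accuracy is a sample-weighted mean.
    """
    if acc_complete == acc_damaged:
        raise ValueError("subset accuracies are equal; p is not identifiable")
    return (acc_all - acc_damaged) / (acc_complete - acc_damaged)


# DynamicGrape rows of Table 1 (accuracies in percent).
p_zero_shot = implied_complete_fraction(99.4071, 55.6069, 66.5678)
p_multi_sample = implied_complete_fraction(97.5791, 50.9091, 63.3037)

print(f"implied share of complete characters, zero-shot: {p_zero_shot:.3f}")
print(f"implied share of complete characters, multi-sample: {p_multi_sample:.3f}")
```

Under this assumption, both settings come out at roughly one quarter complete characters, so the "all characters" column is dominated by performance on damaged characters.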

[1] SHI Baoguang, BAI Xiang, and YAO Cong. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(11): 2298–2304. doi: 10.1109/TPAMI.2016.2646371.

[2] BUŠTA M, NEUMANN L, and MATAS J. Deep TextSpotter: An end-to-end trainable scene text localization and recognition framework[C]. 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2204–2212. doi: 10.1109/ICCV.2017.242.

[3] LIU Xuebo, LIANG Ding, YAN Shi, et al. FOTS: Fast oriented text spotting with a unified network[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 5676–5685. doi: 10.1109/CVPR.2018.00595.

[4] LIU Yuliang, CHEN Hao, SHEN Chunhua, et al. ABCNet: Real-time scene text spotting with adaptive Bezier-curve network[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 9809–9818. doi: 10.1109/CVPR42600.2020.00983.

[5] SHI Baoguang, WANG Xinggang, LYU Pengyuan, et al. Robust scene text recognition with automatic rectification[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 4168–4176. doi: 10.1109/CVPR.2016.452.

[6] LI Hui, WANG Peng, SHEN Chunhua, et al. Show, attend and read: A simple and strong baseline for irregular text recognition[C]. The 33rd AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019: 8610–8617. doi: 10.1609/aaai.v33i01.33018610.

[7] QIAO Zhi, ZHOU Yu, YANG Dongbao, et al. SEED: Semantics enhanced encoder-decoder framework for scene text recognition[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 13528–13537. doi: 10.1109/CVPR42600.2020.01354.

[8] HE Tong, TIAN Zhi, HUANG Weilin, et al. An end-to-end TextSpotter with explicit alignment and attention[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 5020–5029. doi: 10.1109/CVPR.2018.00527.

[9] WANG Wenhai, XIE Enze, LI Xiang, et al. PAN++: Towards efficient and accurate end-to-end spotting of arbitrarily-shaped text[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(9): 5349–5367. doi: 10.1109/TPAMI.2021.3077555.

[10] LIAO Minghui, ZHANG Jian, WAN Zhaoyi, et al. Scene text recognition from two-dimensional perspective[C]. The 33rd AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019: 8714–8721. doi: 10.1609/aaai.v33i01.33018714.

[11] YU Deli, LI Xuan, ZHANG Chengquan, et al. Towards accurate scene text recognition with semantic reasoning networks[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 12113–12122. doi: 10.1109/CVPR42600.2020.01213.

[12] LYU Pengyuan, LIAO Minghui, YAO Cong, et al. Mask TextSpotter: An end-to-end trainable neural network for spotting text with arbitrary shapes[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 67–83. doi: 10.1007/978-3-030-01264-9_5.

[13] LIU Chang, YANG Chun, QIN Haibo, et al. Towards open-set text recognition via label-to-prototype learning[J]. Pattern Recognition, 2023, 134: 109109. doi: 10.1016/j.patcog.2022.109109.

[14] LIU Chang, YANG Chun, and YIN Xucheng. Open-set text recognition via character-context decoupling[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 4523–4532. doi: 10.1109/CVPR52688.2022.00448.

[15] LI Yunqing, ZHU Yixing, DU Jun, et al. Radical counter network for robust Chinese character recognition[C]. The 25th International Conference on Pattern Recognition, Milan, Italy, 2021: 4191–4197. doi: 10.1109/ICPR48806.2021.941291.

[16] WANG Wenchao, ZHANG Jianshu, DU Jun, et al. DenseRAN for offline handwritten Chinese character recognition[C]. 2018 16th International Conference on Frontiers in Handwriting Recognition, Niagara Falls, USA, 2018: 104–109. doi: 10.1109/ICFHR-2018.2018.00027.

[17] ZHANG Jianshu, ZHU Yixing, DU Jun, et al. Radical analysis network for zero-shot learning in printed Chinese character recognition[C]. 2018 IEEE International Conference on Multimedia and Expo, San Diego, USA, 2018: 1–6. doi: 10.1109/ICME.2018.8486456.

[18] WU Changjie, WANG Zirui, DU Jun, et al. Joint spatial and radical analysis network for distorted Chinese character recognition[C]. 2019 International Conference on Document Analysis and Recognition Workshops, Sydney, Australia, 2019: 122–127. doi: 10.1109/ICDARW.2019.40092.

[19] WANG Tianwei, XIE Zecheng, LI Zhe, et al. Radical aggregation network for few-shot offline handwritten Chinese character recognition[J]. Pattern Recognition Letters, 2019, 125: 821–827. doi: 10.1016/j.patrec.2019.08.005.

[20] CAO Zhong, LU Jiang, CUI Sen, et al. Zero-shot handwritten Chinese character recognition with hierarchical decomposition embedding[J]. Pattern Recognition, 2020, 107: 107488. doi: 10.1016/j.patcog.2020.107488.

[21] HUANG Yuhao, JIN Lianwen, and PENG Dezhi. Zero-shot Chinese text recognition via matching class embedding[C]. The 16th International Conference on Document Analysis and Recognition, Lausanne, Switzerland, 2021: 127–141. doi: 10.1007/978-3-030-86334-0_9.

[22] YANG Chen, WANG Qing, DU Jun, et al. A transformer-based radical analysis network for Chinese character recognition[C]. The 25th International Conference on Pattern Recognition, Milan, Italy, 2021: 3714–3719. doi: 10.1109/ICPR48806.2021.941243.

[23] DIAO Xiaolei, SHI Daqian, TANG Hao, et al. RZCR: Zero-shot Character Recognition via Radical-based Reasoning[C]. The Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 2023.

[24] ZENG Jinshan, XU Ruiying, WU Yu, et al. STAR: Zero-shot Chinese character recognition with stroke- and radical-level decompositions[EB/OL]. https://arxiv.org/abs/2210.08490, 2022.

[25] GAN Ji, WANG Weiqiang, and LU Ke. Characters as graphs: Recognizing online handwritten Chinese characters via spatial graph convolutional network[EB/OL]. https://arxiv.org/abs/2004.09412, 2020.

[26] GAN Ji, WANG Weiqiang, and LU Ke. A new perspective: Recognizing online handwritten Chinese characters via 1-dimensional CNN[J]. Information Sciences, 2019, 478: 375–390. doi: 10.1016/j.ins.2018.11.035.

[27] CHEN Jingye, LI Bin, and XUE Xiangyang. Zero-shot Chinese character recognition with stroke-level decomposition[EB/OL]. https://arxiv.org/abs/2106.11613, 2021.

[28] YU Haiyang, CHEN Jingye, LI Bin, et al. Chinese character recognition with radical-structured stroke trees[EB/OL]. https://arxiv.org/abs/2211.13518, 2022.

[29] CHEN Zongze, YANG Wenxia, and LI Xin. Stroke-based autoencoders: Self-supervised learners for efficient zero-shot Chinese character recognition[J]. Applied Sciences, 2023, 13(3): 1750. doi: 10.3390/app13031750.

[30] YANG Chun, LIU Chang, FANG Zhiyu, et al. Open set text recognition technology[J]. Journal of Image and Graphics, 2023, 28(6): 1767–1791. doi: 10.11834/jig.230018.

[31] HAMILTON W L, YING Z, and LESKOVEC J. Inductive representation learning on large graphs[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2017: 1025–1035.

[32] YOU Jiaxuan, MA Xiaobai, DING D Y, et al. Handling missing data with graph representation learning[C]. The 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 1601.

[33] YUAN Tailing, ZHU Zhe, XU Kun, et al. A large Chinese text dataset in the wild[J]. Journal of Computer Science and Technology, 2019, 34(3): 509–521. doi: 10.1007/s11390-019-1923-y.

[34] HUANG Gao, LIU Zhuang, VAN DER MAATEN L, et al. Densely connected convolutional networks[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4700–4708. doi: 10.1109/CVPR.2017.243.

[35] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.
