A Continual Semantic Segmentation Method Based on Gating Mechanism and Replay Strategy


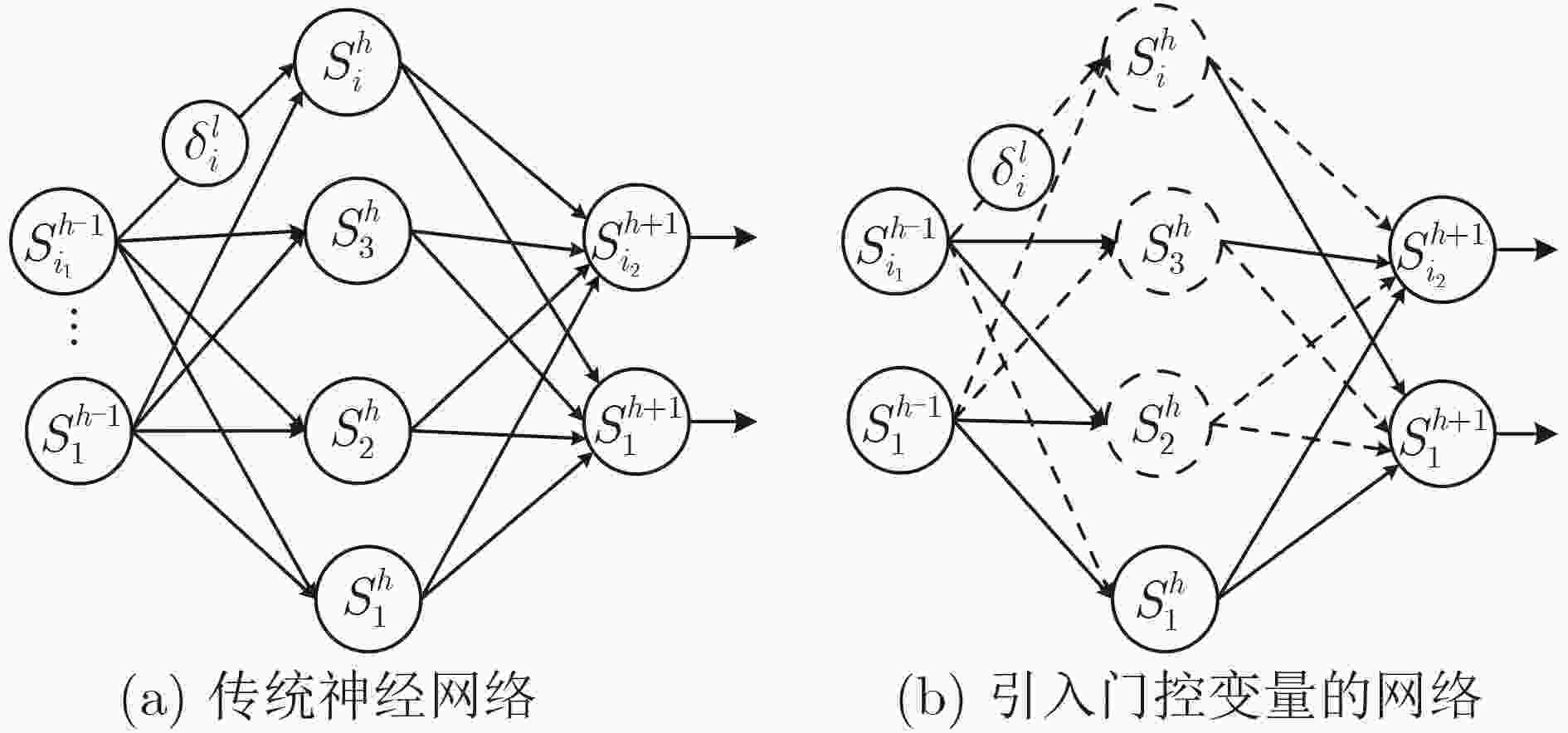

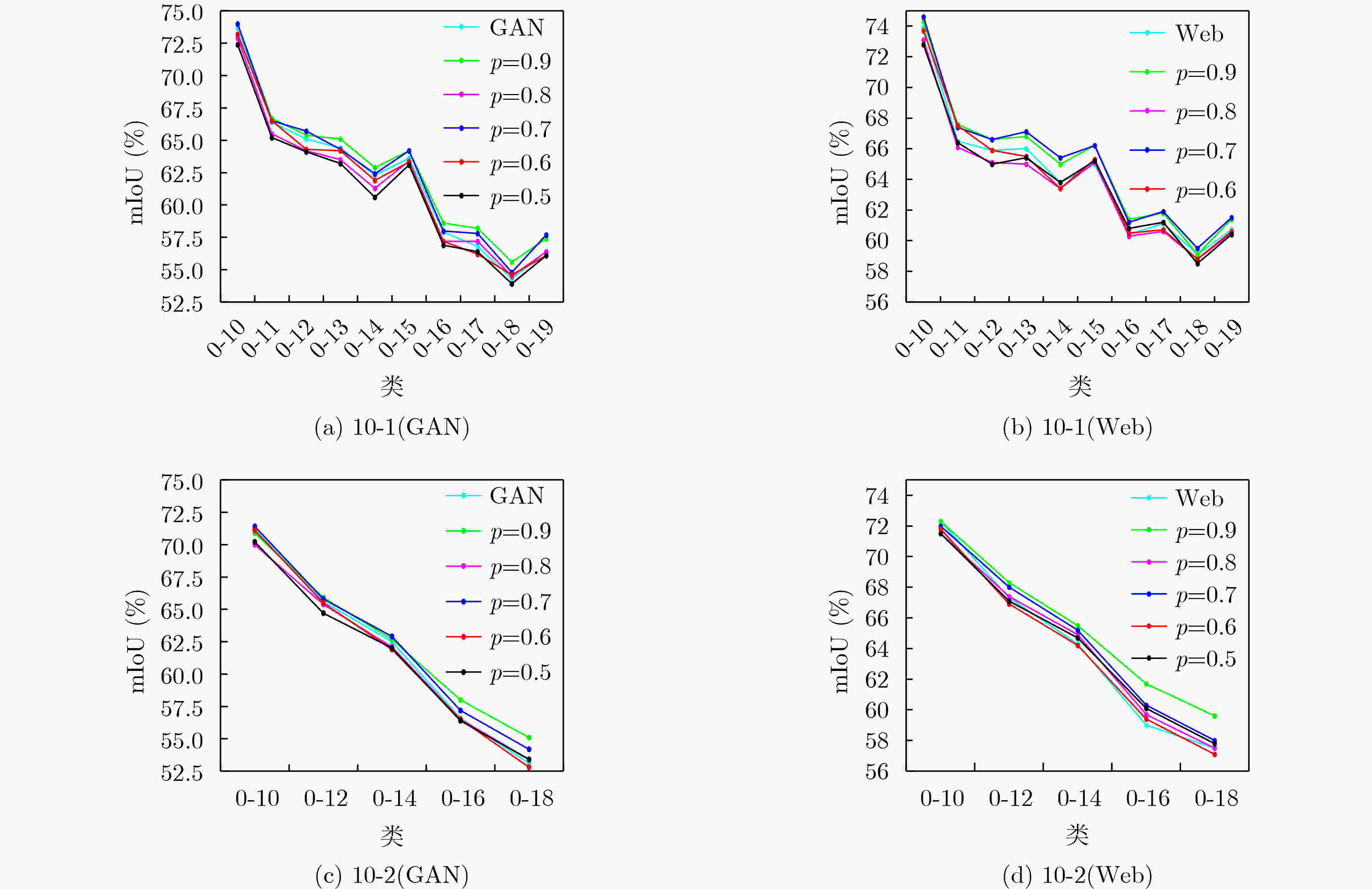

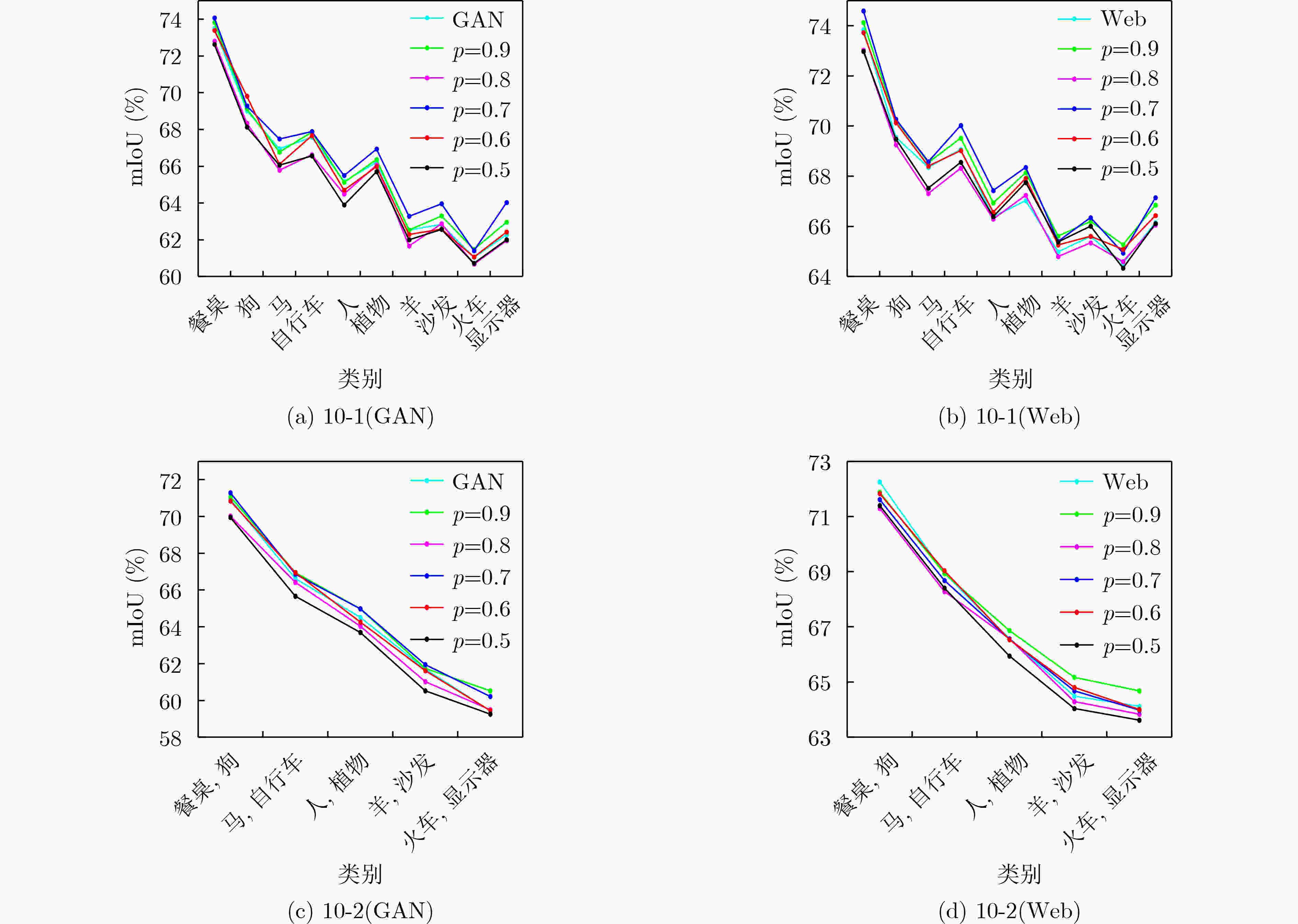

Abstract: When a semantic segmentation model based on deep neural networks updates its knowledge incrementally, interference between the parameters of new and old tasks, combined with background drift, aggravates catastrophic forgetting. In addition, old data often cannot be stored because of privacy, security, and similar constraints, which causes the model to fail. To address this, a continual semantic segmentation method based on a gating mechanism and a replay strategy is proposed. First, without storing old data, images produced by a Generative Adversarial Network (GAN) or collected by web crawling serve as the data source; a label evaluation module addresses the lack of supervision, and a background self-drawing module addresses background drift. Next, a replay strategy is used to mitigate catastrophic forgetting. Finally, gating variables are used as a form of regularization to increase model sparsity, and the special case of combining gating variables with the replay strategy of continual learning is studied. Evaluation on the Pascal VOC2012 dataset shows that, in the complex scenario 10-2, old-task performance after all incremental steps exceeds the baseline by 3.8% under the GAN setting and 3.7% under the Web setting; in scenario 10-1, the corresponding improvements are 2.7% and 1.3%.
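The gating idea in the abstract can be illustrated with a minimal NumPy sketch of an element-wise 0-1 Bernoulli gate. This is not the authors' implementation: the function name `bernoulli_gate`, the reading of `p` as the probability that a gate equals 1 (the unit is kept), and the division by `p` (the usual inverted-dropout rescaling) are all assumptions made for illustration.

```python
import numpy as np


def bernoulli_gate(features, p, rng, training=True):
    """Apply an element-wise 0-1 Bernoulli gate to a feature array.

    Assumption: p is the probability that a gate equals 1 (unit kept).
    """
    if not training or p >= 1.0:
        # At evaluation time (or with p = 1) the gate is the identity.
        return features
    gates = rng.binomial(1, p, size=features.shape)  # g ~ Bernoulli(p)
    # Assumed rescaling by 1/p keeps the expected activation unchanged.
    return features * gates / p


rng = np.random.default_rng(0)
x = np.ones((4, 1000))
y = bernoulli_gate(x, p=0.8, rng=rng)
print("fraction of gated-off units:", float((y == 0).mean()))
print("mean activation after gating:", float(y.mean()))
```

Roughly a fraction 1 - p of the activations are zeroed on each forward pass, which is the sense in which the gate acts as a sparsity-inducing regularizer in this sketch.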

Key words:

- Continual learning

- Semantic segmentation

- Replay strategies

- Gating variables

Table 1: Chinese and English names of the 20 classes

| Chinese name | English name |
| --- | --- |
| 飞机 | airplane |
| 自行车 | bicycle |
| 鸟 | bird |
| 船 | boat |
| 瓶子 | bottle |
| 巴士汽车 | bus |
| 汽车 | car |
| 猫 | cat |
| 椅子 | chair |
| 牛 | cow |
| 餐桌 | dining table |
| 狗 | dog |
| 马 | horse |
| 摩托车 | motorbike |
| 人 | person |
| 盆栽 | potted plant |
| 羊 | sheep |
| 沙发 | sofa |
| 火车 | train |
| 监视器 | monitor |

Table 2: Effect of the gated 0-1 Bernoulli variable on model stability-plasticity

| Scenario | Classes | GAN | GAN, p=0.9 | GAN, p=0.8 | GAN, p=0.7 | GAN, p=0.6 | GAN, p=0.5 | Web | Web, p=0.9 | Web, p=0.8 | Web, p=0.7 | Web, p=0.6 | Web, p=0.5 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 19-1 | 0-19 | 68.2±0.8 | 67.9±0.3 | 67.0±0.8 | 68.2±0.4 | 67.6±0.3 | 68.4±0.3 | 67.7±1.3 | 67.2±0.7 | 67.1±1.0 | 68.3±0.7 | 67.7±0.3 | 68.4±0.3 |
| | 20 | 50.9±1.1 | 51.3±1.3 | 51.2±1.1 | 52.2±1.1 | 50.6±1.7 | 50.1±2.3 | 51.0±1.5 | 51.9±1.4 | 53.0±0.9 | 51.7±1.6 | 52.0±2.7 | 52.9±1.6 |
| 15-5 | 0-15 | 68.9±0.3 | 69.1±0.3 | 69.1±0.5 | 69.0±0.4 | 69.1±0.5 | 69.9±0.2 | 69.4±1.7 | 70.0±0.4 | 69.9±0.9 | 69.8±0.3 | 69.9±0.7 | 70.7±0.3 |
| | 16-20 | 51.5±0.5 | 51.3±0.6 | 51.3±0.4 | 51.6±0.6 | 51.5±0.8 | 51.8±0.5 | 54.2±0.4 | 54.2±0.6 | 54.6±0.5 | 54.3±0.3 | 55.0±0.5 | 55.0±0.4 |
| 10-10 | 0-10 | 66.8±0.5 | 67.0±0.2 | 66.4±0.3 | 67.3±0.3 | 66.9±0.5 | 66.6±0.7 | 68.4±0.3 | 68.6±0.2 | 67.6±0.6 | 68.4±0.2 | 68.1±0.1 | 68.0±0.7 |
| | 11-20 | 58.1±0.6 | 59.2±0.5 | 58.4±0.6 | 58.6±0.4 | 58.3±0.4 | 57.7±0.4 | 58.1±0.5 | 59.6±0.6 | 58.1±0.6 | 58.8±0.3 | 58.1±0.4 | 57.5±0.4 |
| 10-5 | 0-10 | 68.7±0.6 | 68.4±0.4 | 68.0±0.2 | 68.8±0.4 | 68.6±0.2 | 68.1±0.9 | 69.5±0.5 | 69.9±0.2 | 69.0±0.4 | 69.9±0.3 | 69.5±0.2 | 68.7±0.6 |
| | 11-15 | 63.1±0.3 | 63.8±1.2 | 63.0±0.7 | 63.3±0.7 | 63.2±0.9 | 63.0±0.5 | 63.7±0.8 | 65.0±0.7 | 63.9±0.4 | 64.3±0.3 | 63.9±1.1 | 63.7±1.1 |
| | 0-15 | 61.3±0.8 | 61.7±0.8 | 61.5±0.5 | 61.6±0.7 | 62.0±0.5 | 61.6±0.4 | 63.1±0.5 | 62.5±1.3 | 64.0±0.6 | 62.2±0.8 | 63.9±0.6 | 63.9±1.0 |
| | 16-20 | 49.3±0.5 | 50.0±1.1 | 49.2±0.6 | 48.4±0.2 | 48.7±1.1 | 49.0±0.1 | 53.0±0.4 | 54.1±0.8 | 53.1±0.5 | 53.0±0.3 | 53.4±0.9 | 53.2±0.3 |
| 10-2 | 0-10 | 71.2±0.7 | 70.9±0.8 | 70.0±0.3 | 71.4±0.6 | 71.1±0.4 | 70.2±1.0 | 72.3±0.4 | 72.3±0.4 | 71.5±0.6 | 72.0±1.1 | 71.8±0.4 | 71.5±1.0 |
| | 11-12 | 57.0±1.0 | 56.2±1.5 | 56.2±1.1 | 57.1±0.2 | 57.7±1.2 | 56.5±1.0 | 60.5±0.9 | 60.1±1.7 | 60.8±0.7 | 59.8±1.2 | 60.7±0.4 | 60.8±0.5 |
| | 0-12 | 65.6±1.3 | 65.9±0.7 | 65.4±0.6 | 65.8±0.6 | 65.5±0.3 | 64.7±0.5 | 67.3±0.9 | 68.3±1.1 | 67.4±0.2 | 68.0±1.0 | 66.9±0.6 | 67.1±0.6 |
| | 13-14 | 53.3±1.7 | 53.8±0.8 | 53.5±1.1 | 53.3±0.7 | 53.1±0.4 | 52.9±1.5 | 60.0±1.6 | 60.6±1.3 | 61.0±0.3 | 61.0±1.4 | 60.9±0.4 | 60.6±1.3 |
| | 0-14 | 62.5±1.3 | 62.7±0.6 | 62.1±0.6 | 62.9±0.6 | 61.9±0.5 | 62.0±0.9 | 64.3±1.6 | 65.5±0.6 | 64.9±0.6 | 65.2±0.3 | 64.2±1.2 | 64.7±0.7 |
| | 15-16 | 51.4±1.8 | 53.6±1.5 | 53.4±1.6 | 53.1±1.2 | 53.4±2.7 | 53.2±1.4 | 52.8±1.6 | 54.7±1.8 | 54.9±1.2 | 54.2±0.9 | 53.9±1.7 | 53.6±1.3 |
| | 0-16 | 56.6±0.9 | 58.0±1.1 | 56.5±0.8 | 57.2±0.3 | 56.5±0.7 | 56.4±0.6 | 59.0±1.1 | 61.7±0.9 | 59.7±0.2 | 60.3±0.3 | 59.4±0.8 | 60.1±0.9 |
| | 17-18 | 36.8±1.0 | 36.0±0.8 | 35.2±0.4 | 35.1±1.0 | 34.2±1.3 | 34.5±1.5 | 41.1±0.7 | 41.8±1.2 | 41.8±0.7 | 41.2±2.1 | 40.6±1.1 | 42.1±1.1 |
| | 0-18 | 53.1±0.8 | 55.1±1.2 | 53.4±0.8 | 54.2±0.2 | 52.8±0.3 | 53.4±0.6 | 57.5±0.7 | 59.6±0.7 | 57.5±0.6 | 58.0±0.4 | 57.1±0.7 | 57.8±1.1 |
| | 19-20 | 55.1±1.1 | 54.1±2.0 | 54.8±0.7 | 55.1±1.0 | 53.9±1.1 | 54.2±0.2 | 60.2±1.1 | 60.0±1.2 | 60.2±1.0 | 59.7±0.7 | 60.5±0.7 | 60.1±1.0 |
| 10-1 | 0-10 | 73.7±0.6 | 74.0±0.7 | 72.9±0.5 | 74.0±0.7 | 73.2±0.8 | 72.4±0.6 | 73.9±0.3 | 74.3±0.4 | 73.1±0.7 | 74.6±0.2 | 73.7±0.8 | 72.8±0.7 |
| | 11 | 31.8±1.3 | 33.8±2.9 | 32.1±2.4 | 33.5±2.1 | 35.9±3.6 | 35.0±3.2 | 31.5±3.4 | 34.2±3.9 | 34.0±1.2 | 32.4±1.5 | 38.2±1.7 | 34.4±3.0 |
| | 0-11 | 66.4±0.4 | 66.7±0.8 | 65.5±0.2 | 66.5±0.7 | 66.5±1.2 | 65.2±0.6 | 66.5±0.3 | 67.6±0.8 | 66.1±0.3 | 67.4±0.5 | 67.5±0.9 | 66.4±1.1 |
| | 12 | 60.1±2.6 | 59.1±3.5 | 60.5±1.0 | 57.2±2.6 | 60.1±2.4 | 57.5±0.4 | 63.8±1.8 | 65.2±2.7 | 65.2±3.1 | 62.2±1.2 | 66.0±1.7 | 65.8±2.9 |
| | 0-12 | 65.1±0.7 | 65.4±0.9 | 64.2±0.4 | 65.7±0.5 | 64.3±1.0 | 64.1±0.6 | 65.9±0.2 | 66.6±1.3 | 65.1±0.6 | 66.6±0.6 | 65.9±1.0 | 65.0±1.0 |
| | 13 | 38.8±1.5 | 39.3±1.9 | 39.4±1.5 | 39.5±0.6 | 39.0±1.3 | 40.2±2.2 | 45.5±1.6 | 45.2±3.2 | 43.3±2.3 | 44.9±1.9 | 45.4±1.6 | 47.6±1.1 |
| | 0-13 | 64.4±0.9 | 65.1±0.2 | 63.5±0.8 | 64.3±0.8 | 64.2±0.4 | 63.2±0.3 | 66.0±0.7 | 66.8±1.2 | 65.0±0.6 | 67.1±0.8 | 65.5±1.2 | 65.4±0.7 |
| | 14 | 57.2±1.3 | 58.2±1.4 | 58.9±0.9 | 59.4±1.5 | 57.9±0.4 | 56.4±2.2 | 62.8±2.7 | 64.7±1.5 | 64.6±0.3 | 65.6±1.9 | 64.7±2.9 | 62.3±2.5 |
| | 0-14 | 62.3±0.9 | 62.9±0.4 | 61.3±0.6 | 62.4±0.8 | 61.9±0.9 | 60.6±0.8 | 63.8±0.5 | 65.0±0.9 | 63.4±0.3 | 65.4±0.8 | 63.4±1.2 | 63.8±0.8 |
| | 15 | 67.3±0.5 | 68.7±1.0 | 68.9±0.3 | 69.1±0.6 | 68.2±0.6 | 68.5±0.6 | 68.4±0.5 | 68.4±0.3 | 69.2±0.1 | 68.3±0.6 | 69.1±0.7 | 69.4±0.5 |
| | 0-15 | 63.6±0.5 | 64.2±0.4 | 63.4±0.6 | 64.2±0.6 | 63.3±1.3 | 63.1±0.4 | 65.0±0.5 | 66.2±0.6 | 65.1±0.4 | 66.2±0.6 | 65.3±0.9 | 65.2±0.4 |
| | 16 | 26.5±4.0 | 28.2±1.2 | 27.7±3.2 | 26.4±1.6 | 26.2±1.3 | 25.6±1.9 | 37.1±1.8 | 35.6±1.6 | 37.2±2.7 | 36.4±1.6 | 36.5±1.7 | 38.2±1.6 |
| | 0-16 | 57.9±0.4 | 58.6±0.5 | 57.2±0.6 | 58.0±0.6 | 57.2±0.5 | 56.9±0.7 | 60.4±0.2 | 61.4±1.4 | 60.3±0.2 | 61.2±1.1 | 60.5±1.5 | 60.8±0.6 |
| | 17 | 33.6±1.3 | 34.0±0.5 | 32.4±1.9 | 33.0±0.8 | 31.1±1.4 | 33.0±0.3 | 42.3±2.9 | 44.7±2.2 | 43.6±2.7 | 42.2±1.9 | 43.2±2.0 | 45.6±1.4 |
| | 0-17 | 56.8±0.7 | 58.2±0.2 | 57.2±1.1 | 57.8±0.6 | 56.2±0.6 | 56.4±0.7 | 61.1±0.4 | 61.8±0.6 | 60.6±0.9 | 61.9±0.7 | 60.7±0.8 | 61.2±0.4 |
| | 18 | 24.1±0.9 | 23.7±0.9 | 25.3±0.6 | 24.7±1.7 | 23.7±1.7 | 23.9±1.2 | 23.9±0.9 | 24.4±0.5 | 25.2±0.4 | 24.2±0.6 | 25.4±2.0 | 24.8±0.6 |
| | 0-18 | 54.3±0.8 | 55.6±0.5 | 54.5±0.5 | 54.8±1.0 | 54.6±0.5 | 53.9±0.4 | 59.1±0.5 | 59.1±0.6 | 58.8±1.0 | 59.5±1.1 | 58.8±0.7 | 58.5±0.6 |
| | 19 | 49.4±1.8 | 51.4±2.0 | 52.4±3.2 | 50.5±1.5 | 50.6±0.4 | 50.7±0.6 | 57.1±1.0 | 59.7±1.6 | 60.0±1.9 | 57.3±1.5 | 57.6±3.1 | 57.1±1.9 |
| | 0-19 | 56.4±0.9 | 57.4±0.4 | 56.4±0.3 | 57.7±0.3 | 56.1±0.3 | 56.1±0.3 | 60.7±0.4 | 61.4±0.7 | 60.5±1.1 | 61.5±0.5 | 60.6±0.7 | 60.4±0.5 |
| | 20 | 45.1±2.2 | 42.5±2.3 | 43.9±1.1 | 43.9±2.6 | 44.0±2.7 | 43.9±1.6 | 51.4±1.5 | 50.6±1.0 | 49.5±2.0 | 50.2±1.6 | 48.4±2.2 | 48.8±1.4 |
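Entries in Table 2 are reported as mean±std, so comparing settings by hand is error-prone. The following is a small sketch of how one row could be parsed and ranked by mean. The helper names `parse_entry` and `best_setting` are hypothetical, and the example row is the Web half of scenario 10-1, classes 0-10, copied from the table; the first label stands for the column headed only "Web", with no p listed.

```python
def parse_entry(entry):
    """Split a 'mean±std' string into two floats (std defaults to 0.0 if absent)."""
    mean, _, std = entry.partition("±")
    return float(mean), (float(std) if std else 0.0)


def best_setting(labels, entries):
    """Return the label and mean of the entry with the highest mean."""
    means = [parse_entry(e)[0] for e in entries]
    i = max(range(len(means)), key=means.__getitem__)
    return labels[i], means[i]


# Column labels for one half (GAN or Web) of a Table 2 row.
labels = ["no p listed", "p=0.9", "p=0.8", "p=0.7", "p=0.6", "p=0.5"]
# Scenario 10-1, classes 0-10, Web columns.
row = ["73.9±0.3", "74.3±0.4", "73.1±0.7", "74.6±0.2", "73.7±0.8", "72.8±0.7"]

print(best_setting(labels, row))  # ('p=0.7', 74.6)
```

Ranking by mean alone is only a rough guide: several neighbouring entries in this row differ by less than their reported standard deviations.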

