Review of Deep Gradient Inversion Attacks and Defenses in Federated Learning


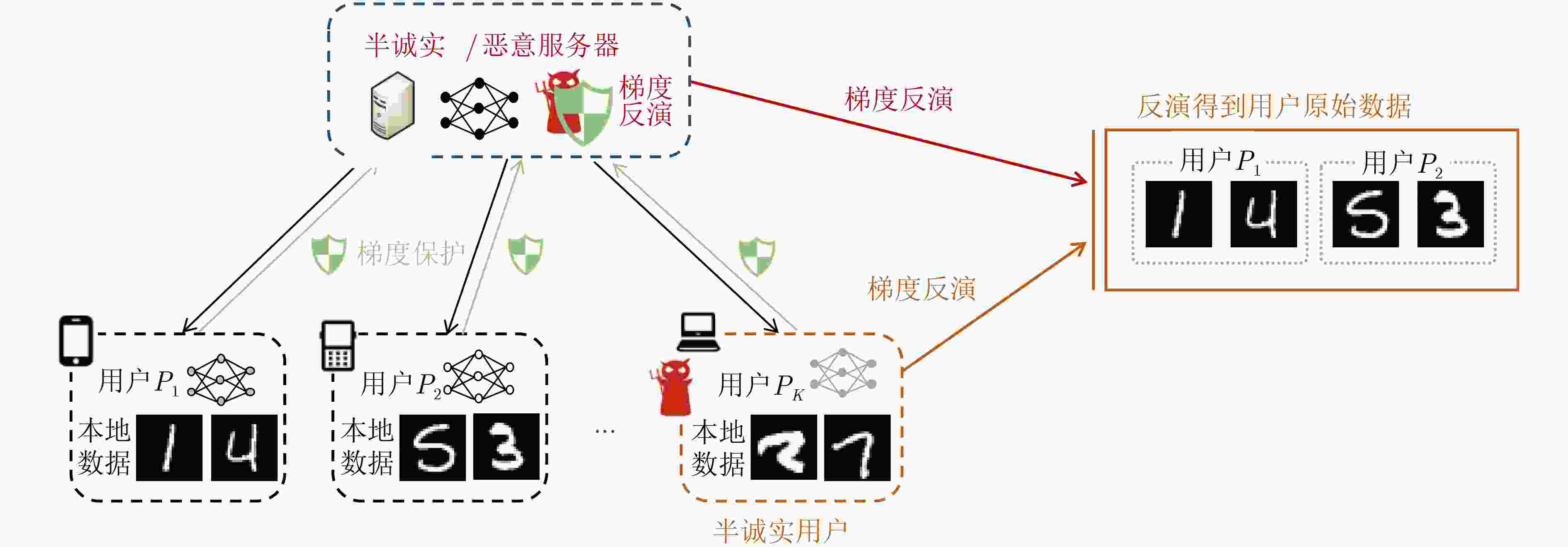

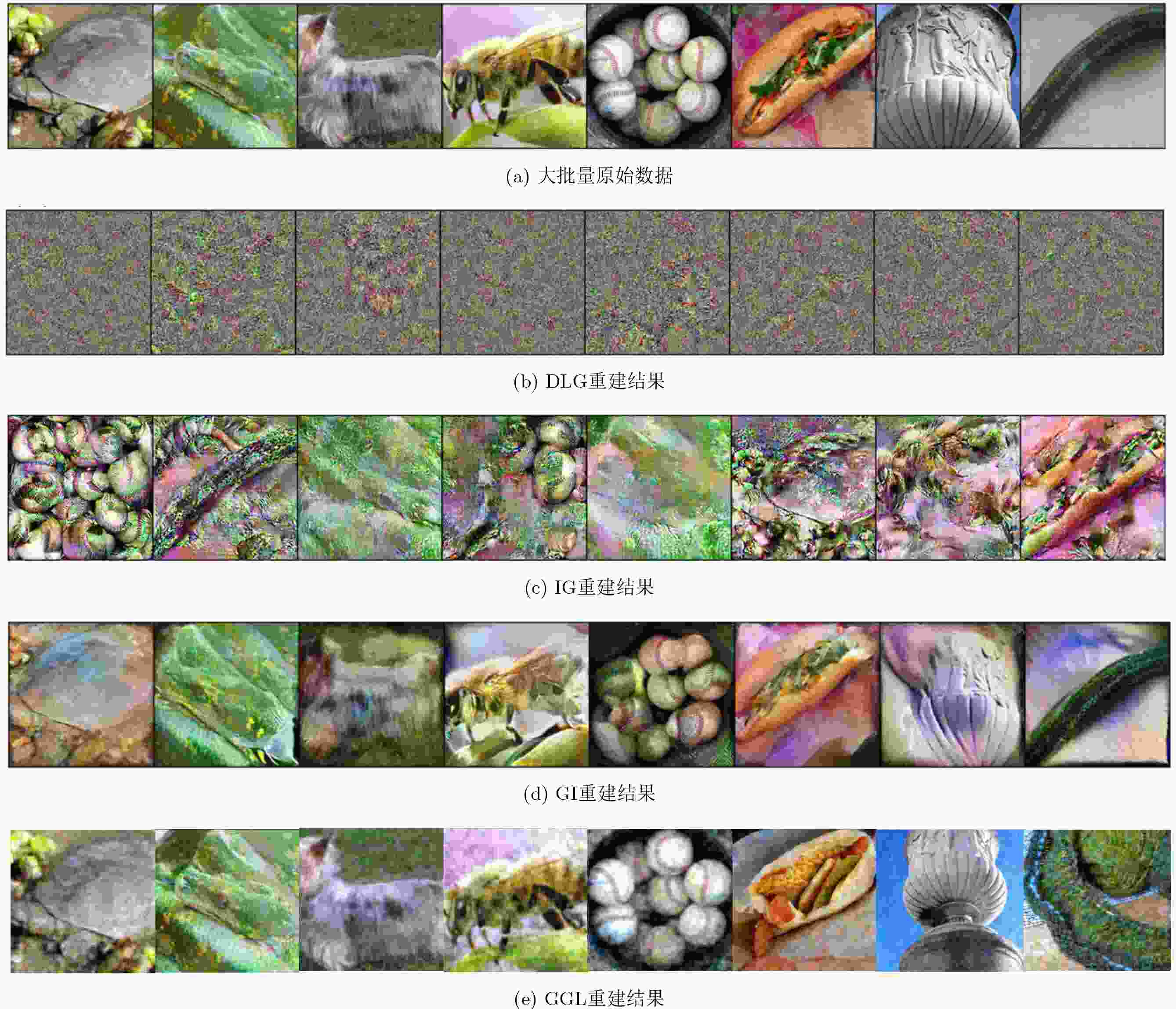

Abstract: Federated learning is a distributed machine learning approach that preserves data ownership while releasing data usage rights, and it breaks down the data silos that hinder big-data modeling. However, exchanging only gradients rather than training data does not guarantee the confidentiality of users' training data. Recent deep gradient inversion attacks show that an adversary can reconstruct private training data from shared gradients, which poses a serious threat to the privacy of federated learning. As gradient inversion techniques evolve, adversaries are increasingly able to recover large batches of original data from deep networks, and they even challenge Privacy-Preserving Federated Learning (PPFL) with encrypted gradients. Effective targeted defenses mainly rely on perturbation transformations that obscure gradients, inputs, or features to conceal sensitive information. This paper first highlights the gradient inversion vulnerability of PPFL and presents the gradient inversion threat model. It then reviews deep gradient inversion attacks in detail from three perspectives: attack paradigm, attack capability, and attack target. Perturbation-based defenses are divided into three categories according to the perturbed object (gradient perturbation, input perturbation, and feature perturbation), and representative works in each category are analyzed. Finally, future research directions are discussed.
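To make the leakage concrete, the sketch below illustrates the simplest analytic case, the one the attack summary table associates with Phong et al. [21] and iDLG [57]: a single sample passing through one fully connected layer with a bias, trained with softmax cross-entropy. Under those assumptions the bias gradient equals p - onehot(y), so its only negative entry reveals the label, and each row of the weight gradient is that scalar times the input, so one division recovers the input. The layer sizes, function names, and random data are illustrative assumptions and are not taken from the reviewed papers.

```python
import math
import random


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def client_gradients(W, b, x, label):
    """Gradients a client would share for loss = CE(softmax(Wx + b), label)."""
    logits = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    p = softmax(logits)
    # dL/dlogits = p - onehot(label); this is also dL/db.
    grad_b = [pi - (1.0 if i == label else 0.0) for i, pi in enumerate(p)]
    # dL/dW[i][j] = dL/dlogits[i] * x[j] (an outer product).
    grad_W = [[gi * xj for xj in x] for gi in grad_b]
    return grad_W, grad_b


def invert_single_sample(grad_W, grad_b):
    """Recover (label, input) from the shared gradients of one sample."""
    # Only the true class has a negative bias gradient (p_y - 1 < 0).
    label = min(range(len(grad_b)), key=lambda i: grad_b[i])
    # Row `label` of grad_W is grad_b[label] * x, so divide it out.
    x = [g / grad_b[label] for g in grad_W[label]]
    return label, x


random.seed(0)
x_private = [random.random() for _ in range(8)]  # hypothetical private input
W = [[random.gauss(0.0, 0.1) for _ in range(8)] for _ in range(4)]
b = [0.0] * 4
grad_W, grad_b = client_gradients(W, b, x_private, label=2)

label_rec, x_rec = invert_single_sample(grad_W, grad_b)
max_err = max(abs(a - c) for a, c in zip(x_private, x_rec))
print(label_rec, max_err)
```

The division only works because exactly one sample contributed to the gradient. With a batch, each row becomes a sum over samples, which is consistent with the table marking such analytic attacks as single-sample and dependent on complete gradients.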


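Table 1. Summary of gradient inversion attacks

| Attack | Paradigm | Capability | Target | Data type | Assumptions | Characteristics | Limitations |
| --- | --- | --- | --- | --- | --- | --- | --- |
| DLG[20] | Iterative optimization | Passive | Data reconstruction + label recovery | Image, label | - | Uses Euclidean distance as the gradient-matching objective; optimizes data and labels jointly. | Only works for small batches of low-resolution images; reconstruction quality is sensitive to the weight initialization method and the training stage. |
| SAPAG[31] | Iterative optimization | Passive | Data reconstruction | Image | - | Uses a gradient-matching objective based on a weighted Gaussian kernel. | Only works for small batches of low-resolution images. |
| CPL[32] | Iterative optimization | Passive | Data reconstruction | Image | - | Introduces a label regularization term. | Only works for small batches of low-resolution images. |
| IG[33] | Iterative optimization | Passive | Data reconstruction | Image | Labels known. | Gradient-matching objective based on cosine similarity with a total variation regularizer; can reconstruct high-resolution images. | Only works for small batches of images. |
| GI[34] | Analytic + iterative optimization | Passive | Label recovery + data reconstruction | Label, image | Non-negative activation functions; no duplicate labels within a training batch; BN statistics known. | Introduces fidelity regularization and group consistency regularization. | Cannot recover the exact number of samples sharing a label; the assumption of known BN statistics is too strong. |
| GIAS[36] | Iterative optimization | Passive | Data reconstruction | Image | Pretrained GAN model; labels known. | Optimizes first in the GAN latent space and then in the parameter space. | Reconstructed images are distorted. |
| GGL[37] | Iterative optimization | Passive | Data reconstruction | Image | Pretrained GAN model; labels known. | Evaluates gradient inversion under multiple defenses. | Reconstructed images are distorted. |
| HCGLA[39] | Iterative optimization | Passive | Data reconstruction | Image | Pretrained GAN model and denoising model. | Successfully inverts gradients sparsified to 0.1%. | Single sample only. |
| TAG[41] | Iterative optimization | Passive | Data reconstruction | Text | - | Gradient-matching objective combining the L2 and L1 norms; iterative optimization in the embedding space. | Single text only. |
| LAMP[42] | Iterative optimization | Passive | Data reconstruction | Text | Labels known; auxiliary language model (e.g., GPT-2). | Alternates continuous optimization in the embedding space with discrete optimization over text order. | Only works for small batches of text. |
| Li et al.[43] | Iterative optimization | Passive | Data reconstruction | Speech | - | First two-stage speech waveform reconstruction method. | Single sample only. |
| TabLeak[44] | Iterative optimization | Passive | Data reconstruction | Tabular | - | First tabular data reconstruction method. | - |
| Phong et al.[21] | Analytic | Passive | Data reconstruction | Image | Targets fully connected networks. | Exploits the linear relationship between the input and the gradients of a fully connected network. | Single sample only; depends on gradient integrity. |
| R-GAP[45], COPA[46] | Analytic | Passive | Data reconstruction | Image | Targets fully connected and convolutional networks. | Reconstructs the input by solving systems of gradient-constraint and weight-constraint equations. | Single sample only; depends on gradient integrity. |
| CPA[47] | Analytic + iterative optimization | Passive | Data reconstruction | Image | Targets fully connected and convolutional networks. | Models gradient inversion of a fully connected network as a blind source separation problem. | Batch size must be smaller than the number of neurons in the first fully connected layer; depends on gradient integrity. |
| FILM[48] | Analytic | Passive | Data reconstruction | Text | - | Recovers all words from the gradients, then reconstructs sentences with beam search and reordering. | Depends on gradient integrity. |
| Lam et al.[49] | Analytic | Active | Aggregated-gradient disaggregation | - | Fixed model weights. | Formulates disaggregation of the aggregated gradient as a constrained binary matrix factorization problem. | Depends on gradient integrity; easily detected by users. |
| Boenisch et al.[50] | Analytic | Active | Data reconstruction | Image | Tampers with model weights; targets ReLU-activated fully connected networks. | Exposes more exclusive neurons through malicious weights. | Depends on gradient integrity; limited gain in attack success rate. |
| Wen et al.[51] | Analytic | Active | Averaged-gradient degradation | - | Tampers with the weights of the model's final classification layer. | Uses a fishing strategy to make the averaged gradient degrade to the gradient produced by a single target sample. | Recovers only one sample per attack; easily detected by users. |
| Pasquini et al.[52] | Analytic | Active | Aggregated-gradient degradation | - | Tampers with model weights; ReLU activation. | Uses malicious weights to make the aggregated gradient degrade to the gradient produced by a single target user. | Easily detected by users. |
| Fowl et al.[53] | Analytic | Active | Data reconstruction | Image | Tampers with the model architecture (inserts an imprint module) and weights. | Separates linear-layer gradients through malicious model weights. | Depends on gradient integrity; easily detected by users. |
| Zhao et al.[54] | Analytic | Active | Data reconstruction | Image | Tampers with the model architecture and weights. | Inserts a convolutional layer in front of the imprint module to separate different users' gradients in the imprint module. | Depends on gradient integrity; easily detected by users. |
| NEA[55] | Analytic + iterative optimization | Active | Data reconstruction | Image | Tampers with the user's data preprocessing algorithm; ReLU activation. | Builds a system of linear equations relating the input and the gradients of a fully connected network. | Depends on gradient integrity; easily detected by users. |
| Fowl et al.[56] | Analytic | Active | Data reconstruction | Text | Tampers with model weights. | Disables attention layers through malicious model weights and separates linear-layer gradients. | Depends on gradient integrity; easily detected by users. |
| iDLG[57] | Analytic | Passive | Label recovery | Label | - | Recovers the sample label from the gradient of the last layer. | Single sample only. |
| RLG[58] | Analytic | Passive | Label recovery | Label | Batch size smaller than the number of output classes. | Casts label recovery as a matrix factorization and linear programming problem. | Cannot recover the number of samples sharing a label; label recovery accuracy drops late in training. |
| iLRG[59] | Analytic | Passive | Label recovery | Label | - | Raises label recovery granularity to instance counts; can recover the exact number of samples sharing a label. | Recovery accuracy drops late in training. |

Table 2. Summary of gradient inversion defenses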

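| Defense | Perturbed object | Characteristics | Change in model accuracy (dataset) | Limitations |
| --- | --- | --- | --- | --- |
| Gradient noise[20] | Gradient | Directly injects fixed-variance Gaussian or Laplacian noise into the gradients. | -30.5% (CIFAR100) | Hard to balance privacy against model accuracy. |
| Fed-CDP[61] | Gradient | Per-sample, client-side differential privacy. | -6.2% (MNIST) | |
| DP-dynS[62] | Gradient | Differential privacy with dynamic privacy parameters. | -6.6% (CIFAR10) | |
| LRP[63] | Gradient | Injects targeted noise into the gradients according to how sensitive the gradients are to changes in the input. | -1.58% (CIFAR10) | |
| 8-bit gradient quantization[20] | Gradient | Quantizes high-precision gradients to low precision. | -22.6% (CIFAR100) | |
| InstaHide[64] | Input | Applies instance encoding to training images. | -5.8% (CIFAR100) | No longer withstands increasingly capable gradient inversion attacks. |
| ATS[65] | Input | Searches for data augmentation policies that give strong privacy with little impact on model accuracy. | +1.04% (CIFAR100) | |
| Soteria[40] | Feature | Perturbs intermediate features of the network. | -0.5% (CIFAR100) | |
| TextHide[68] | Feature | Applies instance encoding to the intermediate features of training text in the network. | -1.9% (GLUE) | |
| PRECODE[69] | Feature | Inserts a privacy-enhancing module into the network. | -2.2% (CIFAR10) | |

Table 3. Capability matrix of typical deep gradient inversion attacks and defenses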

Attacks (columns): DLG[20], IG[33], R-GAP[45], GI[34], NEA[55], GGL[37], BFGL[70], HCGLA[39], Carlini et al.[71].

Defenses (rows): no defense, larger batch size, larger input size, Fed-CDP[61], DP-dynS[62], LRP[63], additive noise[20], gradient quantization[20], gradient sparsification[20], Soteria[40], PRECODE[69], ATS[65], InstaHide[64], TextHide[68].

[Matrix body: the per-cell outcome markers are not preserved in this text.]
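The two simplest gradient-perturbation defenses in the defense summary, additive noise and 8-bit quantization [20], can be sketched in a few lines. The code below is a minimal illustration under stated assumptions: a hypothetical single-sample gradient of one fully connected layer (weight gradient equals bias gradient times input), a noise standard deviation of 0.05, and symmetric uniform quantization with one scale per tensor. None of these settings come from the reviewed papers, and the ratio attack used for the check is the simple single-sample analytic one, not a full iterative attack.

```python
import random


def add_gaussian_noise(grad, sigma, rng):
    """Gradient-noise defense: add N(0, sigma^2) noise to every entry."""
    return [[g + rng.gauss(0.0, sigma) for g in row] for row in grad]


def quantize_8bit(grad):
    """Symmetric uniform 8-bit quantization; returns (dequantized, scale)."""
    peak = max(abs(g) for row in grad for g in row)
    scale = peak / 127.0 if peak > 0 else 1.0
    deq = [[round(g / scale) * scale for g in row] for row in grad]
    return deq, scale


def ratio_attack(grad_W, grad_b):
    """Single-sample analytic attack: x = grad_W[i] / grad_b[i]."""
    i = max(range(len(grad_b)), key=lambda k: abs(grad_b[k]))
    return [g / grad_b[i] for g in grad_W[i]]


def max_abs_error(x_true, x_rec):
    """Largest per-coordinate reconstruction error."""
    return max(abs(a - c) for a, c in zip(x_true, x_rec))


rng = random.Random(0)
x_private = [rng.random() for _ in range(8)]  # hypothetical private input
grad_b = [0.2, -0.7, 0.4, 0.1]                # hypothetical p - onehot(y)
grad_W = [[gb * xj for xj in x_private] for gb in grad_b]

# Baseline: the undefended gradient leaks the input almost exactly.
clean_err = max_abs_error(x_private, ratio_attack(grad_W, grad_b))

# Defense 1: Gaussian noise on both shared tensors.
noisy_W = add_gaussian_noise(grad_W, 0.05, rng)
noisy_b = add_gaussian_noise([grad_b], 0.05, rng)[0]
noisy_err = max_abs_error(x_private, ratio_attack(noisy_W, noisy_b))

# Defense 2: 8-bit quantization of the weight gradient only.
quant_W, scale = quantize_8bit(grad_W)
quant_err = max_abs_error(x_private, ratio_attack(quant_W, grad_b))

print(clean_err, noisy_err, quant_err)
```

In this toy setting the quantization error is bounded by half a quantization step, so the reconstruction stays close to the private input, whereas Gaussian noise typically moves it much further. The defense summary reports the cost of that protection: gradient noise strong enough to matter is listed with a -30.5% accuracy change on CIFAR100.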


[1] JORDAN M I and MITCHELL T M. Machine learning: Trends, perspectives, and prospects[J]. Science, 2015, 349(6245): 255–260. doi: 10.1126/science.aaa8415.

[2] LECUN Y, BENGIO Y, and HINTON G. Deep learning[J]. Nature, 2015, 521(7553): 436–444. doi: 10.1038/nature14539.

[3] FANG Binxing. Breaking the conflict between data element flows and privacy protection[EB/OL]. http://event.chinaaet.com/huodong/cite2022/, 2022.

[4] MCMAHAN B, MOORE E, RAMAGE D, et al. Communication-efficient learning of deep networks from decentralized data[C]. The 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, USA, 2017: 1273–1282.

[5] YANG Qiang, LIU Yang, CHENG Yong, et al. Federated Learning[M]. San Rafael: Morgan & Claypool, 2020: 1–207.

[6] YANG Qiang, LIU Yang, CHEN Tianjian, et al. Federated machine learning: Concept and applications[J]. ACM Transactions on Intelligent Systems and Technology, 2019, 10(2): 12. doi: 10.1145/3298981.

[7] LIU Yang, FAN Tao, CHEN Tianjian, et al. FATE: An industrial grade platform for collaborative learning with data protection[J]. Journal of Machine Learning Research, 2021, 22: 1–1.

[8] MA Yanjun, YU Dianhai, WU Tian, et al. Paddlepaddle: An open-source deep learning platform from industrial practice[J]. Frontiers of Data and Computing, 2019, 1(1): 105–115. doi: 10.11871/jfdc.issn.2096.742X.2019.01.011.

[9] BONAWITZ K A, EICHNER H, GRIESKAMP W, et al. Towards federated learning at scale: System design[C]. Machine Learning and Systems 2019, Stanford, USA, 2019: 374–388. doi: 10.48550/arXiv.1902.01046.

[10] RYFFEL T, TRASK A, DAHL M, et al. A generic framework for privacy preserving deep learning[EB/OL]. https://arxiv.org/pdf/1811.04017v2.pdf, 2018.

[11] HAO Meng, LI Hongwei, LUO Xizhao, et al. Efficient and privacy-enhanced federated learning for industrial artificial intelligence[J]. IEEE Transactions on Industrial Informatics, 2020, 16(10): 6532–6542. doi: 10.1109/TII.2019.2945367.

[12] RIEKE N, HANCOX J, LI Wenqi, et al. The future of digital health with federated learning[J]. NPJ Digital Medicine, 2020, 3: 119. doi: 10.1038/s41746-020-00323-1.

[13] XU Jie, GLICKSBERG B S, SU Chang, et al. Federated learning for healthcare informatics[J]. Journal of Healthcare Informatics Research, 2021, 5(1): 1–19. doi: 10.1007/s41666-020-00082-4.

[14] MILLS J, HU Jia, and MIN Geyong. Communication-efficient federated learning for wireless edge intelligence in iot[J]. IEEE Internet of Things Journal, 2020, 7(7): 5986–5994. doi: 10.1109/JIOT.2019.2956615.

[15] YANG Wensi, ZHANG Yuhang, YE Kejiang, et al. FFD: A federated learning based method for credit card fraud detection[C]. Proceedings of the 8th International Conference on Big Data, San Diego, USA, 2019: 18–32. doi: 10.1007/978-3-030-23551-2_2.

[16] LONG Guodong, TAN Yue, JIANG Jing, et al. Federated learning for open banking[M]. YANG Qiang, FAN Lixin, and YU Han. Federated Learning: Privacy and Incentive. Cham: Springer, 2020: 240–254. doi: 10.1007/978-3-030-63076-8_17.

[17] NASR M, SHOKRI R, and HOUMANSADR A. Comprehensive privacy analysis of deep learning: Passive and active white-box inference attacks against centralized and federated learning[C]. 2019 IEEE Symposium on Security and Privacy, San Francisco, USA, 2019: 739–753. doi: 10.1109/SP.2019.00065.

[18] MELIS L, SONG Congzheng, DE CRISTOFARO E, et al. Exploiting unintended feature leakage in collaborative learning[C]. 2019 IEEE Symposium on Security and Privacy, San Francisco, USA, 2019: 691–706. doi: 10.1109/SP.2019.00029.

[19] WANG Zhibo, SONG Mengkai, ZHANG Zhifei, et al. Beyond inferring class representatives: User-level privacy leakage from federated learning[C]. Proceedings of 2019 IEEE Conference on Computer Communications, Paris, France, 2019: 2512–2520. doi: 10.1109/INFOCOM.2019.8737416.

[20] ZHU Ligeng, LIU Zhijian, and HAN Song. Deep leakage from gradients[C]. Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, Canada, 2019: 1323. doi: 10.5555/3454287.3455610.

[21] PHONG L T, AONO Y, HAYASHI T, et al. Privacy-preserving deep learning via additively homomorphic encryption[J]. IEEE Transactions on Information Forensics and Security, 2018, 13(5): 1333–1345. doi: 10.1109/TIFS.2017.2787987.

[22] DONG Ye, CHEN Xiaojun, SHEN Liyan, et al. Eastfly: Efficient and secure ternary federated learning[J]. Computers & Security, 2020, 94: 101824. doi: 10.1016/j.cose.2020.101824.

[23] ZHANG Chengliang, LI Suyi, XIA Junzhe, et al. Batchcrypt: Efficient homomorphic encryption for cross-silo federated learning[C/OL]. 2020 USENIX Annual Technical Conference, 2020: 493–506.

[24] ZHU Hangyu, WANG Rui, JIN Yaochu, et al. Distributed additive encryption and quantization for privacy preserving federated deep learning[J]. Neurocomputing, 2021, 463: 309–327. doi: 10.1016/j.neucom.2021.08.062.

[25] ZHANG Jiale, CHEN Bing, YU Shui, et al. PEFL: A privacy-enhanced federated learning scheme for big data analytics[C]. 2019 IEEE Global Communications Conference, Waikoloa, USA, 2019: 1–6. doi: 10.1109/GLOBECOM38437.2019.9014272.

[26] BONAWITZ K, IVANOV V, KREUTER B, et al. Practical secure aggregation for privacy-preserving machine learning[C]. 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, USA, 2017: 1175–1191. doi: 10.1145/3133956.3133982.

[27] XU Guowen, LI Hongwei, LIU Sen, et al. Verifynet: Secure and verifiable federated learning[J]. IEEE Transactions on Information Forensics and Security, 2019, 15: 911–926. doi: 10.1109/TIFS.2019.2929409.

[28] GUO Xiaojie, LIU Zheli, LI Jin, et al. VeriFL: Communication-efficient and fast verifiable aggregation for federated learning[J]. IEEE Transactions on Information Forensics and Security, 2021, 16: 1736–1751. doi: 10.1109/TIFS.2020.3043139.

[29] LUO Fucai, AL-KUWARI S, and DING Yong. SVFL: Efficient secure aggregation and verification for cross-silo federated learning[J]. IEEE Transactions on Mobile Computing, 2024, 23(1): 850–864. doi: 10.1109/TMC.2022.3219485.

[30] HAHN C, KIM H, KIM M, et al. VerSA: Verifiable secure aggregation for cross-device federated learning[J]. IEEE Transactions on Dependable and Secure Computing, 2023, 20(1): 36–52. doi: 10.1109/TDSC.2021.3126323.

[31] WANG Yijue, DENG Jieren, GUO Dan, et al. SAPAG: A self-adaptive privacy attack from gradients[EB/OL]. https://arxiv.org/pdf/2009.06228.pdf, 2020.

[32] WEI Wenqi, LIU Ling, LOPER M, et al. A framework for evaluating gradient leakage attacks in federated learning[EB/OL]. https://arxiv.org/pdf/2004.10397v2.pdf, 2020.

[33] GEIPING J, BAUERMEISTER H, DRÖGE H, et al. Inverting gradients - how easy is it to break privacy in federated learning?[C]. The 34th Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 16937–16947. doi: 10.48550/arXiv.2003.14053.

[34] YIN Hongxu, MALLYA A, VAHDAT A, et al. See through gradients: Image batch recovery via gradinversion[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 16332–16341. doi: 10.1109/CVPR46437.2021.01607.

[35] HATAMIZADEH A, YIN Hongxu, ROTH H, et al. GradViT: Gradient inversion of vision transformers[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 10011–10020. doi: 10.1109/CVPR52688.2022.00978.

[36] JEON J, KIM J, LEE K, et al. Gradient inversion with generative image prior[C/OL]. The 35th Conference on Neural Information Processing Systems, 2021: 29898–29908.

[37] LI Zhuohang, ZHANG Jiaxin, LIU Luyang, et al. Auditing privacy defenses in federated learning via generative gradient leakage[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 10122–10132. doi: 10.1109/CVPR52688.2022.00989.

[38] HUANG Yangsibo, GUPTA S, SONG Zhao, et al. Evaluating gradient inversion attacks and defenses in federated learning[C/OL]. The 35th Conference on Neural Information Processing Systems, 2021: 7232–7241.

[39] YANG Haomiao, GE Mengyu, XIANG Kunlan, et al. Using highly compressed gradients in federated learning for data reconstruction attacks[J]. IEEE Transactions on Information Forensics and Security, 2022, 18: 818–830. doi: 10.1109/TIFS.2022.3227761.

[40] SUN Jingwei, LI Ang, WANG Binghui, et al. Soteria: Provable defense against privacy leakage in federated learning from representation perspective[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 9307–9315. doi: 10.1109/CVPR46437.2021.00919.

[41] DENG Jieren, WANG Yijue, LI Ji, et al. TAG: Gradient attack on transformer-based language models[C]. Findings of the Association for Computational Linguistics, Punta Cana, Dominican Republic, 2021: 3600–3610. doi: 10.18653/v1/2021.findings-emnlp.305.

[42] BALUNOVIĆ M, DIMITROV D I, JOVANOVIĆ N, et al. LAMP: Extracting text from gradients with language model priors[C]. The 36th Conference on Neural Information Processing Systems, New Orleans, USA, 2022: 7641–7654. doi: 10.48550/arXiv.2202.08827.

[43] LI Zhuohang, ZHANG Jiaxin, and LIU Jian. Speech privacy leakage from shared gradients in distributed learning[C]. 2023 IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 2023: 1–5. doi: 10.1109/ICASSP49357.2023.10095443.

[44] VERO M, BALUNOVIĆ M, DIMITROV D I, et al. TabLeak: Tabular data leakage in federated learning[C]. The 40th International Conference on Machine Learning, Hawaii, USA, 2023: 1460. doi: 10.5555/3618408.3619868.

[45] ZHU Junyi and BLASCHKO M B. R-Gap: Recursive gradient attack on privacy[C/OL]. The 9th International Conference on Learning Representations, 2021: 1–17.

[46] CHEN Cangxiong and CAMPBELL N D F. Understanding training-data leakage from gradients in neural networks for image classification[EB/OL]. https://arxiv.org/pdf/2111.10178.pdf, 2021.

[47] KARIYAPPA S, GUO Chuan, MAENG K, et al. Cocktail party attack: Breaking aggregation-based privacy in federated learning using independent component analysis[C]. The 40th International Conference on Machine Learning, Honolulu, USA, 2023: 651.

[48] GUPTA S, HUANG Yangsibo, ZHONG Zexuan, et al. Recovering private text in federated learning of language models[C]. The 36th Conference on Neural Information Processing Systems, New Orleans, USA, 2022: 8130–8143.

[49] LAM M, WEI G Y, BROOKS D, et al. Gradient disaggregation: Breaking privacy in federated learning by reconstructing the user participant matrix[C/OL]. The 38th International Conference on Machine Learning, 2021: 5959–5968.

[50] BOENISCH F, DZIEDZIC A, SCHUSTER R, et al. When the curious abandon honesty: Federated learning is not private[C]. The 2023 IEEE 8th European Symposium on Security and Privacy, Delft, Netherlands, 2023: 175–199. doi: 10.1109/EuroSP57164.2023.00020.

[51] WEN Yuxin, GEIPING J A, FOWL L, et al. Fishing for user data in large-batch federated learning via gradient magnification[C]. The 39th International Conference on Machine Learning, Baltimore, USA, 2022: 23668–23684. doi: 10.48550/arXiv.2202.00580.

[52] PASQUINI D, FRANCATI D, and ATENIESE G. Eluding secure aggregation in federated learning via model inconsistency[C]. 2022 ACM SIGSAC Conference on Computer and Communications Security, Los Angeles, USA, 2022: 2429–2443. doi: 10.1145/3548606.3560557.

[53] FOWL L, GEIPING J, CZAJA W, et al. Robbing the fed: Directly obtaining private data in federated learning with modified models[C/OL]. The 10th International Conference on Learning Representations, 2021: 1–25. doi: 10.48550/arXiv.2110.13057.

[54] ZHAO J C, ELKORDY A R, SHARMA A, et al. The resource problem of using linear layer leakage attack in federated learning[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 3974–3983. doi: 10.1109/CVPR52729.2023.00387.

[55] PAN Xudong, ZHANG Mi, YAN Yifan, et al. Exploring the security boundary of data reconstruction via neuron exclusivity analysis[C]. The 31st USENIX Security Symposium, Boston, USA, 2020: 3989–4006. doi: 10.48550/arXiv.2010.13356.

[56] FOWL L H, GEIPING J, REICH S, et al. Decepticons: Corrupted transformers breach privacy in federated learning for language models[C]. The 11th International Conference on Learning Representations, Kigali, Rwanda, 2022: 1–23. doi: 10.48550/arXiv.2201.12675.

[57] ZHAO Bo, MOPURI K R, and BILEN H. iDLG: Improved deep leakage from gradients[EB/OL]. https://arxiv.org/pdf/2001.02610.pdf, 2020.

[58] DANG T, THAKKAR O, RAMASWAMY S, et al. Revealing and protecting labels in distributed training[C]. The 35th Conference on Neural Information Processing Systems, Sydney, Australia, 2021: 1727–1738. doi: 10.48550/arXiv.2111.00556.

[59] MA Kailang, SUN Yu, CUI Jian, et al. Instance-wise batch label restoration via gradients in federated learning[C]. The 11th International Conference on Learning Representations, Kigali, Rwanda, 2023: 1–15.

[60] ABADI M, CHU A, GOODFELLOW I, et al. Deep learning with differential privacy[C]. 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 2016: 308–318. doi: 10.1145/2976749.2978318.

[61] WEI Wenqi, LIU Ling, WU Yanzhao, et al. Gradient-leakage resilient federated learning[C]. The 2021 IEEE 41st International Conference on Distributed Computing Systems, Washington, USA, 2021: 797–807. doi: 10.1109/ICDCS51616.2021.00081.

[62] WEI Wenqi and LIU Ling. Gradient leakage attack resilient deep learning[J]. IEEE Transactions on Information Forensics and Security, 2021, 17: 303–316. doi: 10.1109/TIFS.2021.3139777.

[63] WANG Junxiao, GUO Song, XIE Xin, et al. Protect privacy from gradient leakage attack in federated learning[C]. 2022 IEEE Conference on Computer Communications, London, UK, 2022: 580–589. doi: 10.1109/INFOCOM48880.2022.9796841.

[64] HUANG Yangsibo, SONG Zhao, LI Kai, et al. InstaHide: Instance-hiding schemes for private distributed learning[C/OL]. The 37th International Conference on Machine Learning, 2020: 419. doi: 10.5555/3524938.3525357.

[65] GAO Wei, GUO Shangwei, ZHANG Tianwei, et al. Privacy-preserving collaborative learning with automatic transformation search[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 114–123. doi: 10.1109/CVPR46437.2021.00018.

[66] MELLOR J, TURNER J, STORKEY A, et al. Neural architecture search without training[C/OL]. The 38th International Conference on Machine Learning, 2021: 7588–7598.

[67] CUBUK E D, ZOPH B, MANÉ D, et al. Autoaugment: Learning augmentation strategies from data[C]. The 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 113–123. doi: 10.1109/CVPR.2019.00020.

[68] HUANG Yangsibo, SONG Zhao, CHEN Danqi, et al. TextHide: Tackling data privacy in language understanding tasks[C/OL]. Findings of the Association for Computational Linguistics, 2020: 1368–1382. doi: 10.18653/v1/2020.findings-emnlp.123.

[69] SCHELIGA D, MÄDER P, and SEELAND M. PRECODE - a generic model extension to prevent deep gradient leakage[C]. 2022 IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2022: 3605–3614. doi: 10.1109/WACV51458.2022.00366.

[70] BALUNOVIĆ M, DIMITROV D I, STAAB R, et al. Bayesian framework for gradient leakage[C/OL]. The 10th International Conference on Learning Representations, 2021: 1–16. doi: 10.48550/arXiv.2111.04706.

[71] CARLINI N, DENG S, GARG S, et al. Is private learning possible with instance encoding?[C]. Proceedings of 2021 IEEE Symposium on Security and Privacy, San Francisco, USA, 2021: 410–427. doi: 10.1109/SP40001.2021.00099.
