Review on Olfactory and Visual Neural Pathways in Drosophila


Abstract: The olfactory and visual neural systems of Drosophila are highly sensitive to olfactory and visual stimuli in natural environments. Their single-modal perception and decision-making mechanisms, together with their cross-modal collaborative decision-making mechanisms, offer inspiration for bionic applications. This review first summarizes the current state of research on the physiological mechanisms and computational models of single-modal perception and decision-making in the Drosophila olfactory and visual neural systems, organized into three stages: signal capture, processing, and decision-making. It also describes the physiological mechanisms and computational models of cross-modal collaborative decision-making between olfaction and vision. It then surveys typical bionic applications of single-modal perception and cross-modal collaboration in Drosophila. Finally, it summarizes the current challenges in understanding the physiological mechanisms and building computational models of the olfactory and visual neural pathways in Drosophila and outlines future development trends, providing a basis for future research.


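Figure 7 Schematic of the processing pipeline for a classification decision-making application based on bionic Drosophila olfaction [63]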

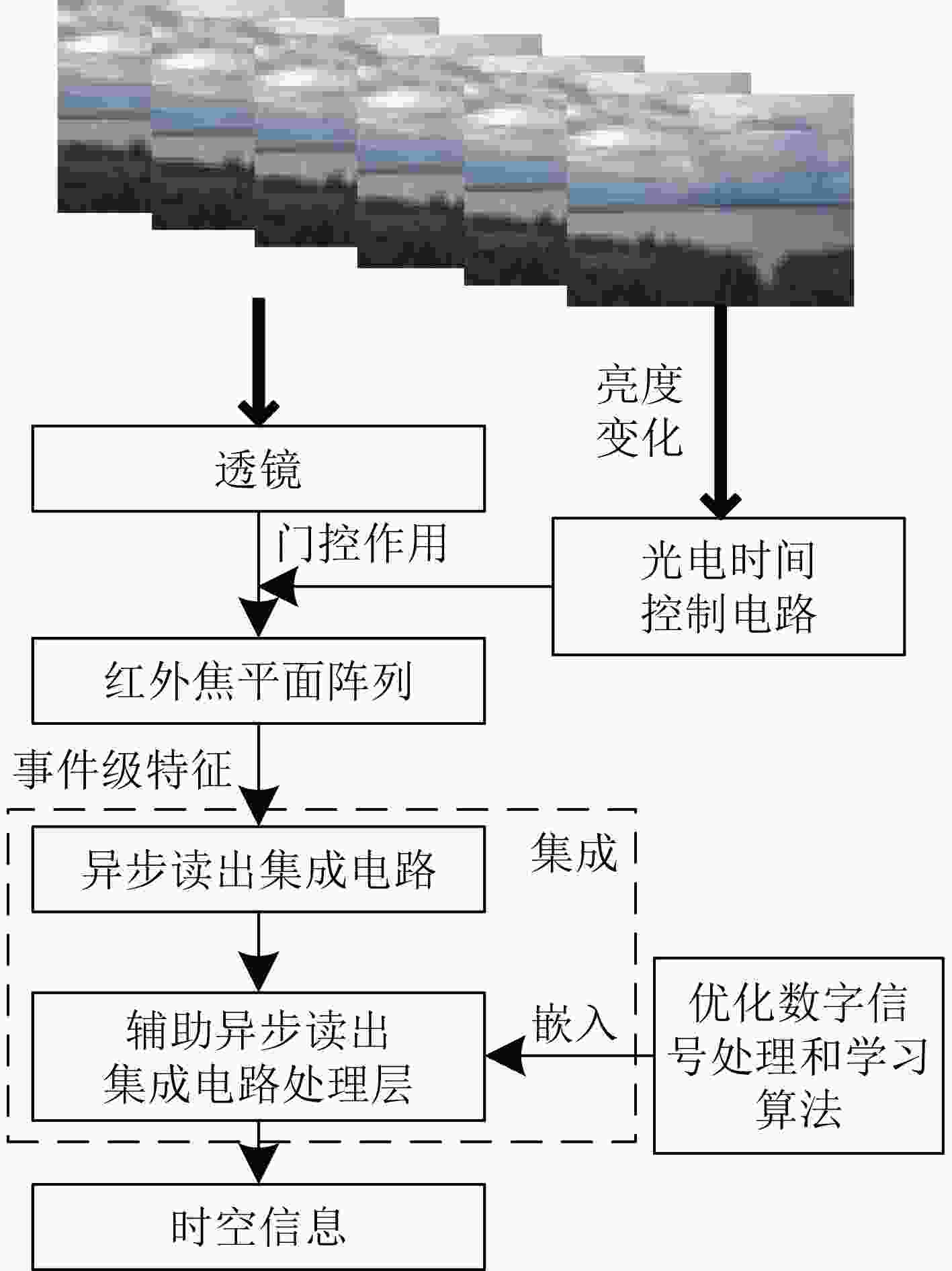

Figure 8 Schematic of the processing pipeline for an obstacle-avoidance application based on bionic Drosophila vision [65]

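Table 1 Typical computational models of the Drosophila olfactory neural pathway with brief descriptions

| Reference | Schematic | Brief description |
| --- | --- | --- |
| [22] | Fig. 2(a) | Based on the sparseness of Kenyon cells (KC) in the Drosophila mushroom body and the associative learning mechanism of mushroom body output neurons (MBON), Kennedy proposed a dynamic computational model of the Drosophila olfactory nervous system to test learning and classification on representations of natural odors sensed by olfactory receptor neurons (ORN). The model suits the case in which KC odor responses are uncorrelated. To achieve this decorrelation, a homeostatic threshold-tuning mechanism is added at the KC layer, projection neurons (PN) are inhibited by local neurons (LN), and KCs are inhibited by the anterior paired lateral (APL) neuron. Tests on 110 natural odors validated the model and the generalization of MBON associative learning. Distinguishing feature: the KC-to-MBON connection weights are estimated by training on a database, and the fixed weights are then used to estimate the specific type of an olfactory signal. |
| [23] | Fig. 2(b) | Based on the plasticity of the synaptic connections between KCs and MBONs in the Drosophila mushroom body, Springer et al. proposed a feedforward circuit model of the mushroom body to test appetitive and aversive conditioning and memory extinction. Lateral inhibition between MBONs and excitatory feedback from MBONs to dopaminergic neurons are essential for reward prediction and extinction. Activation of MVP2/M6 neurons mediates approach behavior and activation of MV2/V2 neurons mediates avoidance behavior; the behavioral preference for an olfactory signal (appetitive or aversive) is computed from the imbalance between MVP2/M6 and MV2/V2 neurons. Distinguishing feature: the KCs are fully connected to four MBONs, which are divided evenly into two groups whose outputs inhibit each other pairwise, representing approach and avoidance. |
| [24] | Fig. 2(c) | Based on the ability of the Drosophila central brain to identify odor types and estimate odor concentration levels, Mosqueiro et al. proposed a pattern-recognition pathway model of olfactory perception built on Hebbian learning and mutual competition through output inhibition. ORNs in the antennal lobe layer acquire the olfactory signal, PNs in the antennal lobe layer extract features, and KCs in the mushroom body perform pattern recognition. To reach a decision, the olfactory system needs at least three pieces of information: odor type, odor concentration, and distance to the odor source. Odor type indicates whether the fly is interested in the odor, odor concentration indicates how much of the odor source is available to capture, and distance is used to assess whether the effort of searching for the source is cost-effective. Distinguishing feature: recognition uses Hebbian learning between KCs and MBONs and mutual competition among MBONs. |

Table 2 Four mathematical models characterizing the physiological mechanisms of the retina neural layer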

| Reference | Mathematical model |
| --- | --- |
| [25] | $ P(x,y,t) = L(x,y,t) - L(x,y,t-1) $ |
| [26] | $ P_{\mathrm{G}}(x,y,t) = \iint L(u,v,t)\,G_\sigma(x-u,y-v)\,\mathrm{d}u\,\mathrm{d}v $, with $ G_\sigma(x,y) = \dfrac{1}{2\pi\sigma^2}\exp\left(-\dfrac{x^2+y^2}{2\sigma^2}\right) $ |
| [27] | $ \mathrm{Li}(x,y,t) = \dfrac{\left(P_{\mathrm{G}}(x,y,t)\right)^n}{\left(P_{\mathrm{G}}(x,y,t)\right)^n + \left(P_{\mathrm{C}}(x,y,t)\right)^n} $, with $ \dfrac{\mathrm{d}P_{\mathrm{C}}(x,y,t)}{\mathrm{d}t} = \dfrac{1}{\tau}\left(P_{\mathrm{G}}(x,y,t) - P_{\mathrm{C}}(x,y,t)\right) $ |
| [28] | $ P(x,y,t) = L(x,y,t) - L(x,y,t-1) + \sum\limits_{i=1}^{n_p} \lambda_i \cdot P(x,y,t-i) $, with $ \lambda_i = \left(1 + \mathrm{e}^{i}\right)^{-1} $ |

Table 3 Research institutions working on computational models of the Drosophila visual decision-making pathway, with brief descriptions

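| Research institution | Schematic | Brief description |
| --- | --- | --- |
| School of Computer Science, University of Lincoln, UK | Fig. 4(a)–Fig. 4(d) | Yue Shigang's team focuses on Drosophila vision and visual behavioral preference and has proposed computational models based on LPTC, LGMD1, LGMD2 and STMD neurons. Distinguishing features: the LPTC- and STMD-based models split the input visual signal directly into ON and OFF pathways and then pass it to the corresponding motion-sensitive neurons for processing, whereas the LGMD1- and LGMD2-based models first split the input into excitatory (E) and inhibitory (I) pathways and then split the E and I pathways into ON and OFF pathways. The LGMD1 and LGMD2 models differ in their selectivity for the ON pathway. These models are mainly applied in unmanned aerial vehicles and robots. |
| Centre for Behaviour and Evolution, Newcastle University, UK | Fig. 4(e) | Rind's team focuses on the Drosophila central brain and cognitive behavior and has proposed a computational model based on the LGMD neuron. Distinguishing feature: the excitatory (E) and inhibitory (I) pathways are not split again into ON and OFF pathways but are passed directly to the LGMD neuron for processing. The model is mainly applied in unmanned and assisted driving. |
| Institute of Automation and Institute of Biophysics, Chinese Academy of Sciences | Fig. 4(f) | Zeng Yi's team at the Institute of Automation and Guo Aike's team at the Institute of Biophysics focus on brain-inspired cognitive computational models and have proposed a spiking neural network model of the Drosophila central brain layer. Distinguishing feature: the model integrates perceptual linear decision-making and value-based nonlinear decision-making, closely representing the neurophysiological mechanisms of the central brain layer. The model is mainly applied in unmanned aerial vehicles. |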
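The four retina-layer models in Table 2, and the ON/OFF split mentioned in Table 3, can be illustrated with a minimal Python sketch. This is not the implementation used in [25]–[28]. The function names, the kernel radius, the border clamping, the forward-Euler step `dt`, and the default values of `n` and `tau` are all assumptions made for illustration. The half-wave rectification used for the ON/OFF split is a common modeling choice, not something stated in the tables. Images are plain nested lists indexed `[y][x]` to keep the example self-contained; an array library would be the natural choice for real frames.

```python
import math

def frame_difference(L_t, L_prev):
    """[25]: P(x,y,t) = L(x,y,t) - L(x,y,t-1)."""
    return [[a - b for a, b in zip(r_t, r_p)] for r_t, r_p in zip(L_t, L_prev)]

def gaussian_kernel(sigma, radius):
    """Sample G_sigma on a grid and renormalise so the weights sum to 1."""
    c = 1.0 / (2.0 * math.pi * sigma ** 2)
    k = [[c * math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def gaussian_blur(L_t, sigma=1.0, radius=2):
    """[26]: discrete version of the convolution of L with G_sigma."""
    h, w = len(L_t), len(L_t[0])
    k = gaussian_kernel(sigma, radius)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    # Clamp indices at the image border (an assumption).
                    yy = min(max(y - dy, 0), h - 1)
                    xx = min(max(x - dx, 0), w - 1)
                    acc += L_t[yy][xx] * k[dy + radius][dx + radius]
            out[y][x] = acc
    return out

def adaptive_step(P_G, P_C, n=2.0, tau=5.0, dt=1.0):
    """[27]: Li = P_G^n / (P_G^n + P_C^n), then one forward-Euler update
    of dP_C/dt = (P_G - P_C) / tau. Inputs are assumed non-negative."""
    Li, P_C_next = [], []
    for row_g, row_c in zip(P_G, P_C):
        li_row, pc_row = [], []
        for g, c in zip(row_g, row_c):
            denom = g ** n + c ** n
            li_row.append(g ** n / denom if denom > 0 else 0.0)
            pc_row.append(c + dt / tau * (g - c))
        Li.append(li_row)
        P_C_next.append(pc_row)
    return Li, P_C_next

def persistence_difference(L_t, L_prev, P_history):
    """[28]: frame difference plus decaying past responses.
    P_history[0] is P(t-1), P_history[1] is P(t-2), and so on (n_p entries)."""
    P = frame_difference(L_t, L_prev)
    for i, P_past in enumerate(P_history, start=1):
        lam = 1.0 / (1.0 + math.exp(i))  # lambda_i = (1 + e^i)^(-1)
        P = [[p + lam * q for p, q in zip(r_p, r_q)] for r_p, r_q in zip(P, P_past)]
    return P

def split_on_off(P):
    """Half-wave rectification into ON (brightening) and OFF (darkening)."""
    on = [[max(p, 0.0) for p in row] for row in P]
    off = [[max(-p, 0.0) for p in row] for row in P]
    return on, off
```

A typical per-frame loop under these assumptions would blur the current frame, call `adaptive_step` while carrying `P_C` forward between frames, and feed the output of `frame_difference` or `persistence_difference` into `split_on_off`.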

[1] CHOW D M L. Visuo-olfactory integration in Drosophila flight control: Neural circuits, behavior, and ecological implications[D]. [Ph. D. dissertation], University of California, 2011.

[2] DVOŘÁČEK J, BEDNÁŘOVÁ A, KRISHNAN N, et al. Dopaminergic mushroom body neurons in Drosophila: Flexibility of neuron identity in a model organism?[J]. Neuroscience & Biobehavioral Reviews, 2022, 135: 104570. doi: 10.1016/j.neubiorev.2022.104570.

[3] JOVANIC T. Studying neural circuits of decision-making in Drosophila larva[J]. Journal of Neurogenetics, 2020, 34(1): 162–170. doi: 10.1080/01677063.2020.1719407.

[4] GUO Aike, PENG Yueqing, ZHANG Ke, et al. Insect behavior: Visual cognition in fruit fly[J]. Chinese Journal of Nature, 2009, 31(2): 63–68. doi: 10.3969/j.issn.0253-9608.2009.02.001. (in Chinese)

[5] ZHANG Shixing. The effect of dopamine on the aversive olfactory memory retention in Drosophila[D]. [Ph. D. dissertation], Institute of Biophysics, Chinese Academy of Sciences, 2008. (in Chinese)

[6] ZHANG Sheng, SHEN Jie, ZHENG Shengnan, et al. Research review of Drosophila-like compound eye visual neural computational modeling and bionic applications[J]. Infrared Technology, 2023, 45(3): 229–240. (in Chinese)

[7] VOGT K, SCHNAITMANN C, DYLLA K V, et al. Shared mushroom body circuits underlie visual and olfactory memories in Drosophila[J]. eLife, 2014, 3: e02395. doi: 10.7554/eLife.02395.

[8] ZHANG Danke. Researches of simple models on neural information processing[D]. [Ph. D. dissertation], South China University of Technology, 2013. (in Chinese)

[9] BACHTIAR L R, UNSWORTH C P, and NEWCOMB R D. Using multilayer perceptron computation to discover ideal insect olfactory receptor combinations in the mosquito and fruit fly for an efficient electronic nose[J]. Neural Computation, 2015, 27(1): 171–201. doi: 10.1162/NECO_a_00691.

[10] LEVAKOVA M, KOSTAL L, MONSEMPÈS C, et al. Adaptive integrate-and-fire model reproduces the dynamics of olfactory receptor neuron responses in a moth[J]. Journal of the Royal Society Interface, 2019, 16(157): 20190246. doi: 10.1098/rsif.2019.0246.

[11] LAZAR A A, LIU Tingkai, and YEH C H. An odorant encoding machine for sampling, reconstruction and robust representation of odorant identity[C]. 2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 2020: 1743–1747. doi: 10.1109/icassp40776.2020.9054588.

[12] TEETER C, IYER R, MENON V, et al. Generalized leaky integrate-and-fire models classify multiple neuron types[J]. Nature Communications, 2018, 9(1): 709. doi: 10.1038/s41467-017-02717-4.

[13] ZHANG Tielin, LI Chengyu, WANG Gang, et al. Research advances and new paradigms for biology-inspired spiking neural networks[J]. Journal of Electronics & Information Technology, 2023, 45(8): 2675–2688. doi: 10.11999/JEIT221459. (in Chinese)

[14] LIU Wei and WANG Guirong. Research progress of integrated coding of peripheral olfactory signals in the central nervous system of insects[J]. Acta Entomologica Sinica, 2020, 63(12): 1536–1545. doi: 10.16380/j.kcxb.2020.12.012. (in Chinese)

[15] PEHLEVAN C, GENKIN A, and CHKLOVSKII D B. A clustering neural network model of insect olfaction[C]. The 51st Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, USA, 2017: 593–600. doi: 10.1109/acssc.2017.8335410.

[16] ZHANG Y L and SHARPEE T O. A robust feedforward model of the olfactory system[J]. PLoS Computational Biology, 2016, 12(4): e1004850. doi: 10.1371/journal.pcbi.1004850.

[17] LEE H, KOSTAL L, KANZAKI R, et al. Spike frequency adaptation facilitates the encoding of input gradient in insect olfactory projection neurons[J]. Biosystems, 2023, 223: 104802. doi: 10.1016/j.biosystems.2022.104802.

[18] DAS CHAKRABORTY S and SACHSE S. Olfactory processing in the lateral horn of Drosophila[J]. Cell and Tissue Research, 2021, 383(1): 113–123. doi: 10.1007/s00441-020-03392-6.

[19] YIN Yan. Choice strategies in Drosophila are based on competition between olfactory memories[D]. [Ph. D. dissertation], Institute of Biophysics, Chinese Academy of Sciences, 2009. (in Chinese)

[20] TIAN Yue. Transmission and representation of olfactory information in Drosophila mushroom body[D]. [Ph. D. dissertation], University of Chinese Academy of Sciences, 2020. (in Chinese)

[21] ROHLFS C. A descriptive analysis of olfactory sensation and memory in Drosophila and its relation to artificial neural networks[J]. Neurocomputing, 2023, 518: 15–29. doi: 10.1016/j.neucom.2022.10.068.

[22] KENNEDY A. Learning with naturalistic odor representations in a dynamic model of the Drosophila olfactory system[J]. bioRxiv, 2019. doi: 10.1101/783191.

[23] SPRINGER M and NAWROT M P. A mechanistic model for reward prediction and extinction learning in the fruit fly[J]. eNeuro, 2021, 8(3): ENEURO.0549-20.2021. doi: 10.1523/ENEURO.0549-20.2021.

[24] MOSQUEIRO T S and HUERTA R. Computational models to understand decision making and pattern recognition in the insect brain[J]. Current Opinion in Insect Science, 2014, 6: 80–85. doi: 10.1016/j.cois.2014.10.005.

[25] YUE Shigang and RIND F C. A collision detection system for a mobile robot inspired by the locust visual system[C]. The 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 2005: 3832–3837. doi: 10.1109/ROBOT.2005.1570705.
[26] WANG Hongxin, PENG Jigen, and YUE Shigang. An improved LPTC neural model for background motion direction estimation[C]. 2017 Joint IEEE International Conference on Development and Learning and Epigenetic Robotics, Lisbon, Portugal, 2017: 47–52. doi: 10.1109/DEVLRN.2017.8329786.

[27] WANG Hongxin, PENG Jigen, and YUE Shigang. Bio-inspired small target motion detector with a new lateral inhibition mechanism[C]. 2016 International Joint Conference on Neural Networks, Vancouver, Canada, 2016: 4751–4758. doi: 10.1109/IJCNN.2016.7727824.

[28] FU Qinbing and YUE Shigang. Modelling Drosophila motion vision pathways for decoding the direction of translating objects against cluttered moving backgrounds[J]. Biological Cybernetics, 2020, 114(4/5): 443–460. doi: 10.1007/s00422-020-00841-x.

[29] BEHNIA R, CLARK D A, CARTER A G, et al. Processing properties of ON and OFF pathways for Drosophila motion detection[J]. Nature, 2014, 512(7515): 427–430. doi: 10.1038/nature13427.

[30] WANG Hongxin, PENG Jigen, and YUE Shigang. A feedback neural network for small target motion detection in cluttered backgrounds[C]. The 27th International Conference on Artificial Neural Networks, Rhodes, Greece, 2018: 728–737. doi: 10.1007/978-3-030-01424-7_71.

[31] FU Qinbing, BELLOTTO N, and YUE Shigang. A directionally selective neural network with separated ON and OFF pathways for translational motion perception in a visually cluttered environment[J]. arXiv: 1808.07692, 2018.

[32] STROTHER J A, WU S T, WONG A M, et al. The emergence of directional selectivity in the visual motion pathway of Drosophila[J]. Neuron, 2017, 94(1): 168–182.e10. doi: 10.1016/j.neuron.2017.03.010.

[33] GRUNTMAN E, ROMANI S, and REISER M B. The computation of directional selectivity in the Drosophila OFF motion pathway[J]. eLife, 2019, 8: e50706. doi: 10.7554/eLife.50706.

[34] FU Qinbing and YUE Shigang. Mimicking fly motion tracking and fixation behaviors with a hybrid visual neural network[C]. 2017 IEEE International Conference on Robotics and Biomimetics, Macau, China, 2017: 1636–1641. doi: 10.1109/ROBIO.2017.8324652.

[35] FU Qinbing, BELLOTTO N, WANG Huatian, et al. A visual neural network for robust collision perception in vehicle driving scenarios[C]. The 15th IFIP Advances in Information and Communication Technology, Hersonissos, Greece, 2019, 559: 67–79. doi: 10.1007/978-3-030-19823-7_5.

[36] WANG Hongxin, PENG Jigen, ZHENG Xuqiang, et al. A robust visual system for small target motion detection against cluttered moving backgrounds[J]. IEEE Transactions on Neural Networks and Learning Systems, 2020, 31(3): 839–853. doi: 10.1109/tnnls.2019.2910418.

[37] WU Zhihua and GUO Aike. Bioinspired figure-ground discrimination via visual motion smoothing[J]. PLoS Computational Biology, 2023, 19(4): e1011077. doi: 10.1371/journal.pcbi.1011077.

[38] CLARK D A, BURSZTYN L, HOROWITZ M A, et al. Defining the computational structure of the motion detector in Drosophila[J]. Neuron, 2011, 70(6): 1165–1177. doi: 10.1016/j.neuron.2011.05.023.

[39] SCHNEIDER J, MURALI N, TAYLOR G W, et al. Can Drosophila melanogaster tell who's who?[J]. PLoS One, 2018, 13(10): e0205043. doi: 10.1371/journal.pone.0205043.

[40] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/cvpr.2016.90.

[41] ZHAO Feifei, ZENG Yi, GUO Aike, et al. A neural algorithm for Drosophila linear and nonlinear decision-making[J]. Scientific Reports, 2020, 10(1): 18660. doi: 10.1038/s41598-020-75628-y.

[42] WEI Hui, BU Yijie, and DAI Dawei. A decision-making model based on a spiking neural circuit and synaptic plasticity[J]. Cognitive Neurodynamics, 2017, 11(5): 415–431. doi: 10.1007/s11571-017-9436-2.

[43] CAI Kuijie, SHEN Jihong, and WU Si. Decision-making in Drosophila with two conflicting cues[C]. The 8th International Symposium on Neural Networks, Guilin, China, 2011: 93–100. doi: 10.1007/978-3-642-21105-8_12.

[44] WU Zhihua and GUO Aike. A model study on the circuit mechanism underlying decision-making in Drosophila[J]. Neural Networks, 2011, 24(4): 333–344. doi: 10.1016/j.neunet.2011.01.002.

[45] FU Qinbing, HU Cheng, PENG Jigen, et al. Shaping the collision selectivity in a looming sensitive neuron model with parallel ON and OFF pathways and spike frequency adaptation[J]. Neural Networks, 2018, 106: 127–143. doi: 10.1016/j.neunet.2018.04.001.

[46] GUO Jianzeng and GUO Aike. Crossmodal interactions between olfactory and visual learning in Drosophila[J]. Science, 2005, 309(5732): 307–310. doi: 10.1126/science.1111280.

[47] HEISENBERG M and GERBER B. Behavioral analysis of learning and memory in Drosophila[J]. Learning and Memory: A Comprehensive Reference, 2008, 1: 549–559. doi: 10.1016/B978-012370509-9.00066-8.

[48] LI Hao. Neural mechanism of olfactory learning in Drosophila[D]. [Ph. D. dissertation], University of Chinese Academy of Sciences, 2011. (in Chinese)

[49] LIANG Yuchen, RYALI C K, HOOVER B, et al. Can a fruit fly learn word embeddings?[C]. The 9th International Conference on Learning Representations, Austria, 2021.

[50] ZHOU Mingmin, CHEN Nannan, TIAN Jingsong, et al. Suppression of GABAergic neurons through D2-like receptor secures efficient conditioning in Drosophila aversive olfactory learning[J]. Proceedings of the National Academy of Sciences of the United States of America, 2019, 116(11): 5118–5125. doi: 10.1073/pnas.1812342116.

[51] RAGUSO R A and WILLIS M A. Synergy between visual and olfactory cues in nectar feeding by wild hawkmoths, Manduca sexta[J]. Animal Behaviour, 2005, 69(2): 407–418. doi: 10.1016/j.anbehav.2004.04.015.

[52] ZHANG Lizhen, ZHANG Shaowu, WANG Zilong, et al. Cross-modal interaction between visual and olfactory learning in Apis cerana[J]. Journal of Comparative Physiology A, 2014, 200(10): 899–909. doi: 10.1007/s00359-014-0934-y.

[53] BALKENIUS A and BALKENIUS C. Multimodal interaction in the insect brain[J]. BMC Neuroscience, 2016, 17(1): 29. doi: 10.1186/s12868-016-0258-7.

[54] HARRAP M J M, LAWSON D A, WHITNEY H M, et al. Cross-modal transfer in visual and nonvisual cues in bumblebees[J]. Journal of Comparative Physiology A, 2019, 205(3): 427–437. doi: 10.1007/s00359-019-01320-w.

[55] LIU Jinying. Bioinspired memristive neural network circuit design of learning and memory and its application[D]. [Master dissertation], Southwest University, 2023. (in Chinese)

[56] THOMAS A. Memristor-based neural networks[J]. Journal of Physics D: Applied Physics, 2013, 46(9): 093001. doi: 10.1088/0022-3727/46/9/093001.

[57] DAI Xinyu, HUO Dexuan, GAO Zhanyuan, et al. A visual-olfactory multisensory fusion spike neural network for early fire/smoke detection[J]. Research Square, 2023. doi: 10.21203/rs.3.rs-3192562/v1.

[58] LU Yanli and LIU Qingjun. Insect olfactory system inspired biosensors for odorant detection[J]. Sensors & Diagnostics, 2022, 1(6): 1126–1142. doi: 10.1039/D2SD00112H.

[59] HORIBE J, ANDO N, and KANZAKI R. Odor-searching robot with insect-behavior-based olfactory sensor[J]. Sensors and Materials, 2021, 33(12): 4185–4202. doi: 10.18494/SAM.2021.3369.

[60] GARDNER J W and BARTLETT P N. A brief history of electronic noses[J]. Sensors and Actuators B: Chemical, 1994, 18(1/3): 210–211. doi: 10.1016/0925-4005(94)87085-3.

[61] ALI M M, HASHIM N, AZIZ S A, et al. Principles and recent advances in electronic nose for quality inspection of agricultural and food products[J]. Trends in Food Science & Technology, 2020, 99: 1–10. doi: 10.1016/j.tifs.2020.02.028.

[62] WASILEWSKI T, BRITO N F, SZULCZYŃSKI B, et al. Olfactory receptor-based biosensors as potential future tools in medical diagnosis[J]. TrAC Trends in Analytical Chemistry, 2022, 150: 116599. doi: 10.1016/j.trac.2022.116599.

[63] DWECK H K M, EBRAHIM S A M, RETZKE T, et al. The olfactory logic behind fruit odor preferences in larval and adult Drosophila[J]. Cell Reports, 2018, 23(8): 2524–2531. doi: 10.1016/j.celrep.2018.04.085.

[64] SONG Yanfeng, HAO Qun, CAO Jie, et al. Research and development of wide-field-of-view bionic compound eye photoelectric imaging detection technology[J]. Infrared and Laser Engineering, 2022, 51(5): 20210593. doi: 10.3788/IRLA20210593. (in Chinese)

[65] XU G J W, GUO Kun, PARK S H, et al. Bio-inspired vision mimetics toward next-generation collision-avoidance automation[J]. The Innovation, 2023, 4(1): 100368. doi: 10.1016/j.xinn.2022.100368.

[66] HOLEŠOVSKÝ O, ŠKOVIERA R, HLAVÁČ V, et al. Experimental comparison between event and global shutter cameras[J]. Sensors, 2021, 21(4): 1137. doi: 10.3390/s21041137.
