Multi-Unmanned Aerial Vehicles Trajectory Optimization for Age of Information Minimization in Wireless Sensor Networks


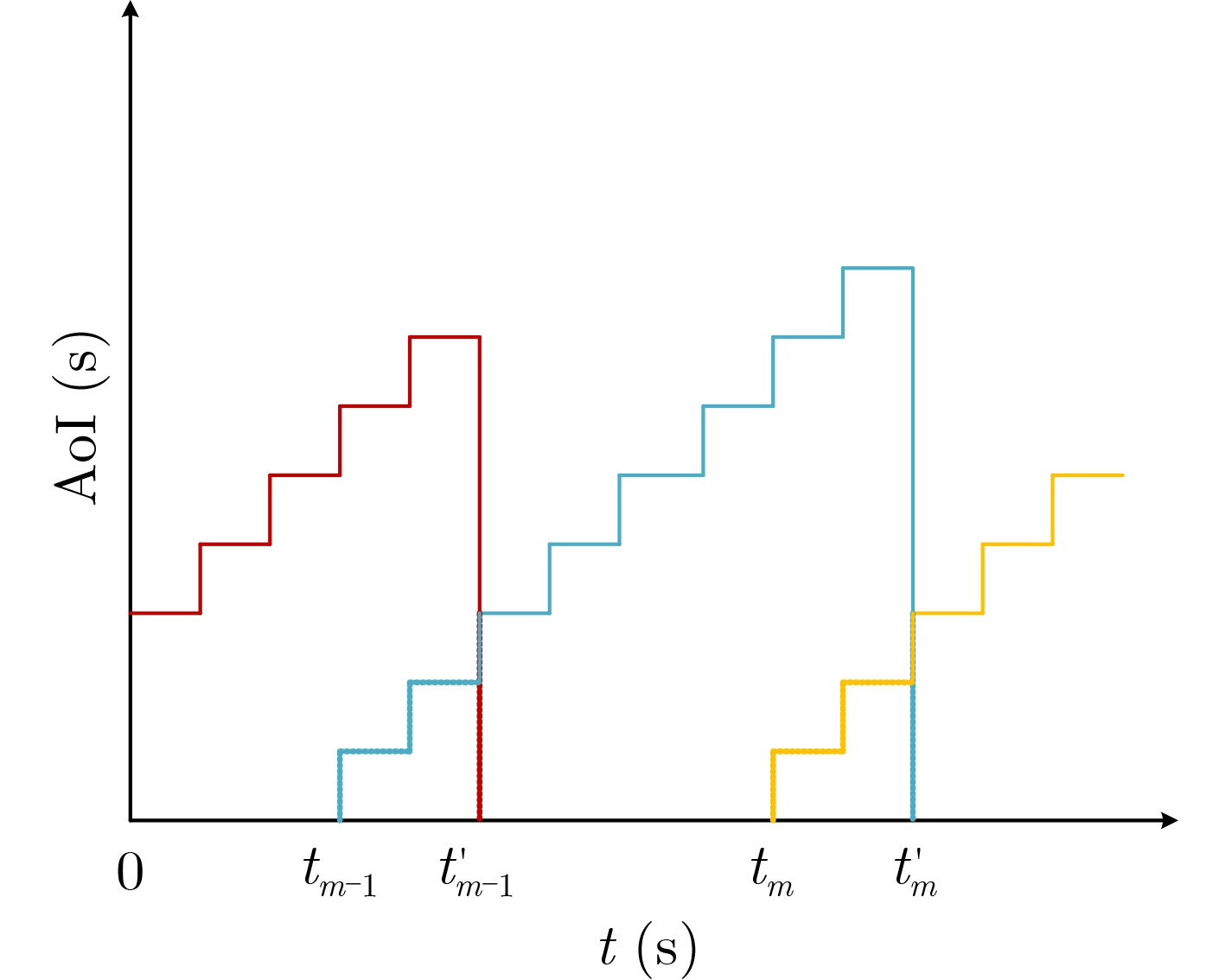

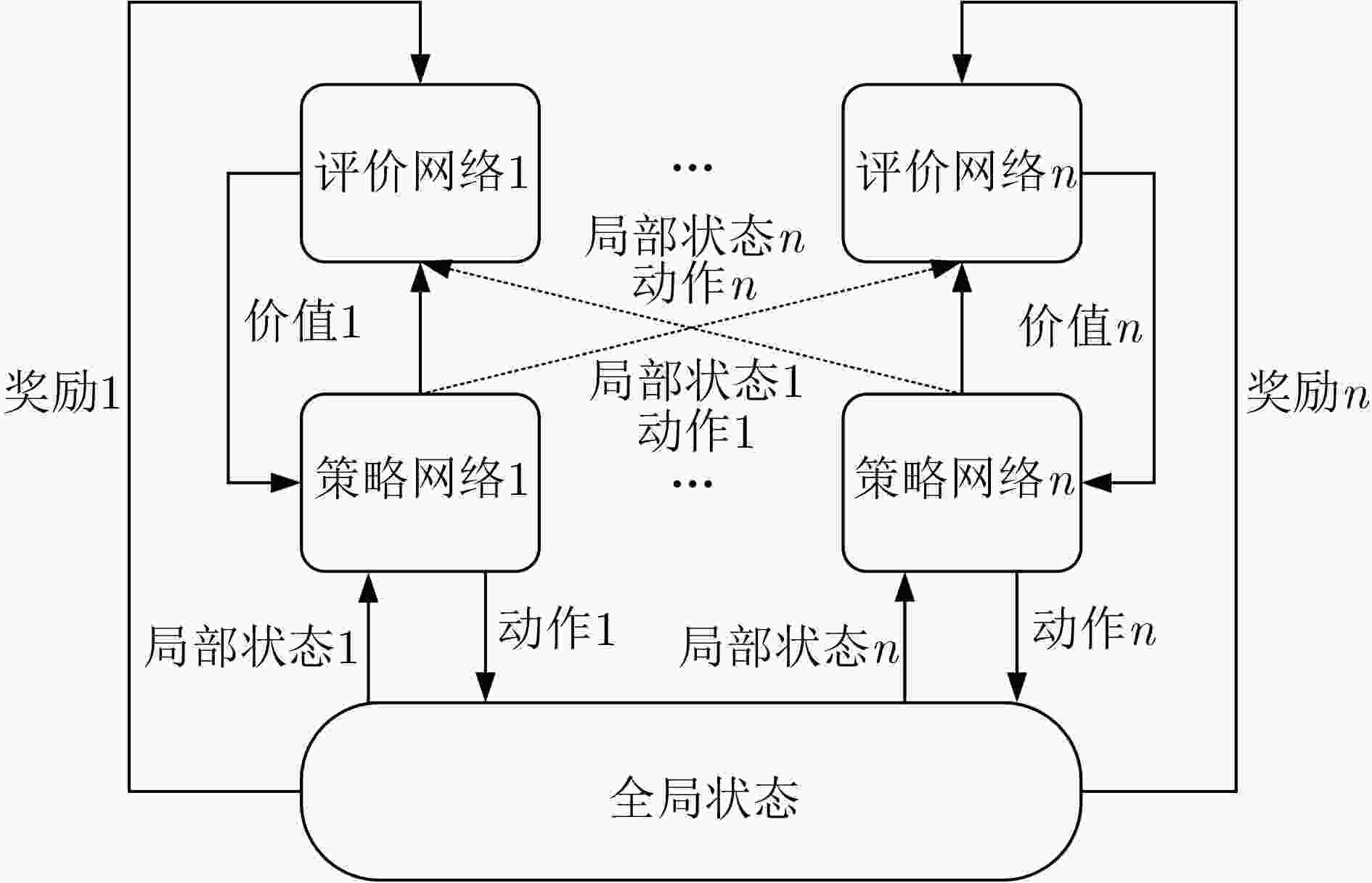

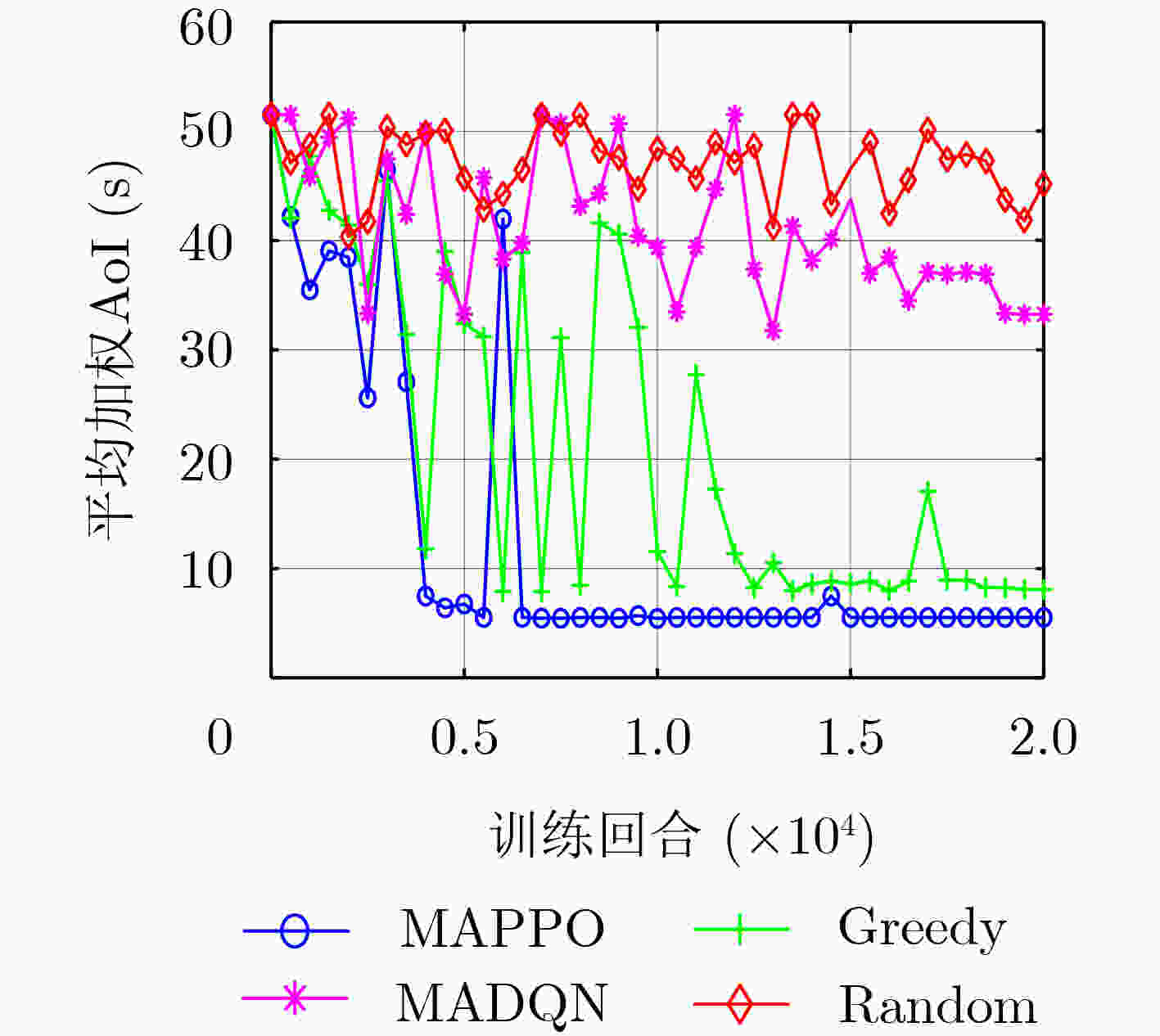

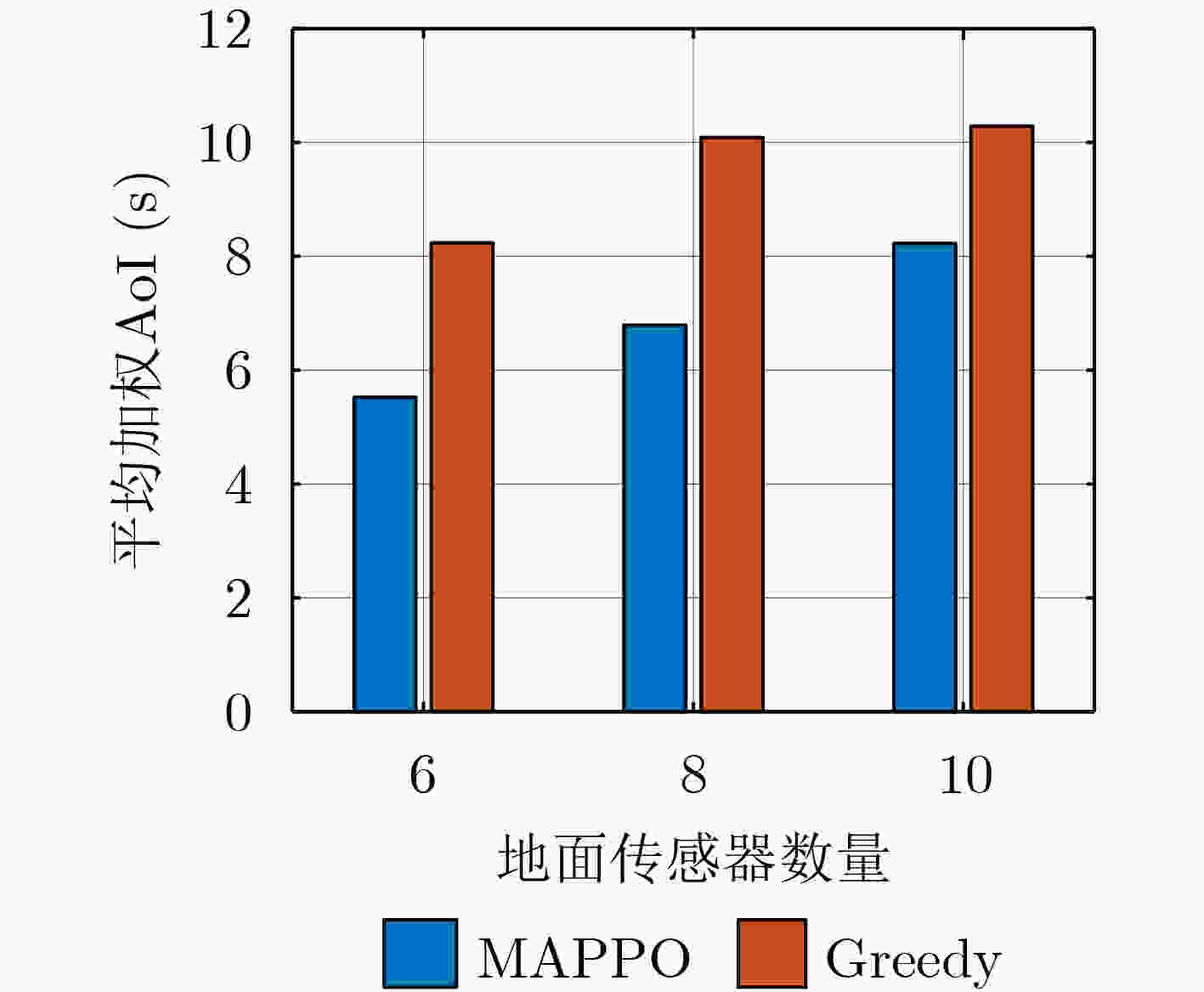

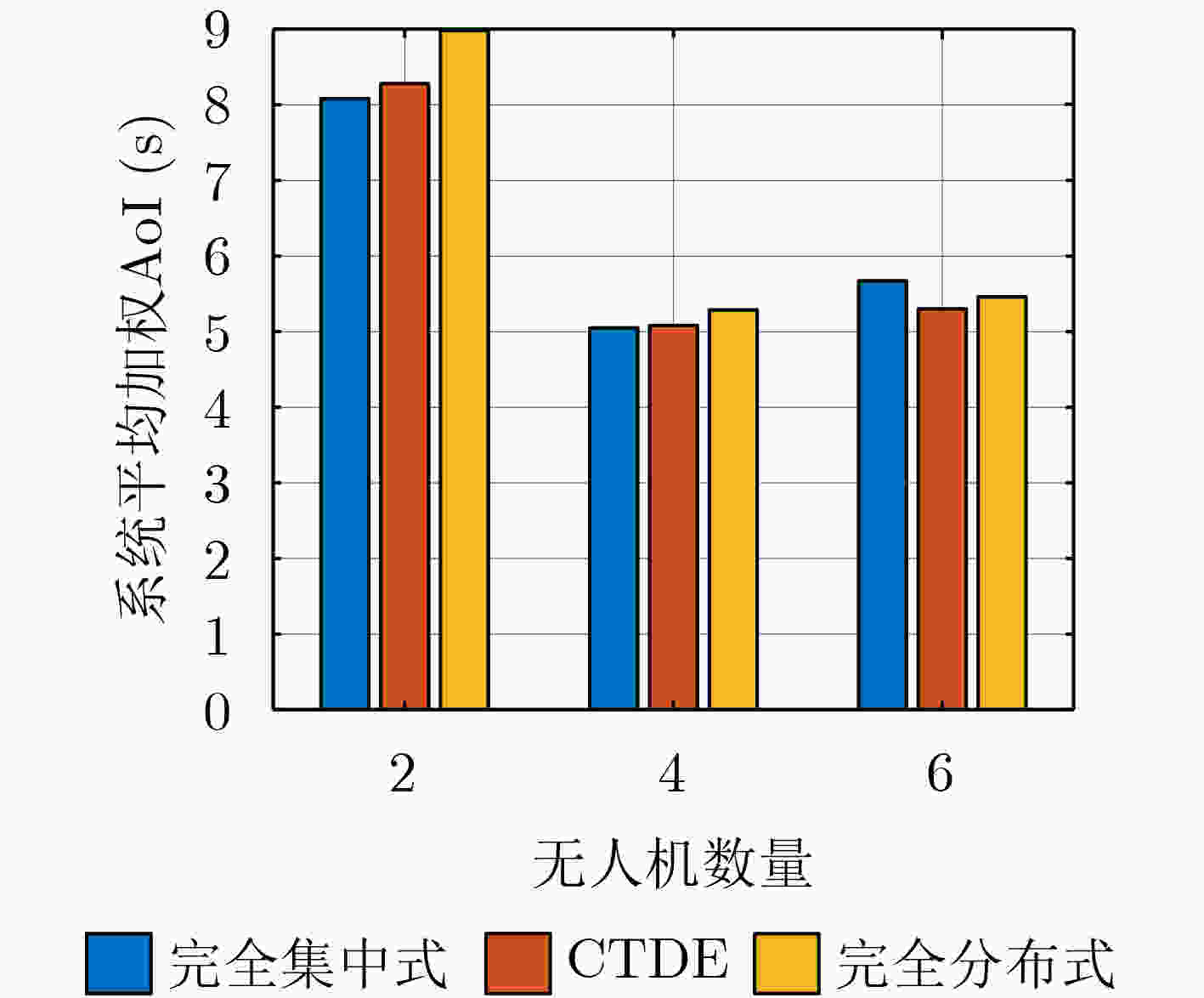

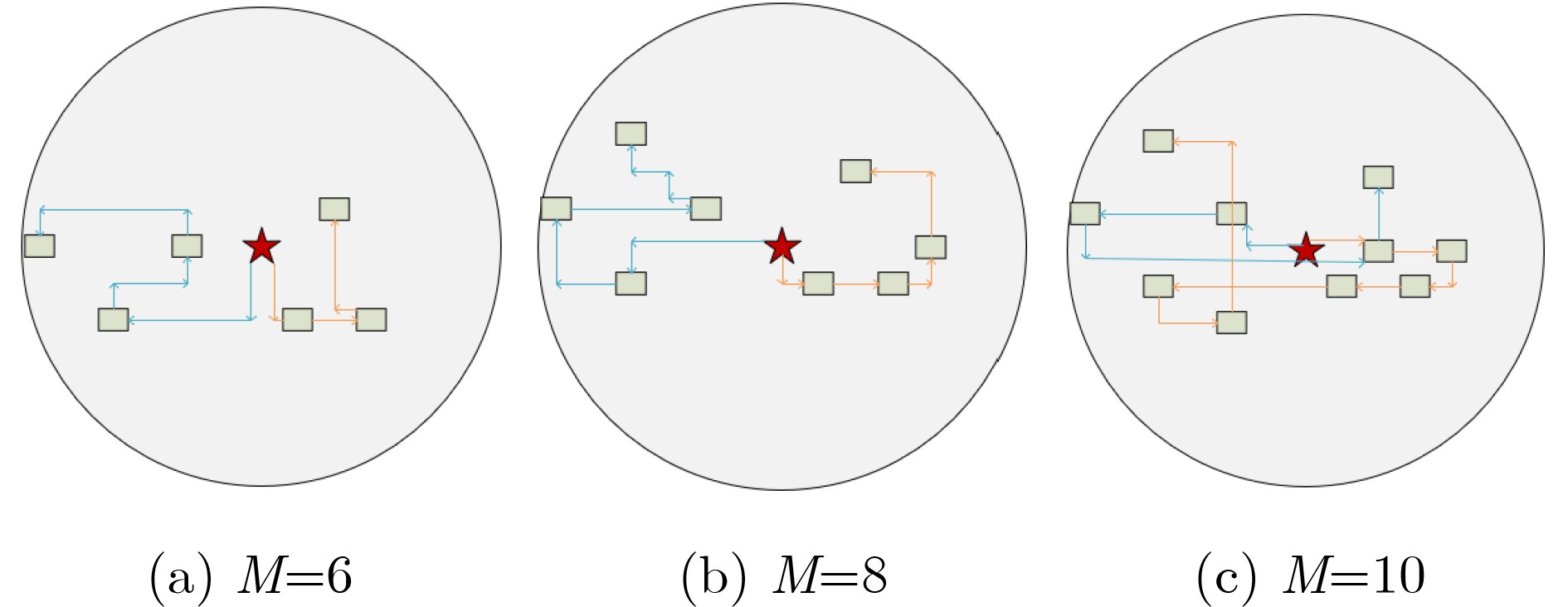

Abstract: Sensors in a Wireless Sensor Network (WSN) have limited transmit power and may be far from their Base Station (BS), so sensor data may not be delivered in time. The resulting loss of data freshness degrades decision quality in delay-sensitive services. Using Unmanned Aerial Vehicles (UAVs) to assist in collecting sensor data has therefore become an effective way to improve data freshness in WSNs. This paper measures data freshness with the Age of Information (AoI) metric and develops a UAV trajectory optimization algorithm based on Multi-Agent Proximal Policy Optimization (MAPPO), which follows a centralized-training, distributed-execution framework. By jointly optimizing the flight trajectories of all UAVs, the algorithm minimizes the average weighted AoI of the ground nodes. Simulation results verify the effectiveness of the proposed multi-UAV path planning algorithm in reducing the AoI of the WSN.
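The opening claim, that a low-power sensor far from the BS cannot deliver its data in time, can be checked with a rough link budget. The sketch below is a minimal illustration under assumptions that this section does not state: the channel power gain is taken as $ \beta_0 d^{-\mu} $, multiplied by the NLoS attenuation factor $ \psi $ when the link is non-line-of-sight; the rate follows the Shannon formula; the sensor-to-UAV link is LoS with the UAV hovering directly overhead; and the 3 km sensor-to-BS distance is hypothetical. Parameter values are those listed in Table 1.

```python
import math

# Values from Table 1; the channel model in rate_bps() is an assumed simplification.
P_S_W = 0.1            # sensor transmit power P_s (W)
NOISE_DBM = -100.0     # noise power sigma^2 (dBm)
BANDWIDTH_HZ = 5e6     # communication bandwidth B (Hz)
BETA0_DB = -60.0       # channel gain at the 1 m reference distance, beta_0 (dB)
MU = 2.0               # path-loss parameter mu
PSI_NLOS = 0.6         # NLoS attenuation factor psi
PACKET_BITS = 1e6      # packet size S (bits)
SLOT_S = 1.0           # time-slot length T_c (s)
UAV_HEIGHT_M = 120.0   # UAV flight altitude h_i (m)
BS_HEIGHT_M = 25.0     # BS height h_b (m)


def db_to_linear(db: float) -> float:
    """Convert a dB (or dBm) value to a linear ratio (or mW)."""
    return 10.0 ** (db / 10.0)


def rate_bps(distance_m: float, los: bool) -> float:
    """Assumed Shannon rate with gain beta_0 * d^-mu, scaled by psi on NLoS links."""
    gain = db_to_linear(BETA0_DB) * distance_m ** (-MU)
    if not los:
        gain *= PSI_NLOS
    noise_w = db_to_linear(NOISE_DBM) / 1000.0  # dBm -> mW -> W
    snr = P_S_W * gain / noise_w
    return BANDWIDTH_HZ * math.log2(1.0 + snr)


def upload_time_s(distance_m: float, los: bool) -> float:
    """Time needed to send one packet of PACKET_BITS over the link."""
    return PACKET_BITS / rate_bps(distance_m, los)


# Ground sensor with a UAV hovering directly overhead (LoS assumed).
t_uav = upload_time_s(UAV_HEIGHT_M, los=True)
# Hypothetical ground sensor 3 km (horizontal) from the BS, NLoS assumed.
d_bs = math.hypot(3000.0, BS_HEIGHT_M)
t_bs = upload_time_s(d_bs, los=False)

print(f"to UAV: {t_uav:.3f} s, to BS: {t_bs:.3f} s, slot length: {SLOT_S} s")
```

Under these assumptions a packet sent to an overhead UAV takes a few hundredths of a second, whereas the same packet over the 3 km NLoS link needs more than two 1 s slots; this is the gap that UAV-assisted collection is meant to close.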

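The AoI model itself is not defined in this section. The sketch below assumes the common discrete-time form: a node's AoI restarts at 1 in a slot where some UAV collects its packet and otherwise grows by 1, and the average weighted AoI is the time average of $ \sum_k w_k \Delta_k(t) $ with weights summing to 1. The function names, node weights, and collection schedule are hypothetical and serve only to show how the objective is evaluated.

```python
from typing import List, Sequence


def step_aoi(aoi: Sequence[int], collected: Sequence[bool]) -> List[int]:
    # Assumed per-slot AoI dynamics: a node whose packet is collected by some
    # UAV in this slot restarts at 1; every other node ages by one slot.
    return [1 if got else age + 1 for age, got in zip(aoi, collected)]


def average_weighted_aoi(aoi_history: Sequence[Sequence[int]],
                         weights: Sequence[float]) -> float:
    # Per-slot weighted AoI sum_k w_k * AoI_k(t) (weights assumed to sum to 1),
    # averaged over all recorded slots.
    per_slot = [sum(w * a for w, a in zip(weights, aoi)) for aoi in aoi_history]
    return sum(per_slot) / len(per_slot)


weights = [0.5, 0.3, 0.2]      # hypothetical importance weights of three ground nodes
aoi = [1, 1, 1]                # hypothetical initial AoI of each node
schedule = [                   # hypothetical collection events, one row per slot
    [True, False, False],
    [False, True, False],
    [False, False, False],
    [False, False, True],
]

history = []
for collected in schedule:
    aoi = step_aoi(aoi, collected)
    history.append(aoi)

print(history, average_weighted_aoi(history, weights))
```

In this framing, the trajectory optimizer's job is to choose UAV paths whose collection schedule keeps the weighted sum low, favoring heavily weighted nodes whose AoI would otherwise grow fastest in the objective.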

算法1 基于MAPPO方法的多无人机路径规划算法 初始化:Actor网络、Critic网络的参数$ \theta $和$ \omega $,设置训练最大回

合数(episode)为$ T $,单个回合最大步数(step)为$ L $,无人机数量

为$ N $,批采样大小(batch size)为$ B $,迭代(epoch)大小为$ K $;For episode=1: $ T $ do 初始化每个无人机状态$ {s_i}(t) $、联合状态$ S(t) $ For step=1: $ L $ do 每个无人机根据自身策略选择动作$ {a_i}(t) $ 执行动作$ A(t) = \left\{ {{a_1}(t),{a_2}(t),\cdots,{a_n}(t)} \right\} $,得到奖励

$ R(t) $和下一状态$ S(t{\text{ + 1}}) $For UAV=1: $ N $ do 将采样轨迹$ ({s_i}(t),{a_i}(t),r(t),{s_i}(t + 1)) $存入经验池$ D $ 基于采样轨迹计算GAE For epoch=1:$ K $ do 从经验池$ D $随机抽取小批次数据$ B $来训练网络 应用Adam优化器求梯度下降 根据式(20)更新Actor网络参数$ \theta = \theta ' $ 根据式(21)更新Critic网络参数$ \omega = \omega ' $ End for End for $ s(t) = s(t + 1),\;S(t) = S(t + 1) $ End for End for 表 1 仿真环境参数

参数 数值 无人机飞行高度$ {h_i} $(m) 120 基站高度$ {h_{\text{b}}} $(m) 25 无人机的飞行速度$ v $(m) 10 最小防碰撞距离$ {d_{\min }} $(m) 5 无人机传输功率$ {P_{\text{u}}} $(dBm) 23 传感器传输功率$ {P_{\text{s}}} $(w) 0.1 噪声功率$ {\sigma ^2} $(dBm) –100 通信带宽$ B $(MHz) 5 时隙长度$ {T_{\text{c}}} $(s) 1 数据包大小$ S $(bits) 1e6 NLoS衰减因子$ \psi $ 0.6 路径衰落参数$ \mu $ 2 参考距离为1m时的信道增益$ {\beta _0} $(dB) –60 表 2 强化学习参数

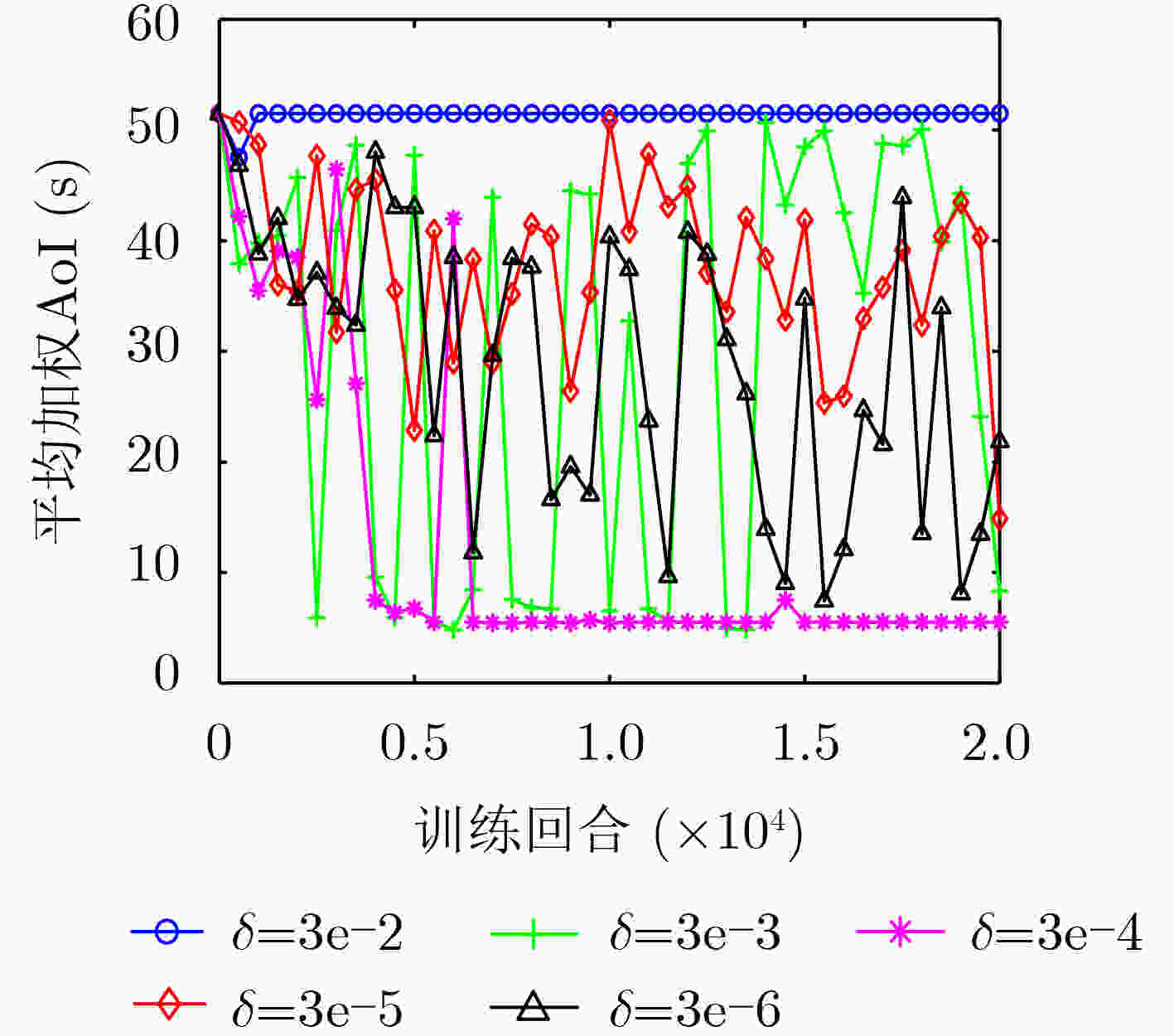

参数 数值 训练回合数$ {T_{\max }} $ 2e4 每个回合最大步数$ {l_{\max }} $ 100 经验池大小$ D $ 1e4 采样批次大小$ B $ 256 折扣因子$ \gamma $ 0.95 Actor网络学习率$ {\delta _1} $ 3e–4 Critic网络学习率$ {\delta _2} $ 3e–4 裁剪超参数$ \varepsilon $ 0.2 GAE的$ \lambda $ 0.95 -
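Algorithm 1 computes the GAE from the sampled trajectories and then updates the Actor and Critic by Eqs. (20) and (21), which are not reproduced in this section. Assuming they take the standard PPO forms (clipped surrogate objective for the Actor, mean-squared value error for the Critic), the per-sample quantities can be sketched in plain Python with the Table 2 values $ \gamma = 0.95 $, $ \lambda = 0.95 $ and $ \varepsilon = 0.2 $. The function names are hypothetical, and the Adam gradient step itself is left to a deep-learning framework.

```python
import math
from typing import List, Sequence, Tuple

GAMMA = 0.95      # discount factor gamma (Table 2)
LAMBDA = 0.95     # GAE lambda (Table 2)
CLIP_EPS = 0.2    # clipping hyperparameter epsilon (Table 2)


def compute_gae(rewards: Sequence[float], values: Sequence[float],
                next_values: Sequence[float], dones: Sequence[bool],
                gamma: float = GAMMA, lam: float = LAMBDA
                ) -> Tuple[List[float], List[float]]:
    """Generalized Advantage Estimation via the backward recursion
    A_t = delta_t + gamma * lam * A_{t+1}, with bootstrapping cut at terminal steps."""
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        not_done = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_values[t] * not_done - values[t]
        running = delta + gamma * lam * not_done * running
        advantages[t] = running
    returns = [a + v for a, v in zip(advantages, values)]  # Critic targets
    return advantages, returns


def ppo_actor_loss(logp_new: Sequence[float], logp_old: Sequence[float],
                   advantages: Sequence[float], eps: float = CLIP_EPS) -> float:
    """Negative clipped surrogate objective averaged over a mini-batch (assumed form of Eq. (20))."""
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)                      # pi_new / pi_old
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped * adv)       # pessimistic bound
    return -total / len(advantages)


def critic_loss(values: Sequence[float], returns: Sequence[float]) -> float:
    """Mean-squared error between Critic values and GAE returns (assumed form of Eq. (21))."""
    return sum((v - r) ** 2 for v, r in zip(values, returns)) / len(values)


adv, ret = compute_gae(rewards=[1.0, 0.0, -1.0], values=[0.5, 0.2, 0.1],
                       next_values=[0.2, 0.1, 0.0], dones=[False, False, True])
print(adv, ret)
```

In the centralized-training, distributed-execution setting described in the abstract, the Critic can use the joint state $ S(t) $ during training while each Actor acts only on its own state $ s_i(t) $, so only the Actors need to run on the UAVs at execution time.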

