High-precision Gesture Recognition Based on DenseNet and Convolutional Block Attention Module


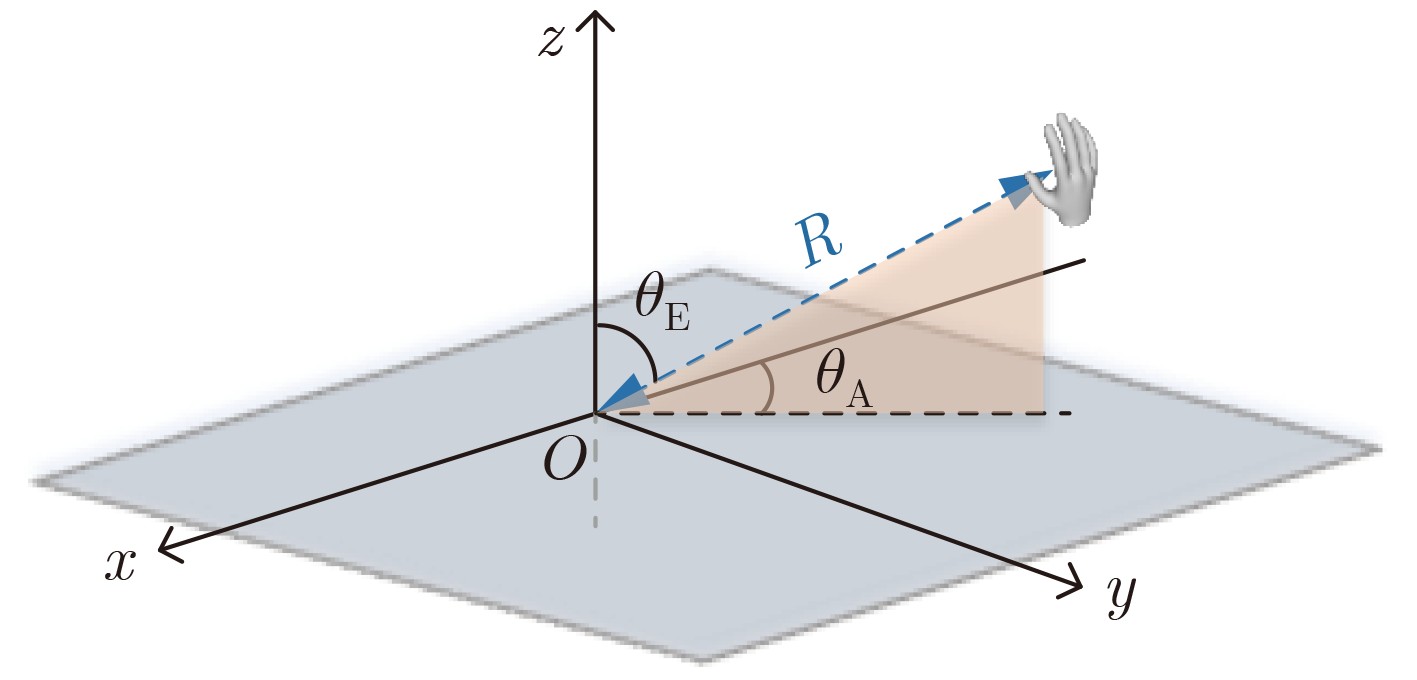

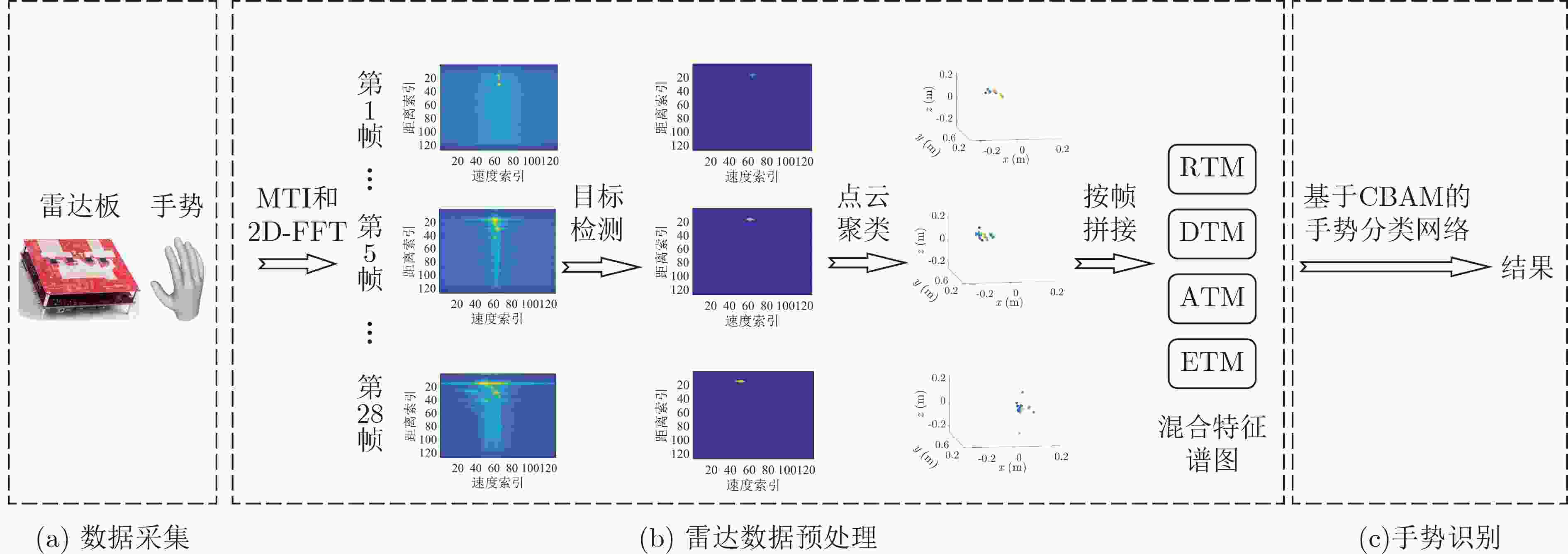

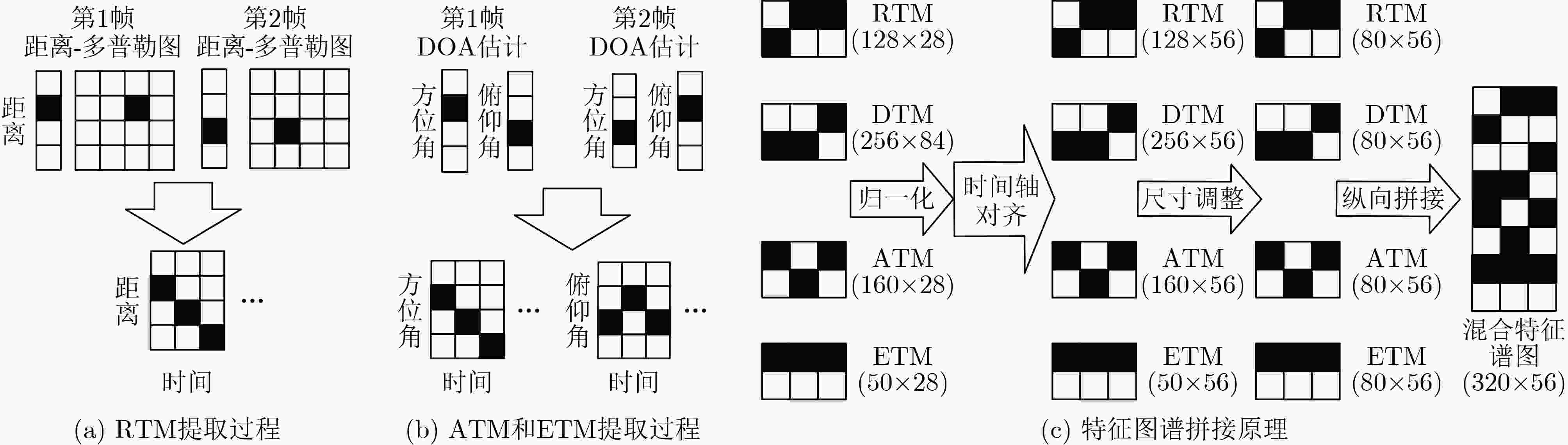

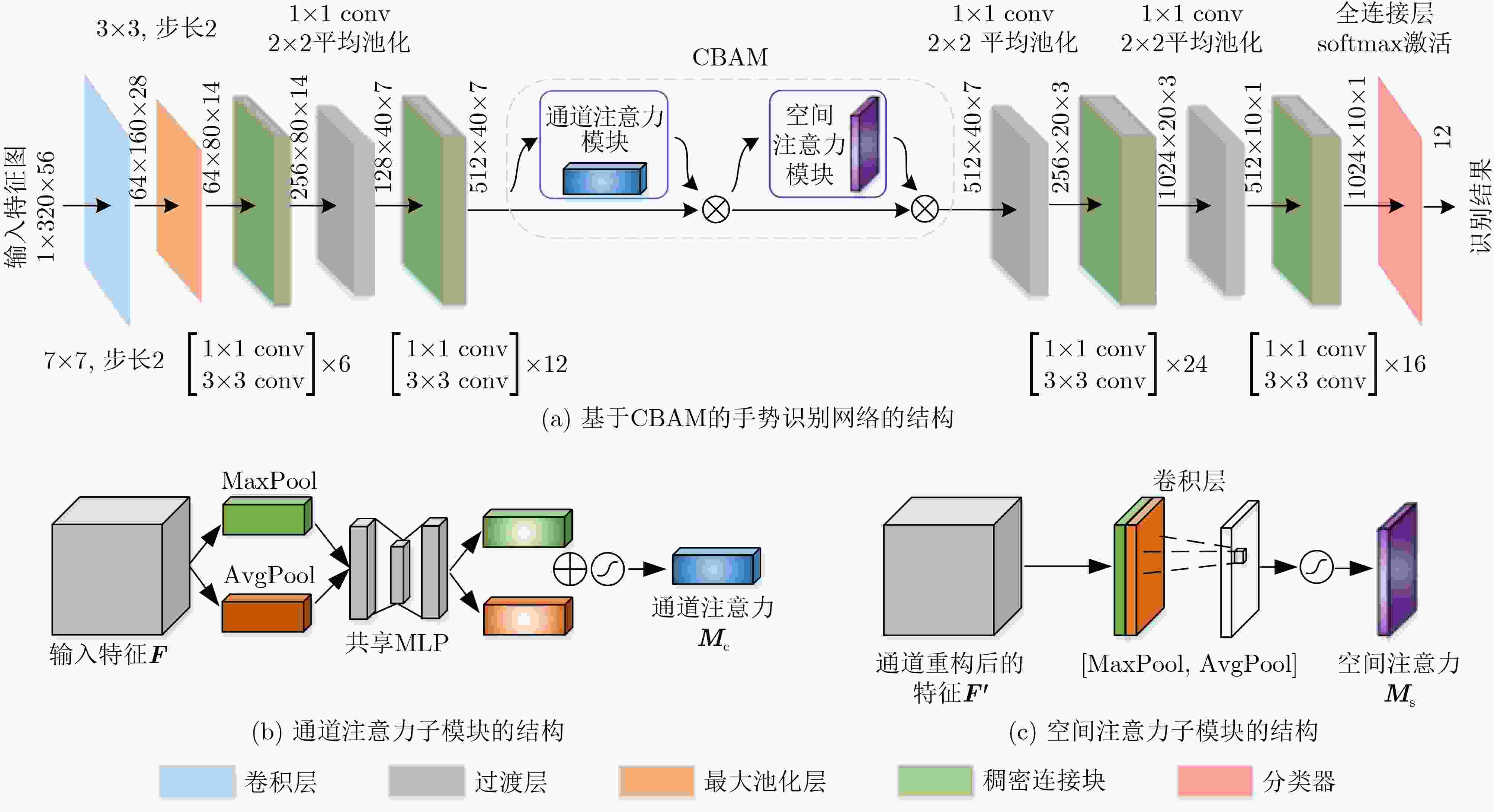

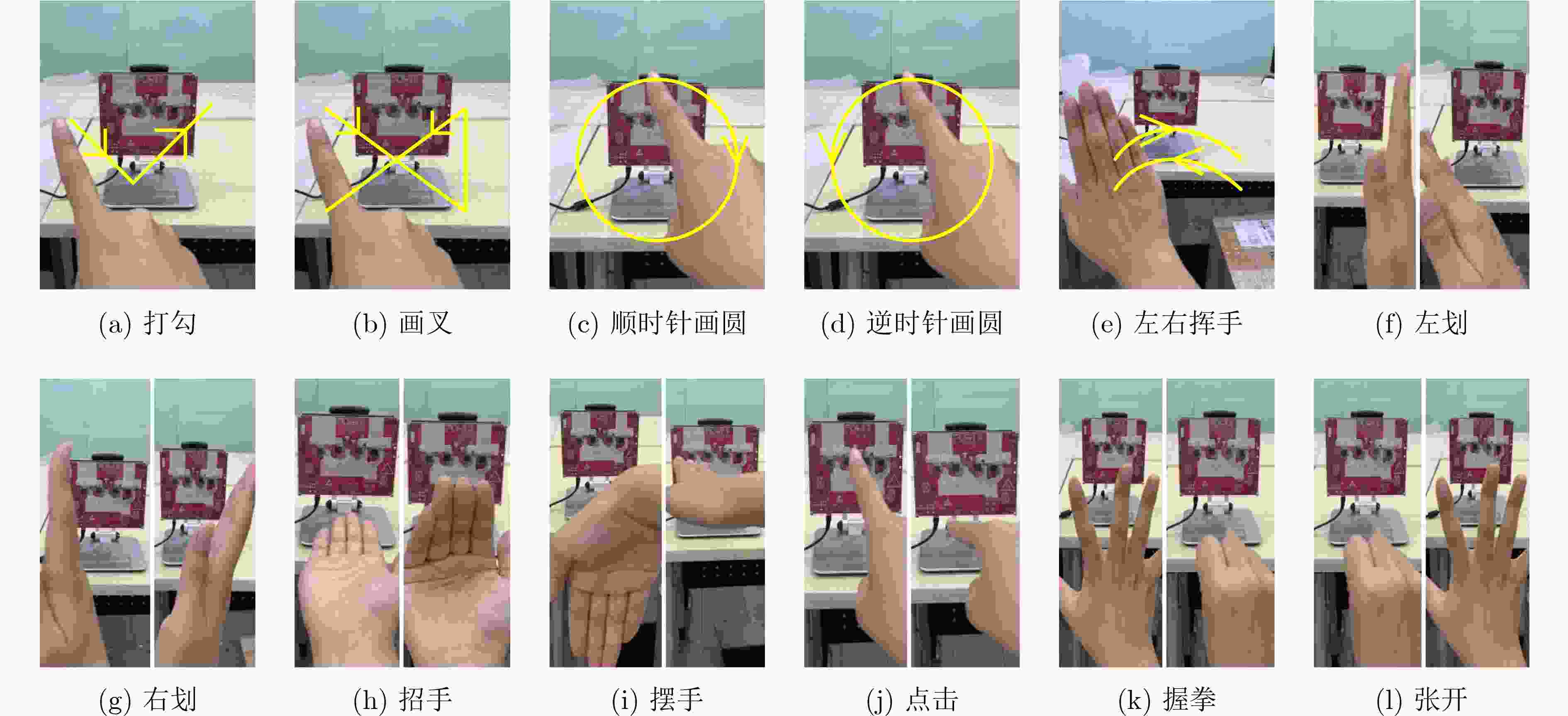

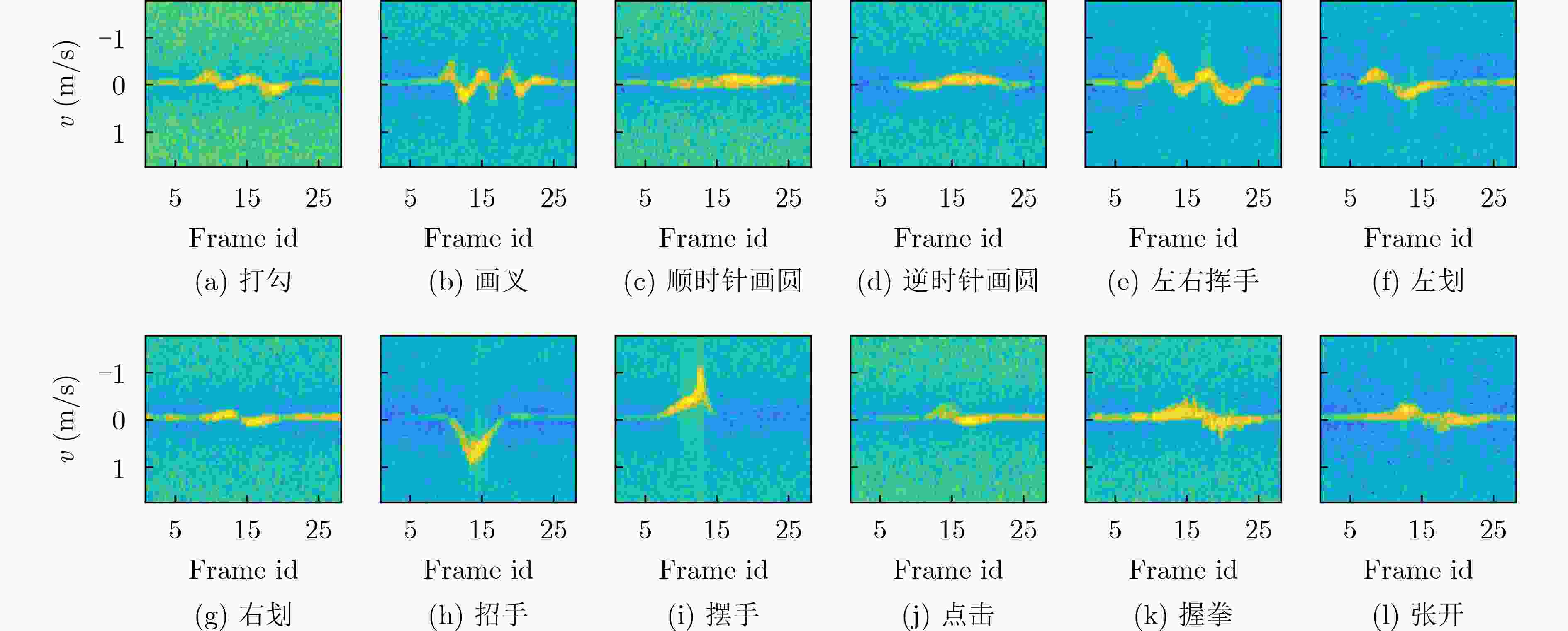

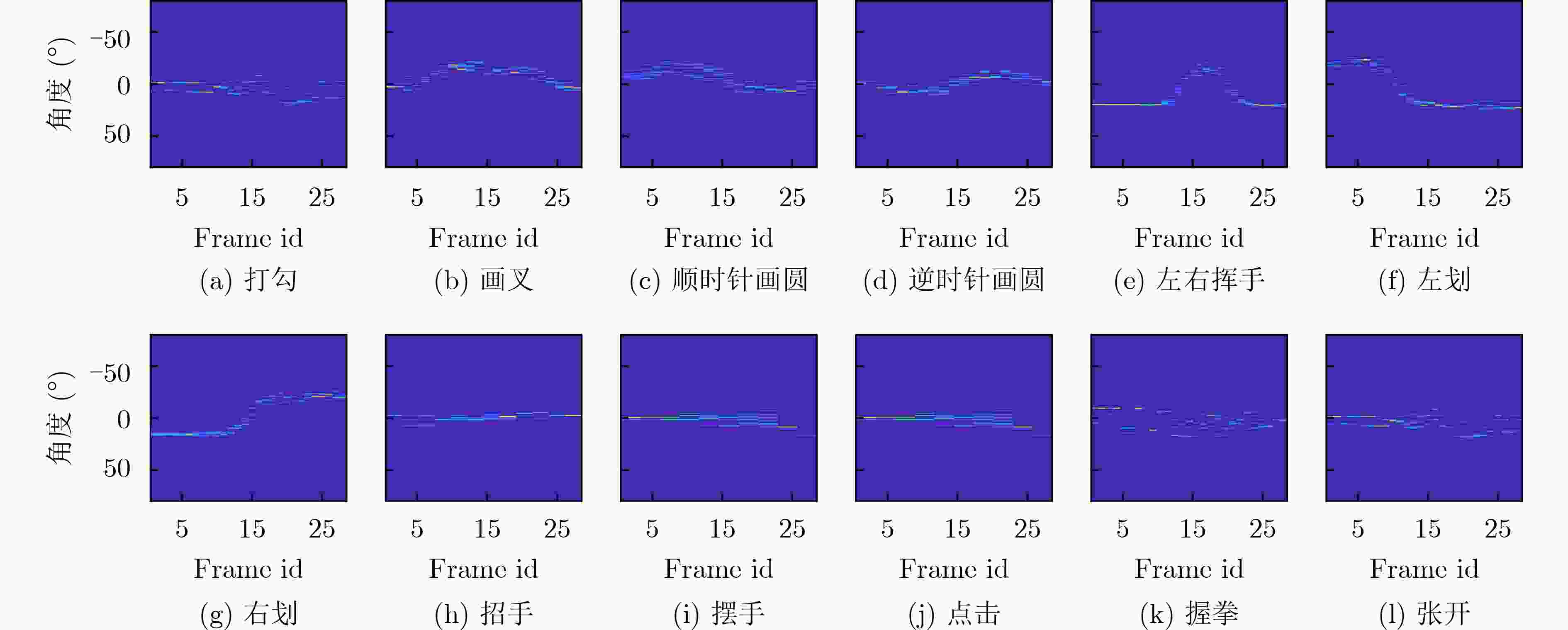

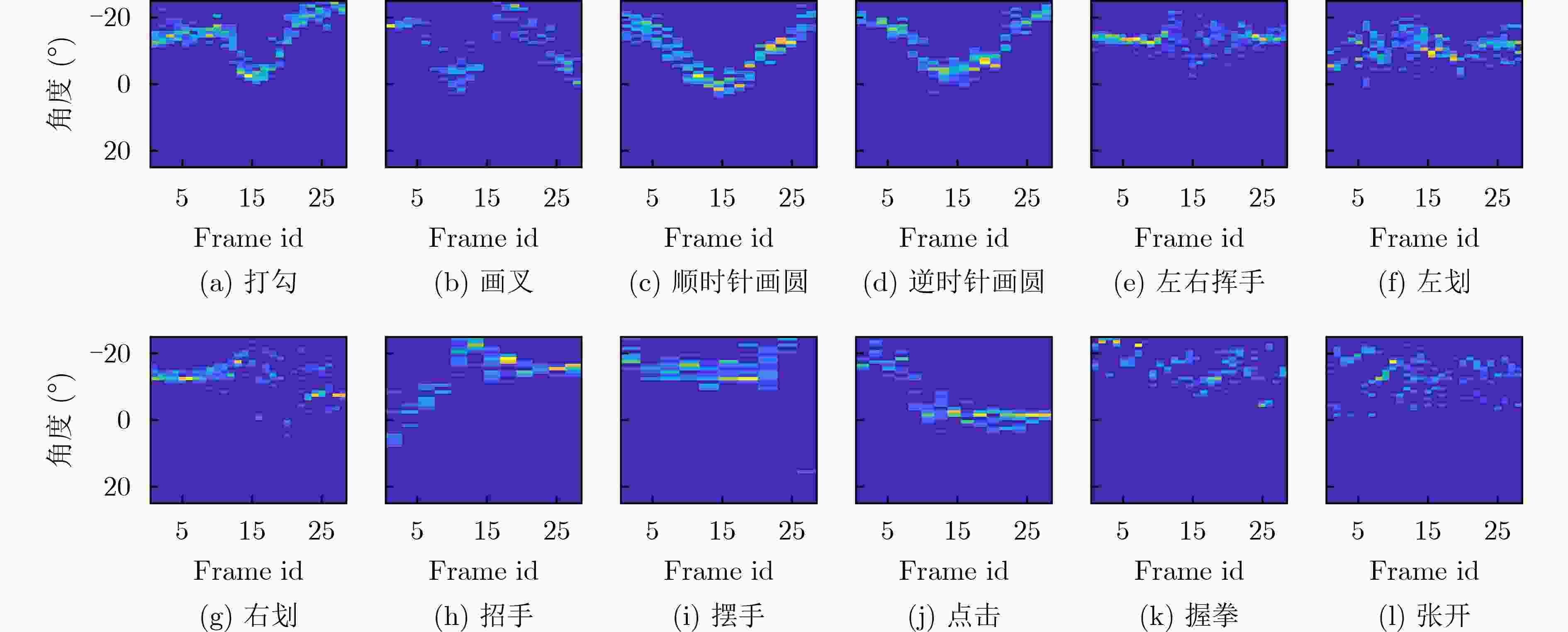

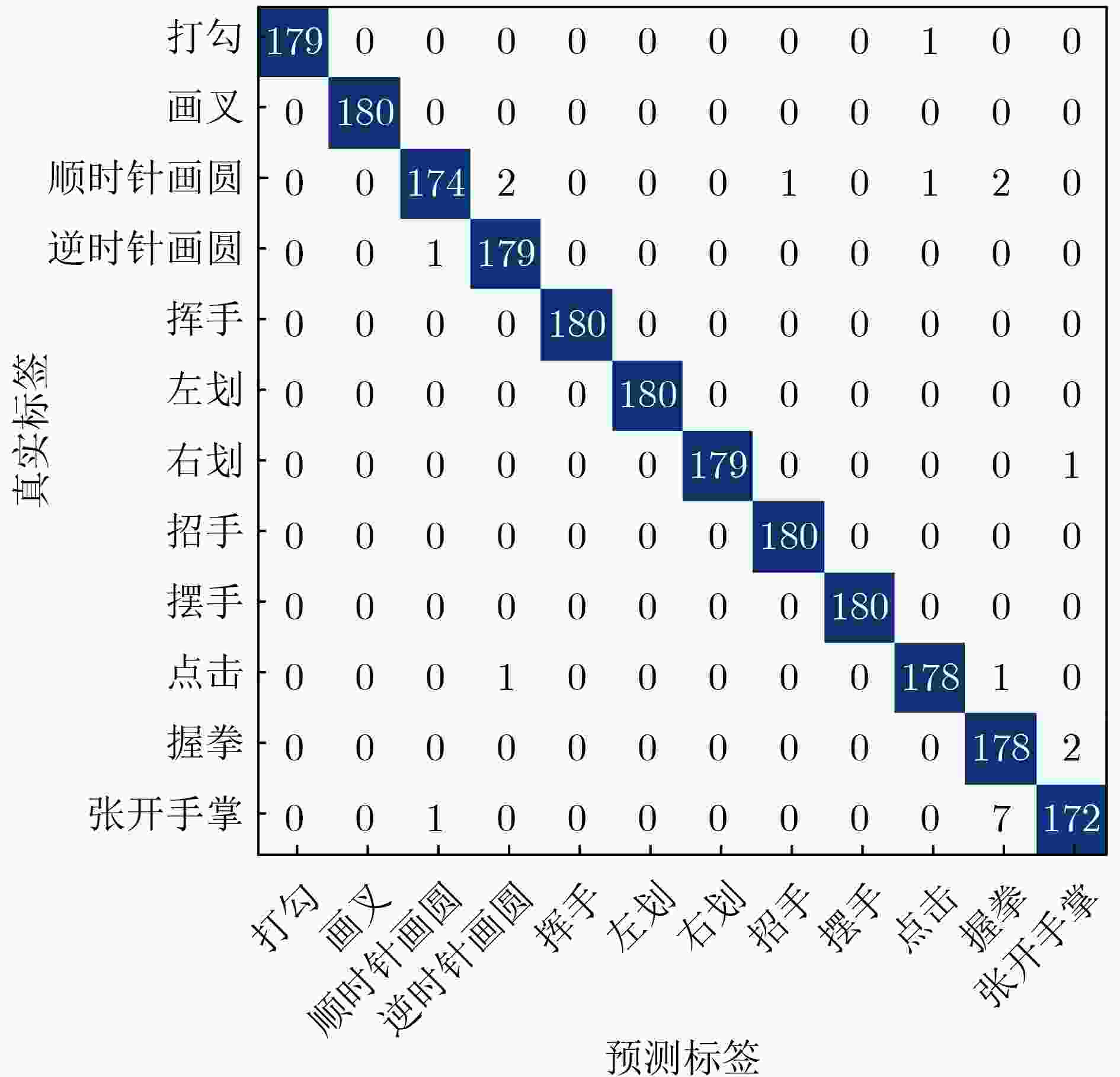

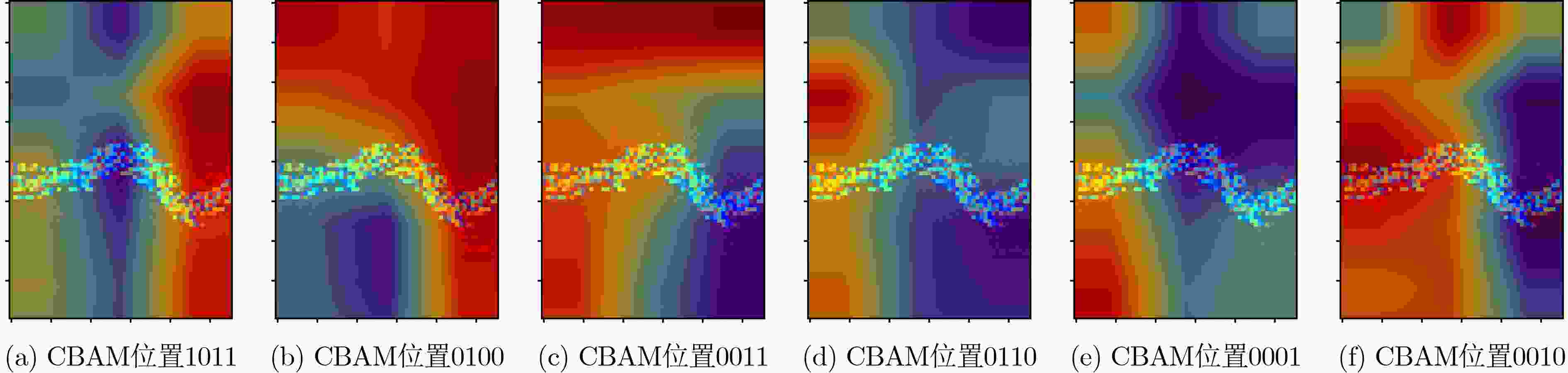

Abstract: Non-contact gesture recognition is a new form of human-computer interaction. It has broad application prospects in Augmented Reality (AR)/Virtual Reality (VR), smart homes, smart healthcare, and other fields, and has become a research hotspot in recent years. To recognize micro-motion gestures more precisely with millimeter-wave (mmWave) radar, this paper proposes a novel micro-motion gesture recognition method based on Multiple-Input Multiple-Output (MIMO) mmWave radar. Gesture echoes are collected with a MilliMeter Wave CAScaded (MMWCAS) radar built by cascading four AWR1243 radar boards. Time-frequency analysis is performed on the echoes, and the hand target is detected from the Range-Doppler (RD) map and the 3D point cloud. Through data preprocessing, the Range-Time Map (RTM), Doppler-Time Map (DTM), Azimuth-Time Map (ATM), and Elevation-Time Map (ETM) of each gesture are extracted to characterize the hand motion more comprehensively. These maps are combined into a mixed Feature-Time Map (FTM), which is used to recognize 12 types of micro-motion gestures. A gesture recognition network based on DenseNet and the Convolutional Block Attention Module (CBAM) is designed, with the mixed FTM as its input. Experimental results show that the recognition accuracy reaches 99.03%, and that the network focuses its attention on the first half of each gesture movement, achieving high-accuracy gesture recognition.
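The abstract states that the hand target is detected from the Range-Doppler (RD) map. A common way to form an RD map for one receive channel is a DFT along fast time (the ADC samples within a chirp) followed by a DFT along slow time (across the chirps of a frame). The sketch below is illustrative only and is not the authors' pipeline: it assumes an ideal, noise-free beat signal from a single point target whose range and Doppler fall exactly on DFT bins, uses hypothetical sizes far smaller than the frames in Table 1, and uses a naive O(N²) DFT to stay dependency-free. Practical processing typically uses windowed FFTs, shifts zero Doppler to the center of the map, and applies a detector such as CFAR.

```python
import cmath
import math


def dft(x):
    """Naive DFT, X[k] = sum_t x[t] * exp(-2j*pi*k*t/N); O(N^2), for illustration only."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def range_doppler_map(frame):
    """frame[m][n]: chirp m (slow time), ADC sample n (fast time).

    Returns the magnitude map rd[doppler_bin][range_bin].
    """
    # 1) Range DFT along fast time, one per chirp.
    range_profiles = [dft(chirp) for chirp in frame]
    num_chirps, num_range = len(frame), len(range_profiles[0])
    rd = [[0.0] * num_range for _ in range(num_chirps)]
    # 2) Doppler DFT along slow time, one per range bin.
    for r in range(num_range):
        spectrum = dft([range_profiles[m][r] for m in range(num_chirps)])
        for d in range(num_chirps):
            rd[d][r] = abs(spectrum[d])
    return rd


def synthetic_frame(num_chirps, num_samples, range_bin, doppler_bin):
    """Hypothetical ideal beat signal of one point target that falls exactly on the given bins."""
    return [
        [cmath.exp(2j * math.pi * (range_bin * n / num_samples + doppler_bin * m / num_chirps))
         for n in range(num_samples)]
        for m in range(num_chirps)
    ]


frame = synthetic_frame(num_chirps=8, num_samples=16, range_bin=5, doppler_bin=2)
rd = range_doppler_map(frame)
# The strongest cell of the RD map marks the target's (Doppler bin, range bin).
peak = max(
    ((d, r) for d in range(len(rd)) for r in range(len(rd[0]))),
    key=lambda idx: rd[idx[0]][idx[1]],
)
print(peak)  # (2, 5)
```

Because the target sits exactly on a bin, all of its energy lands in one cell with magnitude 8 × 16 = 128; with real data, off-bin targets leak into neighboring cells, which is why windowing is normally applied before each transform.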

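The network described in the abstract inserts CBAM into DenseNet. The following framework-free sketch shows the CBAM forward pass as formulated by Woo et al.: channel attention Mc = σ(MLP(AvgPool(F)) + MLP(MaxPool(F))) with a shared two-layer MLP, followed by spatial attention Ms = σ(f([AvgPool(F′); MaxPool(F′)])) computed across channels. It is a sketch under stated assumptions, not the authors' implementation: the weights and the 4-channel 3 × 3 feature map are hypothetical placeholders, the MLP omits biases, and a 3 × 3 spatial kernel stands in for the 7 × 7 kernel of the original CBAM because the example map is only 3 × 3. Where CBAM is placed inside DenseNet121 is not modeled here.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Dense matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def channel_attention(f, w0, w1):
    """Mc = sigmoid(W1 ReLU(W0 avg) + W1 ReLU(W0 max)); f is C x H x W.

    w0 has shape (C/r) x C and w1 has shape C x (C/r); the MLP is shared
    between the average- and max-pooled descriptors (biases omitted here).
    """
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(max(row) for row in ch) for ch in f]

    def mlp(v):
        hidden = [max(0.0, h) for h in matvec(w0, v)]  # ReLU
        return matvec(w1, hidden)

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(f, kernel):
    """Ms = sigmoid(conv([avg_c(f); max_c(f)])) with a 2 x k x k kernel and zero padding."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[i][y][x] for i in range(c)) / c for x in range(w)] for y in range(h)]
    mx = [[max(f[i][y][x] for i in range(c)) for x in range(w)] for y in range(h)]
    k = len(kernel[0])
    pad = k // 2
    ms = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for plane, desc in zip(kernel, (avg, mx)):
                for dy in range(k):
                    for dx in range(k):
                        yy, xx = y + dy - pad, x + dx - pad
                        if 0 <= yy < h and 0 <= xx < w:
                            acc += plane[dy][dx] * desc[yy][xx]
            ms[y][x] = sigmoid(acc)
    return ms


def cbam(f, w0, w1, kernel):
    """Channel attention first, then spatial attention, applied sequentially."""
    mc = channel_attention(f, w0, w1)
    f1 = [[[v * mc[i] for v in row] for row in ch] for i, ch in enumerate(f)]
    ms = spatial_attention(f1, kernel)
    return [[[v * ms[y][x] for x, v in enumerate(row)] for y, row in enumerate(ch)] for ch in f1]


# Hypothetical 4-channel 3x3 feature map, reduction ratio r = 2, and all-zero weights,
# so both attention maps equal sigmoid(0) = 0.5 and the output is 0.25 * input.
f = [[[float(i + y + x) for x in range(3)] for y in range(3)] for i in range(4)]
w0 = [[0.0] * 4 for _ in range(2)]
w1 = [[0.0] * 2 for _ in range(4)]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
out = cbam(f, w0, w1, kernel)
print(out[1][2][2])  # 1.25
```

With trained weights the two attention maps differ per channel and per position, letting the network reweight feature-map channels and regions; the zero-weight case is only a sanity check that the module preserves the tensor shape.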

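Table 1 Millimeter-wave radar parameter settings

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Transmit antennas | 12 | Chirp bandwidth | 5 GHz |
| Receive antennas | 16 | Chirp slope | 125 MHz/µs |
| Frames captured | 28 | ADC sampling rate | 4000 ksps |
| Chirps per frame | 128 | Frame period | 72 ms |
| Samples per chirp | 128 | | |

Table 2 Experimental platform

| Item | Model/Specification |
|---|---|
| CPU | R5-4600H |
| GPU | GTX1660TI |
| Memory | 16 GB |
| Operating system | Windows 10, 64-bit |

Table 3 Comparison of the effect of data augmentation on various CNN models

| Metric | VGG16 | ResNet50 | ResNet101 | DenseNet121 | DenseNet161 |
|---|---|---|---|---|---|
| Recognition rate, original data (%) | 96.57 | 79.21 | 95.09 | 97.36 | 97.68 |
| Recognition rate after data augmentation (%) | 97.08 | 91.76 | 97.45 | 98.15 | 98.29 |
| Computational complexity: computation per batch | 5,509,133,824 | 1,558,820,864 | 3,082,369,024 | 956,748,800 | 2,550,592,320 |
| Number of network parameters | 134,308,556 | 23,526,412 | 42,518,540 | 7,972,648 | 28,671,688 |
| Time per iteration after data augmentation (s) | 73.44 | 48.25 | 74.67 | 60.02 | 149.58 |

Table 4 Comparison of CBAM at different positions in DenseNet121 (%)

| Position | Recognition rate | Position | Recognition rate |
|---|---|---|---|
| 0000 | 98.15 | 1000 | 98.93 |
| 0001 | 97.87 | 1001 | 98.19 |
| 0010 | 99.03 | 1010 | 98.80 |
| 0011 | 98.47 | 1011 | 97.45 |
| 0100 | 98.80 | 1100 | 97.69 |
| 0101 | 98.47 | 1101 | 97.77 |
| 0110 | 98.89 | 1110 | 98.28 |
| 0111 | 97.96 | 1111 | 97.91 |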
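The radar settings in Table 1 fix several standard FMCW quantities. This sketch computes them with the textbook relations ΔR = c/(2B) for range resolution and R_max = f_IF,max · c/(2S) for maximum range, where S is the chirp slope. Assumptions, none of which are stated in Table 1: c is rounded to 3 × 10^8 m/s; the usable IF bandwidth equals the ADC rate for complex sampling and half of it for real sampling, which depends on the ADC mode; and the 128 chirps per frame are treated as available on every virtual channel when sizing the data cube. The names `RadarConfig` and `derived` are hypothetical. Velocity resolution is omitted because the carrier frequency and chirp repetition interval are not listed.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT = 3e8  # m/s, rounded for readability


@dataclass
class RadarConfig:
    """Chirp and frame settings copied from Table 1 (SI units)."""
    bandwidth_hz: float
    slope_hz_per_s: float
    adc_rate_sps: float
    samples_per_chirp: int
    chirps_per_frame: int
    frames: int
    frame_period_s: float
    num_tx: int
    num_rx: int


def derived(cfg: RadarConfig, complex_sampling: bool = True) -> dict:
    """Standard FMCW quantities implied by a chirp configuration."""
    adc_window_s = cfg.samples_per_chirp / cfg.adc_rate_sps
    sampled_bandwidth_hz = cfg.slope_hz_per_s * adc_window_s
    # Assumption: complex baseband sampling passes IF up to fs, real sampling up to fs/2.
    max_if_hz = cfg.adc_rate_sps if complex_sampling else cfg.adc_rate_sps / 2
    virtual_channels = cfg.num_tx * cfg.num_rx
    return {
        "range_res_nominal_m": SPEED_OF_LIGHT / (2 * cfg.bandwidth_hz),
        "range_res_sampled_m": SPEED_OF_LIGHT / (2 * sampled_bandwidth_hz),
        "max_range_m": max_if_hz * SPEED_OF_LIGHT / (2 * cfg.slope_hz_per_s),
        "capture_time_s": cfg.frames * cfg.frame_period_s,
        "virtual_channels": virtual_channels,
        # Assumption: every chirp of the frame is available on every virtual channel.
        "cube_samples_per_frame": cfg.samples_per_chirp * cfg.chirps_per_frame * virtual_channels,
    }


table1 = RadarConfig(
    bandwidth_hz=5e9,
    slope_hz_per_s=125e12,  # 125 MHz/us = 125e12 Hz/s
    adc_rate_sps=4e6,       # 4000 ksps
    samples_per_chirp=128,
    chirps_per_frame=128,
    frames=28,
    frame_period_s=72e-3,
    num_tx=12,
    num_rx=16,
)

d = derived(table1)
for name, value in d.items():
    print(name, value)
```

With the Table 1 values this gives a nominal range resolution of 3 cm (3.75 cm if only the 4 GHz swept during the 32 µs ADC window is counted), a maximum range of 4.8 m with complex sampling (2.4 m with real sampling), a capture window of 28 × 72 ms ≈ 2.016 s, 12 × 16 = 192 virtual channels, and 3,145,728 complex samples per frame.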

