Research Advances and New Paradigms for Biology-inspired Spiking Neural Networks


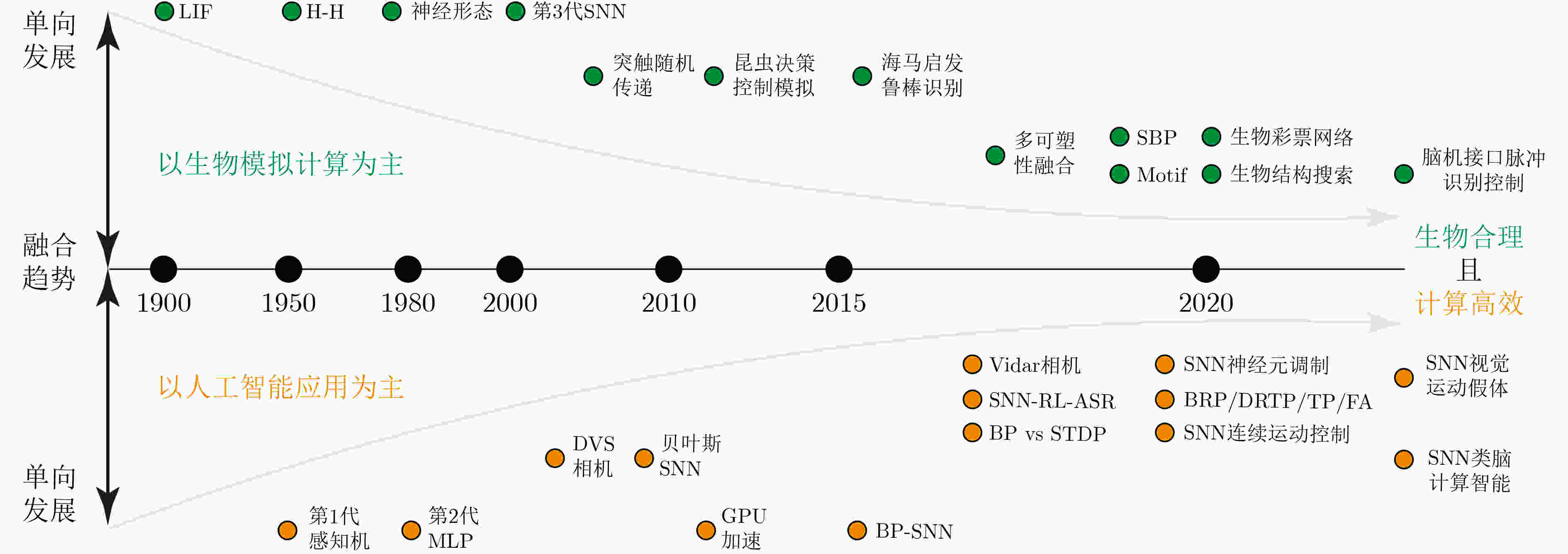

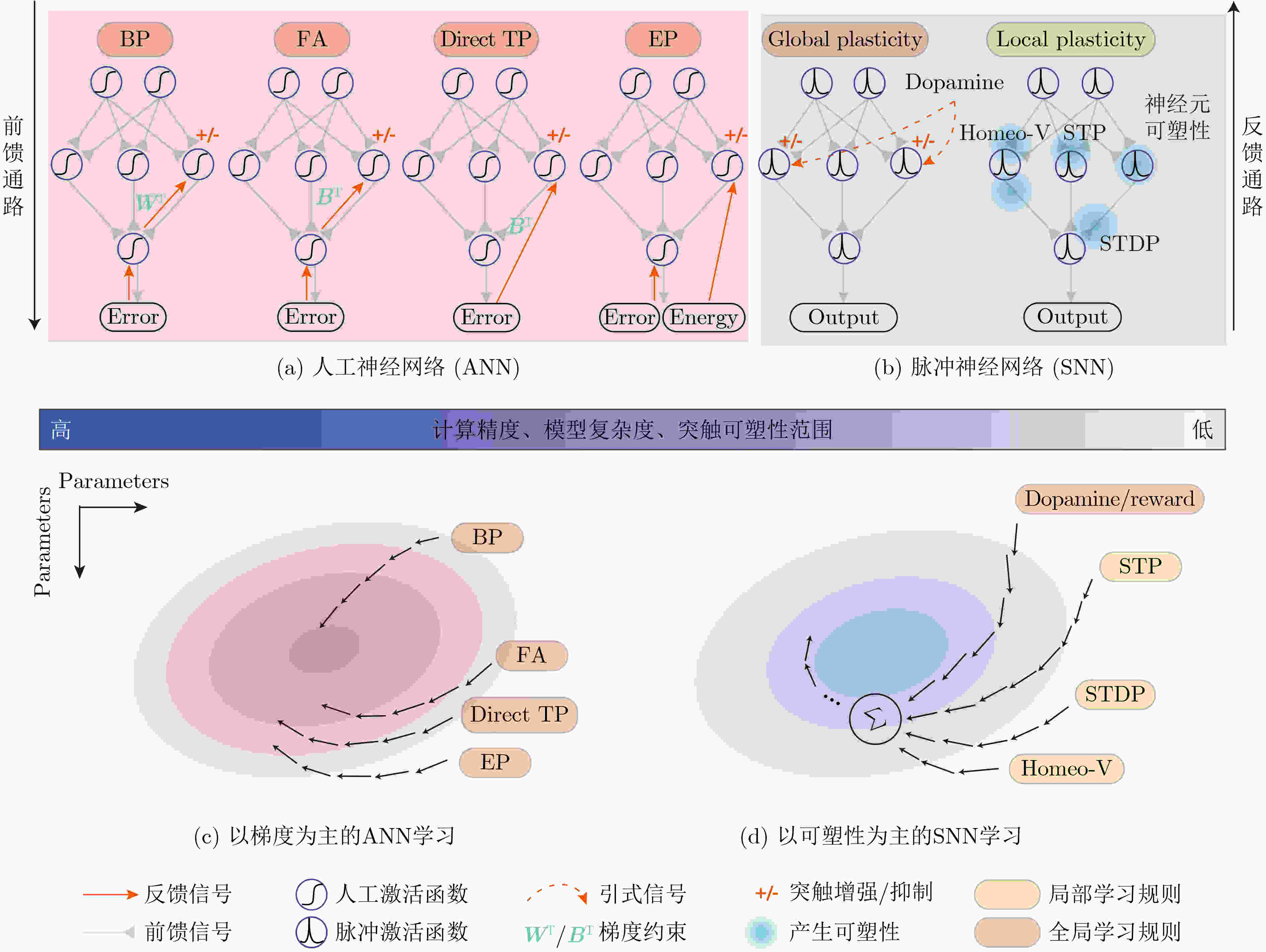

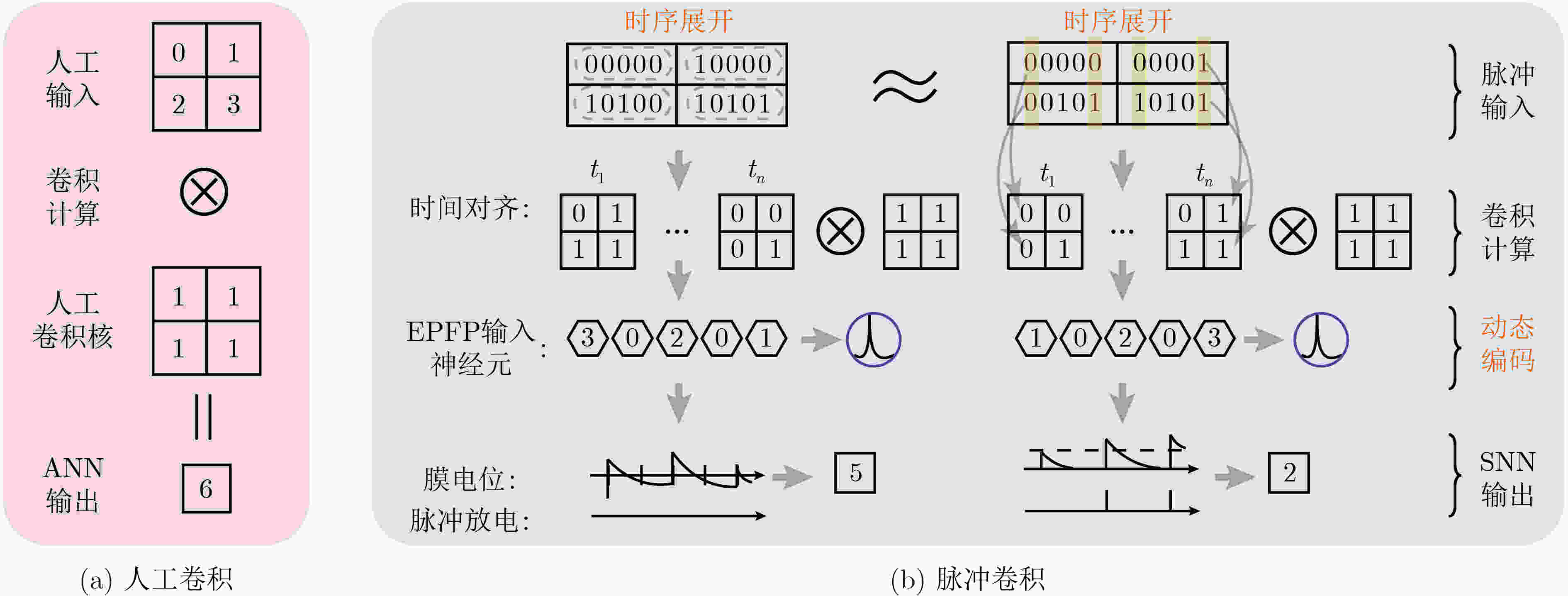

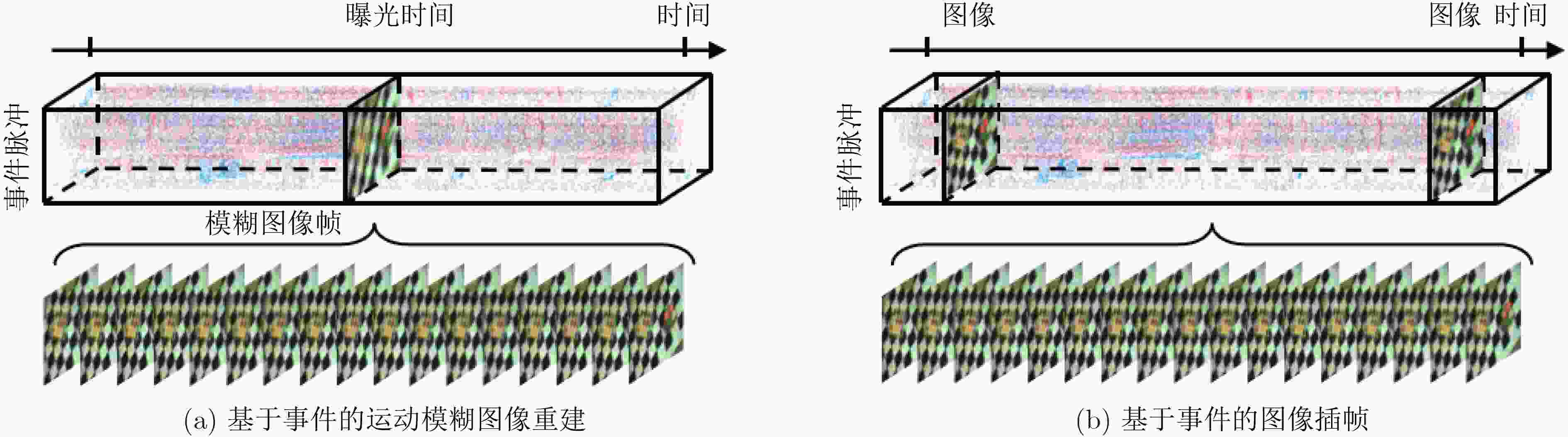

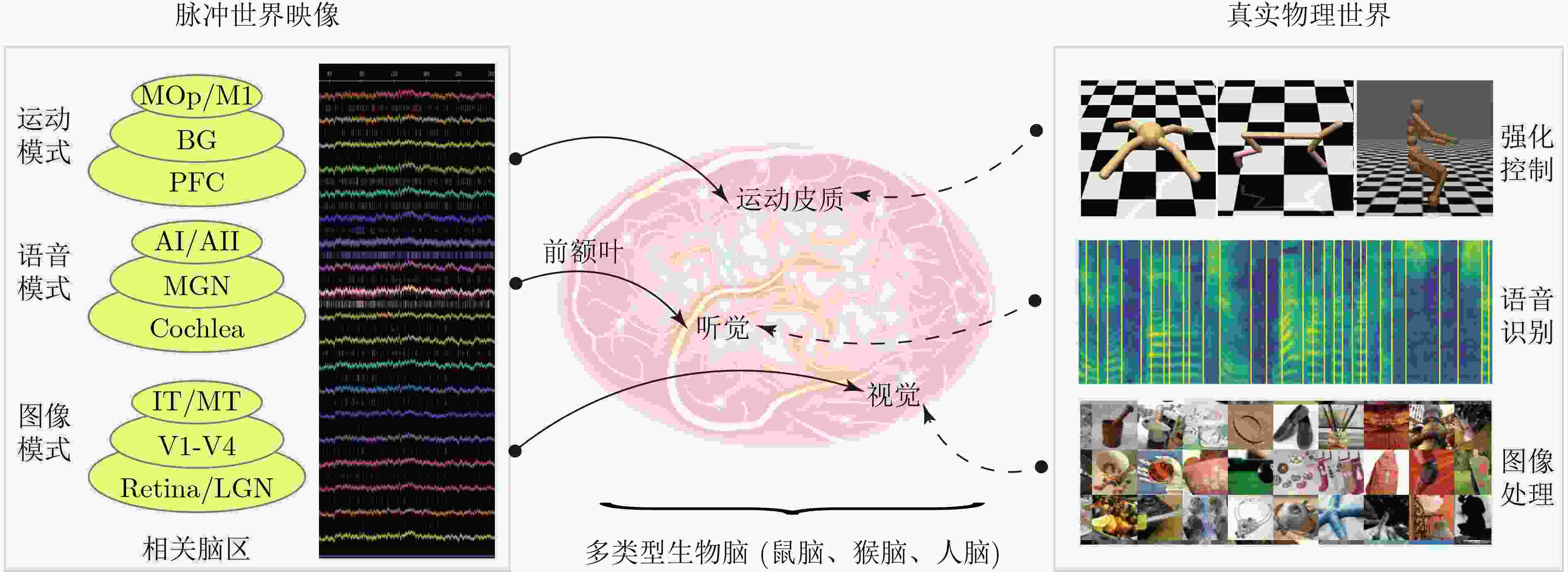

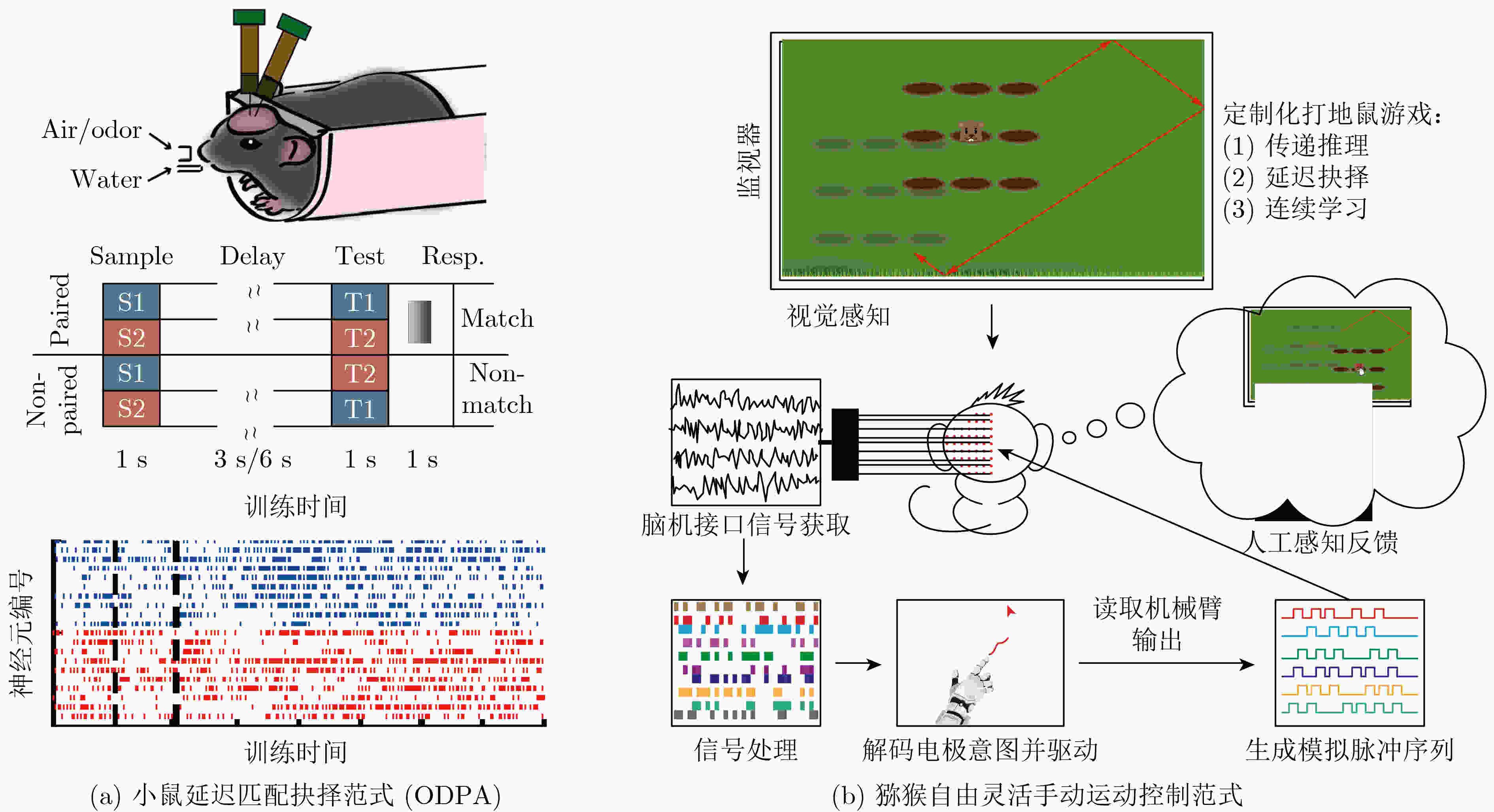

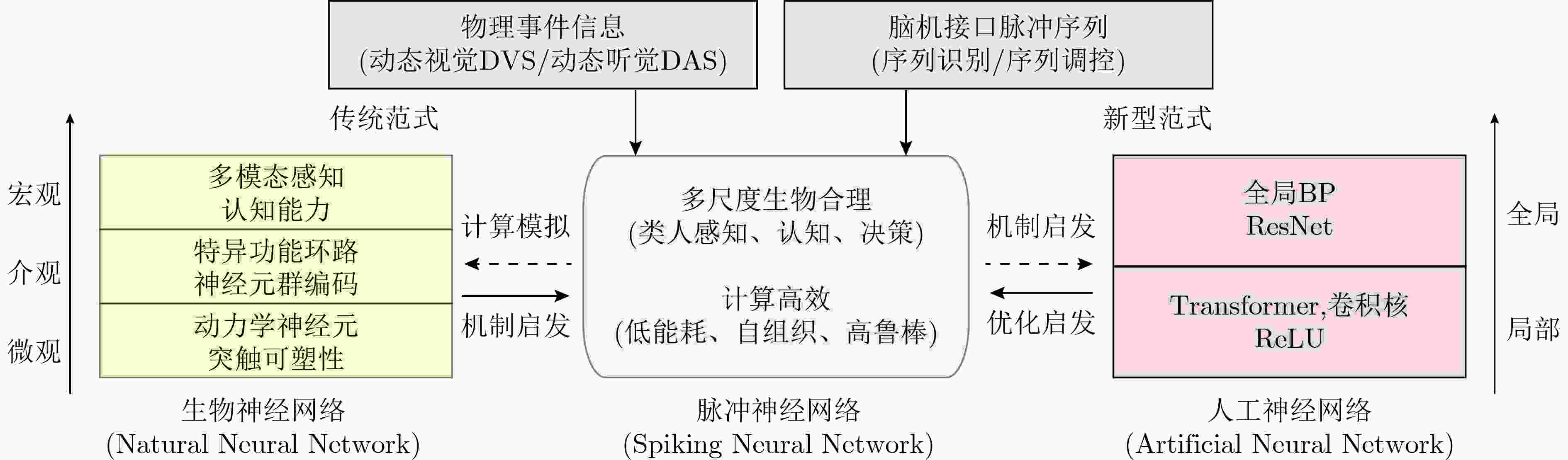

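Abstract (from the Chinese original): Brain-inspired Spiking Neural Networks (SNNs) combine biological plausibility with computational efficiency, and have therefore attracted wide attention in both biological simulation and artificial intelligence applications. By analyzing the historical development of SNNs, this paper finds that these two originally rather independent research directions are rapidly converging. Looking back, the maturation of dynamic, asynchronous event-based sensing devices, as shown by the successful application of Dynamic Vision Sensors (DVS) and Dynamic Audio Sensors (DAS), has given SNNs the opportunity to make full use of their strengths in spatiotemporal spike encoding, neuronal heterogeneity, functional circuit specificity, and multiscale plasticity, and to stand out in some traditional, typical tasks such as dynamic visual signal tracking, auditory information processing, and continuous control with reinforcement learning. Alongside these task paradigms from the physical world, there is a special biological spiking world inside the brain, which mirrors the external physical world and has a similar complexity. Looking ahead, as invasive, high-throughput brain-computer interface devices gradually mature, the online recognition and reverse control of in-brain spike trains will become a new task paradigm naturally suited to letting SNNs maximize their advantages of low energy consumption, robustness, and flexibility. Brain-inspired SNNs draw their inspiration from biology and will ultimately be applied to the exploration of biological mechanisms; this kind of positive-feedback research is expected to greatly accelerate subsequent work in brain science and brain-inspired intelligence.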


Keywords:

- Spiking Neural Network (SNN)

- Brain-inspired intelligence

- Brain-Computer Interface (BCI)

- Experimental paradigm

Abstract: Spiking Neural Networks (SNNs) are gaining popularity in both computational simulation and artificial intelligence owing to their biological plausibility and computational efficiency. Herein, the historical development of SNNs is analyzed, leading to the conclusion that these two fields are intersecting and merging rapidly. Following the successful application of Dynamic Vision Sensors (DVS) and Dynamic Audio Sensors (DAS), SNNs have found suitable paradigms, such as continuous visual signal tracking, automatic speech recognition, and reinforcement learning for continuous control, that make extensive use of their key features, including spike encoding, neuronal heterogeneity, specific functional circuits, and multiscale plasticity. In comparison with these real-world paradigms, the brain contains a spike-based, biological-world paradigm, which exhibits a similar level of complexity and is usually considered a mirror of the real world. Given the projected rapid development of invasive, high-throughput Brain-Computer Interfaces (BCIs), a new BCI-based paradigm that includes online pattern recognition and stimulus control of biological spike trains is a natural setting for SNNs to exhibit their key advantages of energy efficiency, robustness, and flexibility. The biological brain has inspired the study of SNNs, and effective SNN machine-learning algorithms can in turn support neuroscience discoveries when applied to the new BCI paradigm. Such two-way interaction with positive feedback can accelerate brain science research and brain-inspired intelligence technology.

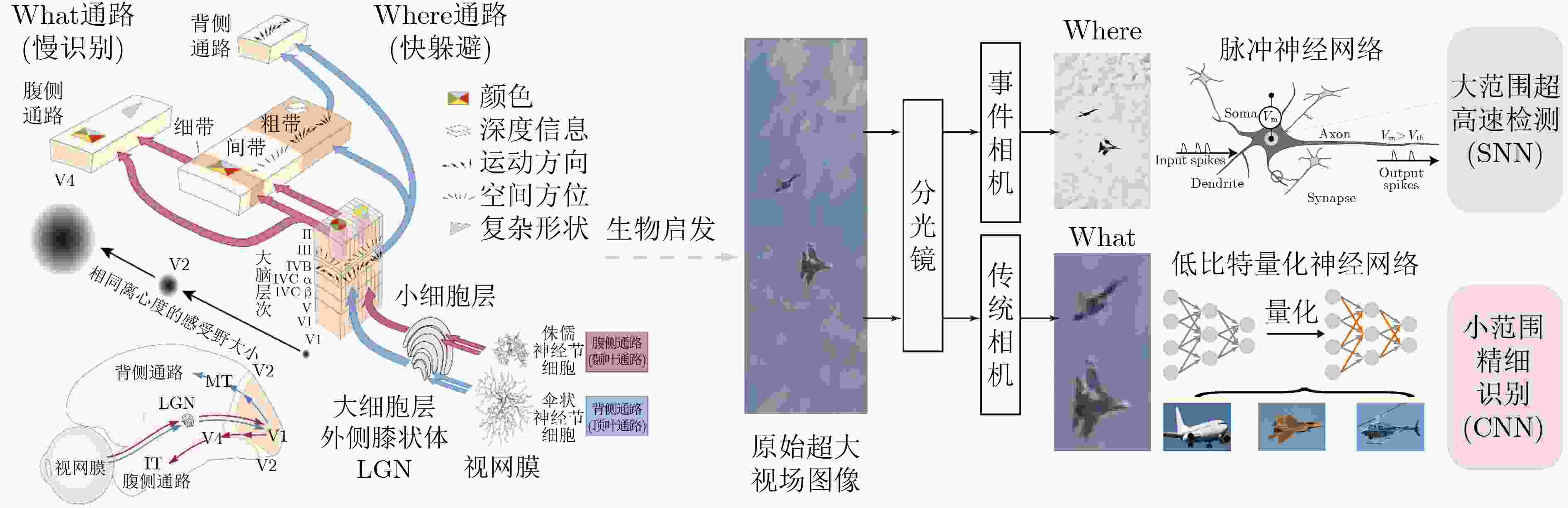

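Figure 5. An example of a brain-inspired hybrid ANN-SNN visual perception application, inspired by the dual pathways of biological vision [48]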

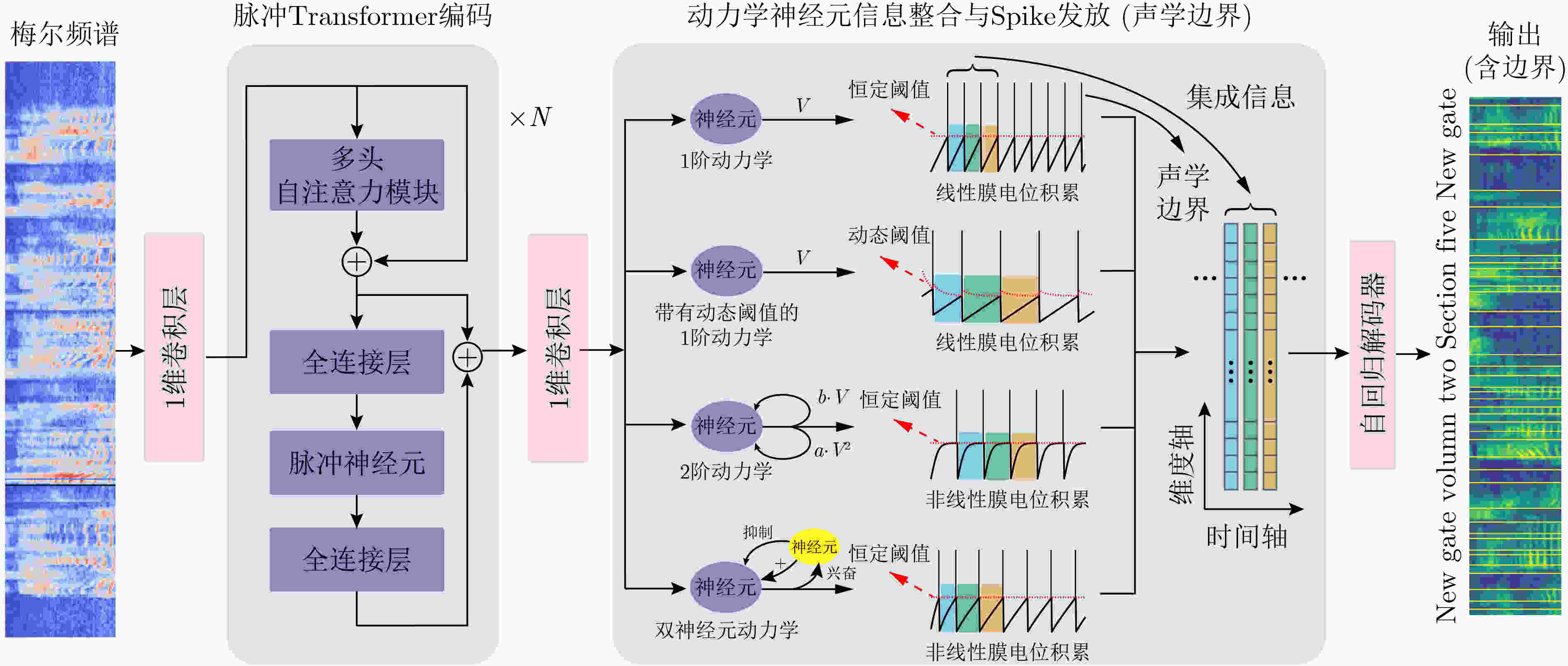

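Figure 6. An example of SNN auditory information processing that combines a spiking Transformer with spiking neurons of multiple dynamics types [52]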

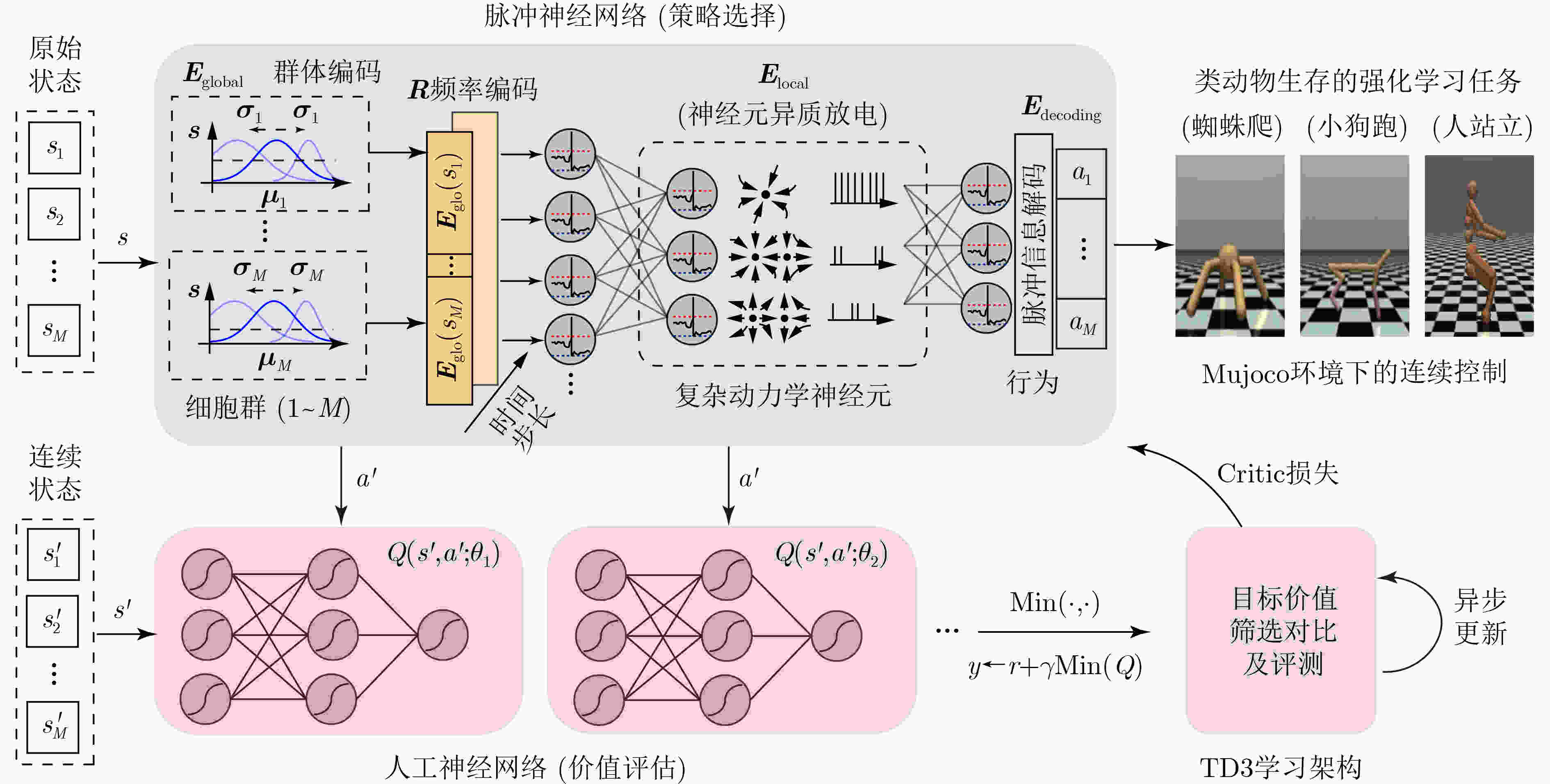

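Figure 7. An example of a reinforcement learning continuous control application built mainly on a spiking policy network, assisted by an artificial critic network [21]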


[1] MAASS W. Networks of spiking neurons: The third generation of neural network models[J]. Neural Networks, 1997, 10(9): 1659–1671. doi: 10.1016/S0893-6080(97)00011-7

[2] SEUNG H S. Learning in spiking neural networks by reinforcement of stochastic synaptic transmission[J]. Neuron, 2003, 40(6): 1063–1073. doi: 10.1016/s0896-6273(03)00761-x

[3] FIETE I R and SEUNG H S. Gradient learning in spiking neural networks by dynamic perturbation of conductances[J]. Physical Review Letters, 2006, 97(4): 048104. doi: 10.1103/PhysRevLett.97.048104

[4] ZHANG Xu, XU Ziye, HENRIQUEZ C, et al. Spike-based indirect training of a spiking neural network-controlled virtual insect[C]. 2013 IEEE 52nd Annual Conference on Decision and Control (CDC), Firenze, Italy, 2013: 6798–6805.

[5] ZENKE F, AGNES E J, and GERSTNER W. Diverse synaptic plasticity mechanisms orchestrated to form and retrieve memories in spiking neural networks[J]. Nature Communications, 2015, 6: 6922. doi: 10.1038/ncomms7922

[6] ZHANG Tielin, ZENG Yi, ZHAO Dongcheng, et al. HMSNN: Hippocampus inspired memory spiking neural network[C]. 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 2016: 2301–2306.

[7] ZENKE F. Memory formation and recall in recurrent spiking neural networks[R]. 2014.

[8] ZHAO Bo, DING Ruoxi, CHEN Shoushun, et al. Feedforward categorization on AER motion events using cortex-like features in a spiking neural network[J]. IEEE Transactions on Neural Networks and Learning Systems, 2015, 26(9): 1963–1978. doi: 10.1109/TNNLS.2014.2362542

[9] NICOLA W and CLOPATH C. Supervised learning in spiking neural networks with FORCE training[J]. Nature Communications, 2017, 8(1): 2208. doi: 10.1038/s41467-017-01827-3

[10] ZENG Yi, ZHANG Tielin, and XU Bo. Improving multi-layer spiking neural networks by incorporating brain-inspired rules[J]. Science China Information Sciences, 2017, 60(5): 052201. doi: 10.1007/s11432-016-0439-4

[11] ZHANG Tielin, ZENG Yi, ZHAO Dongcheng, et al. A plasticity-centric approach to train the non-differential spiking neural networks[C]. Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, USA, 2018: 620–628.

[12] ZHANG Tielin, ZENG Yi, ZHAO Dongcheng, et al. Brain-inspired balanced tuning for spiking neural networks[C]. Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 2018: 1653–1659.

[13] ZHANG Tielin, CHENG Xiang, JIA Shuncheng, et al. Self-backpropagation of synaptic modifications elevates the efficiency of spiking and artificial neural networks[J]. Science Advances, 2021, 7(43): eabh0146. doi: 10.1126/sciadv.abh0146

[14] ZHANG Tielin, ZENG Yi, and XU Bo. A computational approach towards the microscale mouse brain connectome from the mesoscale[J]. Journal of Integrative Neuroscience, 2017, 16(3): 291–306. doi: 10.3233/JIN-170019

[15] JIA Shuncheng, ZUO Ruichen, ZHANG Tielin, et al. Motif-topology and reward-learning improved spiking neural network for efficient multi-sensory integration[C]. International Conference on Acoustics, Speech and Signal Processing, Singapore, 2022: 8917–8921.

[16] KIM Y, LI Yuhang, PARK H, et al. Neural architecture search for spiking neural networks[C]. 17th European Conference on Computer Vision, Tel-Aviv, Israel, 2022: 36–56.

[17] KIM Y, LI Yuhang, PARK H, et al. Exploring lottery ticket hypothesis in spiking neural networks[C]. 17th European Conference on Computer Vision, Tel-Aviv, Israel, 2022: 102–120.

[18] ZHANG Tielin, JIA Shuncheng, CHENG Xiang, et al. Tuning convolutional spiking neural network with biologically plausible reward propagation[J]. IEEE Transactions on Neural Networks and Learning Systems, 2022, 33(12): 7621–7631. doi: 10.1109/TNNLS.2021.3085966

[19] JIA Shuncheng, ZHANG Tielin, CHENG Xiang, et al. Neuronal-plasticity and reward-propagation improved recurrent spiking neural networks[J]. Frontiers in Neuroscience, 2021, 15: 654786. doi: 10.3389/fnins.2021.654786

[20] BELLEC G, SCHERR F, SUBRAMONEY A, et al. A solution to the learning dilemma for recurrent networks of spiking neurons[J]. Nature Communications, 2020, 11(1): 3625. doi: 10.1038/s41467-020-17236-y

[21] ZHANG Duzhen, ZHANG Tielin, JIA Shuncheng, et al. Multi-scale dynamic coding improved spiking actor network for reinforcement learning[C/OL]. Proceedings of the 36th AAAI Conference on Artificial Intelligence, 2022: 59–67.

[22] WU Yujie, DENG Lei, LI Guoqi, et al. Direct training for spiking neural networks: Faster, larger, better[C]. Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019: 1311–1318.

[23] WU Yujie, DENG Lei, LI Guoqi, et al. Spatio-temporal backpropagation for training high-performance spiking neural networks[J]. Frontiers in Neuroscience, 2018, 12: 331. doi: 10.3389/fnins.2018.00331

[24] LEE J H, DELBRUCK T, and PFEIFFER M. Training deep spiking neural networks using backpropagation[J]. Frontiers in Neuroscience, 2016, 10: 508. doi: 10.3389/fnins.2016.00508

[25] STROMATIAS E, SOTO M, SERRANO-GOTARREDONA T, et al. An event-driven classifier for spiking neural networks fed with synthetic or dynamic vision sensor data[J]. Frontiers in Neuroscience, 2017, 11: 350. doi: 10.3389/fnins.2017.00350

[26] BOBROWSKI O, MEIR R, and ELDAR Y C. Bayesian filtering in spiking neural networks: Noise, adaptation, and multisensory integration[J]. Neural Computation, 2009, 21(5): 1277–1320. doi: 10.1162/neco.2008.01-08-692

[27] BRETTE R and GOODMAN D F M. Simulating spiking neural networks on GPU[J]. Network: Computation in Neural Systems, 2012, 23(4): 167–182. doi: 10.3109/0954898X.2012.730170

[28] LILLICRAP T P, SANTORO A, MARRIS L, et al. Backpropagation and the brain[J]. Nature Reviews Neuroscience, 2020, 21(6): 335–346. doi: 10.1038/s41583-020-0277-3

[29] LILLICRAP T P, COWNDEN D, TWEED D B, et al. Random synaptic feedback weights support error backpropagation for deep learning[J]. Nature Communications, 2016, 7: 13276. doi: 10.1038/ncomms13276

[30] MEULEMANS A, CARZANIGA F S, SUYKENS J A K, et al. A theoretical framework for target propagation[C]. Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, Canada, 2020: 1681.

[31] FRENKEL C, LEFEBVRE M, and BOL D. Learning without feedback: Fixed random learning signals allow for feedforward training of deep neural networks[J]. Frontiers in Neuroscience, 2021, 15: 629892. doi: 10.3389/fnins.2021.629892

[32] MNIH A and HINTON G. Learning nonlinear constraints with contrastive backpropagation[C]. IEEE International Joint Conference on Neural Networks, Montreal, Canada, 2005: 1302–1307.

[33] BI Guoqiang and POO M M. Synaptic modification by correlated activity: Hebb's postulate revisited[J]. Annual Review of Neuroscience, 2001, 24: 139–166. doi: 10.1146/annurev.neuro.24.1.139

[34] DU Jiulin, WEI Hongping, WANG Zuoren, et al. Long-range retrograde spread of LTP and LTD from optic tectum to retina[J]. Proceedings of the National Academy of Sciences of the United States of America, 2009, 106(45): 18890–18896. doi: 10.1073/pnas.0910659106

[35] ZHAO Dongcheng, ZENG Yi, ZHANG Tielin, et al. GLSNN: A multi-layer spiking neural network based on global feedback alignment and local STDP plasticity[J]. Frontiers in Computational Neuroscience, 2020, 14: 576841. doi: 10.3389/fncom.2020.576841

[36] MOZAFARI M, GANJTABESH M, NOWZARI-DALINI A, et al. SpykeTorch: Efficient simulation of convolutional spiking neural networks with at most one spike per neuron[J]. Frontiers in Neuroscience, 2019, 13: 625. doi: 10.3389/fnins.2019.00625

[37] SENGUPTA A, YE Yuting, WANG R, et al. Going deeper in spiking neural networks: VGG and residual architectures[J]. Frontiers in Neuroscience, 2019, 13: 95. doi: 10.3389/fnins.2019.00095

[38] TAVANAEI A, GHODRATI M, KHERADPISHEH S R, et al. Deep learning in spiking neural networks[J]. Neural Networks, 2019, 111: 47–63. doi: 10.1016/j.neunet.2018.12.002

[39] WU Jibin, CHUA Yansong, ZHANG Malu, et al. A spiking neural network framework for robust sound classification[J]. Frontiers in Neuroscience, 2018, 12: 836. doi: 10.3389/fnins.2018.00836

[40] YUAN Mengwen, WU Xi, YAN Rui, et al. Reinforcement learning in spiking neural networks with stochastic and deterministic synapses[J]. Neural Computation, 2019, 31(12): 2368–2389. doi: 10.1162/neco_a_01238

[41] ZHANG Tielin and XU Bo. Research advances and perspectives on spiking neural networks[J]. Chinese Journal of Computers, 2021, 44(9): 1767–1785. doi: 10.11897/SP.J.1016.2021.01767

[42] ABBOTT L F, DEPASQUALE B, and MEMMESHEIMER R M. Building functional networks of spiking model neurons[J]. Nature Neuroscience, 2016, 19(3): 350–355. doi: 10.1038/nn.4241

[43] DAN Yang and POO M M. Spike timing-dependent plasticity: From synapse to perception[J]. Physiological Reviews, 2006, 86(3): 1033–1048. doi: 10.1152/physrev.00030.2005

[44] WANG Bishan, HE Jingwei, YU Lei, et al. Event enhanced high-quality image recovery[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 155–171.

[45] LIN Shijie, ZHANG Yinqiang, YU Lei, et al. Autofocus for event cameras[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 16323–16332.

[46] ZHANG Xiang and YU Lei. Unifying motion deblurring and frame interpolation with events[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 17744–17753.

[47] LIAO Wei, ZHANG Xiang, YU Lei, et al. Synthetic aperture imaging with events and frames[C]. IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 17714–17723.

[48] KANDEL E R, SCHWARTZ J H, and JESSELL T M. Principles of Neural Science[M]. 3rd ed. Norwalk: Appleton & Lange, 1991.

[49] GÜTIG R and SOMPOLINSKY H. Time-warp–invariant neuronal processing[J]. PLoS Biology, 2009, 7(7): e1000141. doi: 10.1371/journal.pbio.1000141

[50] DENNIS J, YU Qiang, TANG Huajin, et al. Temporal coding of local spectrogram features for robust sound recognition[C]. IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, Canada, 2013: 803–807.

[51] LIU S C, VAN SCHAIK A, MINCH B A, et al. Asynchronous binaural spatial audition sensor with 2×64×4 channel output[J]. IEEE Transactions on Biomedical Circuits and Systems, 2014, 8(4): 453–464. doi: 10.1109/TBCAS.2013.2281834

[52] WANG Qingyu, ZHANG Tielin, HAN Minglun, et al. Complex dynamic neurons improved spiking transformer network for efficient automatic speech recognition[C]. Proceedings of the 37th AAAI Conference on Artificial Intelligence, Washington, USA, 2023.

[53] DONG Linhao and XU Bo. CIF: Continuous integrate-and-fire for end-to-end speech recognition[C]. IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 2020: 6079–6083.

[54] HAN Xuan, JIA Kebin, and ZHANG Tielin. Mouse-brain topology improved evolutionary neural network for efficient reinforcement learning[C]. 5th IFIP TC 12 International Conference on Intelligence Science IV (ICIS 2022), Xi'an, China, 2022: 3–10.

[55] LIU Siqi, LEVER G, WANG Zhe, et al. From motor control to team play in simulated humanoid football[J]. Science Robotics, 2022, 7(69): eabo0235. doi: 10.1126/scirobotics.abo0235

[56] BADDELEY A. Working memory: Theories, models, and controversies[J]. Annual Review of Psychology, 2012, 63: 1–29. doi: 10.1146/annurev-psych-120710-100422

[57] ZHU Jia, CHENG Qi, CHEN Yulei, et al. Transient delay-period activity of agranular insular cortex controls working memory maintenance in learning novel tasks[J]. Neuron, 2020, 105(5): 934–946.e5. doi: 10.1016/j.neuron.2019.12.008

[58] LOZANO A M, LIPSMAN N, BERGMAN H, et al. Deep brain stimulation: Current challenges and future directions[J]. Nature Reviews Neurology, 2019, 15(3): 148–160. doi: 10.1038/s41582-018-0128-2

[59] KRAUSS J K, LIPSMAN N, AZIZ T, et al. Technology of deep brain stimulation: Current status and future directions[J]. Nature Reviews Neurology, 2021, 17(2): 75–87. doi: 10.1038/s41582-020-00426-z

[60] BEUDEL M and BROWN P. Adaptive deep brain stimulation in Parkinson's disease[J]. Parkinsonism & Related Disorders, 2016, 22(S1): S123–S126. doi: 10.1016/j.parkreldis.2015.09.028

[61] LU Meili, WEI Xile, CHE Yanqiu, et al. Application of reinforcement learning to deep brain stimulation in a computational model of Parkinson's disease[J]. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2020, 28(1): 339–349. doi: 10.1109/TNSRE.2019.2952637
