A Review of Image Reconstruction Based on Event Cameras


Abstract: Event cameras are bio-inspired vision sensors that output a stream of events whenever the brightness change at a pixel exceeds a threshold. Each event is emitted asynchronously and encodes the pixel coordinates, a timestamp, and the polarity (sign) of the brightness change. As a result, event cameras offer high temporal resolution, very high dynamic range, low latency, low power consumption, and high pixel bandwidth. They can capture information in high-speed and high-dynamic-range scenes, and this information can be used to reconstruct images of such scenes. The reconstructed brightness images serve as a representation for downstream tasks such as object recognition, segmentation, tracking, and optical flow estimation, which makes image reconstruction one of the important research directions in computer vision. This survey first briefly reviews the current state, development history, advantages, and challenges of event cameras. It then introduces the working principles of various types of event cameras and a number of event-camera-based image reconstruction algorithms. Finally, it discusses the challenges and future trends facing event cameras and concludes the article.


Key words:

- Event camera

- Dynamic vision sensors

- Image reconstruction
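The event-generation principle summarized in the abstract (a pixel emits an event with coordinates, timestamp, and polarity once its brightness change exceeds a threshold) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the function names `generate_events` and `integrate_events` are hypothetical, brightness is taken to be log intensity, the threshold value of 0.2 is arbitrary, and events are simulated from discrete frames, whereas a real sensor fires asynchronously and with noise. The second function shows the simplest possible reconstruction, direct integration of events, and is not the method of any particular paper surveyed here.

```python
import numpy as np


def generate_events(log_frames, timestamps, threshold=0.2):
    """Simulate events (x, y, t, polarity) from a sequence of log-intensity frames."""
    # Log intensity at each pixel when it last fired (assumed model).
    reference = np.array(log_frames[0], dtype=float)
    events = []
    for frame, t in zip(log_frames[1:], timestamps[1:]):
        diff = np.asarray(frame, dtype=float) - reference
        # Number of whole thresholds the change has crossed at each pixel.
        crossings = np.floor(np.abs(diff) / threshold).astype(int)
        for y, x in zip(*np.nonzero(crossings)):
            polarity = 1 if diff[y, x] > 0 else -1
            count = int(crossings[y, x])
            events.extend([(int(x), int(y), t, polarity)] * count)
            # Advance the reference by the change that has been reported.
            reference[y, x] += polarity * count * threshold
    return events


def integrate_events(events, initial_log_image, threshold=0.2):
    """Reconstruct a log-intensity image by summing event polarities per pixel."""
    log_image = np.array(initial_log_image, dtype=float)
    for x, y, _t, polarity in events:
        log_image[y, x] += polarity * threshold
    return log_image


# Usage: two tiny frames, one brightening pixel and one darkening pixel.
frames = [np.zeros((2, 2)), np.array([[0.5, 0.0], [0.0, -0.25]])]
events = generate_events(frames, timestamps=[0.0, 1e-3])
recon = integrate_events(events, frames[0])
print(len(events), np.abs(recon - frames[1]).max())
```

In this idealized model the integrated image differs from the true log intensity by less than one threshold per pixel. With real data, noise and the unknown initial image make plain integration drift, which is one reason the surveyed methods add regularization, filtering, or learned priors.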


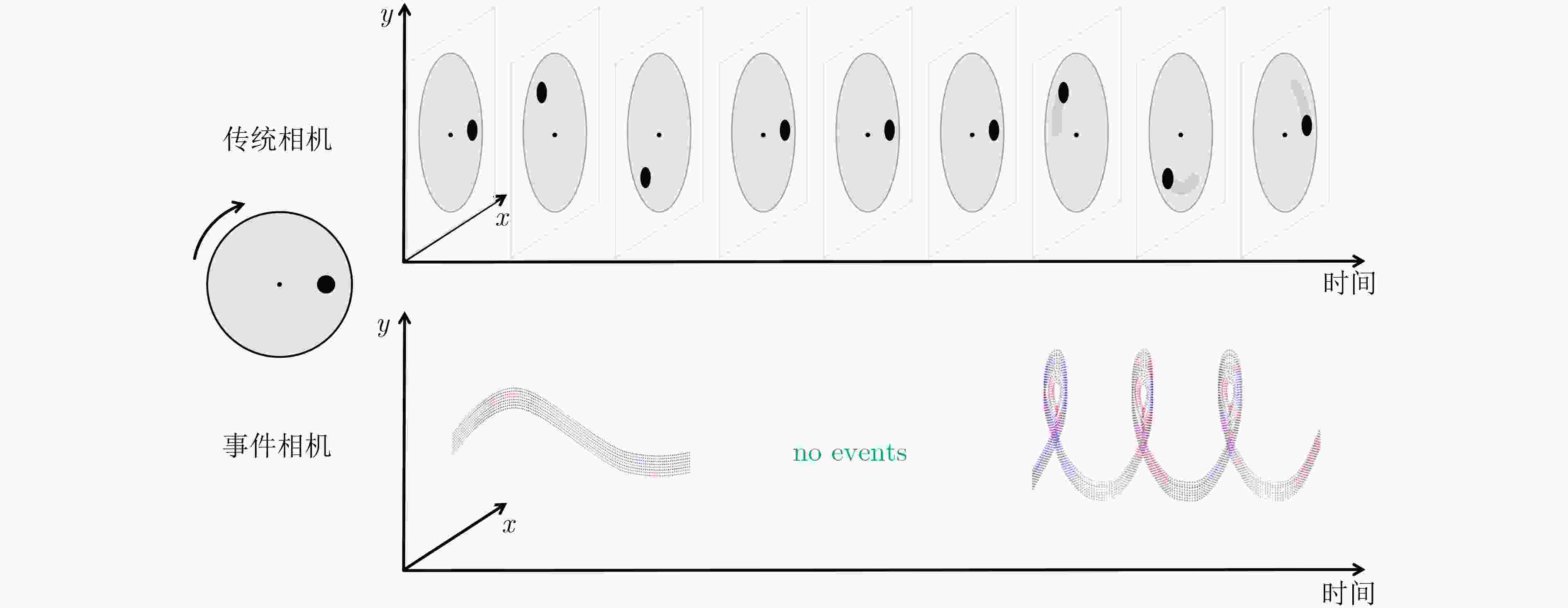

Figure 1 Comparison of the outputs of a conventional camera and an event camera [1]

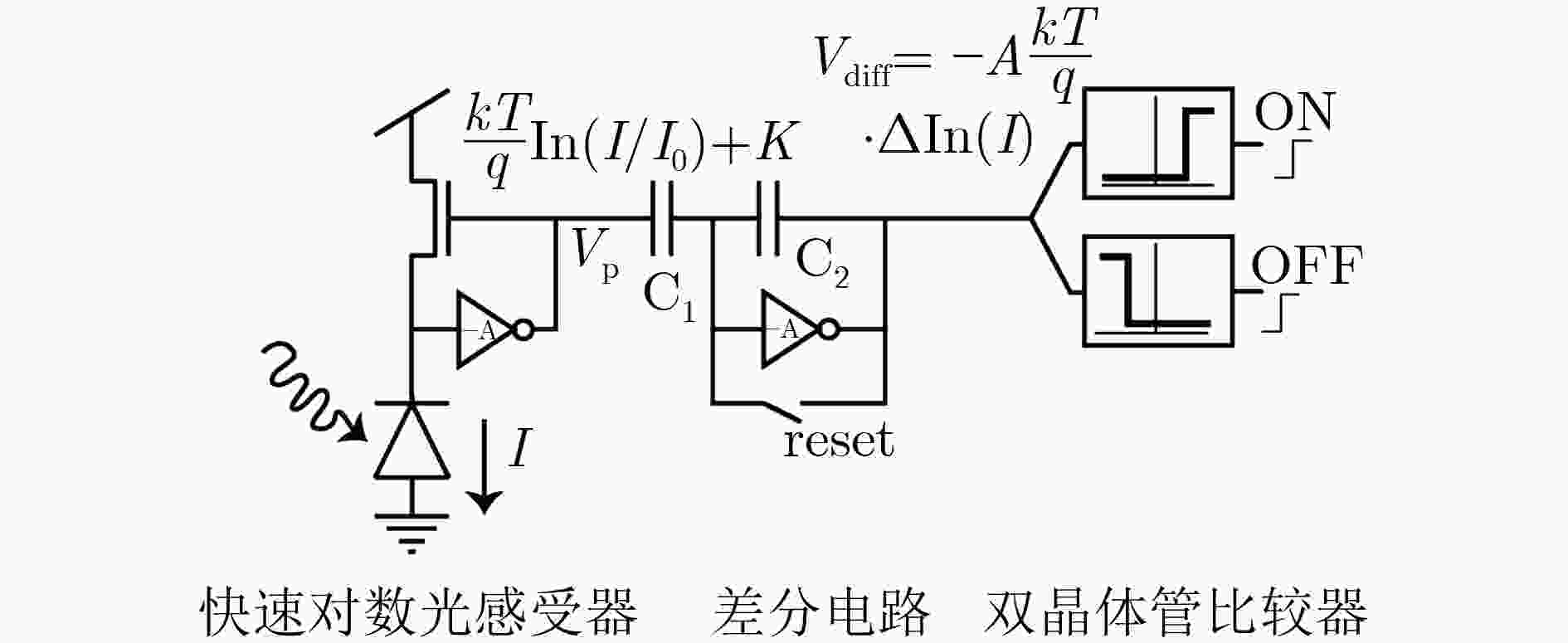

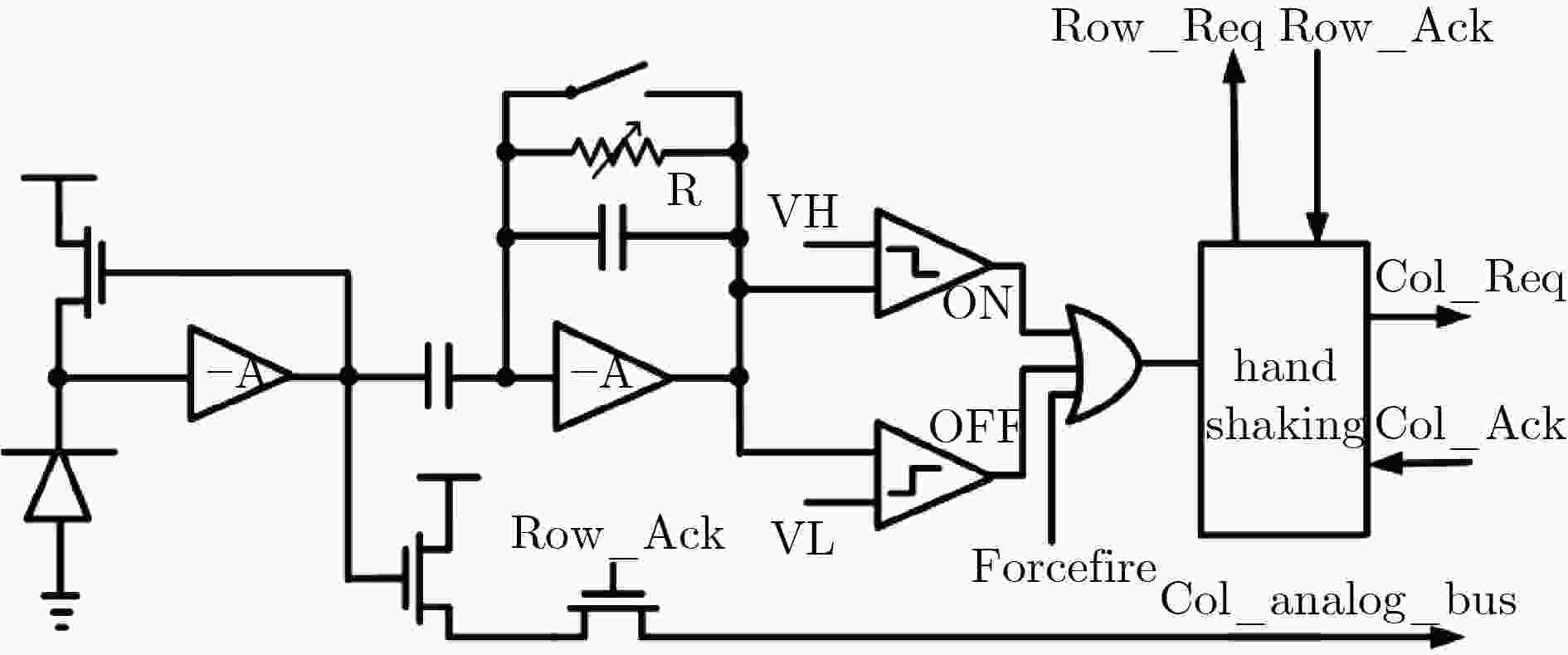

Figure 2 Schematic of the DVS circuit structure [14]

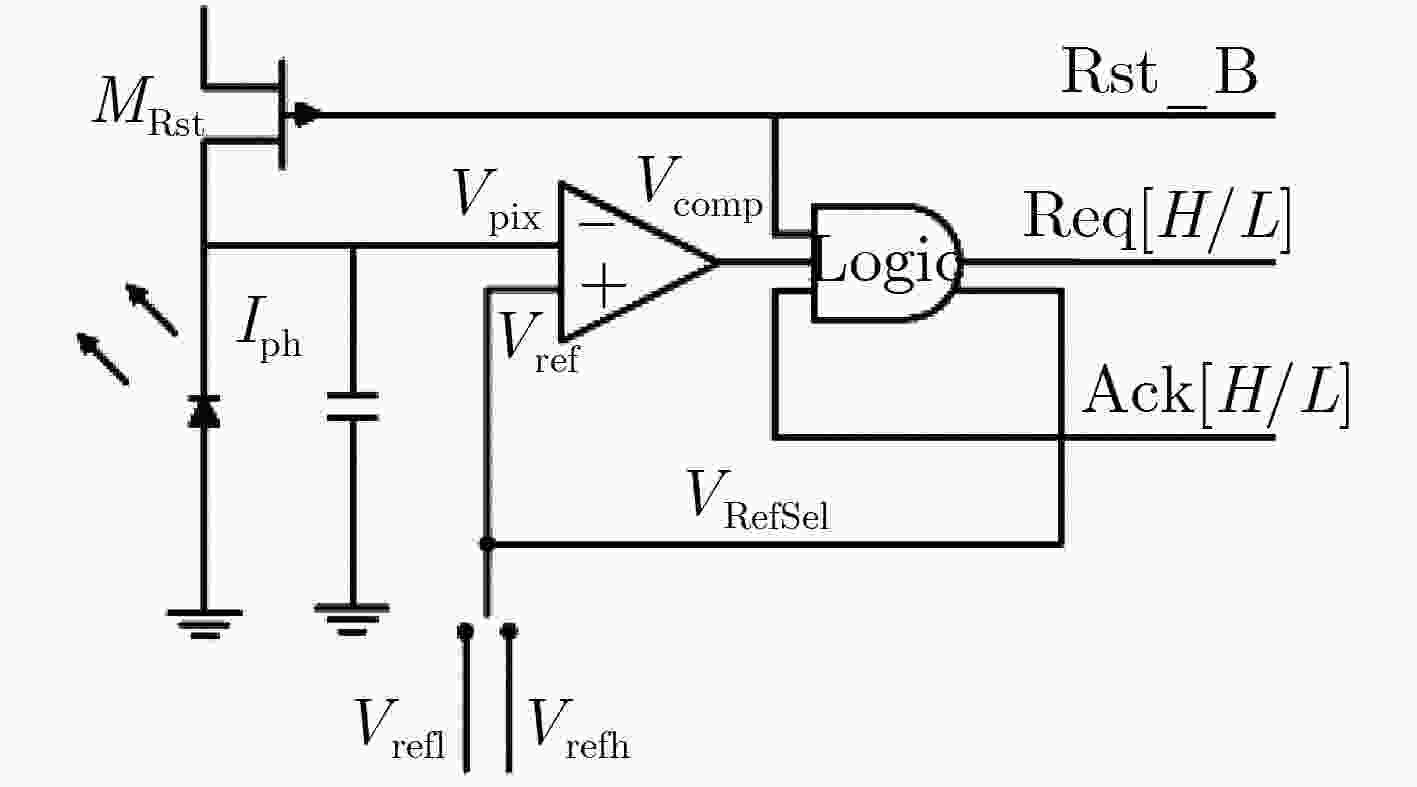

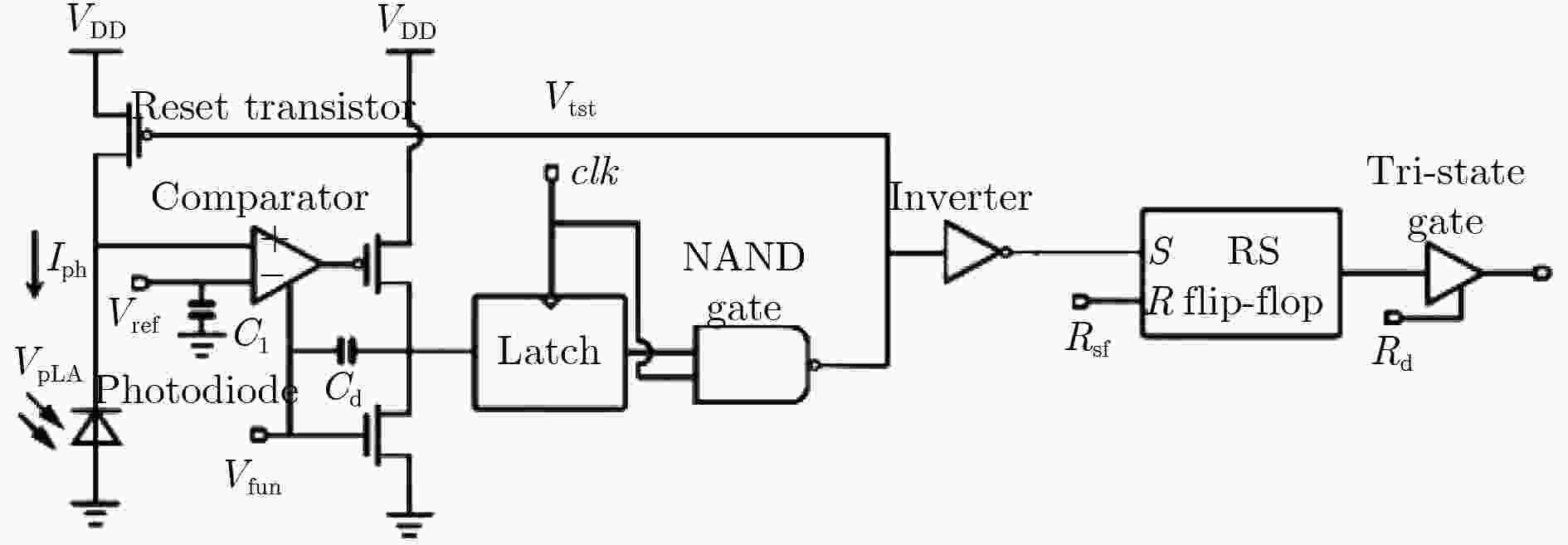

Figure 3 ATIS pulse-width-modulation exposure measurement circuit [15]

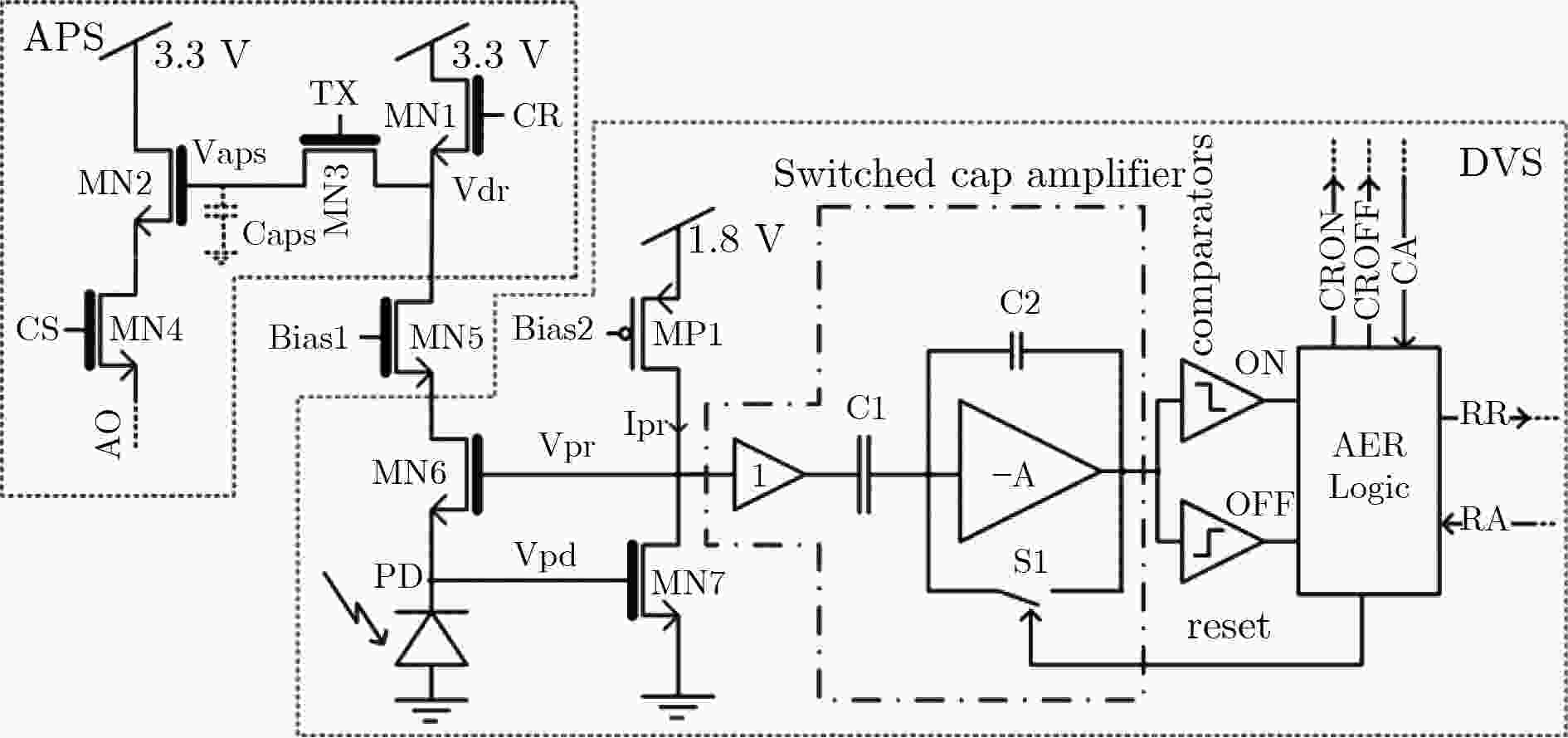

Figure 4 DAVIS pixel schematic [16]

Figure 5 CeleX pixel schematic [18]

Figure 6 Vidar circuit [19]

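Table 1 Performance comparison of several event cameras

| Type | Resolution | Temporal resolution (µs) | Minimum latency (µs) | Bandwidth (MEPS) | Power consumption (mA) | Weight without lens (g) | Dynamic range (dB) | Minimum contrast sensitivity (%) | Outputs intensity images |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DVXplorer Lite | 320 × 240 | 65–200 | < 200 | 100 | < 140 | 75 | Events: > 90 | 0.13 | No |
| DAVIS346 | 346 × 260 | 1 | < 100 | 12 | < 180 | 100 | DVS: 120; APS: 56.7 | 14.3 (on); 22.5 (off) | Yes |
| DVXplorer | 640 × 480 | 65–200 | < 200 | 165 | < 140 | 100 | Events: > 90 | 0.13 | No |
| Gen 4 CD | 1280 × 720 | – | 20–150 | 1066 | 32–84 | – | > 124 | 11 | No |
| CeleX-IV | 768 × 640 | – | 10 | 200 | – | – | 90 | 30 | Yes |
| CeleX-V | 1280 × 800 | – | 8 | 140 | 400 | – | 120 | 10 | Yes |

Table 2 Comparison of reconstruction methods based on pure event streams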

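| Reference | Application | Applicable scenes | Camera | Characteristics |
| --- | --- | --- | --- | --- |
| [23] | Precisely tracked camera rotation | Static scenes; slight rotational camera motion | DVS128 | Handles scenes requiring high temporal resolution and high dynamic range; places strict constraints on the environment and on camera motion. |
| [24] | 360° panoramic depth imaging | Static scenes; high-speed rotation | 360° HDR depth camera TUCO-3D | Achieves real-time 3D 360° HDR panoramic imaging of natural scenes at high resolution and high dynamic range; places strict constraints on the environment or on camera motion. |
| [5] | Tracking and reconstruction in extreme, fast-motion, and high-dynamic-range scenes | Dynamic scenes; ordinary motion | DVS128 | Imposes no restrictions on camera motion or scene content; reconstructions suffer severe loss of detail. |
| [11] | Real-time reconstruction of high-quality images | Dynamic scenes; ordinary motion | DVS128 | Reconstructed images contain some noisy pixels. |
| [25] | Event-based video reconstruction; direct event-based face detection | – | DVS128 | Reconstructs images and video from the event stream at more than 2000 frames/s; the first method to detect faces directly from an event stream. |
| [26] | Event-based object classification and visual-inertial odometry | Arbitrary motion (including high-speed motion) | DAVIS240C | Event-to-video reconstruction with a recurrent convolutional network trained on simulated event data; outperforms previous state-of-the-art methods, with finer detail and less noise. |
| [27] | Event-based object classification and visual-inertial odometry | High-dynamic-range and low-light scenes | DAVIS240C, Samsung DVS Gen3, Color-DAVIS346 | Synthesizes high-frame-rate video of fast physical phenomena, high-dynamic-range video, and color video. |
| [28] | Event-based object classification and visual-inertial odometry | High-dynamic-range scenes | DAVIS240, DAVIS346, Prophesee, CeleX sensors | Performs almost as well as the state of the art (E2VID [29,30]) at a fraction of the computational cost: 3 times faster, 10 times fewer FLOPs, and 280 times fewer parameters. |
| [30] | Generation of very high frame rate video | Fast motion, high dynamic range, extreme lighting conditions | DAVIS | Produces HDR images and high-frame-rate video with more detail, without blur under extreme lighting and fast motion. |
| [31] | Super-resolution image reconstruction | Fast motion; high dynamic range | DAVIS | Reconstructs high-quality super-resolution images from events in both simulated and real data; can recover very complex objects such as human faces. |
| [32] | Semantic segmentation, object recognition, and detection | High dynamic range | Samsung DVS Gen3, DAVIS240C | Further extended to sharp image reconstruction and color event image reconstruction. |
| [33] | High-speed vision tasks | Normal-speed and high-speed scenes | Spike camera | Proposes a spiking neural model that reconstructs high-quality images in both high-speed-motion and static scenes. |

Table 3 Comparison of reconstruction methods based on event streams combined with conventional images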

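| Reference | Application | Applicable scenes | Camera | Characteristics |
| --- | --- | --- | --- | --- |
| [37] | High-speed video generation | Fast-moving foreground; static background | DVS (event stream) | Recovers only large, smooth motions of simply shaped objects; cannot handle objects that deform or undergo 3D rigid motion. |
| [12] | Reconstruction of images and video with high dynamic range and high temporal resolution | High speed, high dynamic range | DAVIS240C | Recovers motion-blurred objects under high-speed, low-light conditions such as night driving; recovers features such as leaves and branches in overexposed scenes with the camera pointed directly at the sun. |
| [38] | Reconstruction of sharp, high-frame-rate video from a single blurry frame and its event data | Low-light and complex dynamic scenes | DAVIS | Efficiently generates high-quality, high-frame-rate video under varied conditions, including low light and complex dynamic scenes. |
| [39] | Deblurring and reconstruction of high-temporal-resolution video | Low-light and complex dynamic scenes | DAVIS346 | Extreme lighting changes degrade performance in high-dynamic-range scenes, and noise from accumulated event errors lowers the quality of the reconstructed images. |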

[1] KIM H, LEUTENEGGER S, and DAVISON A J. Real-time 3D reconstruction and 6-DoF tracking with an event camera[C]. The 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 349–364.

[2] VIDAL A R, REBECQ H, HORSTSCHAEFER T, et al. Ultimate SLAM? Combining events, images, and IMU for robust visual SLAM in HDR and high-speed scenarios[J]. IEEE Robotics and Automation Letters, 2018, 3(2): 994–1001. doi: 10.1109/LRA.2018.2793357

[3] LAGORCE X, MEYER C, IENG S H, et al. Asynchronous event-based multikernel algorithm for high-speed visual features tracking[J]. IEEE Transactions on Neural Networks and Learning Systems, 2015, 26(8): 1710–1720. doi: 10.1109/TNNLS.2014.2352401

[4] BARDOW P, DAVISON A J, and LEUTENEGGER S. Simultaneous optical flow and intensity estimation from an event camera[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 884–892.

[5] LAGORCE X, ORCHARD G, GALLUPPI F, et al. HOTS: A hierarchy of event-based time-surfaces for pattern recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(7): 1346–1359. doi: 10.1109/TPAMI.2016.2574707

[6] SIRONI A, BRAMBILLA M, BOURDIS N, et al. HATS: Histograms of averaged time surfaces for robust event-based object classification[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1731–1740.

[7] ZHANG Yuanhui, XU Lujun, XU Borui, et al. Human action recognition method based on event camera[J]. Acta Metrologica Sinica, 2022, 43(5): 583–589. doi: 10.3969/j.issn.1000-1158.2022.05.04

[8] GALLEGO G, LUND J E A, MUEGGLER E, et al. Event-based, 6-DOF camera tracking from photometric depth maps[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 40(10): 2402–2412. doi: 10.1109/TPAMI.2017.2769655

[9] GEHRIG D, LOQUERCIO A, DERPANIS K, et al. End-to-end learning of representations for asynchronous event-based data[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 5633–5643.

[10] CORDONE L, MIRAMOND B, and THIERION P. Object detection with spiking neural networks on automotive event data[C]. International Joint Conference on Neural Networks, Padua, Italy, 2022. doi: 10.1109/IJCNN55064.2022.9892618

[11] MUNDA G, REINBACHER C, and POCK T. Real-time intensity-image reconstruction for event cameras using manifold regularisation[J]. International Journal of Computer Vision, 2018, 126(12): 1381–1393. doi: 10.1007/s11263-018-1106-2

[12] SCHEERLINCK C, BARNES N, and MAHONY R. Continuous-time intensity estimation using event cameras[C]. The 14th Asian Conference on Computer Vision, Perth, Australia, 2018: 308–324.

[13] MAHOWALD M. VLSI analogs of neuronal visual processing: A synthesis of form and function[D]. [Ph.D. dissertation], California Institute of Technology, 1992.

[14] LICHTSTEINER P, POSCH C, and DELBRUCK T. A 128×128 120 dB 15 µs latency asynchronous temporal contrast vision sensor[J]. IEEE Journal of Solid-State Circuits, 2008, 43(2): 566–576. doi: 10.1109/JSSC.2007.914337

[15] POSCH C, MATOLIN D, and WOHLGENANNT R. A QVGA 143 dB dynamic range frame-free PWM image sensor with lossless pixel-level video compression and time-domain CDS[J]. IEEE Journal of Solid-State Circuits, 2011, 46(1): 259–275. doi: 10.1109/JSSC.2010.2085952

[16] BRANDLI C, BERNER R, YANG Minhao, et al. A 240×180 130 dB 3 µs latency global shutter spatiotemporal vision sensor[J]. IEEE Journal of Solid-State Circuits, 2014, 49(10): 2333–2341. doi: 10.1109/JSSC.2014.2342715

[17] MOEYS D P, CORRADI F, LI Chenghan, et al. A sensitive dynamic and active pixel vision sensor for color or neural imaging applications[J]. IEEE Transactions on Biomedical Circuits and Systems, 2018, 12(1): 123–136. doi: 10.1109/TBCAS.2017.2759783

[18] HUANG Jing, GUO Menghan, and CHEN Shoushun. A dynamic vision sensor with direct logarithmic output and full-frame picture-on-demand[C]. 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, USA, 2017: 1–4.

[19] HUANG Tiejun, ZHENG Yajing, YU Zhaofei, et al. 1000× faster camera and machine vision with ordinary devices[EB/OL]. https://www.sciecedirect.com/scince/articel/pii/s2095809922002077.

[20] FOSSUM E R. CMOS image sensors: Electronic camera-on-a-chip[J]. IEEE Transactions on Electron Devices, 1997, 44(10): 1689–1698. doi: 10.1109/16.628824

[21] DONG Siwei, HUANG Tiejun, and TIAN Yonghong. Spike camera and its coding methods[C]. 2017 Data Compression Conference, Snowbird, USA, 2017: 437.

[22] COOK M, GUGELMANN L, JUG F, et al. Interacting maps for fast visual interpretation[C]. 2011 International Joint Conference on Neural Networks, San Jose, USA, 2011: 770–776.

[23] KIM H, HANDA A, BENOSMAN R, et al. Simultaneous mosaicing and tracking with an event camera[J]. J Solid State Circ, 2008, 43: 566–576.

[24] BELBACHIR A N, SCHRAML S, MAYERHOFER M, et al. A novel HDR depth camera for real-time 3D 360° panoramic vision[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, USA, 2014: 425–432.

[25] BARUA S, MIYATANI Y, and VEERARAGHAVAN A. Direct face detection and video reconstruction from event cameras[C]. 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, USA, 2016: 1–9.

[26] REBECQ H, RANFTL R, KOLTUN V, et al. Events-to-video: Bringing modern computer vision to event cameras[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 3852–3861.

[27] REBECQ H, RANFTL R, KOLTUN V, et al. High speed and high dynamic range video with an event camera[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(6): 1964–1980. doi: 10.1109/TPAMI.2019.2963386

[28] SCHEERLINCK C, REBECQ H, GEHRIG D, et al. Fast image reconstruction with an event camera[C]. 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, USA, 2020: 156–163.

[29] REBECQ H, GEHRIG D, and SCARAMUZZA D. ESIM: An open event camera simulator[C]. The 2nd Conference on Robot Learning, Zürich, Switzerland, 2018: 969–982.

[30] WANG Lin, MOSTAFAVI I S M, HO Y S, et al. Event-based high dynamic range image and very high frame rate video generation using conditional generative adversarial networks[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 10073–10082.

[31] WANG Lin, KIM T K, and YOON K J. EventSR: From asynchronous events to image reconstruction, restoration, and super-resolution via end-to-end adversarial learning[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 8312–8322.

[32] WANG Lin, KIM T K, and YOON K J. Joint framework for single image reconstruction and super-resolution with an event camera[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(11): 7657–7673. doi: 10.1109/TPAMI.2021.3113352

[33] ZHU Lin, DONG Siwei, LI Jianing, et al. Retina-like visual image reconstruction via spiking neural model[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 1435–1443.

[34] ZHU Lin, WANG Xiao, CHANG Yi, et al. Event-based video reconstruction via potential-assisted spiking neural network[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 3584–3594.

[35] DUWEK H C, SHALUMOV A, and TSUR E E. Image reconstruction from neuromorphic event cameras using Laplacian-prediction and Poisson integration with spiking and artificial neural networks[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Nashville, USA, 2021: 1333–1341.

[36] BRANDLI C, MULLER L, and DELBRUCK T. Real-time, high-speed video decompression using a frame- and event-based DAVIS sensor[C]. 2014 IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 2014: 686–689.

[37] LIU Hanchao, ZHANG Fanglue, MARSHALL D, et al. High-speed video generation with an event camera[J]. The Visual Computer, 2017, 33(6): 749–759.

[38] PAN Liyuan, SCHEERLINCK C, YU Xin, et al. Bringing a blurry frame alive at high frame-rate with an event camera[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 6813–6822.

[39] PAN Liyuan, HARTLEY R, SCHEERLINCK C, et al. High frame rate video reconstruction based on an event camera[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(5): 2519–2533. doi: 10.1109/TPAMI.2020.3036667

[40] SHEDLIGERI P and MITRA K. Photorealistic image reconstruction from hybrid intensity and event-based sensor[J]. Journal of Electronic Imaging, 2019, 28(6): 063012. doi: 10.1117/1.JEI.28.6.063012
