Visual Optical Flow Computing: Algorithms and Applications

-

摘要: 视觉光流计算是计算机视觉从处理2维图像走向加工3维视频的重要技术手段,是描述视觉运动信息的主要方式。光流计算技术已经发展了较长的时间,随着相关技术尤其是深度学习技术在近些年的迅速发展,光流计算的性能得到了极大提升,但仍然有大量的局限性问题没有解决,准确、快速且稳健的光流计算目前仍然是一个有挑战性的研究领域和业内研究热点。光流计算作为一种低层视觉信息处理技术,其技术进展也将有助于相关中高层级视觉任务的实现。该文主要内容是介绍基于计算机视觉的光流计算及其技术发展路线,从经典算法和深度学习算法这两个主流技术路线出发,总结了技术发展过程中产生的重要理论、方法与模型,着重介绍了各类方法与模型的核心思想,说明了各类数据集及相关性能指标,同时简要介绍了光流计算技术的主要应用场景,并对今后的技术方向进行了展望。Abstract: Visual optical flow calculation is an important technique for computer vision to move from processing 2D images to processing 3D videos, and is the main way of describing visual motion information. The optical flow calculation technique has been developed for a long time. With the rapid development of related technologies, especially deep learning technology in recent years, the performance of optical flow calculation has been greatly improved. However, there are still many limitations that have not been solved. Accurate, fast, and robust optical flow calculation is still a challenging research field and a hot topic in the industry. As a low-level visual information processing technology, the implementation of related high-level visual tasks will also be contributed by the technological advances of optical flow calculation. In this paper, the development path of optical flow calculation based on computer vision is mainly introduced. The important theories, methods, and models generated during the technological development process from the two mainstream technology paths of classical algorithms and deep learning algorithms are summarized, the core ideas of various methods and models are being introduced and the various datasets and performance indicators are explained, the main application scenarios of optical flow calculation technology are briefly introduced, and the future technical directions are also prospected.

-

Key words:

- Computer vision /

- Optical flow /

- Deep learning /

- Overview

-

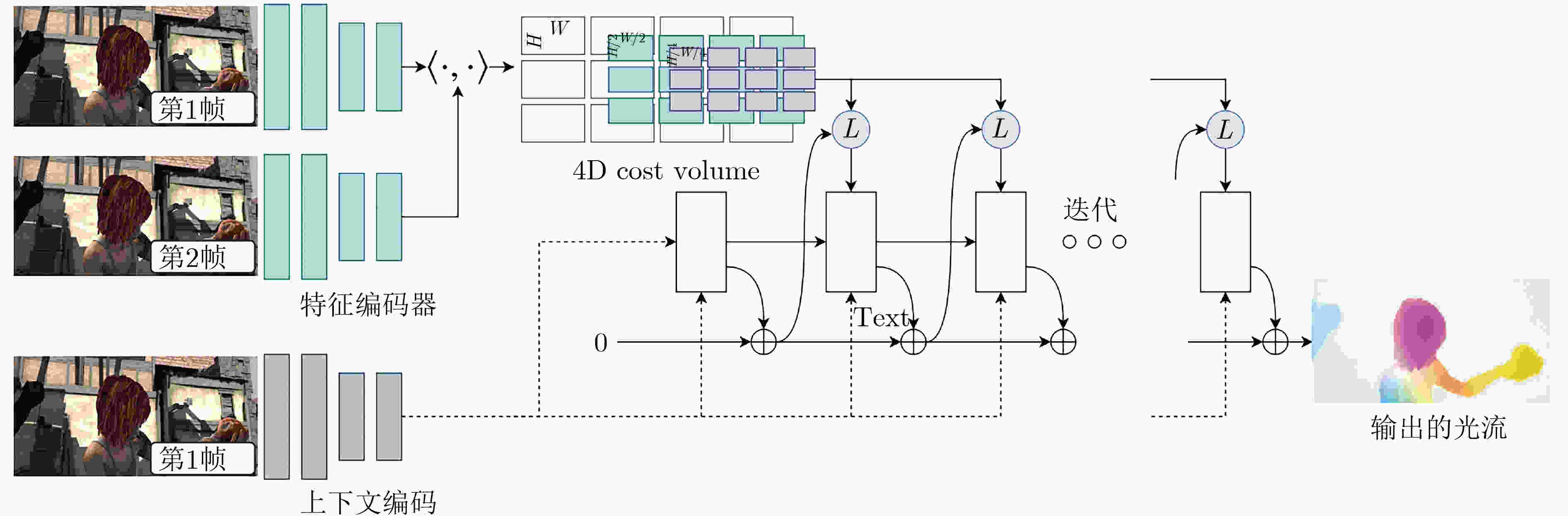

图 1 传统框架与PWC-Net框架图对比图(图片改绘自文献[10])

图 2 RATF模型框架图(图片改绘自文献[13])

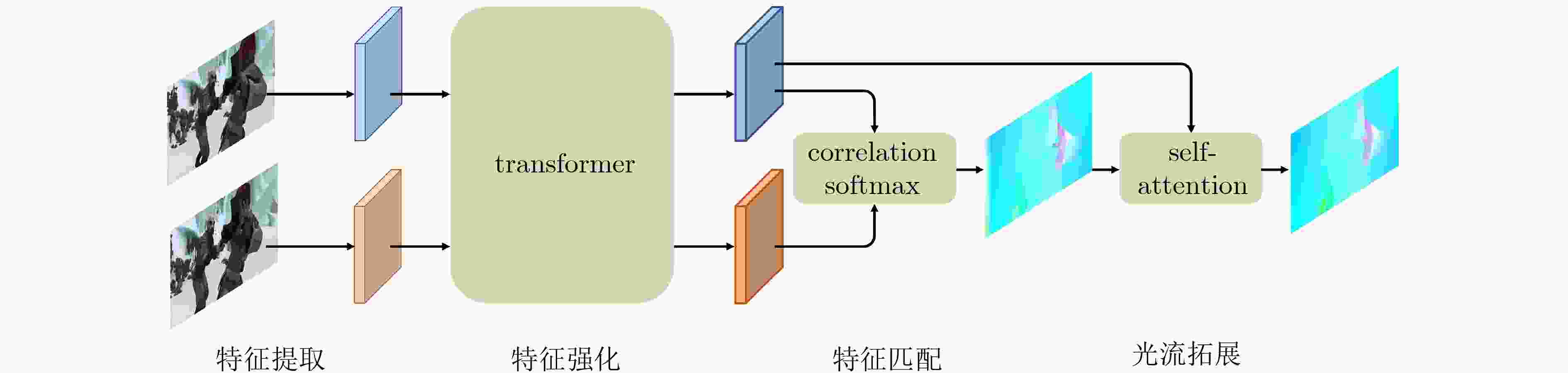

图 3 GMFlow的框架图(图片改绘自文献[18])

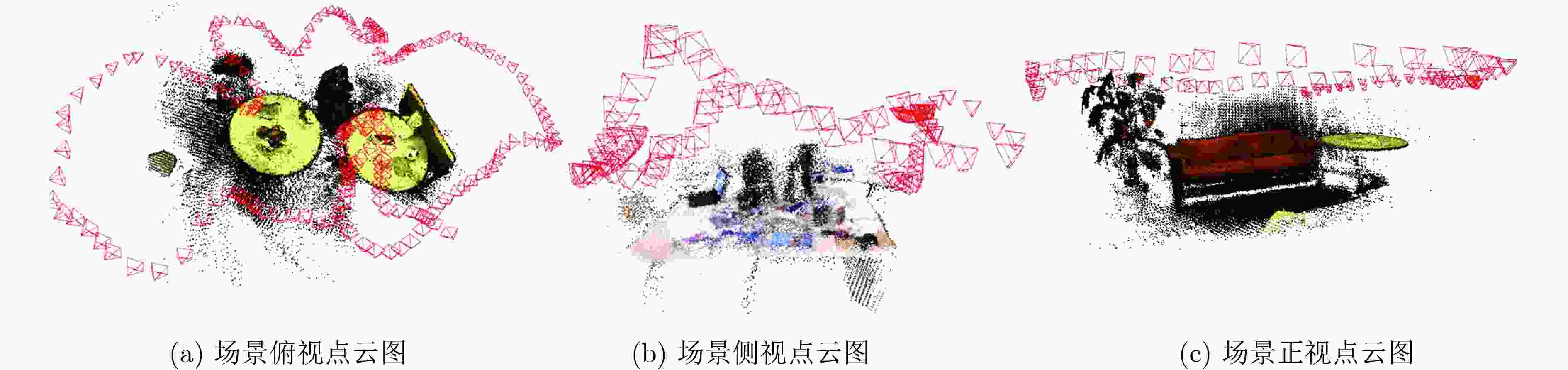

图 4 光流SLAM效果图(图片出自文献[43])

表 1 光流估计监督模型汇总

年份 模型名称 主要问题方向 突出特点 2015 FlowNet[6] 表明端到端的CNN可以做光流估计 Encoder-decoder结构 2016 PatchBatch[15] 利用CNN提取高维特征做描述符计算匹配然后用Epic Flow(经典算法)稠密插值 使用STD结构

用CNN提取高维描述符FlowNet2.0[9] 相较FlowNet提高了光流精度 对CNN结构组合进行探索 2017 DCFlow[7] 提高了光流精度 提出4D 代价体以及CTF结构范式 2018 PWC-Net[10] 相较FlowNet2.0减少了模型参数

提高了光流精度

提高了光流实时性融合了特征金字塔

使用了扭曲(warp)层

以及标准CTF框架LiteFlowNet[11] 相较FlowNet2.0减少了网络参数 提出了对特征层

使用扭曲(warp)

结合残差网络减少参数数量

提出了级联流推断结构2019 IRR-PWC[12] 相较PWC-Net减少参数数量,提高了精度 提出了轻量化的迭代残差细化模块

可集合遮挡预测模块提高预测精度2020 RAFT[13] 在CTF后处理阶段使用GRU结构进行处理,可以有效使用上下文信息进行更新

保持模型参数情况下,有效提高了精度和处理速度使用GRU处理4D 代价体 2021 GMA[19] 通过互相关技术构建了Global Motion Aggregation(GMA)模块

优化了遮挡处理应用互相关技术

提高了遮挡下模型的性能SCV[20] 减少了4D 代价体的计算量

提高了计算速度与精度通过KNN建立稀疏的4D 代价体

通过多尺度位移编码器得到稠密的光流2022 AGFlow[21] 对场景整体运动的理解

遮挡问题结合运动信息与上下文信息

结合图神经网络自适应图推理模块进行光流估计GMFLowNet[22] 优化了大位移问题

优化了边缘在RAFT基础上融合了基于attention机制的匹配模块 GMFlow[18] 基于attention技术优化了代价体与卷积固有的局部相关性限制

优化了大位移基于transformer架构

采用全局匹配表 2 光流估计自监督模型汇总

年份 模型名称 主要问题方向 突出特点 2016 MIND[23] 自监督学习可以有效学习前后两帧之间的关联关系,并用于初始化光流计算 自监督

端到端Unsupervised

FlowNet[24]得到与FlowNet近似的精度自监督模型 借鉴传统能量优化中的光度损失和平滑损失

训练自监督模型2017 DFDFlow[26] 提高了精度 利用全卷积的DenseNet自监督巡训练

端到端UnFlow[25] 提高了精度 交换前后两帧顺序

对误差进行学习2018 Unsupervised

OAFlow[31]精度超越部分监督模型

优化遮挡问题设计的对遮挡的mask层屏蔽错误匹配 2019 DDFlow[26] 优化遮挡问题 采用知识蒸馏,老师网络类似UnFlow

学生网络增加针对遮挡的lossSelFlow[27] 提高抗遮挡能力 基于PWC-Net框架

利用超像素分割随机遮挡增强数据UFlow[28] 分析了之前自监督光流各个组件的性能以及优化组合

提高了光流估计精度提出的改进方法是代价体归一化、用于遮挡估计的梯度停止、在本地流分辨率下应用平滑性,以及用于自我监督的图像大小调整 2021 UPflow[29] 提高了精度

优化了边缘细节用CNN改进了基于双线性插值的coarse to fine方法

金字塔蒸馏损失SMURF[30] 提高精度超过PWC-Net和FlowNet2

RAFT自监督实现基于RAFT模型

full-image warping预测真外运动

多帧自我监督,以改善遮挡区域2022 SEBFlow[32] 基于事件摄像机的自监督方法 采用新传感器 表 3 光流估计模型评估公开数据集

名称 年份 特点 评价指标 KITTI2012[34] 2012 EPE,AEPE,fps,AE,AAE KITTI2015[35] 2015 真实驾驶场景测量数据集,其中 2012 测试图像集仅包含静止背景,2015 测试图像集则采用动态背景,显著增强了测试图像集的光流估计难度 异常光流占的百分比(Fl )

背景区域异常光流占的百分比(bg)

前景区域异常光流占的百分比(fg)

异常光流占所有标签的百分比(all)MPI-Sintel[36] 2012 使用开源动画电影《Sintel》由开源软件动画制作软件blender渲染出的合成数据集,场景效果可控,光流值准确。数据集仅截取部分典型场景,分为Sintel Clean和Sintel Final两组,其中Clean 数据集包含大位移、弱纹理、非刚性,大形变等困难场景,以测试光流计算模型的准确性; Final数据集通过添加运动模糊、雾化效果以及图像噪声使其更加贴近于现实场景,用于测试光流估计的可靠性 整幅图像上的端点误差(EPE,AEPE)

相邻帧中可见区域的端点误差(EPE -matched)

相邻帧中不可见区域的端点误差(EPE -unmatched)

距离最近遮挡边缘在 0~10 像素的端点误差(d0-10 )

速度在 0~10 像素的帧区域端点误差(s0-10)

速度在 10~40 像素的帧区域端点误差(s10-40)FlyingChairs[6] 2015 合成椅子与随机背景的组合的场景,利用仿射变换模拟运动场景,FlowNet基于此合成数据集训练 EPE,AEPE,fps,AE,AAE Scene Flow Datasets[38] 2016 包涵Driving、Monkaa、FlyingThings3D 3个数据集,其中FlyingThings3D组合不同物体与背景,模拟真实3维空间运动,以及动画Driving, Monkaa共同构成此数据集。此合成数据集除光流外还提供场景流 EPE,AEPE,fps,AE,AAE HD1K[39] 2016 城市自动驾驶真实数据集,涵盖了以前没有的、具有挑战性的情况,如光线不足或下雨,并带有像素级的不确定性 EPE,AEPE,fps,AE,AAE AutoFlow[40] 2021 基于学习的自动化数据集生成工具,由可学习的超参数控制数据集的生成,提供包括准确光流、数据增强、跨数据集泛化能力等,可以极大提高模型训练效果 在训练时使用本数据集,测试时利用其他数据集进行测试,评价指标同测试数据集 SHIFT[41] 2022 目前最大合成数据集包含了雾天、雨天、一天的时间、车辆人群的密集和离散,且具有全面的传感器套件和注释,可以提供连续域数据 EPE,AEPE,fps,AE,AAE 表 4 部分模型在Sintel及KITTI数据集上的性能(截至2023年3月)

模型名称 MPI Sintel

Clean(EPE-ALL)MPI Sintel

Clean排名MPI Sintel

Final(EPE-ALL)MPI Sintel

Final排名KITTI 2012

(Avg-All)KITTI

排名GMFlow+ 1.028 2 2.367 18 1.0 1 RATF 1.609 57 2.855 61 – – SMURF 3.152 143 4.183 121 1.4 20 PWC-Net 4.386 256 5.042 184 1.7 39 -

[1] HORN B and SCHUNCK B G. Determining optical flow[C]. SPIE 0281, Techniques and Applications of Image Understanding, Washington, USA, 1981. [2] LUCAS B D and KANADE T. An iterative image registration technique with an application to stereo vision[C]. The 7th International Joint Conference on Artificial Intelligence, Vancouver, Canada, 1981: 674–679. [3] BOUGUET J Y. Pyramidal implementation of the lucas kanade feature tracker. Intel Corporation, Microprocessor Research Labs, 1999. [4] BERTSEKAS D P. Constrained Optimization and Lagrange Multiplier Methods[M]. Boston: Academic Press, 1982: 724. [5] SONG Xiaojing. A Kalman filter-integrated optical flow method for velocity sensing of mobile robots[J]. Journal of Intelligent & Robotic Systems, 2014, 77(1): 13–26. [6] DOSOVITSKIY A, FISCHER P, ILG E, et al. FlowNet: Learning optical flow with convolutional networks[C]. 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015: 2758–2766. [7] XU Jia, RANFTL R, and KOLTUN V. Accurate optical flow via direct cost volume processing[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, USA, 2017: 5807–5815. [8] CHEN Qifeng and KOLTUN V. Full flow: Optical flow estimation by global optimization over regular grids[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016: 4706–4714. [9] ILG E, MAYER N, SAIKIA T, et al. FlowNet 2.0: Evolution of optical flow estimation with deep networks[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, USA, 2017: 1647–1655. [10] SUN Deqing, YANG Xiaodong, LIU Mingyu, et al. PWC-Net: CNNs for optical flow using pyramid, warping, and cost volume[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 8934–8943. [11] HUI T W, TANG Xiaoou, and LOY C C. LiteFlowNet: A lightweight convolutional neural network for optical flow estimation[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 8981–8989. [12] HUR J and ROTH S. Iterative residual refinement for joint optical flow and occlusion estimation[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 5747–5756. [13] TEED Z and DENG Jia. RAFT: Recurrent all-pairs field transforms for optical flow[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 402–419. [14] REVAUD J, WEINZAEPFEL P, HARCHAOUI Z, et al. EpicFlow: Edge-preserving interpolation of correspondences for optical flow[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1164–1172. [15] GADOT D and WOLF L. PatchBatch: A batch augmented loss for optical flow[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016: 4236–4245. [16] THEWLIS J, ZHENG Shuai, TORR P H S, et al. Fully-trainable deep matching[C]. Proceedings of the British Machine Vision Conference 2016, York, UK, 2016. [17] WANNENWETSCH A S and ROTH S. Probabilistic pixel-adaptive refinement networks[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, USA, 2020: 11639–11648. [18] XU Haofei, ZHANG Jing, CAI Jianfei, et al. GMFlow: Learning optical flow via global matching[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022: 8111–8120. [19] JIANG Shihao, CAMPBELL D, LU Yao, et al. Learning to estimate hidden motions with global motion aggregation[C]. 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, Canada, 2021: 9752–9761. [20] JIANG Shihao, LU Yao, LI Hongdong, et al. Learning optical flow from a few matches[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, USA, 2021: 16587–16595. [21] LUO Ao, YANG Fan, LUO Kunming, et al. Learning optical flow with adaptive graph reasoning[C]. Thirty-Sixth AAAI Conference on Artificial Intelligence, Vancouver Canada, 2021. [22] ZHAO Shiyu, ZHAO Long, ZHANG Zhixing, et al. Global matching with overlapping attention for optical flow estimation[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022: 17571–17580. [23] LONG Gucan, KNEIP L, ALVAREZ J M, et al. Learning image matching by simply watching video[C]. 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 434–450. [24] YU J J, HARLEY A W, and DERPANIS K G. Back to basics: Unsupervised learning of optical flow via brightness constancy and motion smoothness[C]. European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 3–10. [25] MEISTER S, HUR J, and ROTH S. UnFlow: Unsupervised learning of optical flow with a bidirectional census loss[C]. Thirty-Sixth AAAI Conference on Artificial Intelligence, New Orleans, USA, 2018. [26] LIU Pengpeng, KING I, LYU M R, et al. DDFlow: Learning optical flow with unlabeled data distillation[C]. Thirty-Sixth AAAI Conference on Artificial Intelligence, Honolulu, USA, 2019: 8770–8777. [27] LIU Pengpeng, LYU M, KING I, et al. SelFlow: Self-supervised learning of optical flow[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 4566–4575. [28] JONSCHKOWSKI R, STONE A, BARRON J T, et al. What matters in unsupervised optical flow[C]. 16th European Conference on Computer Vision, Glasgow, UK, 2020: 557–572. [29] LUO Kunming, WANG Chuan, LIU Shuaicheng, et al. UPFlow: Upsampling pyramid for unsupervised optical flow learning[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, USA, 2021: 1045–1054. [30] STONE A, MAURER D, AYVACI A, et al. SMURF: Self-teaching multi-frame unsupervised RAFT with full-image warping[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, USA, 2021: 3886–3895. [31] WANG Yang, YANG Yi, YANG Zhenheng, et al. Occlusion aware unsupervised learning of optical flow[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 4884–4893. [32] SHIBA S, AOKI Y, and GALLEGO G. Secrets of event-based optical flow[C]. 17th European Conference on Computer Vision, Tel Aviv, Israel, 2022. [33] LAI Weisheng, HUANG Jiabin, and YANG M H. Semi-supervised learning for optical flow with generative adversarial networks[C]. The 31st International Conference on Neural Information Processing Systems, Long Beach, USA, 2018: 892–896. [34] GEIGER A, LENZ P, and URTASUN R. Are we ready for autonomous driving? The KITTI vision benchmark suite[C]. 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, USA, 2012: 3354–3361. [35] KENNEDY R and TAYLOR C J. Optical flow with geometric occlusion estimation and fusion of multiple frames[C]. The 10th International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, Hong Kong, China, 2015: 364–377. [36] BUTLER D J, WULFF J, STANLEY G B, et al. A naturalistic open source movie for optical flow evaluation[C]. The 12th European Conference on Computer Vision, Florence, Italy, 2012: 611–625. [37] MAYER N, ILG E, HÄUSSER P, et al. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation[C]. The 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, 2016: 4040–4048. [38] SCHRÖDER G, SENST T, BOCHINSKI E, et al. Optical flow dataset and benchmark for visual crowd analysis[C]. 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 2018: 1–6. [39] KONDERMANN D, NAIR R, HONAUER K, et al. The HCI benchmark suite: Stereo and flow ground truth with uncertainties for urban autonomous driving[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, USA, 2016: 19–28. [40] SUN Deqing, VLASIC D, HERRMANN C, et al. AutoFlow: Learning a better training set for optical flow[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, USA, 2021: 10088–10097. [41] SUN Tao, SEGU M, POSTELS J, et al. SHIFT: A synthetic driving dataset for continuous multi-task domain adaptation[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022: 21339–21350. [42] KALAL Z, MIKOLAJCZYK K, and MATAS J. Tracking-learning-detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(7): 1409–1422. doi: 10.1109/TPAMI.2011.239 [43] YE Weicai, YU Xingyuan, LAN Xinyue, et al. DeFlowSLAM: Self-supervised scene motion decomposition for dynamic dense SLAM[EB/OL]. https://arxiv.org/abs/2207.08794, 2022. [44] SANES J R and MASLAND R H. The types of retinal ganglion cells: Current status and implications for neuronal classification[J]. Annual Review of Neuroscience, 2015, 38(1): 221–246. doi: 10.1146/annurev-neuro-071714-034120 [45] GALLETTI C and FATTORI P. The dorsal visual stream revisited: Stable circuits or dynamic pathways?[J]. Cortex, 2018, 98: 203–217. doi: 10.1016/j.cortex.2017.01.009 [46] WEI Wei. Neural mechanisms of motion processing in the mammalian retina[J]. Annual Review of Vision Science, 2018, 4: 165–192. doi: 10.1146/annurev-vision-091517-034048 [47] ROSSI L F, HARRIS K D, and CARANDINI M. Spatial connectivity matches direction selectivity in visual cortex[J]. Nature, 2020, 588(7839): 648–652. doi: 10.1038/s41586-020-2894-4 -

下载:

下载:

下载:

下载: