An Incentivized Federated Learning Model Based on Contract Theory


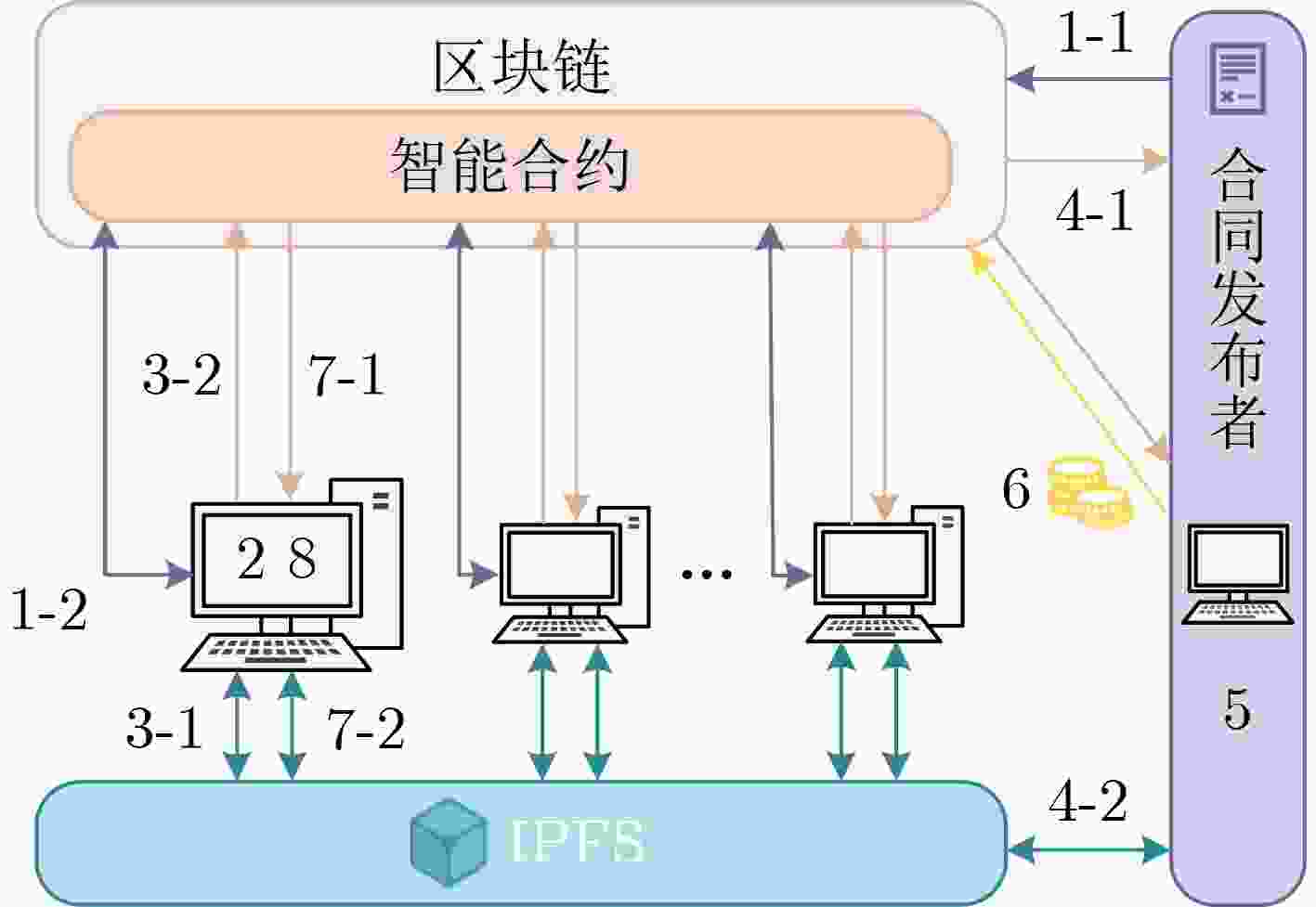

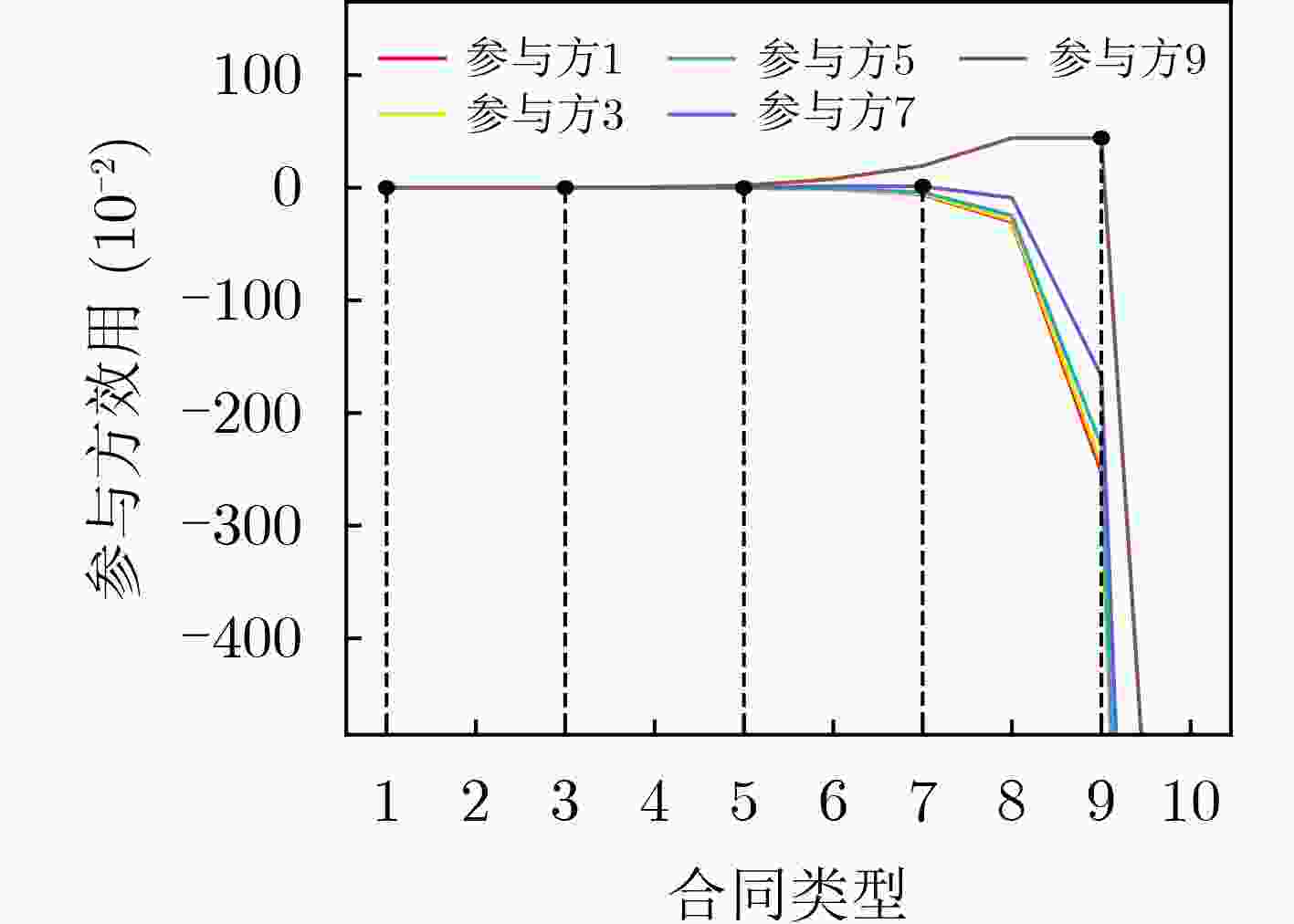

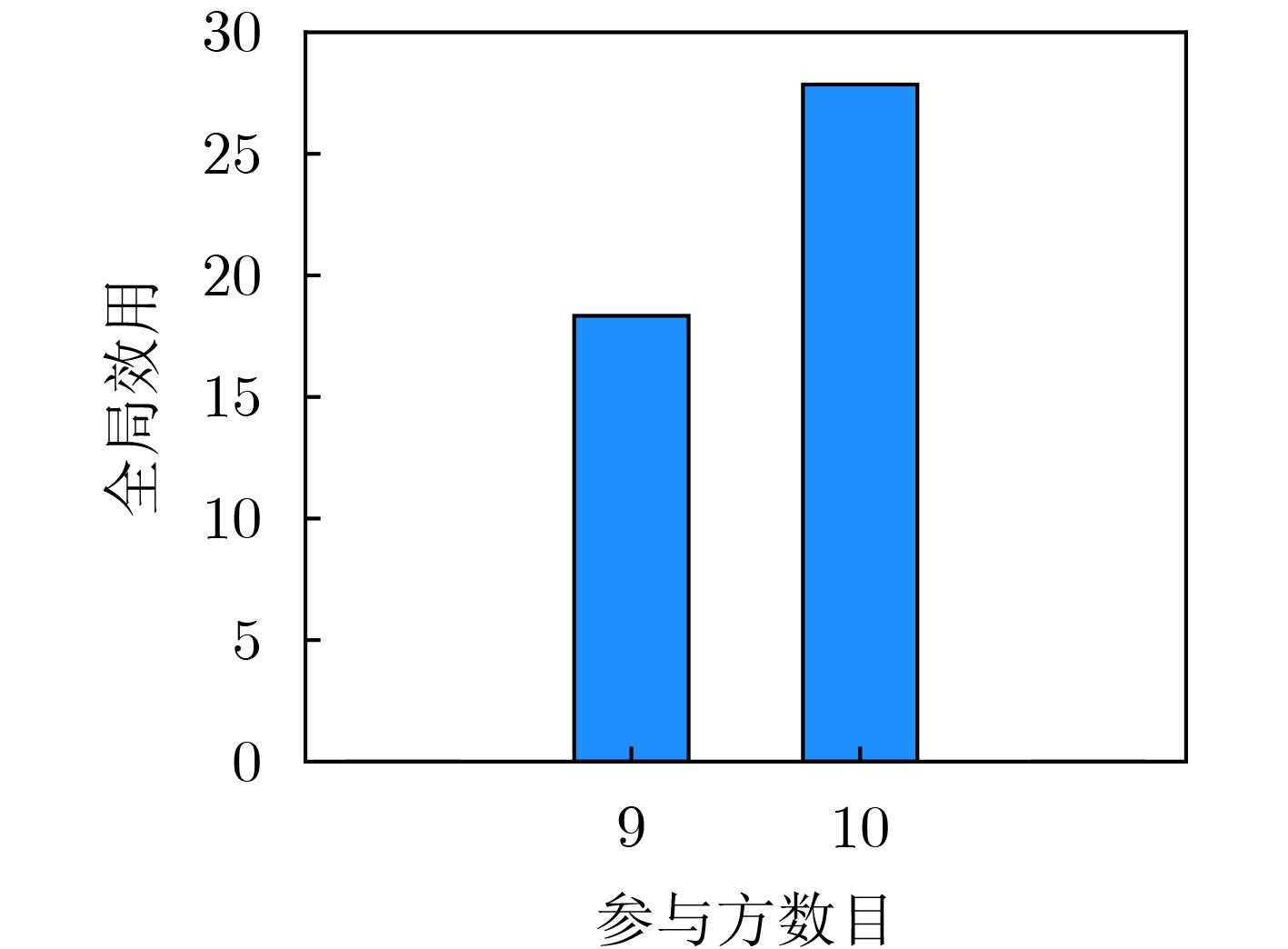

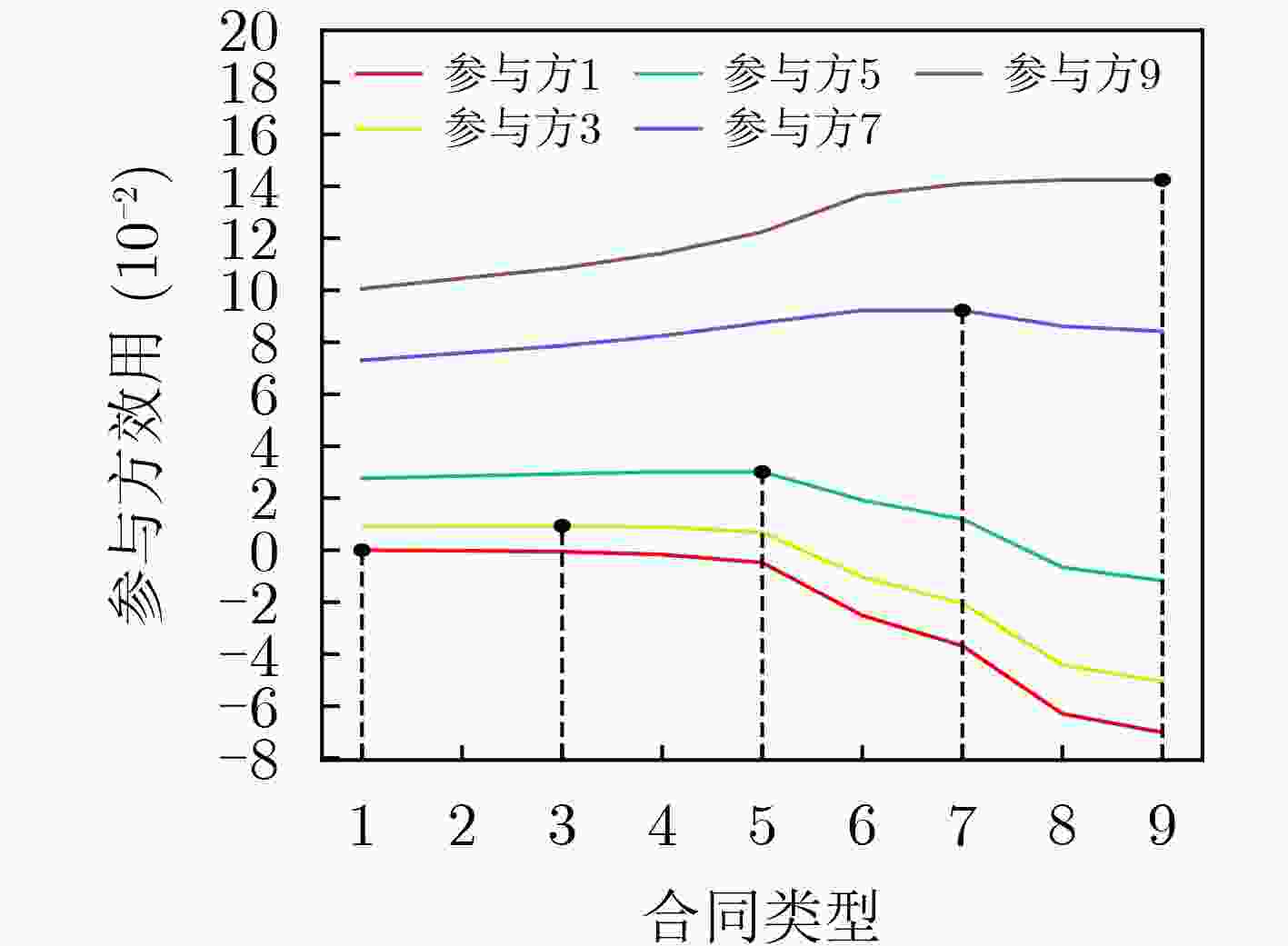

Abstract: Incentive mechanism design for decentralized federated learning has received little research attention, and few existing federated learning incentive mechanisms are designed around the performance of the resulting global model. This paper therefore adds an incentive mechanism based on contract theory to decentralized federated learning and proposes a new incentivized federated learning model. A blockchain and the InterPlanetary File System (IPFS) replace the central server of traditional federated learning for storing and distributing model parameters. On this basis, a contract publisher formulates and publishes contracts, and each federated learning participant chooses which contract to sign according to the quality of its local data. After each round of local training, the contract publisher evaluates every local model. If the reward conditions agreed upon when the contract was signed are met, the publisher issues the corresponding reward, and the global model is then aggregated from the local model parameters according to these reward results. Experiments on the MNIST dataset and an industry electricity consumption dataset show that, compared with traditional federated learning, federated learning with the incentive mechanism trains a better-performing global model. They also show that the decentralized structure improves the robustness of federated learning.
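The incentive round summarized in the abstract has four steps: contract signing by data quality, evaluation against the agreed threshold, a conditional reward, and reward-based aggregation. It can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation:

- The names `Contract`, `choose_contract`, `evaluate_and_reward` and `reward_weighted_average` are hypothetical.
- The rule that a participant signs the highest contract not exceeding its own data quality is an assumed selection rule.
- `local_score` is a placeholder for whatever metric the publisher evaluates, which this excerpt does not specify.
- Aggregation weights each local model by its share of the round's total reward. This is one reading of the "reward-proportional aggregation" named in Tables 3 and 5.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Contract:
    """Hypothetical contract terms, mirroring the columns of Tables 2 and 4."""
    quality: float    # data-quality level the contract targets
    threshold: float  # evaluation score the local model must reach
    fee: float        # registration fee paid when signing
    reward: float     # reward paid if the threshold is met


def choose_contract(menu: List[Contract], local_quality: float) -> Optional[Contract]:
    """Assumed selection rule: sign the highest-quality contract whose
    quality level does not exceed the participant's own data quality."""
    eligible = [c for c in menu if c.quality <= local_quality]
    return max(eligible, key=lambda c: c.quality) if eligible else None


def evaluate_and_reward(contract: Optional[Contract], local_score: float) -> float:
    """Pay the agreed reward only if the evaluated local model meets the
    contract's threshold; participants without a contract earn nothing."""
    if contract is None:
        return 0.0
    return contract.reward if local_score >= contract.threshold else 0.0


def reward_weighted_average(local_params: List[List[float]],
                            rewards: List[float]) -> List[float]:
    """Average parameter vectors with weights proportional to each
    participant's reward in this round."""
    total = sum(rewards)
    if total <= 0:
        raise ValueError("no participant earned a reward this round")
    dim = len(local_params[0])
    return [sum(r * p[k] for r, p in zip(rewards, local_params)) / total
            for k in range(dim)]


if __name__ == "__main__":
    # First three contracts of Table 2, experiment 1.
    menu = [Contract(0.15, 0.0075, 0.00001, 0.2250),
            Contract(0.20, 0.0100, 0.00006, 0.4000),
            Contract(0.25, 0.0125, 0.00020, 0.6250)]
    qualities = [0.22, 0.30, 0.16]   # illustrative local data qualities
    scores = [0.012, 0.010, 0.008]   # illustrative evaluation scores
    params = [[1.0, 2.0], [3.0, 4.0], [2.0, 0.0]]
    signed = [choose_contract(menu, q) for q in qualities]
    rewards = [evaluate_and_reward(c, s) for c, s in zip(signed, scores)]
    print(rewards)  # [0.4, 0.0, 0.225]
    print(reward_weighted_average(params, rewards))
```

In the usage above, the second participant signs the highest contract but misses its threshold. It therefore earns no reward and contributes nothing to the aggregate. This is the property that ties aggregation to contract fulfilment.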


Key words:

- Federated learning

- Incentive mechanism

- Contract theory

- Decentralization

- Power big data
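The abstract replaces the central server with a blockchain and IPFS for storing and distributing model parameters. The sketch below illustrates the two underlying ideas under loudly stated assumptions:

- `ContentStore` is an in-memory stand-in for IPFS that addresses each object by the SHA-256 hex digest of its bytes. Real IPFS content identifiers use a different, multihash-based encoding.
- `HashChainLedger` is a minimal append-only hash chain standing in for the blockchain. It models no consensus, networking or smart contracts.
- The class names and the record fields (`round`, `participant`, `params`) are hypothetical.

```python
import hashlib
import json
from typing import Dict, List


class ContentStore:
    """In-memory stand-in for IPFS: each object is addressed by the
    SHA-256 hex digest of its bytes (not a real IPFS CID)."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Re-hashing on read detects any mismatch between content and address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("stored object does not match its address")
        return data


GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def _block_hash(prev: str, payload: dict) -> str:
    # Canonical JSON (sorted keys) so the same block always hashes the same.
    body = json.dumps({"prev": prev, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class HashChainLedger:
    """Append-only list of blocks, each linked to its predecessor's hash.
    No consensus or networking is modelled."""

    def __init__(self) -> None:
        self.blocks: List[dict] = []

    def append(self, payload: dict) -> str:
        payload = dict(payload)  # copy so later caller edits don't alias the block
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        block = {"prev": prev, "payload": payload, "hash": _block_hash(prev, payload)}
        self.blocks.append(block)
        return block["hash"]

    def verify(self) -> bool:
        prev = GENESIS
        for block in self.blocks:
            if block["prev"] != prev or _block_hash(prev, block["payload"]) != block["hash"]:
                return False
            prev = block["hash"]
        return True


if __name__ == "__main__":
    store, ledger = ContentStore(), HashChainLedger()
    # A participant uploads its local parameters and records only the address on-chain.
    local_params = json.dumps({"w": [0.1, 0.2], "b": 0.0}).encode("utf-8")
    address = store.put(local_params)
    ledger.append({"round": 1, "participant": "p1", "params": address})
    # Any other node can fetch the parameters by address and check the chain.
    assert store.get(address) == local_params
    print(ledger.verify())  # True
```

Keeping only the content address on the chain, and the parameters themselves in the content store, is the usual motivation for pairing a ledger with IPFS. Large model tensors stay off-chain, yet any alteration of them or of the recorded history is detectable.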


Table 1 Participant data settings for each MNIST experiment group

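| Experiment No. | Number of participants | Data imbalance ratio | Number of samples per participant |
|---|---|---|---|
| 1 | 9 | 0.8 | [1500, 2000, 2500, 3500, 5000, 7000, 9500, 13000, 16000] |
| 2 | 10 | 0.8 | [1000, 1500, 2000, 2500, 3500, 5000, 6500, 8500, 12000, 17500] |
| 3 | 10 | 0.9 | [1000, 1500, 2000, 2500, 3500, 5000, 6500, 8500, 12000, 17500] |

Table 2 Contract settings for each MNIST experiment group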

| Experiment No. | Number of contracts | Participant data quality | Model accuracy threshold | Contract registration fee | Contract reward |
|---|---|---|---|---|---|
| 1 | 9 | [0.1500, 0.2000, 0.2500, 0.3500, 0.5000, 0.7000, 0.9500, 1.3000, 1.6000] | [0.0075, 0.0100, 0.0125, 0.0175, 0.0250, 0.0350, 0.0475, 0.0650, 0.0800] | [0.00001, 0.00006, 0.00020, 0.00156, 0.01343, 0.10045, 0.61885, 4.06914, 13.53474] | [0.2250, 0.4000, 0.6250, 1.2250, 2.5000, 4.9000, 9.0250, 16.9000, 25.6000] |
| 2 | 10 | [0.1000, 0.1500, 0.2000, 0.2500, 0.3500, 0.5000, 0.6500, 0.8500, 1.2000, 1.7500] | [0.0050, 0.0075, 0.0100, 0.0125, 0.0175, 0.0250, 0.0325, 0.0425, 0.0600, 0.0875] | [0.000001, 0.00001, 0.00005, 0.00019, 0.00155, 0.01342, 0.06243, 0.31061, 2.54491, 24.91741] | [0.1000, 0.2250, 0.4000, 0.6250, 1.2250, 2.5000, 4.2250, 7.2250, 14.4000, 30.6250] |
| 3 | 10 | [0.1000, 0.1500, 0.2000, 0.2500, 0.3500, 0.5000, 0.6500, 0.8500, 1.2000, 1.7500] | [0.0050, 0.0075, 0.0100, 0.0125, 0.0175, 0.0250, 0.0325, 0.0425, 0.0600, 0.0875] | [0.000001, 0.00001, 0.00005, 0.00019, 0.00155, 0.01342, 0.06243, 0.31061, 2.54491, 24.91741] | [0.1000, 0.2250, 0.4000, 0.6250, 1.2250, 2.5000, 4.2250, 7.2250, 14.4000, 30.6250] |

Table 3 Comparison of results for each MNIST experiment group

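| Experiment No. | Traditional FL (FedAvg aggregation) global model accuracy | Traditional FL (FedProx aggregation) global model accuracy | Traditional FL (SCAFFOLD aggregation) global model accuracy | Proposed incentivized FL (reward-proportional aggregation) global model accuracy |
|---|---|---|---|---|
| 1 | 0.8945 | 0.8958 | 0.8971 | 0.9031 |
| 2 | 0.8933 | 0.8961 | 0.8973 | 0.9003 |
| 3 | 0.7790 | 0.7831 | 0.7905 | 0.8022 |

Table 4 Contract settings for each industry electricity consumption experiment group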

| Experiment No. | Number of contracts | Participant data quality | Model test threshold | Contract registration fee | Contract reward |
|---|---|---|---|---|---|
| 1 | 9 | [0.7766, 0.7855, 0.7944, 0.8055, 0.8251, 0.8757, 0.8967, 0.9297, 0.9386] | [0.0388, 0.0393, 0.0397, 0.0403, 0.0413, 0.0438, 0.0448, 0.0465, 0.0469] | [0.2187, 0.2305, 0.2405, 0.2564, 0.2860, 0.3806, 0.4253, 0.5148, 0.5378] | [6.0218, 6.1780, 6.3044, 6.4964, 6.8228, 7.6738, 8.0282, 8.6490, 8.7984] |
| 2 | 50 | [0.5074, 0.5076, 0.5206, 0.6992, 0.7312, 0.7550, 0.7812, 0.8709, 0.8723, 0.8750, ···] | [0.0254, 0.0254, 0.0260, 0.0350, 0.0366, 0.0378, 0.0391, 0.0435, 0.0436, 0.0438, ···] | [0.0171, 0.0171, 0.0189, 0.1005, 0.1256, 0.1481, 0.1770, 0.3279, 0.3319, 0.3401, ···] | [2.5806, 2.5806, 2.7040, 4.9000, 5.3582, 5.7154, 6.1152, 7.5690, 7.6038, 7.6738, ···] |
| 3 | 100 | [0.5460, 0.5460, 0.6424, 0.7820, 0.7881, 0.8711, 0.8749, 0.8860, 0.8971, 0.9042, ···] | [0.0273, 0.0273, 0.0321, 0.0391, 0.0394, 0.0436, 0.0437, 0.0443, 0.0449, 0.0452, ···] | [0.0265, 0.0265, 0.0599, 0.1847, 0.1919, 0.3381, 0.3422, 0.3679, 0.3953, 0.4097, ···] | [2.9812, 2.9812, 4.1216, 6.1152, 6.2094, 7.6038, 7.6388, 7.8500, 8.0640, 8.1722, ···] |

Table 5 Settings and results for each industry electricity consumption experiment group

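| Experiment No. | Number of participants | Traditional FL (FedAvg aggregation) global model RMSE | Traditional FL (FedProx aggregation) global model RMSE | Traditional FL (SCAFFOLD aggregation) global model RMSE | Proposed incentivized FL (reward-proportional aggregation) global model RMSE |
|---|---|---|---|---|---|
| 1 | 9 | 0.143608 | 0.140512 | 0.139842 | 0.135423 |
| 2 | 50 | 0.135517 | 0.135411 | 0.135375 | 0.135252 |
| 3 | 100 | 0.135652 | 0.135508 | 0.135419 | 0.135396 |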
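The contract menus in Tables 1, 2 and 4 are consistent with a simple pattern that the excerpt does not state explicitly. In the MNIST experiments:

- each participant's data quality equals its number of samples divided by 10 000;
- the accuracy threshold equals 0.05 × quality;
- the reward equals 10 × quality².

In experiment 1 of Table 4, the test threshold also equals 0.05 × quality after rounding to four decimals. The check below is only an inference from the published numbers, not the authors' contract-design procedure. The function names and the parameters `scale`, `alpha` and `beta` are hypothetical, and the registration fees are not modelled.

```python
from typing import List, Tuple

# Number of samples per participant (Table 1, experiments 1 and 2;
# experiment 3 reuses the volumes of experiment 2).
VOLUMES = {
    1: [1500, 2000, 2500, 3500, 5000, 7000, 9500, 13000, 16000],
    2: [1000, 1500, 2000, 2500, 3500, 5000, 6500, 8500, 12000, 17500],
}
# Accuracy thresholds and contract rewards as listed in Table 2.
THRESHOLDS = {
    1: [0.0075, 0.0100, 0.0125, 0.0175, 0.0250, 0.0350, 0.0475, 0.0650, 0.0800],
    2: [0.0050, 0.0075, 0.0100, 0.0125, 0.0175, 0.0250, 0.0325, 0.0425, 0.0600, 0.0875],
}
REWARDS = {
    1: [0.2250, 0.4000, 0.6250, 1.2250, 2.5000, 4.9000, 9.0250, 16.9000, 25.6000],
    2: [0.1000, 0.2250, 0.4000, 0.6250, 1.2250, 2.5000, 4.2250, 7.2250, 14.4000, 30.6250],
}
# Data quality and model test thresholds from Table 4, experiment 1.
POWER_QUALITY = [0.7766, 0.7855, 0.7944, 0.8055, 0.8251, 0.8757, 0.8967, 0.9297, 0.9386]
POWER_THRESHOLDS = [0.0388, 0.0393, 0.0397, 0.0403, 0.0413, 0.0438, 0.0448, 0.0465, 0.0469]


def menu_from_volumes(volumes: List[int], scale: float = 10_000,
                      alpha: float = 0.05,
                      beta: float = 10.0) -> List[Tuple[float, float, float]]:
    """Inferred pattern: quality = n / scale, threshold = alpha * quality,
    reward = beta * quality**2. Returns (quality, threshold, reward) per contract."""
    return [(n / scale, alpha * n / scale, beta * (n / scale) ** 2) for n in volumes]


def matches_table2(exp: int, tol: float = 1e-9) -> bool:
    """Compare the inferred thresholds and rewards with Table 2."""
    rows = menu_from_volumes(VOLUMES[exp])
    if not (len(rows) == len(THRESHOLDS[exp]) == len(REWARDS[exp])):
        return False
    return all(abs(t - t_ref) < tol and abs(r - r_ref) < tol
               for (_, t, r), t_ref, r_ref in zip(rows, THRESHOLDS[exp], REWARDS[exp]))


def power_thresholds_match(alpha: float = 0.05, half_unit: float = 0.5e-4) -> bool:
    """Listed thresholds have four decimals, so allow half a unit in the last place."""
    return all(abs(alpha * q - t) <= half_unit + 1e-12
               for q, t in zip(POWER_QUALITY, POWER_THRESHOLDS))


if __name__ == "__main__":
    print(matches_table2(1), matches_table2(2), power_thresholds_match())  # True True True
```

The same relation does not reproduce the Table 4 rewards exactly, so the sketch checks only the thresholds for the electricity data.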

