Research on Multi-scale Residual UNet Fused with Depthwise Separable Convolution in PolSAR Terrain Classification


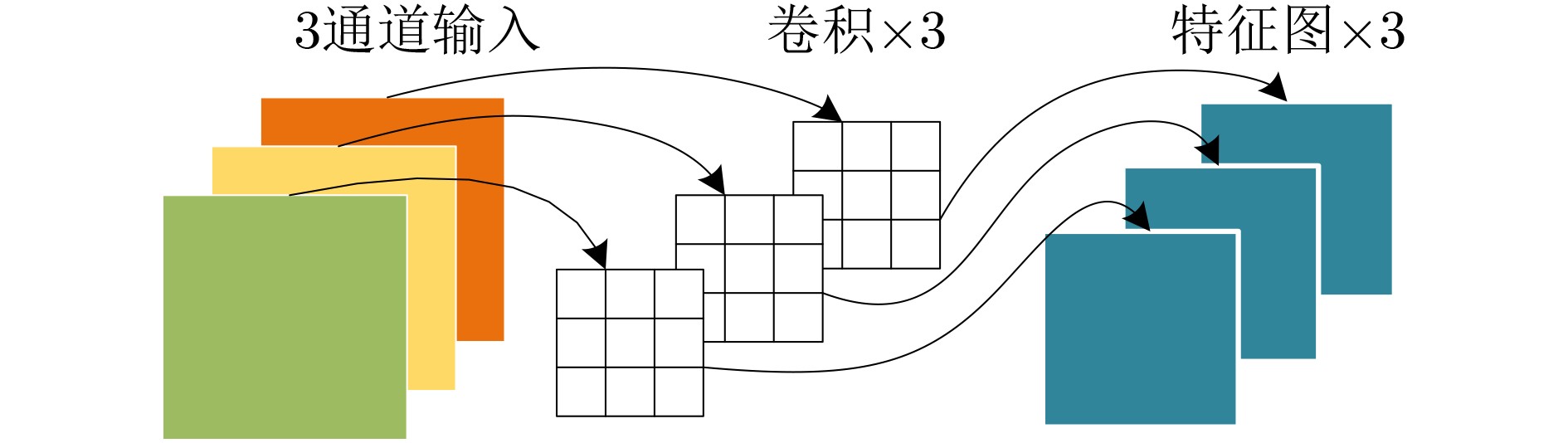

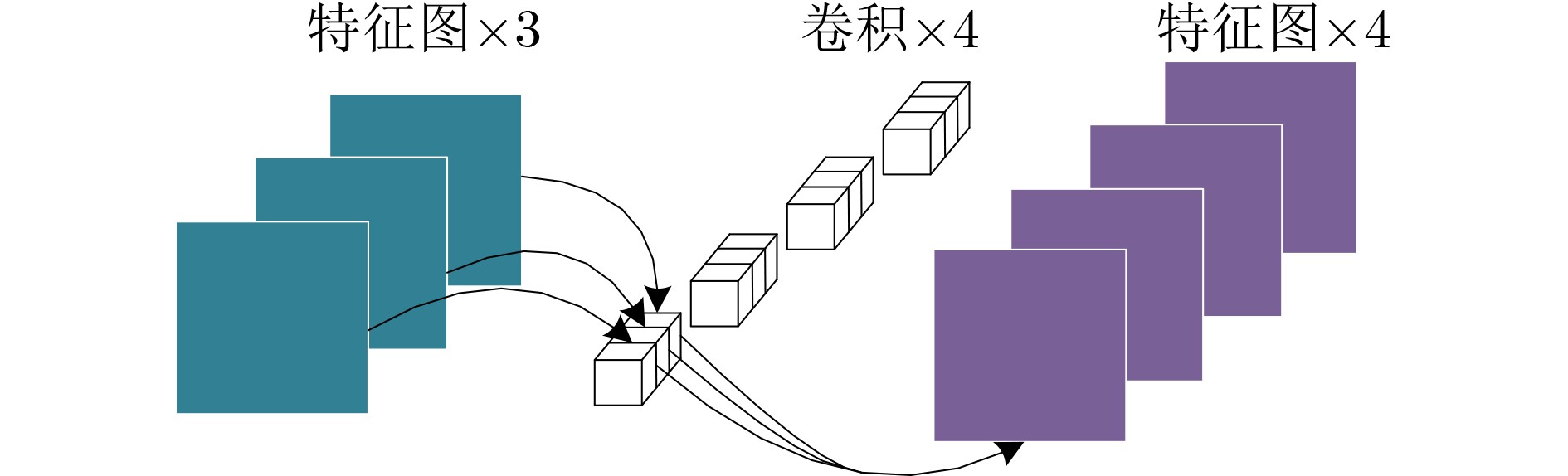

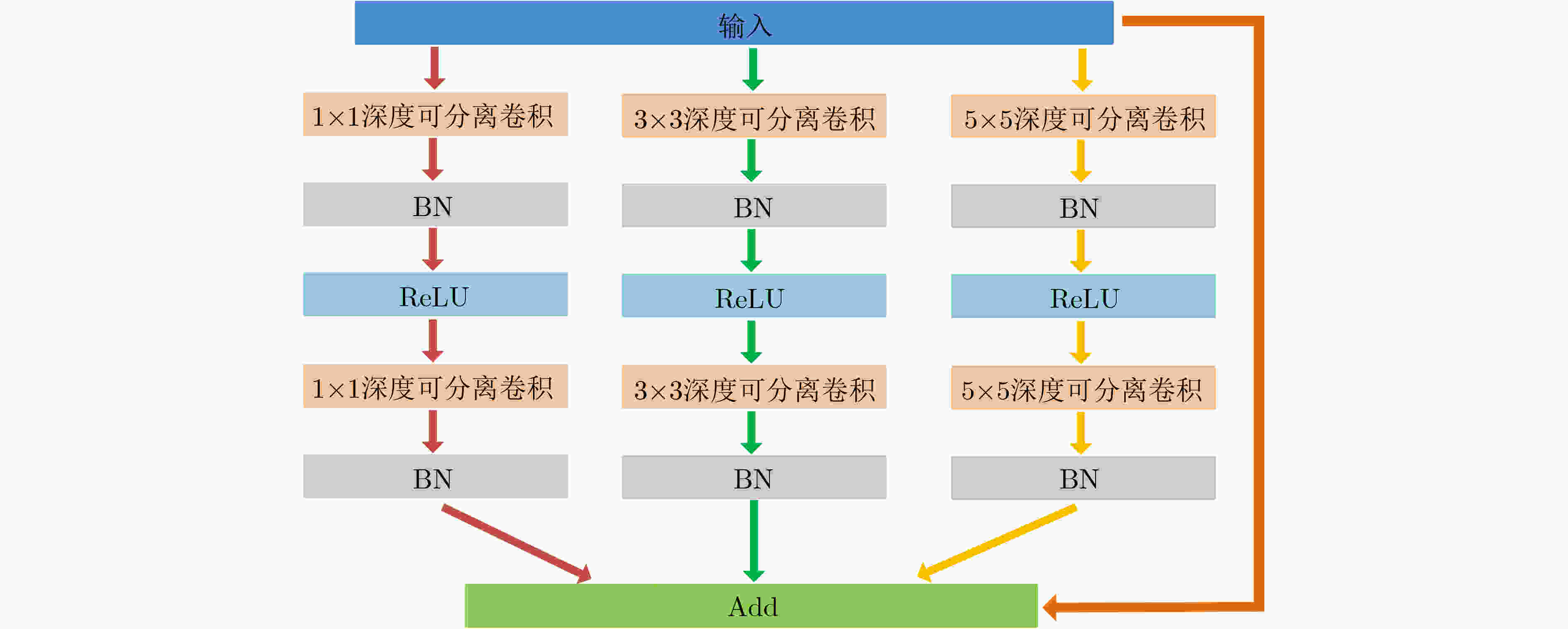

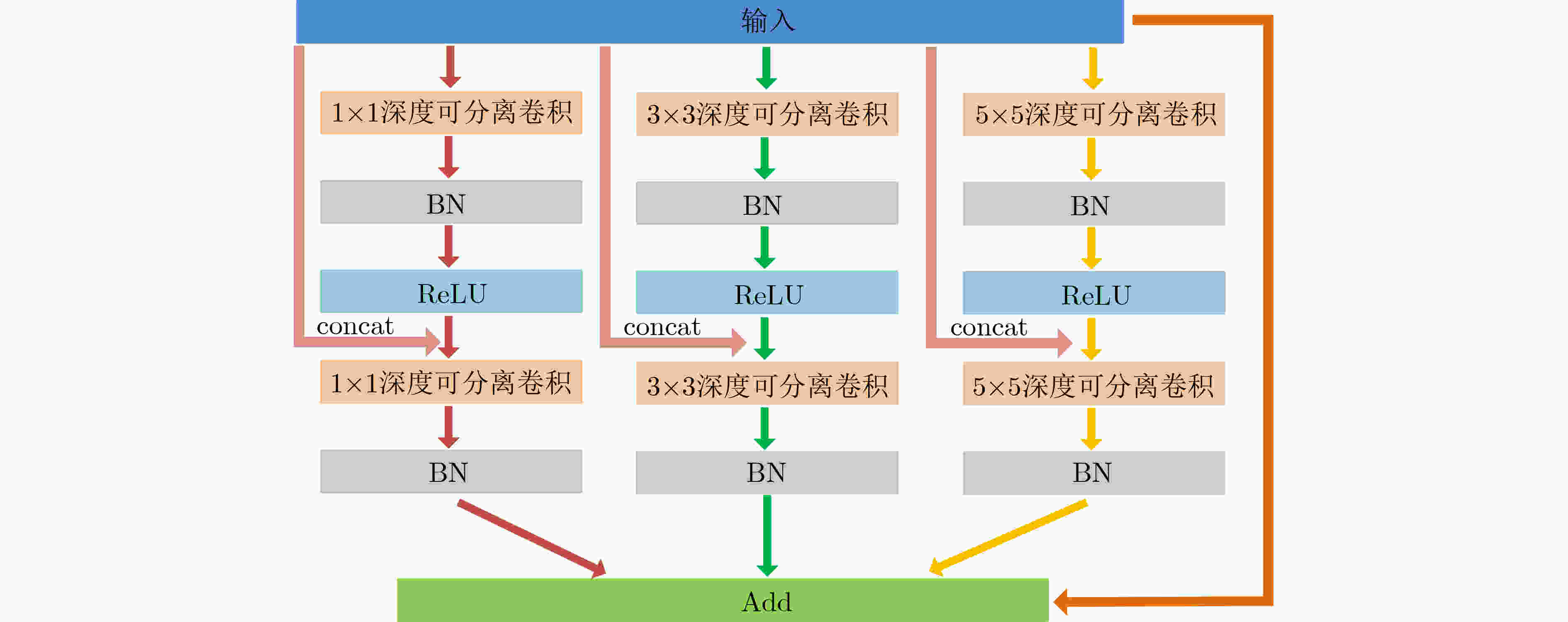

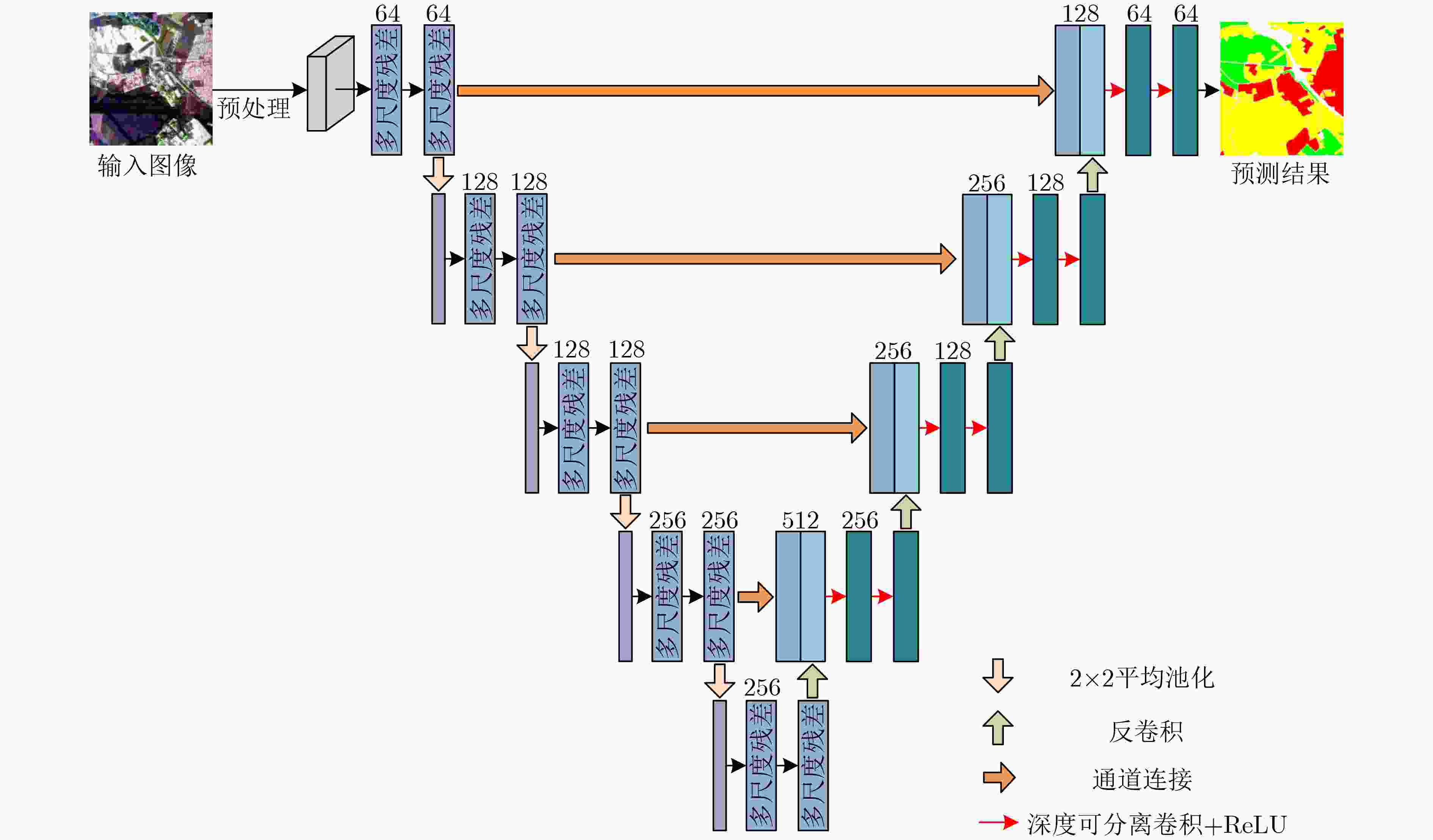

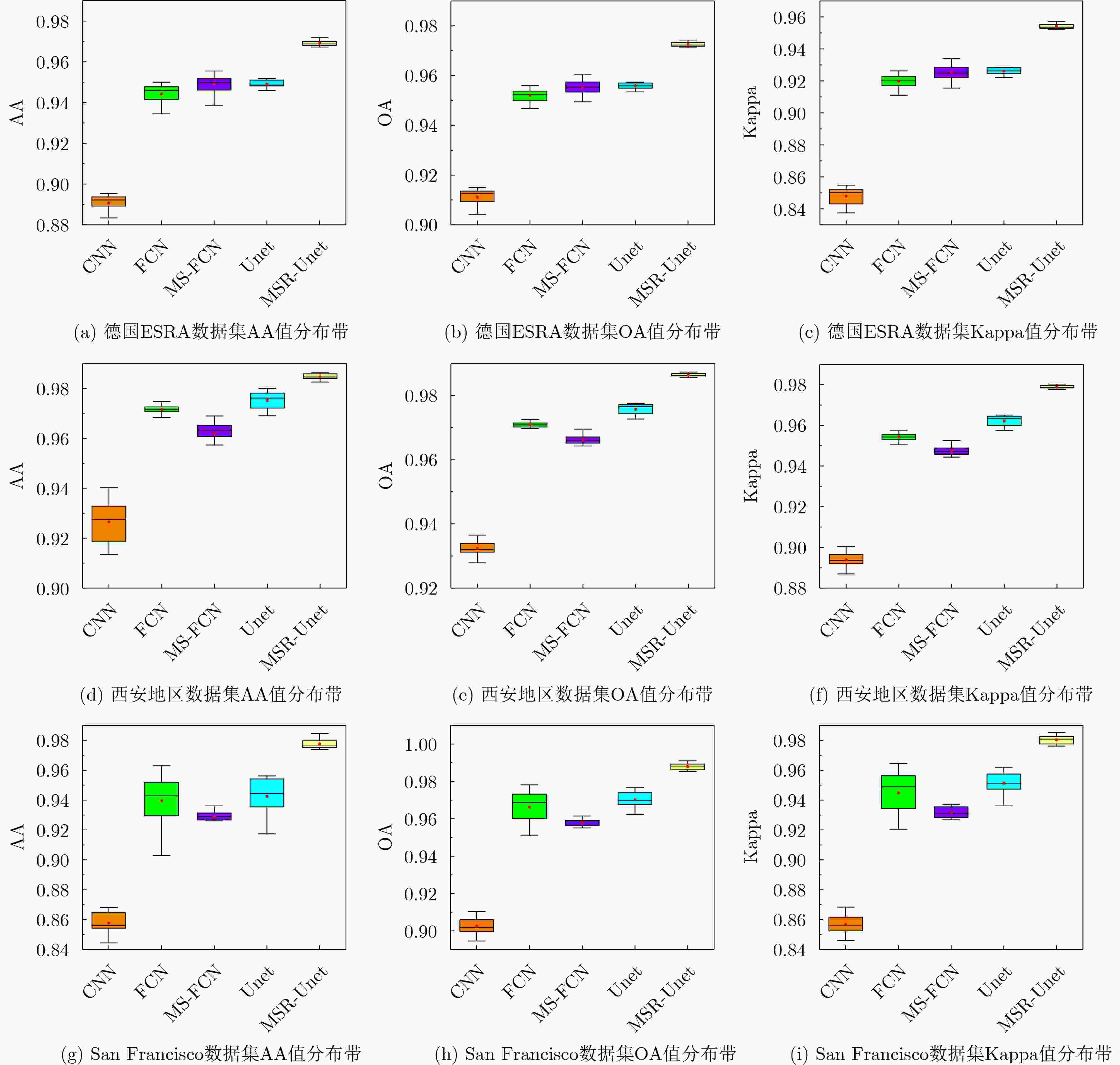

Abstract: Polarimetric Synthetic Aperture Radar (PolSAR) terrain classification is an important topic in Synthetic Aperture Radar (SAR) image interpretation and has attracted growing attention from researchers worldwide. Unlike natural images, PolSAR data have distinctive data attributes and come as small-sample datasets, so making fuller use of both the data characteristics and the labeled samples is a key consideration. To address this, this paper proposes a new UNet-based network architecture for PolSAR terrain classification, the Multiscale Separable Residual Unet (MSR-Unet). First, the network replaces ordinary 2D convolution with depthwise separable convolution, which extracts the spatial features and the channel features of the input separately and reduces feature redundancy. Second, an improved multi-scale residual structure is proposed. Built on the residual structure, it obtains features at different scales by using convolution kernels of different sizes and reuses features through dense connections. This structure increases the network depth to some extent and yields better features; it also lets the network make full use of the labeled samples and improves the efficiency of feature propagation, thereby raising the classification accuracy for PolSAR terrain. Experimental results on three standard datasets show that, compared with traditional classification methods and other mainstream deep learning models such as UNet, MSR-Unet improves the average accuracy (AA), overall accuracy (OA), and Kappa coefficient to varying degrees and is more robust.

Keywords:

- PolSAR terrain classification

- UNet

- Residual structure

- Depthwise separable convolution
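The saving from swapping an ordinary 2D convolution for a depthwise separable one can be illustrated by counting weights. The sketch below is only an illustration under stated assumptions: the channel widths (64 and 128) and kernel sizes (3 and 5) are hypothetical, biases are ignored, and the function names are made up for this example; none of these values are taken from the MSR-Unet configuration.

```python
def conv2d_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in   # one k x k spatial filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    c_in, c_out = 64, 128      # hypothetical channel widths
    for k in (3, 5):           # hypothetical kernel sizes for a multi-scale block
        std = conv2d_params(k, c_in, c_out)
        sep = depthwise_separable_params(k, c_in, c_out)
        print(f"k={k}: standard={std}, separable={sep}, ratio={sep / std:.3f}")
```

The ratio equals 1/c_out + 1/k², so the relative saving grows with the kernel size. This may be one reason the factorization pairs naturally with a structure that uses kernels of several sizes.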

Table 1 Ablation experiment results

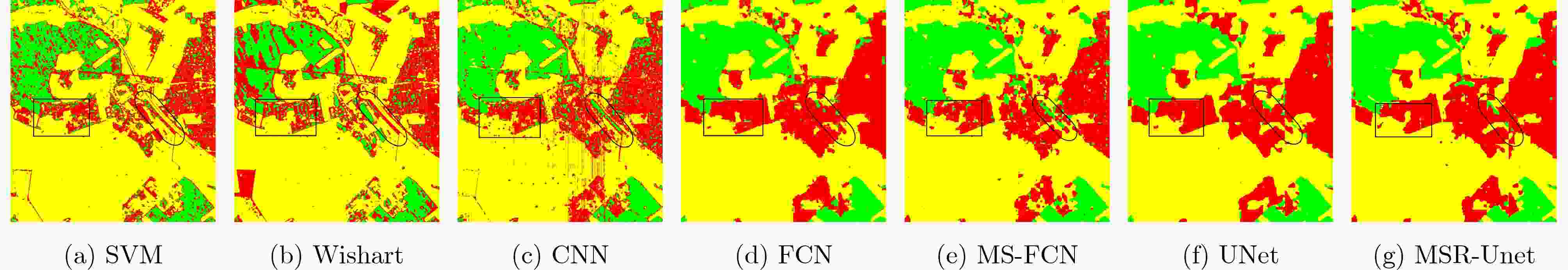

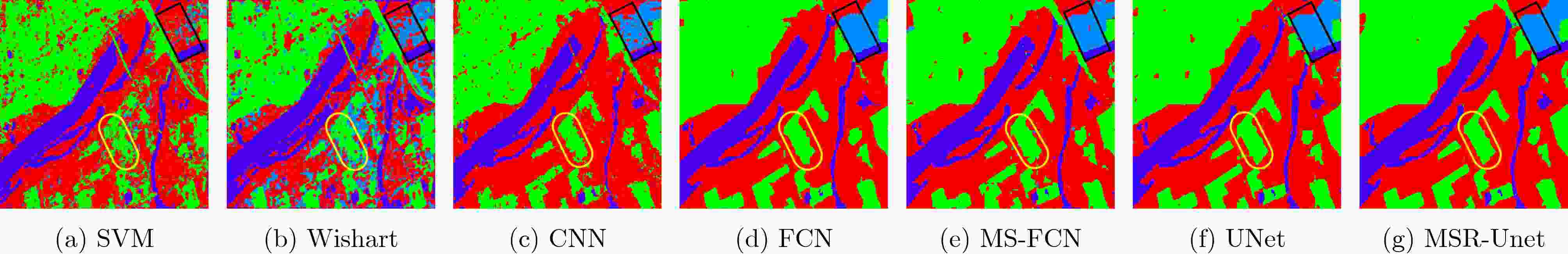

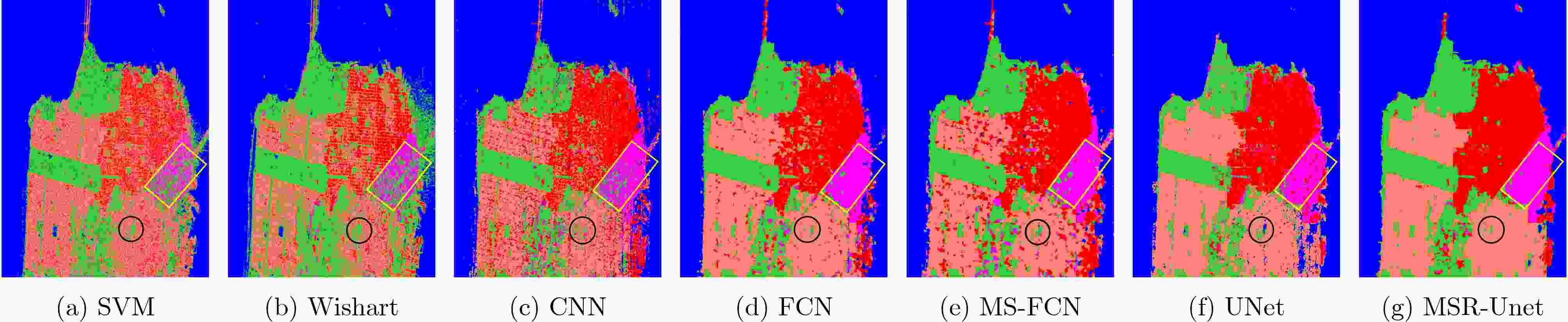

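Module columns in the source table: Unet, Res, DSC, MRes, EMRes. The √ marks show how many modules each model enables; the column each mark belongs to is not recoverable here.

| Model | √ marks | ESAR AA | ESAR OA | ESAR Kappa | Xi'an AA | Xi'an OA | Xi'an Kappa | San Francisco AA | San Francisco OA | San Francisco Kappa |
|---|---|---|---|---|---|---|---|---|---|---|
| A | √ | 0.9489 | 0.9559 | 0.9262 | 0.9752 | 0.9757 | 0.9622 | 0.9415 | 0.9692 | 0.9499 |
| B | √ √ | 0.9564 | 0.9612 | 0.9349 | 0.9721 | 0.9785 | 0.9666 | 0.9487 | 0.9717 | 0.9538 |
| C | √ √ √ | 0.9639 | 0.9675 | 0.9456 | 0.9758 | 0.9791 | 0.9675 | 0.9512 | 0.9754 | 0.9599 |
| D | √ √ √ | 0.9669 | 0.9708 | 0.9511 | 0.9852 | 0.9847 | 0.9760 | 0.9709 | 0.9851 | 0.9756 |
| E | √ √ √ | 0.9691 | 0.9726 | 0.9542 | 0.9844 | 0.9864 | 0.9788 | 0.9776 | 0.9879 | 0.9803 |

Table 2 Classification accuracy of different methods on the Germany ESAR dataset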

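| Method | SVM[3] | Wishart[5] | CNN[10] | FCN[18] | MS-FCN[28] | UNet[19] | MSR-Unet |
|---|---|---|---|---|---|---|---|
| Built-up area | 0.7316 | 0.6732 | 0.8447 | 0.9124 | 0.9339 | 0.9180 | 0.9487 |
| Forest | 0.8477 | 0.8109 | 0.8762 | 0.9502 | 0.9445 | 0.9557 | 0.9765 |
| Open area | 0.8845 | 0.9062 | 0.9515 | 0.9711 | 0.9691 | 0.9732 | 0.9820 |
| AA | 0.8213 | 0.7968 | 0.8907 | 0.9442 | 0.9492 | 0.9489 | 0.9691 |
| OA | 0.8463 | 0.8346 | 0.9113 | 0.9520 | 0.9554 | 0.9559 | 0.9726 |
| Kappa | 0.7324 | 0.7118 | 0.8479 | 0.9197 | 0.9252 | 0.9262 | 0.9542 |

Table 3 Classification accuracy and AA, OA, and Kappa values of different methods on the Xi'an dataset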

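| Method | SVM[3] | Wishart[5] | CNN[10] | FCN[18] | MS-FCN[28] | UNet[19] | MSR-Unet |
|---|---|---|---|---|---|---|---|
| Grass | 0.7870 | 0.8209 | 0.9208 | 0.9740 | 0.9677 | 0.9797 | 0.9887 |
| Urban | 0.8202 | 0.8745 | 0.9528 | 0.9725 | 0.9701 | 0.9762 | 0.9903 |
| Crop | 0.2711 | 0.1544 | 0.9004 | 0.9852 | 0.9531 | 0.9838 | 0.9880 |
| River | 0.9053 | 0.7343 | 0.9319 | 0.9528 | 0.9571 | 0.9612 | 0.9707 |
| AA | 0.6959 | 0.6460 | 0.9265 | 0.9711 | 0.9620 | 0.9752 | 0.9844 |
| OA | 0.7958 | 0.7153 | 0.9324 | 0.9706 | 0.9663 | 0.9757 | 0.9864 |
| Kappa | 0.6803 | 0.5942 | 0.8940 | 0.9542 | 0.9475 | 0.9622 | 0.9788 |

Table 4 Classification accuracy and AA, OA, and Kappa values of different methods on the San Francisco dataset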

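| Method | SVM[3] | Wishart[5] | CNN[10] | FCN[18] | MS-FCN[28] | UNet[19] | MSR-Unet |
|---|---|---|---|---|---|---|---|
| Developed urban | 0.8125 | 0.8167 | 0.8096 | 0.9044 | 0.9277 | 0.8989 | 0.9738 |
| Water | 0.9758 | 0.9954 | 0.9898 | 0.9952 | 0.9982 | 0.9978 | 0.9991 |
| High-density urban | 0.7047 | 0.7828 | 0.7936 | 0.9449 | 0.8943 | 0.9421 | 0.9762 |
| Low-density urban | 0.6249 | 0.6623 | 0.7905 | 0.9222 | 0.8840 | 0.9309 | 0.9742 |
| Vegetation | 0.7642 | 0.5979 | 0.9051 | 0.9305 | 0.9334 | 0.9406 | 0.9647 |
| AA | 0.7764 | 0.7710 | 0.8577 | 0.9394 | 0.9287 | 0.9415 | 0.9776 |
| OA | 0.8293 | 0.8239 | 0.9026 | 0.9662 | 0.9582 | 0.9692 | 0.9879 |
| Kappa | 0.7511 | 0.7445 | 0.8569 | 0.9448 | 0.9316 | 0.9499 | 0.9803 |

Table 5 Comparison of parameter counts and FLOPs of different deep learning methods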

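| Dataset | Metric | CNN | FCN | MS-FCN | Unet | MSR-Unet |
|---|---|---|---|---|---|---|
| Xi'an dataset | Parameters (M) | 23.38 | 16.52 | 34.97 | 5.88 | 4.05 |
| Xi'an dataset | FLOPs (G) | 0.66 | 2.99 | 17.91 | 3.87 | 1.94 |
| Germany ESAR dataset and San Francisco dataset | Parameters (M) | 23.38 | 16.52 | 34.97 | 5.88 | 4.05 |
| Germany ESAR dataset and San Francisco dataset | FLOPs (G) | 0.66 | 12.00 | 71.65 | 15.50 | 7.77 |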
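The AA, OA, and Kappa values reported in the tables above are standard summary statistics of a confusion matrix. The following minimal sketch shows how they are usually computed, assuming rows are true classes and columns are predicted classes. The function name and the 3-class matrix are hypothetical and only serve as an illustration; they are not data from the paper.

```python
def classification_metrics(confusion):
    """Return (OA, AA, Kappa) for a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))

    # Overall accuracy: fraction of all samples that are classified correctly.
    oa = correct / total

    # Average accuracy: unweighted mean of the per-class accuracies.
    row_totals = [sum(row) for row in confusion]
    per_class = [confusion[i][i] / row_totals[i] for i in range(n)]
    aa = sum(per_class) / n

    # Kappa: agreement beyond what the row/column marginals give by chance.
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix, for illustration only.
    example = [
        [50, 2, 3],
        [5, 40, 5],
        [2, 3, 90],
    ]
    oa, aa, kappa = classification_metrics(example)
    print(f"OA={oa:.4f}  AA={aa:.4f}  Kappa={kappa:.4f}")
```

Because AA weights every class equally while OA weights every sample equally, the two can diverge when class sizes are unbalanced, which is why the tables report both.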

[1] HUANG Zhongling, DATCU M, PAN Zongxu, et al. Deep SAR-Net: Learning objects from signals[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2020, 161: 179–193. doi: 10.1016/j.isprsjprs.2020.01.016

[2] JAFARI M, MAGHSOUDI Y, and VALADAN ZOEJ M J. A new method for land cover characterization and classification of polarimetric SAR data using polarimetric signatures[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2015, 8(7): 3595–3607. doi: 10.1109/JSTARS.2014.2387374

[3] FUKUDA S and HIROSAWA H. Support vector machine classification of land cover: Application to polarimetric SAR data[C]. Proceedings of IEEE 2001 International Geoscience and Remote Sensing Symposium, Sydney, Australia, 2001: 187–189.

[4] OKWUASHI O, NDEHEDEHE C E, OLAYINKA D N, et al. Deep support vector machine for PolSAR image classification[J]. International Journal of Remote Sensing, 2021, 42(17): 6498–6536. doi: 10.1080/01431161.2021.1939910

[5] LEE J S, GRUNES M R, AINSWORTH T L, et al. Quantitative comparison of classification capability: Fully-polarimetric versus partially polarimetric SAR[C]. Proceedings of IEEE 2000 International Geoscience and Remote Sensing Symposium. Taking the Pulse of the Planet: The Role of Remote Sensing in Managing the Environment, Honolulu, USA, 2000: 1101–1103.

[6] WEI Zhiqiang and BI Haixia. PolSAR image classification based on discriminative clustering[J]. Journal of Electronics & Information Technology, 2018, 40(12): 2795–2803. doi: 10.11999/JEIT180229

[7] CHEN Yanqiao, JIAO Licheng, LI Yangyang, et al. Multilayer projective dictionary pair learning and sparse autoencoder for PolSAR image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2017, 55(12): 6683–6694. doi: 10.1109/TGRS.2017.2727067

[8] XIE Wen, MA Gaini, HUA Wenqiang, et al. Complex-valued Wishart stacked auto-encoder network for PolSAR image classification[C]. Proceedings of 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 2019: 3193–3196.

[9] AI Jiaqiu, WANG Feifan, MAO Yuxiang, et al. A fine PolSAR terrain classification algorithm using the texture feature fusion-based improved convolutional autoencoder[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5218714. doi: 10.1109/TGRS.2021.3131986

[10] CHEN Siwei and TAO Chensong. PolSAR image classification using polarimetric-feature-driven deep convolutional neural network[J]. IEEE Geoscience and Remote Sensing Letters, 2018, 15(4): 627–631. doi: 10.1109/LGRS.2018.2799877

[11] HUA Wenqiang, ZHANG Cong, XIE Wen, et al. Polarimetric SAR image classification based on ensemble dual-branch CNN and superpixel algorithm[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022, 15: 2759–2772. doi: 10.1109/JSTARS.2022.3162953

[12] CUI Yuanhao, LIU Fang, JIAO Licheng, et al. Polarimetric multipath convolutional neural network for PolSAR image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5207118. doi: 10.1109/TGRS.2021.3071559

[13] QIN Xianxiang, YU Wangsheng, WANG Peng, et al. Weakly supervised classification of PolSAR images based on sample refinement with complex-valued convolutional neural network[J]. Journal of Radars, 2020, 9(3): 525–538. doi: 10.12000/JR20062

[14] LIU Fang, JIAO Licheng, and TANG Xu. Task-oriented GAN for PolSAR image classification and clustering[J]. IEEE Transactions on Neural Networks and Learning Systems, 2019, 30(9): 2707–2719. doi: 10.1109/TNNLS.2018.2885799

[15] LI Xiufang, SUN Qigong, LI Lingling, et al. SSCV-GANs: Semi-supervised complex-valued GANs for PolSAR image classification[J]. IEEE Access, 2020, 8: 146560–146576. doi: 10.1109/ACCESS.2020.3004591

[16] YANG Chen, HOU Biao, CHANUSSOT J, et al. N-Cluster loss and hard sample generative deep metric learning for PolSAR image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5210516. doi: 10.1109/TGRS.2021.3099840

[17] HE Fengshou, HE You, LIU Zhunga, et al. Research and development on applications of convolutional neural networks of radar automatic target recognition[J]. Journal of Electronics & Information Technology, 2020, 42(1): 119–131. doi: 10.11999/JEIT180899

[18] LI Yangyang, CHEN Yanqiao, LIU Guangyuan, et al. A novel deep fully convolutional network for PolSAR image classification[J]. Remote Sensing, 2018, 10(12): 1984. doi: 10.3390/rs10121984

[19] RONNEBERGER O, FISCHER P, and BROX T. U-Net: Convolutional networks for biomedical image segmentation[C]. Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 2015: 234–241.

[20] KOTRU R, SHAIKH M, TURKAR V, et al. Semantic segmentation of PolSAR images for various land cover features[C]. Proceedings of 2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 2021: 351–354.

[21] HOWARD A G, ZHU Menglong, CHEN Bo, et al. MobileNets: Efficient convolutional neural networks for mobile vision applications[J]. arXiv: 1704.04861, 2017.

[22] CHOLLET F. Xception: Deep learning with depthwise separable convolutions[C]. Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1800–1807.

[23] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[24] HUANG Gao, LIU Zhuang, VAN DER MAATEN L, et al. Densely connected convolutional networks[C]. Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 2261–2269.

[25] AI Jiaqiu, MAO Yuxiang, LUO Qiwu, et al. SAR target classification using the multikernel-size feature fusion-based convolutional neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 5214313. doi: 10.1109/TGRS.2021.3106915

[26] SHANG Ronghua, HE Jianghai, WANG Jiaming, et al. Dense connection and depthwise separable convolution based CNN for polarimetric SAR image classification[J]. Knowledge-Based Systems, 2020, 194: 105542. doi: 10.1016/j.knosys.2020.105542

[27] SUN Junmei, GE Qingqing, LI Xiumei, et al. A medical image segmentation network with boundary enhancement[J]. Journal of Electronics & Information Technology, 2022, 44(5): 1643–1652. doi: 10.11999/JEIT210784

[28] WU Wenjin, LI Hailei, LI Xinwu, et al. PolSAR image semantic segmentation based on deep transfer learning—realizing smooth classification with small training sets[J]. IEEE Geoscience and Remote Sensing Letters, 2019, 16(6): 977–981. doi: 10.1109/LGRS.2018.2886559
