Multi-resolution Fusion Input U-shaped Retinal Vessel Segmentation Algorithm


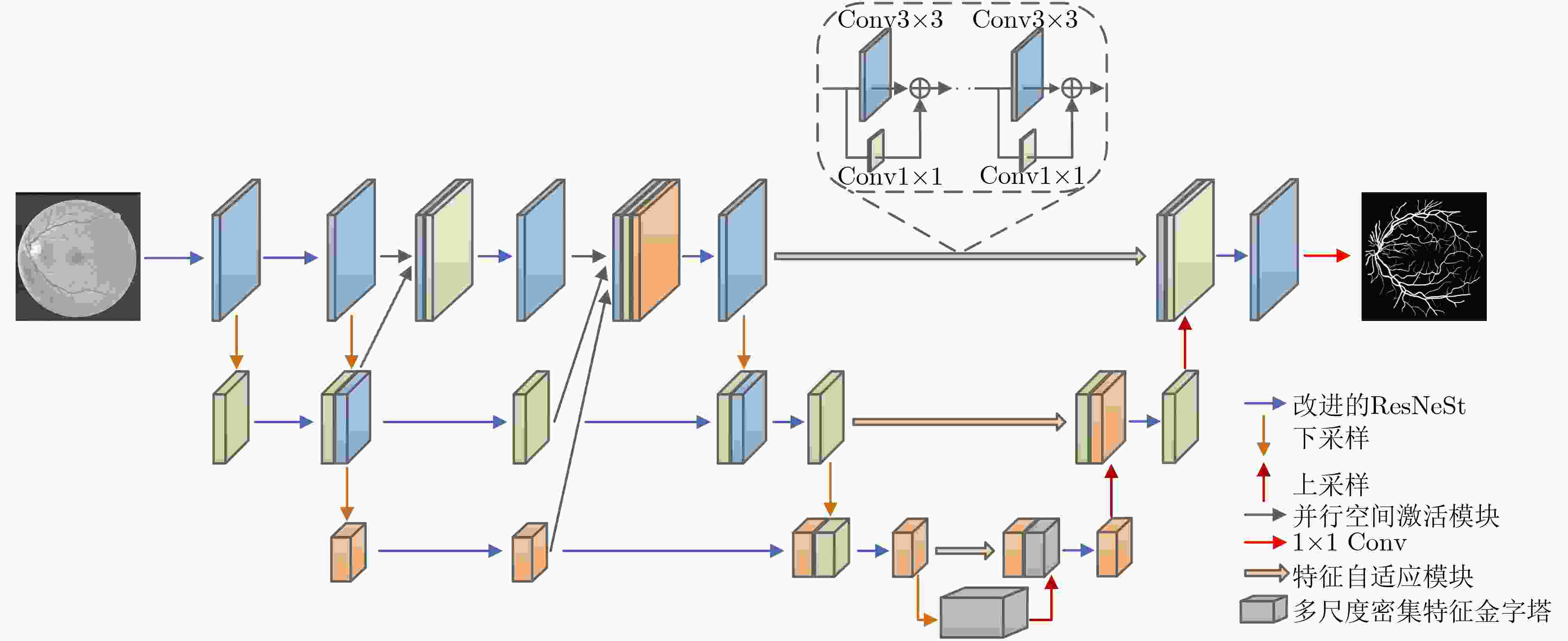

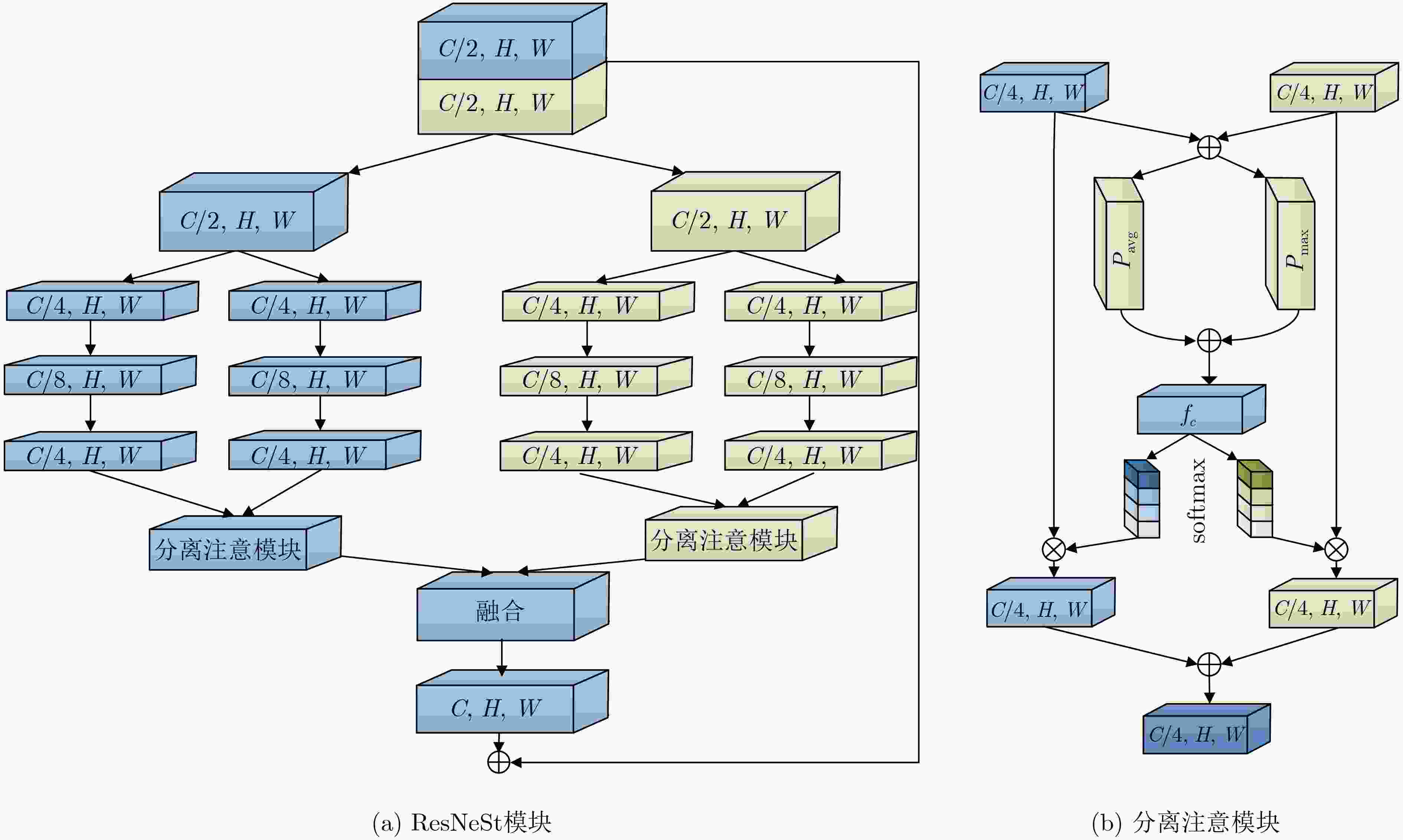

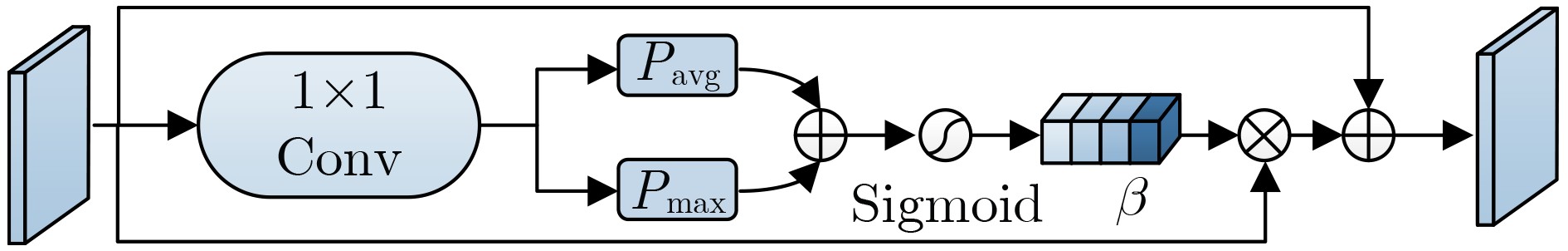

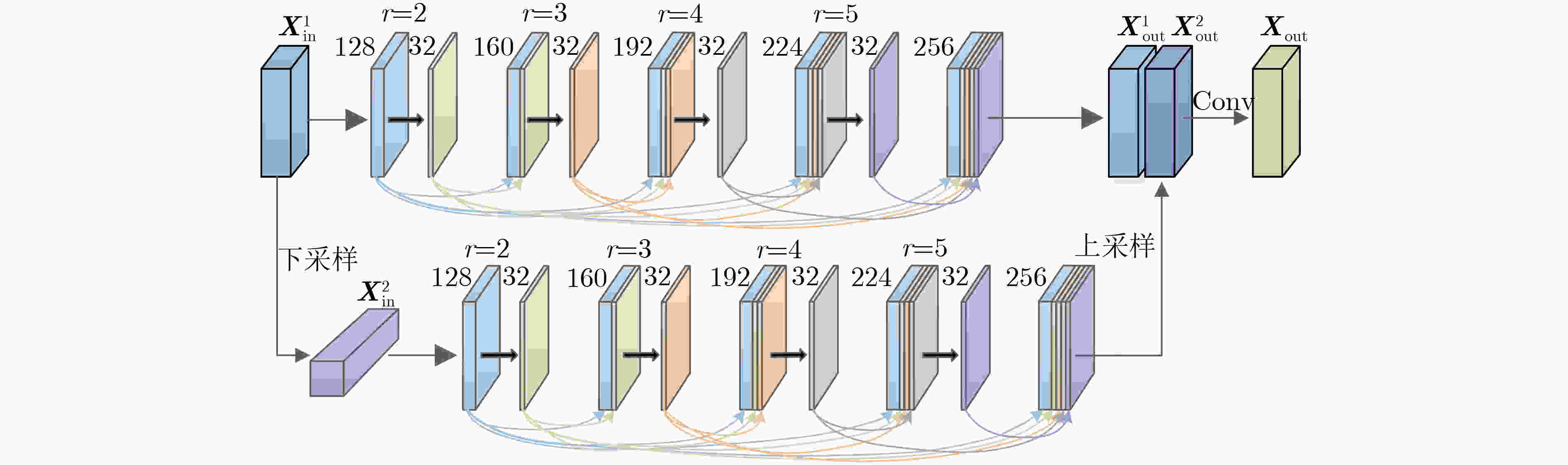

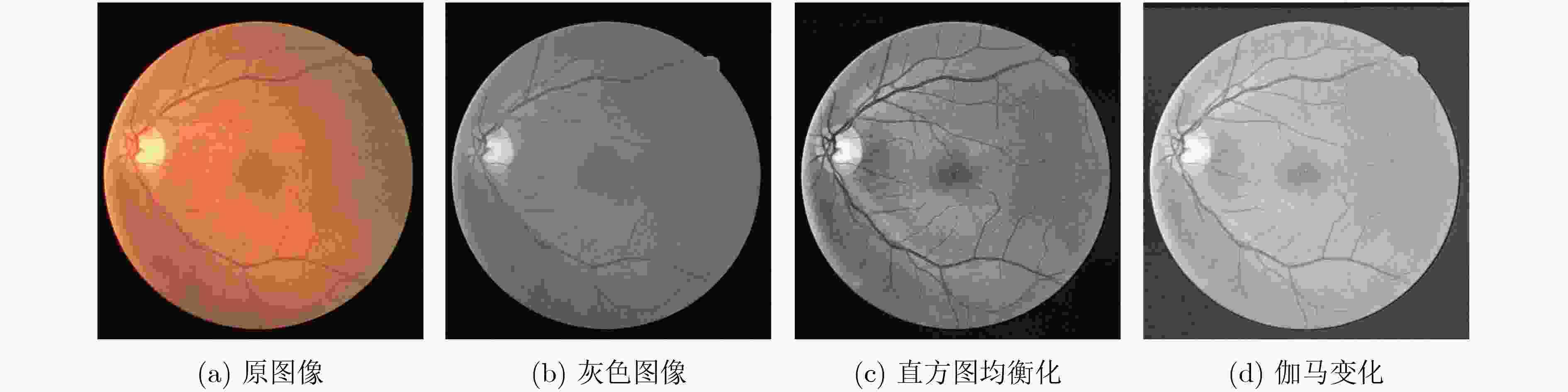

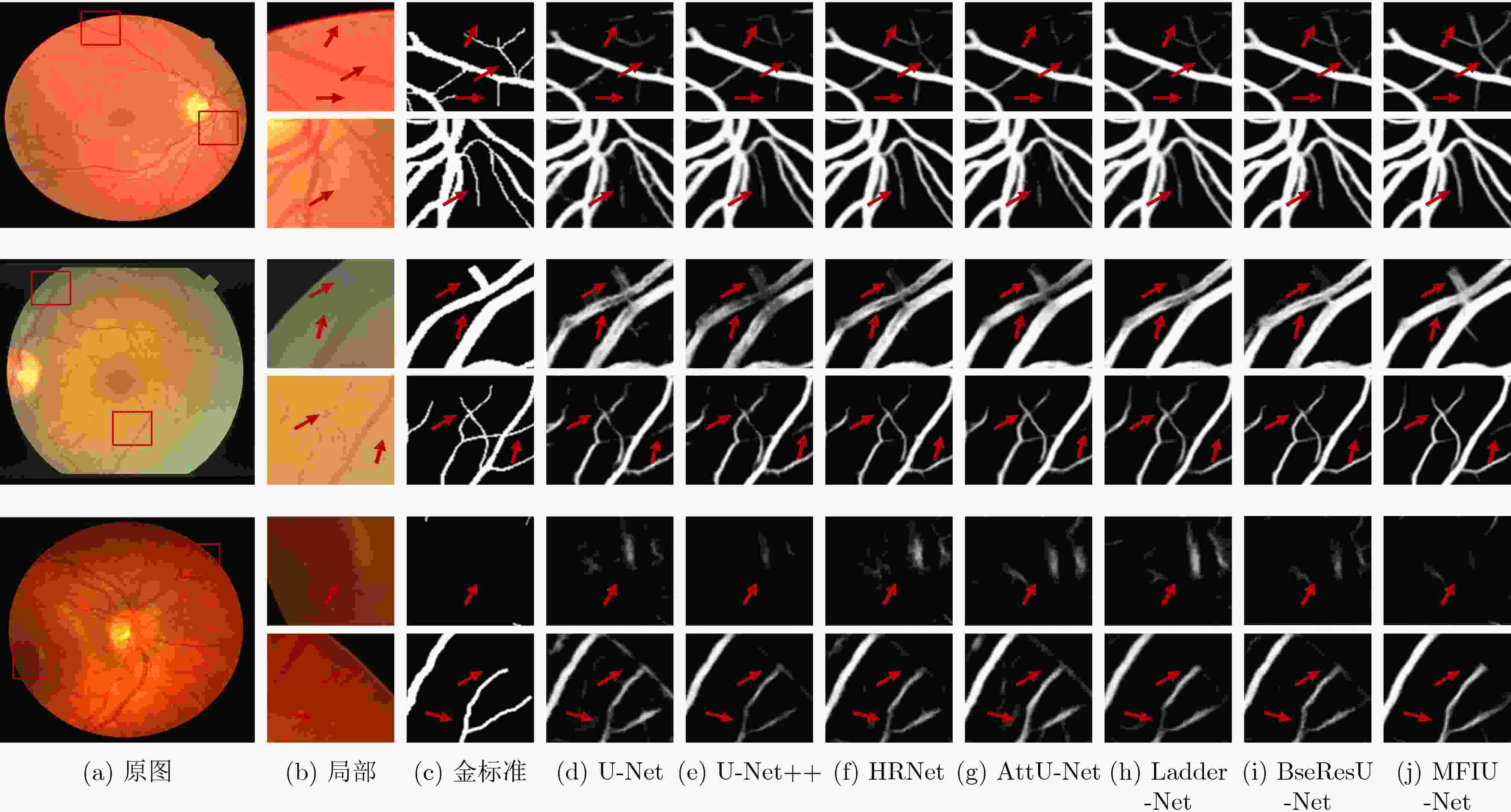

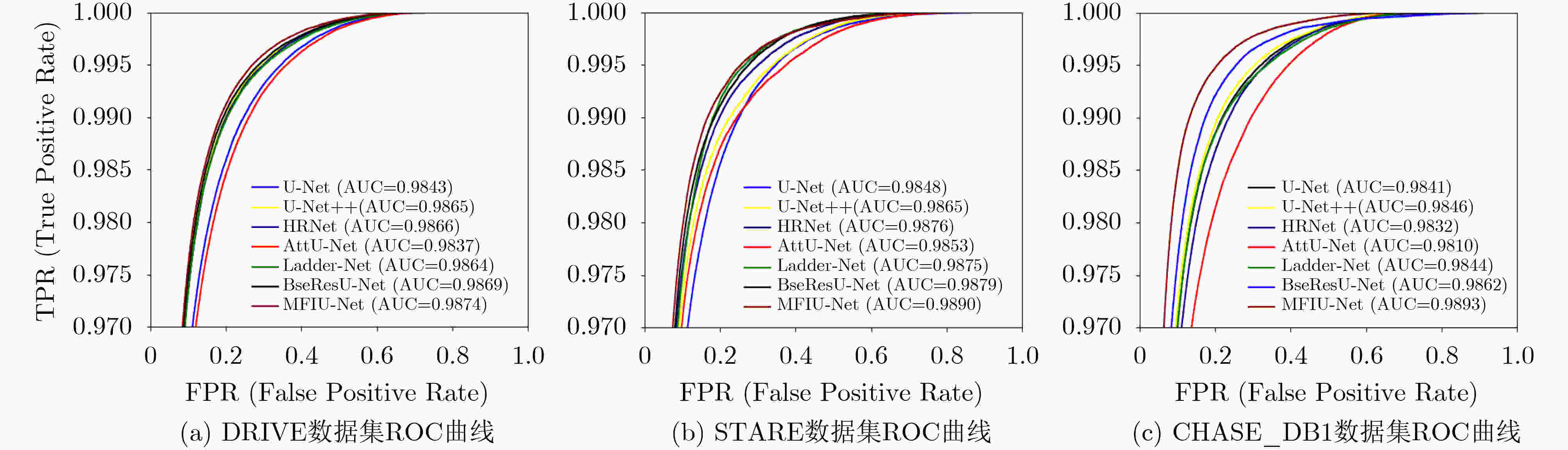

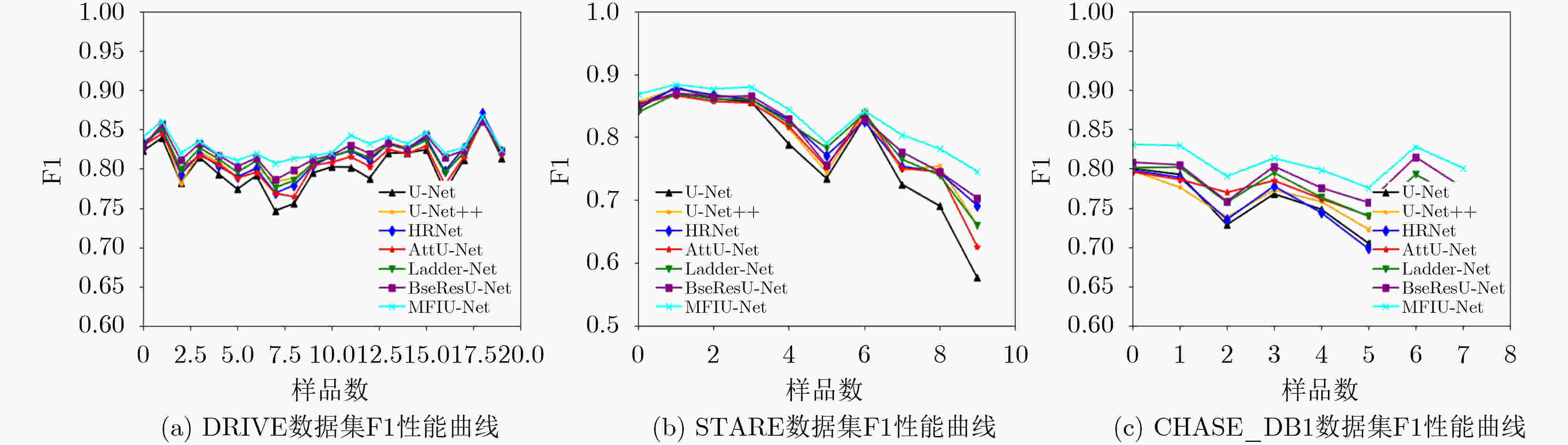


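Keywords:

- Retinal vessel segmentation

- U-shaped network

- Parallel spatial activation module

- Multi-scale dense feature pyramid module

- Dual loss function fusion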

Abstract: Retinal blood vessels have an irregular topology, complex morphology and widely varying scales. To segment them accurately, a Multi-resolution Fusion Input U-Net (MFIU-Net) is proposed. First, a coarse segmentation network whose backbone takes multi-resolution fused inputs is designed to generate high-resolution features. In this network, an improved ResNeSt replaces conventional convolution to refine the features at vessel boundaries, and a parallel spatial activation module is embedded in it to capture more semantic and spatial information. A second U-shaped network then performs fine segmentation, improving the model's ability to represent and recognize fine structures. Three further designs are used: (1) a multi-scale dense feature pyramid module at the bottom layer extracts multi-scale feature information of the vessels; (2) a feature adaptive module strengthens feature fusion between the coarse and fine networks and suppresses irrelevant background noise; (3) a detail-oriented fusion of two loss functions guides the network to focus on learning detail features. Experiments are carried out on three fundus datasets: Digital Retinal Images for Vessel Extraction (DRIVE), STructured Analysis of the REtina (STARE) and Child Heart and Health Study in England (CHASE_DB1). The accuracies are 97.00%, 97.47% and 97.48%, the sensitivities are 82.73%, 82.86% and 83.24%, and the Area Under the Curve (AUC) values are 98.74%, 98.90% and 98.93%, respectively. Overall, the model outperforms the existing algorithms it is compared with.
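
The abstract mentions a detail-oriented fusion of two loss functions, and Table 1 sweeps two weights $\lambda_1$ and $\lambda_2$ whose tested pairs each sum to 1. This section names neither component loss, and it does not state outright that $\lambda_1$ and $\lambda_2$ are the loss weights. The following is therefore only a minimal sketch under those assumptions: it fuses binary cross-entropy and soft Dice loss (a common pairing for thin, sparse vessel masks, chosen here for illustration and not taken from the paper) as $L = \lambda_1 L_{\mathrm{BCE}} + \lambda_2 L_{\mathrm{Dice}}$ over flattened per-pixel probabilities. The names `bce_loss`, `dice_loss` and `fused_loss` are hypothetical.

```python
import math
from typing import Sequence


def bce_loss(probs: Sequence[float], targets: Sequence[int], eps: float = 1e-7) -> float:
    """Mean pixel-wise binary cross-entropy; probs in [0, 1], targets in {0, 1}."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[int], smooth: float = 1.0) -> float:
    """Soft Dice loss 1 - (2*|P.G| + s) / (|P| + |G| + s), an overlap-based term."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def fused_loss(probs: Sequence[float], targets: Sequence[int],
               lam1: float = 0.7, lam2: float = 0.3) -> float:
    """Weighted fusion lam1 * L_a + lam2 * L_b.

    ASSUMPTION: L_a = BCE and L_b = soft Dice are illustrative stand-ins for the
    paper's unnamed loss pair. The defaults copy the Table 1 row with the highest
    Acc, Se, F1 and AUC (lambda_1 = 0.7, lambda_2 = 0.3).
    """
    return lam1 * bce_loss(probs, targets) + lam2 * dice_loss(probs, targets)


if __name__ == "__main__":
    probs = [0.9, 0.2, 0.6, 0.1]  # hypothetical per-pixel vessel probabilities
    targets = [1, 0, 1, 0]        # ground-truth vessel mask (1 = vessel)
    for lam1, lam2 in [(0.3, 0.7), (0.5, 0.5), (0.7, 0.3)]:
        print(lam1, lam2, round(fused_loss(probs, targets, lam1, lam2), 4))
```

Dice is computed over the foreground overlap, so it is less dominated by the many background pixels than the per-pixel BCE average; that complementarity is the usual motivation for fusing such a pair. In an actual training loop the same expression would be written with a tensor library so that it can be differentiated.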

Table 1 Comparative experimental results for different values of $\lambda_1$ and $\lambda_2$

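| $\lambda_1$ | $\lambda_2$ | Acc | Se | Sp | F1 | AUC |
|---|---|---|---|---|---|---|
| 0.3 | 0.7 | 0.9691 | 0.7982 | 0.9868 | 0.8249 | 0.9865 |
| 0.4 | 0.6 | 0.9689 | 0.8153 | 0.9836 | 0.8210 | 0.9850 |
| 0.5 | 0.5 | 0.9696 | 0.7866 | 0.9872 | 0.8194 | 0.9859 |
| 0.6 | 0.4 | 0.9688 | 0.8269 | 0.9825 | 0.8232 | 0.9860 |
| 0.7 | 0.3 | 0.9700 | 0.8273 | 0.9836 | 0.8279 | 0.9874 |

Table 2 Performance metrics of different algorithms (%)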

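| Method | DRIVE Acc | DRIVE Se | DRIVE Sp | DRIVE F1 | DRIVE AUC | STARE Acc | STARE Se | STARE Sp | STARE F1 | STARE AUC | CHASE_DB1 Acc | CHASE_DB1 Se | CHASE_DB1 Sp | CHASE_DB1 F1 | CHASE_DB1 AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ref. [23] | 96.84 | 75.43 | 98.89 | 80.68 | 98.43 | 97.24 | 73.33 | 99.22 | 80.29 | 98.48 | 97.19 | 70.78 | 98.96 | 76.03 | 98.41 |
| Ref. [24] | 96.95 | 79.00 | 98.68 | 81.96 | 98.65 | 97.35 | 76.80 | 99.06 | 81.63 | 98.65 | 97.21 | 72.74 | 98.86 | 76.67 | 98.46 |
| Ref. [25] | 96.97 | 78.22 | 98.78 | 81.92 | 98.66 | 97.39 | 77.61 | 99.03 | 82.01 | 98.76 | 97.19 | 72.04 | 98.88 | 76.36 | 98.32 |
| Ref. [18] | 96.85 | 78.15 | 98.65 | 81.30 | 98.37 | 97.25 | 77.18 | 98.91 | 81.12 | 98.53 | 97.08 | 78.00 | 98.36 | 77.11 | 98.10 |
| Ref. [26] | 96.94 | 79.50 | 98.62 | 82.00 | 98.64 | 97.33 | 78.70 | 98.87 | 81.86 | 98.75 | 97.22 | 77.35 | 98.56 | 77.83 | 98.44 |
| Ref. [27] | 96.95 | 81.59 | 98.43 | 82.41 | 98.69 | 97.34 | 78.92 | 98.87 | 81.98 | 98.79 | 97.23 | 81.16 | 98.30 | 78.64 | 98.62 |
| MFIU-Net | 97.00 | 82.73 | 98.36 | 82.79 | 98.74 | 97.47 | 82.86 | 98.68 | 83.37 | 98.90 | 97.48 | 83.24 | 98.43 | 80.57 | 98.93 |

Table 3 Comparison results on the DRIVE dataset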

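| Method | Acc | Se | Sp | F1 | AUC |
|---|---|---|---|---|---|
| Ref. [7] | 0.9568 | 0.8115 | 0.9780 | 0.8272 | 0.9810 |
| Ref. [8] | 0.9692 | 0.8027 | 0.9852 | 0.8206 | 0.9850 |
| Ref. [9] | 0.9581 | 0.7991 | 0.9813 | 0.8293 | 0.9823 |
| Ref. [10] | 0.9565 | 0.7853 | 0.9818 | 0.8203 | 0.9834 |
| Ref. [26] | 0.9561 | 0.7856 | 0.9810 | 0.8202 | 0.9793 |
| Ref. [28] | 0.9566 | 0.7963 | 0.9800 | 0.8237 | 0.9802 |
| Ref. [29] | 0.9573 | 0.7735 | 0.9838 | 0.8205 | 0.9816 |
| Ref. [30] | 0.9858 | 0.7941 | 0.9798 | 0.8216 | 0.9847 |
| Ref. [31] | 0.9581 | 0.8046 | 0.9805 | 0.8303 | 0.9827 |
| MFIU-Net | 0.9700 | 0.8273 | 0.9836 | 0.8279 | 0.9874 |

Table 4 Comparison results on the STARE dataset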

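| Method | Acc | Se | Sp | F1 | AUC |
|---|---|---|---|---|---|
| Ref. [8] | 0.9735 | 0.7951 | 0.9883 | 0.8216 | 0.9856 |
| Ref. [9] | 0.9673 | 0.8186 | 0.9844 | 0.8379 | 0.9881 |
| Ref. [10] | 0.9668 | 0.8002 | 0.9864 | 0.8289 | 0.9900 |
| Ref. [28] | 0.9641 | 0.7595 | 0.9878 | 0.8143 | 0.9832 |
| Ref. [29] | 0.9701 | 0.7715 | 0.9886 | 0.8146 | 0.9881 |
| Ref. [30] | 0.9640 | 0.7598 | 0.9878 | 0.8142 | 0.9824 |
| Ref. [31] | 0.9665 | 0.7914 | 0.9870 | 0.8276 | 0.9864 |
| MFIU-Net | 0.9747 | 0.8286 | 0.9868 | 0.8337 | 0.9890 |

Table 5 Comparison results on the CHASE_DB1 dataset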

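| Method | Acc | Se | Sp | F1 | AUC |
|---|---|---|---|---|---|
| Ref. [7] | 0.9664 | 0.8075 | 0.9841 | 0.8278 | 0.9872 |
| Ref. [9] | 0.9670 | 0.8239 | 0.9813 | 0.8191 | 0.9871 |
| Ref. [10] | 0.9667 | 0.8132 | 0.9840 | 0.8293 | 0.9893 |
| Ref. [26] | 0.9656 | 0.7978 | 0.9818 | 0.8031 | 0.9839 |
| Ref. [28] | 0.9610 | 0.8155 | 0.9752 | 0.7883 | 0.9804 |
| Ref. [29] | 0.9655 | 0.7970 | 0.9823 | 0.8073 | 0.9851 |
| Ref. [30] | 0.9608 | 0.8176 | 0.9704 | 0.7892 | 0.9865 |
| Ref. [31] | 0.9673 | 0.8402 | 0.9801 | 0.8248 | 0.9874 |
| MFIU-Net | 0.9748 | 0.8324 | 0.9843 | 0.8057 | 0.9893 |

Table 6 Ablation study (%)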

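| Method | DRIVE Acc | DRIVE Se | DRIVE Sp | DRIVE F1 | DRIVE AUC | STARE Acc | STARE Se | STARE Sp | STARE F1 | STARE AUC | CHASE_DB1 Acc | CHASE_DB1 Se | CHASE_DB1 Sp | CHASE_DB1 F1 | CHASE_DB1 AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 | 96.84 | 75.43 | 98.89 | 80.68 | 98.40 | 97.24 | 73.33 | 99.22 | 80.29 | 98.53 | 97.19 | 70.78 | 98.96 | 76.03 | 98.41 |
| M2 | 96.89 | 77.88 | 98.72 | 81.44 | 98.56 | 97.35 | 75.11 | 99.20 | 81.30 | 98.75 | 97.24 | 75.26 | 98.72 | 77.47 | 98.43 |
| M3 | 96.91 | 80.09 | 98.57 | 82.13 | 98.58 | 97.38 | 77.90 | 98.99 | 81.98 | 98.69 | 97.30 | 79.76 | 98.48 | 78.82 | 98.65 |
| M4 | 96.95 | 80.43 | 98.53 | 82.18 | 98.61 | 97.40 | 80.88 | 98.77 | 82.66 | 98.85 | 97.41 | 81.43 | 98.49 | 79.89 | 98.83 |
| M5 | 96.99 | 81.69 | 98.47 | 82.66 | 98.67 | 97.43 | 81.52 | 98.74 | 82.91 | 98.88 | 97.45 | 81.84 | 98.50 | 80.19 | 98.87 |
| M6 | 97.00 | 82.73 | 98.36 | 82.79 | 98.74 | 97.47 | 82.86 | 98.68 | 83.37 | 98.90 | 97.48 | 83.24 | 98.43 | 80.57 | 98.93 |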
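
Tables 1 to 6 report accuracy (Acc), sensitivity (Se), specificity (Sp), F1 and AUC (as fractions in Tables 1, 3, 4 and 5, and as percentages in Tables 2 and 6). This section does not give the formulas, so the sketch below assumes the standard pixel-wise definitions: Acc = (TP+TN)/(TP+FP+TN+FN), Se = TP/(TP+FN), Sp = TN/(TN+FP), F1 is the harmonic mean of precision and Se, and AUC is the area under the ROC curve computed from the unthresholded probability map. Whether the paper evaluates only inside the field-of-view mask, and which threshold it uses to binarize the output, are not stated here; the sketch takes flat lists and leaves both choices to the caller. The function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(pred: Sequence[int], gt: Sequence[int]) -> Tuple[int, int, int, int]:
    """Count TP, FP, TN, FN between a binarized prediction and the ground-truth mask."""
    tp = fp = tn = fn = 0
    for p, g in zip(pred, gt):
        if p and g:
            tp += 1
        elif p:
            fp += 1
        elif g:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def _safe_div(num: float, den: float) -> float:
    return num / den if den else 0.0


def pixel_metrics(pred: Sequence[int], gt: Sequence[int]) -> Dict[str, float]:
    """Acc, Se (sensitivity/recall), Sp (specificity) and F1 from pixel counts."""
    tp, fp, tn, fn = confusion_counts(pred, gt)
    se = _safe_div(tp, tp + fn)
    precision = _safe_div(tp, tp + fp)
    return {
        "Acc": _safe_div(tp + tn, tp + fp + tn + fn),
        "Se": se,
        "Sp": _safe_div(tn, tn + fp),
        "F1": _safe_div(2 * precision * se, precision + se),
    }


def roc_auc(scores: Sequence[float], gt: Sequence[int]) -> float:
    """ROC AUC via the Mann-Whitney rank statistic; tied scores share their average rank."""
    pairs = sorted(zip(scores, gt), key=lambda x: x[0])
    n = len(pairs)
    n_pos = sum(1 for _, g in pairs if g)
    n_neg = n - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs both vessel and background pixels")
    rank_sum_pos = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pairs[j + 1][0] == pairs[i][0]:
            j += 1  # extend the block of tied scores
        avg_rank = (i + j) / 2.0 + 1.0  # 1-based average rank of the tied block
        rank_sum_pos += avg_rank * sum(1 for k in range(i, j + 1) if pairs[k][1])
        i = j + 1
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


if __name__ == "__main__":
    scores = [0.92, 0.35, 0.10, 0.48, 0.81, 0.05]  # hypothetical vessel probabilities
    gt = [1, 0, 0, 1, 1, 0]                         # hypothetical ground-truth mask
    pred = [1 if s >= 0.5 else 0 for s in scores]   # 0.5 threshold is an assumption
    print(pixel_metrics(pred, gt), roc_auc(scores, gt))
```

The rank-based AUC equals the probability that a randomly chosen vessel pixel scores higher than a randomly chosen background pixel (ties counted as one half), and sorting keeps it at O(n log n), which matters for full fundus images with hundreds of thousands of pixels.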

References:

[1] WANG Juan, ZHAO Jianyong, and TONG Long. Analysis of the influencing factors of peripheral neuropathy in elderly patients with type 2 diabetes[J]. China Journal of Chronic Disease Prevention and Control, 2019, 27(1): 52–54. doi: 10.16386/j.cjpccd.issn.1004-6194.2019.01.014

[2] YU Linfang, QIN Zhen, ZHUANG Tianming, et al. A framework for hierarchical division of retinal vascular networks[J]. Neurocomputing, 2020, 392: 221–232. doi: 10.1016/j.neucom.2018.11.113

[3] KHAWAJA A, KHAN T M, KHAN M A U, et al. A multi-scale directional line detector for retinal vessel segmentation[J]. Sensors, 2019, 19(22): 4949. doi: 10.3390/s19224949

[4] KHAWAJA A, KHAN T M, NAVEED K, et al. An improved retinal vessel segmentation framework using Frangi filter coupled with the probabilistic patch based denoiser[J]. IEEE Access, 2019, 7: 164344–164361. doi: 10.1109/access.2019.2953259

[5] TIAN Feng, LI Ying, and WANG Jing. Retinal blood vessel segmentation based on multi-scale wavelet transform fusion[J]. Acta Optica Sinica, 2021, 41(4): 0410001. doi: 10.3788/AOS202141.0410001

[6] LIANG Liming, SHENG Xiaoqi, LAN Zhimin, et al. Retinal vessels segmentation algorithm based on multi-scale filtering[J]. Computer Applications and Software, 2019, 36(10): 190–196,204. doi: 10.3969/j.issn.1000-386x.2019.10.033

[7] YANG Xin, LI Zhiqiang, GUO Yingqing, et al. DCU-Net: A deformable convolutional neural network based on cascade U-net for retinal vessel segmentation[J]. Multimedia Tools and Applications, 2022, 81(11): 15593–15607. doi: 10.1007/s11042-022-12418-w

[8] CHEN Yixuan, DONG Yuhan, ZHANG Yi, et al. RNA-Net: Residual nonlocal attention network for retinal vessel segmentation[C]. 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, Canada, 2020: 1560–1565.

[9] WANG Dongyi, HAYTHAM A, POTTENBURGH J, et al. Hard attention net for automatic retinal vessel segmentation[J]. IEEE Journal of Biomedical and Health Informatics, 2020, 24(12): 3384–3396. doi: 10.1109/jbhi.2020.3002985

[10] ZHANG Yuan, HE Miao, CHEN Zhineng, et al. Bridge-Net: Context-involved U-net with patch-based loss weight mapping for retinal blood vessel segmentation[J]. Expert Systems with Applications, 2022, 195: 116526. doi: 10.1016/j.eswa.2022.116526

[11] IBTEHAZ N and RAHMAN M S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation[J]. Neural Networks, 2020, 121: 74–87. doi: 10.1016/j.neunet.2019.08.025

[12] ZHANG Hang, WU Chongruo, ZHANG Zhongyue, et al. ResNeSt: Split-attention networks[EB/OL]. https://arxiv.org/abs/2004.08955, 2022.

[13] LI Di, DHARMAWAN D A, NG B P, et al. Residual U-Net for retinal vessel segmentation[C]. 2019 IEEE International Conference on Image Processing (ICIP), Taipei, China, 2019: 1425–1429.

[14] ZHOU Tianyan, ZHAO Yong, and WU Jian. ResNeXt and Res2Net structures for speaker verification[C]. 2021 IEEE Spoken Language Technology Workshop (SLT), Shenzhen, China, 2021: 301–307.

[15] HU Jie, SHEN Li, and SUN Gang. Squeeze-and-excitation networks[C]. The 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7132–7141.

[16] WANG Chang, ZHAO Zongya, REN Qiongqiong, et al. Dense U-net based on patch-based learning for retinal vessel segmentation[J]. Entropy, 2019, 21(2): 168. doi: 10.3390/e21020168

[17] HU Peijun, LI Xiang, TIAN Yu, et al. Automatic pancreas segmentation in CT images with distance-based saliency-aware DenseASPP network[J]. IEEE Journal of Biomedical and Health Informatics, 2021, 25(5): 1601–1611. doi: 10.1109/jbhi.2020.3023462

[18] ABRAHAM N and KHAN N M. A novel focal Tversky loss function with improved attention U-Net for lesion segmentation[C]. 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 2019: 683–687.

[19] ZHANG Guokai, SHEN Xiaoang, CHEN Sirui, et al. DSM: A deep supervised multi-scale network learning for skin cancer segmentation[J]. IEEE Access, 2019, 7: 140936–140945. doi: 10.1109/access.2019.2943628

[20] STAAL J, ABRAMOFF M D, NIEMEIJER M, et al. Ridge-based vessel segmentation in color images of the retina[J]. IEEE Transactions on Medical Imaging, 2004, 23(4): 501–509. doi: 10.1109/tmi.2004.825627

[21] HOOVER A D, KOUZNETSOVA V, and GOLDBAUM M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response[J]. IEEE Transactions on Medical Imaging, 2000, 19(3): 203–210. doi: 10.1109/42.845178

[22] OWEN C G, RUDNICKA A R, MULLEN R, et al. Measuring retinal vessel tortuosity in 10-year-old children: Validation of the computer-assisted image analysis of the retina (CAIAR) program[J]. Investigative Ophthalmology & Visual Science, 2009, 50(5): 2004–2010. doi: 10.1167/iovs.08-3018

[23] ALOM Z, HASAN M, YAKOPCIC C, et al. Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation[EB/OL]. https://arxiv.org/abs/1802.06955, 2018.

[24] ZHOU Zongwei, SIDDIQUEE M R, TAJBAKHSH N, et al. UNet++: Redesigning skip connections to exploit multiscale features in image segmentation[J]. IEEE Transactions on Medical Imaging, 2020, 39(6): 1856–1867. doi: 10.1109/tmi.2019.2959609

[25] YU Changqian, XIAO Bin, GAO Changxin, et al. Lite-HRNet: A lightweight high-resolution network[C]. The 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, USA, 2021: 10435–10445.

[26] ZHUANG Juntang. LadderNet: Multi-path networks based on U-Net for medical image segmentation[EB/OL]. https://arxiv.org/abs/1810.07810, 2018.

[27] LI Di and RAHARDJA S. BSEResU-Net: An attention-based before-activation residual U-Net for retinal vessel segmentation[J]. Computer Methods and Programs in Biomedicine, 2021, 205: 106070. doi: 10.1016/j.cmpb.2021.106070

[28] JIN Qiangguo, MENG Zhaopeng, PHAM T D, et al. DUNet: A deformable network for retinal vessel segmentation[J]. Knowledge-Based Systems, 2019, 178: 149–162. doi: 10.1016/j.knosys.2019.04.025

[29] LI Xiang, JIANG Yuchen, LI Minglei, et al. Lightweight attention convolutional neural network for retinal vessel image segmentation[J]. IEEE Transactions on Industrial Informatics, 2021, 17(3): 1958–1967. doi: 10.1109/tii.2020.2993842

[30] LV Yan, MA Hui, LI Jianian, et al. Attention guided U-Net with atrous convolution for accurate retinal vessels segmentation[J]. IEEE Access, 2020, 8: 32826–32839. doi: 10.1109/access.2020.2974027

[31] YUAN Yuchen, ZHANG Lei, WANG Lituan, et al. Multi-level attention network for retinal vessel segmentation[J]. IEEE Journal of Biomedical and Health Informatics, 2022, 26(1): 312–323. doi: 10.1109/jbhi.2021.3089201
