Crowded Pedestrian Detection Method Combining Anchor Free and Anchor Base Algorithm

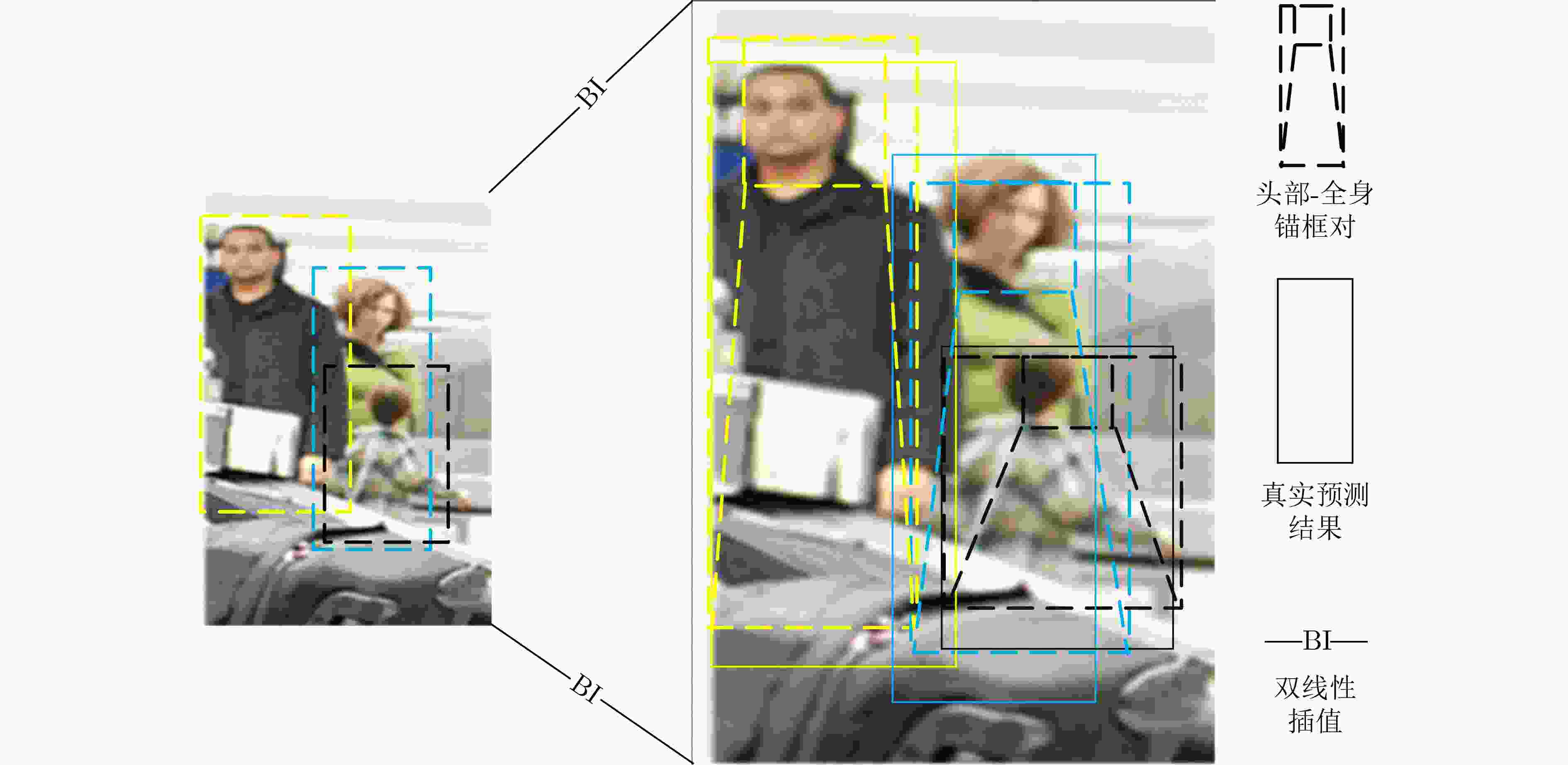

Abstract: Owing to their relatively high accuracy, Anchor base algorithms have become a research focus for pedestrian detection in crowded scenes. However, they require manually designed anchor boxes, which limits their generality. In addition, applying a single Non-Maximum Suppression (NMS) threshold to crowd regions of different densities leads to a certain degree of missed and false detections. To address these problems, a dual-head detection algorithm combining Anchor free and Anchor base detectors is proposed. Specifically, an Anchor free detector first performs coarse detection on the image; the coarse detections are automatically clustered to generate anchor boxes, which are fed back to the Region Proposal Network (RPN) module in place of the manually designed anchors of the RPN stage. Statistics of the coarse detection results also yield the crowd density of different regions. A pedestrian head and whole-body mutual supervision detection framework is designed, in which the head detections and the whole-body detections supervise each other, effectively reducing the number of suppressed and missed target instances. A novel NMS algorithm is proposed that adaptively selects a suitable threshold for crowd regions of different densities, thereby minimizing the false detections caused by NMS. The proposed detector is validated experimentally on the CrowdHuman and CityPersons datasets and achieves performance comparable to current state-of-the-art pedestrian detection methods.

Key words:

- Pedestrian detection /

- Anchor base /

- Anchor free /

- Non-Maximum Suppression (NMS)

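The abstract states that the coarse Anchor free detections are clustered automatically to produce the anchors used by the RPN, but it does not name the clustering method. The following is a minimal sketch of one plausible reading, assuming plain k-means on box width and height with Euclidean distance. The function name `cluster_anchors`, the number of clusters `k`, and the distance metric are illustrative assumptions, not details from the paper.

```python
import numpy as np


def cluster_anchors(boxes, k=3, iters=50, seed=0):
    """Cluster coarse-detection boxes (x1, y1, x2, y2) into k anchor shapes.

    Assumption: plain k-means on (width, height); the paper's abstract
    does not specify the clustering algorithm, metric, or k.
    """
    boxes = np.asarray(boxes, dtype=float)
    wh = np.stack([boxes[:, 2] - boxes[:, 0], boxes[:, 3] - boxes[:, 1]], axis=1)

    # Initialise the centres from k distinct boxes.
    rng = np.random.default_rng(seed)
    centers = wh[rng.choice(len(wh), size=k, replace=False)]

    for _ in range(iters):
        # Assign every box to its nearest centre in (w, h) space.
        dists = np.linalg.norm(wh[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Recompute each centre; keep the old one if its cluster is empty.
        new_centers = np.array([
            wh[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers

    # Sort by area so the returned anchor order is stable.
    return centers[np.argsort(centers.prod(axis=1))]


if __name__ == "__main__":
    coarse = [[0, 0, 10, 20], [0, 0, 12, 22], [5, 5, 15, 27],
              [0, 0, 50, 100], [0, 0, 52, 104], [10, 10, 58, 110]]
    print(cluster_anchors(coarse, k=2))
```

Each returned row is an anchor (width, height) pair that could replace a hand-chosen anchor shape. An IoU-based distance is a common alternative to the Euclidean distance used here.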
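Algorithm 1 Stripping-NMS

Input: prediction scores $S = \{s_1, s_2, \cdots, s_n\}$; full-body predicted boxes $B_f = \{b_{f_1}, b_{f_2}, \cdots, b_{f_m}\}$; head predicted boxes $B_h = \{b_{h_1}, b_{h_2}, \cdots, b_{h_n}\}$; fixed boxes $B_a = \{b_{a_1}, b_{a_2}, \cdots, b_{a_i}\}$; density regions $A = \{A_0, A_1, A_2, A_3, A_4\}$; NMS thresholds $N_D = [0.5; 0.6; 0.65; 0.7; 0.8]$; $B = \{b_1, b_2, \cdots, b_i\}$. $b_m$ denotes the box with the maximum score, $M$ the set of maximum-score predicted boxes, and $R$ the final set of predicted boxes.

- begin: $R \leftarrow B_a$
- while $B \neq \varnothing$ do
  - $m \leftarrow \arg\max S$; $M \leftarrow b_m$
  - $R \leftarrow R \cup M$; $B \leftarrow B - M$
  - for $b_i$ in $B$ do
    - if $\mathrm{IoU}(b_{a_i}, b_i) \geq 0.9$ then $B \leftarrow B - b_i$; $S \leftarrow S - s_i$
  - end
  - for $A_i, N_{D_i}$ in $A, N_D$ do
    - if $A_i \supset b_i$ then
      - if $\mathrm{IoU}(b_m, b_{f_i/h_i}) \geq N_{D_i}$ then $B \leftarrow B - b_{f_i}\,(b_{h_i})$; $s_i \leftarrow s_i\,(1 - \mathrm{IoU}(M, b_i))$
      - else $B \leftarrow B - b_{f_i}\,(b_{h_i})$; $S \leftarrow S - s_i$
    - end
  - end
- end
- return $R$, $S$

Table 1 CityPersons training set and CrowdHuman training set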

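| Dataset | Images | Persons | Persons per image | Valid pedestrians |
| --- | --- | --- | --- | --- |
| CityPersons | 2975 | 19238 | 6.47 | 19238 |
| CrowdHuman | 15000 | 339565 | 22.64 | 339565 |

Table 2 Degree of pedestrian occlusion in the CityPersons and CrowdHuman datasets [16]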

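| Dataset | IoU>0.5 | IoU>0.6 | IoU>0.7 | IoU>0.8 | IoU>0.9 |
| --- | --- | --- | --- | --- | --- |
| CityPersons | 0.32 | 0.17 | 0.08 | 0.02 | 0.00 |
| CrowdHuman | 2.40 | 1.01 | 0.33 | 0.07 | 0.01 |

Table 3 Ablation results on the CityPersons dataset (%)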

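| Method | Cross network | Mutual supervision detector | Stripping-NMS | Reasonable (MR$^{-2}$) | Heavy (MR$^{-2}$) |
| --- | --- | --- | --- | --- | --- |
| baseline | | | | 11.74 | 45.24 |
| Ours | √ √ | √ | √ | 11.48, 10.76 | 43.64, 42.44 |
| Ours | √ | √ | √ | 10.48 | 40.81 |

Table 4 Ablation results on the CrowdHuman dataset (%)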

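| Method | New first stage | Mutual supervision detector | Stripping-NMS | MR$^{-2}$ | AP | Recall |
| --- | --- | --- | --- | --- | --- | --- |
| baseline | | | | 42.73 | 85.88 | 80.74 |
| Ours | √ √ | √ | √ | 42.38, 41.88 | 88.63, 89.44 | 83.04, 83.41 |
| Ours | √ | √ | √ | 41.04 | 91.25 | 84.26 |

Table 5 Performance comparison of different methods on the CityPersons dataset (%)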

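| Method | Backbone | Input scale | Reasonable (MR$^{-2}$) | Heavy (MR$^{-2}$) |
| --- | --- | --- | --- | --- |
| OR-CNN [20] | VGG-16 | 1× | 12.80 | 55.70 |
| MGAN [21] | VGG-16 | 1× | 11.50 | 51.70 |
| Adaptive-NMS [9] | VGG-16 | 1× | 12.90 | 56.40 |
| R$^2$NMS [10] | VGG-16 | 1× | 11.10 | 53.30 |
| EGCL [22] | VGG-16 | 1× | 11.50 | 50.00 |
| RepLoss [6] | ResNet-50 | 1× | 13.20 | 56.90 |
| CrowdDet [23] | ResNet-50 | 1× | 12.10 | 40.00 |
| Ref. [24] | ResNet-50 | – | 11.60 | 47.30 |
| RepLoss [6] | ResNet-50 | 1.3× | 11.60 | 55.30 |
| CrowdDet [23] | ResNet-50 | 1.3× | 10.70 | 38.00 |
| NOH-NMS [25] | ResNet-50 | 1.3× | 10.80 | – |
| baseline | ResNet-50 | 1.3× | 11.74 | 45.24 |
| Ours | ResNet-50 | 1.3× | 10.48 | 40.81 |

Table 6 Performance comparison of different methods on the CrowdHuman dataset (%)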

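| Method | Backbone | MR$^{-2}$ | AP | Recall |
| --- | --- | --- | --- | --- |
| Faster R-CNN [11] | VGG-16 | 51.21 | 85.09 | 77.24 |
| Adaptive-NMS [9] | VGG-16 | 49.73 | 84.71 | 91.27 |
| JointDet [7] | ResNet-50 | 46.50 | – | – |
| R$^2$NMS [10] | ResNet-50 | 43.35 | 89.29 | 93.33 |
| CrowdDet [23] | ResNet-50 | 41.40 | 90.70 | 83.70 |
| DETR [26] | ResNet-50 | 45.57 | 89.54 | 94.00 |
| NOH-NMS [25] | ResNet-50 | 43.90 | 89.00 | 92.90 |
| Ref. [8] | ResNet-50 | 50.16 | 87.31 | FPN |
| V2F-Net [27] | ResNet-50 | 42.28 | 91.03 | 84.20 |
| baseline | ResNet-50 | 42.73 | 85.88 | 80.74 |
| Ours | ResNet-50 | 41.04 | 91.25 | 84.26 |
| Gain over baseline | – | –1.69 | +5.37 | +3.52 |

Table 7 Performance when combined with DetNet and Cascade R-CNN (%)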

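| Method | Backbone | MR$^{-2}$ | AP | Recall |
| --- | --- | --- | --- | --- |
| Ours | DetNet-59 | 39.94 | 91.23 | 93.05 |
| Cascade R-CNN + Ours | DetNet-59 | 38.02 | 91.75 | 93.14 |

Table 8 Generalization performance of the proposed method (%)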

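| Method | Training | Test | Reasonable (MR$^{-2}$) | Heavy (MR$^{-2}$) |
| --- | --- | --- | --- | --- |
| Ref. [8] | CrowdHuman | CityPersons | 10.10 | 50.20 |
| Ours | CrowdHuman (h&f) | CityPersons | | 40.23 |
| Ours | CrowdHuman+CityPersons (v&f) | CityPersons | 8.84 | 39.27 |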

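The core idea of Algorithm 1, choosing the suppression threshold from the density level of the region that contains each candidate box, can be sketched as follows. This is a simplified illustration under stated assumptions and not the authors' implementation: it uses hard suppression only, and it omits the fixed boxes $B_a$, the head and full-body pairing, and the score decay. The threshold list is the $N_D$ given in Algorithm 1; the function names and the `density_levels` input (one level 0 to 4 per box) are hypothetical.

```python
import numpy as np

# Per-density-level IoU thresholds N_D, as listed in Algorithm 1.
ND = (0.5, 0.6, 0.65, 0.7, 0.8)


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def density_adaptive_nms(boxes, scores, density_levels, thresholds=ND):
    """Greedy NMS whose IoU threshold depends on each candidate's density level.

    Simplification (assumption): hard suppression only; the fixed boxes,
    head/full-body pairing and score decay of Algorithm 1 are left out.
    Returns the indices of the kept boxes in descending score order.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    levels = np.asarray(density_levels, dtype=int)
    thresholds = np.asarray(thresholds, dtype=float)

    order = np.argsort(-scores, kind="stable")
    keep = []
    while order.size > 0:
        m = order[0]            # current highest-scoring box
        keep.append(int(m))
        rest = order[1:]
        if rest.size == 0:
            break
        overlaps = iou(boxes[m], boxes[rest])
        # Denser regions get a higher threshold, so fewer boxes are suppressed.
        per_box_thr = thresholds[levels[rest]]
        order = rest[overlaps < per_box_thr]
    return keep


if __name__ == "__main__":
    b = [[0, 0, 10, 10], [0, 0, 10, 16], [20, 20, 30, 30]]
    s = [0.9, 0.8, 0.7]
    print(density_adaptive_nms(b, s, [0, 0, 0]))  # sparse region
    print(density_adaptive_nms(b, s, [4, 4, 0]))  # dense region
```

In the usage example the first two boxes overlap with an IoU of 0.625, so the second one is suppressed at the sparse-region threshold of 0.5 but retained at the dense-region threshold of 0.8.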
[1] YE Mang, SHEN Jianbing, LIN Gaojie, et al. Deep learning for person Re-identification: A survey and outlook[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(6): 2872–2893. doi: 10.1109/TPAMI.2021.3054775

[2] MARVASTI-ZADEH S M, CHENG Li, GHANEI-YAKHDAN H, et al. Deep learning for visual tracking: A comprehensive survey[J]. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(5): 3943–3968. doi: 10.1109/TITS.2020.3046478

[3] BEN Xianye, XU Sen, and WANG Kejun. Review on pedestrian gait feature expression and recognition[J]. Pattern Recognition and Artificial Intelligence, 2012, 25(1): 71–81. doi: 10.3969/j.issn.1003-6059.2012.01.010

[4] ZOU Yiqun, XIAO Zhihong, TANG Xiafei, et al. Anchor-free scale adaptive pedestrian detection algorithm[J]. Control and Decision, 2021, 36(2): 295–302. doi: 10.13195/j.kzyjc.2020.0124

[5] ZHOU Chunluan and YUAN Junsong. Bi-box regression for pedestrian detection and occlusion estimation[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 135–151.

[6] WANG Xinlong, XIAO Tete, JIANG Yuning, et al. Repulsion loss: Detecting pedestrians in a crowd[C]. The 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7774–7783.

[7] CHI Cheng, ZHANG Shifeng, XING Junliang, et al. Relational learning for joint head and human detection[C]. The Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, USA, 2020: 10647–10654.

[8] CHEN Yong, XIE Wenyang, LIU Huanlin, et al. Multi-feature fusion pedestrian detection combining head and overall information[J]. Journal of Electronics & Information Technology, 2022, 44(4): 1453–1460. doi: 10.11999/JEIT210268

[9] LIU Songtao, HUANG Di, and WANG Yunhong. Adaptive NMS: Refining pedestrian detection in a crowd[C]. The 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 6452–6461.

[10] HUANG Xin, GE Zheng, JIE Zequn, et al. NMS by representative region: Towards crowded pedestrian detection by proposal pairing[C]. The 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 10747–10756.

[11] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149. doi: 10.1109/TPAMI.2016.2577031

[12] ZHOU Xingyi, WANG Dequan, and KRÄHENBÜHL P. Objects as points[EB/OL]. https://arxiv.org/abs/1904.07850, 2019.

[13] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(2): 318–327. doi: 10.1109/TPAMI.2018.2858826

[14] ZHENG Zhaohui, WANG Ping, REN Dongwei, et al. Enhancing geometric factors in model learning and inference for object detection and instance segmentation[J]. IEEE Transactions on Cybernetics, 2022, 52(8): 8574–8586. doi: 10.1109/TCYB.2021.3095305

[15] BODLA N, SINGH B, CHELLAPPA R, et al. Soft-NMS--improving object detection with one line of code[C]. The 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 5562–5570.

[16] SHAO Shuai, ZHAO Zijian, LI Boxun, et al. CrowdHuman: A benchmark for detecting human in a crowd[EB/OL]. https://arxiv.org/abs/1805.00123, 2018.

[17] ZHANG Shanshan, BENENSON R, and SCHIELE B. CityPersons: A diverse dataset for pedestrian detection[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4457–4465.

[18] SHAO Xiaotao, WANG Qing, YANG Wei, et al. Multi-scale feature pyramid network: A heavily occluded pedestrian detection network based on ResNet[J]. Sensors, 2021, 21(5): 1820. doi: 10.3390/s21051820

[19] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]. The 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 936–944.

[20] ZHANG Shifeng, WEN Longyin, BIAN Xiaobian, et al. Occlusion-aware R-CNN: Detecting pedestrians in a crowd[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 657–674.

[21] PANG Yanwei, XIE Jin, KHAN M H, et al. Mask-guided attention network for occluded pedestrian detection[C]. The 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 4966–4974.

[22] LIN Zebin, PEI Wenjie, CHEN Fanglin, et al. Pedestrian detection by exemplar-guided contrastive learning[J]. IEEE Transactions on Image Processing, 2023, 32: 2003–2016. doi: 10.1109/TIP.2022.3189803

[23] CHU Xuangeng, ZHENG Anlin, ZHANG Xiangyu, et al. Detection in crowded scenes: One proposal, multiple predictions[C]. The 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 12211–12220.

[24] CHEN Yong, LIU Xi, and LIU Huanlin. Occluded pedestrian detection based on joint attention mechanism of channel-wise and spatial information[J]. Journal of Electronics & Information Technology, 2020, 42(6): 1486–1493. doi: 10.11999/JEIT190606

[25] ZHOU Penghao, ZHOU Chong, PENG Pai, et al. NOH-NMS: Improving pedestrian detection by nearby objects hallucination[C]. The 28th ACM International Conference on Multimedia, Seattle, USA, 2020: 1967–1975.

[26] LIN M, LI Chuming, BU Xingyuan, et al. DETR for crowd pedestrian detection[EB/OL]. https://arxiv.org/abs/2012.06785, 2020.

[27] SHANG Mingyang, XIANG Dawei, WANG Zhicheng, et al. V2F-Net: Explicit decomposition of occluded pedestrian detection[EB/OL]. https://arxiv.org/abs/2104.03106, 2021.

[28] LI Zeming, PENG Chao, YU Gang, et al. DetNet: A backbone network for object detection[EB/OL]. https://arxiv.org/abs/1804.06215, 2018.

[29] CAI Zhaowei and VASCONCELOS N. Cascade R-CNN: Delving into high quality object detection[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 6154–6162.