Transferable Adversarial Example Generation Method For Face Verification


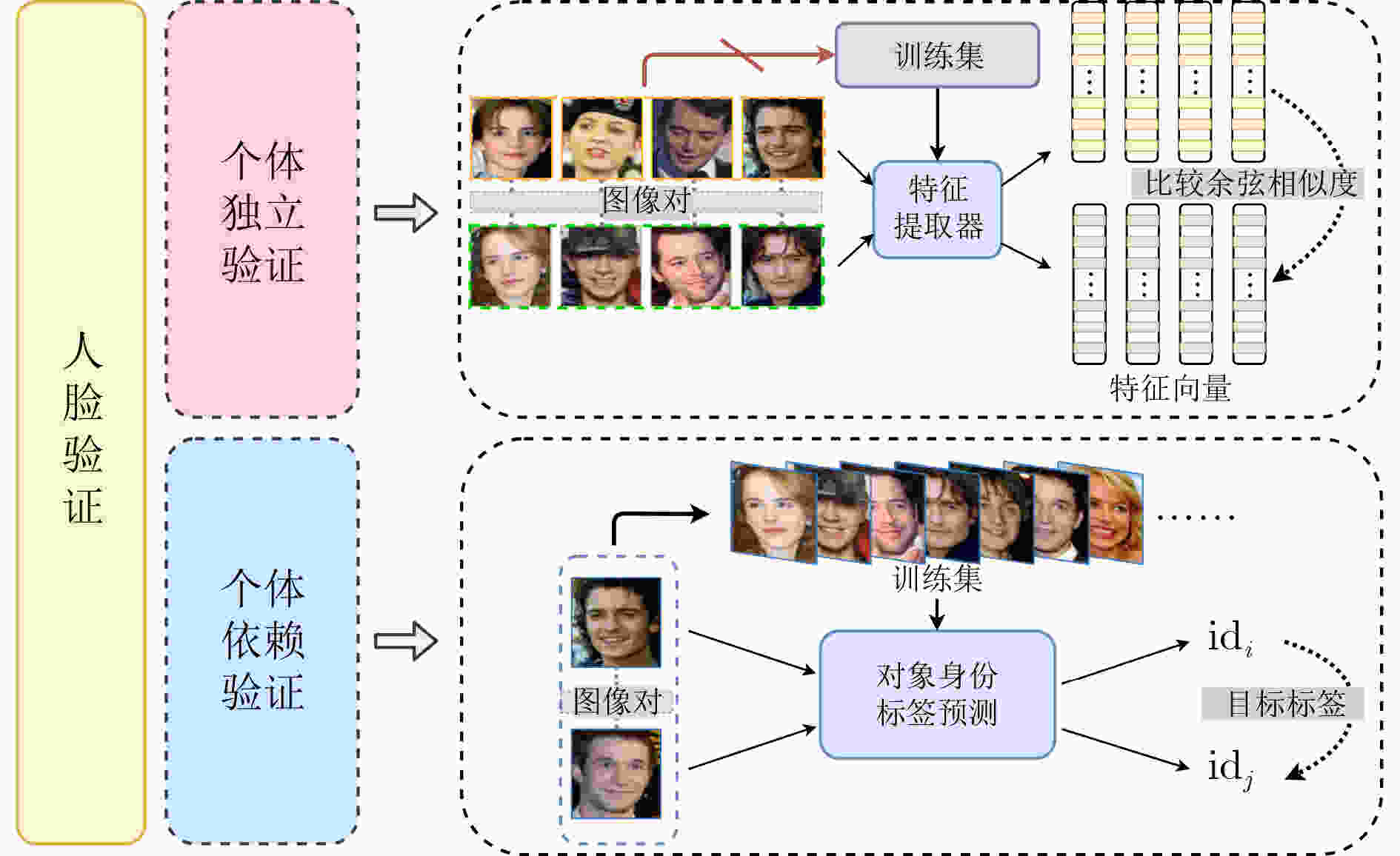

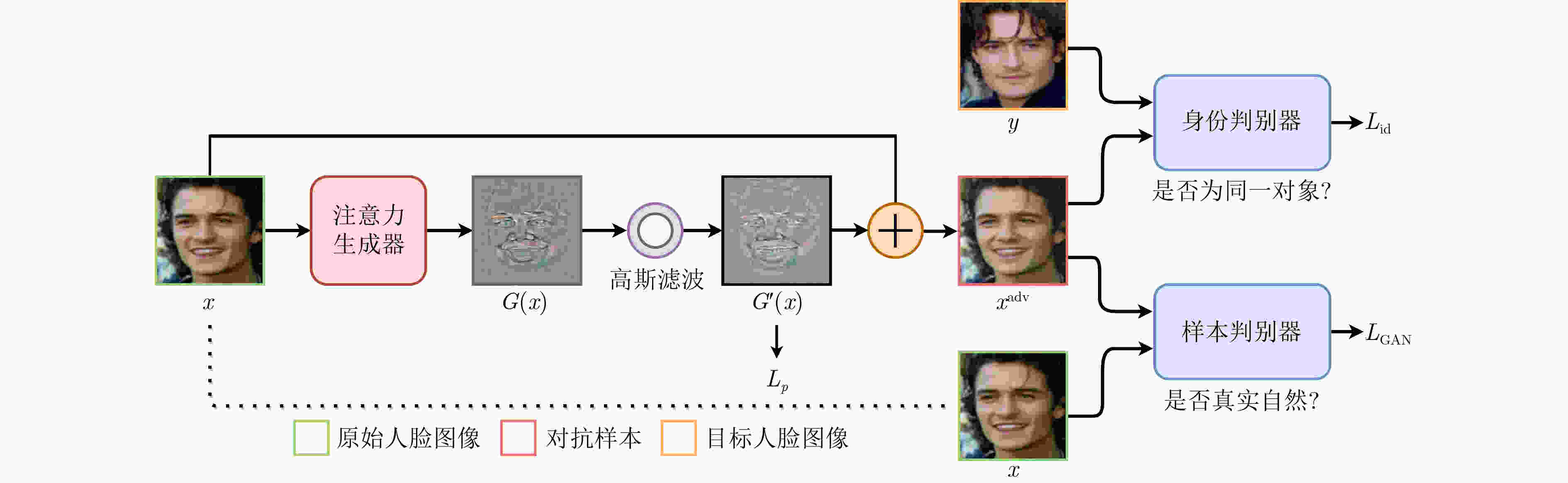

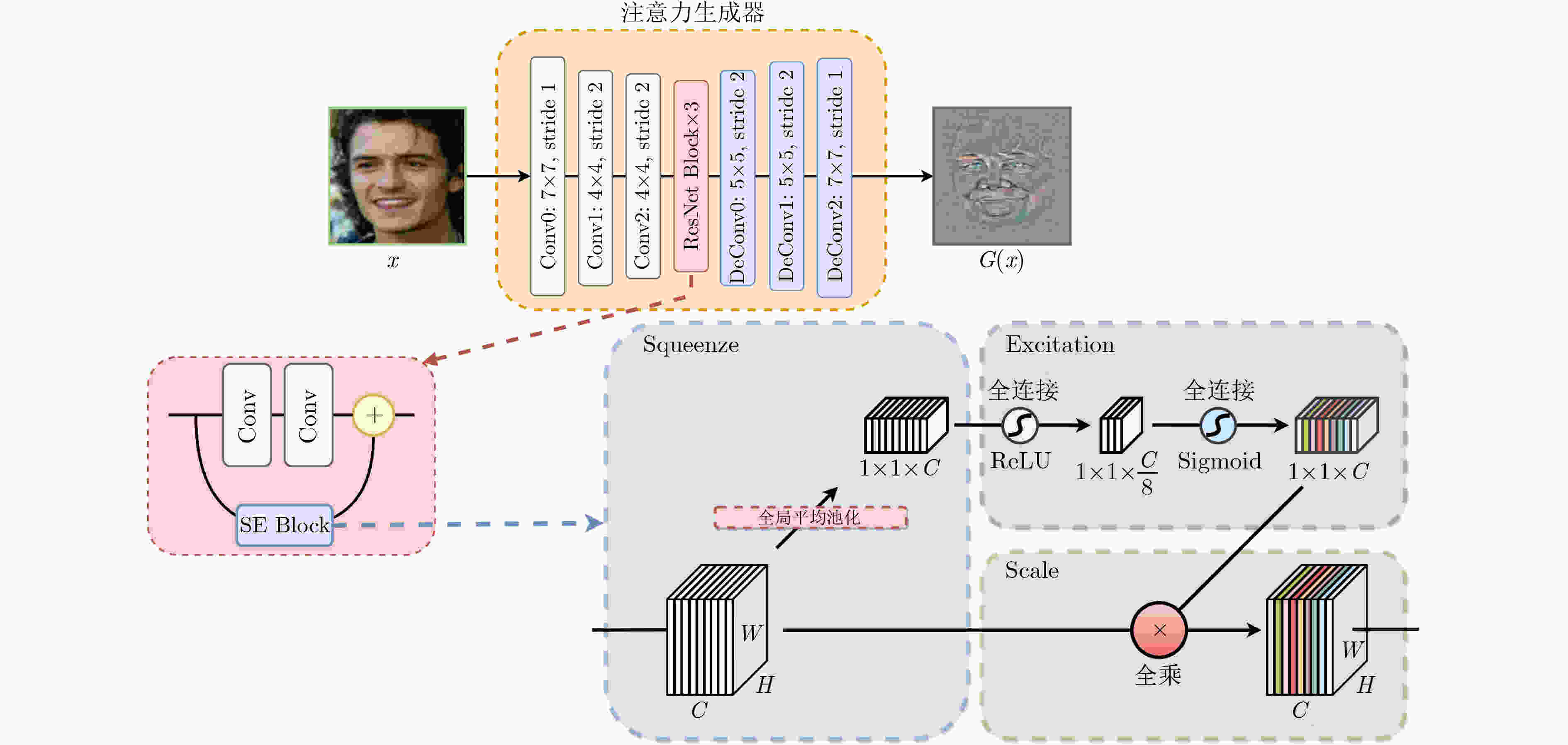

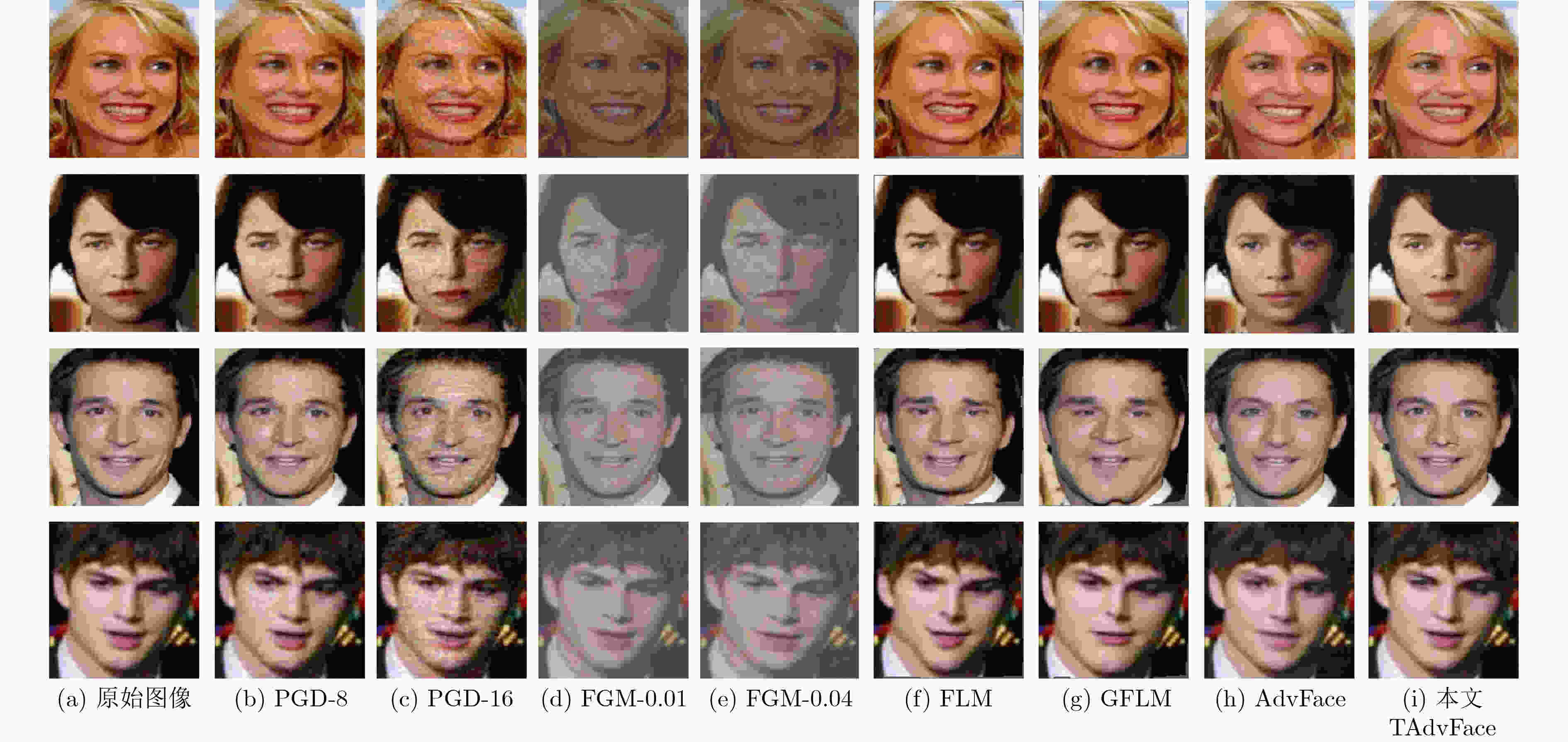

Abstract: In face verification with face recognition models, traditional adversarial attack methods cannot quickly generate realistic, natural-looking adversarial examples. In addition, adversarial examples crafted against a single model in the white-box setting perform poorly when transferred to other face recognition models. This paper proposes TAdvFace, a transferable adversarial example generation method based on generative adversarial networks (GANs). TAdvFace uses an attention generator to improve the extraction of facial features, applies a Gaussian filtering operation to improve the smoothness of the adversarial examples, and uses an automatic adjustment strategy to tune the weight of the identity discrimination loss. As a result, it can quickly generate high-quality, transferable adversarial examples for different face images. Experimental results show that, with white-box training on a single model, the adversarial examples generated by TAdvFace achieve good attack performance against a variety of face recognition models and commercial API models, demonstrating good transferability.
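The abstract states that TAdvFace uses Gaussian filtering to make its adversarial examples smoother, and Tables 3 and 4 vary the Gaussian kernel size from 1×1 to 9×9. The excerpt does not give the standard deviation σ or say exactly where in the pipeline the filter is applied, so the following is only a minimal sketch assuming the filter is applied to a single-channel perturbation map (for colour images, one would apply it to each channel separately). The names `gaussian_kernel` and `gaussian_filter`, the σ value, and the edge-replication border handling are all hypothetical choices for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def gaussian_kernel(size: int, sigma: float) -> Matrix:
    """Return a normalized size x size Gaussian kernel (size must be odd)."""
    if size < 1 or size % 2 == 0:
        raise ValueError("kernel size must be a positive odd integer")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    half = size // 2
    kernel = [[math.exp(-(i * i + j * j) / (2.0 * sigma * sigma))
               for j in range(-half, half + 1)]
              for i in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    # Normalizing to sum 1 keeps the average perturbation level unchanged.
    return [[v / total for v in row] for row in kernel]


def gaussian_filter(perturbation: Matrix, size: int, sigma: float) -> Matrix:
    """Smooth a single-channel perturbation with a Gaussian kernel.

    Hypothetical helper: borders are handled by clamping indices (edge
    replication), so the output has the same shape as the input. The kernel
    is symmetric, so correlation and convolution give the same result.
    """
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    h, w = len(perturbation), len(perturbation[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(size):
                for j in range(size):
                    rr = min(max(r + i - half, 0), h - 1)
                    cc = min(max(c + j - half, 0), w - 1)
                    acc += kernel[i][j] * perturbation[rr][cc]
            out[r][c] = acc
    return out
```

A 1×1 kernel leaves the perturbation unchanged, while larger kernels average over more neighbours and suppress its high-frequency components. Tables 3 and 4 show the resulting trade-off: the 9×9 kernel gives the highest untargeted success rates on the black-box models but the lowest SSIM and PSNR.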


Key words:

- Face verification

- Adversarial example

- Generative adversarial networks

- Transferability


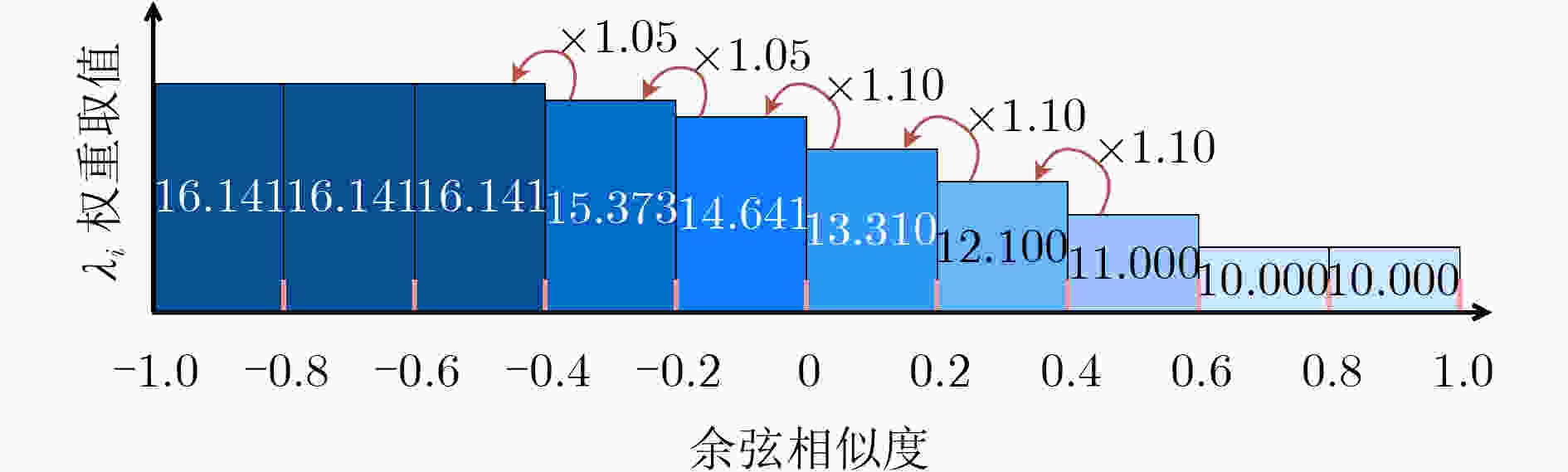

Algorithm 1 Automatic adjustment algorithm

Input: adversarial loss weight $\lambda_i$; target face image $y$; maximum number of iterations $T$; adversarial example $x^t$

- (1) for $t = 0$ to $T - 1$ do
  - (a) $\lambda_i = 10$
  - (b) Generate adversarial example $x^t$ within $\varepsilon$
  - (c) Compute cosine pair $= \mathrm{CosineSimilarity}(x^t, y)$
  - (d) if cosine pair $< 0.6$ then (e) $\lambda_i = 1.1 \times \lambda_i$ $\triangleright$ cosine pair $\in [-1, 0.6)$
  - (f) if cosine pair $< 0.4$ then (g) $\lambda_i = 1.1 \times \lambda_i$ $\triangleright$ cosine pair $\in [-1, 0.4)$
  - (h) if cosine pair $< 0$ then (i) $\lambda_i = 1.1 \times \lambda_i$ $\triangleright$ cosine pair $\in [-1, 0)$
  - (j) if cosine pair $< -0.2$ then (k) $\lambda_i = 1.05 \times \lambda_i$ $\triangleright$ cosine pair $\in [-1, -0.2)$
  - (l) if cosine pair $< -0.4$ then (m) $\lambda_i = 1.05 \times \lambda_i$ $\triangleright$ cosine pair $\in [-1, -0.4)$
  - (n) Update $L$ with the new $\lambda_i$ $\triangleright$ compute the total loss
  - (o) $t = t + 1$
- (2) end for

Table 1 Untargeted attack success rates (%) of adversarial examples generated by each attack method against face recognition models

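| Attack method | FaceNet | SphereFace | InsightFace | VGG-Face | API-Baidu | API-Face++ | API-Xfyun |
|---|---|---|---|---|---|---|---|
| PGD-8 | 99.90 | 26.29 | 26.29 | 21.61 | 72.21 | 22.40 | 6.31 |
| PGD-16 | 99.90 | 52.95 | 52.54 | 32.67 | 94.57 | 56.25 | 16.95 |
| FGM-0.01 | 87.47 | 17.74 | 13.47 | 16.00 | 50.75 | 10.35 | 2.71 |
| FGM-0.04 | 91.60 | 26.76 | 28.22 | 18.26 | 74.81 | 26.74 | 7.62 |
| FLM | 100.00 | 24.01 | 16.40 | 18.13 | 74.85 | 20.32 | 5.24 |
| GFLM | 99.83 | 33.51 | 26.40 | 23.07 | 89.62 | 42.48 | 11.78 |
| AdvFace | 100.00 | 63.04 | 51.51 | 57.13 | 94.18 | 59.71 | 13.05 |
| TAdvFace (ours) | 99.98 | 78.59 | 58.22 | 73.47 | 98.37 | 74.22 | 28.14 |

Table 2 Image quality and generation time of the adversarial examples generated by each adversarial attack method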

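| Metric | PGD-8 | PGD-16 | FGM-0.01 | FGM-0.04 | FLM | GFLM | AdvFace | TAdvFace (ours) |
|---|---|---|---|---|---|---|---|---|
| ↑SSIM | 0.89±0.01 | 0.75±0.03 | 0.82±0.07 | 0.82±0.07 | 0.82±0.05 | 0.62±0.10 | 0.91±0.02 | 0.92±0.02 |
| ↑PSNR (dB) | 34.11±0.39 | 29.23±0.41 | 19.01±3.27 | 18.99±3.24 | 23.25±1.81 | 19.50±2.34 | 28.62±2.67 | 29.80±4.08 |
| ↓LPIPS | 0.037±0.012 | 0.086±0.021 | 0.073±0.041 | 0.072±0.041 | 0.033±0.010 | 0.058±0.025 | 0.020±0.006 | 0.020±0.007 |
| ↓Time (s) | 8.27 | 7.86 | 0.01 | 0.01 | 0.12 | 0.53 | 0.01 | 0.01 |

Table 3 Untargeted attack success rates (%) of adversarial examples under different Gaussian kernel sizes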

| Kernel size | FaceNet | SphereFace | InsightFace | VGG-Face | API-Baidu | API-Face++ |
|---|---|---|---|---|---|---|
| / | 99.72 | 26.73 | 16.16 | 29.63 | 67.23 | 17.22 |
| 1×1 | 99.69 | 24.50 | 15.30 | 28.03 | 64.61 | 15.71 |
| 3×3 | 99.70 | 25.12 | 15.30 | 26.93 | 65.74 | 16.03 |
| 5×5 | 99.70 | 38.67 | 21.19 | 41.68 | 78.56 | 27.69 |
| 7×7 | 99.79 | 65.71 | 40.25 | 65.14 | 95.60 | 60.46 |
| 9×9 | 99.78 | 87.73 | 62.74 | 79.24 | 98.51 | 83.46 |

Table 4 Image quality of adversarial examples under different Gaussian kernel sizes

| Kernel size | ↑SSIM | ↑PSNR (dB) | ↓LPIPS |
|---|---|---|---|
| / | 0.9732±0.013 | 34.40±4.82 | 0.0053±0.002 |
| 1×1 | 0.9730±0.013 | 34.31±4.85 | 0.0052±0.002 |
| 3×3 | 0.9725±0.014 | 34.37±4.82 | 0.0052±0.002 |
| 5×5 | 0.9656±0.017 | 33.32±4.75 | 0.0073±0.003 |
| 7×7 | 0.9406±0.026 | 30.63±4.60 | 0.0170±0.006 |
| 9×9 | 0.8415±0.042 | 25.08±4.15 | 0.0676±0.019 |

Table 5 Untargeted attack success rates (%) of adversarial examples at different embedding stages

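| Embedding stage | FaceNet | SphereFace | InsightFace | VGG-Face | API-Baidu | API-Face++ |
|---|---|---|---|---|---|---|
| / | 99.79 | 65.71 | 40.25 | 65.14 | 95.60 | 60.46 |
| R256-1 | 99.68 | 67.64 | 38.52 | 61.09 | 94.43 | 58.02 |
| R256-2 | 99.79 | 61.42 | 36.07 | 59.09 | 93.07 | 52.07 |
| R256-3 | 99.80 | 66.91 | 37.63 | 65.78 | 93.97 | 56.98 |
| All | 99.82 | 72.27 | 44.17 | 68.18 | 95.25 | 62.01 |

Table 6 Attack success rates (%) of adversarial examples under different SE module combinations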

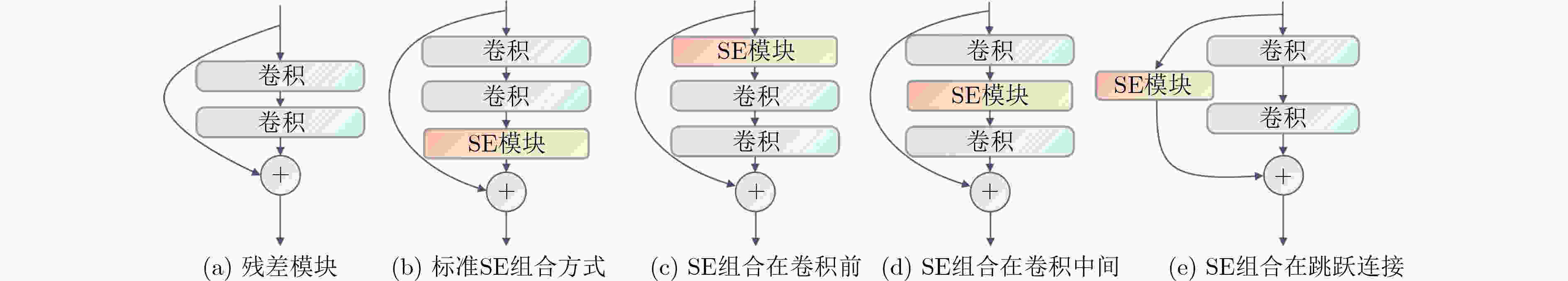

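| Combination | FaceNet | SphereFace | InsightFace | VGG-Face | API-Baidu | API-Face++ |
|---|---|---|---|---|---|---|
| Standard combination | 99.73 | 68.34 | 38.04 | 62.95 | 93.98 | 56.24 |
| Before convolution | 99.82 | 69.14 | 41.18 | 67.26 | 95.85 | 63.45 |
| Between convolutions | 99.70 | 65.84 | 38.84 | 64.03 | 94.76 | 57.96 |
| In skip connection | 99.82 | 72.27 | 44.17 | 68.18 | 95.25 | 62.01 |

Table 7 Untargeted attack success rates (%) under different identity discrimination loss weight strategies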

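| Weight strategy | FaceNet | SphereFace | InsightFace | VGG-Face | API-Baidu | API-Face++ |
|---|---|---|---|---|---|---|
| ${\lambda _i}$ = 10 | 99.82 | 72.27 | 44.17 | 68.18 | 95.25 | 62.01 |
| Automatic adjustment | 99.98 | 78.59 | 58.22 | 73.47 | 98.37 | 74.22 |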
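Table 7 compares a fixed identity discrimination loss weight ($\lambda_i = 10$) with the automatic adjustment strategy of Algorithm 1. The Python sketch below restates the weight schedule of Algorithm 1 so that its branching is easy to check. It is an illustration under stated assumptions, not the authors' code: `SCHEDULE`, `cosine_similarity`, `adjust_identity_weight`, and `weight_for_iteration` are hypothetical names, and the sketch assumes the cosine pair is computed between face-embedding vectors of $x^t$ and $y$, which Algorithm 1 does not spell out.

```python
import math
from typing import Sequence

# Hypothetical encoding of steps (d)-(m) of Algorithm 1 as
# (threshold, multiplier) pairs. Each condition is checked independently,
# so a score below several thresholds receives every matching multiplier.
SCHEDULE = ((0.6, 1.1), (0.4, 1.1), (0.0, 1.1), (-0.2, 1.05), (-0.4, 1.05))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def adjust_identity_weight(cos_pair: float, base: float = 10.0) -> float:
    """Steps (a) and (d)-(m): reset the weight to `base`, then scale it."""
    weight = base
    for threshold, factor in SCHEDULE:
        if cos_pair < threshold:
            weight *= factor
    return weight


def weight_for_iteration(adv_embedding: Sequence[float],
                         target_embedding: Sequence[float]) -> float:
    """Steps (c)-(m) for one iteration.

    Assumption: x^t and y are compared through face embeddings produced by
    the attacked recognition model. Step (b), generating x^t, and step (n),
    recomputing the total loss, depend on the generator and are not shown.
    """
    cos_pair = cosine_similarity(adv_embedding, target_embedding)
    return adjust_identity_weight(cos_pair)
```

Because step (a) resets $\lambda_i$ to 10 at the start of every iteration, the multipliers never compound across iterations: the weight is at most $10 \times 1.1^3 \times 1.05^2 \approx 14.67$, reached when the cosine pair falls below $-0.4$.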

[1] ZHONG Yaoyao and DENG Weihong. Towards transferable adversarial attack against deep face recognition[J]. IEEE Transactions on Information Forensics and Security, 2021, 16: 1452–1466. doi: 10.1109/TIFS.2020.3036801

[2] SZEGEDY C, ZAREMBA W, SUTSKEVER I, et al. Intriguing properties of neural networks[C]. The 2nd International Conference on Learning Representations, Banff, Canada, 2014.

[3] GOODFELLOW I J, SHLENS J, and SZEGEDY C. Explaining and harnessing adversarial examples[C]. The 3rd International Conference on Learning Representations, San Diego, USA, 2015.

[4] MIYATO T, DAI A M, and GOODFELLOW I J. Adversarial training methods for semi-supervised text classification[C]. The 5th International Conference on Learning Representations, Toulon, France, 2017.

[5] MADRY A, MAKELOV A, SCHMIDT L, et al. Towards deep learning models resistant to adversarial attacks[C]. The 6th International Conference on Learning Representations, Vancouver, Canada, 2018.

[6] DABOUEI A, SOLEYMANI S, DAWSON J, et al. Fast geometrically-perturbed adversarial faces[C]. 2019 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2019: 1979–1988.

[7] DONG Yinpeng, SU Hang, WU Baoyuan, et al. Efficient decision-based black-box adversarial attacks on face recognition[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 7709–7714.

[8] HANSEN N and OSTERMEIER A. Completely derandomized self-adaptation in evolution strategies[J]. Evolutionary Computation, 2001, 9(2): 159–195.

[9] YANG Xiao, YANG Dingcheng, DONG Yinpeng, et al. Delving into the adversarial robustness on face recognition[EB/OL]. https://arxiv.org/pdf/2007.04118v1.pdf, 2022.

[10] GOODFELLOW I J, POUGET-ABADIE J, MIRZA M, et al. Generative adversarial networks[J]. Communications of the ACM, 2020, 63(11): 139–144. doi: 10.1145/3422622

[11] XIAO Chaowei, LI Bo, ZHU Junyan, et al. Generating adversarial examples with adversarial networks[C]. The 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 2018: 3905–3911.

[12] YANG Lu, SONG Qing, and WU Yingqi. Attacks on state-of-the-art face recognition using attentional adversarial attack generative network[J]. Multimedia Tools and Applications, 2021, 80(1): 855–875. doi: 10.1007/s11042-020-09604-z

[13] QIU Haonan, XIAO Chaowei, YANG Lei, et al. SemanticAdv: Generating adversarial examples via attribute-conditioned image editing[C]. 2020 16th European Conference on Computer Vision, Glasgow, UK, 2020: 19–37.

[14] JOSHI A, MUKHERJEE A, SARKAR S, et al. Semantic adversarial attacks: Parametric transformations that fool deep classifiers[C]. The 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 4773–4783.

[15] MIRJALILI V, RASCHKA S, and ROSS A. PrivacyNet: Semi-adversarial networks for multi-attribute face privacy[J]. IEEE Transactions on Image Processing, 2020, 29: 9400–9412. doi: 10.1109/TIP.2020.3024026

[16] ZHU Zheng’an, LU Yunzhong, and CHIANG C K. Generating adversarial examples by makeup attacks on face recognition[C]. 2019 IEEE International Conference on Image Processing, Taipei, China, 2019: 2516–2520.

[17] DEB D, ZHANG Jianbang, and JAIN A K. AdvFaces: Adversarial face synthesis[C]. 2020 IEEE International Joint Conference on Biometrics, Houston, USA, 2020: 1–10.

[18] HU Jie, SHEN Li, ALBANIE S, et al. Squeeze-and-excitation networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(8): 2011–2023. doi: 10.1109/TPAMI.2019.2913372

[19] SHARMA Y, DING G W, and BRUBAKER M A. On the effectiveness of low frequency perturbations[C]. Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 2019: 3389–3396.

[20] YI Dong, LEI Zhen, LIAO Shengcai, et al. Learning face representation from scratch[EB/OL]. https://arxiv.org/abs/1411.7923.pdf, 2022.

[21] HUANG G B, MATTAR M, BERG T, et al. Labeled faces in the wild: A database for studying face recognition in unconstrained environments[EB/OL]. http://vis-www.cs.umass.edu/papers/lfw.pdf, 2022.
