An Underwater Object Detection Method for Sonar Image Based on YOLOv3 Model


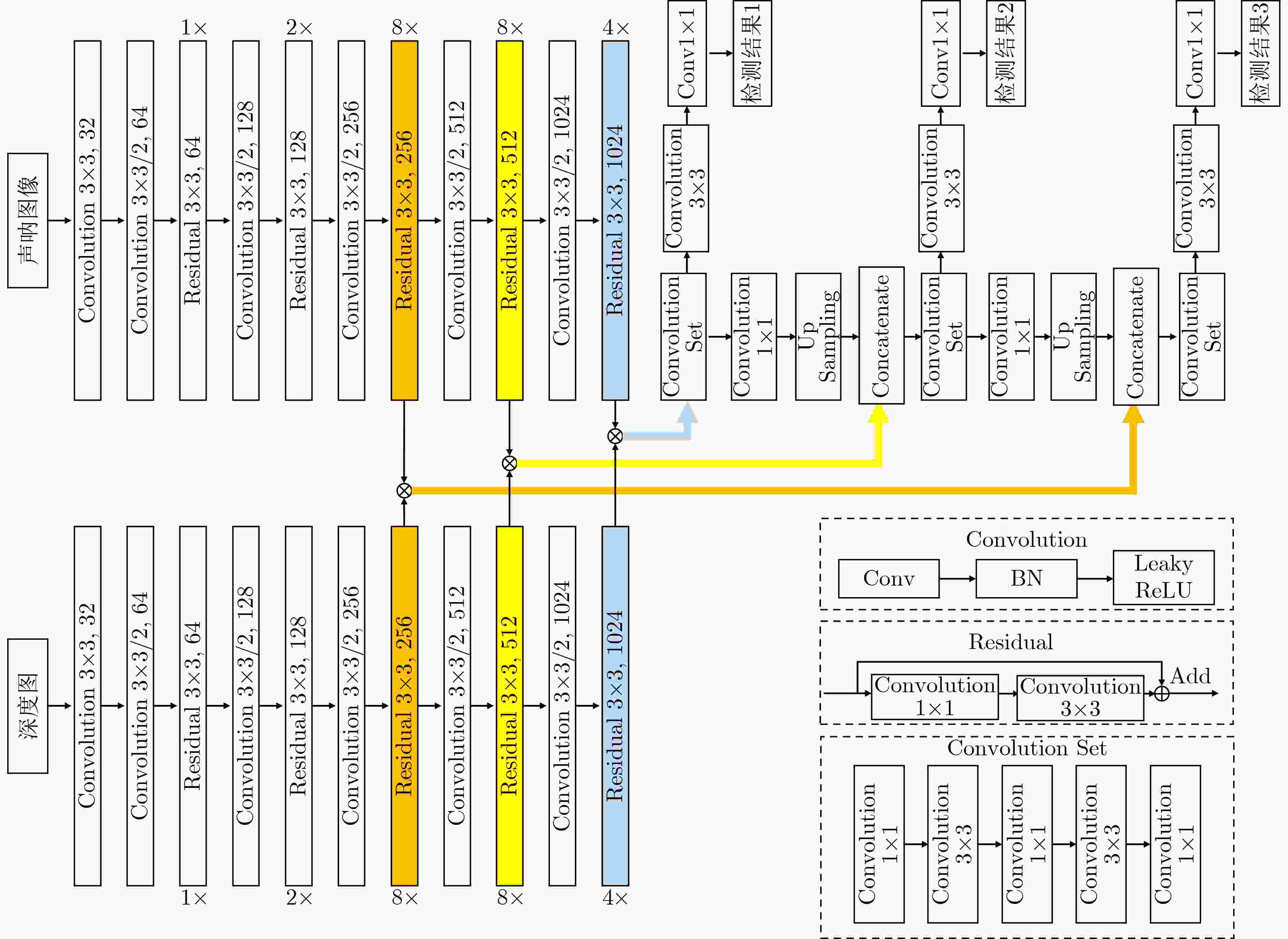

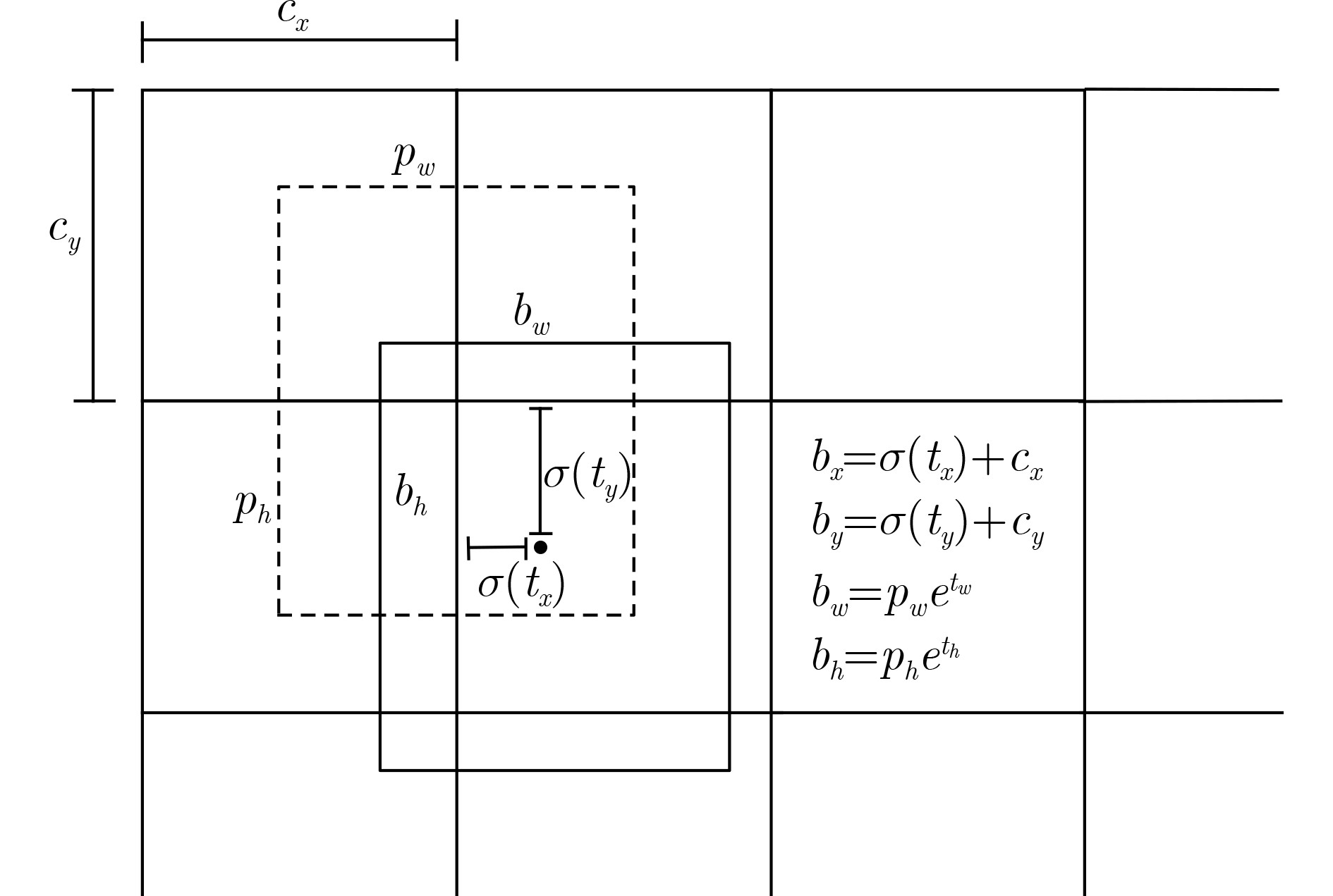

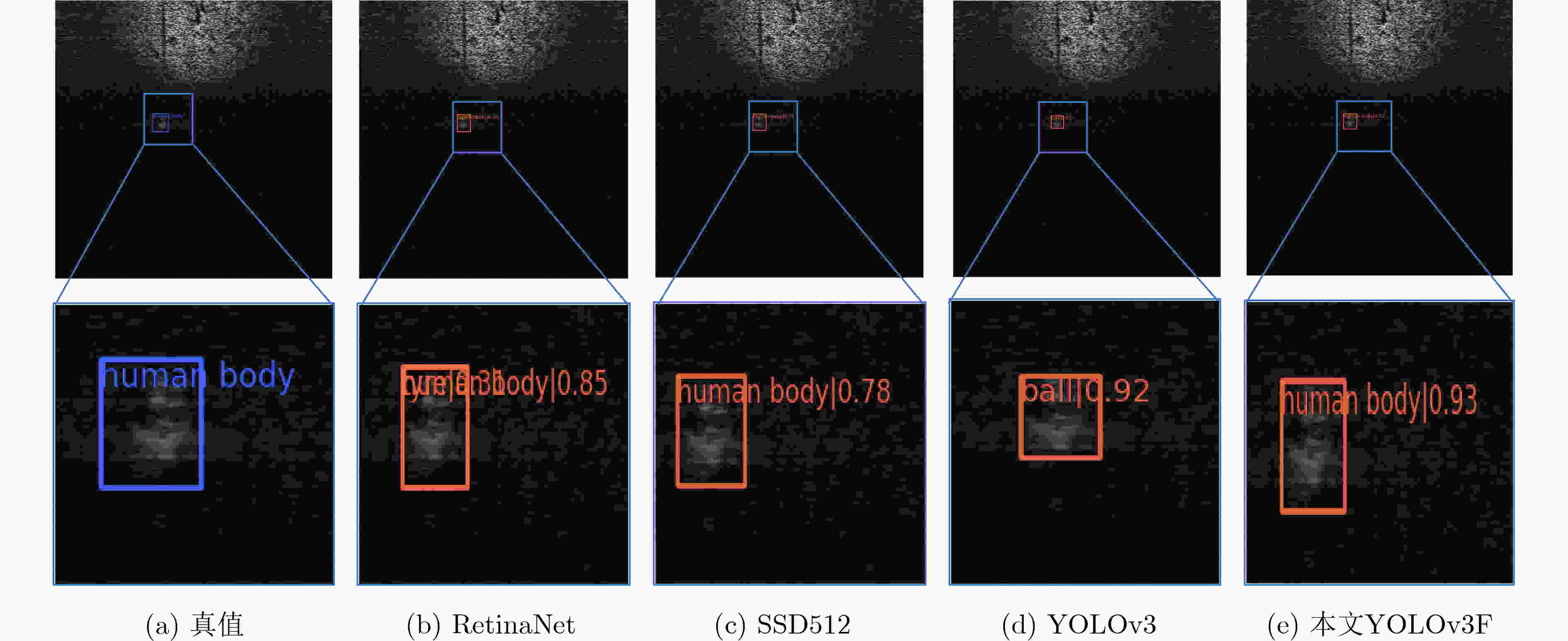

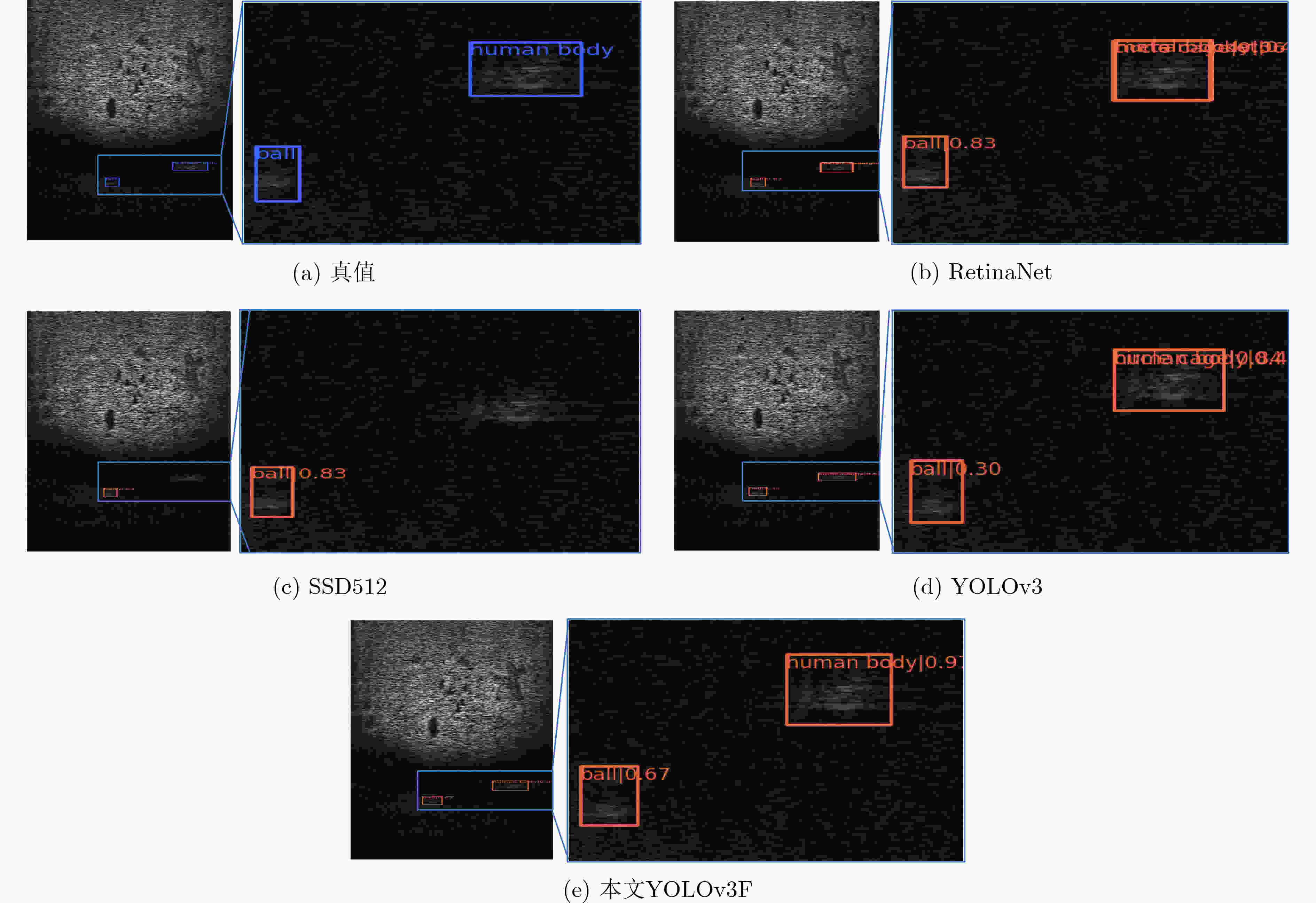

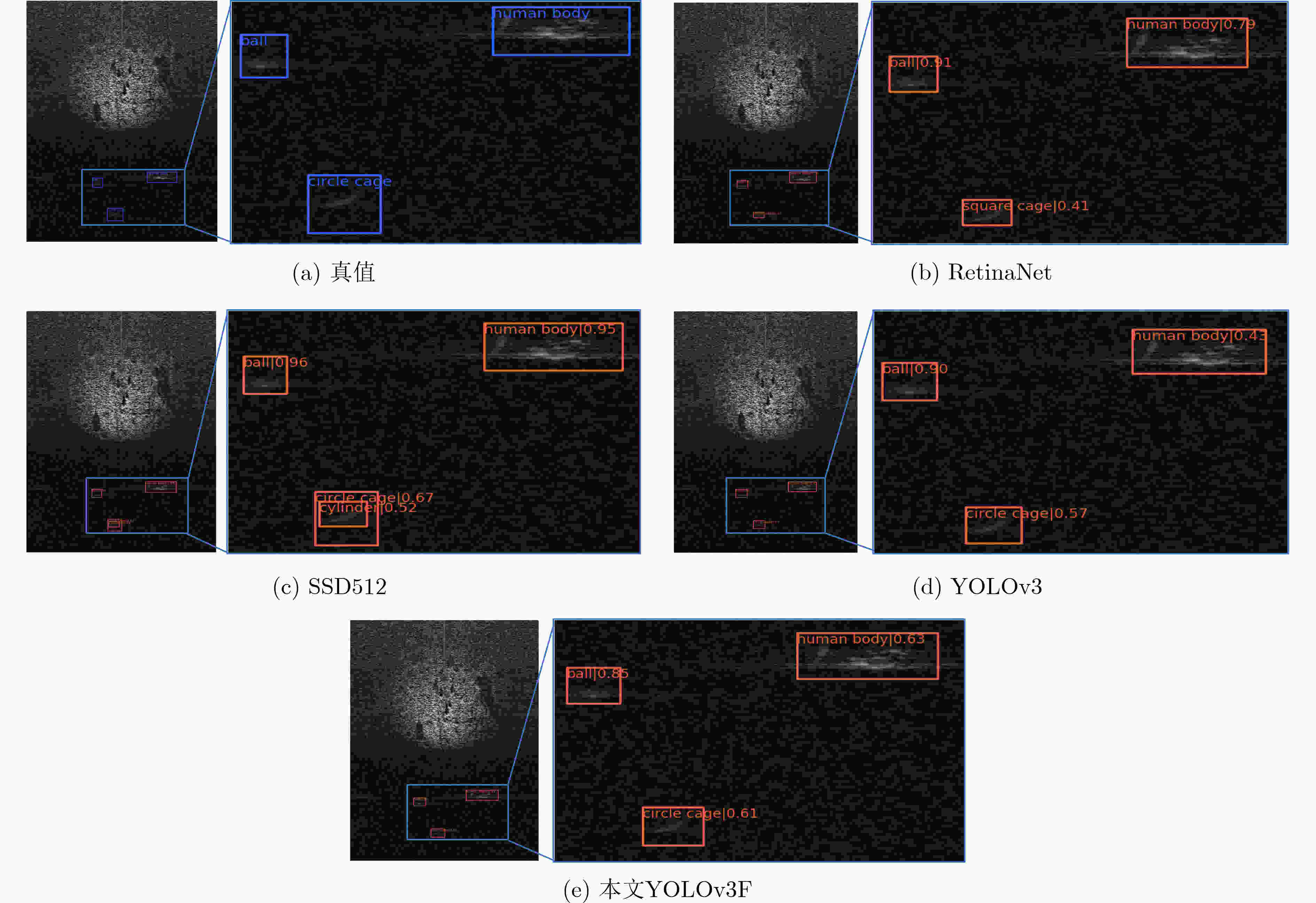

Abstract: Applying object detection frameworks to underwater sonar images has recently attracted considerable attention. Existing sonar object detection methods mostly rely on the texture features of sonar images to distinguish objects, and they struggle with the unstable geometric features caused by shape distortion in sonar images. To address this, a YOLOv3-based underwater object detection model, YOLOv3F, is proposed. The model fuses texture features extracted from sonar images with spatial geometric features extracted from depth maps, using the relatively stable geometric features of the depth map to compensate for the limited descriptive power of texture features, and then uses the fused features for object detection. Experimental results show that the proposed model clearly outperforms three baseline models in detection accuracy, both under different intersection-over-union (IoU) thresholds for the detection boxes and for targets of different scales. When detecting individual object classes, the improved model also achieves better results than YOLOv3.
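The abstract does not state how the texture and geometric features are combined. As a minimal sketch only, the following assumes the simplest common choice, channel-wise concatenation of two feature maps with the same spatial size. The function names `fuse_by_concatenation` and `make_map`, and the nested-list layout `[channels][height][width]`, are hypothetical and are not taken from the paper.

```python
# Hypothetical illustration of feature fusion by channel-wise concatenation.
# Assumption: the paper's actual fusion rule is not given in the abstract.
# Feature maps are nested lists shaped [channels][height][width].


def make_map(channels, height, width, value):
    """Build a constant feature map; a stand-in for real extracted features."""
    return [[[value] * width for _ in range(height)] for _ in range(channels)]


def fuse_by_concatenation(texture_features, geometry_features):
    """Stack geometric channels after texture channels (assumed fusion rule)."""

    def spatial_shape(feature_map):
        return (len(feature_map[0]), len(feature_map[0][0]))

    if spatial_shape(texture_features) != spatial_shape(geometry_features):
        raise ValueError("feature maps must share the same height and width")
    # Concatenating the outer lists concatenates along the channel axis.
    return texture_features + geometry_features


texture = make_map(channels=3, height=2, width=2, value=0.5)   # from the sonar image
geometry = make_map(channels=1, height=2, width=2, value=1.0)  # from the depth map
fused = fuse_by_concatenation(texture, geometry)
print(len(fused), len(fused[0]), len(fused[0][0]))  # 4 2 2
```

In a real detector the fused map would feed the detection head; element-wise addition or a learned fusion layer would be equally plausible alternatives under the same description.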


Key words:

- Underwater object detection

- Sonar image

- Depth image

- YOLOv3 model


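Table 1 mAP comparison under different IoU thresholds of the detection box

| Network model | mAP@[0.5,0.95] | mAP@0.5 | mAP@0.75 |
| --- | --- | --- | --- |
| RetinaNet | 0.467 | 0.888 | 0.425 |
| SSD | 0.467 | 0.920 | 0.400 |
| YOLOv3 | 0.465 | 0.941 | 0.380 |
| YOLOv3F (this paper) | 0.501 | 0.958 | 0.468 |

Table 2 mAP comparison for targets of different scales

| Network model | mAP(S) | mAP(M) | mAP(L) |
| --- | --- | --- | --- |
| RetinaNet | 0.194 | 0.467 | 0.482 |
| SSD | 0.235 | 0.464 | 0.510 |
| YOLOv3 | 0.211 | 0.462 | 0.466 |
| YOLOv3F (this paper) | 0.244 | 0.499 | 0.527 |

Table 3 mAP comparison for targets of different classes

| Network model | Sphere | Square cage | Tire | Circular cage | Cube | Iron bucket | Human body model | Cylinder |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| RetinaNet | 0.507 | 0.432 | 0.451 | 0.446 | 0.529 | 0.498 | 0.458 | 0.411 |
| SSD | 0.527 | 0.423 | 0.447 | 0.495 | 0.479 | 0.424 | 0.463 | 0.477 |
| YOLOv3 | 0.498 | 0.434 | 0.476 | 0.459 | 0.499 | 0.468 | 0.458 | 0.427 |
| YOLOv3F (this paper) | 0.522 | 0.460 | 0.513 | 0.503 | 0.543 | 0.501 | 0.487 | 0.478 |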
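The mAP@0.5 and mAP@0.75 columns of Table 1 depend on the intersection over union (IoU) between a predicted box and a ground-truth box: a prediction counts as a match only if its IoU reaches the threshold. The sketch below illustrates that standard computation. The box format `(x_min, y_min, x_max, y_max)`, the function name `iou`, and the example coordinates are assumptions for illustration, not values from the paper.

```python
# Illustrative IoU computation for axis-aligned boxes.
# Assumption: boxes are (x_min, y_min, x_max, y_max); the paper's own
# evaluation code is not shown in the source.


def iou(box_a, box_b):
    """Return intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


predicted = (0, 0, 10, 10)     # hypothetical detection
ground_truth = (0, 0, 10, 15)  # hypothetical annotation
score = iou(predicted, ground_truth)  # 100 / 150

for threshold in (0.5, 0.75):
    print(threshold, score >= threshold)
```

With these example boxes the IoU is about 0.667, so the detection would count as correct at the 0.5 threshold but not at 0.75. This is why the mAP@0.75 column is consistently lower than the mAP@0.5 column for every model.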

