Research on Efficient Federated Learning Communication Mechanism Based on Adaptive Gradient Compression


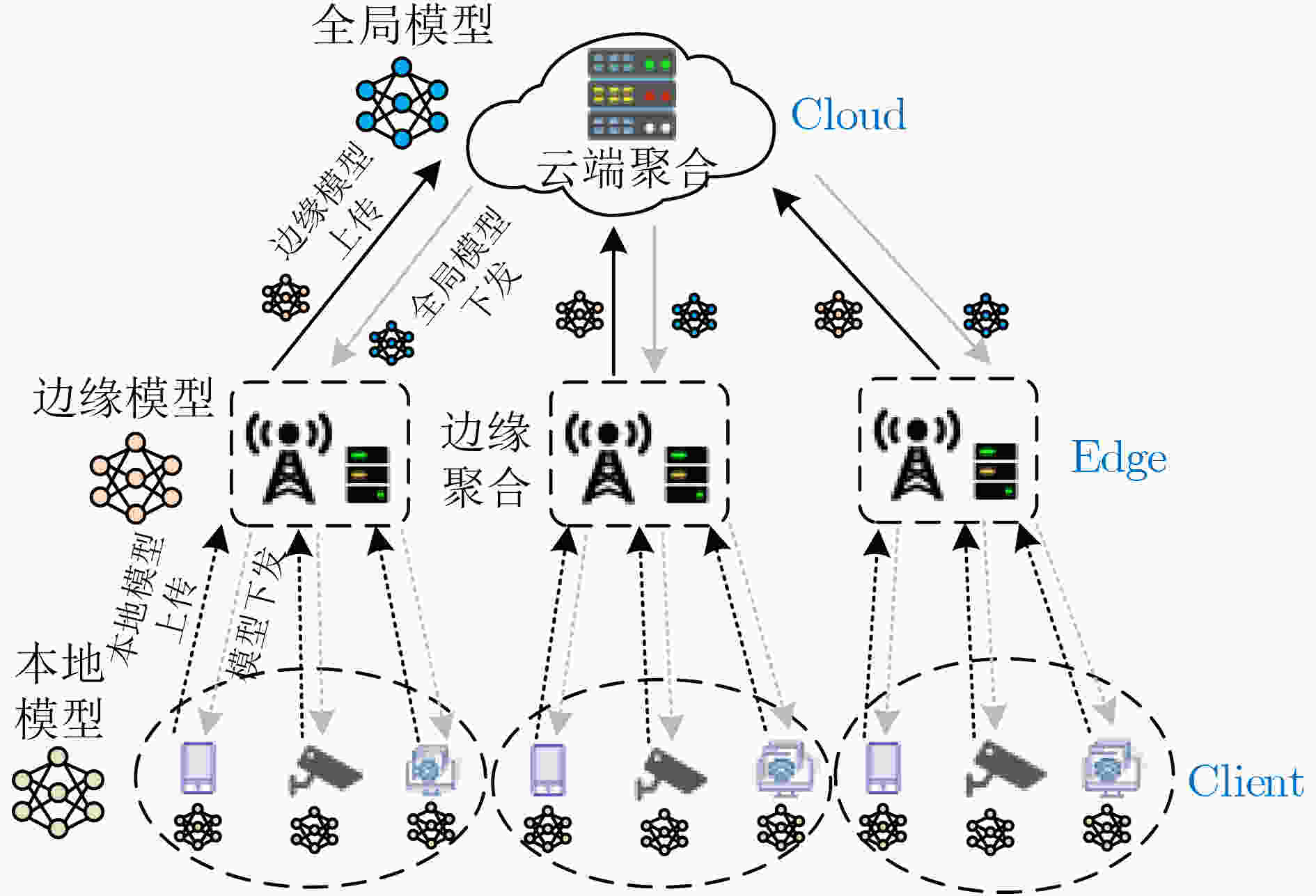

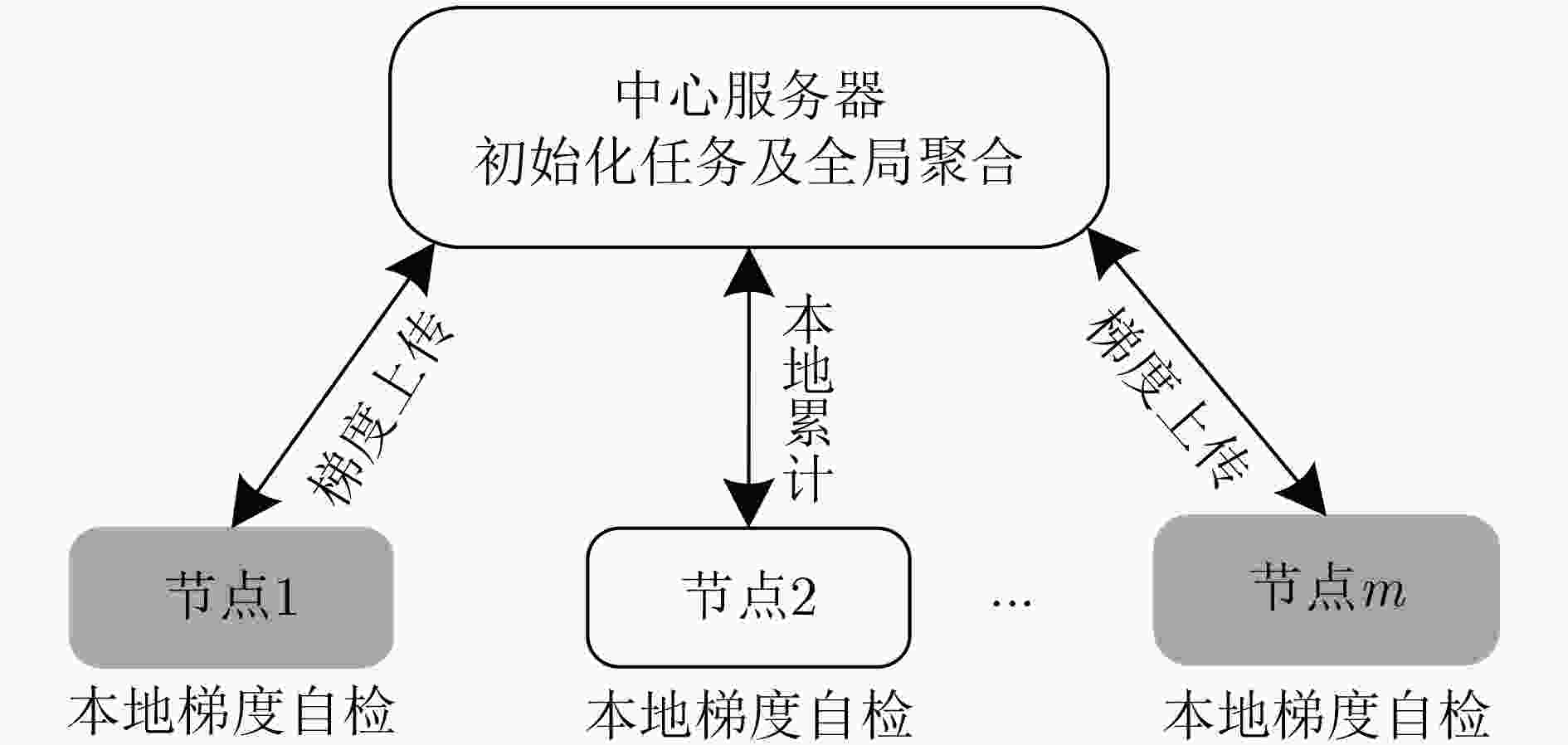

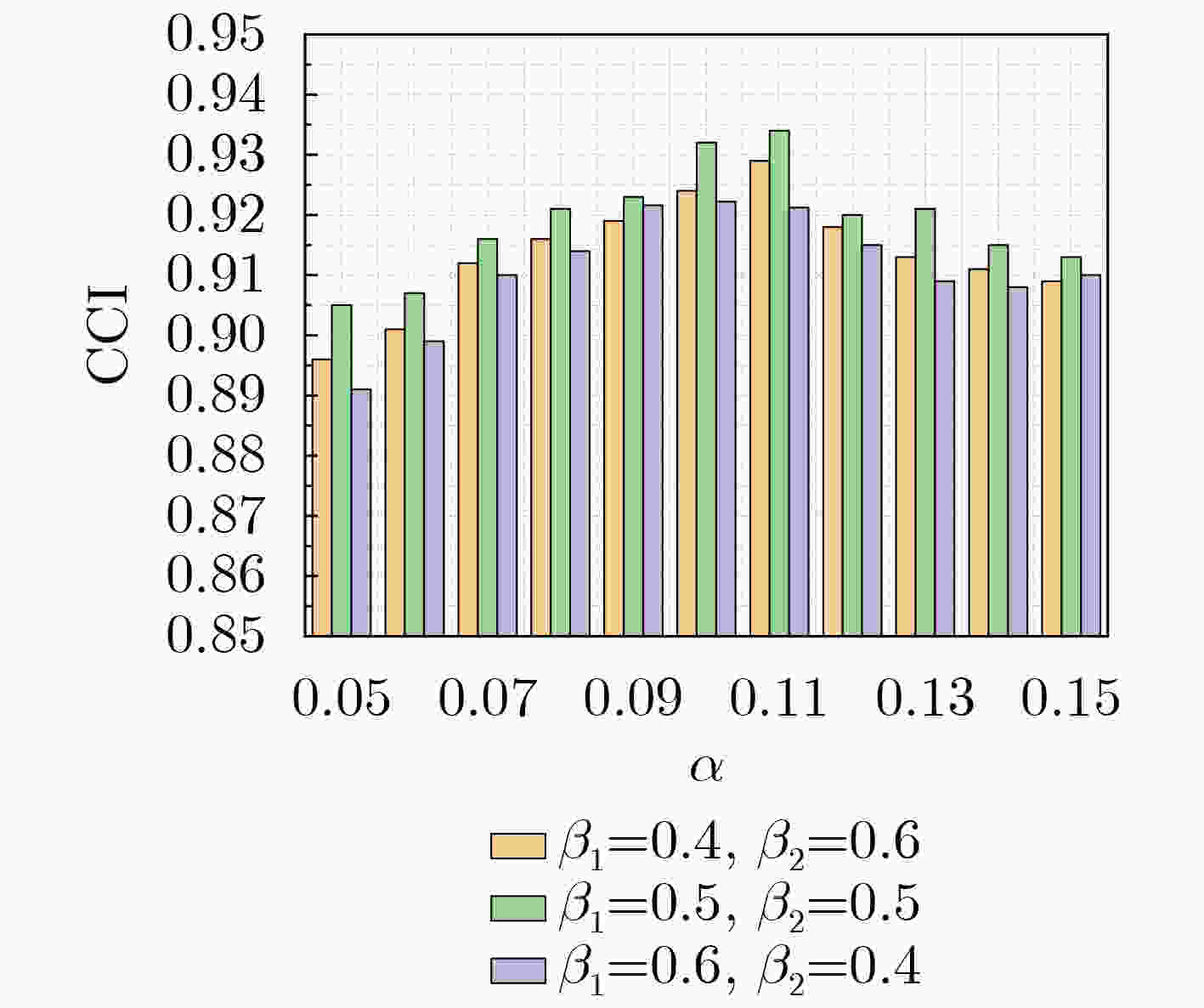

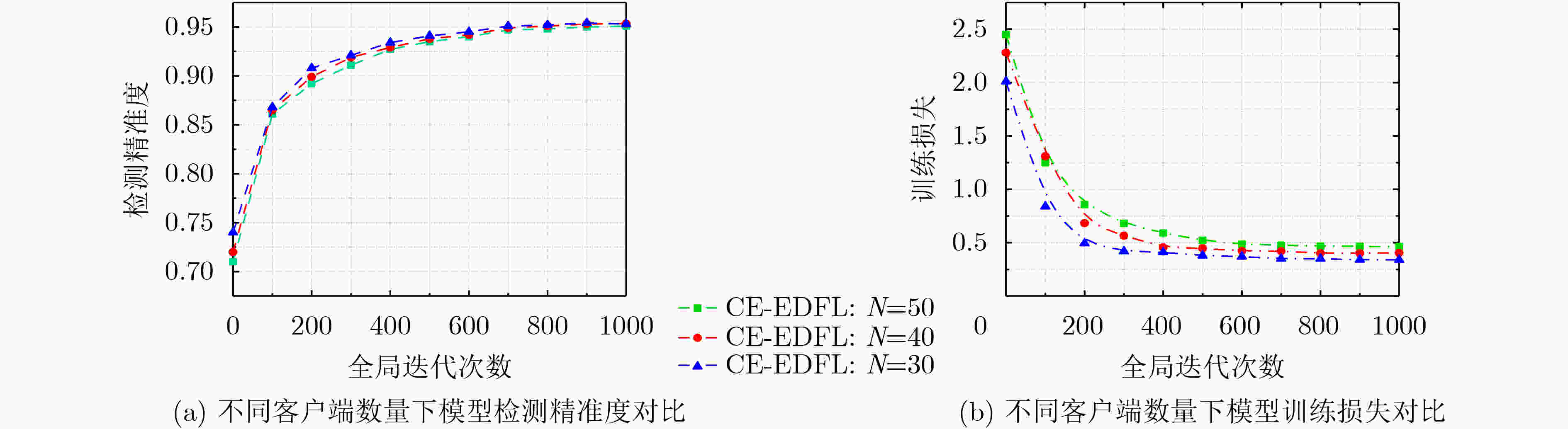

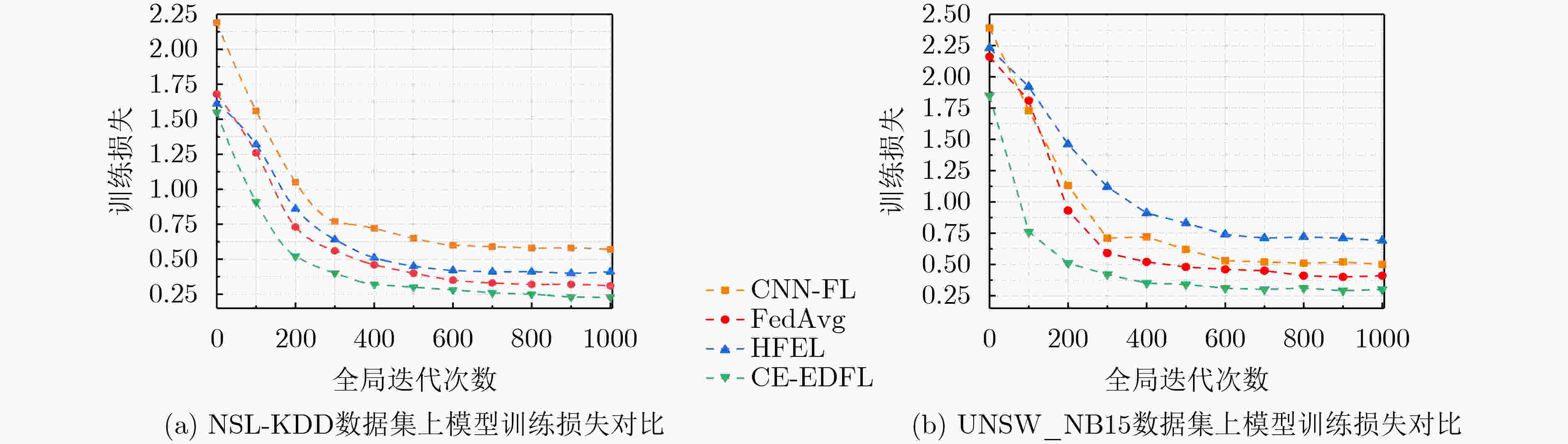

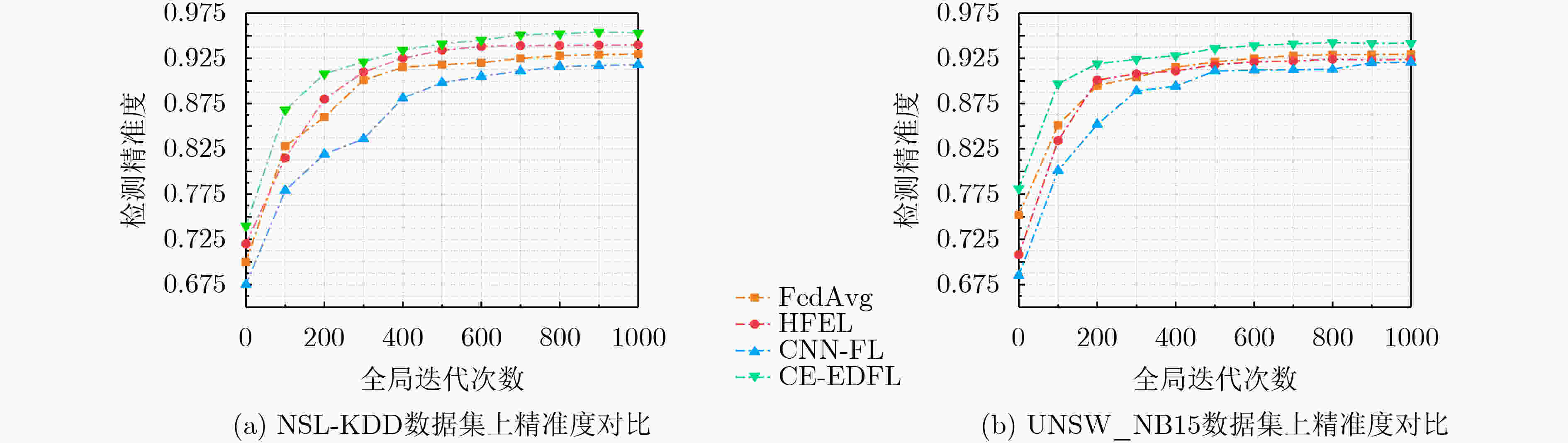

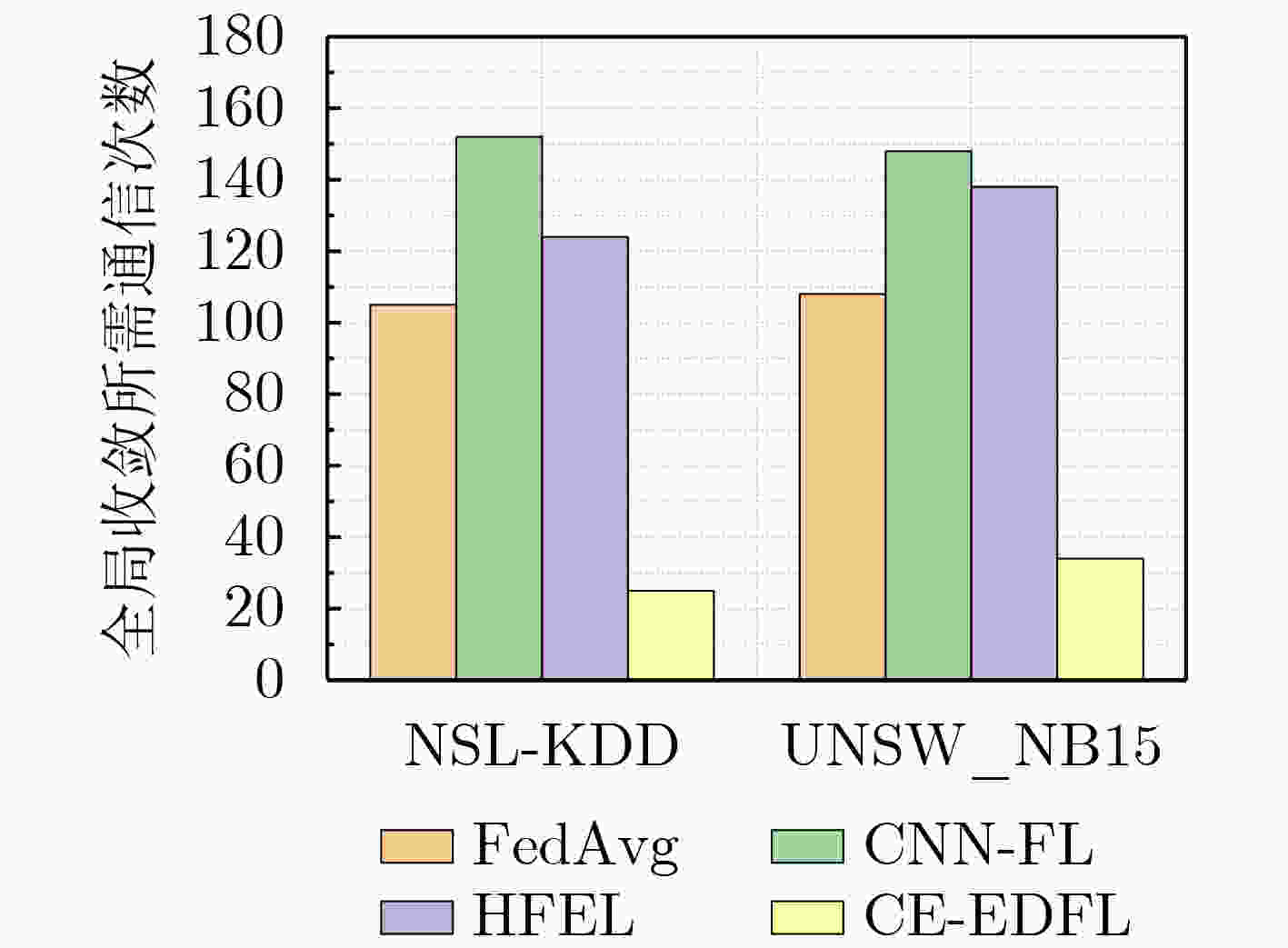

Abstract: To address the non-negligible communication cost caused by redundant gradient exchanges among a large number of device nodes during Federated Learning (FL) in Internet of Things (IoT) scenarios, a gradient communication compression mechanism with an adaptive threshold is proposed. First, a Communication-Efficient EDge-Federated Learning (CE-EDFL) structure is adopted to prevent device-side data privacy leakage: the edge server acts as an intermediary that aggregates the local models of its devices, and the cloud aggregates the edge server models and delivers the new parameters. Second, to further reduce the communication overhead during federated learning detection, a threshold-Adaptive Lazily Aggregated Gradient (ALAG) mechanism is proposed, which compresses the gradient parameters of the local models to reduce redundant communication between the devices and the edge servers. Experimental results show that, in large-scale IoT device scenarios, the proposed algorithm improves the overall communication efficiency of the model by reducing the number of gradient exchanges while maintaining the accuracy of the deep learning task.
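The two-tier aggregation described in the abstract (devices to edge server, edge servers to cloud) can be illustrated with a minimal sketch. It assumes sample-count-weighted averaging at both tiers, in the style of federated averaging; the paper's own aggregation formulas are not shown in this section, and the names `weighted_average`, `hierarchical_aggregate`, `device_models`, and `device_samples` are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def weighted_average(models: Sequence[Vector], weights: Sequence[float]) -> Vector:
    """Average parameter vectors, weighting each one (assumed: by sample count)."""
    total = float(sum(weights))
    dim = len(models[0])
    return [
        sum(w * m[j] for m, w in zip(models, weights)) / total
        for j in range(dim)
    ]


def hierarchical_aggregate(
    device_models: Dict[str, List[Vector]],
    device_samples: Dict[str, List[int]],
) -> Vector:
    """Aggregate device models at each edge server, then edge models at the cloud."""
    edge_models: List[Vector] = []
    edge_samples: List[int] = []
    for edge_id, models in device_models.items():
        samples = device_samples[edge_id]
        # Edge tier: only the devices attached to this edge server are averaged.
        edge_models.append(weighted_average(models, samples))
        edge_samples.append(sum(samples))
    # Cloud tier: one model per edge server, weighted by the data behind it.
    return weighted_average(edge_models, edge_samples)


# Two edge servers: edge_a serves two devices, edge_b serves one larger device.
device_models = {
    "edge_a": [[1.0, 2.0], [3.0, 4.0]],
    "edge_b": [[5.0, 6.0]],
}
device_samples = {"edge_a": [10, 10], "edge_b": [20]}
global_model = hierarchical_aggregate(device_models, device_samples)
print(global_model)  # [3.5, 4.5]
```

In this arrangement the cloud receives one model per edge server rather than one per device, which is where the hierarchy saves device-to-cloud traffic.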


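Algorithm 1 Communication-efficient algorithm based on edge-federated learning

Input: cloud-initialized parameters $ {\omega _0} $, number of clients N, number of edge servers L

Output: global model parameters $ \omega (k) $

(1) for $ k = 1,2, \cdots ,K $ do
(2)   for each Client $ i = 1,2, \cdots ,N $ in parallel do
(3)     compute the local update $ \omega _i^l(k) $ using Eq. (3)
(4)   end for
(5)   if $ k \bmod {K_1} = 0 $ then
(6)     for each Edge server $ l = 1,2, \cdots ,L $ in parallel do
(7)       compute the parameters $ {\omega ^l}(k) $ using Eq. (4)
(8)       if $ k \bmod {K_1}{K_2} \ne 0 $ then
(9)         all devices under this edge server take the same parameters: $ \omega _i^l(k) \leftarrow {\omega ^l}(k) $
(10)       end if
(11)     end for
(12)   end if
(13)   if $ k \bmod {K_1}{K_2} = 0 $ then
(14)     compute the parameters $ \omega (k) $ using Eq. (5)
(15)     for each Client $ i = 1,2, \cdots ,N $ in parallel do
(16)       update the device parameters to the cloud parameters: $ \omega _i^l(k) \leftarrow \omega (k) $
(17)     end for
(18)   end if
(19) end for

Algorithm 2 Gradient compression algorithm with adaptive threshold

Input: current iteration k of device node m, total number of iterations K, initialized global gradient $ \nabla F $

Output: the device nodes $ {M_{\text{L}}} $ that have completed training and meet the model requirements, where M is the set of device nodes

(1) initialize the globally delivered parameters $ \omega (k - 1) $
(2) for $ k = 1,2, \cdots ,K $ do
(3)   for $ m = 1,2, \cdots ,M $ do
(4)     compute the local parameter gradient $ \nabla {F_m}(\theta (k - 1)) $ at node m
(5)     check whether the gradient satisfies the gradient self-check condition, Eq. (16)
(6)     if satisfied, skip this round of communication and accumulate the gradient locally
(7)     gradient update: $ \nabla {F_m}(\theta (k)) \leftarrow \nabla {F_m}(\theta (k - 1)) $
(8)     otherwise, upload the gradient $ \nabla {F_m}(\theta (k - 1)) $ to the edge server
(9)   end for
(10) end for

Table 1 Model detection accuracy and compression rate for different values of $ \alpha $

| $ \alpha $ | Average number of communications before compression | Average number of communications after compression | Average model test accuracy | Compression rate (%) |
| --- | --- | --- | --- | --- |
| 0.1 | 400 | 32 | 0.9175 | 8.00 |
| 0.2 | 400 | 258 | 0.9298 | 64.50 |
| 0.3 | 400 | 270 | 0.9301 | 67.50 |
| 0.4 | 400 | 295 | 0.9314 | 73.75 |
| 0.5 | 400 | 328 | 0.9335 | 82.00 |
| 0.6 | 400 | 342 | 0.9341 | 85.50 |
| 0.7 | 400 | 351 | 0.9336 | 87.75 |
| 0.8 | 400 | 365 | 0.9352 | 91.25 |
| 0.9 | 400 | 374 | 0.9351 | 93.75 |
| 1.0 | 400 | 400 | 0.9349 | 100.00 |

Table 2 Performance comparison of the algorithms under different α, β

| Evaluation metric | LAG | EAFLM | ALAG |
| --- | --- | --- | --- |
| Acc (Train set) | 0.8890 | 0.9368 | 0.9342 |
| CR (%) | 5.1100 | 8.7700 | 8.0000 |
| CCI ($ {\beta _1} = 0.4,{\beta _2} = 0.6 $) | 0.9274 | 0.9206 | 0.9318 |
| CCI ($ {\beta _1} = 0.5,{\beta _2} = 0.5 $) | 0.9220 | 0.9226 | 0.9331 |
| CCI ($ {\beta _1} = 0.6,{\beta _2} = 0.4 $) | 0.9167 | 0.9247 | 0.9315 |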
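The skip-or-upload decision of Algorithm 2 and the compression rate reported in Table 1 can be sketched as follows. This is an illustration under stated assumptions, not the paper's method: the self-check formula (16) and its adaptive threshold are not given in this section, so the sketch substitutes a fixed threshold on the squared change since the last uploaded gradient (a common lazily-aggregated-gradient style test) and omits the local gradient accumulation step. The compression rate is taken to be the ratio of communication rounds after and before compression, which is consistent with the first row of Table 1 (32 of 400 rounds, 8.00%). The names `lazy_upload_rounds`, `compression_rate`, and `threshold` are hypothetical.

```python
from typing import List, Optional, Sequence

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two gradient vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def lazy_upload_rounds(gradients: Sequence[Vector], threshold: float) -> List[int]:
    """Return the rounds (1-based) in which one device uploads its gradient.

    ASSUMPTION: the self-check compares the squared change since the last
    uploaded gradient with a fixed `threshold`; the paper's Eq. (16) and its
    adaptive threshold are not reproduced here.
    """
    uploaded_rounds: List[int] = []
    last_uploaded: Optional[Vector] = None
    for k, grad in enumerate(gradients, start=1):
        if last_uploaded is not None and squared_distance(grad, last_uploaded) <= threshold:
            # Check satisfied: skip this round; the stale gradient is reused.
            continue
        # Check not satisfied (or first round): upload to the edge server.
        uploaded_rounds.append(k)
        last_uploaded = list(grad)
    return uploaded_rounds


def compression_rate(rounds_after: int, rounds_before: int) -> float:
    """Percentage of communication rounds that remain after compression."""
    return 100.0 * rounds_after / rounds_before


# Five rounds of gradients from one device; only large changes trigger an upload.
gradients = [[1.0, 0.0], [1.0, 0.1], [1.0, 0.2], [0.0, 1.0], [0.0, 1.1]]
uploaded = lazy_upload_rounds(gradients, threshold=0.05)
print(uploaded)                                           # [1, 4]
print(compression_rate(len(uploaded), len(gradients)))    # 40.0
print(compression_rate(32, 400))                          # 8.0
```

Under this reading, a lower compression rate means fewer uploads, so the accuracy column of Table 1 shows what is traded away as more rounds are skipped.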

