| [1] |

LI Weizhong, YI Benshun, QIU Kang, et al. Detail preserving multi-exposure image fusion[J]. Optics and Precision Engineering, 2016, 24(9): 2283–2292. doi: 10.3788/OPE.20162409.2283

|

| [2] |

SUN Jing, XU Yan, DUAN Lvyin, et al. A survey on high dynamic range display technology[J]. Information Technology, 2016(5): 41–45,49. doi: 10.13274/j.cnki.hdzj.2016.05.012

|

| [3] |

MARCHESSOUX C, DE PAEPE L, VANOVERMEIRE O, et al. Clinical evaluation of a medical high dynamic range display[J]. Medical Physics, 2016, 43(7): 4023–4031. doi: 10.1118/1.4953187

|

| [4] |

WANG Dong. Investigation of high dynamic imaging technique based on convolutional neural network[D]. [Master dissertation], Xidian University, 2020.

|

| [5] |

MA Xiayi, FAN Fangqing, LU Taoran, et al. Multi-exposure image fusion de-ghosting algorithm based on image block decomposition[J]. Acta Optica Sinica, 2019, 39(9): 0910001. doi: 10.3788/AOS201939.0910001

|

| [6] |

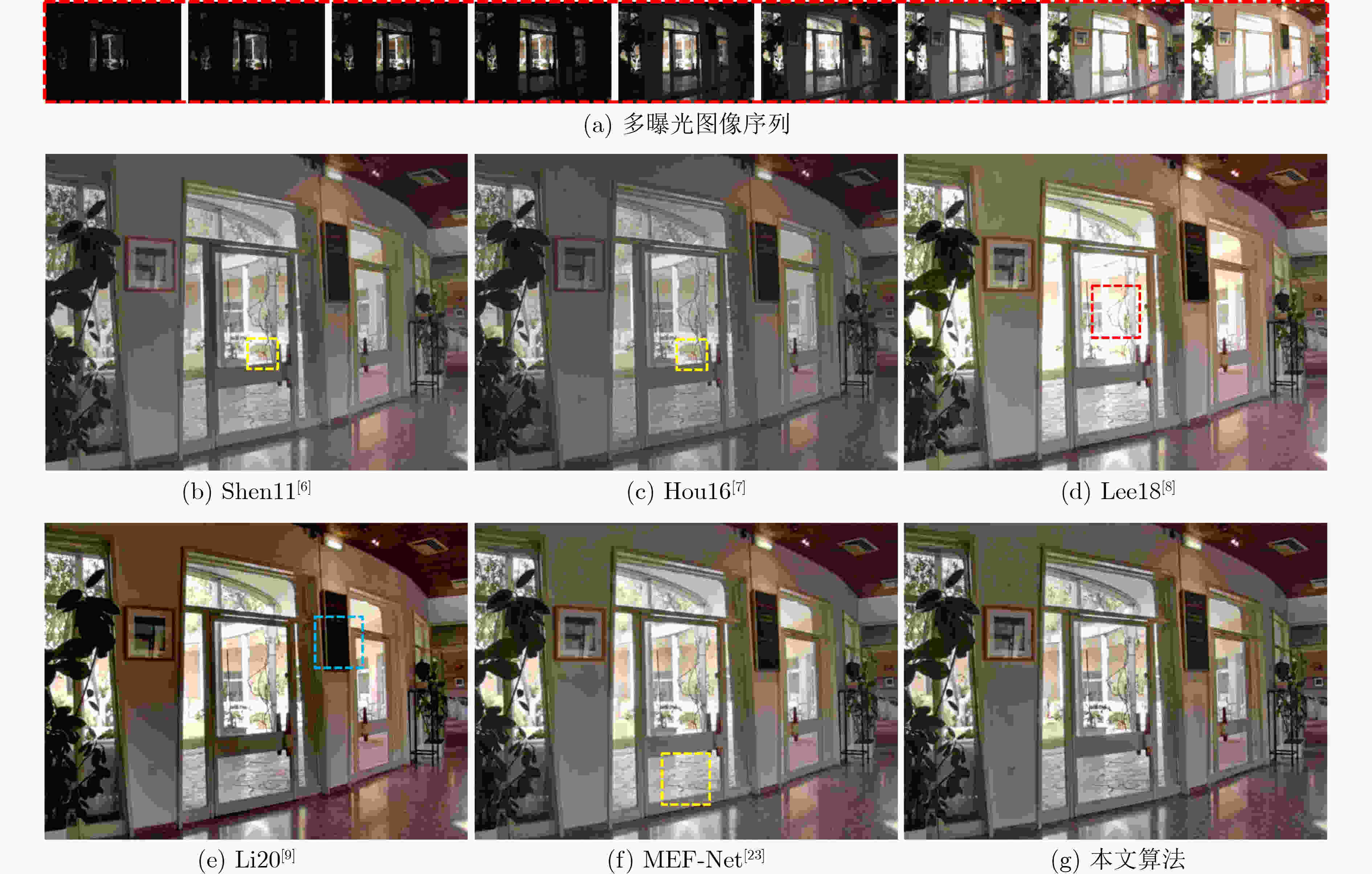

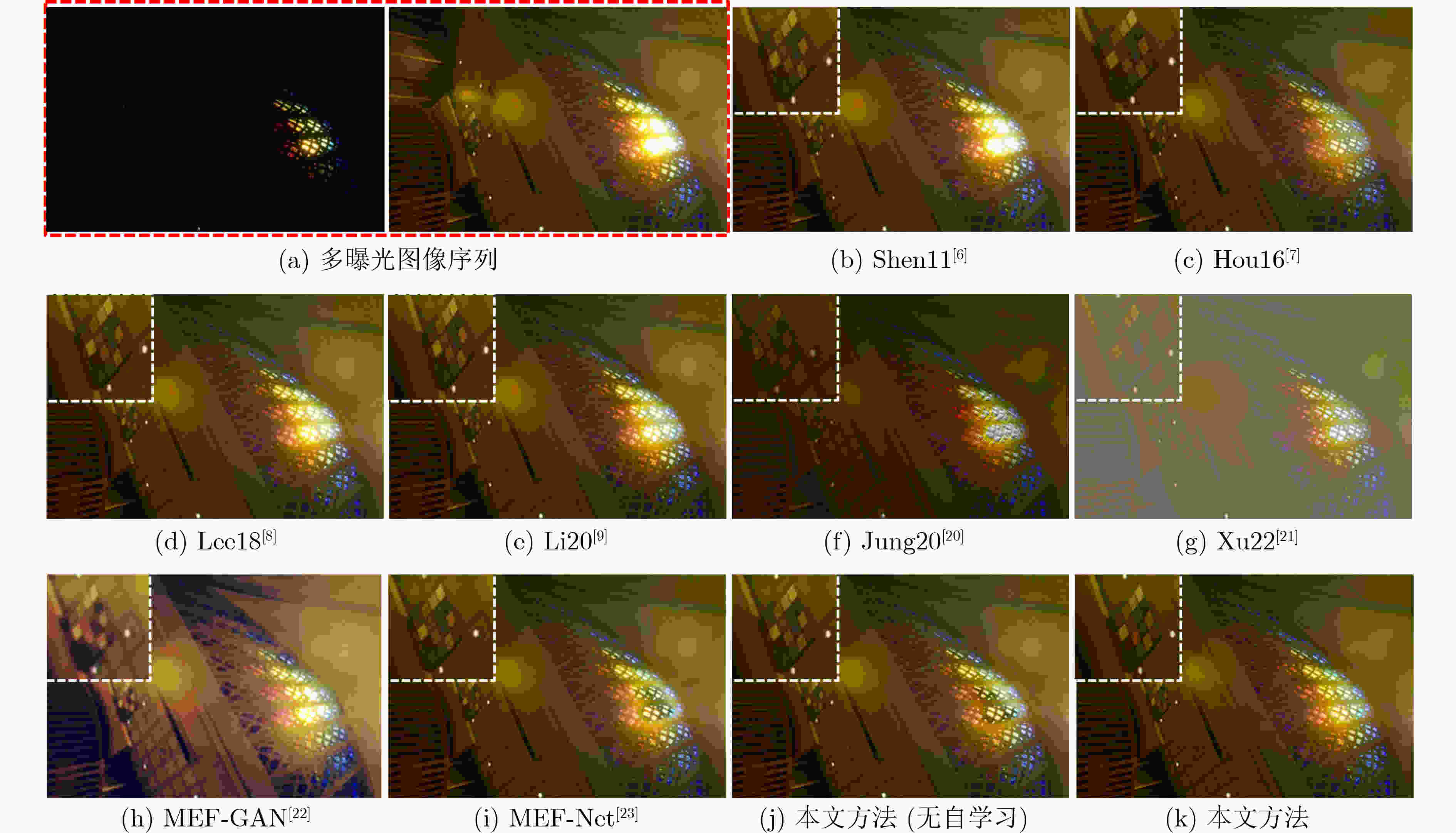

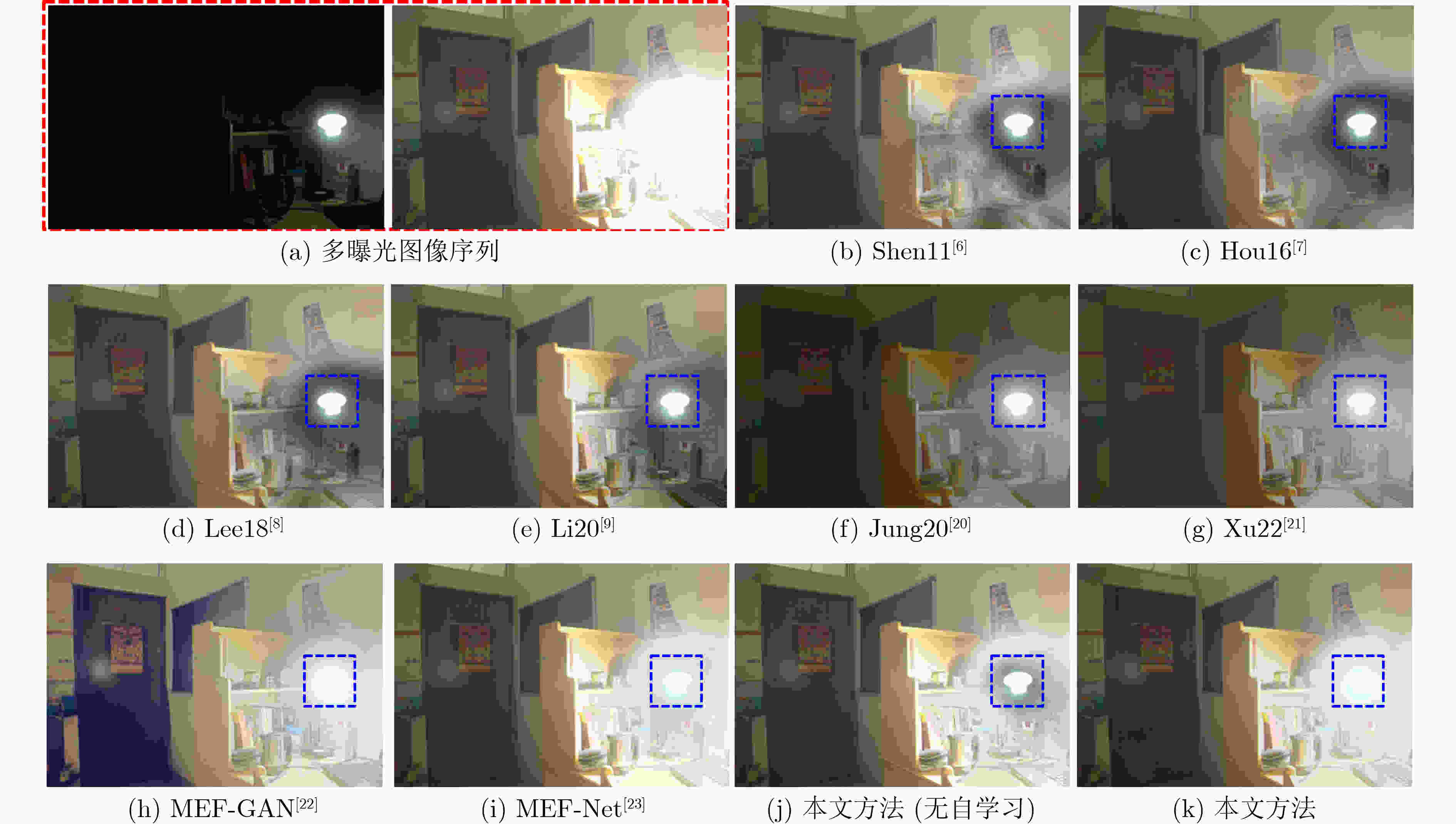

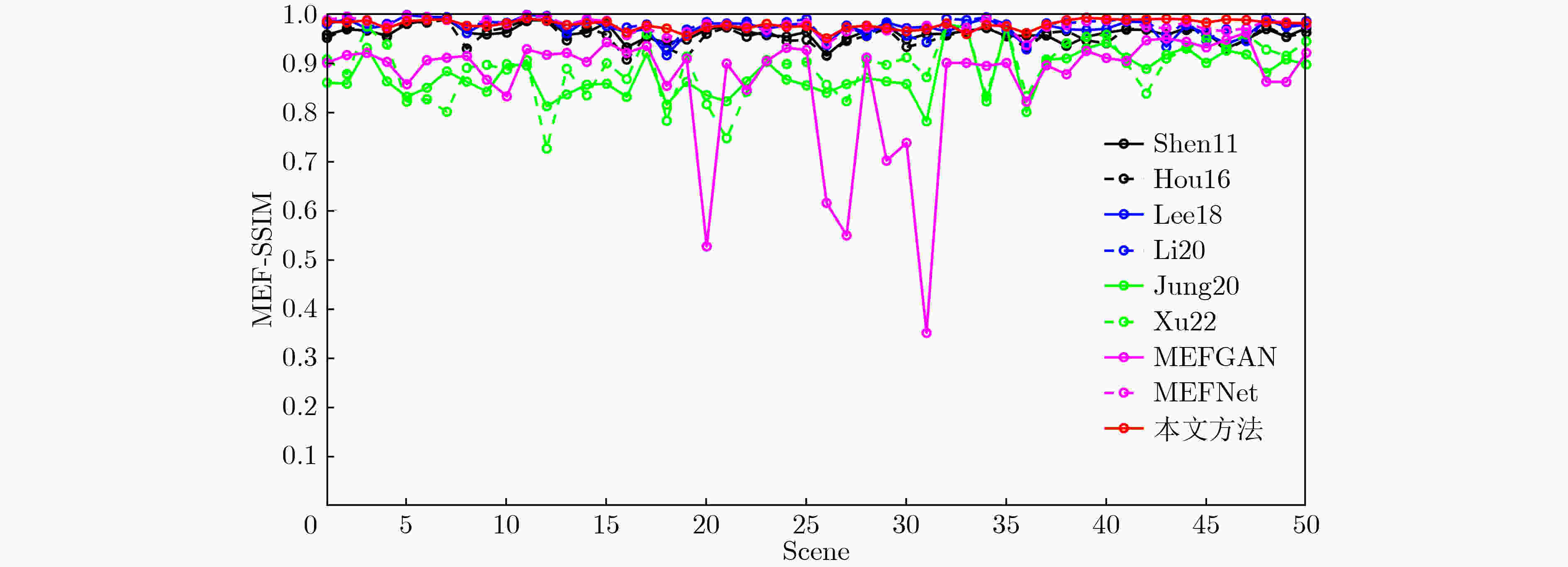

SHEN Rui, CHENG I, SHI Jianbo, et al. Generalized random walks for fusion of multi-exposure images[J]. IEEE Transactions on Image Processing, 2011, 20(12): 3634–3646. doi: 10.1109/TIP.2011.2150235

|

| [7] |

HOU Xinglin, LUO Haibo, QI Feng, et al. Guided filter-based fusion method for multiexposure images[J]. Optical Engineering, 2016, 55(11): 113101. doi: 10.1117/1.OE.55.11.113101

|

| [8] |

LEE S H, PARK J S, and CHO N I. A multi-exposure image fusion based on the adaptive weights reflecting the relative pixel intensity and global gradient[C]. 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 2018: 1737–1741.

|

| [9] |

LI Hui, MA Kede, YONG Hongwei, et al. Fast multi-scale structural patch decomposition for multi-exposure image fusion[J]. IEEE Transactions on Image Processing, 2020, 29: 5805–5816. doi: 10.1109/TIP.2020.2987133

|

| [10] |

HOU Xinglin, ZHANG Junchao, and ZHOU Peipei. Reconstructing a high dynamic range image with a deeply unsupervised fusion model[J]. IEEE Photonics Journal, 2021, 13(2): 3900210. doi: 10.1109/JPHOT.2021.3058740

|

| [11] |

JIANG Zetao and HE Yuting. Infrared and visible image fusion method based on convolutional auto-encoder and residual block[J]. Acta Optica Sinica, 2019, 39(10): 1015001. doi: 10.3788/AOS201939.1015001

|

| [12] |

TANG Chaoying, PU Shiliang, YE Pengzhao, et al. Fusion of low-illuminance visible and near-infrared images based on convolutional neural networks[J]. Acta Optica Sinica, 2020, 40(16): 1610001. doi: 10.3788/AOS202040.1610001

|

| [13] |

LI Hui and WU Xiaojun. DenseFuse: A fusion approach to infrared and visible images[J]. IEEE Transactions on Image Processing, 2019, 28(5): 2614–2623. doi: 10.1109/TIP.2018.2887342

|

| [14] |

NIE Xixi, XIAO Bin, BI Xiuli, et al. Multi-focus image fusion algorithm based on super pixel level convolutional neural network[J]. Journal of Electronics & Information Technology, 2021, 43(4): 965–973. doi: 10.11999/JEIT191053

|

| [15] |

MA Boyuan, ZHU Yu, YIN Xiang, et al. SESF-Fuse: An unsupervised deep model for multi-focus image fusion[J]. Neural Computing and Applications, 2021, 33(11): 5793–5804. doi: 10.1007/s00521-020-05358-9

|

| [16] |

ZHANG Junchao, SHAO Jianbo, CHEN Jianlai, et al. PFNet: An unsupervised deep network for polarization image fusion[J]. Optics Letters, 2020, 45(6): 1507–1510. doi: 10.1364/OL.384189

|

| [17] |

ZHANG Junchao, SHAO Jianbo, CHEN Jianlai, et al. Polarization image fusion with self-learned fusion strategy[J]. Pattern Recognition, 2021, 118: 108045. doi: 10.1016/j.patcog.2021.108045

|

| [18] |

CAI Jianrui, GU Shuhang, and ZHANG Lei. Learning a deep single image contrast enhancer from multi-exposure images[J]. IEEE Transactions on Image Processing, 2018, 27(4): 2049–2062. doi: 10.1109/TIP.2018.2794218

|

| [19] |

PRABHAKAR K R, SRIKAR V S, and BABU R V. DeepFuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs[C]. 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 4724–4732.

|

| [20] |

JUNG H, KIM Y, JANG H, et al. Unsupervised deep image fusion with structure tensor representations[J]. IEEE Transactions on Image Processing, 2020, 29: 3845–3858. doi: 10.1109/TIP.2020.2966075

|

| [21] |

XU Han, MA Jiayi, JIANG Junjun, et al. U2Fusion: A unified unsupervised image fusion network[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(1): 502–518. doi: 10.1109/TPAMI.2020.3012548

|

| [22] |

XU Han, MA Jiayi, and ZHANG Xiaoping. MEF-GAN: Multi-exposure image fusion via generative adversarial networks[J]. IEEE Transactions on Image Processing, 2020, 29: 7203–7216. doi: 10.1109/TIP.2020.2999855

|

| [23] |

MA Kede, DUANMU Zhengfang, ZHU Hanwei, et al. Deep guided learning for fast multi-exposure image fusion[J]. IEEE Transactions on Image Processing, 2020, 29: 2808–2819. doi: 10.1109/TIP.2019.2952716

|

| [24] |

YAN Qingsen, GONG Dong, SHI Qinfeng, et al. Attention-guided network for ghost-free high dynamic range imaging[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 1751–1760.

|

| [25] |

LIU Zhen, LIN Wenjie, LI Xinpeng, et al. ADNet: Attention-guided deformable convolutional network for high dynamic range imaging[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, USA, 2021: 463–470.

|

| [26] |

SHARIF S M A, NAQVI R A, BISWAS M, et al. A two-stage deep network for high dynamic range image reconstruction[C]. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, USA, 2021: 550–559.

|

| [27] |

HE Kaiming, SUN Jian, and TANG Xiaoou. Guided image filtering[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(6): 1397–1409. doi: 10.1109/TPAMI.2012.213

|

| [28] |

MA Kede, ZENG Kai, and WANG Zhou. Perceptual quality assessment for multi-exposure image fusion[J]. IEEE Transactions on Image Processing, 2015, 24(11): 3345–3356. doi: 10.1109/TIP.2015.2442920

|
