Paraphrase Based Data Augmentation For Chinese-English Medical Machine Translation


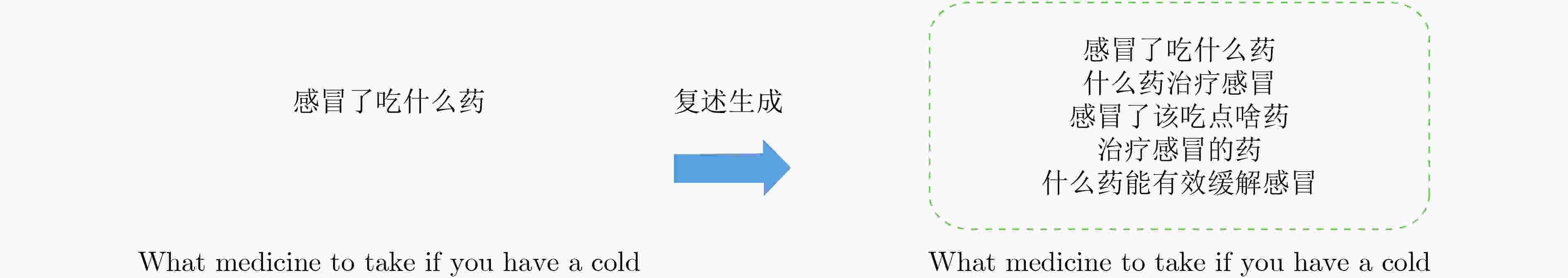

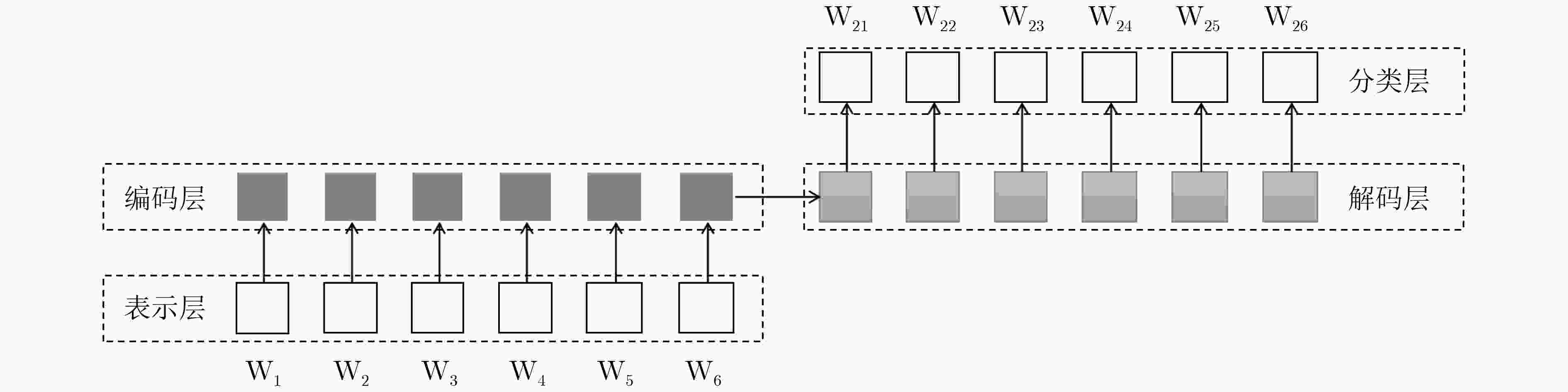

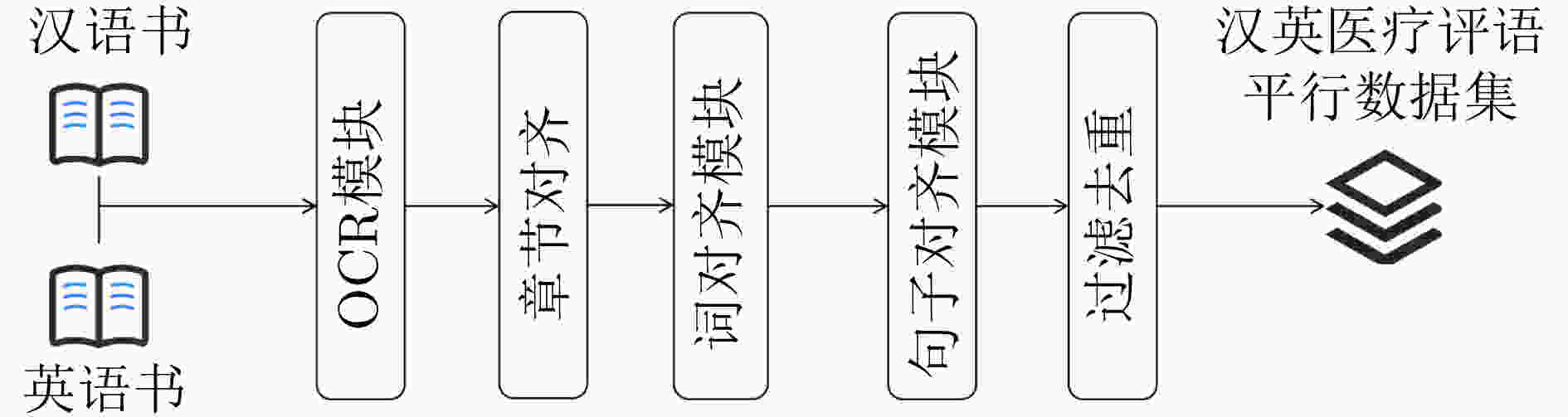

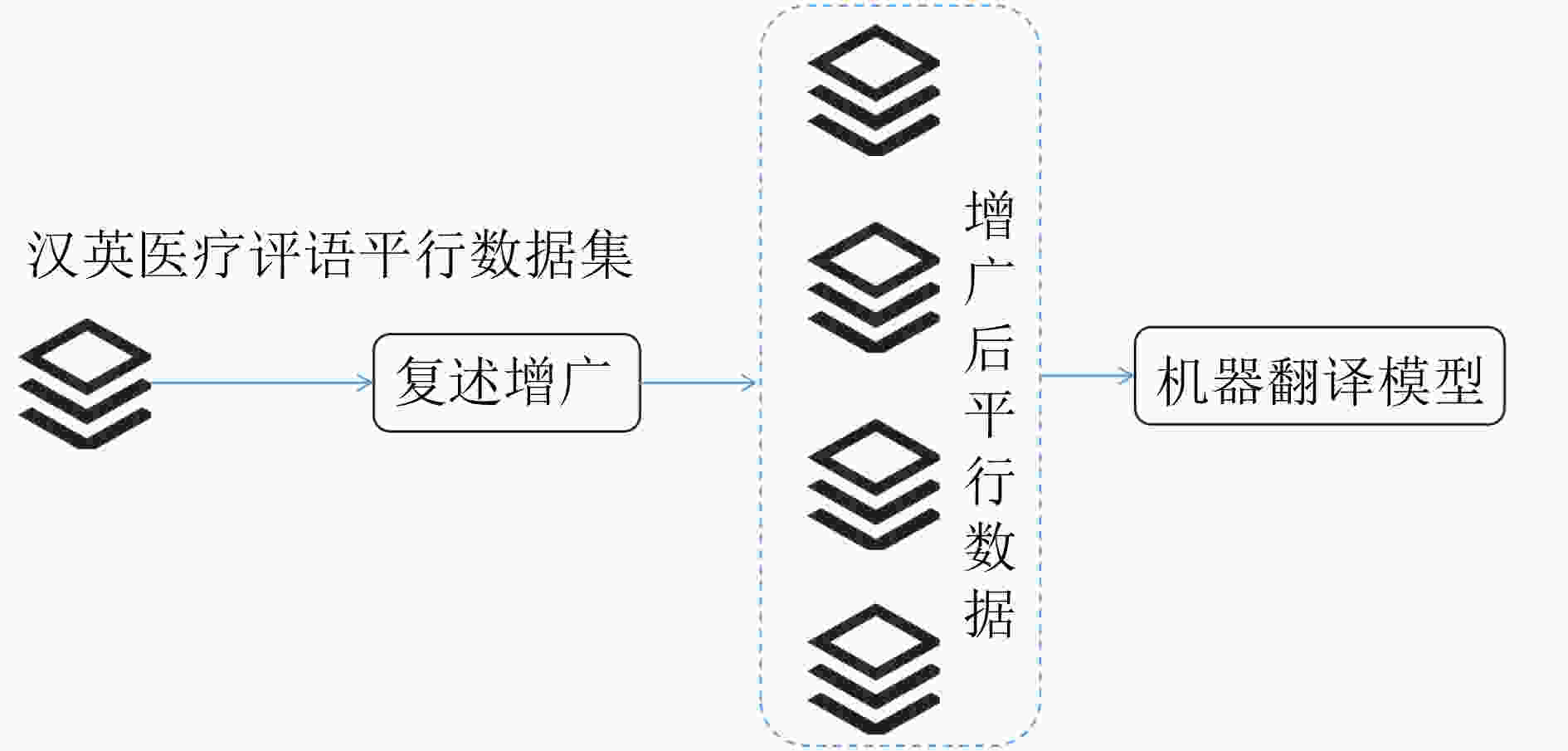

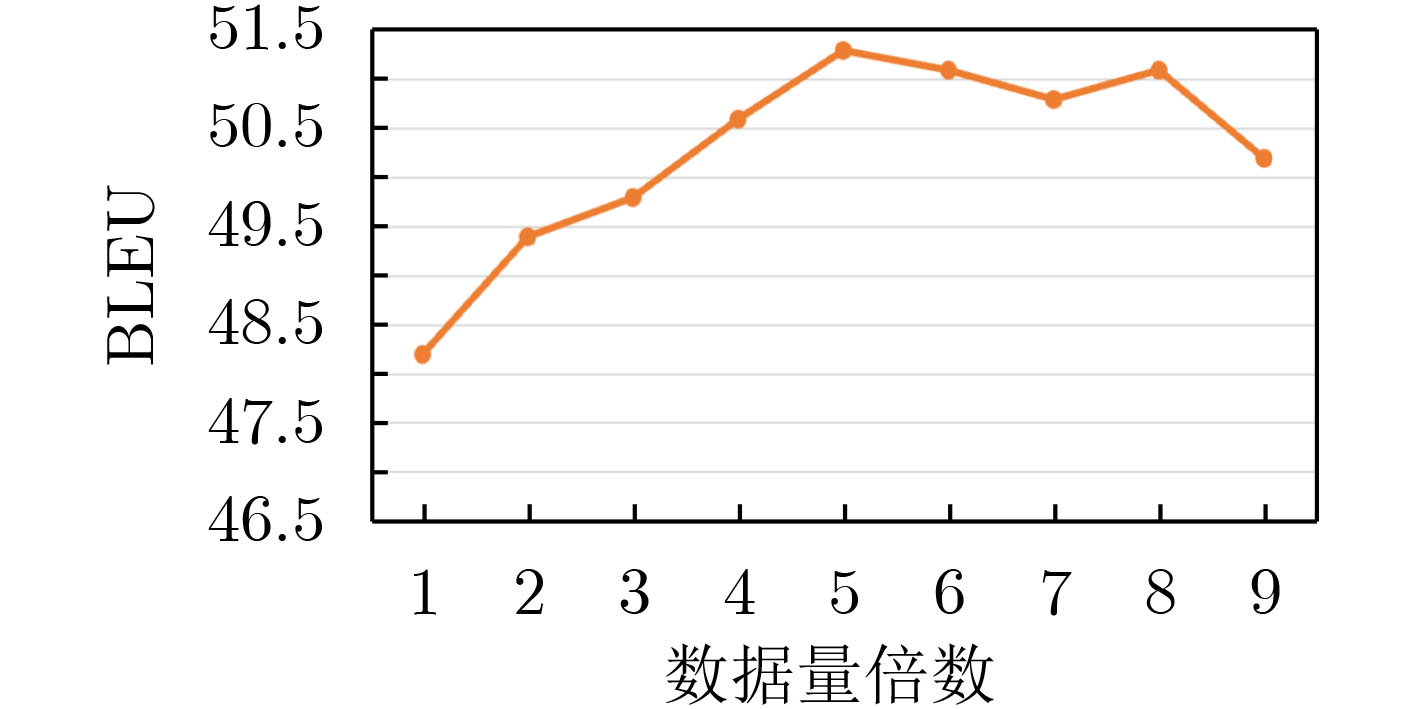

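Abstract (translated from the Chinese version): Medical machine translation is of great value for applications such as cross-border healthcare and the translation of medical literature. Chinese-English neural machine translation has advanced considerably thanks to the strong modeling capacity of deep learning and to large-scale bilingual parallel data, and neural machine translation usually depends on large numbers of parallel sentence pairs to train a translation model. At present, Chinese-English translation data come mainly from domains such as news and policy; medical-domain data are scarce, so Chinese-English machine translation performs poorly on medical text. To address the shortage of training data in this vertical domain, this paper proposes using paraphrase generation to augment Chinese-English medical machine translation data and so enlarge the training corpus. Experiments with several mainstream neural machine translation models show that augmenting the data through paraphrase generation effectively improves translation performance, with relative BLEU improvements of more than 6% on mainstream models such as RNNSearch and Transformer (Table 3), which verifies the effectiveness of paraphrase augmentation for domain-specific machine translation. In addition, large-scale pre-trained language models such as MT5 can further improve translation performance.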


Keywords:

- Neural machine translation

- Chinese-English translation

- Paraphrase generation

- Data augmentation

- Large-scale pre-trained language model

Abstract: Medical machine translation is of great value for applications such as cross-border healthcare and medical literature translation. Chinese-to-English neural machine translation has made great progress thanks to the strong modeling capacity of deep learning and large-scale bilingual parallel data. Neural machine translation usually relies on large-scale parallel sentence pairs to train translation models. At present, Chinese-English translation data come mainly from domains such as news and policy. Because parallel data in the medical domain are scarce, Chinese-to-English machine translation performs unsatisfactorily on medical text. To address this shortage of parallel training data, this paper proposes a paraphrase-based data augmentation mechanism that enlarges the Chinese-English medical training corpus. Experimental results on a variety of neural machine translation models show that paraphrase-based augmentation effectively improves medical machine translation, yielding consistent improvements on mainstream models such as RNNSearch and Transformer, which verifies the effectiveness of the method for domain-specific machine translation. Performance can be improved further by using large-scale pre-trained language models such as MT5.
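The abstract describes the augmentation only at a high level. A minimal sketch of the general idea follows, under stated assumptions: the Chinese source side is paraphrased and each paraphrase is paired with the unchanged English target. The function names, the toy lookup table, and the choice of which side to paraphrase are all hypothetical; the paper's actual paraphrase generators (BiLSTM, Transformer, Bert, MT5) would take the place of `toy_paraphrase`.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]  # (Chinese source sentence, English target sentence)


def augment_with_paraphrases(
    pairs: Iterable[Pair],
    paraphrase: Callable[[str], List[str]],
) -> List[Pair]:
    """Return the original pairs plus one new pair per source-side paraphrase.

    Assumption: a paraphrase keeps the meaning of the source sentence, so the
    original English target is reused unchanged for every paraphrase.
    """
    augmented: List[Pair] = []
    seen = set()
    for src, tgt in pairs:
        # Keep the original sentence first, then add its paraphrases.
        for candidate in [src, *paraphrase(src)]:
            key = (candidate, tgt)
            if candidate and key not in seen:  # skip empty strings and duplicates
                seen.add(key)
                augmented.append(key)
    return augmented


def toy_paraphrase(sentence: str) -> List[str]:
    """Hypothetical stand-in for a trained paraphrase generation model."""
    table = {"患者头痛": ["病人头痛", "患者头疼"]}
    return table.get(sentence, [])


corpus = [
    ("患者头痛", "The patient has a headache."),
    ("患者发热", "The patient has a fever."),
]
augmented = augment_with_paraphrases(corpus, toy_paraphrase)
for src, tgt in augmented:
    print(src, "->", tgt)
```

In this toy run the two original pairs grow to four, because only the first sentence has paraphrases in the lookup table. A real pipeline would probably also filter paraphrases that drift in meaning before adding them to the training set.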

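Table 1 Chinese-English medical machine translation dataset

| Training set | Validation set | Test set | Average length (Chinese characters) | Average length (English words) |
| --- | --- | --- | --- | --- |
| 85000 | 7500 | 7500 | 14.3 | 11.2 |

Table 2 Model parameter settings

| Machine translation model | Parameter | Value |
| --- | --- | --- |
| Seq2Seq | Embedding size | 300 |
| | Beam size | 50 |
| | Batch size | 64 |
| | Sentence length | 256 |
| | Learning rate | 0.01 |
| | Optimizer | Adam |
| | RNN cell | LSTM |
| | Dropout | 0.2 |
| RNNSearch | Embedding size | 300 |
| | Beam size | 50 |
| | Batch size | 64 |
| | Sentence length | 256 |
| | Learning rate | 0.01 |
| | Optimizer | Adam |
| | RNN cell | LSTM |
| | Dropout | 0.2 |
| Transformer | Embedding size | 300 |
| | Beam size | 50 |
| | Batch size | 64 |
| | Sentence length | 256 |
| | Learning rate | 0.1 |
| | Optimizer | Adam |
| | RNN cell | LSTM |
| | Dropout | 0.2 |
| | Num head | 8 |

Table 3 Chinese-English medical machine translation results

| Machine translation model | Data augmentation model | BLEU | Improvement (%) |
| --- | --- | --- | --- |
| Seq2Seq | – | 31.99 | – |
| | WordRep | 32.12 | 0.41 |
| | BiLSTM-para | 33.45 | 4.56 |
| | Transformer-para | 35.23 | 10.13 |
| | Bert-para | 35.28 | 10.28 |
| | MT5-para | 35.74 | 11.72 |
| RNNSearch | – | 41.28 | – |
| | WordRep | 40.98 | -0.73 |
| | BiLSTM-para | 43.25 | 4.77 |
| | Transformer-para | 44.12 | 6.88 |
| | Bert-para | 44.67 | 8.21 |
| | MT5-para | 44.97 | 8.94 |
| Transformer | – | 48.21 | – |
| | WordRep | 48.29 | 0.17 |
| | BiLSTM-para | 49.86 | 3.42 |
| | Transformer-para | 51.32 | 6.45 |
| | Bert-para | 51.36 | 6.53 |
| | MT5-para | 51.97 | 7.80 |

Table 4 Example of Chinese-English medical machine translation

| System | Text |
| --- | --- |
| Chinese source sentence | 患者男,31岁,因中重度反复头痛18天入院,表现为枕部至双额部逐渐发作,呈搏动性,发作持续超过4h,并持续加重。 |
| Baidu | The patient, a 31 year old male, was hospitalized for 18 days due to moderate and severe recurrent headache. He showed a gradual attack from the occipital part to the double frontal part, which was pulsatile. The attack lasted for more than 4 hours and continued to worsen. |
| Google | A 31-year-old male patient was admitted to the hospital for 18 days with moderate to severe recurrent headaches. The manifestations were pulsatile attacks from the occiput to the forehead. The attacks lasted more than 4 hours and gradually worsened. |
| This paper | A 31-year-old man was admitted with an 18-day history of a moderate to severe recurrent headache, presenting gradual onset from occipital to bifrontal regions, pulsatile, in episodes lasting beyond four hours, and progressive worsening. |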
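The improvement column in Table 3 appears to be the relative BLEU gain over each model's unaugmented baseline, expressed as a percentage. The short check below reproduces several of the reported values from the BLEU scores in the table; the function name is illustrative, and rounding to two decimals is an assumption that matches the figures shown.

```python
def relative_improvement(baseline: float, augmented: float) -> float:
    """Relative BLEU gain over the baseline, in percent, rounded to 2 decimals."""
    return round((augmented - baseline) / baseline * 100, 2)


# (baseline BLEU, augmented BLEU) pairs taken from Table 3
rows = {
    "Seq2Seq + MT5-para": (31.99, 35.74),
    "RNNSearch + WordRep": (41.28, 40.98),
    "RNNSearch + MT5-para": (41.28, 44.97),
    "Transformer + MT5-para": (48.21, 51.97),
}
for name, (base, aug) in rows.items():
    print(f"{name}: {aug - base:+.2f} BLEU, {relative_improvement(base, aug):+.2f}%")
```

Read this way, the strongest Transformer configuration gains 3.76 BLEU in absolute terms, which corresponds to the 7.80% relative improvement listed in the table.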

