Multi-degree-of-freedom Intelligent Ultrasound Robot System Based on Reinforcement Learning


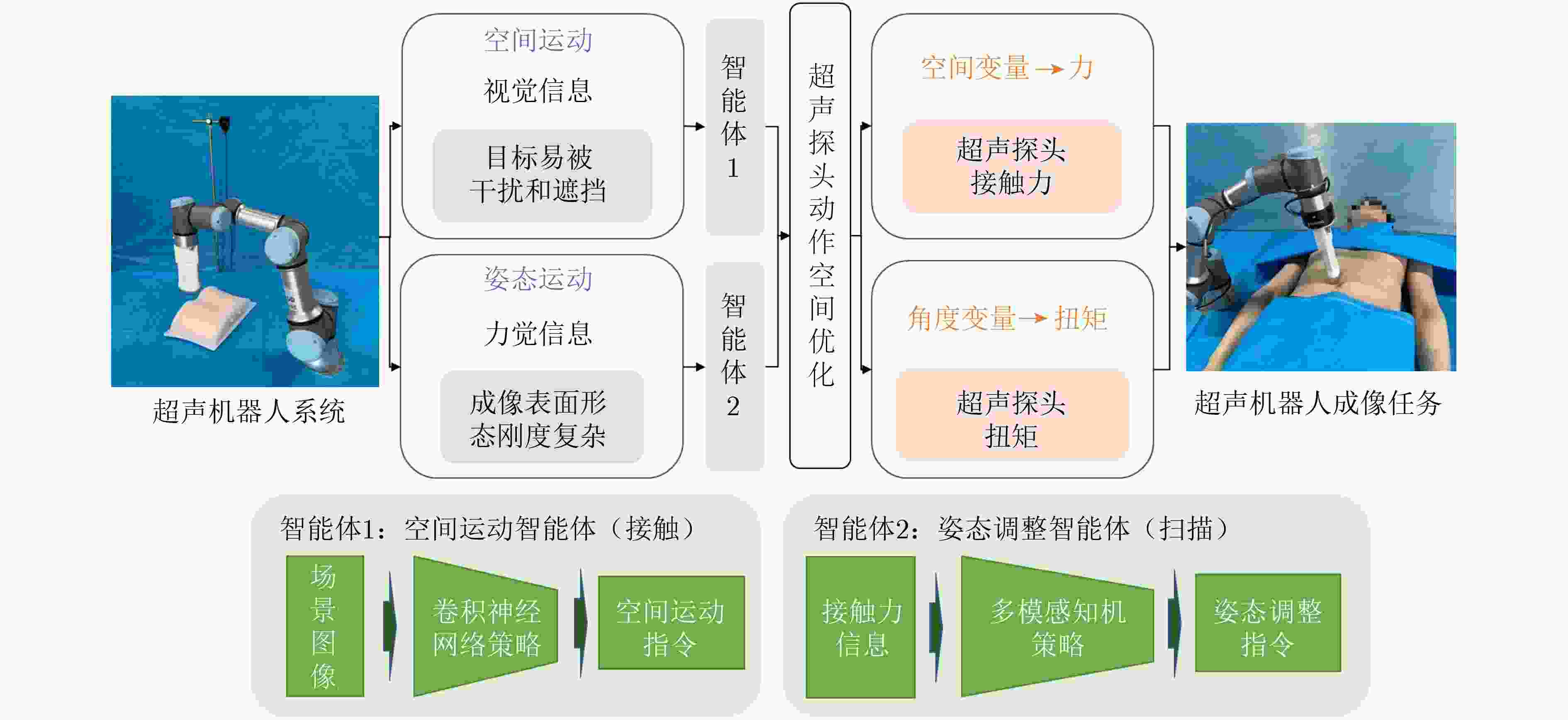

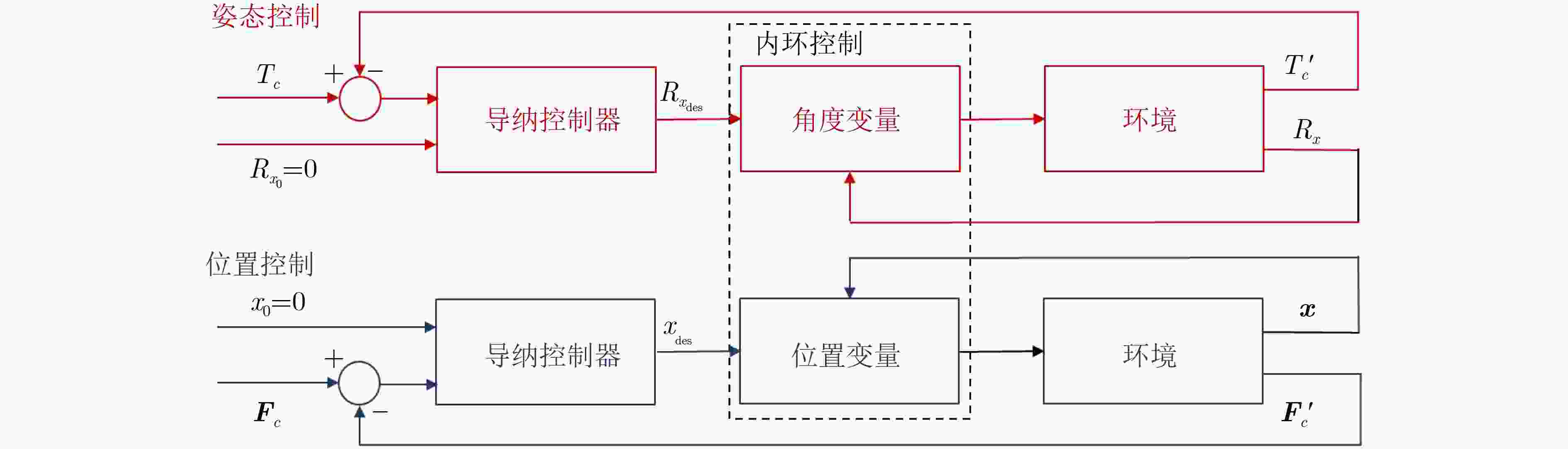

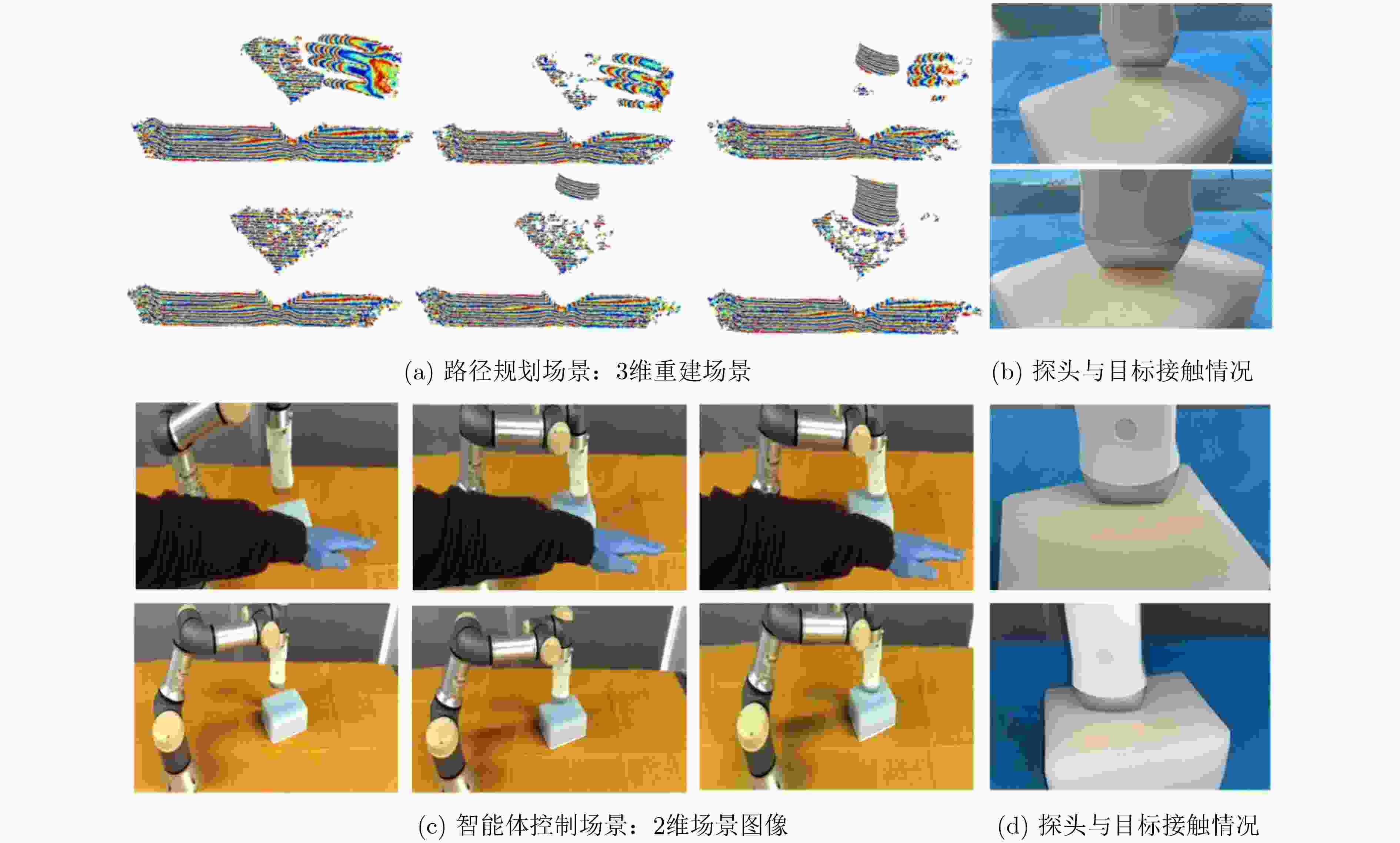

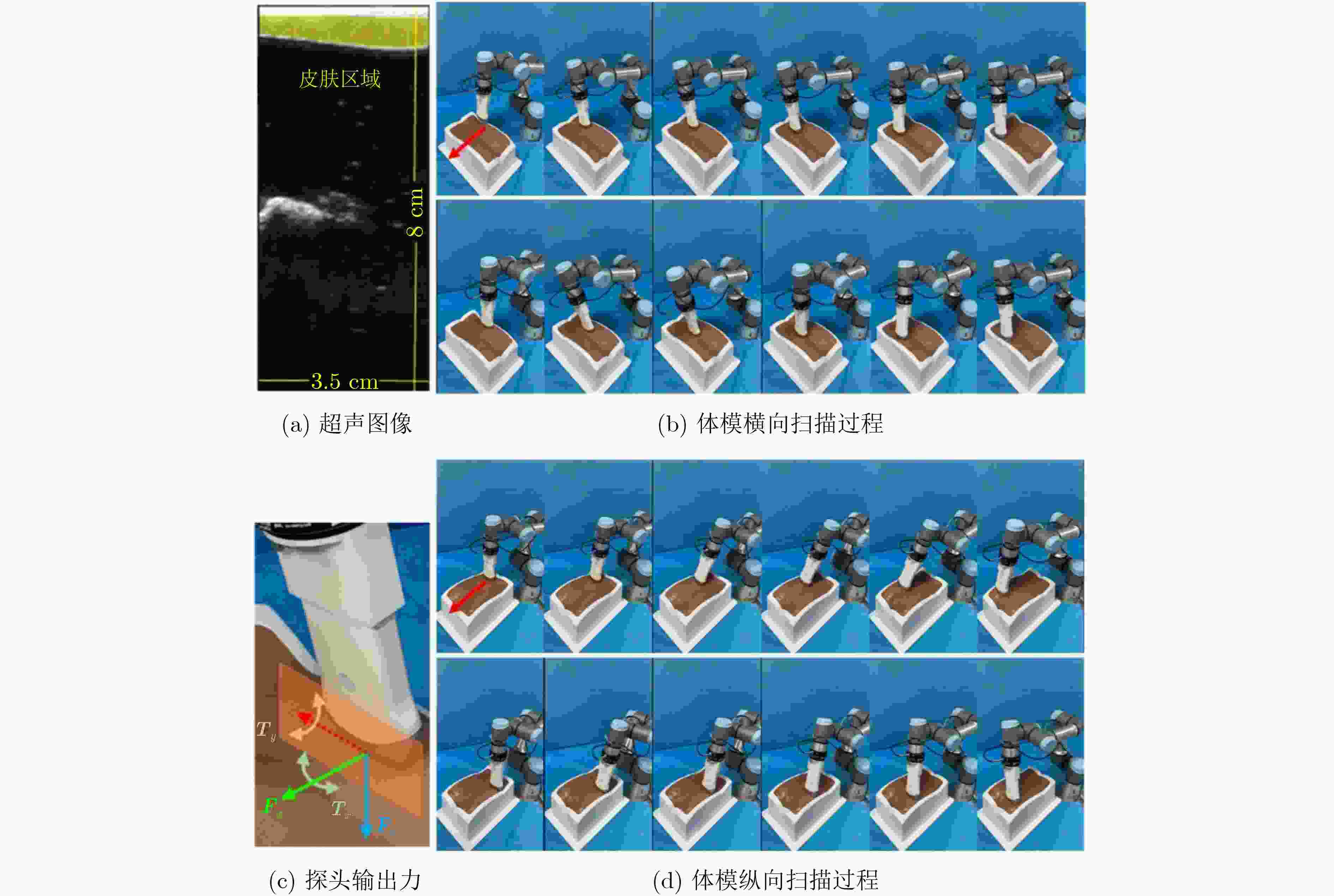

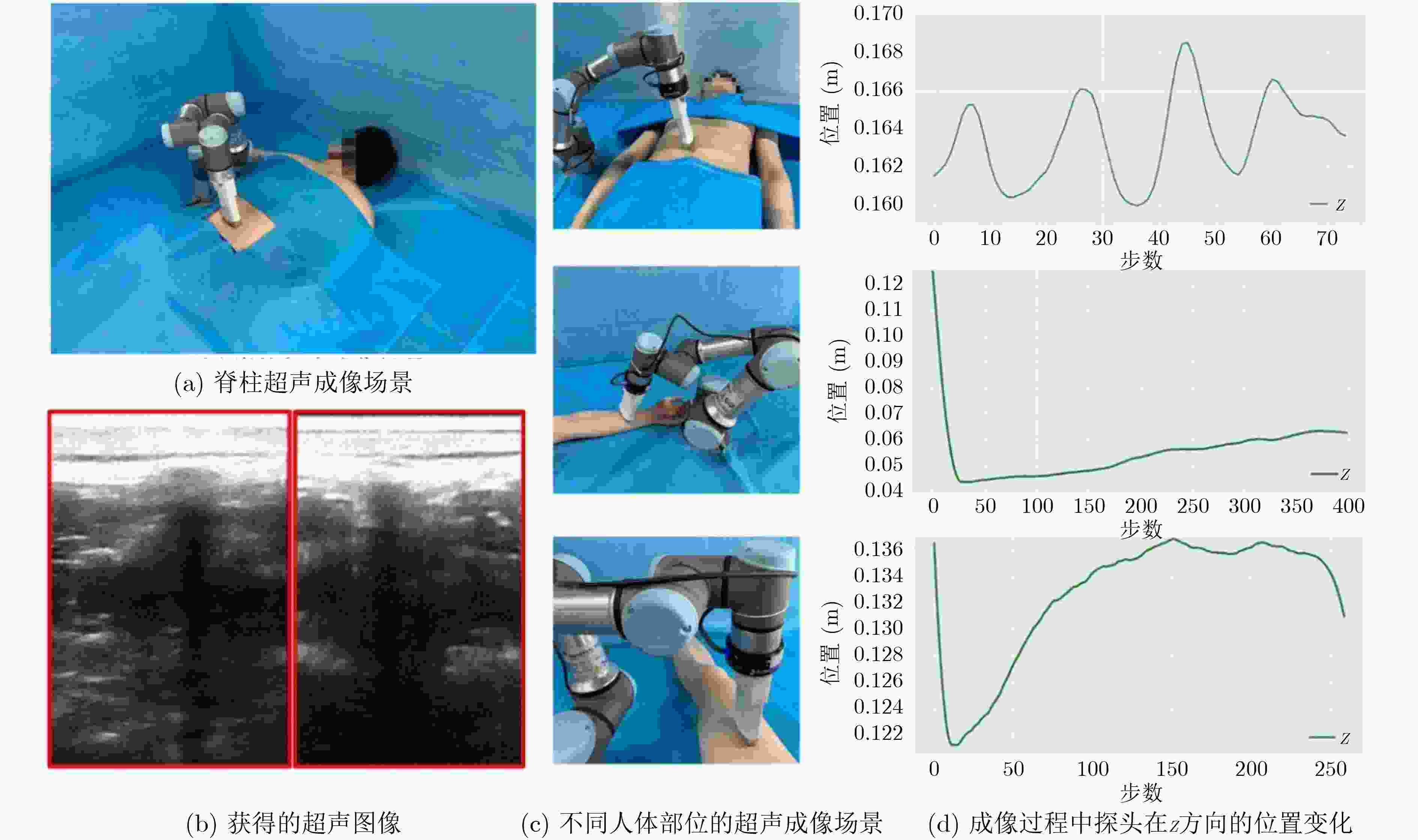

Abstract: Ultrasound robots, a typical class of medical robot, can improve the efficiency of ultrasound imaging in assisted diagnosis and surgical guidance and reduce the fatigue caused by prolonged manual operation. To improve the imaging efficiency and stability of ultrasound robots in complex dynamic environments, this paper proposes a multi-degree-of-freedom imaging control method and system based on deep reinforcement learning. First, an imaging action decision method based on proximal policy optimization generates the spatial motion and pose decisions of the ultrasound probe in real time, so that imaging actions for the target are produced continuously in a dynamic environment. Second, because the imaging task takes place in a complex, flexible environment, a motion-space optimization strategy is built on top of the robot's autonomous motion decisions. Together, these enable automatic robotic ultrasound imaging of different parts of the human body in complex dynamic environments without parameter adjustment.
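The abstract names proximal policy optimization as the basis of the action decision method but gives no formulation. As a rough illustration only, the standard clipped surrogate objective from the PPO literature can be sketched as below; the function name, the `clip_eps` value of 0.2, and the use of NumPy are assumptions made for this sketch and are not taken from the paper.

```python
import numpy as np


def ppo_clip_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Clipped surrogate objective of PPO (to be maximized).

    new_logp / old_logp: log-probabilities of the sampled probe actions under
    the current and the data-collecting policy (hypothetical inputs).
    advantages: advantage estimates for the same actions.
    clip_eps: clipping range; 0.2 is a common default, assumed here.
    """
    new_logp = np.asarray(new_logp, dtype=float)
    old_logp = np.asarray(old_logp, dtype=float)
    advantages = np.asarray(advantages, dtype=float)

    # Probability ratio between the current and the old policy.
    ratio = np.exp(new_logp - old_logp)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the element-wise minimum removes the incentive to move the
    # policy far from the one that collected the data.
    return float(np.mean(np.minimum(unclipped, clipped)))
```

With a positive advantage, a ratio above `1 + clip_eps` earns no extra credit, which is what keeps each policy update small. How the paper maps ultrasound images and force readings to actions and rewards is not stated in this section.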


Key words:

- Ultrasound imaging

- Ultrasound robotics

- Soft control

- Reinforcement learning


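Table 1. Imaging success rates of the reinforcement learning method and the path planning method on different flexible phantoms

| Method | Phantom motion | Environment | Phantom 1 success rate (%) | Phantom 2 success rate (%) | Phantom 3 success rate (%) |
| --- | --- | --- | --- | --- | --- |
| Reinforcement learning | Static | No disturbance | 96.7 | 93.3 | 95.0 |
| Reinforcement learning | Static | With disturbance | 86.7 | 76.7 | 85.0 |
| Reinforcement learning | Dynamic | No disturbance | 93.3 | 90.0 | 91.7 |
| Reinforcement learning | Dynamic | With disturbance | 81.7 | 71.6 | 80.0 |
| Path planning | Static | No disturbance | 100.0 | 93.3 | 93.3 |
| Path planning | Static | With disturbance | 68.3 | 70.0 | 70.0 |
| Path planning | Dynamic | No disturbance | 73.3 | 73.3 | 75.0 |
| Path planning | Dynamic | With disturbance | 61.7 | 58.3 | 53.3 |

Table 2. Contact forces and torques on the ultrasound probe in different directions during autonomous robotic ultrasound imaging

| No. | Force x (N) | Force y (N) | Force z (N) | Torque Rx (N·m) | Torque Ry (N·m) |
| --- | --- | --- | --- | --- | --- |
| 1 | 0.51±0.26 | 0.88±1.45 | –7.17±3.31 | 0.035±0.006 | 0.138±0.0235 |
| 2 | 0.48±0.33 | 1.08±0.58 | –8.31±2.83 | –0.059±0.003 | 0.084±0.0175 |
| 3 | 0.73±0.49 | 0.77±1.51 | –8.32±2.77 | 0.036±0.111 | 0.133±0.0912 |
| 4 | 0.43±0.52 | 1.04±0.64 | –8.78±2.21 | –0.063±0.087 | 0.076±0.0201 |

Table 3. Skin region area in images from autonomous robotic scanning and manual scanning

| Scan | Area (cm²) | Area ratio (%) |
| --- | --- | --- |
| Robot | 3.12±0.23 | 11.2±1.24 |
| Manual | 3.44±0.39 | 13.4±0.59 |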
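To make the comparison in Table 1 easier to read, the sketch below averages the reported success rates by method and condition. The values are transcribed from Table 1; the dictionary layout, the short labels (`"RL"`, `"PP"`, `"clean"`, `"disturbed"`), and the unweighted averaging are choices made for this illustration and are not summary statistics reported by the paper.

```python
from statistics import mean

# Success rates (%) transcribed from Table 1.
# Key: (method, phantom motion, environment); value: phantoms 1, 2, 3.
SUCCESS = {
    ("RL", "static", "clean"): (96.7, 93.3, 95.0),
    ("RL", "static", "disturbed"): (86.7, 76.7, 85.0),
    ("RL", "dynamic", "clean"): (93.3, 90.0, 91.7),
    ("RL", "dynamic", "disturbed"): (81.7, 71.6, 80.0),
    ("PP", "static", "clean"): (100.0, 93.3, 93.3),
    ("PP", "static", "disturbed"): (68.3, 70.0, 70.0),
    ("PP", "dynamic", "clean"): (73.3, 73.3, 75.0),
    ("PP", "dynamic", "disturbed"): (61.7, 58.3, 53.3),
}


def mean_success(method, motion=None, env=None):
    """Unweighted mean success rate (%) over all matching table cells.

    Passing None for motion or env averages over that dimension.
    """
    rows = [
        rates
        for (m, mo, e), rates in SUCCESS.items()
        if m == method and motion in (None, mo) and env in (None, e)
    ]
    return mean(x for row in rows for x in row)


if __name__ == "__main__":
    for method in ("RL", "PP"):
        print(method, "overall:", round(mean_success(method), 1))
        print(method, "dynamic + disturbed:",
              round(mean_success(method, "dynamic", "disturbed"), 1))
```

Averaged this way, reinforcement learning comes out at about 87% overall against about 74% for path planning. The gap is widest when the phantom moves and disturbances are present, while path planning is level or slightly ahead only in the static, undisturbed case.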

