Pedestrian Tracking Algorithm Based on Convolutional Block Attention Module and Anchor-free Detection Network

-

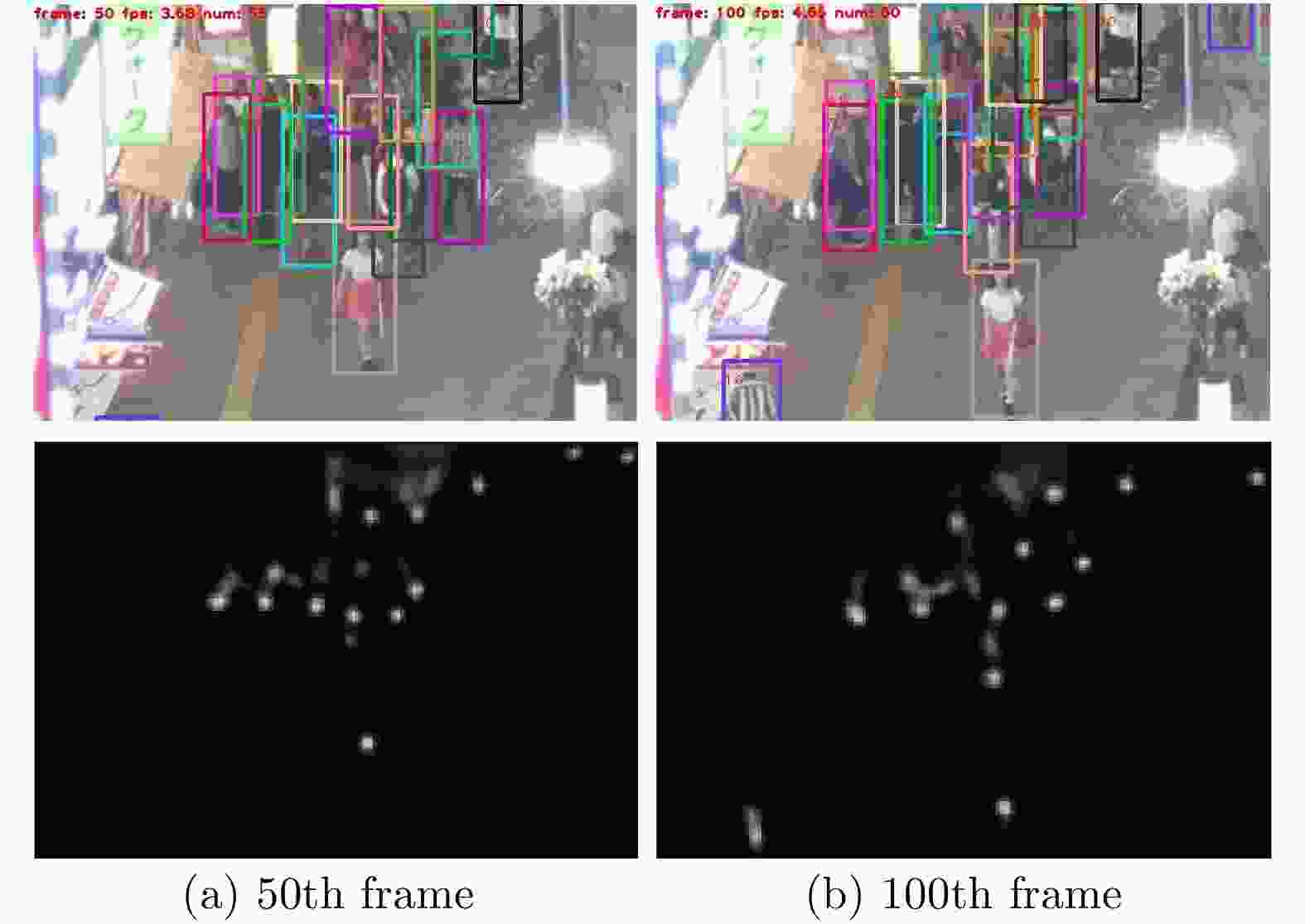

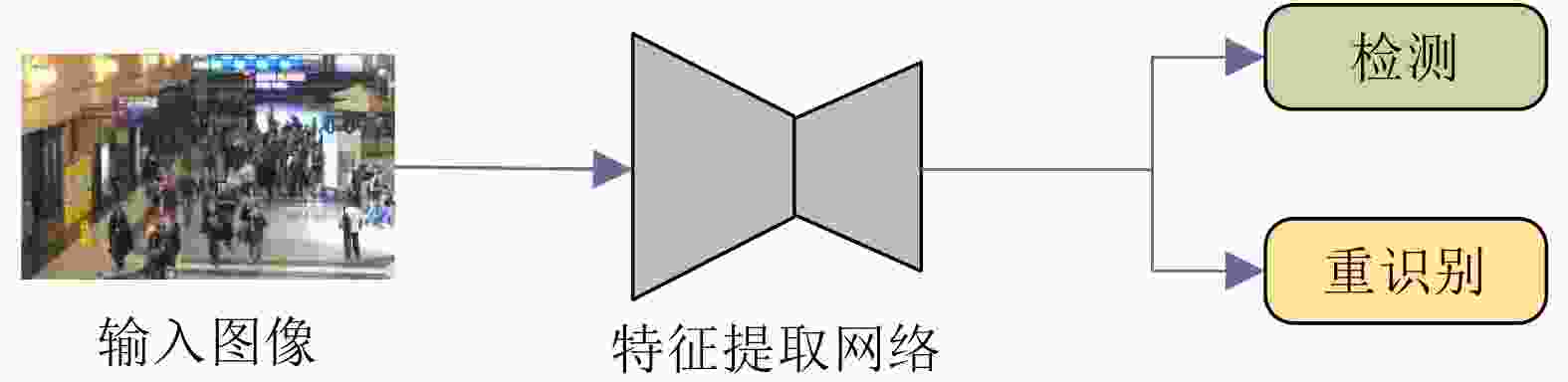

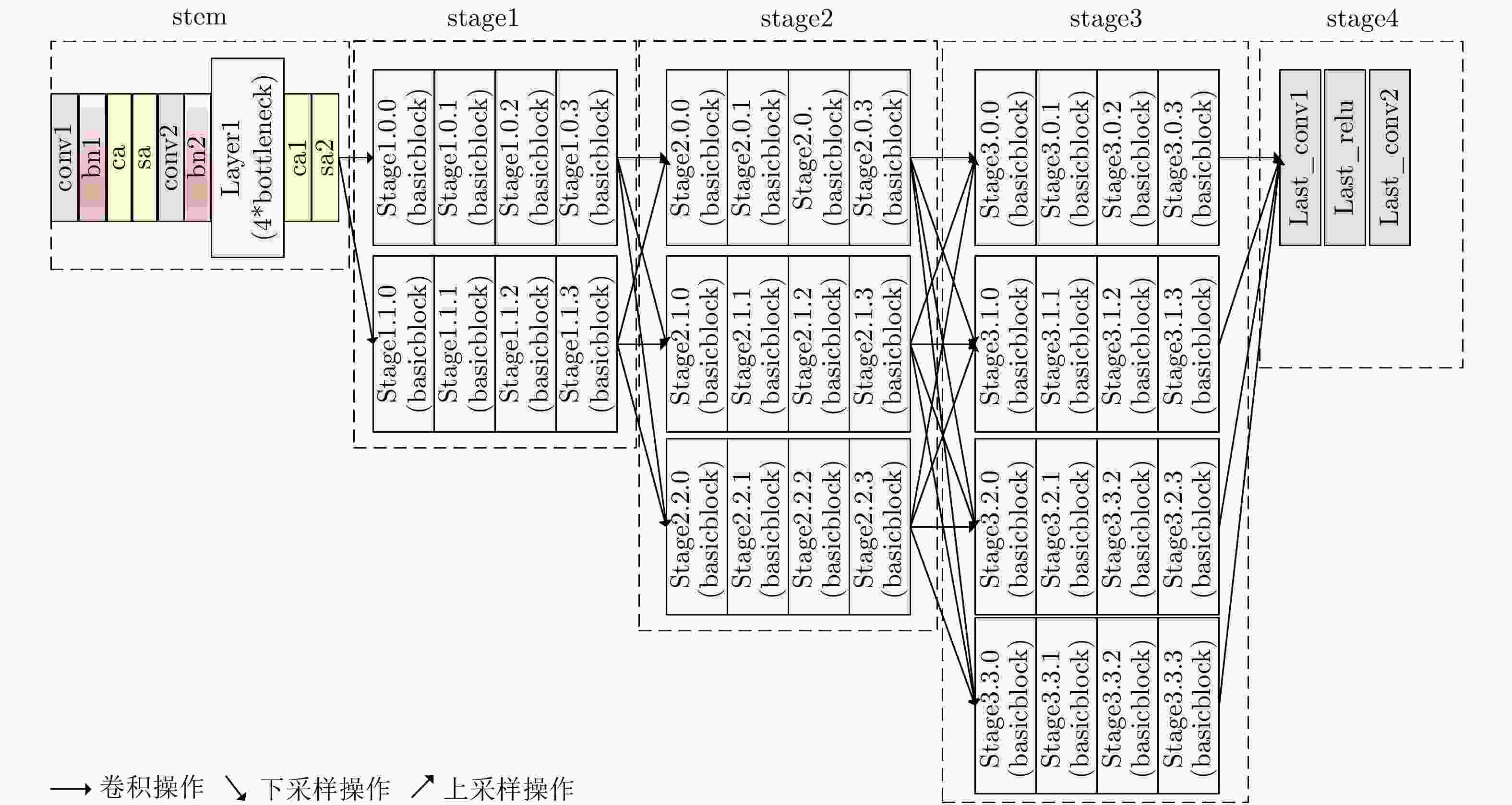

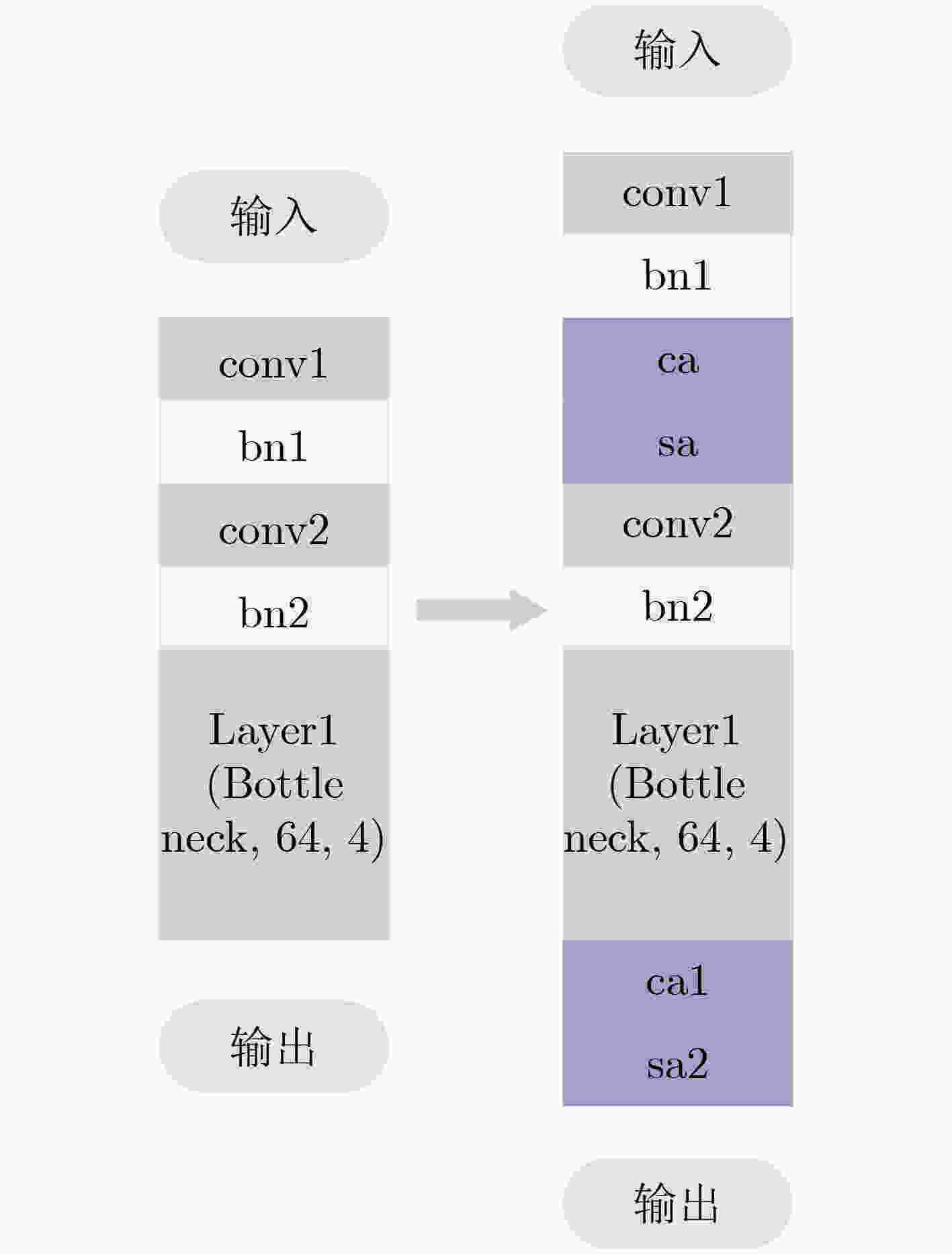

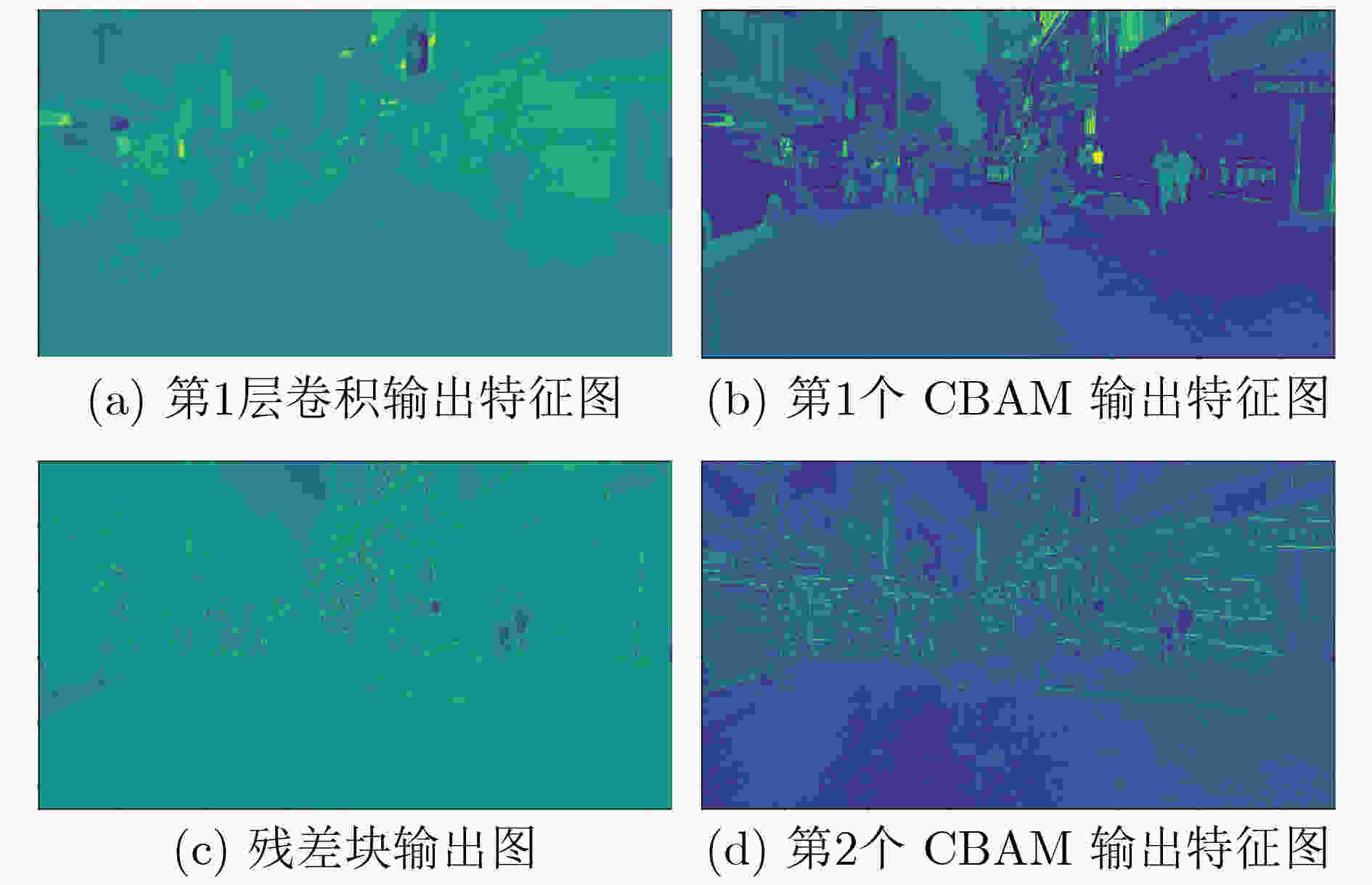

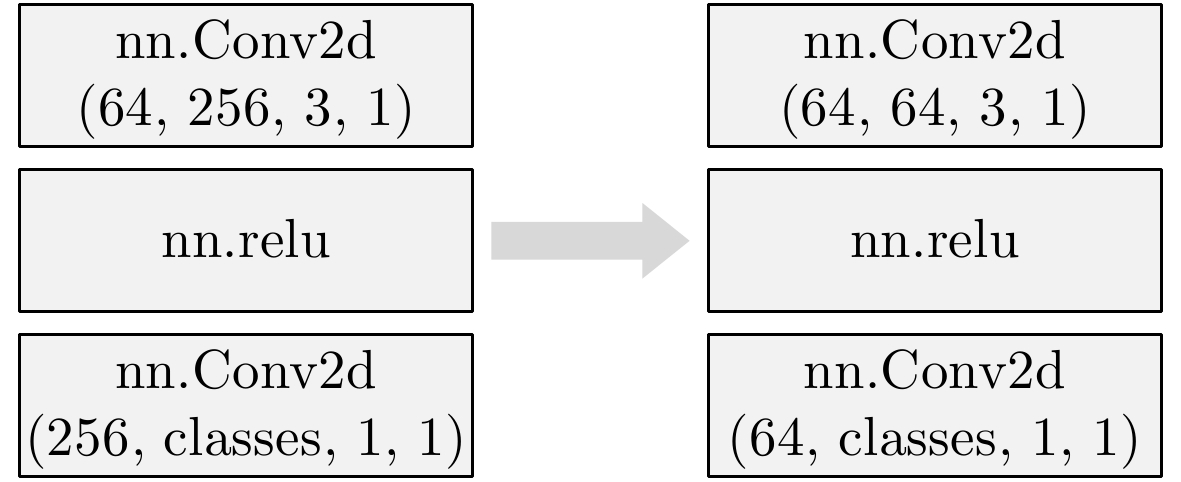

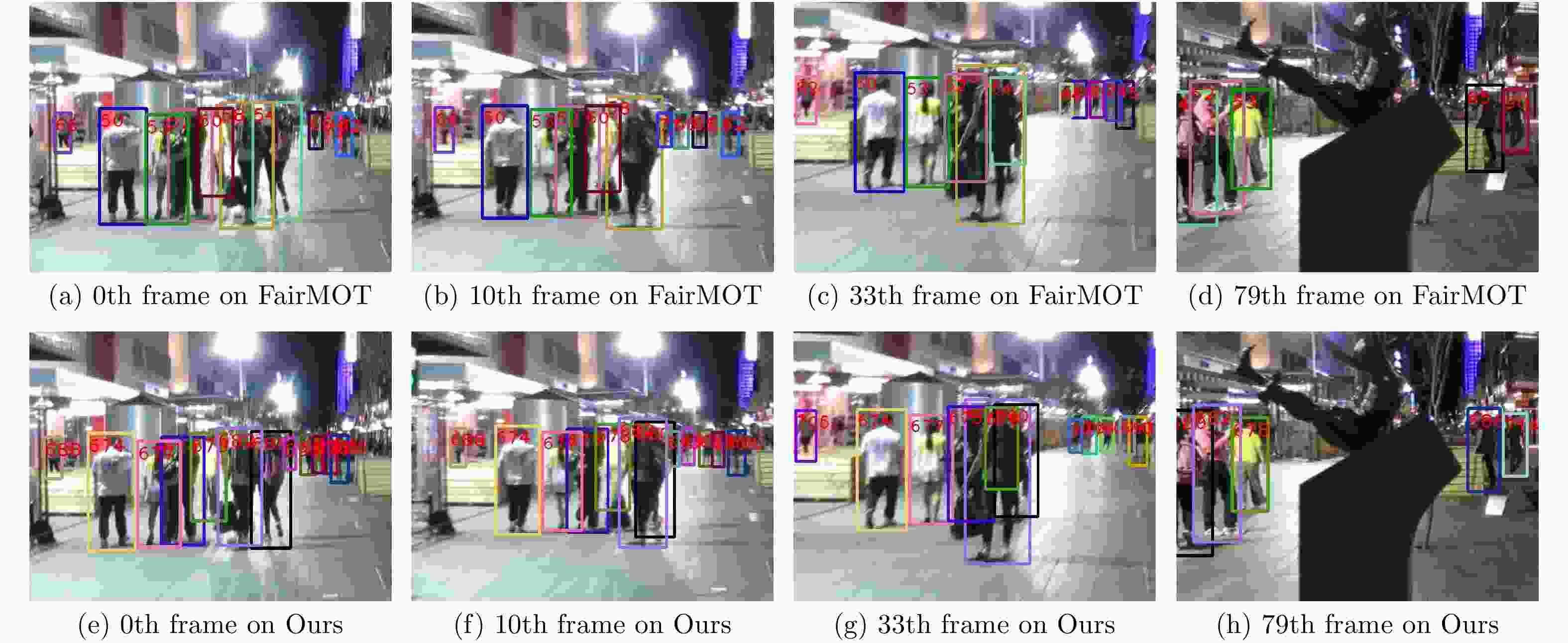

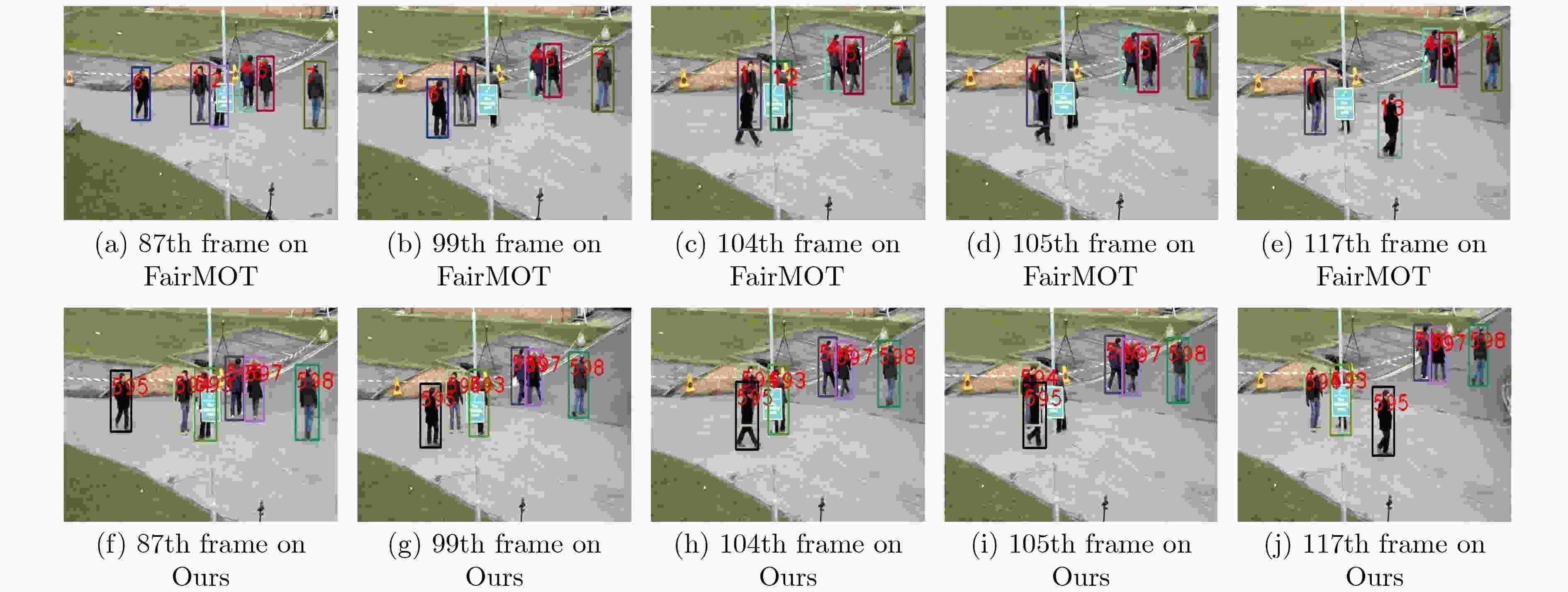

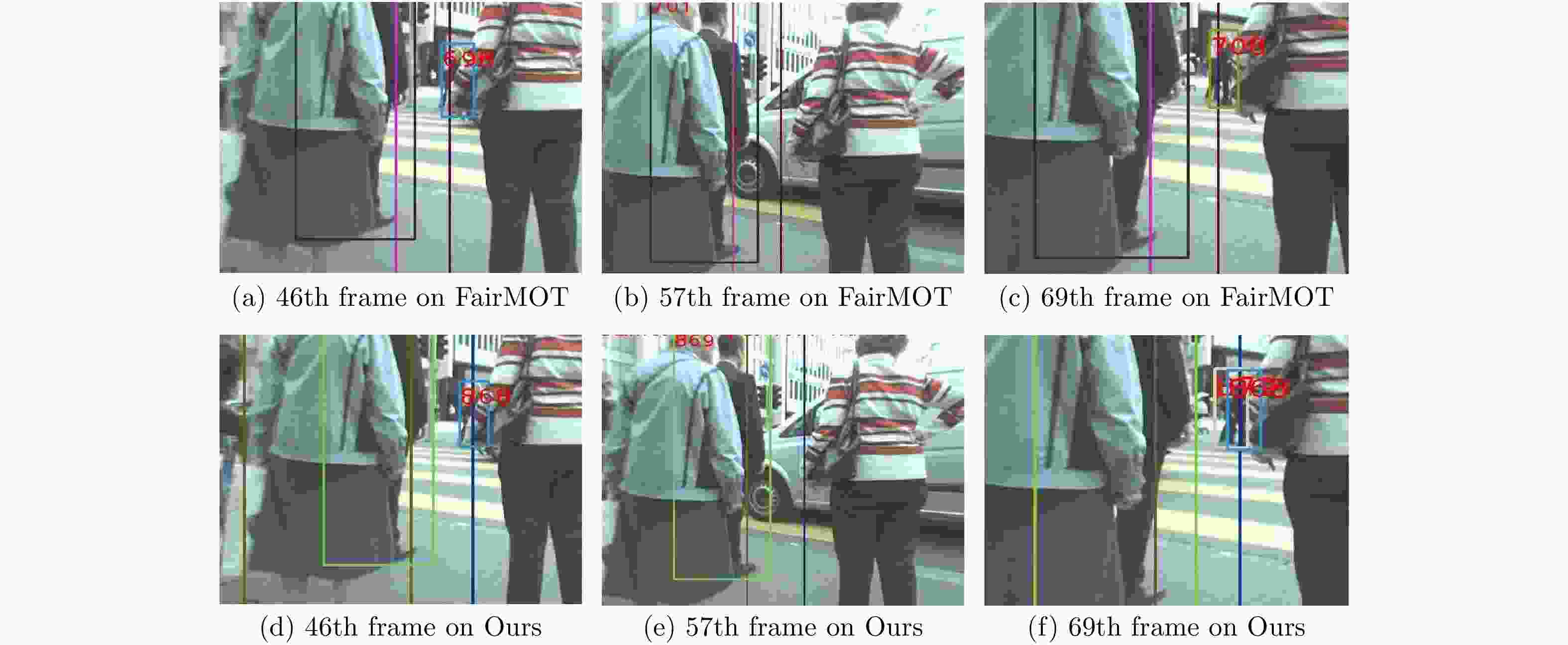

Abstract: To address target identity switches and trajectory interruptions caused by severe occlusion in multi-object tracking, a pedestrian tracking algorithm based on the Convolutional Block Attention Module (CBAM) and an anchor-free detection network is proposed. First, an attention mechanism is introduced into the stem stage of the high-resolution feature extraction network HrnetV2 to extract more expressive features and thereby strengthen the training of the re-identification branch. Second, to increase running speed, the detection and re-identification branches share feature weights and run in parallel, and the number of convolution channels in the head network is reduced to cut the parameter count and computation. Finally, the network is trained with suitable parameters and evaluated on multiple test sets. Experimental results show that, compared with FairMOT, the proposed algorithm improves accuracy on the 2DMOT15, MOT17, and MOT20 datasets by 1.1%, 1.1%, and 0.2%, respectively, and improves speed by 0.82, 0.88, and 0.41 fps, respectively. Compared with several other mainstream algorithms, it has the fewest target identity switches. The proposed algorithm is better suited to scenes with severe occlusion, and its real-time performance is also improved.
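The attention step named in the abstract can be illustrated with a minimal pure-Python sketch of the standard CBAM formulation: channel attention followed by spatial attention. It is not the authors' implementation. The function names, the nested-list tensor layout (C x H x W), and the bias-free layers are assumptions made for illustration; the layer shapes listed in Table 1 (fc1 of 4×64, fc2 of 64×4, and a 1×2×7×7 spatial kernel) are consistent with this structure at a reduction ratio of 16.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x, fc1, fc2):
    """x: C x H x W nested lists; fc1: (C//r) x C; fc2: C x (C//r)."""
    def mlp(vec):
        # Shared two-layer MLP with ReLU, no bias (assumption).
        hidden = [max(0.0, sum(w * v for w, v in zip(row, vec))) for row in fc1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in fc2]

    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]  # global avg pool
    mx = [max(map(max, ch)) for ch in x]                            # global max pool
    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(x, kernel):
    """kernel: 2 x k x k weights over the [mean, max] maps taken across channels."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    k = len(kernel[0])
    pad = k // 2
    mean_map = [[sum(x[ch][i][j] for ch in range(c)) / c for j in range(w)]
                for i in range(h)]
    max_map = [[max(x[ch][i][j] for ch in range(c)) for j in range(w)]
               for i in range(h)]
    maps = [mean_map, max_map]
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:  # zero padding
                            acc += kernel[m][di][dj] * maps[m][ii][jj]
            out[i][j] = sigmoid(acc)
    return out


def cbam(x, fc1, fc2, kernel):
    """Scale x by channel attention, then by spatial attention."""
    ca = channel_attention(x, fc1, fc2)
    x = [[[ca[ch] * v for v in row] for row in x[ch]] for ch in range(len(x))]
    sa = spatial_attention(x, kernel)
    return [[[sa[i][j] * x[ch][i][j] for j in range(len(x[0][0]))]
             for i in range(len(x[0]))] for ch in range(len(x))]
```

With all weights set to zero, both attention maps evaluate to sigmoid(0) = 0.5, so the output is the input scaled by 0.25, which gives a quick sanity check of the wiring. A practical implementation would use a tensor library rather than nested lists.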

-

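Table 1 Partial weight parameters of the proposed network

| Layer | Weights |
| --- | --- |
| conv1 | 64×3×3×3 |
| ca | ca.fc1(4×64×1×1), ca.fc2(64×4×1×1) |
| sa | 1×2×7×7 |
| conv2 | 64×64×3×3 |
| Layer1 | [(64×64×1×1), (64×64×3×3), (64×256×1×1)]; [(256×64×1×1), (64×64×3×3), (64×256×1×1)]×3 |
| ca1 | ca1.fc1(16×256×1×1), ca1.fc2(256×16×1×1) |
| sa1 | 1×2×7×7 |
| ··· | ··· |
| last layer | 64×270×3×3, bias=64 |
| hm | hm.0(64×64×3×3, bias=64), hm.2(1×64×1×1, bias=1) |
| wh | wh.0(64×64×3×3, bias=64), wh.2(2×64×1×1, bias=2) |
| id | id.0(64×64×3×3, bias=64), id.2(128×64×1×1, bias=128) |
| reg | reg.0(64×64×3×3, bias=64), reg.2(2×64×1×1, bias=2) |

Table 2 Comparison of detection performance under different CBAM insertion strategies (%)

| Backbone | IDF1 | IDP | IDR |
| --- | --- | --- | --- |
| HrnetV2-w18 | 74.6 | 81.1 | 69.1 |
| HrnetV2-w18(stem)+CBAM(a) | 75.3 | 88.6 | 64.0 |
| HrnetV2-w18(stem)+CBAM(b) | 73.8 | 77.1 | 70.8 |
| HrnetV2-w18(stem)+CBAM(c) | 76.6 | 78.8 | 74.4 |

Table 3 Comparison of computation and parameter counts of different networks

| Network | Total flops (GMac) | Total params (MB) | Head flops (GMac) | Head params (MB) |
| --- | --- | --- | --- | --- |
| HrnetV2-w18 | 70.44 | 10.20 | 25.884 | 0.625 |
| Proposed | 51.09 | 9.74 | 6.475 | 0.156 |

Table 4 Evaluation metrics and their explanations

| Metric | Explanation |
| --- | --- |
| FP↓ | False positives: samples wrongly taken as positive, i.e., false detections |
| FN↓ | False negatives: samples wrongly taken as negative, i.e., missed detections |
| IDS↓ | Number of target ID switches, i.e., how many times a target's identity changes |
| MOTA↑ | Tracking accuracy, computed from FP, FN, IDS, and related metrics |
| MOTP↑ | Localization precision: overlap between detection responses and ground-truth pedestrian boxes |
| FPS↑ | Tracking speed: frames processed per second, used to measure real-time performance |

Table 5 Test results of the proposed algorithm and FairMOT

| Dataset | Algorithm | MOTA↑ | MOTP↑ | IDS↓ | FN↓ | FP↓ | fps↑ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 2DMOT15 | FairMOT | 71.7 | 78.6 | 136 | 6100 | 1849 | 18.31 |
| 2DMOT15 | Proposed | 72.8 | 78.6 | 119 | 4619 | 3018 | 19.13 |
| MOT20 | FairMOT | 12.8 | 77.8 | 4422 | 1098261 | 62434 | 14.69 |
| MOT20 | Proposed | 13.0 | 77.2 | 4331 | 1105907 | 53288 | 15.10 |
| MOT17 | FairMOT | 75.1 | 81.1 | 2238 | 55092 | 26442 | 16.23 |
| MOT17 | Proposed | 76.2 | 84.5 | 879 | 69141 | 9996 | 17.11 |

Table 6 Comparison of test results between the proposed algorithm and several other models and algorithms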
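Table 4 says that MOTA is computed from FP, FN, and IDS but does not give the formula. The sketch below assumes the standard CLEAR MOT definition, MOTA = 1 − (FN + FP + IDS) / GT, where GT is the total number of ground-truth boxes over all frames. The function name and the counts in the usage lines are hypothetical and are not taken from Table 5, which does not report GT.

```python
def mota(fn, fp, ids, num_gt):
    """CLEAR MOT tracking accuracy from error counts summed over all frames.

    fn, fp, ids: total misses, false positives, and identity switches.
    num_gt: total number of ground-truth boxes (must be positive).
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + ids) / num_gt


# Hypothetical counts, for illustration only.
before = mota(fn=200, fp=100, ids=10, num_gt=1000)
after = mota(fn=200, fp=100, ids=4, num_gt=1000)  # fewer identity switches
```

Because the three error counts are summed in the numerator, a reduction in identity switches raises MOTA only slightly when FN and FP dominate, which is why IDS is usually reported as a separate column.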

-
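The effect of narrowing the head network can be checked by counting parameters from the layer shapes in Table 1. The sketch below assumes each head branch (hm, wh, id, reg) is a 3×3 convolution followed by a 1×1 convolution, both with bias, fed by 64 input channels, as the listed shapes suggest. The baseline head width of 256 channels is an assumption, since the source does not state it, and the helper names are hypothetical.

```python
def conv_params(out_ch, in_ch, k, bias=True):
    """Parameter count of a k x k convolution layer."""
    return out_ch * in_ch * k * k + (out_ch if bias else 0)


def head_params(head_ch, in_ch=64, outputs=None):
    """Total parameters of the four head branches at a given head width."""
    if outputs is None:
        # Output channels per branch, read from the *.2 layers in Table 1.
        outputs = {"hm": 1, "wh": 2, "id": 128, "reg": 2}
    total = 0
    for out_dim in outputs.values():
        total += conv_params(head_ch, in_ch, 3)    # e.g. hm.0
        total += conv_params(out_dim, head_ch, 1)  # e.g. hm.2
    return total


proposed = head_params(64)    # head width from Table 1
baseline = head_params(256)   # assumed baseline head width
print(proposed, baseline)     # 156357 625029
```

Under these assumptions the counts are about 0.156 million and 0.625 million, which match the "Head params" column of Table 3 if that column is read as millions of parameters.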

