Review of Affective Detection Based on Functional Magnetic Resonance Imaging


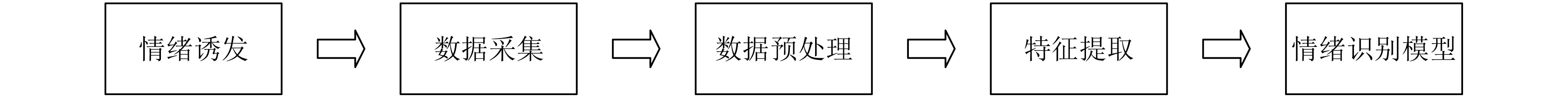

Abstract: Emotion is a subjective feeling in response to internal or external events that carry positive or negative meaning, and it plays an important role in daily life. Emotion decoding aims to automatically discriminate emotional states by decoding the physiological signals that accompany emotional responses. By estimating emotional changes, it can help address emotion-related problems faced by patients with mental disorders in clinical practice and support more natural and friendly human-machine interaction. Emotion decoding based on functional Magnetic Resonance Imaging (fMRI) is a commonly used and effective approach, both for deepening our understanding of emotion-related brain dynamics and for advancing affective intelligence. This paper reviews the research progress, application prospects, and main open problems of fMRI-based emotion decoding, covering experimental design, data acquisition, existing emotion-related fMRI datasets, data preprocessing, feature extraction, and emotional pattern learning and classification.


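Table 1 Strengths and weaknesses of different classification models

| Classification model | Strengths | Weaknesses |
| --- | --- | --- |
| SVM | Handles high-dimensional problems; good classification accuracy | Performance is sensitive to missing data |
| GNB | Stable classification efficiency; handles multi-class tasks | Performance depends on the model assumptions and on how the prior probabilities are computed; sensitive to the representation of the input data |
| K-means | Simple and easy to implement | Depends on the given parameters; random initialization; prone to local optima |
| GMM | Computes the probability that a sample belongs to each class distribution | Iterations are computationally expensive; may get stuck in a local optimum |
| LNN | Handles multi-class tasks | Performs poorly on complex data |
| DNN | High classification accuracy; can fully describe complex nonlinear relationships | Many parameters to learn; outputs are hard to interpret; long training time |
| CNN | Handles high-dimensional data; extracts features automatically | Large number of parameters; outputs are hard to interpret |

Table 2 Emotion models and recognition accuracy (%) in fMRI-based emotion recognition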

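| Reference | Experimental design | Emotion induction method | fMRI feature | Classification model | Emotion types | Accuracy |
| --- | --- | --- | --- | --- | --- | --- |
| [54] | Block design | Picture stimuli (external) | BOLD signal | SVM | Two-class: happiness and disgust; three-class: happiness, sadness, and disgust | Two-class: 65; three-class: 60 |
| [74] | Event-related design | Picture stimuli (external) | Functional connectivity | SVM | Two-class: fear and neutral | Two-class: up to 90 |
| [75] | Block design | Sound stimuli (external) | BOLD signal | SVM | Five-class: anger, happiness, sadness, surprise, and neutral | Range: 25.3–28.5 |
| [28] | Block design | Self-experience (internal) | BOLD signal | GNB | Anger, disgust, envy, fear, happiness, lust, pride, sadness, and shame | Mean: 84 |
| [80] | Block design | Video stimuli (external) | Functional connectivity | K-means | 34 discrete emotions; 17 dimensional emotions | Clustered into 27 clusters |
| [84] | Block design | Sound stimuli (external) | BOLD signal | GMM | Fear, happiness, and sadness | Mean: 40 |
| [55] | Block design | Video stimuli (external) | BOLD signal | LNN | Five-class: disgust, fear, happiness, sadness, and neutral | Five-class mean: 47 |
| [55] | – | Self-experience (internal) | BOLD signal | LNN | Six-class: anger, fear, happiness, sadness, surprise, and disgust | Six-class mean: 55 |
| [57] | Block design | Sound stimuli (external) | $\beta$ signal | DNN | Valence, arousal, and dominance | Valence: 48; arousal: 45; dominance: 47 |
| [96] | Block design | Music stimuli (external) | Functional connectivity | CNN | Positive; negative; positive and negative | Positive: 93.61; negative: 89.36; positive and negative: 94.68 |

Table 3 Emotion-related brain regions and their interpretation in fMRI-based emotion recognition studies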

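| Reference | Emotions | Emotion-related brain regions | Interpretation |
| --- | --- | --- | --- |
| [28] | Anger, disgust, envy, fear, happiness, lust, pride, sadness, and shame | The regions responsible for emotion classification lie mainly in the prefrontal and orbitofrontal lobes, and also extend across the frontal, parietal, temporal, and occipital lobes and subcortical regions. | Emotion induction recruits a broad network of neural structures. |
| [54] | Basic emotions (disgust, fear, happiness, sadness, anger, and surprise) | Basic emotions are concentrated mainly in the MPFC, ACC, IFG, PCC, precentral gyrus, postcentral gyrus, lingual gyrus, amygdala, and thalamus. | Basic emotions are encoded as discrete activation patterns in a broad network of brain regions rather than in emotion-specific regions or systems. |
| [55] | Disgust, fear, happiness, sadness, and neutral; anger, fear, happiness, sadness, surprise, and disgust | The regions responsible for emotion classification lie mainly in the MPFC, ACC, precuneus, and PCC. Other important regions include the precentral gyrus, postcentral gyrus, posterior insula, middle temporal gyrus, temporal pole, fusiform gyrus, lateral occipital cortex, amygdala, and thalamus. | Basic emotions are not represented by isolated brain regions; each basic emotion is instead associated with a specific activation pattern in a distributed network of cortical and subcortical regions. The most consistent differential patterns are concentrated in cortical midline structures and sensorimotor regions, but also extend to regions traditionally associated with emotion processing, such as the insula and amygdala. |
| [57] | Valence, arousal, and dominance | Emotion-related regions are located mainly in the ACC, orbitofrontal cortex (OFC), amygdala, and insula. | The ACC, OFC, amygdala, and insula contribute to representing the emotional processing of affective sound stimuli. |
| [74] | Fear and neutral | While viewing fearful face pictures, the functional connection between the left hippocampus and the right angular gyrus contributed most. The regions that distinguish fear from neutral lie mainly in the thalamus, superior occipital region, frontal operculum, dorsolateral prefrontal cortex, cerebellum, parietal lobe, posterior temporal region, and anterior temporal region. | During implicit fear perception, functional connectivity between the thalamus and the bilateral middle temporal gyrus and insula plays an important role. |
| [75] | Anger, happiness, sadness, surprise, and neutral | Classification accuracy for each type of emotional expression was above chance in the right posterior superior temporal gyrus extending to the anterior superior temporal sulcus, the cingulate gyrus, the anterior insula and adjacent frontal operculum, the inferior frontal gyrus, the middle frontal gyrus, the anterior and posterior parts of the posterior middle temporal gyrus, and the posterior cerebellum. | Classification of vocal emotional expressions relies on a broad network (the right fronto-temporal network) that includes the frontal operculum complex, the right inferior frontal gyrus, and left cerebro-cerebellar regions. |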
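The accuracies in Table 2 come from tasks with different numbers of classes, so they are easier to compare once each is set against its chance level. The sketch below is only an illustration, not a method from any cited study: it assumes balanced classes (so chance is 100/k percent for k classes), uses only the rows of Table 2 that state their class count explicitly, and the one-sided binomial test at the end uses a hypothetical trial count of 100 that does not come from the source.

```python
from math import comb


def chance_level(n_classes: int) -> float:
    """Accuracy (%) expected from random guessing, assuming balanced classes."""
    return 100.0 / n_classes


def p_above_chance(n_correct: int, n_trials: int, n_classes: int) -> float:
    """One-sided binomial p-value: P(X >= n_correct) under random guessing."""
    p = 1.0 / n_classes
    return sum(
        comb(n_trials, k) * p**k * (1.0 - p) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


# (number of classes, reported accuracy in %) as listed in Table 2.
reported = {
    "[54] two-class": (2, 65.0),
    "[54] three-class": (3, 60.0),
    "[75] five-class (upper end of range)": (5, 28.5),
    "[55] five-class": (5, 47.0),
    "[55] six-class": (6, 55.0),
}

for name, (n_classes, accuracy) in reported.items():
    chance = chance_level(n_classes)
    print(f"{name}: reported {accuracy:.1f}%, chance {chance:.1f}%, "
          f"ratio {accuracy / chance:.2f}")

# Hypothetical example: 47 correct out of 100 test trials in a five-class task.
# The trial count is an assumption for illustration, NOT a value from the source.
p_value = p_above_chance(n_correct=47, n_trials=100, n_classes=5)
print(f"p-value for 47/100 correct with 5 classes: {p_value:.2e}")
```

Read this way, a six-class accuracy of 55 is further above chance in relative terms than a two-class accuracy of 65. Whether a given result is statistically above chance depends on the actual number of test trials, which Table 2 does not report.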

[1] HOEMANN K, WU R, LOBUE V, et al. Developing an understanding of emotion categories: Lessons from objects[J]. Trends in Cognitive Sciences, 2020, 24(1): 39–51. doi: 10.1016/j.tics.2019.10.010
[2] DOLAN R J. Emotion, cognition, and behavior[J]. Science, 2002, 298(5596): 1191–1194. doi: 10.1126/science.1076358
[3] NIE Dan, WANG Xiaowei, DUAN Ruonan, et al. A survey on EEG based emotion recognition[J]. Chinese Journal of Biomedical Engineering, 2012, 31(4): 595–606. doi: 10.3969/j.issn.0258-8021.2012.04.018 (in Chinese)
[4] EBNEABBASI A, MAHDIPOUR M, NEJATI V, et al. Emotion processing and regulation in major depressive disorder: A 7T resting-state fMRI study[J]. Human Brain Mapping, 2021, 42(3): 797–810. doi: 10.1002/hbm.25263
[5] HE Zongling, LU Fengmei, SHENG Wei, et al. Functional dysconnectivity within the emotion-regulating system is associated with affective symptoms in major depressive disorder: A resting-state fMRI study[J]. Australian & New Zealand Journal of Psychiatry, 2019, 53(6): 528–539. doi: 10.1177/0004867419832106
[6] KRYZA-LACOMBE M, BROTMAN M A, REYNOLDS R C, et al. Neural mechanisms of face emotion processing in youths and adults with bipolar disorder[J]. Bipolar Disorders, 2019, 21(4): 309–320. doi: 10.1111/bdi.12768
[7] ZHANG Li, LI Wenfei, WANG Long, et al. Altered functional connectivity of right inferior frontal gyrus subregions in bipolar disorder: A resting state fMRI study[J]. Journal of Affective Disorders, 2020, 272: 58–65. doi: 10.1016/j.jad.2020.03.122
[8] HWANG H C, KIM S M, and HAN D H. Different facial recognition patterns in schizophrenia and bipolar disorder assessed using a computerized emotional perception test and fMRI[J]. Journal of Affective Disorders, 2021, 279: 83–88. doi: 10.1016/j.jad.2020.09.125
[9] REN Fuji and BAO Yanwei. A review on human-computer interaction and intelligent robots[J]. International Journal of Information Technology & Decision Making, 2020, 19(1): 5–47. doi: 10.1142/S0219622019300052
[10] VESISENAHO M, JUNTUNEN M, HÄKKINEN P, et al. Virtual reality in education: Focus on the role of emotions and physiological reactivity[J]. Journal of Virtual Worlds Research, 2019, 12(1): 1–15. doi: 10.4101/jvwr.v12i1.7329
[11] SAWANGJAI P, HOMPOONSUP S, LEELAARPORN P, et al. Consumer grade EEG measuring sensors as research tools: A review[J]. IEEE Sensors Journal, 2020, 20(8): 3996–4024. doi: 10.1109/JSEN.2019.2962874
[12] MURIAS K, SLONE E, TARIQ S, et al. Development of spatial orientation skills: An fMRI study[J]. Brain Imaging and Behavior, 2019, 13(6): 1590–1601. doi: 10.1007/s11682-018-0028-5
[13] RICHARDSON H and SAXE R. Development of predictive responses in theory of mind brain regions[J]. Developmental Science, 2020, 23(1): e12863. doi: 10.1111/desc.12863
[14] EICKHOFF S B, MILHAM M, and VANDERWAL T. Towards clinical applications of movie fMRI[J]. NeuroImage, 2020, 217: 116860. doi: 10.1016/j.neuroimage.2020.116860
[15] LI Qiongge, DEL FERRARO G, PASQUINI L, et al. Core language brain network for fMRI language task used in clinical applications[J]. Network Neuroscience, 2020, 4(1): 134–154. doi: 10.1162/netn_a_00112
[16] LAWRENCE S J D, FORMISANO E, MUCKLI L, et al. Laminar fMRI: Applications for cognitive neuroscience[J]. NeuroImage, 2019, 197: 785–791. doi: 10.1016/j.neuroimage.2017.07.004
[17] HERWIG U, LUTZ J, SCHERPIET S, et al. Training emotion regulation through real-time fMRI neurofeedback of amygdala activity[J]. NeuroImage, 2019, 184: 687–696. doi: 10.1016/j.neuroimage.2018.09.068
[18] WEBER-GOERICKE F and MUEHLHAN M. A quantitative meta-analysis of fMRI studies investigating emotional processing in excessive worriers: Application of activation likelihood estimation analysis[J]. Journal of Affective Disorders, 2019, 243: 348–359. doi: 10.1016/j.jad.2018.09.049
[19] PUTKINEN V, NAZARI-FARSANI S, SEPPÄLÄ K, et al. Decoding music-evoked emotions in the auditory and motor cortex[J]. Cerebral Cortex, 2021, 31(5): 2549–2560. doi: 10.1093/cercor/bhaa373
[20] JAMES W. II. —What is an emotion?[J]. Mind, 1884, os-IX(34): 188–205. doi: 10.1093/mind/os-IX.34.188
[21] CANNON W B. The James-Lange theory of emotions: A critical examination and an alternative theory[J]. The American Journal of Psychology, 1927, 39(1/4): 106–124. doi: 10.2307/1415404
[22] HEALEY J A. Wearable and automotive systems for affect recognition from physiology[D]. [Ph. D. dissertation], Massachusetts Institute of Technology, 2000.
[23] EKMAN P and CORDARO D. What is meant by calling emotions basic[J]. Emotion Review, 2011, 3(4): 364–370. doi: 10.1177/1754073911410740
[24] VAN DEN BROEK E L. Ubiquitous emotion-aware computing[J]. Personal and Ubiquitous Computing, 2013, 17(1): 53–67. doi: 10.1007/s00779-011-0479-9
[25] CABANAC M. What is emotion?[J]. Behavioural Processes, 2002, 60(2): 69–83. doi: 10.1016/s0376-6357(02)00078-5
[26] KRAGEL P A and LABAR K S. Decoding the nature of emotion in the brain[J]. Trends in Cognitive Sciences, 2016, 20(6): 444–455. doi: 10.1016/j.tics.2016.03.011
[27] JASTORFF J, HUANG Yun’an, GIESE M A, et al. Common neural correlates of emotion perception in humans[J]. Human Brain Mapping, 2015, 36(10): 4184–4201. doi: 10.1002/hbm.22910
[28] KASSAM K S, MARKEY A R, CHERKASSKY V L, et al. Identifying emotions on the basis of neural activation[J]. PLoS One, 2013, 8(6): e66032. doi: 10.1371/journal.pone.0066032
[29] RUBIN D C and TALARICO J M. A comparison of dimensional models of emotion: Evidence from emotions, prototypical events, autobiographical memories, and words[J]. Memory, 2009, 17(8): 802–808. doi: 10.1080/09658210903130764
[30] HANJALIC A and XU Liqun. Affective video content representation and modeling[J]. IEEE Transactions on Multimedia, 2005, 7(1): 143–154. doi: 10.1109/TMM.2004.840618
[31] SUN Kai, YU Junqing, HUANG Yue, et al. An improved valence-arousal emotion space for video affective content representation and recognition[C]. 2009 IEEE International Conference on Multimedia and Expo, New York, USA, 2009: 566–569.
[32] BUECHEL S and HAHN U. Emotion analysis as a regression problem-dimensional models and their implications on emotion representation and metrical evaluation[C]. 22nd European Conference on Artificial Intelligence, Hague, Netherlands, 2016: 1114–1122.
[33] MALHI G S, DAS P, OUTHRED T, et al. Cognitive and emotional impairments underpinning suicidal activity in patients with mood disorders: An fMRI study[J]. Acta Psychiatrica Scandinavica, 2019, 139(5): 454–463. doi: 10.1111/acps.13022
[34] SCHMIDT S N L, SOJER C A, HASS J, et al. fMRI adaptation reveals: The human mirror neuron system discriminates emotional valence[J]. Cortex, 2020, 128: 270–280. doi: 10.1016/j.cortex.2020.03.026
[35] WEST H V, BURGESS G C, DUST J, et al. Amygdala activation in cognitive task fMRI varies with individual differences in cognitive traits[J]. Cognitive, Affective, & Behavioral Neuroscience, 2021, 21(1): 254–264. doi: 10.3758/s13415-021-00863-3
[36] LI Jian, ZHONG Yuan, MA Zijuan, et al. Emotion reactivity-related brain network analysis in generalized anxiety disorder: A task fMRI study[J]. BMC Psychiatry, 2020, 20(1): 429. doi: 10.1186/s12888-020-02831-6
[37] IVES-DELIPERI V L, SOLMS M, and MEINTJES E M. The neural substrates of mindfulness: An fMRI investigation[J]. Social Neuroscience, 2011, 6(3): 231–242. doi: 10.1080/17470919.2010.513495
[38] PAULING L and CORYELL C D. The magnetic properties and structure of hemoglobin, oxyhemoglobin and carbonmonoxyhemoglobin[J]. Proceedings of the National Academy of Sciences of the United States of America, 1936, 22(4): 210–216. doi: 10.1073/pnas.22.4.210
[39] OGAWA S, LEE T M, KAY A R, et al. Brain magnetic resonance imaging with contrast dependent on blood oxygenation[J]. Proceedings of the National Academy of Sciences of the United States of America, 1990, 87(24): 9868–9872. doi: 10.1073/pnas.87.24.9868
[40] CHEN Shengyong and LI Xiaoli. Functional magnetic resonance imaging for imaging neural activity in the human brain: The annual progress[J]. Computational and Mathematical Methods in Medicine, 2012, 2012: 613465. doi: 10.1155/2012/613465
[41] FRISTON K J, JEZZARD P, and TURNER R. Analysis of functional MRI time-series[J]. Human Brain Mapping, 1994, 1(2): 153–171. doi: 10.1002/hbm.460010207
[42] VALENTE G, KAAS A L, FORMISANO E, et al. Optimizing fMRI experimental design for MVPA-based BCI control: Combining the strengths of block and event-related designs[J]. NeuroImage, 2019, 186: 369–381. doi: 10.1016/j.neuroimage.2018.10.080
[43] PHAN K L, TAYLOR S F, WELSH R C, et al. Activation of the medial prefrontal cortex and extended amygdala by individual ratings of emotional arousal: A fMRI study[J]. Biological Psychiatry, 2003, 53(3): 211–215. doi: 10.1016/s0006-3223(02)01485-3
[44] KREIFELTS B, ETHOFER T, GRODD W, et al. Audiovisual integration of emotional signals in voice and face: An event-related fMRI study[J]. NeuroImage, 2007, 37(4): 1445–1456. doi: 10.1016/j.neuroimage.2007.06.020
[45] LAURENT H, FINNEGAN M K, and HAIGLER K. Postnatal affective MRI dataset[EB/OL]. https://doi.org/10.18112/openneuro.ds003136.v1.0.0, 2020.
[46] BABAYAN A, BACZKOWSKI B, COZATL R, et al. MPI-Leipzig_Mind-brain-body[EB/OL]. https://doi.org/10.18112/openneuro.ds000221.v1.0.0, 2020.
[47] VAN ESSEN D C, SMITH S M, BARCH D M, et al. The WU-Minn human connectome project: An overview[J]. NeuroImage, 2013, 80: 62–79. doi: 10.1016/j.neuroimage.2013.05.041
[48] FINNEGAN M K, KANE S, HELLER W, et al. Mothers’ neural response to valenced infant interactions predicts postpartum depression and anxiety[J]. PLoS One, 2021, 16(4): e0250487. doi: 10.1371/journal.pone.0250487
[49] DAVID I and BARRIOS F. Localizing brain function based on full multivariate activity patterns: The case of visual perception and emotion decoding[J]. bioRxiv, To be published.
[50] PORCU M, OPERAMOLLA A, SCAPIN E, et al. Effects of white matter hyperintensities on brain connectivity and hippocampal volume in healthy subjects according to their localization[J]. Brain Connectivity, 2020, 10(8): 436–447. doi: 10.1089/brain.2020.0774
[51] MARKETT S, JAWINSKI P, KIRSCH P, et al. Specific and segregated changes to the functional connectome evoked by the processing of emotional faces: A task-based connectome study[J]. Scientific Reports, 2020, 10(1): 4822. doi: 10.1038/s41598-020-61522-0
[52] HUANG Pei, CARLIN J D, HENSON R N, et al. Improved motion correction of submillimetre 7T fMRI time series with boundary-based registration (BBR)[J]. NeuroImage, 2020, 210: 116542. doi: 10.1016/j.neuroimage.2020.116542
[53] LINDQUIST M A. The statistical analysis of fMRI data[J]. Statistical Science, 2008, 23(4): 439–464. doi: 10.1214/09-STS282
[54] SITARAM R, LEE S, RUIZ S, et al. Real-time support vector classification and feedback of multiple emotional brain states[J]. NeuroImage, 2011, 56(2): 753–765. doi: 10.1016/j.neuroimage.2010.08.007
[55] SAARIMÄKI H, GOTSOPOULOS A, JÄÄSKELÄINEN I P, et al. Discrete neural signatures of basic emotions[J]. Cerebral Cortex, 2016, 26(6): 2563–2573. doi: 10.1093/cercor/bhv086
[56] FRISTON K J, HOLMES A P, WORSLEY K J, et al. Statistical parametric maps in functional imaging: A general linear approach[J]. Human Brain Mapping, 1994, 2(4): 189–210. doi: 10.1002/hbm.460020402
[57] KIM H C, BANDETTINI P A, and LEE J H. Deep neural network predicts emotional responses of the human brain from functional magnetic resonance imaging[J]. NeuroImage, 2019, 186: 607–627. doi: 10.1016/j.neuroimage.2018.10.054
[58] ETHOFER T, VAN DE VILLE D, SCHERER K, et al. Decoding of emotional information in voice-sensitive cortices[J]. Current Biology, 2009, 19(12): 1028–1033. doi: 10.1016/j.cub.2009.04.054
[59] FRISTON K J, FRITH C D, LIDDLE P F, et al. Functional connectivity: The principal-component analysis of large (PET) data sets[J]. Journal of Cerebral Blood Flow & Metabolism, 1993, 13(1): 5–14. doi: 10.1038/jcbfm.1993.4
[60] ERYILMAZ H, VAN DE VILLE D, SCHWARTZ S, et al. Impact of transient emotions on functional connectivity during subsequent resting state: A wavelet correlation approach[J]. NeuroImage, 2011, 54(3): 2481–2491. doi: 10.1016/j.neuroimage.2010.10.021
[61] ROY A K, SHEHZAD Z, MARGULIES D S, et al. Functional connectivity of the human amygdala using resting state fMRI[J]. NeuroImage, 2009, 45(2): 614–626. doi: 10.1016/j.neuroimage.2008.11.030
[62] FRISTON K J, BUECHEL C, FINK G R, et al. Psychophysiological and modulatory interactions in neuroimaging[J]. NeuroImage, 1997, 6(3): 218–229. doi: 10.1006/nimg.1997.0291
[63] TOMARKEN A J and WALLER N G. Structural equation modeling: Strengths, limitations, and misconceptions[J]. Annual Review of Clinical Psychology, 2005, 1: 31–65. doi: 10.1146/annurev.clinpsy.1.102803.144239
[64] DESHPANDE G, LACONTE S, JAMES G A, et al. Multivariate granger causality analysis of fMRI data[J]. Human Brain Mapping, 2009, 30(4): 1361–1373. doi: 10.1002/hbm.20606
[65] FRISTON K J, HARRISON L, and PENNY W. Dynamic causal modelling[J]. NeuroImage, 2003, 19(4): 1273–1302. doi: 10.1016/S1053-8119(03)00202-7
[66] DESSEILLES M, SCHWARTZ S, DANG-VU T T, et al. Depression alters “top-down” visual attention: A dynamic causal modeling comparison between depressed and healthy subjects[J]. NeuroImage, 2011, 54(2): 1662–1668. doi: 10.1016/j.neuroimage.2010.08.061
[67] BRÁZDIL M, MIKL M, MAREČEK R, et al. Effective connectivity in target stimulus processing: A dynamic causal modeling study of visual oddball task[J]. NeuroImage, 2007, 35(2): 827–835. doi: 10.1016/j.neuroimage.2006.12.020
[68] DAVID O, MAESS B, ECKSTEIN K, et al. Dynamic causal modeling of subcortical connectivity of language[J]. Journal of Neuroscience, 2011, 31(7): 2712–2717. doi: 10.1523/JNEUROSCI.3433-10.2011
[69] SLADKY R, HÖFLICH A, KÜBLBÖCK M, et al. Disrupted effective connectivity between the amygdala and orbitofrontal cortex in social anxiety disorder during emotion discrimination revealed by dynamic causal modeling for fMRI[J]. Cerebral Cortex, 2015, 25(4): 895–903. doi: 10.1093/cercor/bht279
[70] TORRISI S J, LIEBERMAN M D, BOOKHEIMER S Y, et al. Advancing understanding of affect labeling with dynamic causal modeling[J]. NeuroImage, 2013, 82: 481–488. doi: 10.1016/j.neuroimage.2013.06.025
[71] KUNCHEVA L I and RODRÍGUEZ J J. Classifier ensembles for fMRI data analysis: An experiment[J]. Magnetic Resonance Imaging, 2010, 28(4): 583–593. doi: 10.1016/j.mri.2009.12.021
[72] CORTES C and VAPNIK V. Support-vector networks[J]. Machine Learning, 1995, 20(3): 273–297. doi: 10.1023/A:1022627411411
[73] KARAMIZADEH S, ABDULLAH S M, HALIMI M, et al. Advantage and drawback of support vector machine functionality[C]. 2014 International Conference on Computer, Communications, and Control Technology, Langkawi, Malaysia, 2014: 63–65.
[74] PANTAZATOS S P, TALATI A, PAVLIDIS P, et al. Decoding unattended fearful faces with whole-brain correlations: An approach to identify condition-dependent large-scale functional connectivity[J]. PLoS Computational Biology, 2012, 8(3): e1002441. doi: 10.1371/journal.pcbi.1002441
[75] KOTZ S A, KALBERLAH C, BAHLMANN J, et al. Predicting vocal emotion expressions from the human brain[J]. Human Brain Mapping, 2013, 34(8): 1971–1981. doi: 10.1002/hbm.22041
[76] AL-AIDAROOS K M, BAKAR A A, and OTHMAN Z. Naive bayes variants in classification learning[C]. 2010 International Conference on Information Retrieval & Knowledge Management, Shah Alam, Malaysia, 2010: 276–281.
[77] NIGAM K, MCCALLUM A K, THRUN S, et al. Text classification from labeled and unlabeled documents using EM[J]. Machine Learning, 2000, 39(2): 103–134. doi: 10.1023/A:1007692713085
[78] WAGER T D, KANG Jian, JOHNSON T D, et al. A bayesian model of category-specific emotional brain responses[J]. PLoS Computational Biology, 2015, 11(4): e1004066. doi: 10.1371/journal.pcbi.1004066
[79] THIRION B, VAROQUAUX G, DOHMATOB E, et al. Which fMRI clustering gives good brain parcellations?[J]. Frontiers in Neuroscience, 2014, 8: 167. doi: 10.3389/fnins.2014.00167
[80] HORIKAWA T, COWEN A S, KELTNER D, et al. The neural representation of visually evoked emotion is high-dimensional, categorical, and distributed across transmodal brain regions[J]. iScience, 2020, 23(5): 101060. doi: 10.1016/j.isci.2020.101060
[81] COWEN A S and KELTNER D. Self-report captures 27 distinct categories of emotion bridged by continuous gradients[J]. Proceedings of the National Academy of Sciences of the United States of America, 2017, 114(38): E7900–E7909. doi: 10.1073/pnas.1702247114
[82] REYNOLDS D A. Gaussian Mixture Models[M]. LI S Z, JAIN A K. Encyclopedia of Biometrics. Boston: Springer, 2009: 659–663.
[83] WILSON-MENDENHALL C D, BARRETT L F, and BARSALOU L W. Neural evidence that human emotions share core affective properties[J]. Psychological Science, 2013, 24(6): 947–956. doi: 10.1177/0956797612464242
[84] AZARI B, WESTLIN C, SATPUTE A B, et al. Comparing supervised and unsupervised approaches to emotion categorization in the human brain, body, and subjective experience[J]. Scientific Reports, 2020, 10(1): 20284. doi: 10.1038/s41598-020-77117-8
[85] OJA E. Principal components, minor components, and linear neural networks[J]. Neural Networks, 1992, 5(6): 927–935. doi: 10.1016/S0893-6080(05)80089-9
[86] KANG T G and KIM N S. DNN-based voice activity detection with multi-task learning[J]. IEICE Transactions on Information and Systems, 2016, E99.D(2): 550–553. doi: 10.1587/transinf.2015EDL8168
[87] NGUYEN A, YOSINSKI J, and CLUNE J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 427–436.
[88] YANG Zhengshi, ZHUANG Xiaowei, SREENIVASAN K, et al. A robust deep neural network for denoising task-based fMRI data: An application to working memory and episodic memory[J]. Medical Image Analysis, 2020, 60: 101622. doi: 10.1016/j.media.2019.101622
[89] JANG H, PLIS S M, CALHOUN V D, et al. Task-specific feature extraction and classification of fMRI volumes using a deep neural network initialized with a deep belief network: Evaluation using sensorimotor tasks[J]. NeuroImage, 2017, 145: 314–328. doi: 10.1016/j.neuroimage.2016.04.003
[90] ZHAO Yu, DONG Qinglin, ZHANG Shu, et al. Automatic recognition of fMRI-derived functional networks using 3-D convolutional neural networks[J]. IEEE Transactions on Biomedical Engineering, 2018, 65(9): 1975–1984. doi: 10.1109/TBME.2017.2715281
[91] KAYED M, ANTER A, and MOHAMED H. Classification of garments from fashion MNIST dataset using CNN LeNet-5 architecture[C]. 2020 International Conference on Innovative Trends in Communication and Computer Engineering (ITCE), Aswan, Egypt, 2020: 238–243.
[92] ABD ALMISREB A, JAMIL N, and DIN N M. Utilizing AlexNet deep transfer learning for ear recognition[C]. 2018 Fourth International Conference on Information Retrieval and Knowledge Management (CAMP), Kota Kinabalu, Malaysia, 2018: 1–5.
[93] SONG Zhenzhen, FU Longsheng, WU Jingzhu, et al. Kiwifruit detection in field images using faster R-CNN with VGG16[J]. IFAC-PapersOnLine, 2019, 52(30): 76–81. doi: 10.1016/j.ifacol.2019.12.500
[94] CHUNG Y L, CHUNG H Y, and TSAI W F. Hand gesture recognition via image processing techniques and deep CNN[J]. Journal of Intelligent & Fuzzy Systems, 2020, 39(3): 4405–4418. doi: 10.3233/JIFS-200385
[95] LEI Xusheng and SUI Zhehao. Intelligent fault detection of high voltage line based on the faster R-CNN[J]. Measurement, 2019, 138: 379–385. doi: 10.1016/j.measurement.2019.01.072
[96] GUI Renzhou, CHEN Tongjie, and NIE Han. The impact of emotional music on active ROI in patients with depression based on deep learning: A task-state fMRI study[J]. Computational Intelligence and Neuroscience, 2019, 2019: 5850830. doi: 10.1155/2019/5850830
[97] HARDOON D R, MOURAO-MIRANDA J, BRAMMER M, et al. Unsupervised analysis of fMRI data using kernel canonical correlation[J]. NeuroImage, 2007, 37(4): 1250–1259. doi: 10.1016/j.neuroimage.2007.06.017
[98] CHANEL G, PICHON S, CONTY L, et al. Classification of autistic individuals and controls using cross-task characterization of fMRI activity[J]. NeuroImage: Clinical, 2016, 10: 78–88. doi: 10.1016/j.nicl.2015.11.010
[99] SZYCIK G R, MOHAMMADI B, HAKE M, et al. Excessive users of violent video games do not show emotional desensitization: An fMRI study[J]. Brain Imaging and Behavior, 2017, 11(3): 736–743. doi: 10.1007/s11682-016-9549-y
[100] ZOTEV V, MAYELI A, MISAKI M, et al. Emotion self-regulation training in major depressive disorder using simultaneous real-time fMRI and EEG neurofeedback[J]. NeuroImage: Clinical, 2020, 27: 102331. doi: 10.1016/j.nicl.2020.102331
