Siamese Object Tracking Based on Key Feature Information Perception and Online Adaptive Masking


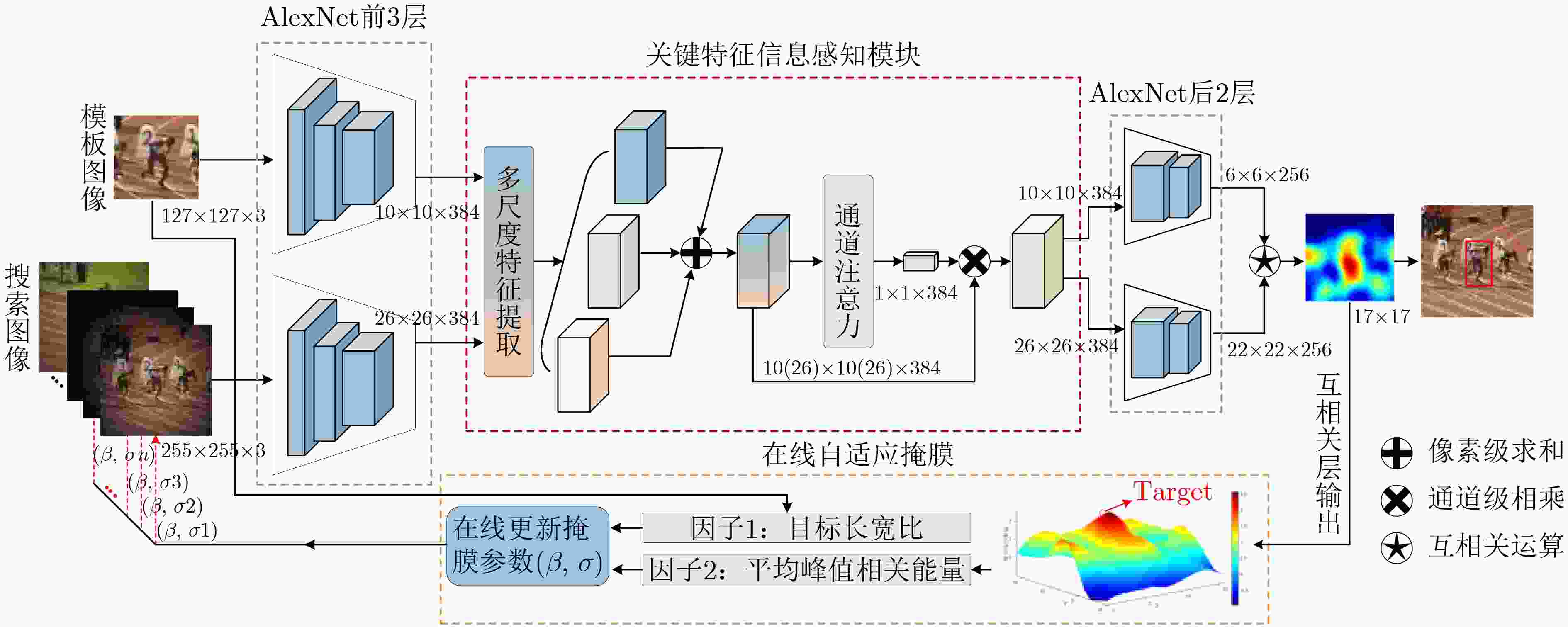

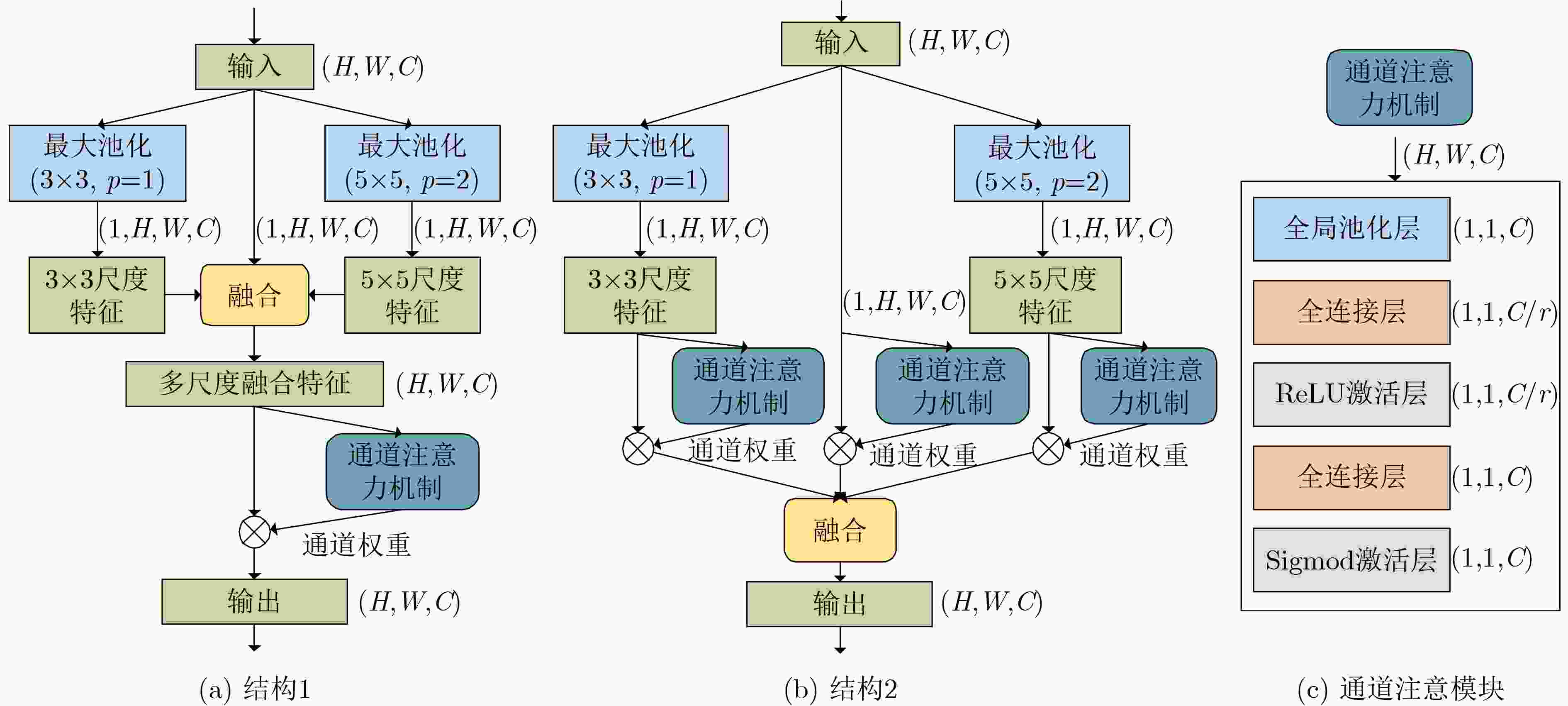

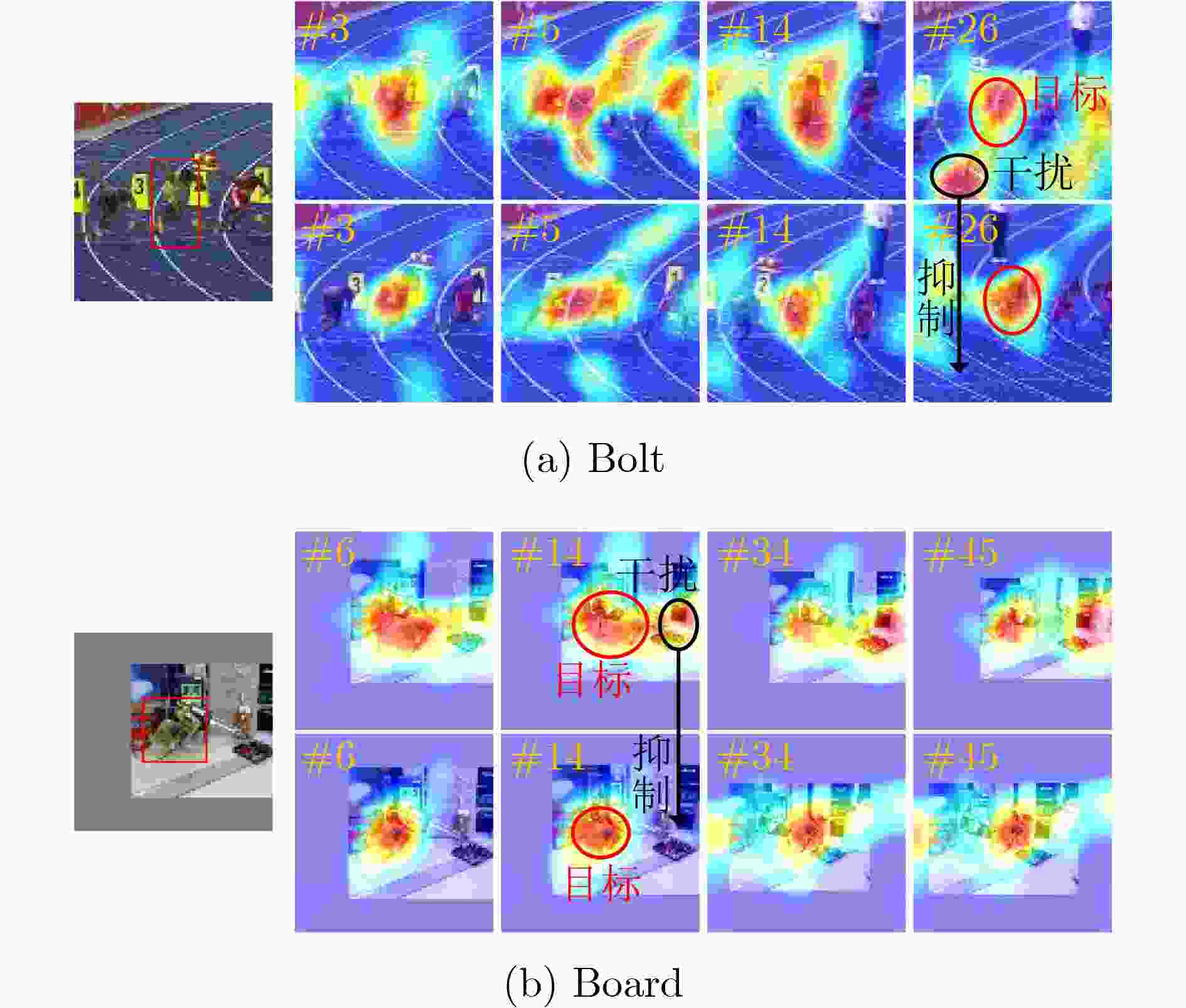

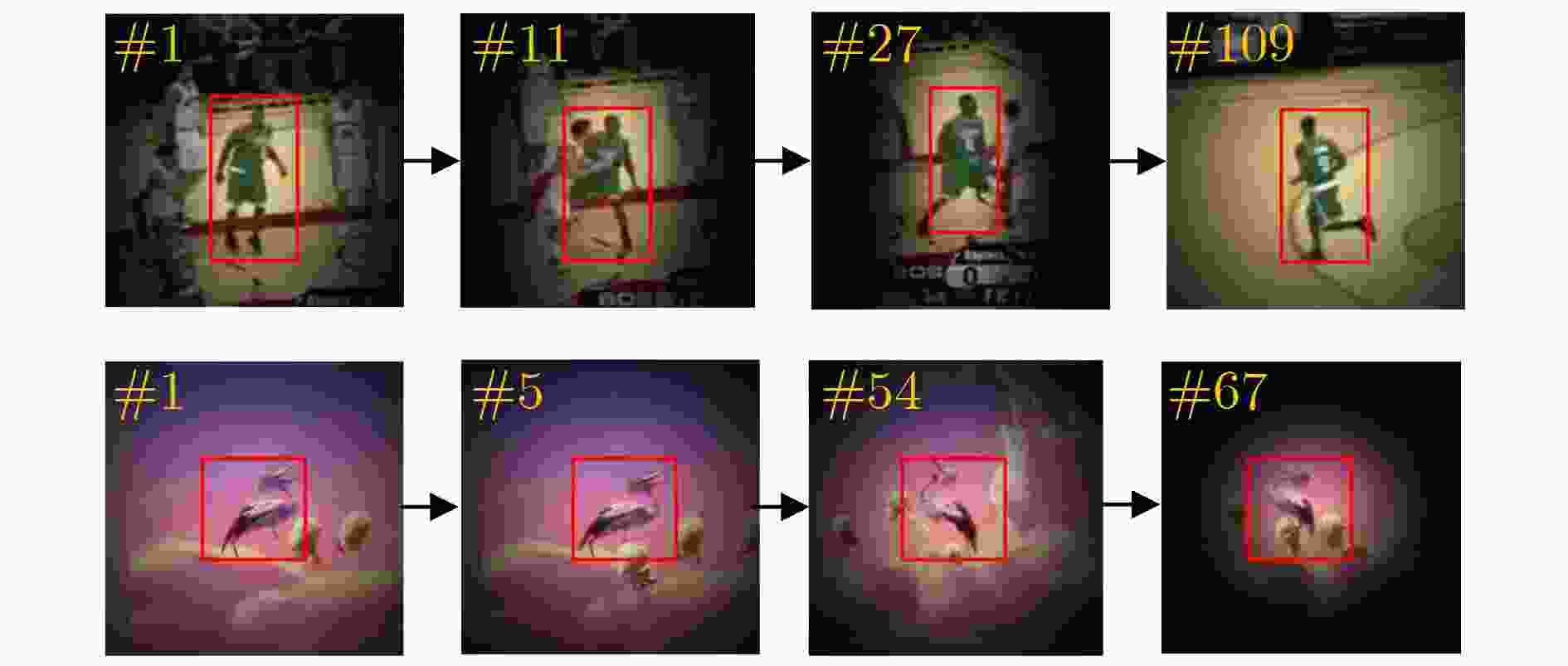

Abstract: Applying Siamese networks to visual object tracking has greatly improved tracker performance in recent years, allowing trackers to balance accuracy and real-time speed. However, the accuracy of Siamese network trackers remains largely limited. To address this problem, a key feature information perception module based on the channel attention mechanism is proposed to enhance the discriminative ability of the network model, making the network focus on changes in the convolutional features of the target. On this basis, an online adaptive masking strategy is proposed, which adaptively masks subsequent frames according to the output state of the cross-correlation layer learned online, so as to highlight the foreground target. Experiments on the OTB100 and GOT-10k datasets show that, without affecting real-time performance, the proposed tracker significantly improves accuracy over the baseline (on OTB100, AUC rises from 0.584 for the SiamFC baseline to 0.639 at 111 fps) and tracks robustly in complex scenes with occlusion, scale change and background clutter.


Key words:

- Object tracking

- Siamese network

- Key feature information perception

- Adaptive mask
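The abstract states only that the key feature information perception module is built on a channel attention mechanism, and Table 2 compares two structures for it without detailing them. For orientation, the NumPy sketch below implements a generic squeeze-and-excitation (SE) block [22], one common form of channel attention: global average pooling squeezes each channel of a C×H×W feature map to a scalar, a two-layer bottleneck ending in a sigmoid turns these scalars into per-channel weights, and the weights rescale the channels. It is not the authors' module; the function name `se_channel_attention`, the reduction ratio and the random weights are assumptions for illustration.

```python
import numpy as np

def se_channel_attention(feat, w1, b1, w2, b2):
    """Generic squeeze-and-excitation channel attention (illustrative, not the paper's module).

    feat: feature map of shape (C, H, W)
    w1, b1: bottleneck layer, shapes (C // r, C) and (C // r,)
    w2, b2: expansion layer, shapes (C, C // r) and (C,)
    Returns the reweighted feature map and the per-channel weights.
    """
    z = feat.mean(axis=(1, 2))                # squeeze: global average pooling -> (C,)
    h = np.maximum(0.0, w1 @ z + b1)          # excitation, layer 1: FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))  # excitation, layer 2: FC + sigmoid, values in (0, 1)
    return feat * s[:, None, None], s         # scale: reweight each channel

# Usage with hypothetical sizes: C = 256 channels, 6 x 6 spatial map, reduction ratio r = 16
rng = np.random.default_rng(0)
C, r = 256, 16
feat = rng.standard_normal((C, 6, 6))
w1, b1 = 0.1 * rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = 0.1 * rng.standard_normal((C, C // r)), np.zeros(C)
out, weights = se_channel_attention(feat, w1, b1, w2, b2)
print(out.shape, weights.shape)  # (256, 6, 6) (256,)
```

The bottleneck keeps the extra parameter count small (about 2C²/r weights), which is in line with the small parameter increases reported for the attention variants in Table 2.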

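Table 3 lists the online adaptive masking procedure, but Eqs. (5) and (8) and the exact definition of ε_div are not part of this excerpt. The NumPy sketch below therefore rests on three labelled assumptions: APCE follows the standard definition of Wang et al. [23]; ε_div is the ratio of the current frame's APCE to the mean APCE of the preceding frames; and G is an axis-aligned 2-D Gaussian centred on the search image with peak value 1. The function names and the values of τ1, τ2, μ and the initial σ used below are hypothetical.

```python
import numpy as np

def apce(response):
    """Average peak-to-correlation energy of a response map (definition of Wang et al. [23])."""
    f_max, f_min = response.max(), response.min()
    return (f_max - f_min) ** 2 / np.mean((response - f_min) ** 2)

def gaussian_mask(h, w, sigma_x, sigma_y):
    """ASSUMED form of G(x, y): axis-aligned 2-D Gaussian centred on the image, peak value 1."""
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    return np.exp(-((xs - cx) ** 2 / (2 * sigma_x ** 2) + (ys - cy) ** 2 / (2 * sigma_y ** 2)))

def update_sigma(sigma_x, sigma_y, apce_history, apce_current, tau1, tau2, mu):
    """Steps (4)-(10) of Table 3.

    ASSUMPTION: eps_div is the ratio of the current APCE to the mean APCE of the
    preceding frames; the paper's own definition is not given in this excerpt.
    """
    eps_div = apce_current / np.mean(apce_history)
    if eps_div >= tau1:  # response clearly sharper than recent frames: weaken the mask (wider Gaussian)
        return sigma_x * (1 + mu), sigma_y * (1 + mu)
    if eps_div <= tau2:  # response degraded: strengthen the mask (narrower Gaussian)
        return sigma_x * (1 - mu), sigma_y * (1 - mu)
    return sigma_x, sigma_y  # otherwise keep the masking level unchanged

def mask_search_image(f, sigma_x, sigma_y):
    """Step (11): f* = f . G, applied to every colour channel of an H x W x 3 image."""
    h, w = f.shape[:2]
    return f * gaussian_mask(h, w, sigma_x, sigma_y)[:, :, None]

# Usage with hypothetical parameters: tau1 = 1.1, tau2 = 0.9, mu = 0.05, initial sigmas of 64 px
sx, sy = update_sigma(64.0, 64.0, apce_history=[40.0, 42.0, 38.0], apce_current=30.0,
                      tau1=1.1, tau2=0.9, mu=0.05)
# eps_div = 30 / 40 = 0.75 <= tau2, so both sigmas shrink by 5% to 60.8
f_star = mask_search_image(np.ones((255, 255, 3)), sx, sy)
```

A sharp single-peak response gives a high APCE, while a flat or multi-peak response (for example under occlusion) gives a low one, so the ratio acts as a confidence signal for widening or narrowing the mask.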

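Table 1 AUC (%) of the trained models on OTB100

| SiamFC | Model1 | Model2 | Model3 | Model4 | Model5 |
| --- | --- | --- | --- | --- | --- |
| 58.4 | 58.7 | 60.9 | 60.1 | 57.4 | 60.3 |

Table 2 Comparison of two different key information perception structures

| Algorithm | Parameters (MB) | Computation (Byte) | Accuracy (%) | fps |
| --- | --- | --- | --- | --- |
| SiamFC | 3.30 | 0.69742 | 58.4 | 131 |
| SiamFC (structure 1) | 3.32 | 0.69748 | 62.4 | 114 |
| SiamFC (structure 2) | 3.36 | 0.69759 | 62.8 | 101 |
| SiamFC-DW | 2.45 | 0.80825 | 62.7 | 154 |
| SiamFC-DW (structure 1) | 2.49 | 0.80841 | 64.3 | 147 |
| SiamFC-DW (structure 2) | 2.55 | 0.80872 | 65.2 | 132 |

Table 3 Online adaptive masking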

Input: search image $f$ of a subsequent frame (255×255×3)

Output: masked image $f^*$

(1) Initialize $\sigma_{x_k}$ and $\sigma_{y_k}$ of the search images of the first $i$ frames via Eq. (5), where $k = 1, 2, \cdots, i$;

(2) Compute the average peak-to-correlation energy (APCE) of the first $i$ frames from their response maps;

(3) Obtain $\mathrm{APCE}_1, \mathrm{APCE}_2, \cdots, \mathrm{APCE}_i$ from Eq. (8);

(4) Compute $\varepsilon_{\mathrm{div}}$ from the mean APCE of the $i$ frames preceding the current frame and $\mathrm{APCE}_{i+1}$;

(5) if $\varepsilon_{\mathrm{div}} \ge \tau_1$

(6) /* weaken the Gaussian masking */ $\sigma_{x_{i+1}} = \sigma_{x_i}(1 + \mu)$, $\sigma_{y_{i+1}} = \sigma_{y_i}(1 + \mu)$;

(7) else if $\varepsilon_{\mathrm{div}} \le \tau_2$

(8) /* strengthen the Gaussian masking */ $\sigma_{x_{i+1}} = \sigma_{x_i}(1 - \mu)$, $\sigma_{y_{i+1}} = \sigma_{y_i}(1 - \mu)$;

(9) else

(10) /* keep the Gaussian masking unchanged */ $\sigma_{x_{i+1}} = \sigma_{x_i}$, $\sigma_{y_{i+1}} = \sigma_{y_i}$;

(11) Compute $f_{i+1}^* = f_{i+1} \cdot G(x_{i+1}, y_{i+1})$ to obtain the Gaussian-masked search image of frame $i+1$;

(12) Repeat steps (1) to (11) to obtain the Gaussian-masked search image $f^*$ of every subsequent frame.

Table 4 Quantitative AUC comparison of 10 algorithms across scenarios on the OTB dataset

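| Scenario | Videos | KCF | Staple | SRDCF | DeepSRDCF | CFNet | SINT | SiamFC-DW | SiamRPN | SiamFC | Ours |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| BC | 31 | 0.476 | 0.560 | 0.583 | **0.627** | 0.561 | 0.584 | 0.574 | 0.591 | 0.527 | *0.611* |
| IV | 37 | 0.510 | 0.592 | 0.613 | 0.621 | 0.541 | **0.649** | 0.632 | **0.649** | 0.572 | *0.643* |
| SV | 63 | 0.433 | 0.521 | 0.561 | 0.605 | 0.546 | 0.610 | 0.613 | **0.615** | 0.556 | *0.614* |
| OCC | 48 | 0.460 | 0.543 | 0.559 | *0.601* | 0.527 | 0.595 | *0.601* | 0.585 | 0.549 | **0.611** |
| DEF | 43 | 0.389 | 0.551 | 0.544 | 0.566 | 0.526 | 0.572 | 0.560 | **0.617** | 0.512 | *0.597* |
| MB | 29 | 0.427 | 0.541 | 0.594 | 0.625 | 0.540 | 0.634 | **0.654** | 0.622 | 0.554 | *0.642* |
| FM | 39 | 0.417 | 0.540 | 0.597 | *0.628* | 0.554 | 0.616 | **0.630** | 0.599 | 0.571 | 0.621 |
| IPR | 51 | 0.460 | 0.548 | 0.544 | 0.589 | 0.567 | 0.616 | 0.606 | *0.627* | 0.559 | **0.628** |
| OPR | 63 | 0.435 | 0.533 | 0.550 | 0.607 | 0.553 | 0.621 | 0.612 | *0.625* | 0.561 | **0.628** |
| OV | 14 | 0.352 | 0.475 | 0.460 | 0.553 | 0.454 | 0.572 | **0.590** | 0.542 | 0.475 | *0.587* |
| LR | 9 | 0.348 | 0.394 | 0.514 | 0.561 | 0.614 | 0.622 | 0.596 | **0.639** | 0.618 | *0.636* |
| OTB100 | 100 | 0.471 | 0.578 | 0.598 | *0.635* | 0.587 | 0.625 | 0.627 | 0.629 | 0.584 | **0.639** |

Note: Bold marks the best result in each row and italics the second best. Scenarios: BC, background clutter; IV, illumination variation; SV, scale variation; OCC, occlusion; DEF, deformation; MB, motion blur; FM, fast motion; IPR, in-plane rotation; OPR, out-of-plane rotation; OV, out-of-view; LR, low resolution; OTB100, all sequences.

Table 5 Performance comparison of 10 algorithms on the GOT-10k dataset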

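| Algorithm | AO | SR0.5 | SR0.75 |
| --- | --- | --- | --- |
| KCF | 0.203 | 0.177 | 0.076 |
| Staple | 0.246 | 0.239 | 0.089 |
| SRDCF | 0.236 | 0.227 | 0.094 |
| DeepSRDCF | 0.286 | 0.275 | 0.096 |
| CFNet | 0.293 | 0.265 | 0.087 |
| SINT | 0.347 | 0.375 | *0.124* |
| SiamFC-DW | *0.384* | 0.401 | 0.118 |
| SiamRPN | 0.367 | *0.424* | 0.102 |
| SiamFC | 0.326 | 0.353 | 0.098 |
| Ours | **0.411** | **0.492** | **0.175** |

Note: Bold marks the best result in each column and italics the second best.

Table 6 Ablation results of the proposed algorithm on OTB100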

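| Algorithm | Innovation 1 (key feature information perception) | Innovation 2 (online adaptive masking) | AUC | Precision | fps |
| --- | --- | --- | --- | --- | --- |
| SiamFC | ✗ | ✗ | 0.584 | 0.772 | 131 |
| SiamFCv1 | ✓ | ✗ | 0.624 | 0.831 | 114 |
| SiamFCv2 | ✗ | ✓ | 0.606 | 0.818 | 127 |
| Ours | ✓ | ✓ | 0.639 | 0.861 | 111 |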
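Tables 1, 4 and 6 report OTB results as the area under the success plot (AUC), and Table 6 also reports precision. The sketch below computes both for one sequence under the commonly used OTB protocol [20], assuming boxes in (x, y, w, h) format, a frame counted as successful when its IoU exceeds the threshold, 21 overlap thresholds 0, 0.05, ..., 1, and a 20-pixel centre-error threshold for precision. The function names are hypothetical; reported numbers should come from the official benchmark toolkit.

```python
import numpy as np

OVERLAP_THRESHOLDS = np.linspace(0.0, 1.0, 21)  # 0, 0.05, ..., 1

def iou(a, b):
    """Per-frame IoU of boxes given as (N, 4) arrays in (x, y, w, h) format."""
    x1 = np.maximum(a[:, 0], b[:, 0])
    y1 = np.maximum(a[:, 1], b[:, 1])
    x2 = np.minimum(a[:, 0] + a[:, 2], b[:, 0] + b[:, 2])
    y2 = np.minimum(a[:, 1] + a[:, 3], b[:, 1] + b[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = a[:, 2] * a[:, 3] + b[:, 2] * b[:, 3] - inter
    return inter / union

def success_auc(pred, gt, thresholds=OVERLAP_THRESHOLDS):
    """Area under the success plot = mean success rate over the overlap thresholds."""
    ov = iou(pred, gt)
    return float(np.mean([np.mean(ov > t) for t in thresholds]))

def precision(pred, gt, max_err=20.0):
    """Fraction of frames whose centre location error is at most max_err pixels."""
    centre_pred = pred[:, :2] + pred[:, 2:] / 2.0
    centre_gt = gt[:, :2] + gt[:, 2:] / 2.0
    err = np.linalg.norm(centre_pred - centre_gt, axis=1)
    return float(np.mean(err <= max_err))

# Usage: four frames, the tracker loses the target in the last one
gt = np.array([[10.0, 10.0, 40.0, 40.0]] * 4)
pred = gt.copy()
pred[3] = [200.0, 200.0, 40.0, 40.0]
print(round(success_auc(pred, gt), 4), precision(pred, gt))  # 0.7143 0.75
```

Because the threshold 1.0 is included and the comparison is strict, even perfect tracking scores 20/21 under this convention.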

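Table 5 uses the GOT-10k metrics [21]: average overlap (AO), the mean IoU between predicted and ground-truth boxes, and the success rates SR0.5 and SR0.75, the fraction of frames whose IoU exceeds 0.5 and 0.75. A minimal sketch for a list of per-frame IoU values follows; how the official toolkit aggregates across sequences is not reproduced, the strict comparison is an assumption, and the function name is hypothetical.

```python
import numpy as np

def ao_and_sr(overlaps, thresholds=(0.5, 0.75)):
    """Average overlap (AO) and success rates SR_t from per-frame IoU values.

    A frame counts as a success for SR_t when its IoU exceeds t (strict '>' assumed).
    """
    ov = np.asarray(overlaps, dtype=float)
    ao = float(ov.mean())
    sr = {t: float(np.mean(ov > t)) for t in thresholds}
    return ao, sr

# Usage with made-up IoU values for four frames
ao, sr = ao_and_sr([0.9, 0.8, 0.6, 0.1])
print(round(ao, 3), sr)  # 0.6 {0.5: 0.75, 0.75: 0.5}
```

SR0.75 rewards tight boxes far more than SR0.5, which is why the gap between trackers in Table 5 is proportionally largest in that column.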
[1] TAN Jianhao, YIN Wang, LIU Liming, et al. DenseNet-siamese network with global context feature module for object tracking[J]. Journal of Electronics & Information Technology, 2021, 43(1): 179–186. doi: 10.11999/JEIT190788

[2] KRISTAN M, LEONARDIS A, MATAS J, et al. The sixth visual object tracking VOT2018 challenge results[C]. 2018 European Conference on Computer Vision, Munich, Germany, 2019: 3–53.

[3] LI Xi, CHA Yufei, ZHANG Tianzhu, et al. Survey of visual tracking algorithms based on deep learning[J]. Journal of Image and Graphics, 2019, 24(12): 2057–2080. doi: 10.11834/jig.190372

[4] HENRIQUES J F, CASEIRO R, MARTINS P, et al. High-speed tracking with kernelized correlation filters[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(3): 583–596. doi: 10.1109/TPAMI.2014.2345390

[5] BERTINETTO L, VALMADRE J, GOLODETZ S, et al. Staple: Complementary learners for real-time tracking[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1401–1409.

[6] DANELLJAN M, HÄGER G, KHAN F S, et al. Learning spatially regularized correlation filters for visual tracking[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4310–4318.

[7] DANELLJAN M, HÄGER G, KHAN F S, et al. Convolutional features for correlation filter based visual tracking[C]. 2015 IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 2015: 621–629.

[8] CHOPRA S, HADSELL R, and LECUN Y. Learning a similarity metric discriminatively, with application to face verification[C]. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, USA, 2005: 539–546.

[9] TAO Ran, GAVVES E, and SMEULDERS A W M. Siamese instance search for tracking[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1420–1429.

[10] BERTINETTO L, VALMADRE J, HENRIQUES J F, et al. Fully-convolutional Siamese networks for object tracking[C]. 2016 European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 850–865.

[11] VALMADRE J, BERTINETTO L, HENRIQUES J F, et al. End-to-end representation learning for correlation filter based tracking[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 5000–5008.

[12] LI Bo, YAN Junjie, WU Wei, et al. High performance visual tracking with Siamese region proposal network[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 8971–8980.

[13] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137–1149.

[14] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Spatial pyramid pooling in deep convolutional networks for visual recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1904–1916. doi: 10.1109/TPAMI.2015.2389824

[15] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[16] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1–9.

[17] LI Bo, WU Wei, WANG Qiang, et al. SiamRPN++: Evolution of Siamese visual tracking with very deep networks[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 4277–4286.

[18] ZHANG Zhipeng and PENG Houwen. Deeper and wider Siamese networks for real-time visual tracking[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 4586–4595.

[19] DANELLJAN M, BHAT G, KHAN F S, et al. ATOM: Accurate tracking by overlap maximization[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 4655–4664.

[20] WU Yi, LIM J, and YANG M H. Object tracking benchmark[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1834–1848. doi: 10.1109/TPAMI.2014.2388226

[21] HUANG Lianghua, ZHAO Xin, and HUANG Kaiqi. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(5): 1562–1577. doi: 10.1109/TPAMI.2019.2957464

[22] HU Jie, SHEN Li, ALBANIE S, et al. Squeeze-and-excitation networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 42(8): 2011–2023.

[23] WANG Mengmeng, LIU Yong, and HUANG Zeyi. Large margin object tracking with circulant feature maps[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4800–4808.
