An Interactive Graph Attention Networks Model for Aspect-level Sentiment Analysis


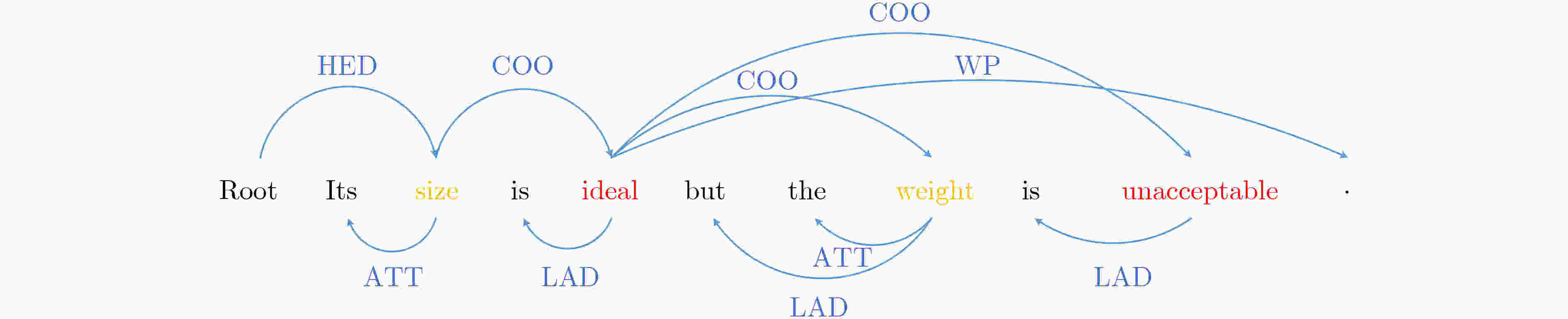

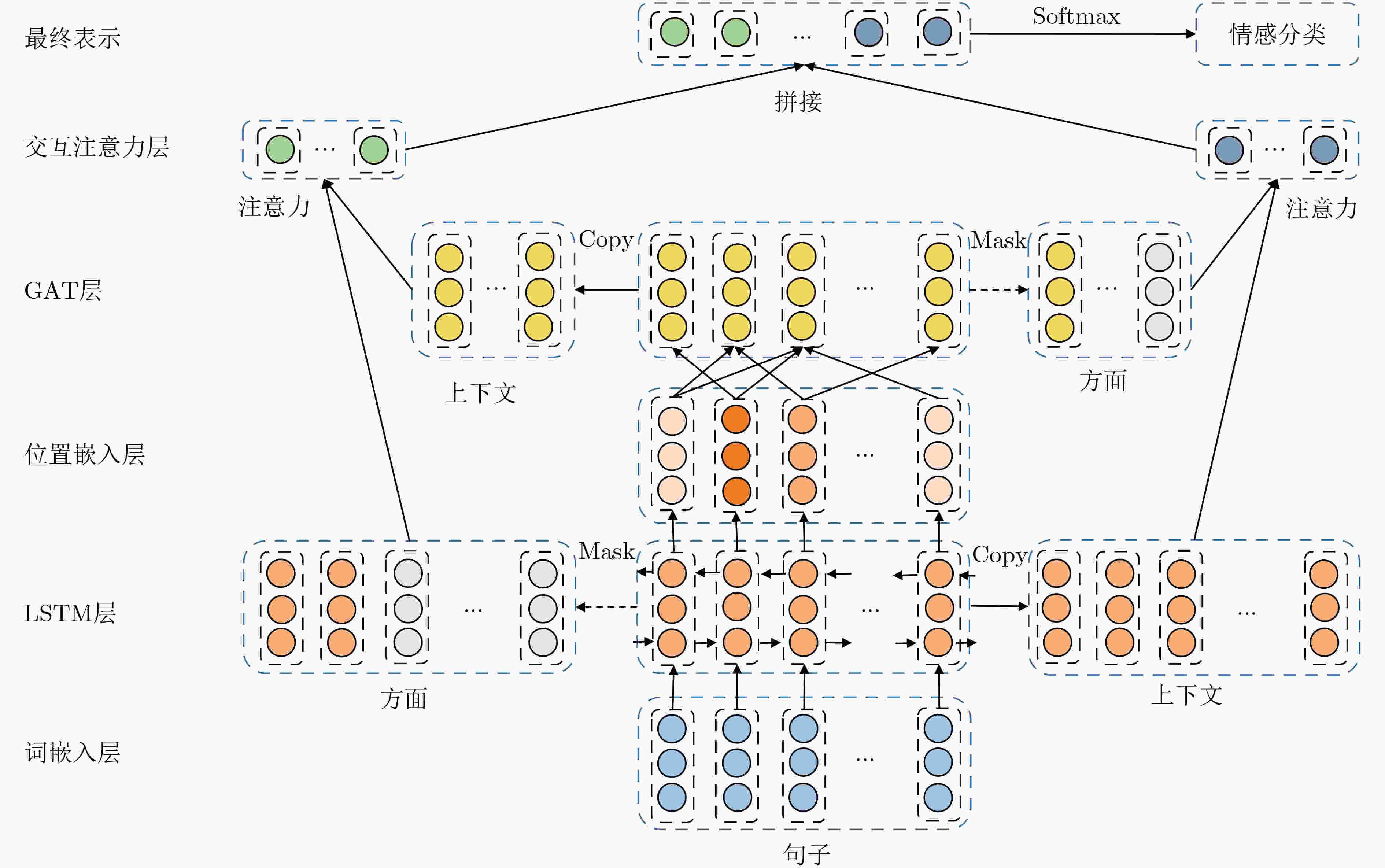

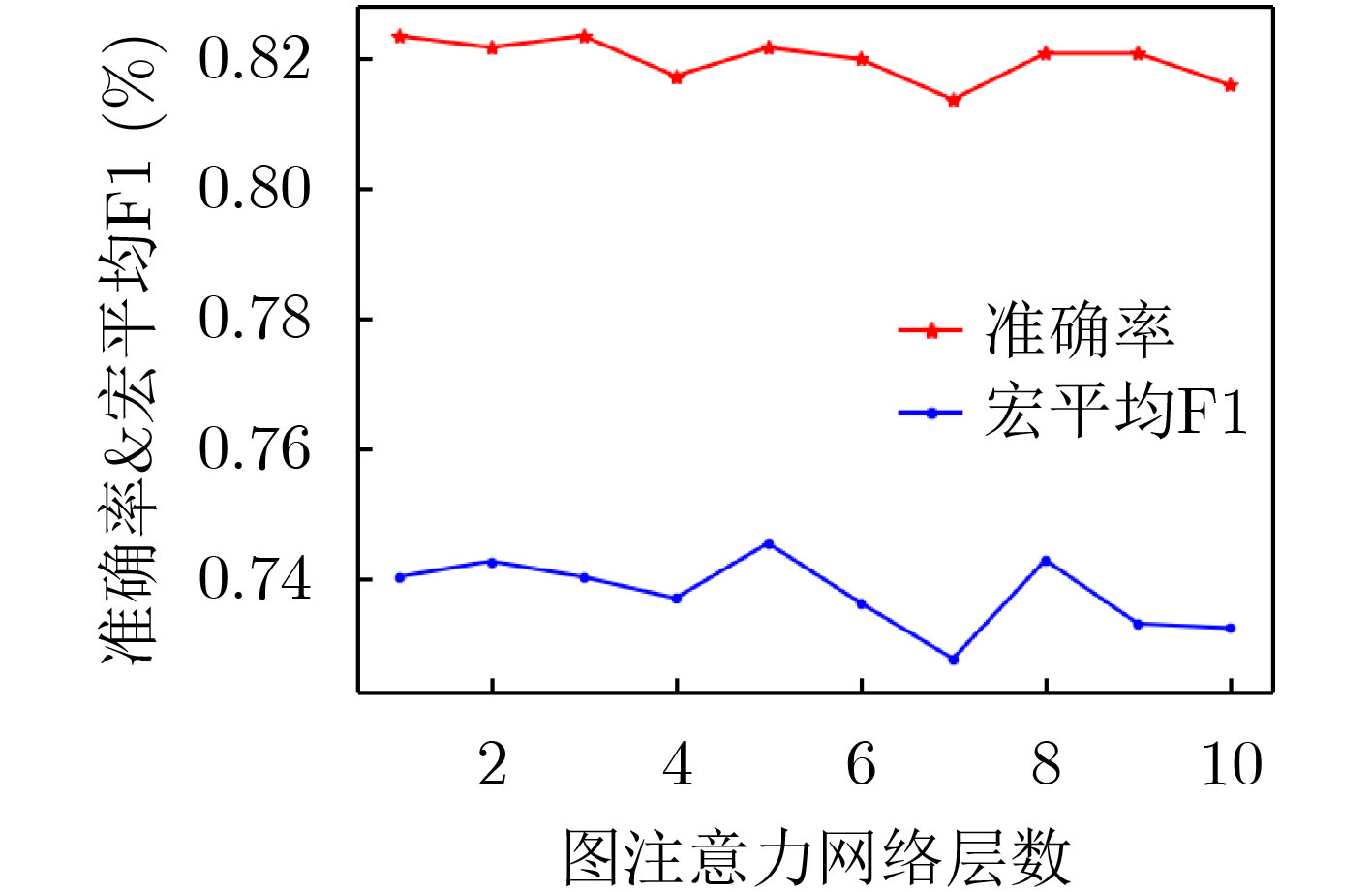

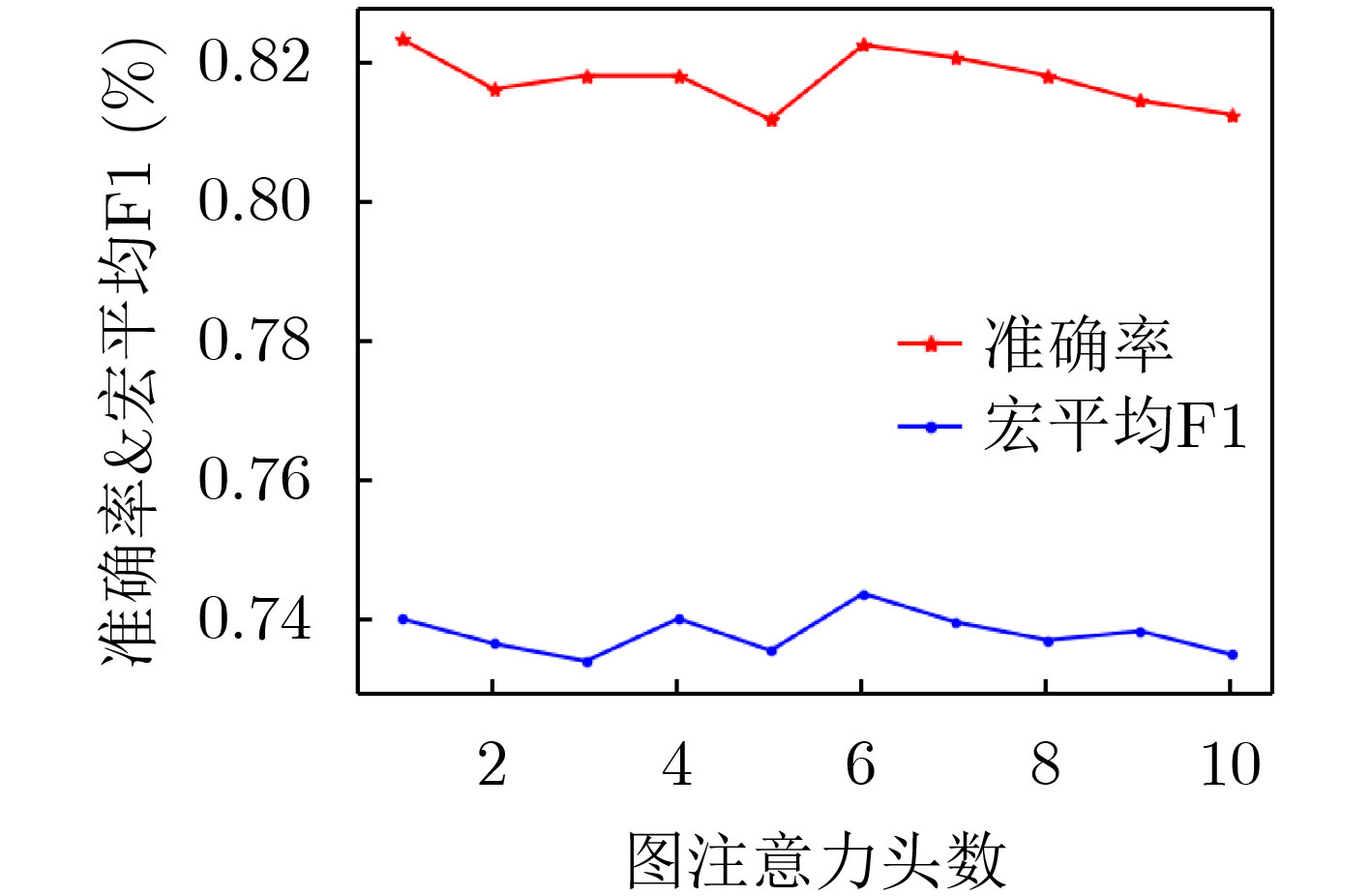

Abstract: Aspect-level sentiment analysis currently relies mainly on combining attention mechanisms with traditional neural networks to model aspects and context words. These methods ignore the syntactic dependency information and position information between aspects and context words in a sentence, which leads to an unreasonable allocation of attention weights. To address this, an Interactive Graph ATtention networks (IGATs) model for aspect-level sentiment analysis is proposed. The model first uses a Bidirectional Long Short-Term Memory (BiLSTM) network to learn semantic feature representations of the sentence and combines them with position information to generate a new sentence representation. A graph attention network is then built on this new representation to capture syntactic dependency information, and an interactive attention mechanism models the semantic relations between the aspect and its context words. Finally, a softmax layer produces the classification output. Experimental results on three public datasets show that IGATs significantly improves both accuracy and macro-averaged F1 compared with other existing models.
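The graph attention step mentioned in the abstract can be illustrated with a minimal sketch. It follows the standard single-head graph attention formulation (Veličković et al.), applied to token vectors and a dependency adjacency matrix; it is not the authors' implementation. The function name `gat_layer`, the argument layout, the added self-loops, and the use of ELU as the output nonlinearity are all assumptions made for illustration.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def elu(x):
    return x if x > 0 else math.exp(x) - 1.0


def matvec(W, h):
    # W is a list of rows (out_dim x in_dim); h is a vector of length in_dim.
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(H, adj, W, a):
    """Single-head graph attention over a dependency graph (illustrative).

    H   : list of n token vectors (e.g. position-aware BiLSTM states)
    adj : n x n 0/1 matrix, adj[i][j] = 1 if tokens i and j are linked
          in the dependency parse; self-loops are added here (assumption)
    W   : shared linear projection, out_dim x in_dim
    a   : attention vector of length 2 * out_dim
    """
    Z = [matvec(W, h) for h in H]
    n = len(Z)
    out = []
    for i, zi in enumerate(Z):
        nbrs = [j for j in range(n) if adj[i][j] or j == i]
        # e_ij = LeakyReLU(a^T [z_i || z_j]) for each neighbour j
        scores = [
            leaky_relu(sum(p * q for p, q in zip(a, zi + Z[j])))
            for j in nbrs
        ]
        # Softmax restricted to the neighbourhood of token i.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        # Attention-weighted sum of neighbour projections, then ELU.
        out.append([
            elu(sum(al * Z[j][k] for al, j in zip(alphas, nbrs)))
            for k in range(len(zi))
        ])
    return out
```

Restricting the softmax to dependency neighbours is what lets syntactically related words influence each other's representations, regardless of their distance in the surface word order.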


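Table 1 Dataset statistics

| Dataset | Positive | Neutral | Negative |
| --- | --- | --- | --- |
| Twitter-train | 1561 | 3127 | 1560 |
| Twitter-test | 173 | 346 | 173 |
| Laptop-train | 994 | 464 | 870 |
| Laptop-test | 341 | 169 | 128 |
| Restaurant-train | 2164 | 637 | 807 |
| Restaurant-test | 728 | 196 | 196 |

Table 2 Experimental platform

| Environment | Details |
| --- | --- |
| Operating system | Windows 10 Education |
| CPU | Intel(R) Core(TM) i7-7700 CPU @ 3.60 GHz |
| Memory | 16.0 GB |
| GPU | GTX 1080 |
| GPU memory | 8.0 GB |

Table 3 Hyperparameter settings

| Hyperparameter | Value |
| --- | --- |
| Word embedding dimension | 300 |
| Hidden state vector dimension | 300 |
| Batch size | 16 |
| Training epochs | 100 |
| Optimizer | Adam |
| Learning rate | 0.001 |
| Dropout rate | 0.3 |
| L2 regularization coefficient | 0.00001 |

Table 4 Performance comparison of the models (%)

| Model | Twitter Acc | Twitter Macro-F1 | Laptop Acc | Laptop Macro-F1 | Restaurant Acc | Restaurant Macro-F1 |
| --- | --- | --- | --- | --- | --- | --- |
| SVM | 63.40 | 63.30 | 70.49 | N/A | 80.16 | N/A |
| LSTM | 69.56 | 67.70 | 69.28 | 63.09 | 78.13 | 67.47 |
| MemNet | 71.48 | 69.90 | 70.64 | 65.17 | 79.61 | 69.64 |
| IAN | 72.50 | 70.81 | 72.05 | 67.38 | 79.26 | 70.09 |
| AOA | 72.30 | 70.20 | 72.62 | 67.52 | 79.97 | 70.42 |
| AOA-MultiACIA | 72.40 | 69.40 | 75.27 | 70.24 | 82.59 | 72.13 |
| ASGCN | 72.15 | 70.40 | 75.55 | 71.05 | 80.77 | 72.02 |
| GATs | 73.12 | 71.25 | 74.61 | 70.51 | 80.63 | 70.41 |
| IGATs | 75.29 | 73.40 | 76.02 | 72.05 | 82.32 | 73.99 |

Table 5 Number of trainable parameters of each model (M)

| Model | Trainable parameters |
| --- | --- |
| SVM | – |
| LSTM | 0.72 |
| MemNet | 0.36 |
| IAN | 2.17 |
| AOA | 2.10 |
| ASGCN | 2.17 |
| GATs | 1.81 |
| IGATs | 1.81 |

Table 6 Ablation study (%)

| Model | Twitter Acc | Twitter Macro-F1 | Laptop Acc | Laptop Macro-F1 | Restaurant Acc | Restaurant Macro-F1 |
| --- | --- | --- | --- | --- | --- | --- |
| BiLSTM+IAtt | 74.13 | 72.86 | 75.08 | 70.82 | 81.25 | 72.14 |
| BiLSTM+GAT+IAtt | 74.86 | 72.98 | 74.92 | 71.08 | 82.05 | 73.45 |
| BiLSTM+PE+IAtt | 74.42 | 72.35 | 76.65 | 72.75 | 82.23 | 74.01 |
| IGATs | 75.29 | 73.40 | 76.02 | 72.05 | 82.32 | 73.99 |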
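The two metrics reported in the result tables, accuracy and macro-averaged F1, can be computed as in the sketch below. It uses the standard definitions (per-class F1 averaged with equal weight over the three polarity classes); the function name and the label strings are illustrative assumptions, and the paper's own evaluation code is not shown in this text.

```python
def accuracy_and_macro_f1(y_true, y_pred,
                          labels=("positive", "neutral", "negative")):
    """Return (accuracy, macro-averaged F1) as fractions in [0, 1]."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    f1_scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        if precision + recall:
            f1_scores.append(2 * precision * recall / (precision + recall))
        else:
            # A class that is never predicted correctly contributes 0.
            f1_scores.append(0.0)

    # Macro averaging weights every class equally, regardless of its size.
    macro_f1 = sum(f1_scores) / len(f1_scores)
    return accuracy, macro_f1
```

Because every class counts equally, macro-F1 is more sensitive than accuracy to performance on the smaller classes, which matters for the imbalanced Laptop and Restaurant splits in the dataset statistics.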

