Ultrasound Image Segmentation Method of Thyroid Nodules Based on the Improved U-Net Network


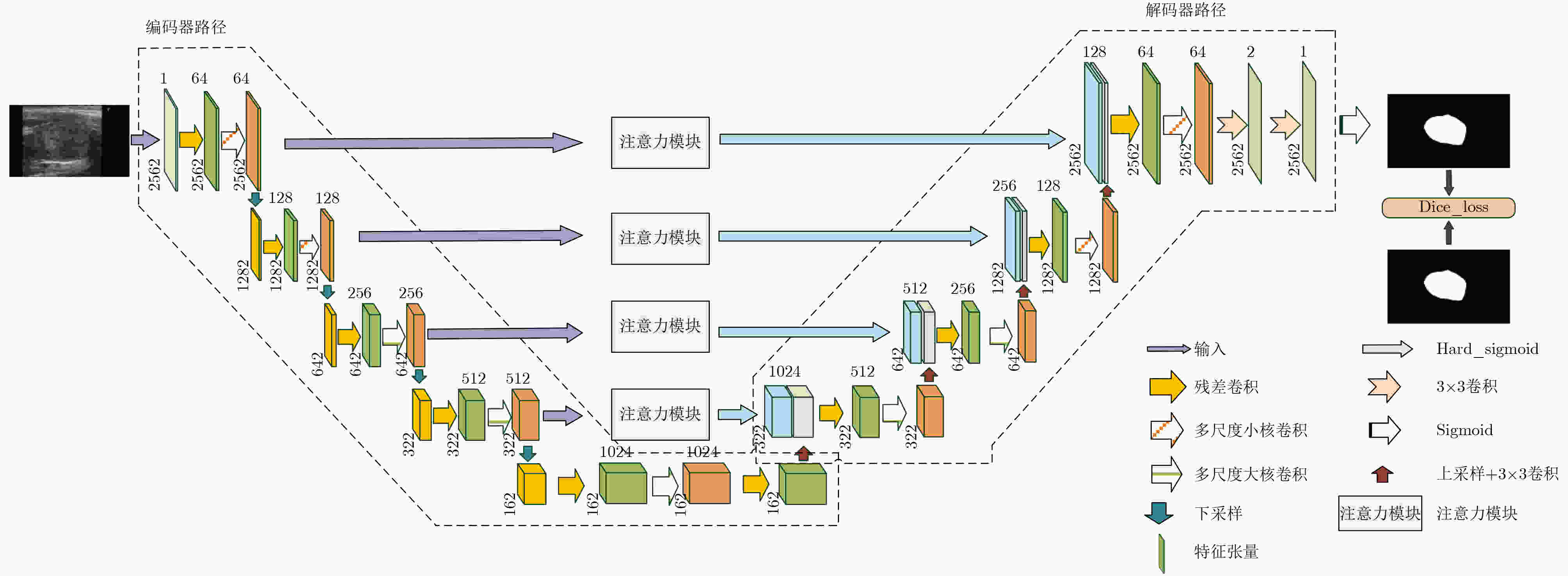

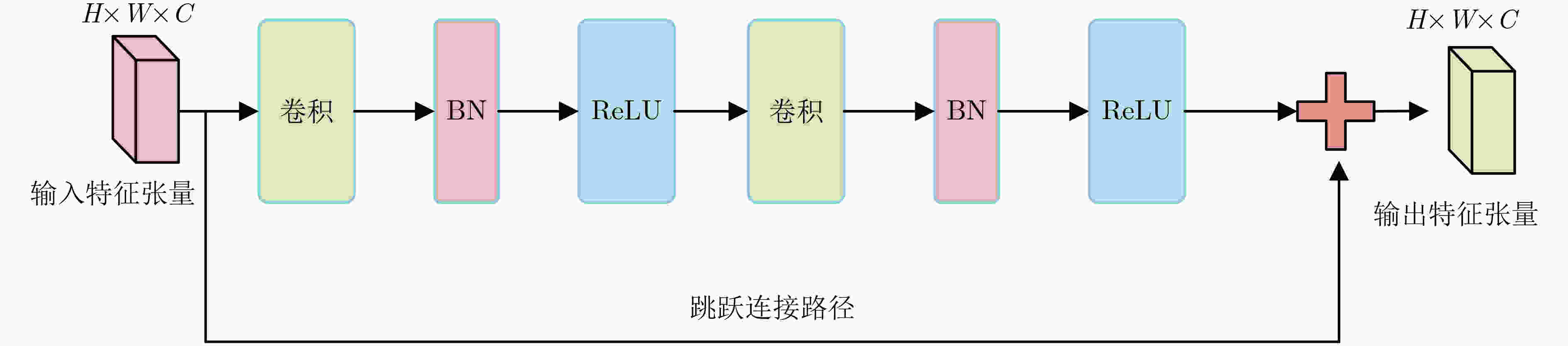

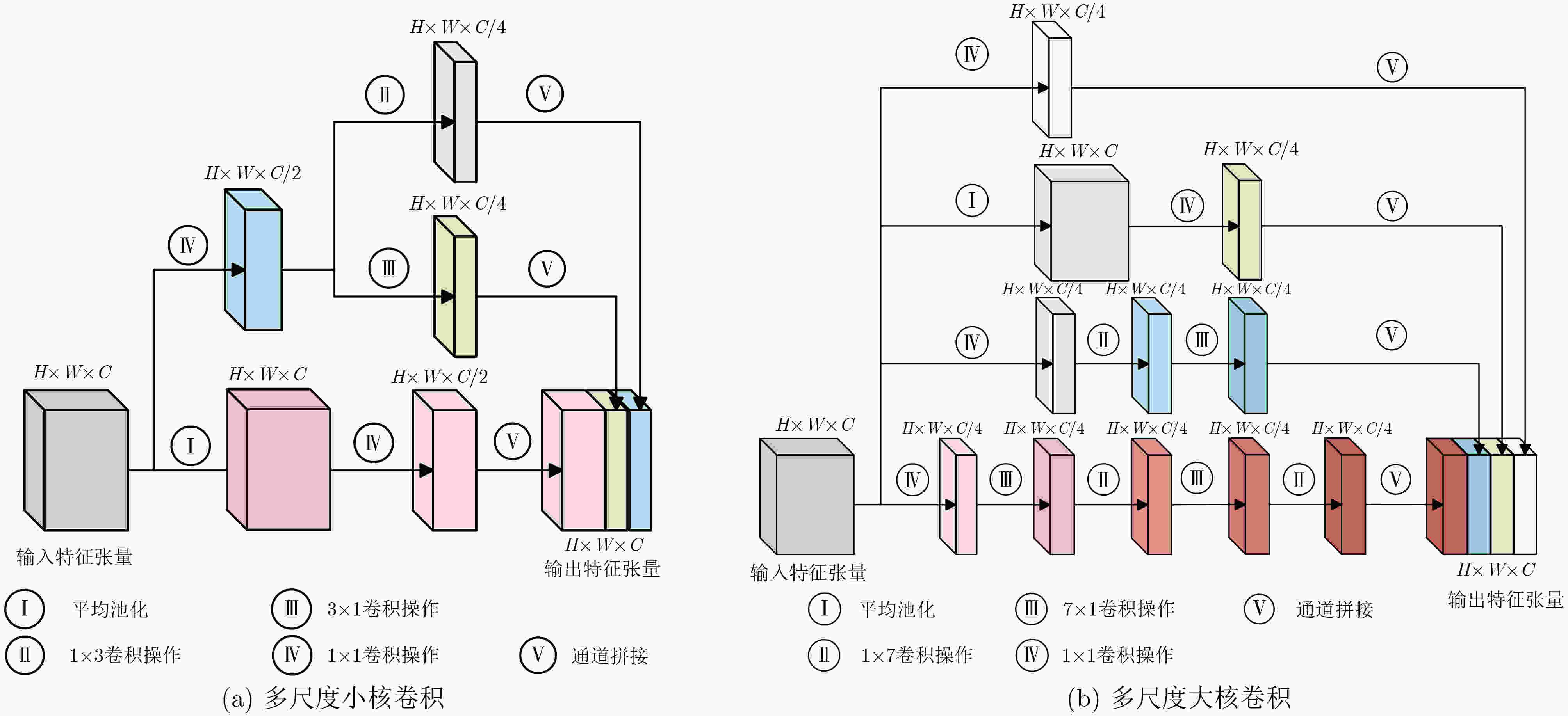

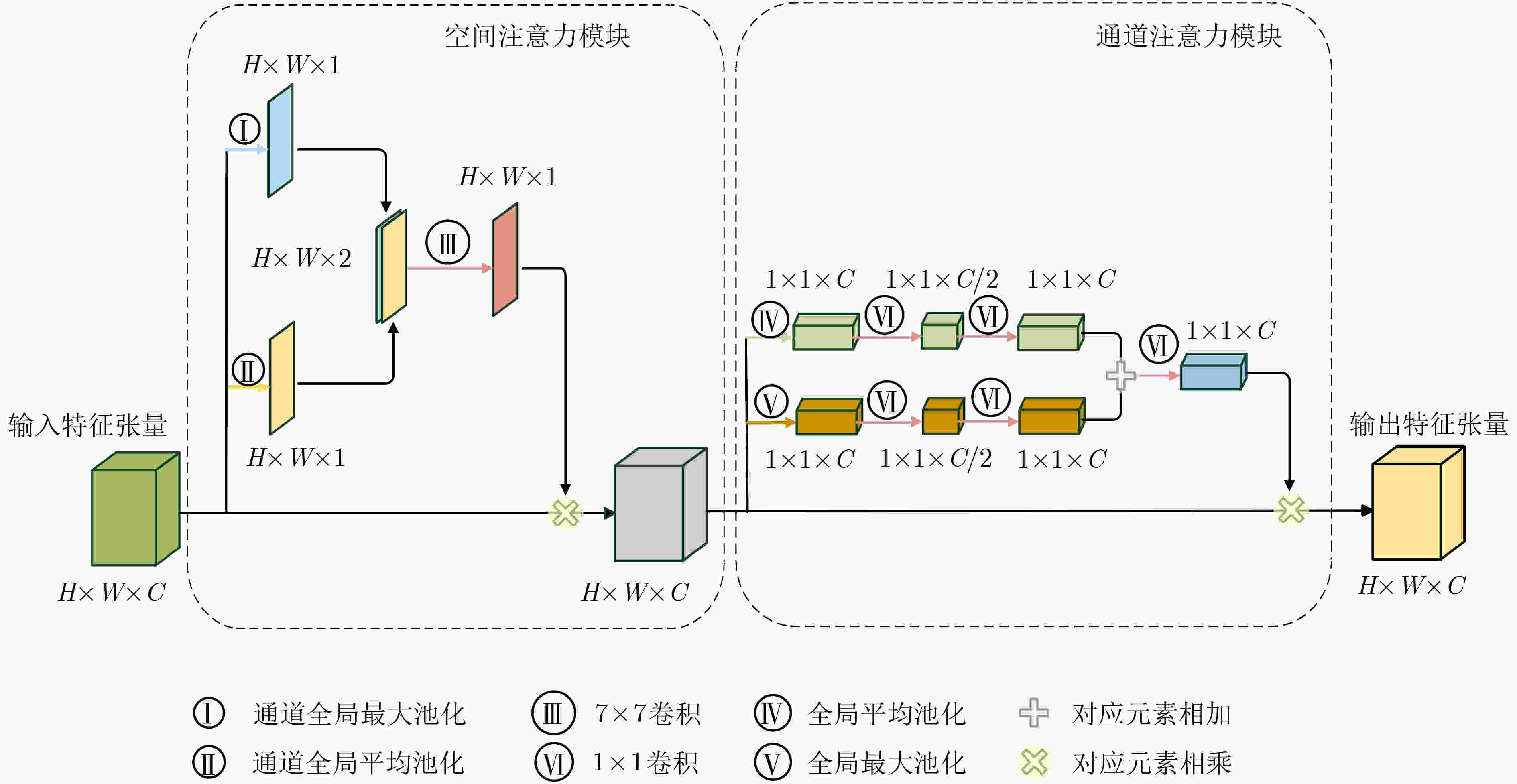

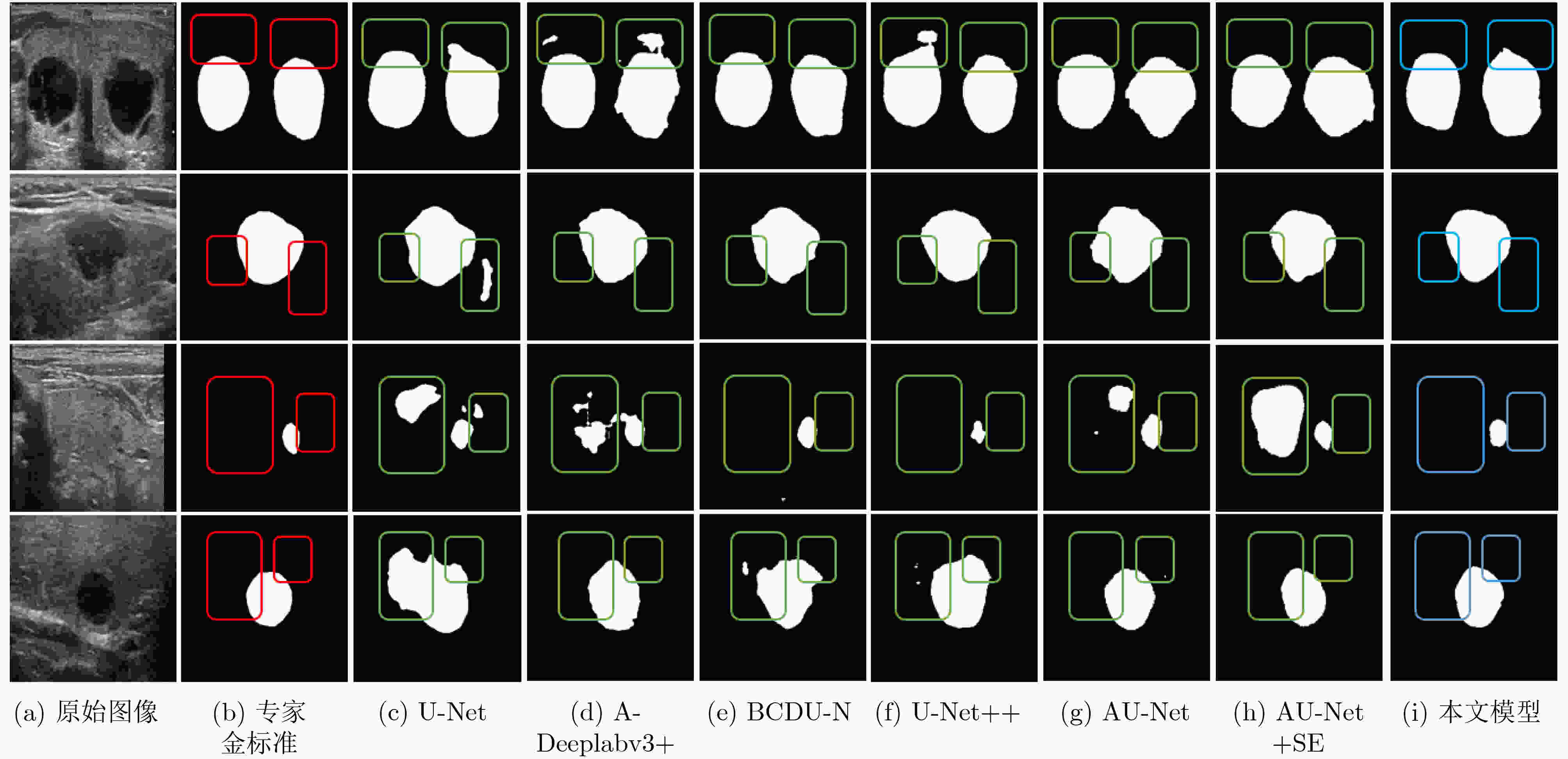

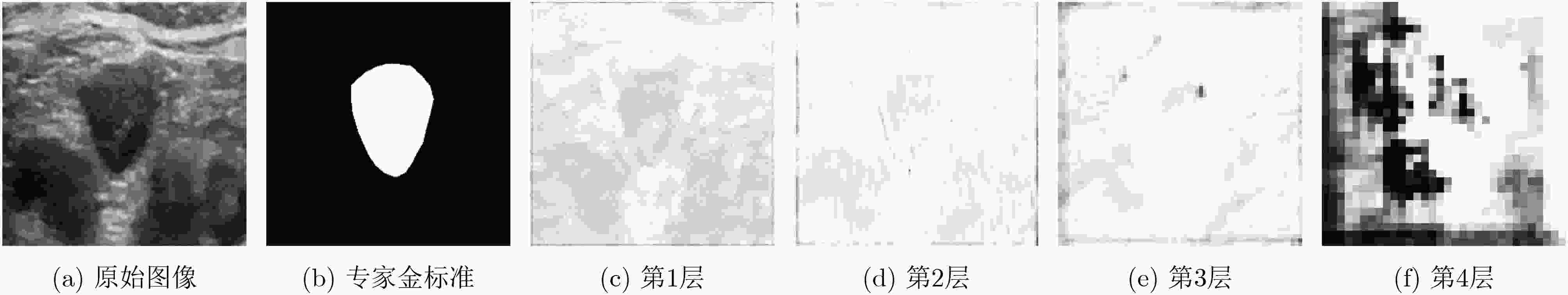

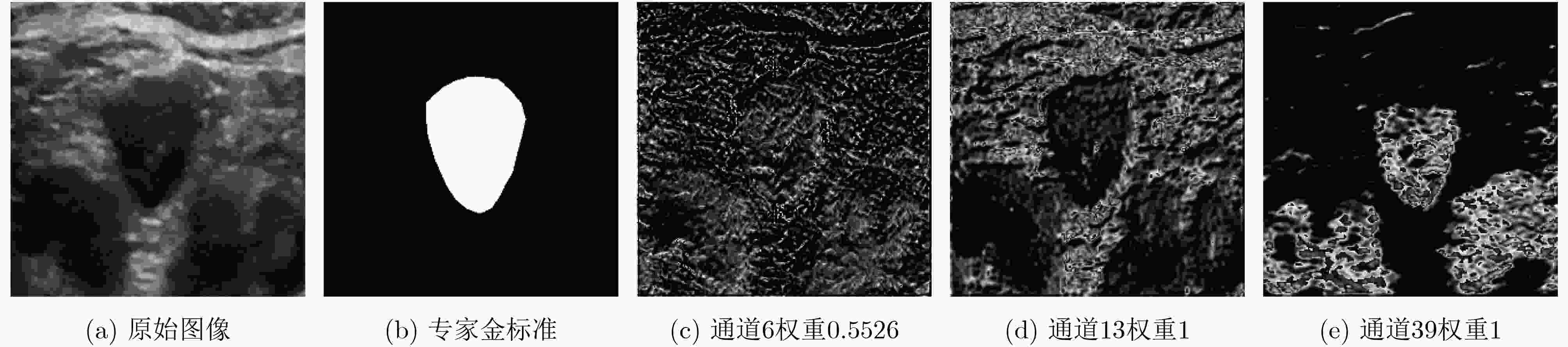

Abstract: Thyroid nodules vary widely in size, and their edges are often blurred in ultrasound images, which makes them difficult to segment. To address this problem, this paper proposes an ultrasound image segmentation method for thyroid nodules based on an improved U-Net network. First, the image passes through an encoder path with residual structures and multi-scale convolution structures, which extracts features at progressively lower resolution. Next, long skip connections equipped with attention modules preserve the edge contours in the feature tensors. Finally, a decoder path with residual structures and multi-scale convolution structures produces the segmentation result. Experimental results show that the proposed method reaches an average segmentation Dice value of 0.7822, outperforming the traditional U-Net method.


Key words:

- Image segmentation

- Ultrasound image of thyroid nodule

- Attention mechanism

- U-Net


Table 1 Quantitative segmentation results of different models

| Method | DSC | IoU | Hausdorff | FPR | FNR |
| --- | --- | --- | --- | --- | --- |
| U-Net | 0.6254±0.0719 | 0.4550±0.0709 | 35.1372±4.2380 | 0.0703±0.0216 | 0.1857±0.0532 |
| A-Deeplabv3+ | 0.6874±0.0241 | 0.5258±0.0280 | 26.7047±2.1334 | 0.0553±0.0123 | 0.2364±0.0244 |
| BCDU-Net | 0.7132±0.0086 | 0.5554±0.0104 | 22.8483±2.9490 | 0.0540±0.0245 | 0.2052±0.0416 |
| U-Net++ | 0.7177±0.0307 | 0.5597±0.0214 | 27.2110±2.3588 | 0.0407±0.0037 | 0.2556±0.0785 |
| AU-Net | 0.7413±0.0451 | 0.5889±0.0581 | 25.2377±1.1363 | 0.0426±0.0023 | 0.3024±0.0785 |
| AU-Net+SE | 0.7432±0.0231 | 0.5934±0.0289 | 24.3763±2.0682 | 0.0366±0.0022 | 0.2720±0.0301 |
| Proposed method | 0.7822±0.0113 | 0.6423±0.0151 | 19.2769±1.2694 | 0.0306±0.0064 | 0.1991±0.0197 |
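As a rough illustration of how the overlap-based columns in the table above (DSC, IoU, FPR, FNR) are commonly computed, the sketch below evaluates them for a pair of binary masks. The formulas are the standard pixel-wise definitions and are an assumption here, since this page does not state the exact definitions the paper uses. The function names and the toy masks are hypothetical and chosen only for illustration.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over two equally sized binary masks (nested lists of 0/1)."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    """Return DSC, IoU, FPR and FNR using the standard definitions (assumed, not taken from the paper)."""
    tp, fp, fn, tn = confusion_counts(pred, truth)

    def ratio(num, den):
        # Guard against empty denominators, e.g. when both masks are empty.
        return num / den if den else 0.0

    return {
        "DSC": ratio(2 * tp, 2 * tp + fp + fn),  # Dice similarity coefficient
        "IoU": ratio(tp, tp + fp + fn),          # intersection over union
        "FPR": ratio(fp, fp + tn),               # background pixels labelled as nodule
        "FNR": ratio(fn, fn + tp),               # nodule pixels that were missed
    }


# Toy 2x4 masks (hypothetical data): 1 marks a nodule pixel.
pred_mask = [[1, 1, 0, 0],
             [1, 0, 0, 0]]
true_mask = [[1, 0, 0, 0],
             [1, 1, 0, 0]]

metrics = segmentation_metrics(pred_mask, true_mask)
```

For a single image under these definitions, DSC and IoU are tied by DSC = 2·IoU / (1 + IoU), so the two columns rank methods similarly; FPR and FNR separate over-segmentation from under-segmentation.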

