Human Activity Recognition Technology Based on Sliding Window and Convolutional Neural Network


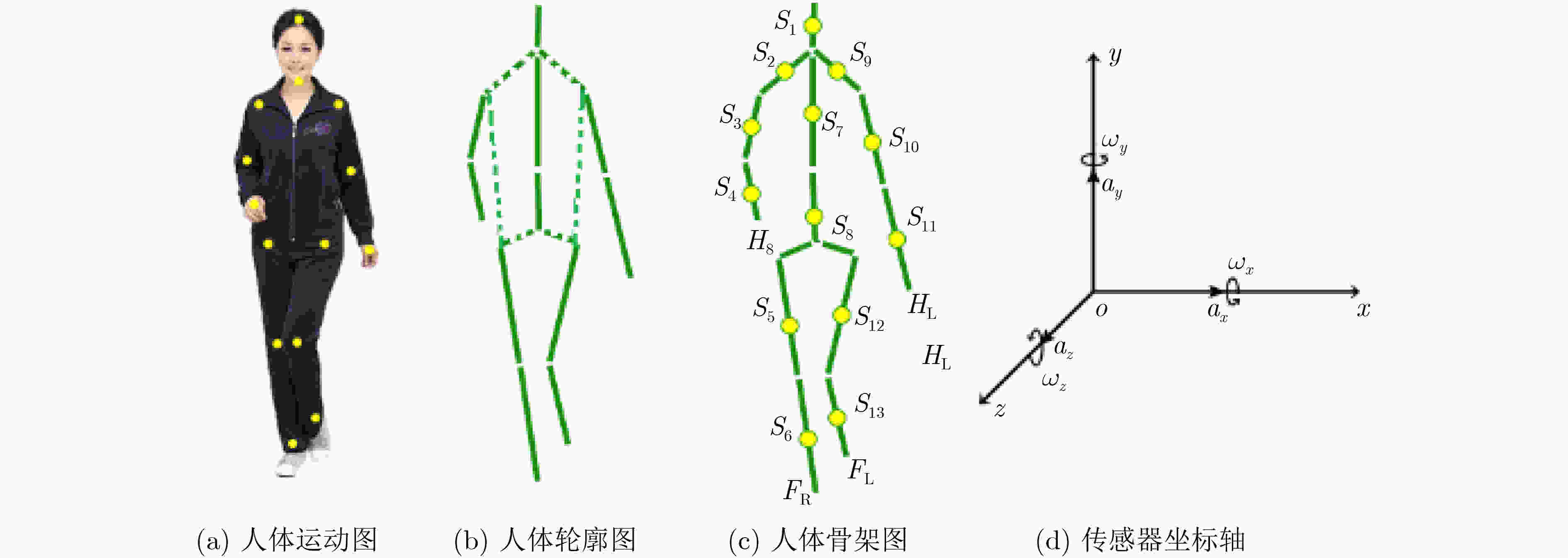

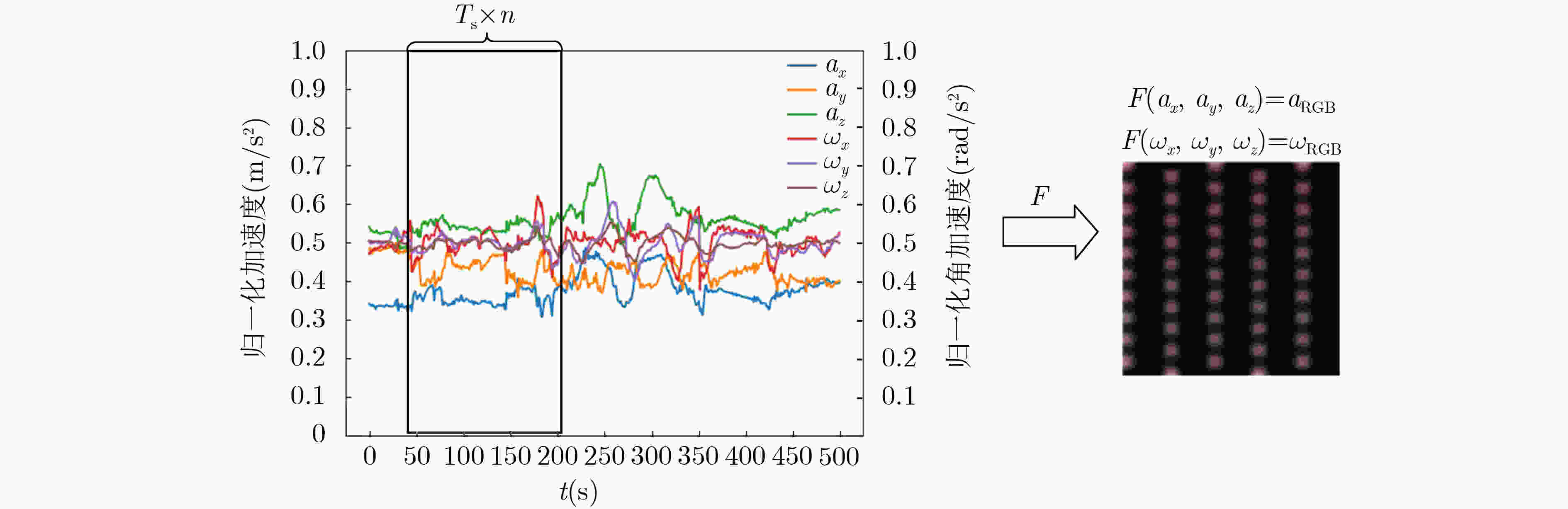

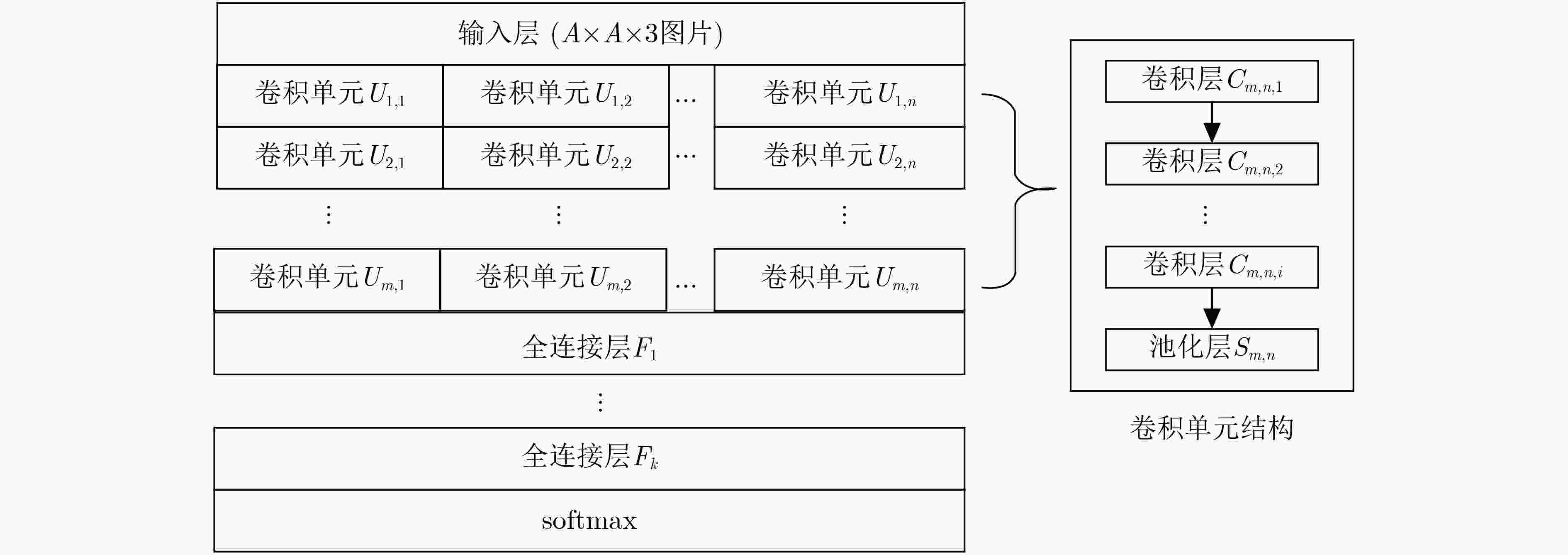

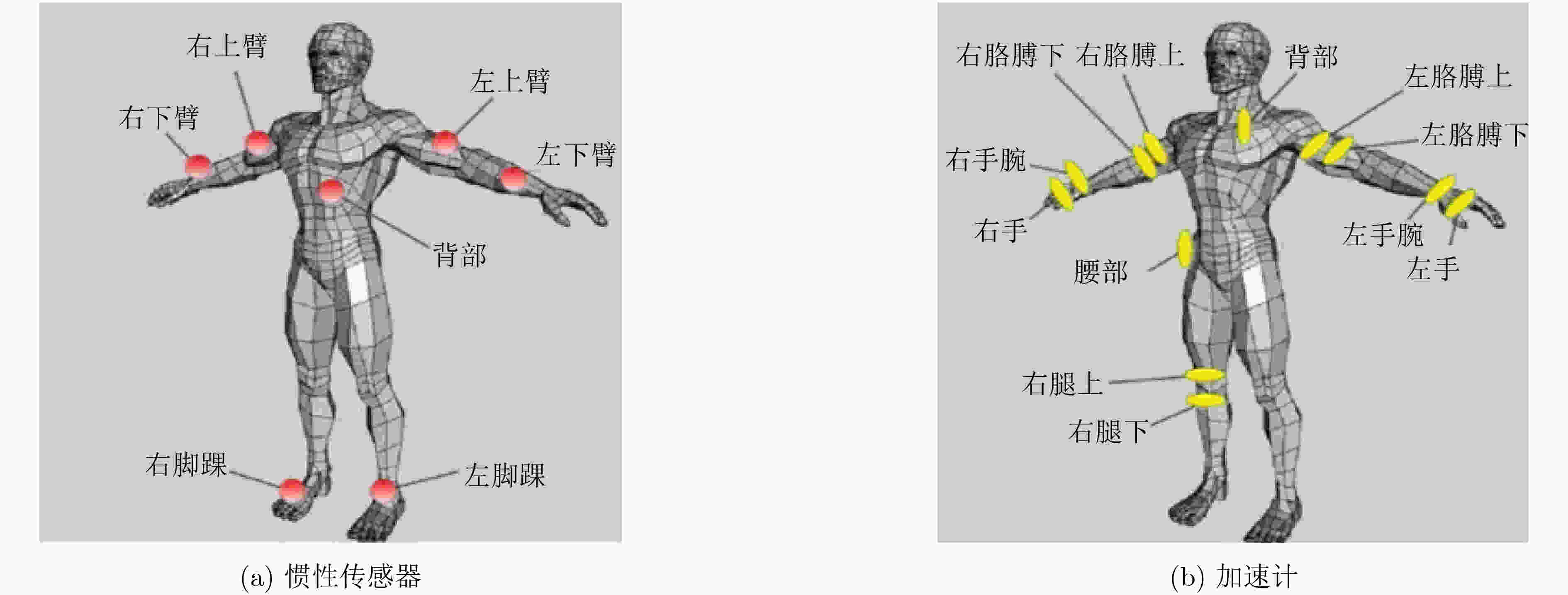

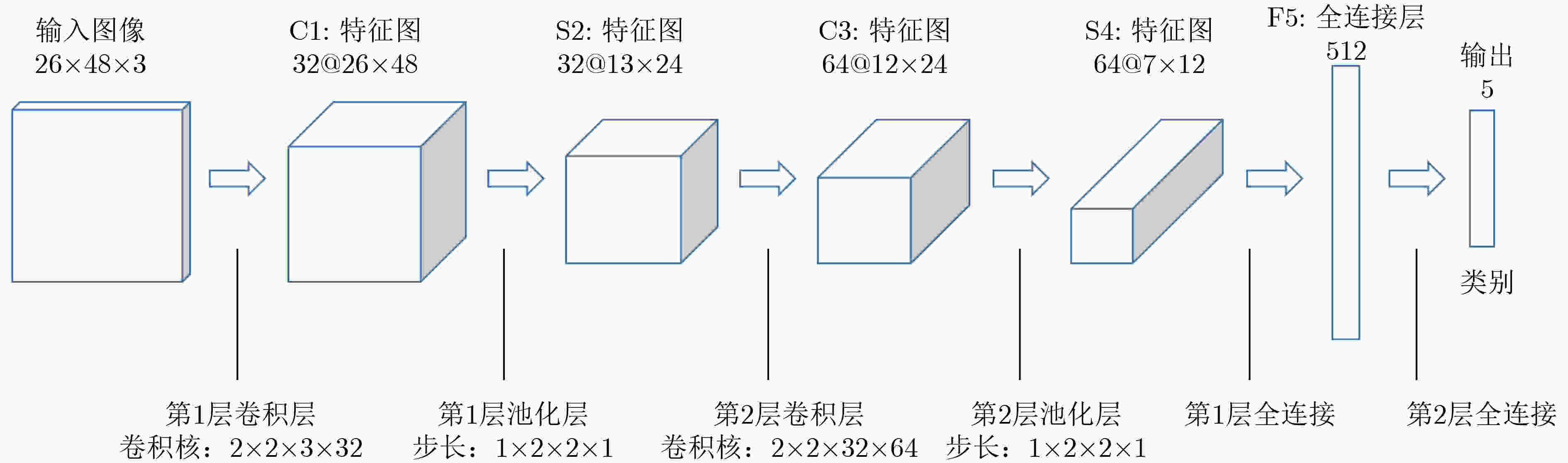

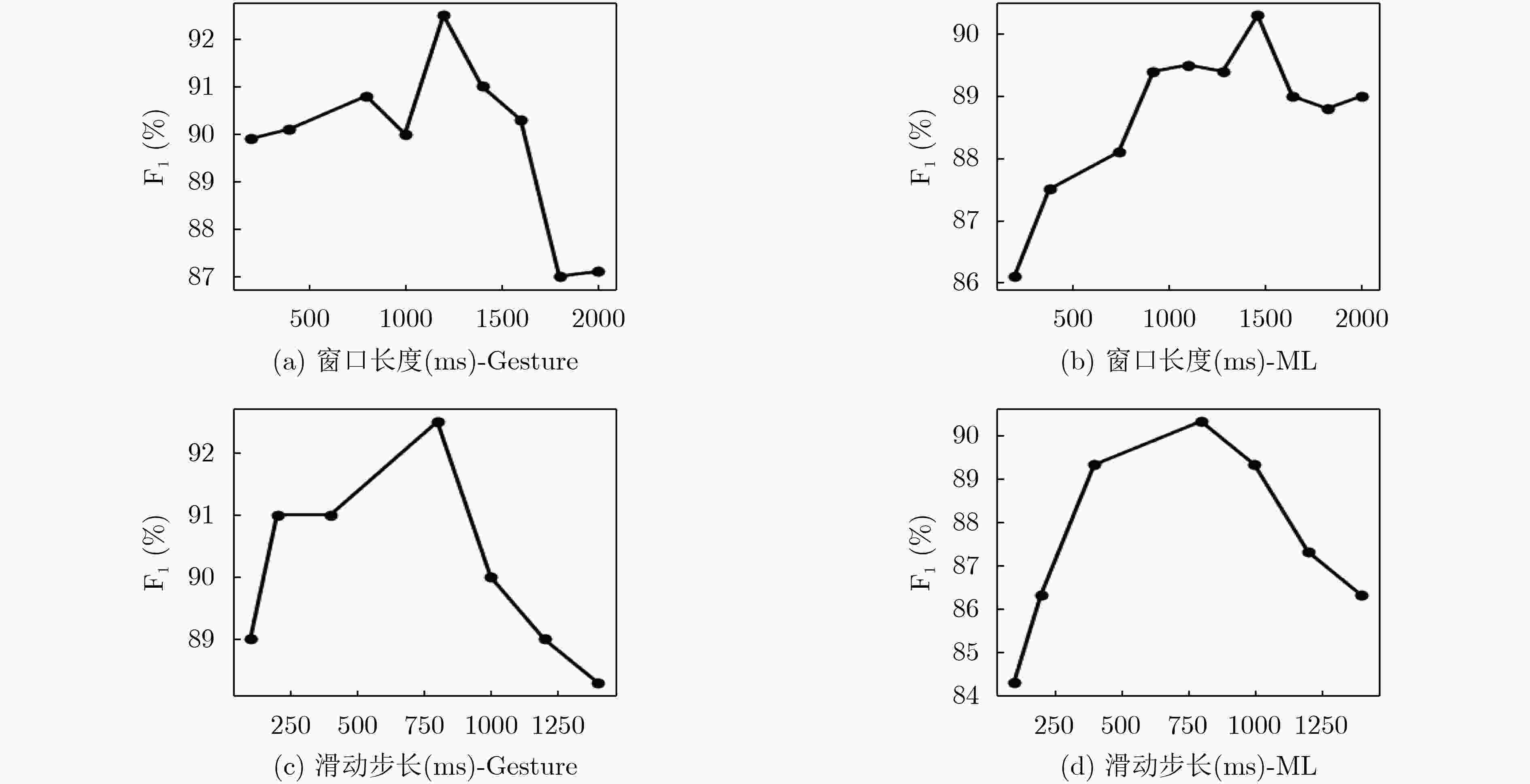

Abstract: Because there is no unified human activity model or corresponding specification, existing wearable human activity recognition (HAR) systems differ in the type, number, and placement of their sensors, which hinders their wider adoption. First, building on an analysis of the skeletal and mechanical characteristics of human activity, this paper establishes a human activity model in Cartesian coordinates and standardizes both where activity sensors are placed in the model and how activity data are normalized. Second, it introduces a sliding window technique to map human activity data onto RGB bitmaps and designs a Convolutional Neural Network for Human Activity Recognition (HAR-CNN). Finally, it builds a HAR-CNN instance and tests it experimentally on the public Opportunity human activity dataset. The results show that HAR-CNN reaches F1 scores of 90% on periodic, repetitive activities and 92% on discrete activities, and that it runs efficiently, with the shortest running time of the algorithms compared in Table 2.
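The abstract names the sliding-window mapping from activity data to RGB bitmaps but does not spell out its layout. The following is a minimal sketch under stated assumptions, not the authors' method: each sensor contributes x, y, z channels in the Cartesian model; each channel is min-max normalized to an integer from 0 to 255 using known per-channel bounds; and a window of `width` consecutive samples becomes a bitmap whose rows are sensors, whose columns are time steps, and whose pixel (R, G, B) is that sensor's (x, y, z) reading. The function names, the window width and step, and the axis-to-colour assignment are all hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple

Row = List[int]


def min_max_normalize(samples: Sequence[Sequence[float]],
                      lo: Sequence[float],
                      hi: Sequence[float]) -> List[Row]:
    """Scale each channel to an integer in 0..255 using assumed per-channel bounds."""
    out: List[Row] = []
    for row in samples:
        scaled = []
        for value, low, high in zip(row, lo, hi):
            span = high - low
            x = 0.0 if span == 0 else (value - low) / span
            x = min(max(x, 0.0), 1.0)  # clip readings outside [low, high]
            scaled.append(round(x * 255))
        out.append(scaled)
    return out


def sliding_windows(samples: Sequence[Row], width: int,
                    step: int) -> Iterator[Sequence[Row]]:
    """Yield windows of `width` consecutive samples, advancing `step` samples each time."""
    for start in range(0, len(samples) - width + 1, step):
        yield samples[start:start + width]


def window_to_rgb(window: Sequence[Row]) -> List[List[Tuple[int, ...]]]:
    """Turn one window into a bitmap: one row per sensor, one column per time step,
    pixel = the sensor's (x, y, z) values read as (R, G, B).
    Hypothetical layout: channels are assumed to be ordered as x, y, z triples per sensor."""
    if not window:
        return []
    n_channels = len(window[0])
    if n_channels % 3 != 0:
        raise ValueError("channel count must be a multiple of 3 (x, y, z per sensor)")
    return [[tuple(t[3 * s:3 * s + 3]) for t in window]
            for s in range(n_channels // 3)]


if __name__ == "__main__":
    # Two hypothetical 3-axis sensors (6 channels), 6 time steps.
    data = [[i, i, i, -i, 0, 2 * i] for i in range(6)]
    lo, hi = [0, 0, 0, -5, 0, 0], [5, 5, 5, 0, 1, 10]
    norm = min_max_normalize(data, lo, hi)
    for img in map(window_to_rgb, sliding_windows(norm, width=4, step=2)):
        print(len(img), "x", len(img[0]), img[0][0], img[1][0])
```

With window width W and step S, consecutive bitmaps overlap by W - S samples, so a short activity is likely to fall entirely inside at least one window; each bitmap has one row per sensor and W columns, a shape a 2D CNN can consume directly.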


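Table 1 Description of the Opportunity dataset

| Category | Activity | Occurrences |
|---|---|---|
| Periodic | Stand | 960 |
| | Sit | 169 |
| | Walk | 1711 |
| | Lie | 40 |
| Aperiodic | Open door 1 | 125 |
| | Close door 1 | 122 |
| | Open door 2 | 119 |
| | Close door 2 | 120 |
| | Open fridge | 209 |
| | Close fridge | 213 |
| | Drink | 136 |
| | Clean table | 134 |
| | Toggle light (on/off) | 129 |
| | Open dishwasher | 128 |
| | Close dishwasher | 124 |
| | Open drawer 1 | 123 |
| | Close drawer 1 | 125 |
| | Open drawer 2 | 116 |
| | Close drawer 2 | 112 |
| | Open drawer 3 | 210 |
| | Close drawer 3 | 216 |

Table 2 Comparison of F1 scores of different algorithms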

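| Algorithm | Gesture | Gesture (NULL) | ML | ML (NULL) | Running time (s) |
|---|---|---|---|---|---|
| Bayes Network | 0.79 | 0.81 | 0.82 | 0.74 | 32.9 |
| Random Forest | 0.63 | 0.72 | 0.73 | 0.69 | 21.2 |
| Naïve Bayes | 0.54 | 0.66 | 0.75 | 0.74 | 8.1 |
| Random Tree | 0.75 | 0.88 | 0.87 | 0.85 | 7.0 |
| DeepConvLSTM | 0.86 | 0.91 | 0.93 | 0.89 | 6.6 |
| MS-2DCNN | 0.81 | 0.89 | 0.92 | 0.85 | 5.8 |
| DRNN | 0.84 | 0.92 | 0.91 | 0.88 | 7.3 |
| HAR-CNN | 0.90 | 0.92 | 0.92 | 0.90 | 3.7 |

Table 3 Precision and recall (%) for ML (modes of locomotion) activity recognition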

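| Activity | DRNN precision | DRNN recall | HAR-CNN precision | HAR-CNN recall |
|---|---|---|---|---|
| NULL | 90 | 91 | 92 | 92 |
| Stand | 92 | 91 | 96 | 92 |
| Walk | 79 | 82 | 81 | 94 |
| Sit | 92 | 85 | 93 | 73 |
| Lie | 90 | 85 | 90 | 85 |
| Mean | 89 | 87 | 90 | 87 |
| Standard deviation | 5 | 4 | 5 | 8 |

Table 4 Precision and recall (%) for GR (gesture) activity recognition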

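| Activity | DRNN precision | DRNN recall | HAR-CNN precision | HAR-CNN recall |
|---|---|---|---|---|
| NULL | 95 | 97 | 94 | 95 |
| Open door 1 | 87 | 92 | 92 | 87 |
| Open door 2 | 93 | 93 | 96 | 93 |
| Close door 1 | 90 | 92 | 96 | 80 |
| Close door 2 | 95 | 92 | 96 | 91 |
| Open fridge | 85 | 65 | 90 | 80 |
| Close fridge | 83 | 86 | 87 | 74 |
| Open dishwasher | 88 | 74 | 83 | 83 |
| Close dishwasher | 80 | 81 | 85 | 83 |
| Open drawer 1 | 79 | 77 | 88 | 86 |
| Close drawer 1 | 80 | 77 | 85 | 75 |
| Open drawer 2 | 77 | 83 | 97 | 82 |
| Close drawer 2 | 79 | 88 | 83 | 83 |
| Open drawer 3 | 86 | 85 | 96 | 90 |
| Close drawer 3 | 86 | 84 | 91 | 85 |
| Clean table | 93 | 82 | 100 | 100 |
| Drink | 89 | 88 | 78 | 95 |
| Toggle light (on/off) | 94 | 69 | 91 | 95 |
| Mean | 87 | 84 | 90 | 87 |
| Standard deviation | 6 | 8 | 6 | 7 |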
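Tables 3 and 4 report per-class precision and recall together with Mean and Standard deviation rows, but this excerpt does not say how those summary rows were computed. The sketch below, whose names are illustrative, checks that the plain mean and the population standard deviation (`statistics.pstdev`) reproduce the Table 3 summary rows after rounding to whole percentage points, and gives the standard per-class F1 formula, F1 = 2PR / (P + R). The use of the population (rather than sample) standard deviation is an inference from the numbers, not something the paper states here.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

# Per-class values (%) transcribed from Table 3, rows NULL, Stand, Walk, Sit, Lie.
TABLE3: Dict[str, List[int]] = {
    "DRNN precision": [90, 92, 79, 92, 90],
    "DRNN recall": [91, 91, 82, 85, 85],
    "HAR-CNN precision": [92, 96, 81, 93, 90],
    "HAR-CNN recall": [92, 92, 94, 73, 85],
}


def summary(values: List[int]) -> Tuple[int, int]:
    """Mean and population standard deviation, rounded to whole percentage points."""
    return round(mean(values)), round(pstdev(values))


def f1(precision: float, recall: float) -> float:
    """F1 score: harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for name, column in TABLE3.items():
        print(f"{name}: mean, std = {summary(column)}")
    # Per-class F1 for a few HAR-CNN rows of Table 4: (precision, recall).
    for label, (p, r) in {"NULL": (94, 95), "Close door 1": (96, 80),
                          "Clean table": (100, 100)}.items():
        print(f"{label}: F1 = {f1(p, r):.1f}")
```

Table 3 is used for the check because Table 4's HAR-CNN recall column averages exactly 86.5: the table prints 87, whereas Python's `round()` rounds half to even and returns 86. Applying `f1()` to averaged precision and recall also does not in general equal the average of per-class F1 scores, so these values need not match Table 2.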

