Self-attention Capsule Network Rate Prediction with Review Quality


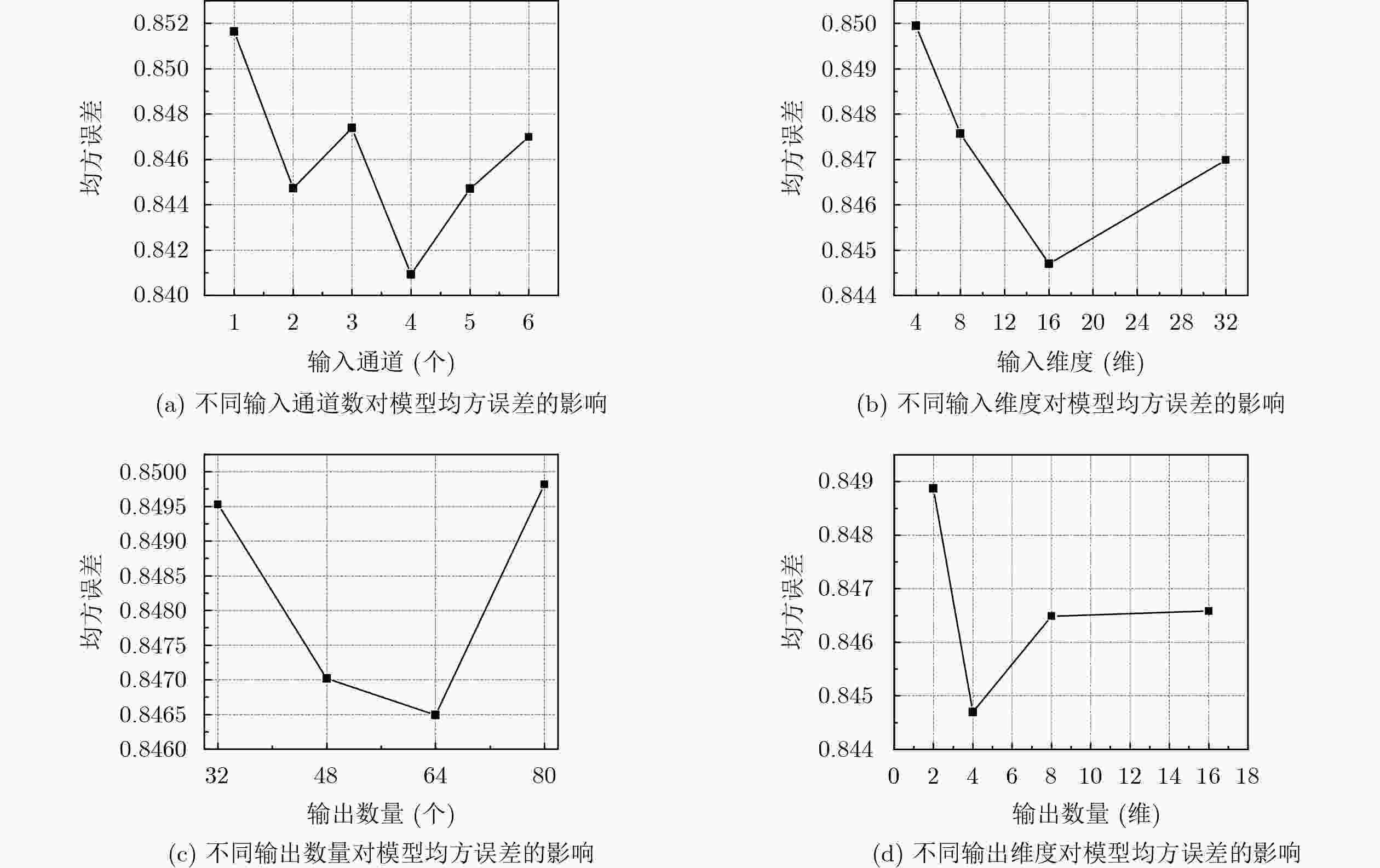

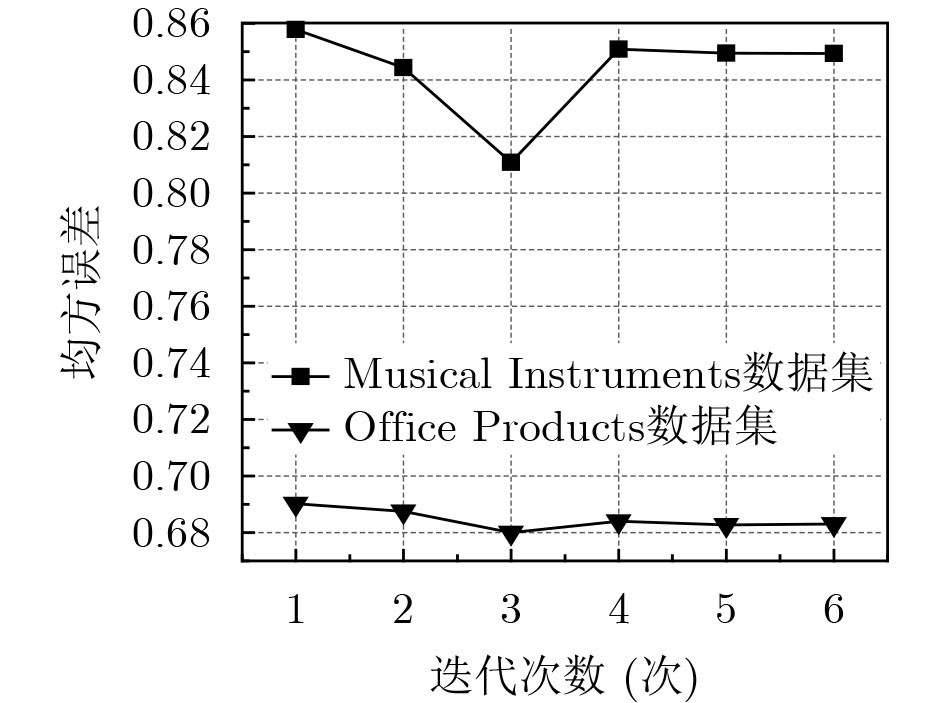

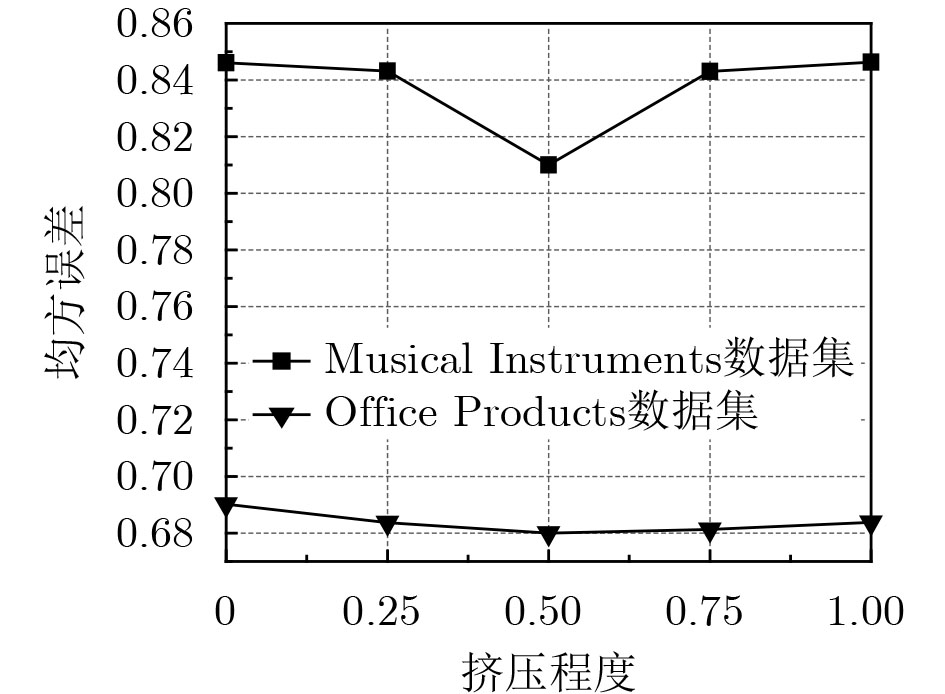

Abstract: Review-based recommendation systems generally use convolutional neural networks (CNNs) to capture the semantics of reviews. However, CNNs exhibit “invariance”: they attend only to whether a feature is present and ignore its details, and the pooling operation discards some important information in the text. In addition, using all review documents from user-item interactions as auxiliary information does not improve the quality of the learned semantics; instead, low-quality reviews degrade it and lead to inaccurate recommendations. To address these two problems, this paper proposes the Self-Attention Capsule network Rate prediction (SACR) model. SACR mines review documents with a self-attention capsule network that retains feature details, uses user and item IDs to mark low-quality reviews, and fuses the two representations to predict the rating. This paper also improves the squash function of the capsule to obtain more accurate high-level capsules. Experimental results show that SACR significantly improves prediction accuracy over several classic models and recent models.
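The abstract states that the paper modifies the capsule squash function, but the modified form is not given in this section. As a point of reference only, the sketch below shows the standard squash function from the original dynamic-routing capsule formulation (Sabour et al.), which shrinks a capsule vector to a length in [0, 1) while keeping its direction. It is not the paper's improved version; the names `squash` and `eps` are illustrative assumptions.

```python
import math


def squash(s, eps=1e-9):
    """Standard capsule squash (NOT the paper's modified variant).

    v = (|s|^2 / (1 + |s|^2)) * (s / |s|)
    `eps` is an assumed small constant that avoids division by zero.
    """
    sq_norm = sum(x * x for x in s)           # |s|^2
    norm = math.sqrt(sq_norm)                 # |s|
    scale = sq_norm / (1.0 + sq_norm) / (norm + eps)
    return [scale * x for x in s]


def length(v):
    """Euclidean length of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in v))


# A long input vector maps to a length close to 1, a short one close to 0,
# and the direction is unchanged in both cases.
long_capsule = squash([3.0, 4.0])
short_capsule = squash([0.03, 0.04])
print(length(long_capsule), length(short_capsule))
```

In capsule networks the output length is usually read as the probability that the feature represented by the capsule is present, which is why the function bounds it below 1.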


Key words:

- Recommendation system

- Capsule network

- Attention

- Review quality

- Rate prediction


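Table 1. Model notation

| Symbol | Definition | Symbol | Definition |
| --- | --- | --- | --- |
| $N$, $M$ | Number of users and items in the dataset | $\alpha_i$, $\beta_j$ | ID embeddings of user $i$ and item $j$ |
| $R$ | Review document of a user or an item | $u_i$, $v_j$ | Capsules of user $i$ and item $j$ |
| $r_{ij}$ | Rating of item $j$ by user $i$ in the rating matrix | $C$, $e^1$ | Number of channels and dimension of the input capsules |
| $\hat r_{ij}$ | Predicted rating of item $j$ by user $i$ | $D$, $e$ | Number and dimension of the output capsules |
| $W$, $b$ | Weight matrices and bias vectors in the model | $\lambda$ | Number of routing iterations |
| $d$ | Word embedding dimension | $F$ | Number of ratings |

Table 2. Statistics of each dataset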

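| Dataset | Users | Items | Reviews | Avg. reviews per user | Avg. reviews per item |
| --- | --- | --- | --- | --- | --- |
| Musical Instruments | 1429 | 900 | 10261 | 7.2 | 11.4 |
| Office Products | 4905 | 2420 | 53258 | 10.9 | 22.0 |
| Digital Music | 5540 | 3568 | 64706 | 11.7 | 18.1 |
| Tools and Home Improvement | 16638 | 10217 | 134476 | 8.1 | 13.2 |
| Video Games | 24303 | 10672 | 231780 | 9.5 | 21.7 |
| Toys and Games | 19412 | 11924 | 167597 | 8.6 | 14.1 |
| Kindle Store | 68223 | 61935 | 982619 | 14.4 | 15.9 |
| Movies and TV | 123960 | 50052 | 1679533 | 13.5 | 33.6 |

Table 3. Comparison of experimental results across models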

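| Dataset | PMF | ConvMF | DeepCoNN | NARRE | CARP | SACR |
| --- | --- | --- | --- | --- | --- | --- |
| Musical Instruments | 1.398 | 0.903 | 0.893 | 1.004 | 0.800 | 0.810 |
| Office Products | 1.092 | 0.767 | 0.698 | 0.931 | 0.728 | 0.680 |
| Digital Music | 1.206 | 0.876 | 0.809 | 1.270 | 0.889 | 0.796 |
| Tools and Home Improvement | 1.566 | 1.056 | 0.958 | 1.196 | 0.964 | 0.924 |
| Video Games | 1.672 | 1.174 | 1.134 | 1.205 | 1.166 | 1.104 |
| Toys and Games | 1.711 | 0.877 | 0.810 | 0.796 | 0.800 | 0.780 |
| Kindle Store | 0.984 | 0.623 | 0.621 | 0.605 | 0.610 | 0.590 |
| Movies and TV | 1.669 | 1.010 | 1.026 | 0.994 | 0.997 | 0.930 |
| Average MSE | 1.412 | 0.911 | 0.866 | 1.001 | 0.869 | 0.826 |

Table 4. Comparison of sub-model prediction accuracy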

| Model | Musical Instruments | Office Products | Digital Music | Tools and Home Improvement | Video Games |
| --- | --- | --- | --- | --- | --- |
| SACR-base | 0.853 | 0.681 | 0.805 | 0.924 | 1.105 |
| SACR-cnn | 0.886 | 0.691 | 0.808 | 0.936 | 1.106 |
| DeepCoNN | 0.893 | 0.698 | 0.809 | 0.958 | 1.134 |
| SACR | 0.810 | 0.680 | 0.796 | 0.921 | 1.104 |
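The "Average MSE" row of Table 3 is the unweighted mean of each model's eight per-dataset values. The sketch below recomputes it from the entries as printed in Table 3. Because those entries are already rounded to three decimals, the recomputed means can differ from the reported ones in the third decimal place. The variable names are illustrative.

```python
# Per-dataset MSE values transcribed from Table 3, in row order
# (Musical Instruments, Office Products, Digital Music,
#  Tools and Home Improvement, Video Games, Toys and Games,
#  Kindle Store, Movies and TV).
table3 = {
    "PMF":      [1.398, 1.092, 1.206, 1.566, 1.672, 1.711, 0.984, 1.669],
    "ConvMF":   [0.903, 0.767, 0.876, 1.056, 1.174, 0.877, 0.623, 1.010],
    "DeepCoNN": [0.893, 0.698, 0.809, 0.958, 1.134, 0.810, 0.621, 1.026],
    "NARRE":    [1.004, 0.931, 1.270, 1.196, 1.205, 0.796, 0.605, 0.994],
    "CARP":     [0.800, 0.728, 0.889, 0.964, 1.166, 0.800, 0.610, 0.997],
    "SACR":     [0.810, 0.680, 0.796, 0.924, 1.104, 0.780, 0.590, 0.930],
}

# "Average MSE" row as reported in Table 3.
reported_avg = {
    "PMF": 1.412, "ConvMF": 0.911, "DeepCoNN": 0.866,
    "NARRE": 1.001, "CARP": 0.869, "SACR": 0.826,
}


def mean(values):
    """Unweighted arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


recomputed_avg = {model: mean(vals) for model, vals in table3.items()}

for model, avg in recomputed_avg.items():
    print(f"{model:9s} recomputed={avg:.3f} reported={reported_avg[model]:.3f}")

# Model with the lowest mean MSE across the eight datasets.
best_model = min(recomputed_avg, key=recomputed_avg.get)
print("lowest average MSE:", best_model)
```

The mean is unweighted, so a small dataset such as Musical Instruments counts as much as Movies and TV. A review-weighted mean would need the review counts from Table 2.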

