Instance Segmentation of Thermal Imaging Temperature Measurement Region Based on Infrared Attention Enhancement Mechanism


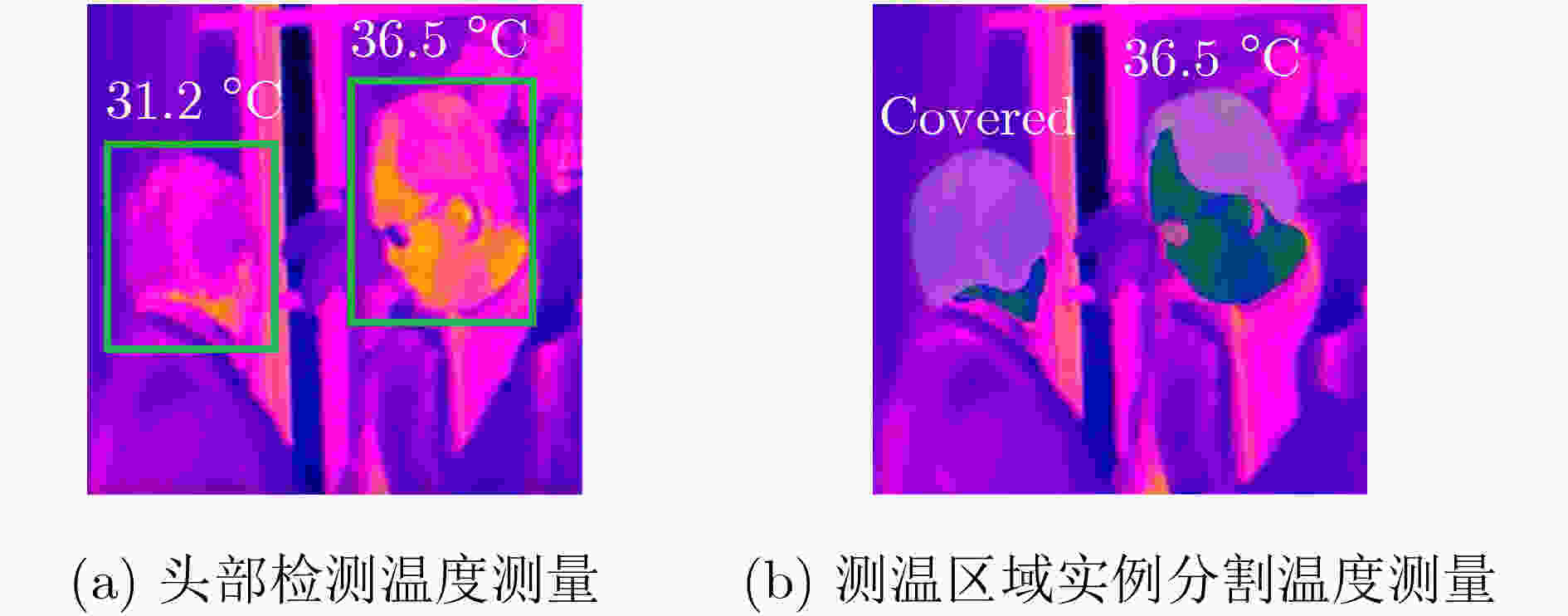

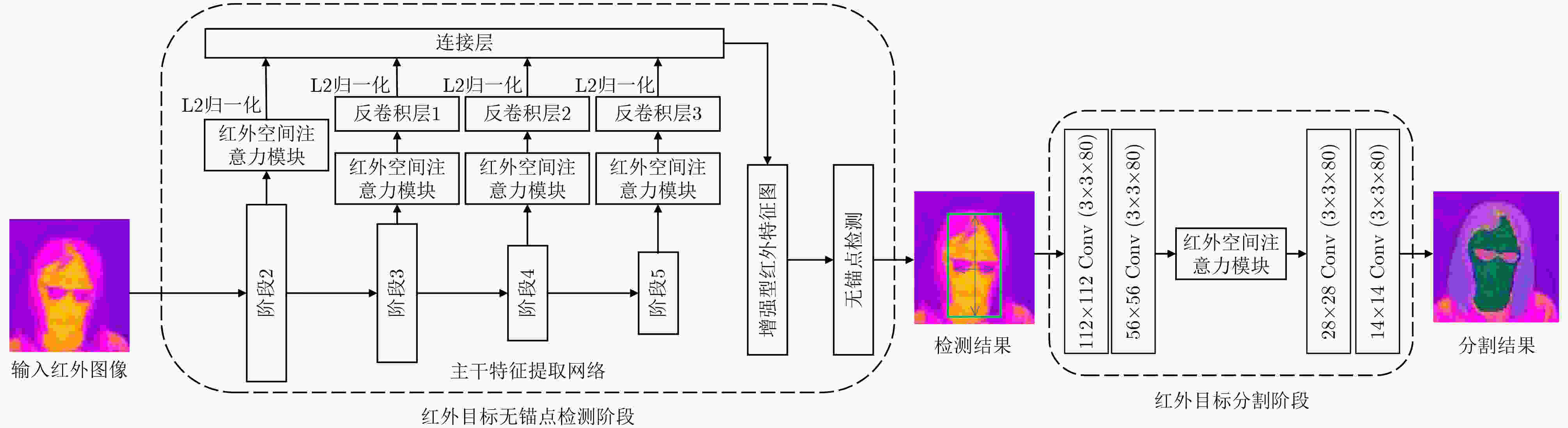

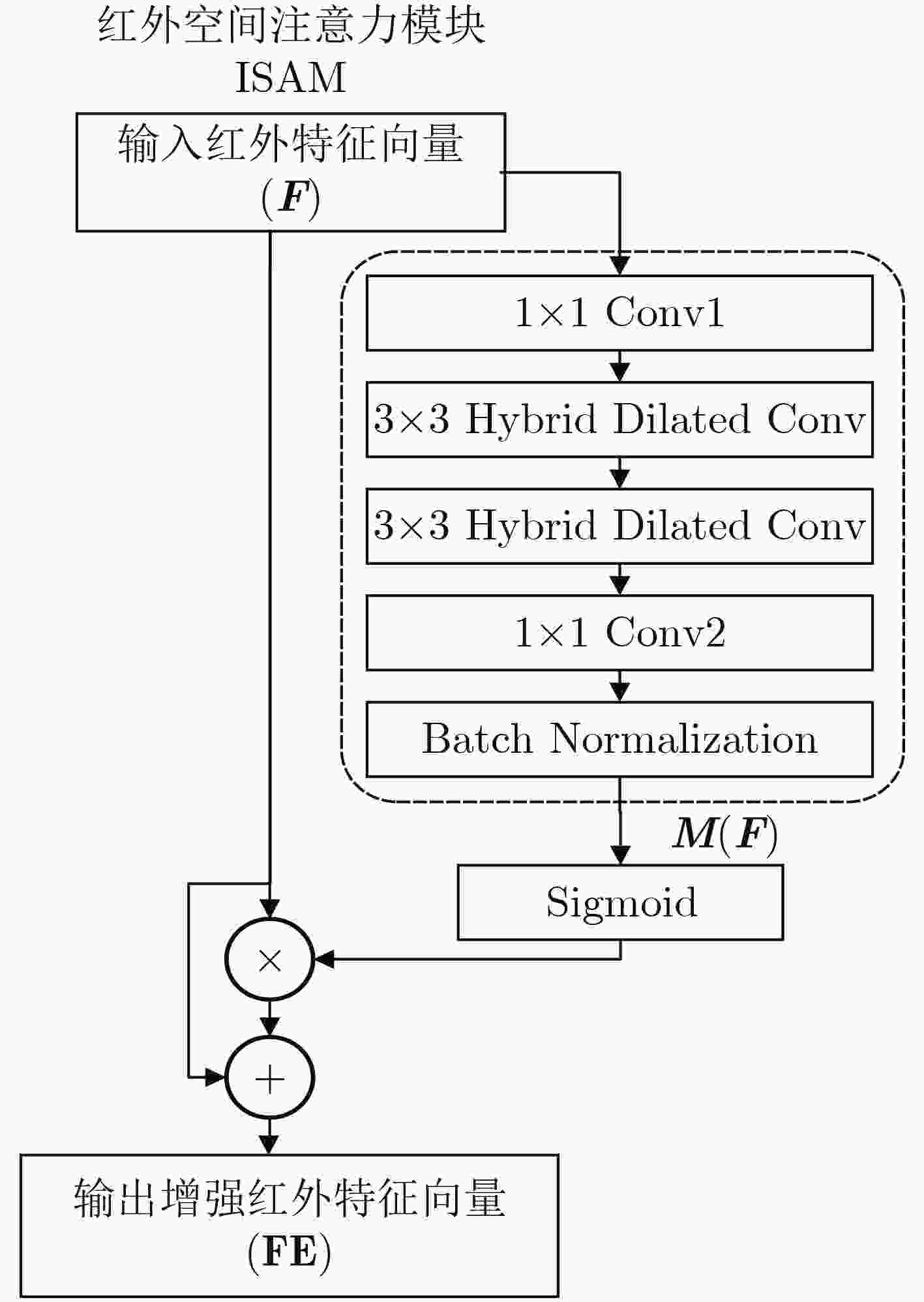

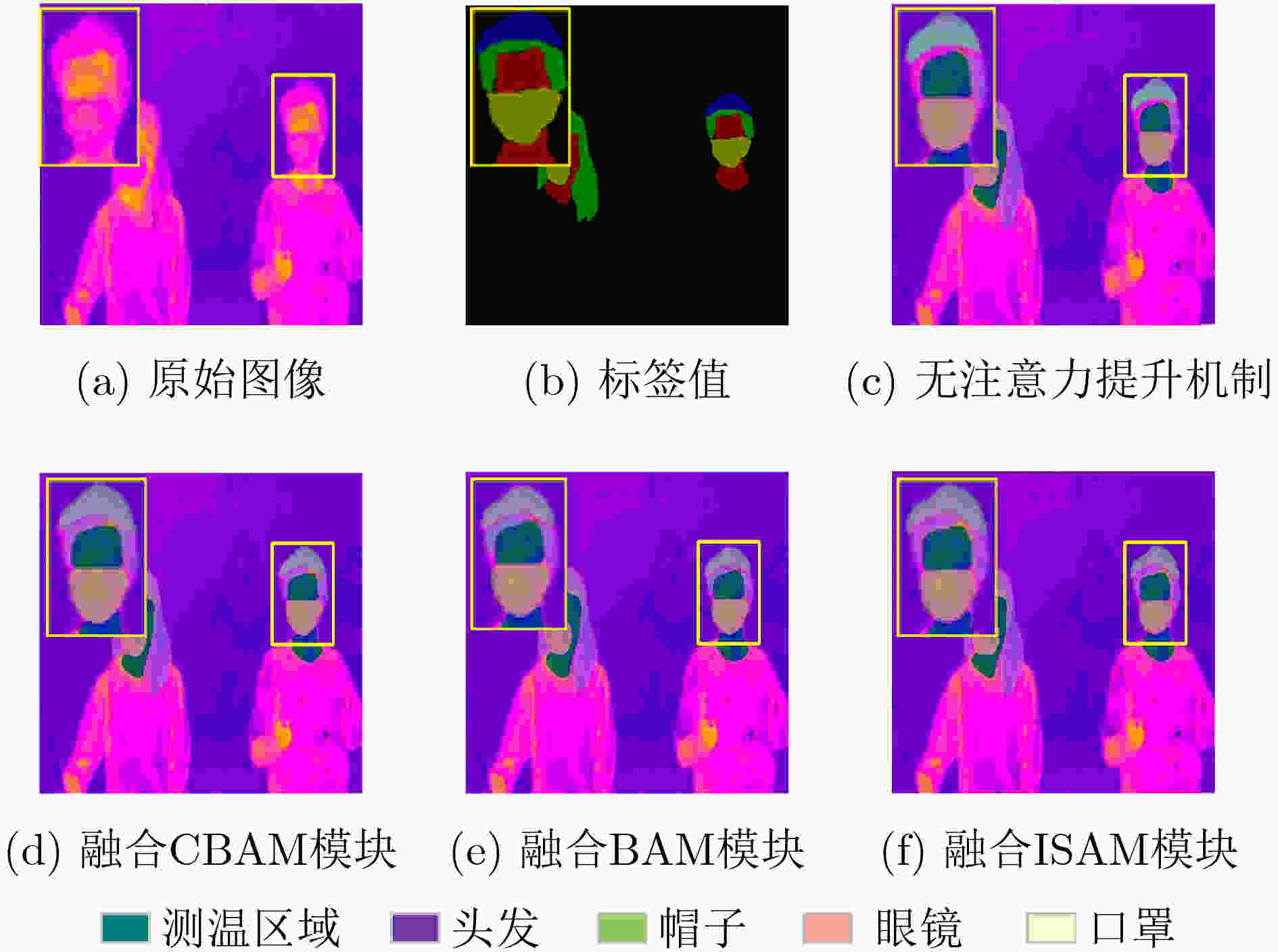

Abstract: AI-assisted thermal imaging systems for human body temperature monitoring are widely used for real-time temperature measurement in dense crowds. Such systems detect the human head region for temperature measurement, but because of various kinds of occlusion, the temperature measurement area may be too small to measure correctly. To address this problem, an anchor-free instance segmentation network incorporating an infrared attention enhancement mechanism is proposed for real-time instance segmentation of temperature measurement regions in infrared thermal images. The proposed network integrates the Infrared Spatial Attention Module (ISAM) in both the detection stage and the segmentation stage, aiming to accurately segment the bare head area in infrared images for accurate real-time temperature measurement. A "thermal imaging temperature measurement area segmentation dataset" is built for network training by combining a public thermal imaging facial dataset with a collected infrared thermal imaging dataset. Experimental results show that, for the bare head temperature measurement region in infrared thermal images, the method reaches an average detection precision of 88.6%, an average segmentation (mask) precision of 86.5%, and an average processing speed of 33.5 fps, outperforming most state-of-the-art instance segmentation methods on these evaluation metrics.

Keywords:

- Infrared thermal imaging

- Human body temperature monitoring system

- Infrared attention enhancement mechanism

- Anchor-free instance segmentation network

- Thermal imaging temperature measurement area segmentation dataset
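The abstract's motivation, that an occluded head region may be too small to measure correctly, can be illustrated with a minimal sketch of reading a temperature from a segmented region. This is not the authors' pipeline: the function name `read_region_temperature`, the `min_pixels` threshold, and the choice of reporting the maximum and mean are all assumptions made for illustration.

```python
from statistics import mean


def read_region_temperature(temps, mask, min_pixels=50):
    """Return (max, mean) temperature over the masked pixels.

    temps: 2D list of per-pixel temperatures in degrees Celsius.
    mask:  2D list of 0/1 flags marking the segmented bare-skin region.
    min_pixels: hypothetical threshold below which the region is
                treated as too small to give a reliable reading.
    Returns None when the region has fewer than min_pixels pixels.
    """
    values = [
        t
        for temp_row, mask_row in zip(temps, mask)
        for t, m in zip(temp_row, mask_row)
        if m
    ]
    if len(values) < min_pixels:
        return None  # region too small, refuse to report a reading
    return max(values), mean(values)


# Toy 3x3 thermal frame with a 4-pixel segmented region (made-up values).
temps = [
    [36.1, 36.4, 30.0],
    [36.8, 36.5, 29.5],
    [30.2, 30.1, 29.9],
]
mask = [
    [1, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]

reading = read_region_temperature(temps, mask, min_pixels=4)
too_small = read_region_temperature(temps, mask, min_pixels=5)
```

With a threshold of 4 pixels the toy region yields a reading, while raising the threshold to 5 makes the same region count as too small, which is the failure case that accurate segmentation of the bare head area is meant to reduce.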

表 1 训练集中各类数据分布

数据类型 带口罩的面部 裸露的面部 存在各类遮挡的面部 比例(%) 40 20 40 表 2 数据集中标签对应的标注色

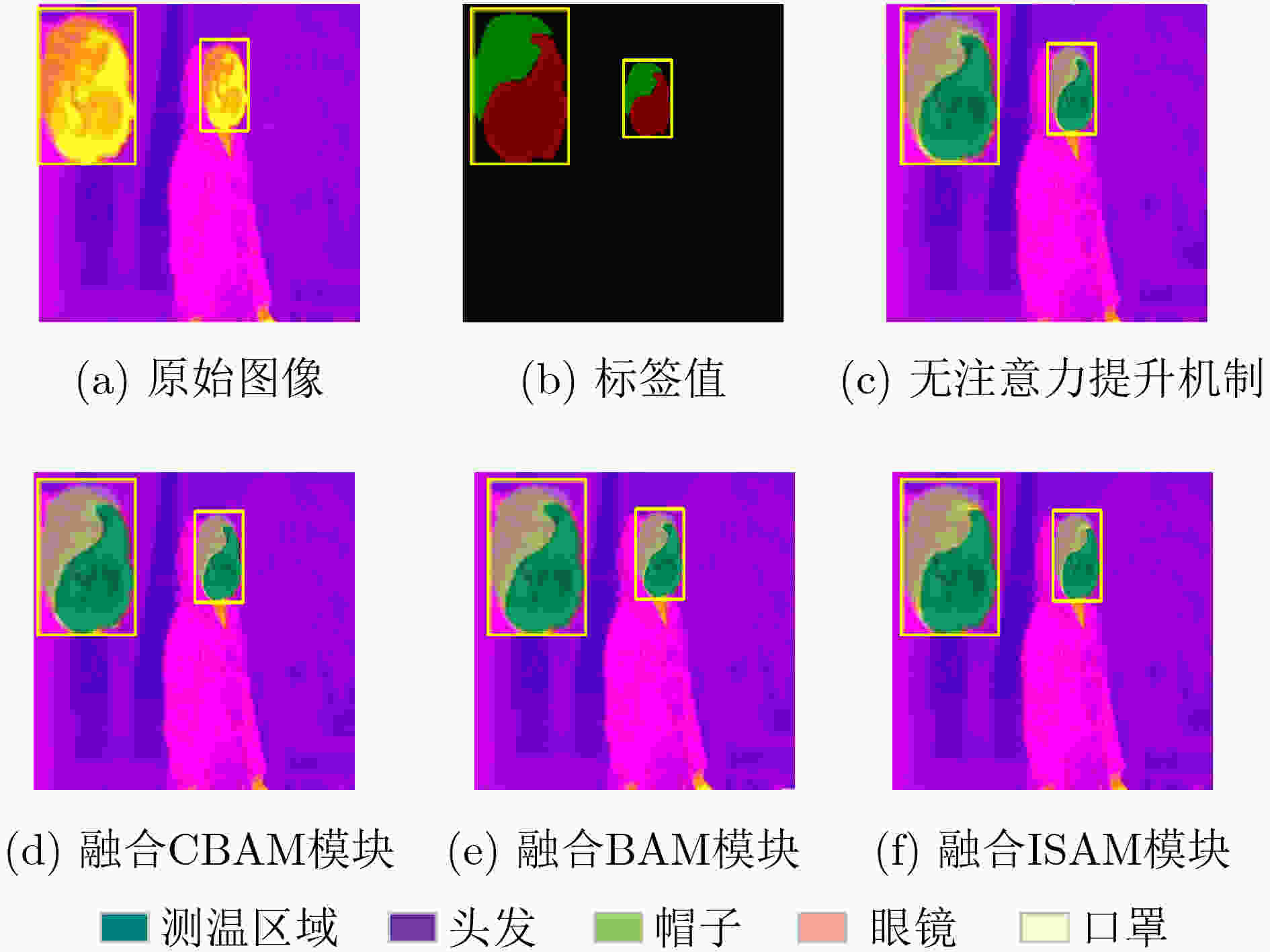

分割类别 温度测量区域 头发 帽子 眼镜 口罩 R 0 139 0 255 255 G 100 0 255 105 255 B 0 139 127 180 210 表 3 ISAM模块目标检测阶段有效性验证实验结果

结构 AP(%) AMP(%) fps 无注意力提升机制 83.7 80.0 38.0 融合CBAM模块 85.6 81.5 33.5 融合BAM模块 84.8 81.0 34.5 融合ISAM模块 88.6 84.5 35.5 表 4 ISAM模块目标分割阶段有效性实验结果

结构 AP(%) AMP(%) fps 无注意力提升机制 88.6 84.5 35.5 融合CBAM模块 88.6 84.3 31.2 融合BAM模块 88.6 84.1 32.5 融合ISAM模块 88.6 86.5 33.5 表 5 热成像测温区域实例分割方法对比实验结果

实例分割方法 AP(%) AMP(%) fps Mask R-CNN 83.8 80.3 11.5 YOLACT 75.6 70.1 38.0 CenterMask 82.8 78.1 33.0 PolarMask 83.6 79.0 30.5 本文方法 88.6 86.5 33.5 -
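The annotation colors listed in Table 2 lend themselves to a simple lookup when color-coded label images are converted into per-pixel class names. The sketch below is a hedged illustration only: the RGB triples are taken from Table 2, but the function names, the nested-list image representation, and the `"background"` fallback for unlisted colors are assumptions, not details given in the source.

```python
from collections import Counter

# RGB triples from Table 2, mapped to their segmentation categories.
LABEL_COLORS = {
    (0, 100, 0): "temperature measurement region",
    (139, 0, 139): "hair",
    (0, 255, 127): "hat",
    (255, 105, 180): "glasses",
    (255, 255, 210): "mask",
}

# Assumption: any color not listed in Table 2 is treated as background.
BACKGROUND = "background"


def decode_annotation(rgb_rows):
    """Map each (R, G, B) pixel of a label image to its class name."""
    return [
        [LABEL_COLORS.get(tuple(pixel), BACKGROUND) for pixel in row]
        for row in rgb_rows
    ]


def class_pixel_counts(label_rows):
    """Count how many pixels fall into each class."""
    return Counter(label for row in label_rows for label in row)


# Toy 2x3 annotation image (made-up pixels using the Table 2 colors).
annotation = [
    [(139, 0, 139), (139, 0, 139), (0, 0, 0)],
    [(0, 100, 0), (0, 100, 0), (255, 255, 210)],
]

labels = decode_annotation(annotation)
counts = class_pixel_counts(labels)
```

Per-class pixel counts of this kind are one way to check how large the temperature measurement region is relative to occluders such as hair, hats, glasses, and masks in a given annotation.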

