Person Re-identification Based on Multi-scale Network Attention Fusion


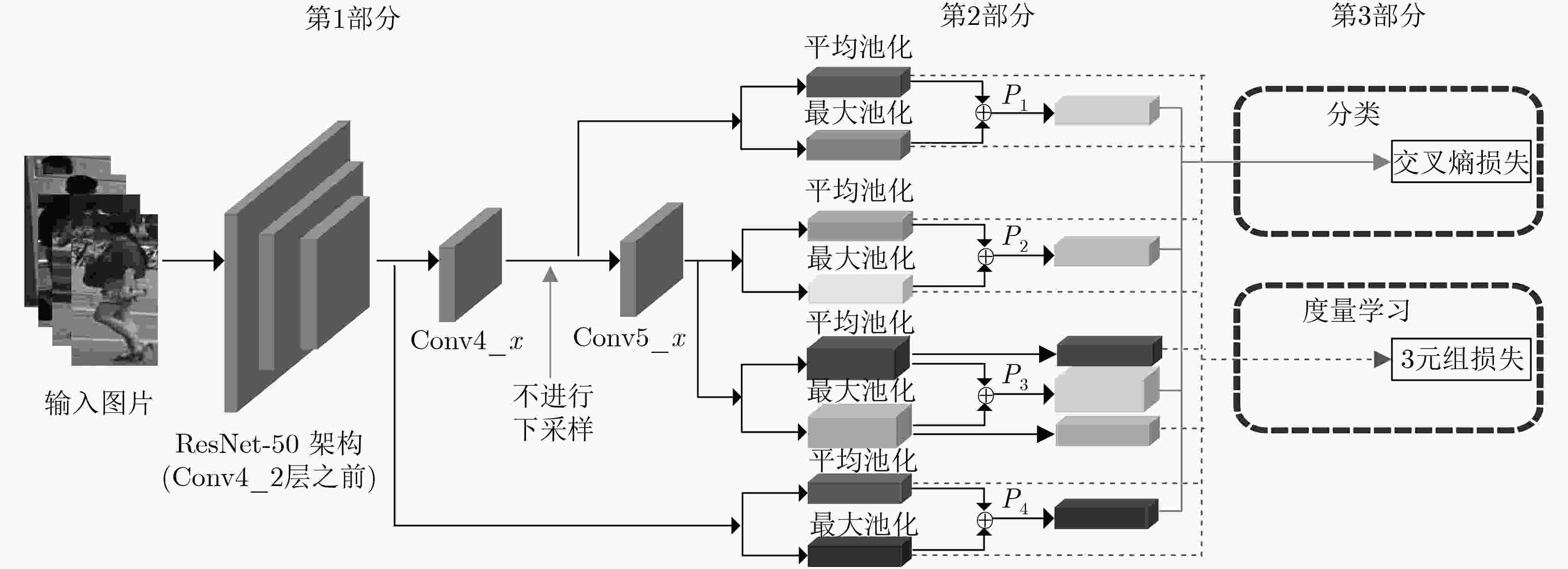

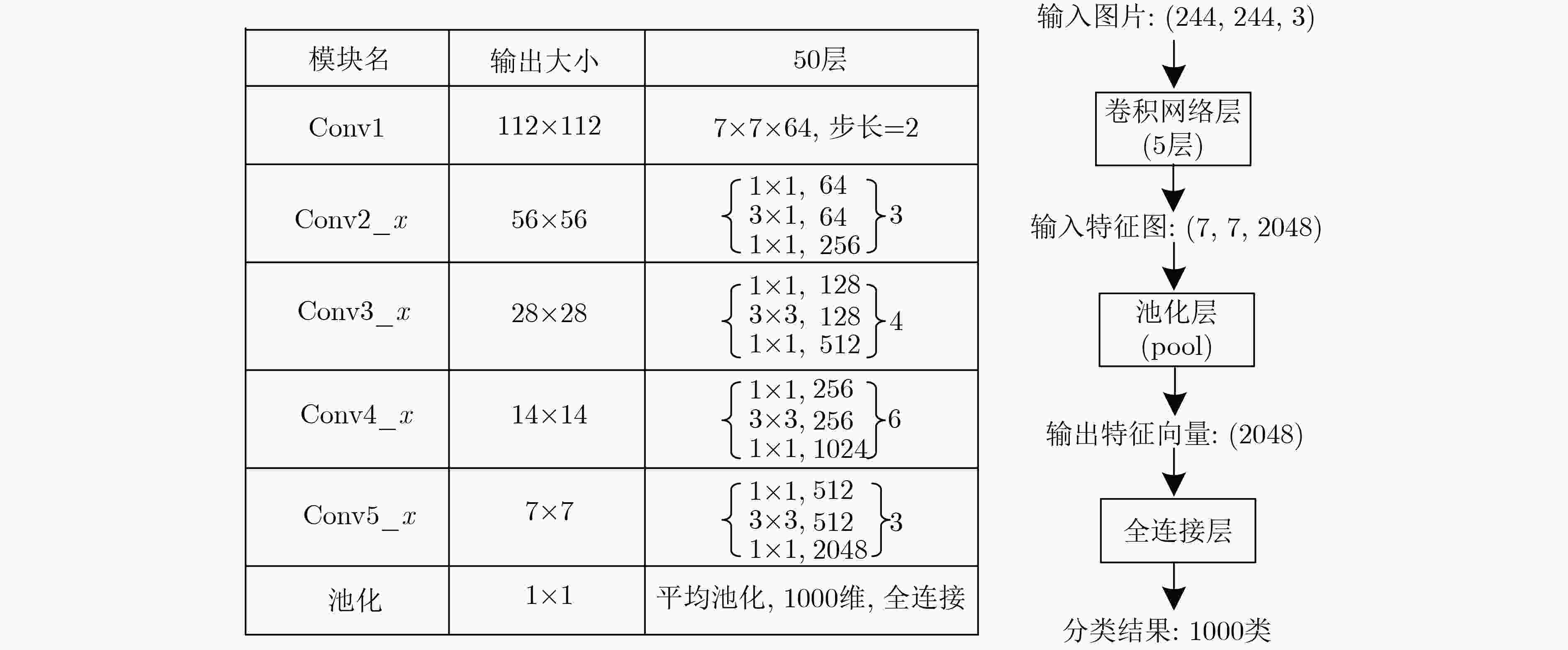

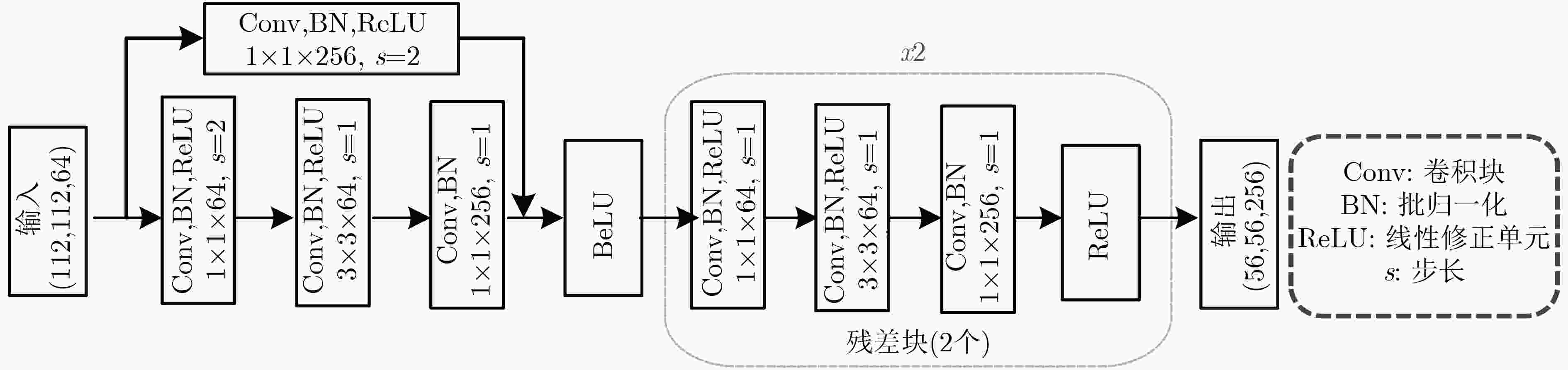

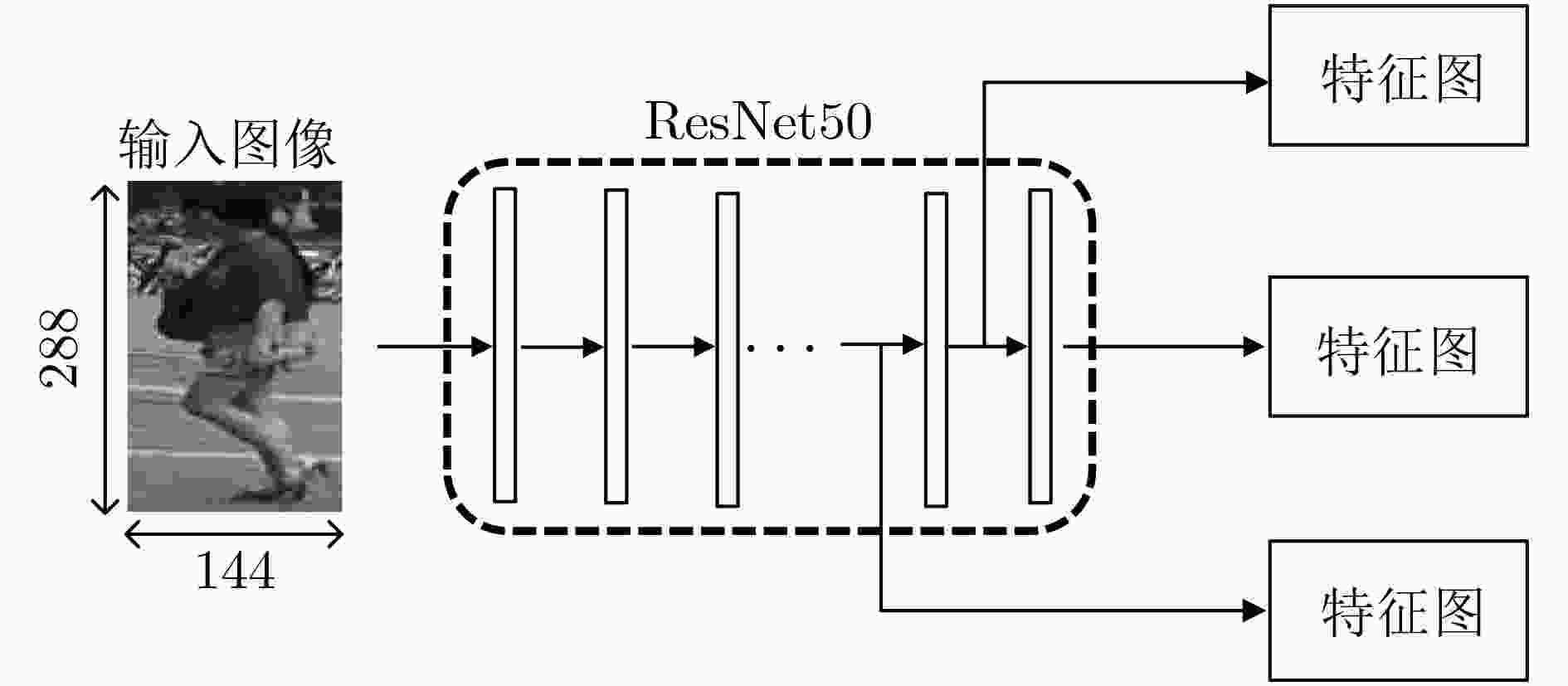

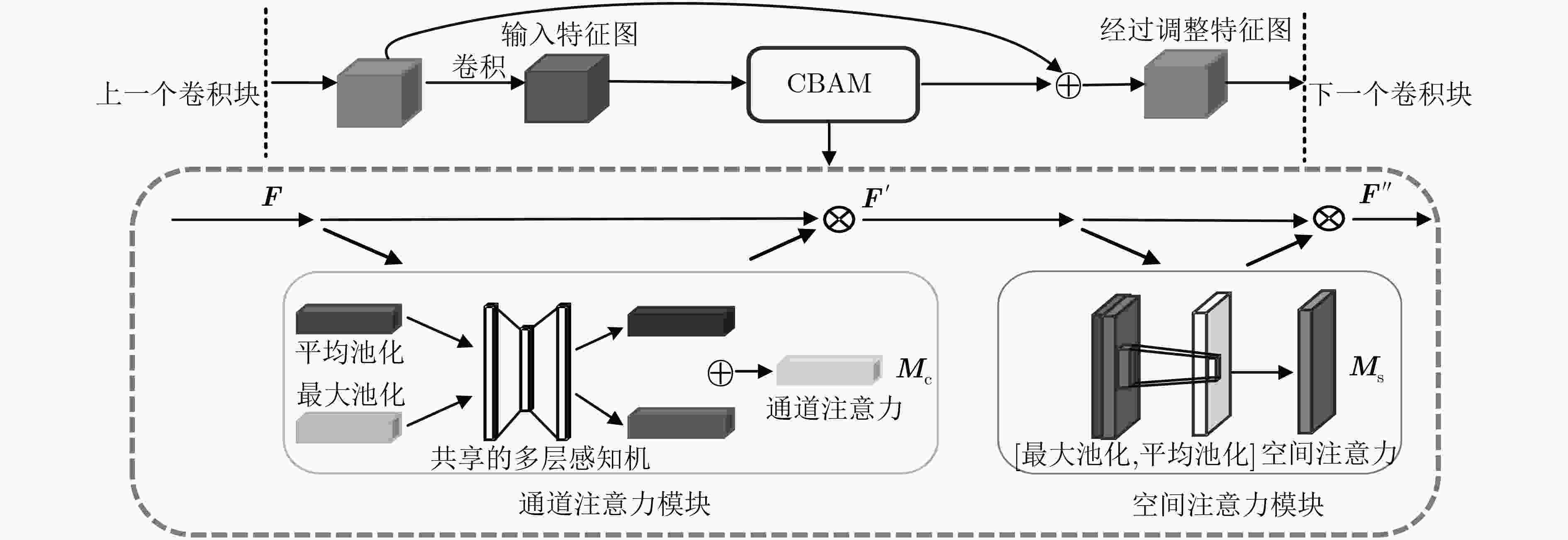

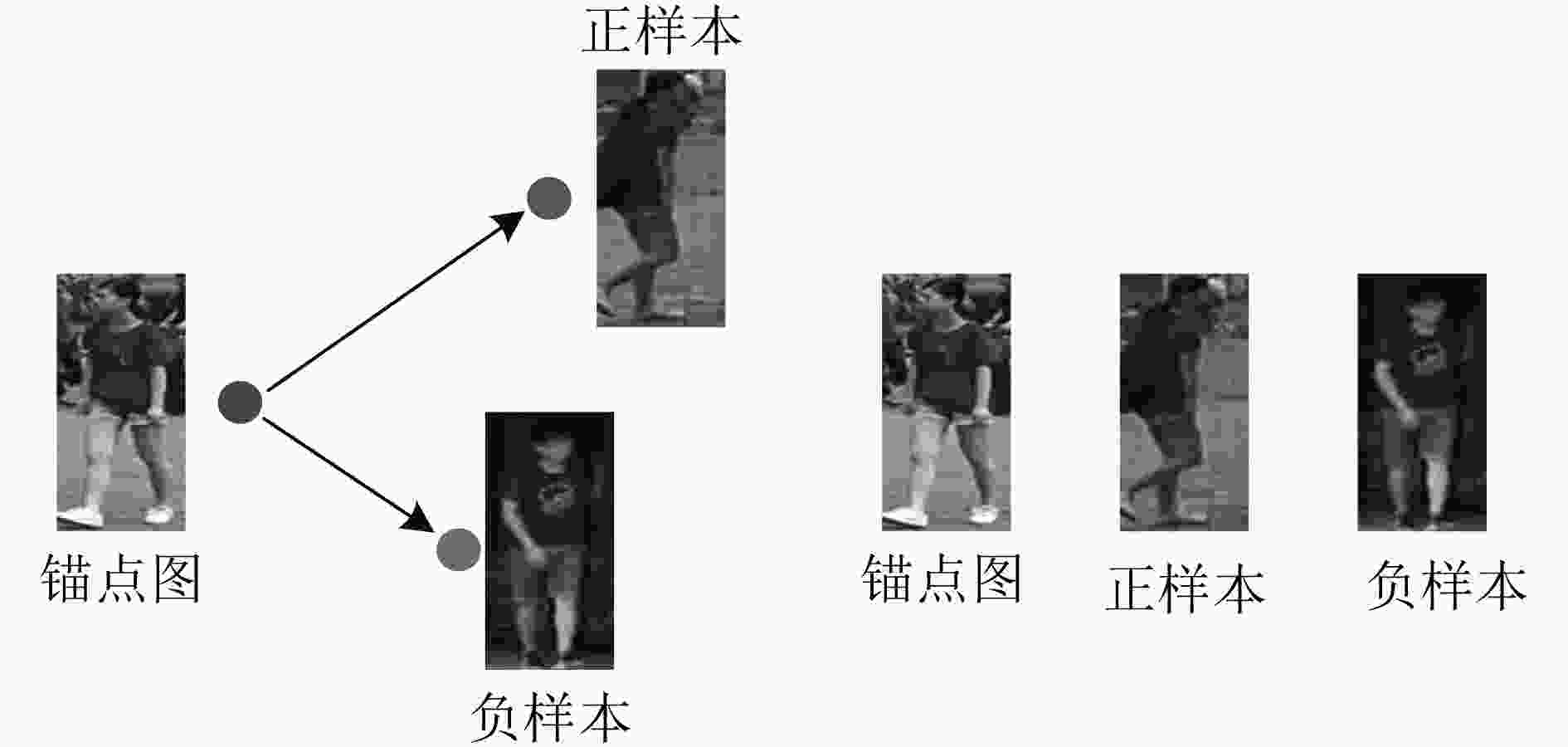

Abstract: Person re-identification depends critically on extracting discriminative pedestrian features, and convolutional neural networks have strong feature extraction and representation capabilities. Because different features can be observed at different scales, this paper proposes a person re-identification method based on the fusion of multi-scale and attention networks (Multi-Scale Attention Network, MSAN). The method samples features from different depths of the network and fuses the sampled features to predict pedestrian identities. Feature maps from different depths have different expressive power, which enables the network to learn finer-grained pedestrian features. In addition, an attention module is embedded in the residual network so that the network focuses on key information, which strengthens its feature learning ability. The proposed method achieves Rank-1 accuracies of 95.3%, 89.8% and 82.2% on the Market1501, DukeMTMC-reID and MSMT17_V1 datasets, respectively. Experiments show that the method makes full use of information from different network depths and of the key information selected by attention, giving the model strong discriminative ability. The mean average precision (mAP) of the proposed model also exceeds that of most state-of-the-art algorithms.
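The abstract names two ingredients but gives no layer-level details:

- an attention module embedded in the residual network (the CBAM module evaluated in Table 2, following Woo et al.);
- fusion of features sampled at different network depths.

The block below is a minimal pure-Python sketch under stated assumptions. Attention follows CBAM: channel attention first, then spatial attention. "Fusion" is assumed to mean global average pooling of each depth's attended feature map followed by concatenation. Applying attention to each stage output, the toy shapes, the reduction ratio r = 2 and the 3×3 spatial kernel (CBAM uses 7×7) are all illustrative assumptions. Helper names such as `multi_scale_descriptor` are hypothetical. A real model would operate on ResNet stage outputs in a deep-learning framework.

```python
import math
import random

# Feature maps are nested lists indexed as fmap[channel][row][col].


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def shared_mlp(v, w0, w1):
    """Two-layer MLP (C -> C/r -> C) with ReLU, shared by both pooled descriptors."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]


def global_avg_pool(fmap):
    """Average each channel over all spatial positions -> vector of length C."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]


def channel_attention(fmap, w0, w1):
    """CBAM channel attention: scale channel c by sigmoid(MLP(avg)_c + MLP(max)_c)."""
    avg = global_avg_pool(fmap)
    mx = [max(map(max, ch)) for ch in fmap]
    scores = [a + b for a, b in zip(shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1))]
    weights = [sigmoid(s) for s in scores]
    return [[[x * wc for x in row] for row in ch] for ch, wc in zip(fmap, weights)]


def spatial_attention(fmap, kernel):
    """CBAM spatial attention: a k x k conv over [channel-mean; channel-max] maps.

    kernel[0] is applied to the mean map and kernel[1] to the max map (zero padding).
    """
    n_ch, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    mean_map = [[sum(fmap[c][y][x] for c in range(n_ch)) / n_ch for x in range(w)] for y in range(h)]
    max_map = [[max(fmap[c][y][x] for c in range(n_ch)) for x in range(w)] for y in range(h)]
    k = len(kernel[0])
    pad = k // 2
    mask = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for kern, m in zip(kernel, (mean_map, max_map)):
                for i in range(k):
                    for j in range(k):
                        yy, xx = y + i - pad, x + j - pad
                        if 0 <= yy < h and 0 <= xx < w:
                            s += kern[i][j] * m[yy][xx]
            mask[y][x] = sigmoid(s)
    return [[[ch[y][x] * mask[y][x] for x in range(w)] for y in range(h)] for ch in fmap]


def cbam(fmap, w0, w1, kernel):
    # CBAM applies channel attention first, then spatial attention.
    return spatial_attention(channel_attention(fmap, w0, w1), kernel)


def multi_scale_descriptor(stage_maps):
    # Hypothetical fusion rule: pool every stage's map and concatenate the vectors.
    fused = []
    for fmap in stage_maps:
        fused.extend(global_avg_pool(fmap))
    return fused


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Toy demo: two "stages" of decreasing resolution and increasing width (shapes are made up).
rng = random.Random(0)
attended = []
for channels, height, width in [(4, 8, 4), (8, 4, 2)]:
    fmap = [[[rng.random() for _ in range(width)] for _ in range(height)] for _ in range(channels)]
    w0 = random_matrix(channels // 2, channels, rng)  # reduction ratio r = 2 (assumed)
    w1 = random_matrix(channels, channels // 2, rng)
    kernel = [random_matrix(3, 3, rng) for _ in range(2)]  # 3x3 instead of CBAM's 7x7
    attended.append(cbam(fmap, w0, w1, kernel))

descriptor = multi_scale_descriptor(attended)
print(len(descriptor))  # 12 = 4 + 8 channels
```

Concatenation lets stages with different channel counts (here 4 and 8) contribute to one descriptor. A learned fusion layer would be an equally plausible reading of the abstract.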


Key words:

- Person re-identification
- Multi-scale
- Attention
- Residual network
- Metric learning


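Table 1 Accuracy verification results of the multi-scale fusion model (%)

| Method | Market1501 Rank-1 | Market1501 mAP | DukeMTMC-reID Rank-1 | DukeMTMC-reID mAP | MSMT17_V1 Rank-1 | MSMT17_V1 mAP |
|---|---|---|---|---|---|---|
| SSAN | 94.9 | 87.9 | 86.1 | 67.7 | 81.4 | 66.3 |
| SSAN (+RK) | 95.3 | 93.7 | 86.0 | 75.6 | 84.6 | 73.8 |
| MSAN | 95.3 | 87.9 | 89.8 | 78.8 | 82.2 | 60.6 |
| MSAN (+RK) | 95.9 | 93.9 | 92.3 | 89.7 | 85.0 | 74.6 |

Table 2 Accuracy verification results of the CBAM module (%)

| Method | Market1501 Rank-1 | Market1501 mAP | DukeMTMC-reID Rank-1 | DukeMTMC-reID mAP | MSMT17_V1 Rank-1 | MSMT17_V1 mAP |
|---|---|---|---|---|---|---|
| MSN | 94.4 | 86.2 | 87.5 | 77.2 | 79.6 | 56.0 |
| MSN (+CBAM) | 95.3 | 87.9 | 89.8 | 78.8 | 82.2 | 60.6 |
| MSN (+RK) | 95.3 | 93.1 | 90.9 | 89.2 | 83.2 | 72.0 |
| MSN (+CBAM+RK) | 95.9 | 93.9 | 92.3 | 89.7 | 85.0 | 74.6 |

Table 3 Accuracy comparison between the proposed MSAN algorithm and other state-of-the-art algorithms (%)

| Method | Market1501 Rank-1 | Market1501 mAP | DukeMTMC-reID Rank-1 | DukeMTMC-reID mAP | MSMT17_V1 Rank-1 | MSMT17_V1 mAP |
|---|---|---|---|---|---|---|
| SVDNet[21] | 82.3 | 62.1 | 76.7 | 56.8 | – | – |
| DPFL[22] | 88.6 | 72.6 | 79.2 | 60.0 | – | – |
| SVDNet+Era[23] | 87.1 | 71.3 | 79.3 | 62.4 | – | – |
| TriNET+Era[23] | 83.9 | 68.7 | 73.0 | 56.6 | – | – |
| DaRe[24] | 89.0 | 76.0 | 80.2 | 64.5 | – | – |
| GP-reid[25] | 92.2 | 81.2 | 85.2 | 72.8 | – | – |
| PCB[4] | 92.3 | 77.4 | 81.9 | 65.3 | 68.2 | 40.4 |
| Aligned-ReID[5] | 92.6 | 82.3 | – | – | – | – |
| PCB+RPP[4] | 93.8 | 81.6 | 83.3 | 69.2 | – | – |
| MGN[6] | 95.7 | 86.9 | 88.7 | 78.4 | – | – |
| BFENET[8] | 94.2 | 84.3 | 86.8 | 72.1 | – | – |
| IANet[19] | 94.4 | 83.1 | 87.1 | 73.4 | 75.5 | 46.8 |
| DGNet[18] | 94.8 | 86.0 | 86.6 | 74.8 | 77.2 | 52.3 |
| OSNet[20] | 94.8 | 84.9 | 88.6 | 73.5 | 78.7 | 52.9 |
| MSAN | 95.3 | 87.9 | 89.8 | 78.8 | 82.2 | 60.6 |
| MSAN (+RK) | 95.9 | 93.9 | 92.3 | 89.7 | 85.0 | 74.6 |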
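Tables 1 to 3 report two retrieval metrics:

- **Rank-1:** the fraction of queries whose closest gallery image has the correct identity.
- **mAP:** the mean over queries of the average precision of the ranked gallery list.

The block below is a minimal pure-Python sketch of how these two numbers are usually computed from a query-by-gallery distance matrix. The function name `evaluate` and the toy data are hypothetical. The optional same-identity, same-camera exclusion follows the protocol commonly used for Market1501 and DukeMTMC-reID; it is not something this document specifies.

```python
def evaluate(dist, q_ids, g_ids, q_cams=None, g_cams=None):
    """Return (Rank-1, mAP) as fractions for a query-by-gallery distance matrix.

    dist[i][j] is the distance between query i and gallery image j (smaller = more similar).
    If camera ids are given, gallery images with the same identity AND the same camera
    as the query are ignored before ranking.
    """
    rank1_hits, ap_sum, valid = 0, 0.0, 0
    for qi, row in enumerate(dist):
        order = sorted(range(len(row)), key=lambda gi: row[gi])
        if q_cams is not None and g_cams is not None:
            order = [gi for gi in order
                     if not (g_ids[gi] == q_ids[qi] and g_cams[gi] == q_cams[qi])]
        matches = [g_ids[gi] == q_ids[qi] for gi in order]
        if not any(matches):
            continue  # no true match left in the gallery; the query is not scored
        valid += 1
        if matches[0]:
            rank1_hits += 1  # nearest gallery image has the right identity
        hits, precision_sum = 0, 0.0
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precision_sum += hits / rank  # precision at each correct match
        ap_sum += precision_sum / hits  # average precision of this query
    if valid == 0:
        raise ValueError("no query has a correct match in the gallery")
    return rank1_hits / valid, ap_sum / valid


# Toy example: 2 queries (both identity 1) against 3 gallery images.
dist = [[0.1, 0.5, 0.3], [0.9, 0.2, 0.4]]
r1, m = evaluate(dist, q_ids=[1, 1], g_ids=[1, 2, 1])
print(f"Rank-1 = {r1:.1%}, mAP = {m:.1%}")  # Rank-1 = 50.0%, mAP = 79.2%
```

The second query ranks a wrong identity first, so it misses at Rank-1. It still contributes a partial average precision of (1/2 + 2/3)/2. This is why mAP and Rank-1 can move independently in the tables.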

[1] FARENZENA M, BAZZANI L, PERINA A, et al. Person re-identification by symmetry-driven accumulation of local features[C]. 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, USA, 2010: 2360–2367.

[2] ZHOU Zhiheng, LIU Kaiyi, HUANG Junchu, et al. Improved metric learning algorithm for person re-identification based on equidistance[J]. Journal of Electronics & Information Technology, 2019, 41(2): 477–483. doi: 10.11999/JEIT180336

[3] HIRZER M, ROTH P M, KÖSTINGER M, et al. Relaxed pairwise learned metric for person re-identification[C]. The 12th European Conference on Computer Vision, Florence, Italy, 2012: 780–793.

[4] SUN Yifan, ZHENG Liang, YANG Yi, et al. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline)[C]. The 15th European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 480–496.

[5] LUO Hao, JIANG Wei, ZHANG Xuan, et al. AlignedReID++: Dynamically matching local information for person re-identification[J]. Pattern Recognition, 2019, 94: 53–61. doi: 10.1016/j.patcog.2019.05.028

[6] WANG Guanshuo, YUAN Yufeng, CHEN Xiong, et al. Learning discriminative features with multiple granularities for person re-identification[C]. 2018 ACM Multimedia Conference on Multimedia Conference, Seoul, Korea, 2018: 274–282.

[7] CHEN Hongchang, WU Yancheng, LI Shaomei, et al. Person re-identification based on attribute hierarchy recognition[J]. Journal of Electronics & Information Technology, 2019, 41(9): 2239–2246. doi: 10.11999/JEIT180740

[8] DAI Zuozhuo, CHEN Mingqiang, GU Xiaodong, et al. Batch DropBlock network for person re-identification and beyond[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 3691–3701.

[9] WOO S, PARK J, LEE J Y, et al. CBAM: Convolutional block attention module[C]. The 15th European Conference on Computer Vision (ECCV), Munich, Germany, 2018: 3–19.

[10] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 2117–2125.

[11] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778.

[12] HERMANS A, BEYER L, and LEIBE B. In defense of the triplet loss for person re-identification[EB/OL]. https://arxiv.org/abs/1703.07737, 2017.

[13] ZHENG Liang, SHEN Liyue, TIAN Lu, et al. Scalable person re-identification: A benchmark[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1116–1124.

[14] RISTANI E, SOLERA F, ZOU R, et al. Performance measures and a data set for multi-target, multi-camera tracking[C]. 2016 European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 17–35.

[15] WEI Longhui, ZHANG Shiliang, GAO Wen, et al. Person transfer GAN to bridge domain gap for person re-identification[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 79–88.

[16] ZHONG Zhun, ZHENG Liang, CAO Donglin, et al. Re-ranking person re-identification with k-reciprocal encoding[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 1318–1327.

[17] SALLEH S S, AZIZ N A A, MOHAMAD D, et al. Combining Mahalanobis and Jaccard distance to overcome similarity measurement constriction on geometrical shapes[J]. International Journal of Computer Science Issues, 2012, 9(4): 124–132.

[18] ZHENG Zhedong, YANG Xiaodong, YU Zhiding, et al. Joint discriminative and generative learning for person re-identification[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 2138–2147.

[19] HOU Ruibing, MA Bingpeng, CHANG Hong, et al. Interaction-and-aggregation network for person re-identification[C]. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 9317–9326.

[20] ZHOU Kaiyang, YANG Yongxin, CAVALLARO A, et al. Omni-scale feature learning for person re-identification[C]. 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 3702–3712.

[21] SUN Yifan, ZHENG Liang, DENG Weijian, et al. SVDNet for pedestrian retrieval[C]. 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 3800–3808.

[22] CHEN Yanbei, ZHU Xiatian, and GONG Shaogang. Person re-identification by deep learning multi-scale representations[C]. 2017 IEEE International Conference on Computer Vision Workshops, Venice, Italy, 2017: 2590–2600.

[23] ZHONG Zhun, ZHENG Liang, KANG Guoliang, et al. Random erasing data augmentation[EB/OL]. https://arxiv.org/abs/1708.04896, 2017.

[24] WANG Yan, WANG Lequn, YOU Yurong, et al. Resource aware person re-identification across multiple resolutions[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 8042–8051.

[25] ALMAZAN J, GAJIC B, MURRAY N, et al. Re-ID done right: towards good practices for person re-identification[EB/OL]. https://arxiv.org/abs/1801.05339, 2018.
