DenseNet-Siamese Network with Global Context Feature Module for Object Tracking

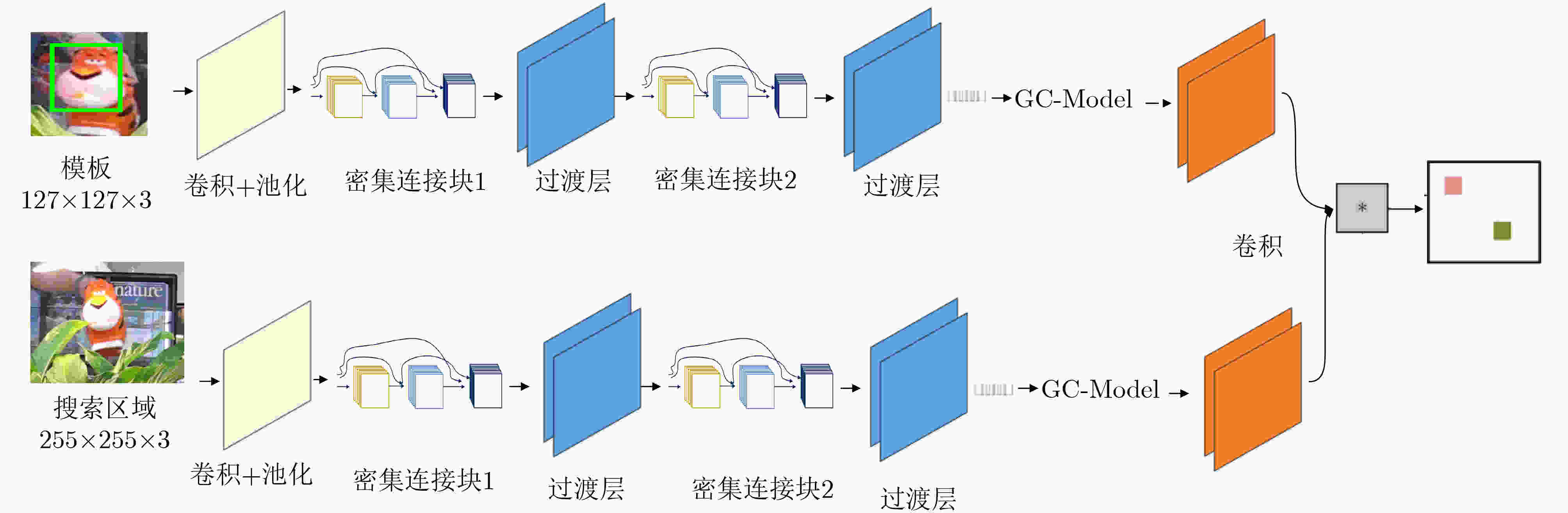

Abstract: In recent years, Siamese networks that extract deep features have become one of the research hotspots in visual object tracking because they strike a good balance between accuracy and speed. However, conventional Siamese networks do not extract deeper features of the target to preserve generalization performance, and most Siamese architectures process only one local neighborhood at a time, which makes the appearance model local and not robust to appearance changes. To address these problems, this paper proposes a DenseNet-Siamese network with a global context feature module for object tracking. DenseNet is used as the backbone of the Siamese network, with a dense feature-reuse connection design that builds a deeper network while reducing the number of parameters between layers and improves the generalization performance of the algorithm. In addition, to cope with appearance changes during tracking, a Global Context feature Module (GC-Model) is embedded in the Siamese network branches to improve tracking accuracy. Experimental results on the VOT2017 and OTB50 datasets show that, compared with current mainstream tracking algorithms, the proposed tracker has clear advantages in tracking accuracy and robustness, performs well under scale variation, low resolution, occlusion, and similar conditions, and meets real-time tracking requirements.

Key words:

- Object tracking

- Siamese network

- Global context feature

- DenseNet network
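The abstract states only that a Global Context feature Module (GC-Model) is embedded in the Siamese branches; its internals are not spelled out here. As a hedged illustration, the sketch below assumes the module follows the global context block of GCNet (Cao et al., in the reference list): softmax attention pooling over all spatial positions, a bottleneck transform with LayerNorm and ReLU, and broadcast addition of the resulting vector back onto every position. The weight names `w_k`, `w_v1`, `w_v2`, the reduction ratio `r = 16`, and the parameter-free LayerNorm are assumptions for illustration; this is not the authors' implementation.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D vector
    e = np.exp(z - z.max())
    return e / e.sum()

def layer_norm(v, eps=1e-5):
    # Parameter-free LayerNorm (GCNet's learnable scale/shift omitted for brevity)
    return (v - v.mean()) / np.sqrt(v.var() + eps)

def gc_block(x, w_k, w_v1, w_v2):
    """GCNet-style global context block on one (C, H, W) feature map.

    w_k:  (C,)       1x1 conv producing one attention logit per position
    w_v1: (C//r, C)  bottleneck 1x1 conv (channel reduction)
    w_v2: (C, C//r)  1x1 conv restoring C channels
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)                        # (C, N), N = H*W positions
    attn = softmax(w_k @ flat)                        # (N,) weights shared by all channels
    context = flat @ attn                             # (C,) attention-pooled global context
    t = np.maximum(layer_norm(w_v1 @ context), 0.0)   # bottleneck + LayerNorm + ReLU
    t = w_v2 @ t                                      # (C,) channel-wise context term
    return x + t[:, None, None]                       # add the same vector at every position

# Example on a feature map shaped like the GC-Model row of Table 1 (9x9x128)
rng = np.random.default_rng(0)
C, H, W, r = 128, 9, 9, 16
x = rng.standard_normal((C, H, W))
w_k = 0.01 * rng.standard_normal(C)
w_v1 = 0.01 * rng.standard_normal((C // r, C))
w_v2 = np.zeros((C, C // r))                          # zero init: block starts as identity
y = gc_block(x, w_k, w_v1, w_v2)
print(y.shape)  # (128, 9, 9)
```

Because the context term is a single C-dimensional vector added at every position, the block leaves the feature shape unchanged, which is consistent with the GC-Model row keeping a 9×9×128 output. Zero-initialising `w_v2` is a design choice made here so the block starts as an identity mapping.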

Table 1 Network structure

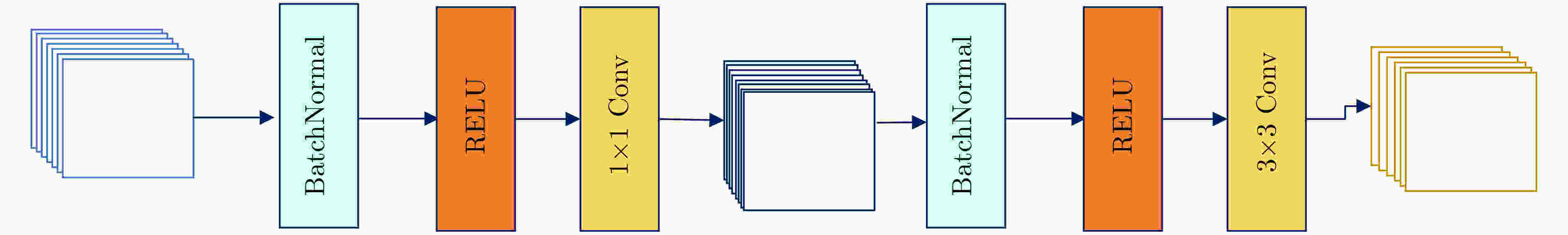

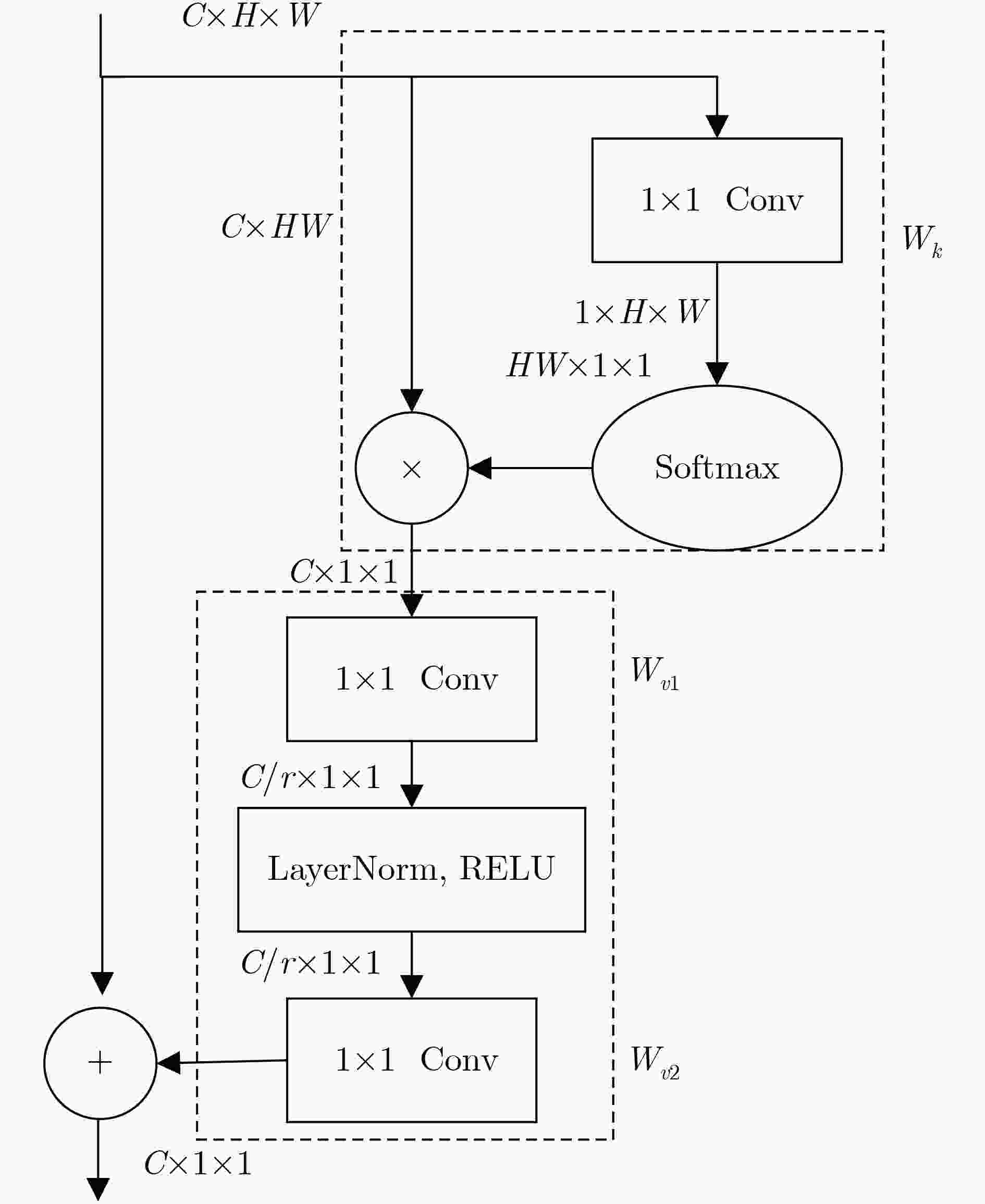

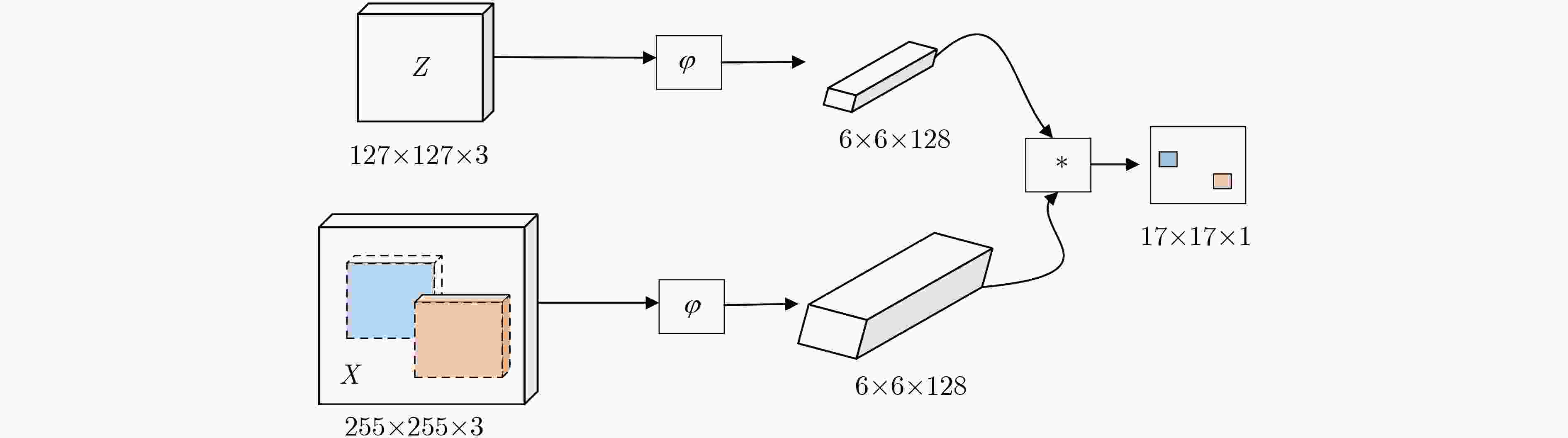

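| Layer | Template branch | Search branch | Output |
|---|---|---|---|
| Convolution layer | 7×7 Conv, stride 2 | 7×7 Conv, stride 2 | 61×61×72 |
| Dense block 1 | 1×1 Conv ×2 + 3×3 Conv ×2 | 1×1 Conv ×2 + 3×3 Conv ×2 | 61×61×144 |
| Transition layer 1 | 1×1 Conv + average pool | 1×1 Conv + average pool | 30×30×36 |
| Dense block 2 | 1×1 Conv ×4 + 3×3 Conv ×4 | 1×1 Conv ×4 + 3×3 Conv ×4 | 30×30×180 |
| Transition layer 2 | 1×1 Conv + average pool | 1×1 Conv + average pool | 15×15×36 |
| Dense block 3 | 1×1 Conv ×6 + 3×3 Conv ×6 | 1×1 Conv ×6 + 3×3 Conv ×6 | 15×15×252 |
| Dense block 3 | 3×3 Conv ×3 | 3×3 Conv ×3 | 9×9×128 |
| GC-Model | Fig. 3 | Fig. 3 | 9×9×128 |

Table 2 Comparison of baseline results with mainstream algorithms on the VOT2017 dataset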

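| Tracker | Accuracy | Robustness | Expected average overlap (EAO) |
|---|---|---|---|
| Proposed | 0.544 | 20.090 | 0.297 |
| SiamFC | 0.500 | 34.031 | 0.188 |
| SiamVGG | 0.525 | 20.453 | 0.287 |
| DCFNet | 0.465 | 35.202 | 0.183 |
| SRDCF | 0.480 | 64.114 | 0.119 |
| DeepCSRDCF | 0.483 | 19.007 | 0.293 |
| Staple | 0.524 | 44.019 | 0.169 |

Table 3 Tracking accuracy of the algorithms under different attributes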

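| Tracker | Camera motion | Target loss | Illumination change | Motion change | Occlusion | Scale change |
|---|---|---|---|---|---|---|
| Proposed | 0.561 | 0.562 | 0.543 | 0.554 | 0.461 | 0.543 |
| SiamFC | 0.513 | 0.513 | 0.556 | 0.514 | 0.416 | 0.474 |
| SiamVGG | 0.542 | 0.531 | 0.538 | 0.540 | 0.442 | 0.514 |
| DCFNet | 0.485 | 0.472 | 0.532 | 0.464 | 0.377 | 0.450 |
| SRDCF | 0.484 | 0.511 | 0.588 | 0.453 | 0.419 | 0.447 |
| Staple | 0.554 | 0.528 | 0.5371 | 0.523 | 0.459 | 0.492 |

Table 4 Tracking robustness of the algorithms under different attributes (values are numbers of failures)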

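| Tracker | Camera motion | Target loss | Illumination change | Motion change | Occlusion | Scale change |
|---|---|---|---|---|---|---|
| Proposed | 29.0 | 18.0 | 3.0 | 16.0 | 22.0 | 11.0 |
| SiamFC | 40.0 | 31.0 | 5.0 | 42.0 | 32.0 | 25.0 |
| SiamVGG | 35.0 | 15.0 | 2.0 | 15.0 | 19.0 | 11.0 |
| DCFNet | 50.0 | 34.0 | 8.0 | 31.0 | 24.0 | 21.0 |
| SRDCF | 76.0 | 86.0 | 9.0 | 49.0 | 32.0 | 29.0 |
| Staple | 62.0 | 53.0 | 5.0 | 27.0 | 27.0 | 17.0 |

Table 5 Test sequences in OTB50 and their challenge factors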

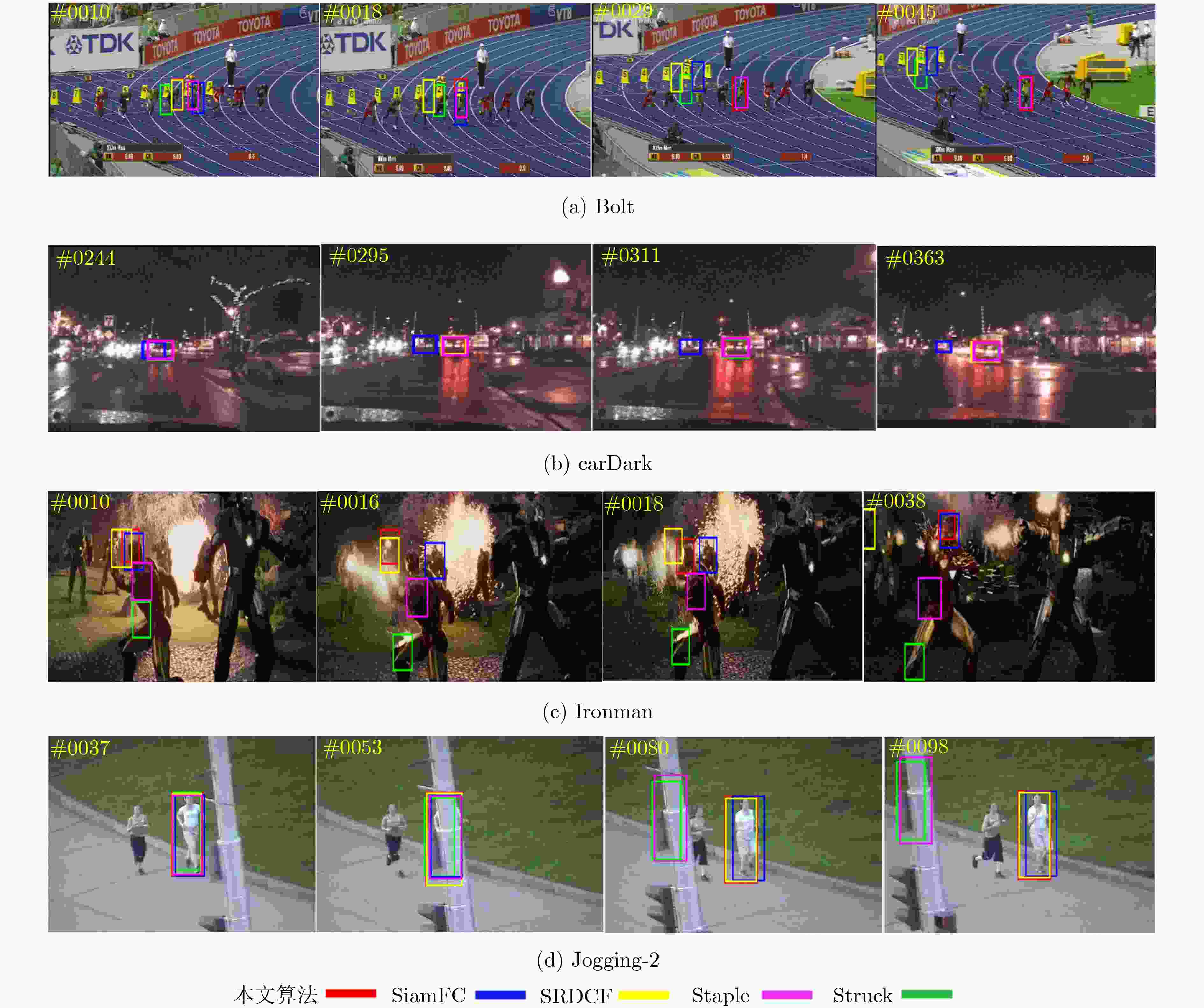

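| Test sequence | Frames | Challenge factors |
|---|---|---|
| Bolt | 18 | Fast motion, camera motion, scale variation, etc. |
| carDark | 244–363 | Motion blur, low resolution, background clutter, etc. |
| Ironman | 38 | In-plane rotation, fast motion, illumination variation, etc. |
| Shaking | 55 | Illumination variation, background blur, etc. |
| Jogging-2 | 53 | Occlusion |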
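The Output column of Table 1 can be checked with a little arithmetic. Each dense block appears to add 36 channels per layer (72 + 2×36 = 144, 36 + 4×36 = 180, 36 + 6×36 = 252), and each transition layer compresses to 36 channels and halves the spatial size. The sketch below traces these shapes. The 127×127 template and 255×255 search input sizes (the SiamFC convention), the unpadded 7×7 and final 3×3 convolutions, the same-padded dense layers, and the 2×2 stride-2 average pool are assumptions chosen because they reproduce the table, not details stated in it; `conv_out` and `backbone_shapes` are hypothetical helpers.

```python
GROWTH = 36  # channels added per dense layer, inferred from Table 1

def conv_out(size, kernel, stride=1, pad=0):
    """Output side length of a square conv/pool window (floor division)."""
    return (size + 2 * pad - kernel) // stride + 1

def backbone_shapes(size):
    """Trace (layer, side, channels) through Table 1 for a size x size input."""
    s, c = conv_out(size, 7, stride=2), 72        # 7x7 conv, stride 2, no padding (assumed)
    shapes = [("conv7x7", s, c)]
    for i, n_layers in enumerate((2, 4, 6), start=1):
        c += n_layers * GROWTH                    # each dense layer concatenates GROWTH maps
        shapes.append((f"dense{i}", s, c))        # same padding keeps the side unchanged
        if i < 3:
            s, c = conv_out(s, 2, stride=2), 36    # transition: 1x1 conv to 36 ch, 2x2 avg pool
            shapes.append((f"transition{i}", s, c))
    for _ in range(3):
        s = conv_out(s, 3)                        # three unpadded 3x3 convs (assumed)
    shapes.append(("conv3x3x3", s, 128))
    shapes.append(("gc", s, 128))                 # GC block keeps the shape
    return shapes

for name, s, c in backbone_shapes(127):
    print(f"{name:12s}{s}x{s}x{c}")

z_side = backbone_shapes(127)[-1][1]              # template features: 9
x_side = backbone_shapes(255)[-1][1]              # search features: 25
print("response map:", conv_out(x_side, z_side))  # valid cross-correlation -> 17
```

For a 127×127 input the trace reproduces every row of the Output column, from 61×61×72 down to 9×9×128. Under the same assumptions a 255×255 search image yields a 25×25×128 feature map, so a valid cross-correlation with the 9×9 template features would give a 17×17 response map.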

References:

1. SUN Yanjing, SHI Yunkai, YUN Xiao, et al. Adaptive strategy fusion target tracking based on multi-layer convolutional features[J]. Journal of Electronics & Information Technology, 2019, 41(10): 2464–2470. doi: 10.11999/JEIT180971.
2. HENRIQUES J F, CASEIRO R, MARTINS P, et al. High-speed tracking with kernelized correlation filters[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(3): 583–596. doi: 10.1109/TPAMI.2014.2345390.
3. DANELLJAN M, HÄGER G, KHAN F S, et al. Learning spatially regularized correlation filters for visual tracking[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4310–4318.
4. BERTINETTO L, VALMADRE J, HENRIQUES J F, et al. Fully-convolutional Siamese networks for object tracking[C]. European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 850–865. doi: 10.1007/978-3-319-48881-3_56.
5. VALMADRE J, BERTINETTO L, HENRIQUES J, et al. End-to-end representation learning for correlation filter based tracking[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 5000–5008. doi: 10.1109/CVPR.2017.531.
6. GUO Qing, WEI Feng, ZHOU Ce, et al. Learning dynamic Siamese network for visual object tracking[C]. 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 1781–1789. doi: 10.1109/ICCV.2017.196.
7. HE Anfeng, LUO Chong, TIAN Xinmei, et al. A twofold Siamese network for real-time object tracking[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 4834–4843. doi: 10.1109/CVPR.2018.00508.
8. HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.
9. SZEGEDY C, VANHOUCKE V, IOFFE S, et al. Rethinking the inception architecture for computer vision[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 2818–2826. doi: 10.1109/CVPR.2016.308.
10. HOU Zhiqiang, CHEN Lilin, YU Wangsheng, et al. Robust visual tracking algorithm based on Siamese network with dual templates[J]. Journal of Electronics & Information Technology, 2019, 41(9): 2247–2255. doi: 10.11999/JEIT181018.
11. WANG Xiaolong, GIRSHICK R, GUPTA A, et al. Non-local neural networks[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7794–7803. doi: 10.1109/CVPR.2018.00813.
12. HU Jie, SHEN Li, and SUN Gang. Squeeze-and-excitation networks[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7132–7141. doi: 10.1109/CVPR.2018.00745.
13. HU Jie, SHEN Li, ALBANIE S, et al. Gather-excite: Exploiting feature context in convolutional neural networks[C]. The 32nd International Conference on Neural Information Processing Systems, Montréal, Canada, 2018: 9423–9433.
14. CAO Yue, XU Jiarui, LIN S, et al. GCNet: Non-local networks meet squeeze-excitation networks and beyond[C]. 2019 IEEE/CVF International Conference on Computer Vision Workshop, Seoul, Korea (South), 2019: 1971–1980. doi: 10.1109/ICCVW.2019.00246.
15. LIU Chang, ZHAO Wei, LIU Peng, et al. Auxiliary objects selecting, tracking and updating in target tracking[J]. Acta Automatica Sinica, 2018, 44(7): 1195–1211.
16. ABDELPAKEY M H, SHEHATA M S, and MOHAMED M M. DensSiam: End-to-end densely-Siamese network with self-attention model for object tracking[C]. The 13th International Symposium on Visual Computing, Las Vegas, USA, 2018: 463–473.
17. KRISTAN M, LEONARDIS A, MATAS J, et al. The visual object tracking VOT2017 challenge results[C]. 2017 IEEE International Conference on Computer Vision Workshops, Venice, Italy, 2017: 1949–1972. doi: 10.1109/ICCVW.2017.230.
18. LI Yuhong and ZHANG Xiaofan. SiamVGG: Visual tracking using deeper Siamese networks[J]. arXiv preprint arXiv:1902.02804, 2019.
19. WANG Qiang, GAO Jin, XING Junliang, et al. DCFNet: Discriminant correlation filters network for visual tracking[J]. arXiv preprint arXiv:1704.04057, 2017.
20. BERTINETTO L, VALMADRE J, GOLODETZ S, et al. Staple: Complementary learners for real-time tracking[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1401–1409. doi: 10.1109/CVPR.2016.156.
21. HARE S, GOLODETZ S, SAFFARI A, et al. Struck: Structured output tracking with kernels[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(10): 2096–2109. doi: 10.1109/TPAMI.2015.2509974.
22. WU Yi, LIM J, and YANG M H. Online object tracking: A benchmark[C]. 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, USA, 2013: 2411–2418. doi: 10.1109/CVPR.2013.312.
