SAR Target Detection Network via Semi-supervised Learning


摘要:

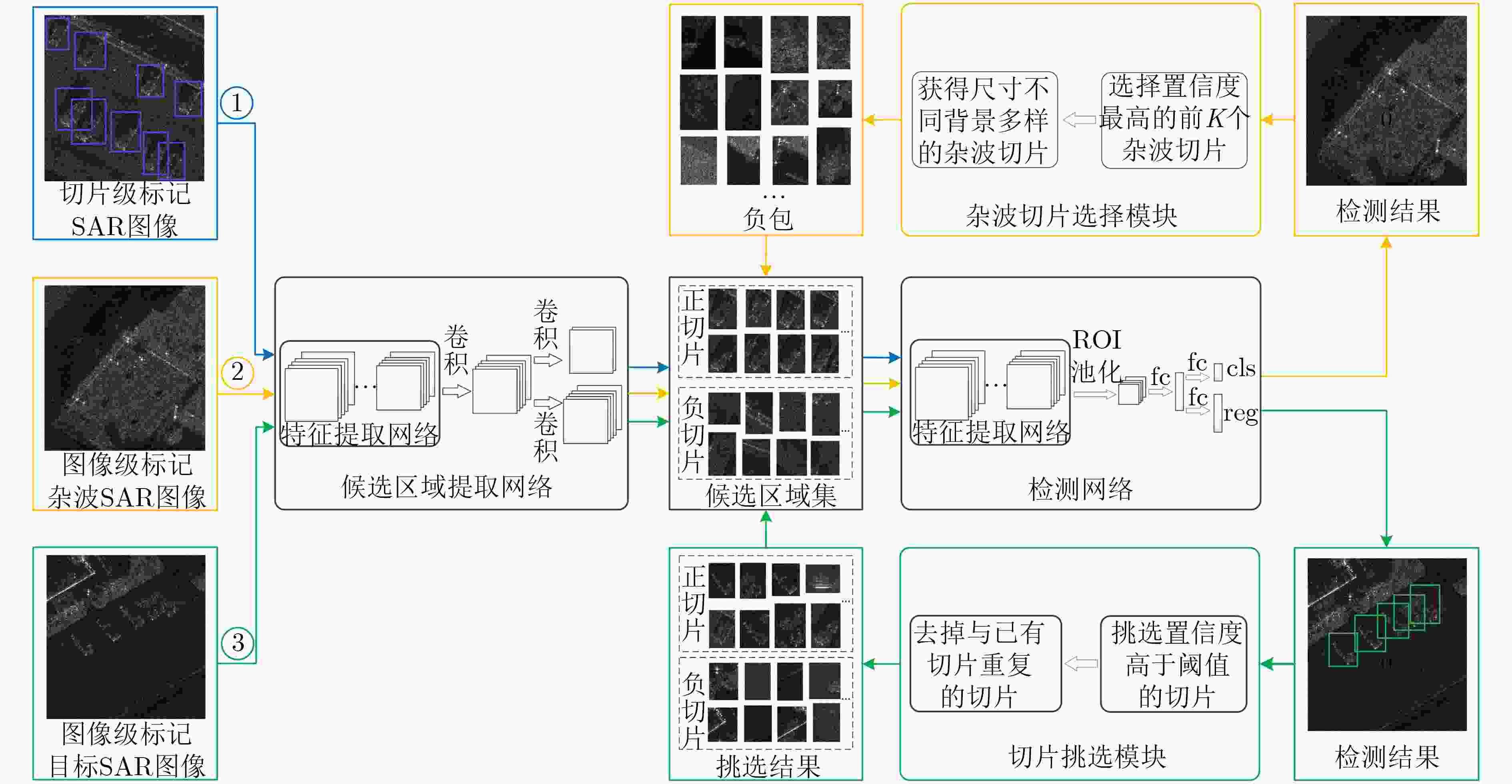

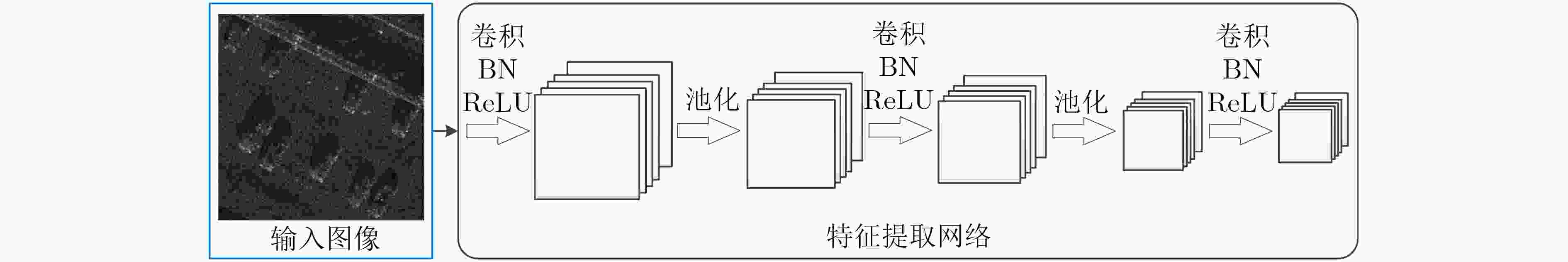

现有的基于卷积神经网络(CNN)的合成孔径雷达(SAR)图像目标检测算法依赖于大量切片级标记的样本,然而对SAR图像进行切片级标记需要耗费大量的人力和物力。相对于切片级标记,仅标记图像中是否含有目标的图像级标记较为容易。该文利用少量切片级标记的样本和大量图像级标记的样本,提出一种基于卷积神经网络的半监督SAR图像目标检测方法。该方法的目标检测网络由候选区域提取网络和检测网络组成。半监督训练过程中,首先使用切片级标记的样本训练目标检测网络,训练收敛后输出的候选切片构成候选区域集;然后将图像级标记的杂波样本输入网络,将输出的负切片加入候选区域集;接着将图像级标记的目标样本也输入网络,对输出结果中的正负切片进行挑选并加入候选区域集;最后使用更新后的候选区域集训练检测网络。更新候选区域集和训练检测网络交替迭代直至收敛。基于实测数据的实验结果证明,所提方法的性能与使用全部样本进行切片级标记的全监督方法的性能相差不大。

Abstract:The current Synthetic Aperture Radar (SAR) target detection methods based on Convolutional Neural Network (CNN) rely on a large amount of slice-level labeled train samples. However, it takes a lot of labor and material resources to label the SAR images at slice-level. Compared to label samples at slice-level, it is easier to label them at image-level. The image-level label indicates whether the image contains the target of interest or not. In this paper, a semi-supervised SAR image target detection method based on CNN is proposed by using a small number of slice-level labeled samples and a large number of image-level labeled samples. The target detection network of this method consists of region proposal network and detection network. Firstly, the target detection network is trained using the slice-level labeled samples. After training convergence, the output slices constitute the candidate region set. Then, the image-level labeled clutter samples are input into the network and then the negative slices of the output are added to the candidate region set. Next, the image-level labeled target samples are input into the network as well. After selecting the positive and negative slices in the output of the network, they are added to the candidate region set. Finally, the detection network is trained using the updated candidate region set. The processes of updating candidate region set and training detection network alternate until convergence. The experimental results based on the measured data demonstrate that the performance of the proposed method is similar to the fully supervised training method using a much larger set of slice-level samples.
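The alternating procedure described in the abstract can be sketched roughly as follows. This is a toy illustration, not the authors' implementation: each candidate slice is reduced to a single scalar feature, the CNN detection network is replaced by a simple threshold classifier, and the region proposal network is omitted. The names `train_detector`, `semi_supervised_train`, and `margin` are hypothetical, and the confidence-margin rule for selecting slices from target images is an assumption, since the abstract does not state the selection criterion.

```python
from typing import List, Sequence, Tuple

Slice = float                 # toy stand-in: one scalar feature per candidate slice
Labeled = Tuple[Slice, int]   # (slice, label) with 1 = target, 0 = clutter


def train_detector(samples: Sequence[Labeled]) -> float:
    """Toy 'detection network': a threshold midway between the class means."""
    pos = [x for x, y in samples if y == 1]
    neg = [x for x, y in samples if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2.0


def semi_supervised_train(
    labeled: Sequence[Labeled],
    clutter_images: Sequence[Sequence[Slice]],
    target_images: Sequence[Sequence[Slice]],
    margin: float = 0.5,      # assumed confidence margin for slice selection
    max_rounds: int = 10,
) -> float:
    # Step 1: train on the slice-level labeled samples only.
    threshold = train_detector(labeled)
    for _ in range(max_rounds):
        candidates: List[Labeled] = list(labeled)
        # Step 2: a clutter image contains no target, so its slices
        # can safely be added to the candidate set as negatives.
        for image in clutter_images:
            candidates += [(s, 0) for s in image]
        # Step 3: from target images, keep only slices the current detector
        # is confident about (assumed rule); ambiguous slices are skipped.
        for image in target_images:
            candidates += [(s, 1) for s in image if s > threshold + margin]
            candidates += [(s, 0) for s in image if s < threshold - margin]
        # Step 4: retrain on the updated candidate set; stop at convergence.
        new_threshold = train_detector(candidates)
        if abs(new_threshold - threshold) < 1e-6:
            break
        threshold = new_threshold
    return threshold


# Usage with made-up numbers: two labeled slices, one clutter image,
# and one target image containing an ambiguous slice (2.1).
threshold = semi_supervised_train(
    labeled=[(0.0, 0), (4.0, 1)],
    clutter_images=[[0.5, 1.0]],
    target_images=[[3.5, 4.5, 0.2, 2.1]],
)
print(threshold)
```

In a real detector the threshold classifier would be replaced by the detection network and the scalar features by image slices, but the control flow (initial supervised training, then alternating candidate-set updates and retraining) stays the same.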

Key words: SAR; Target detection; Semi-supervised learning; Convolutional Neural Network (CNN)

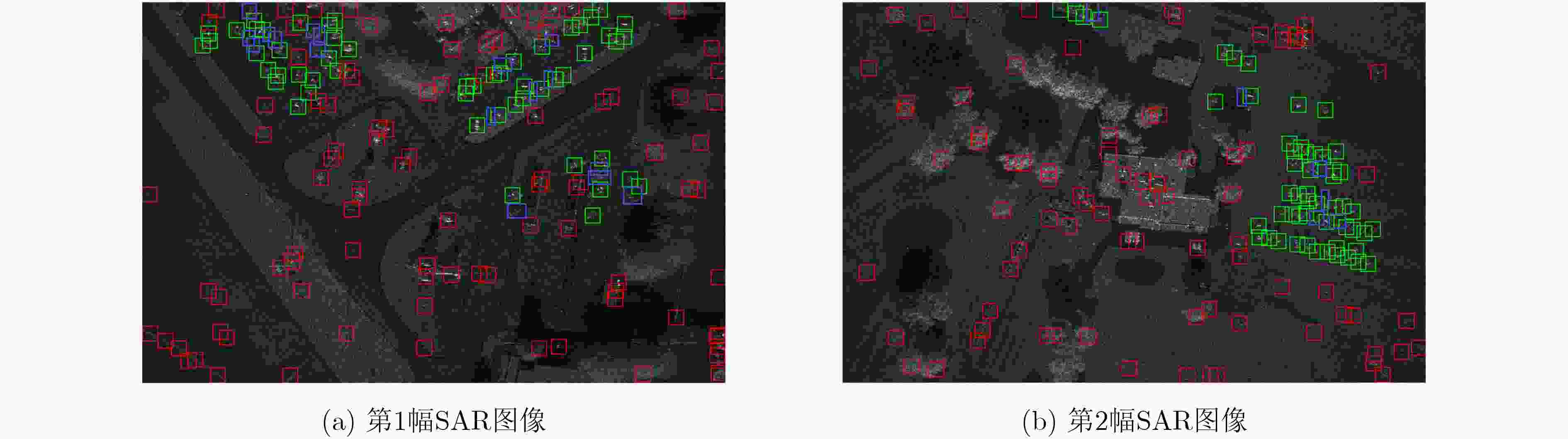

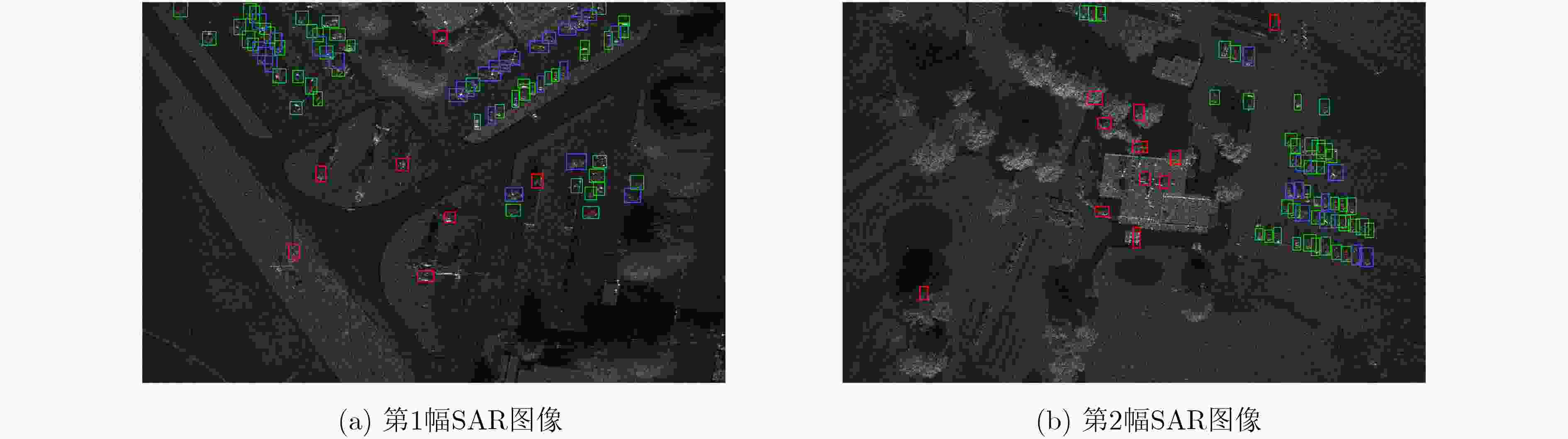

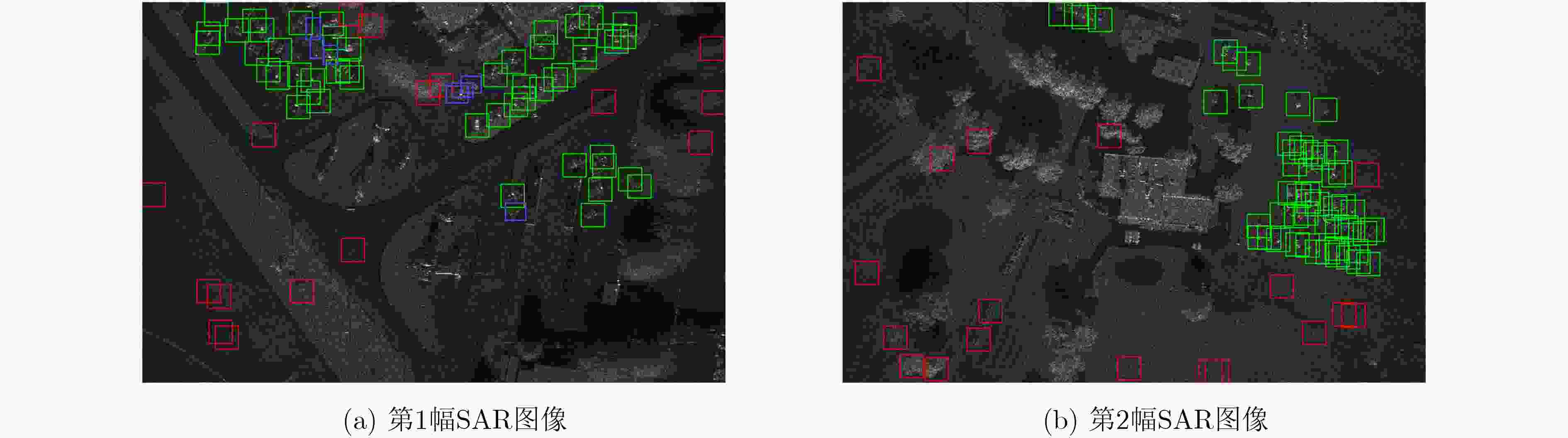

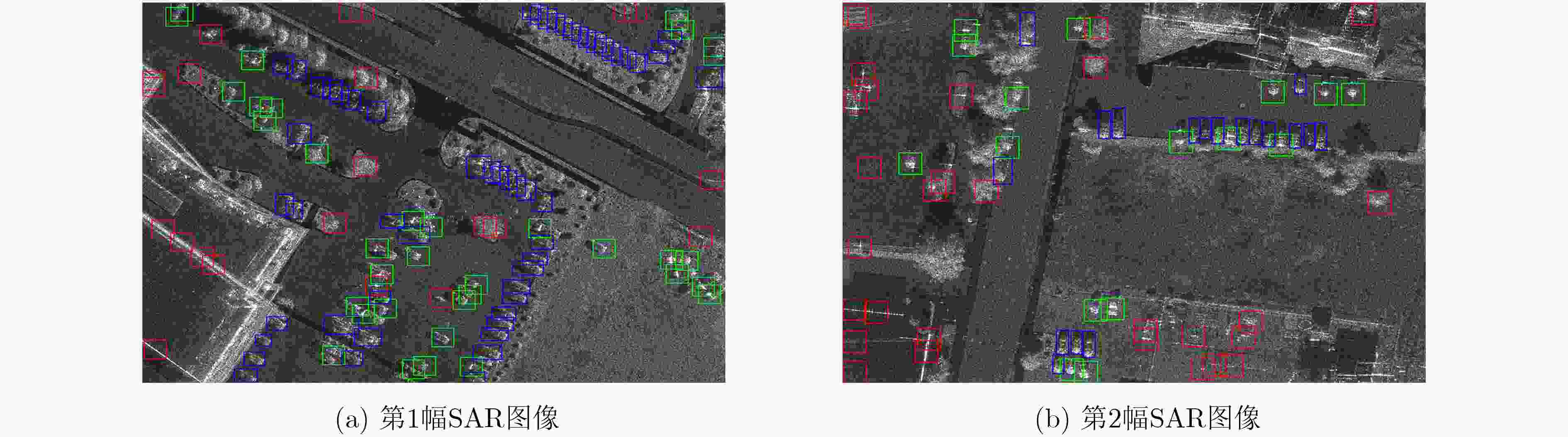

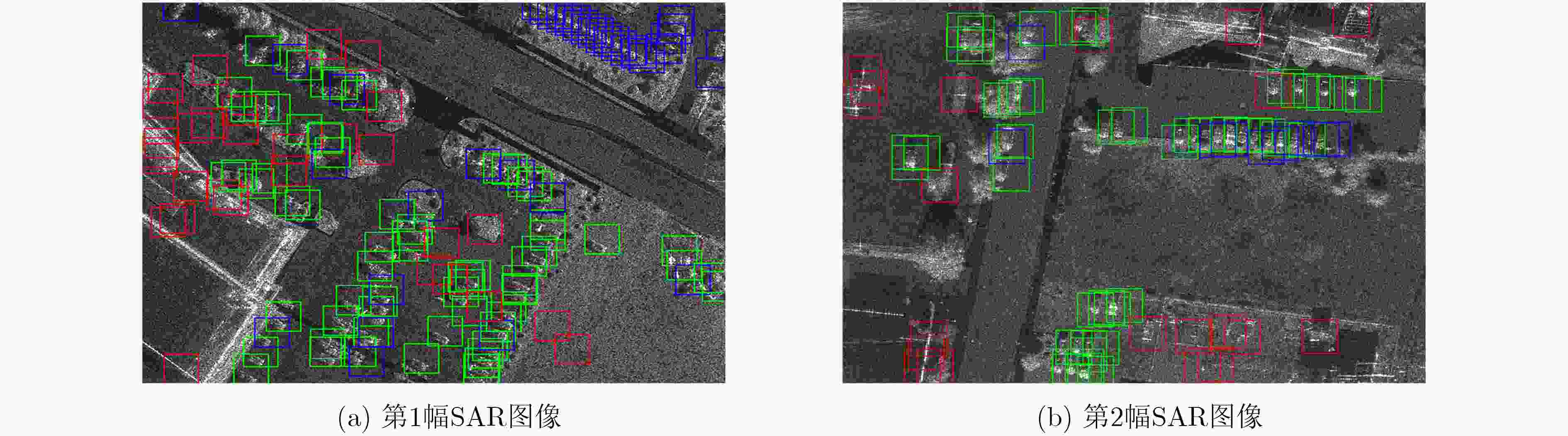

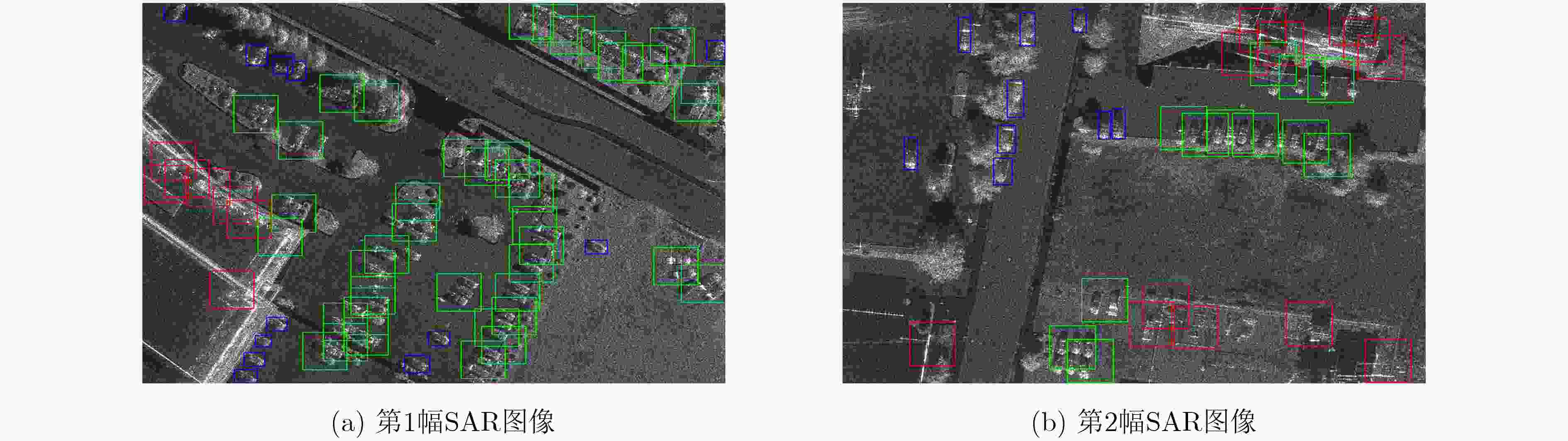

Fig. 7 MiniSAR dataset: detection results of the method in Ref. [14]

Fig. 8 MiniSAR dataset: detection results of the method in Ref. [15]

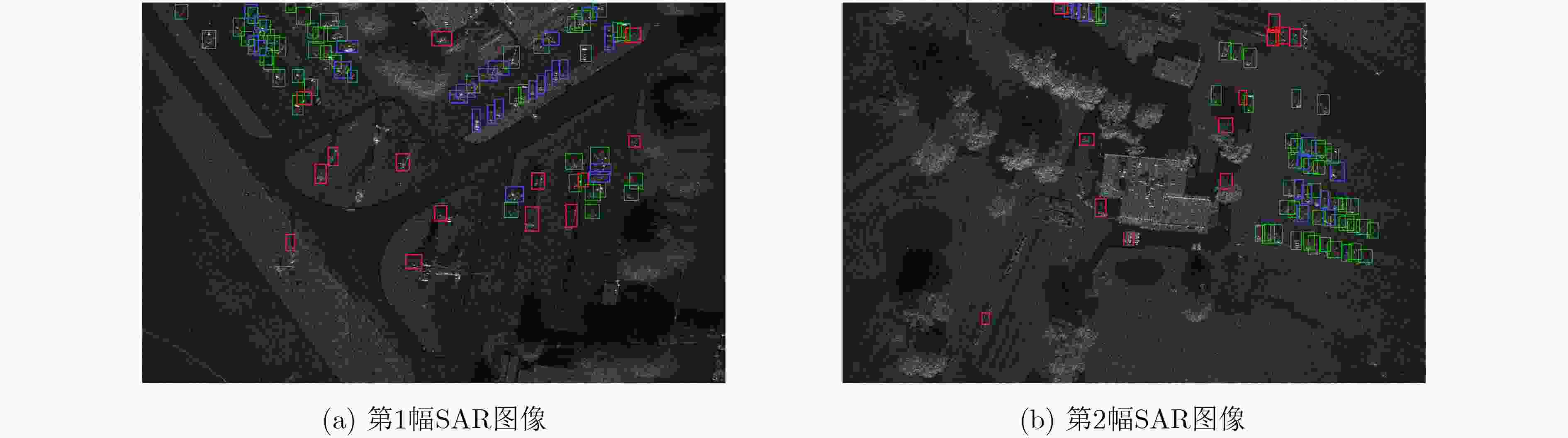

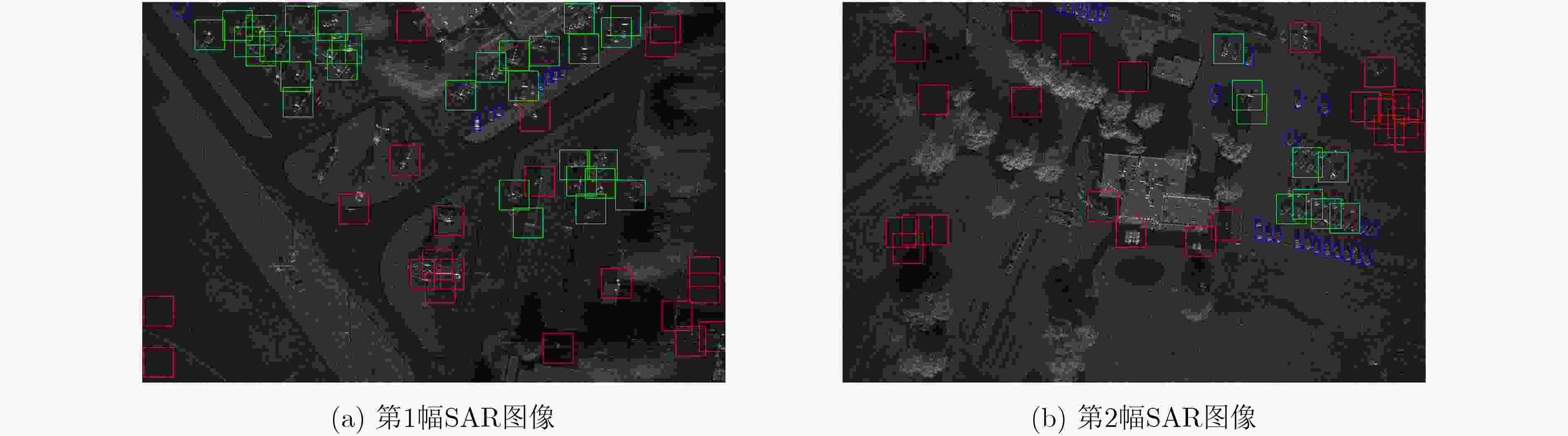

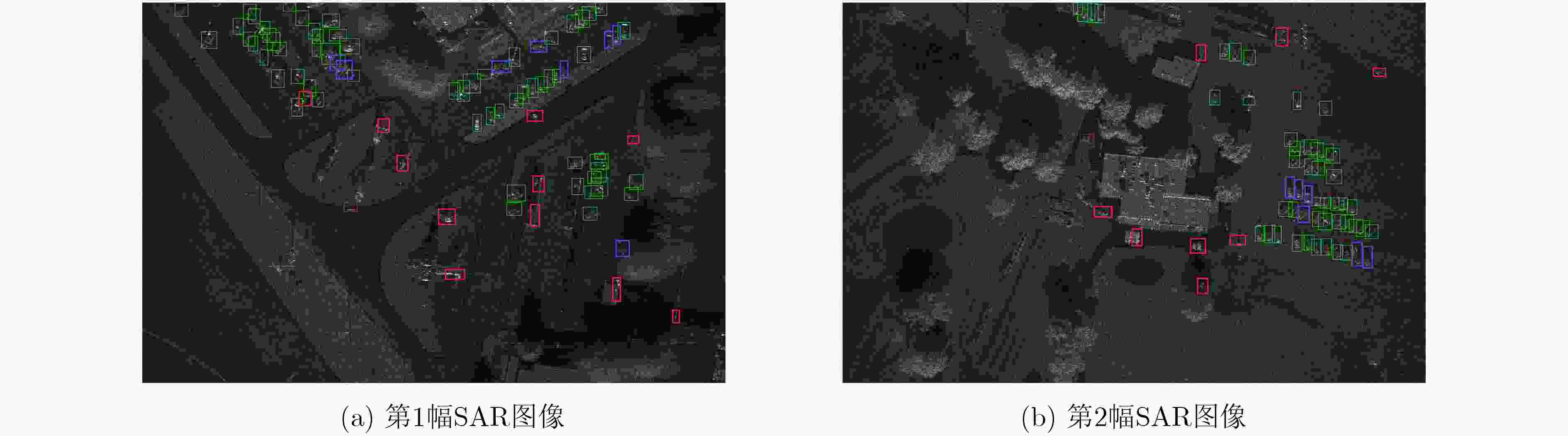

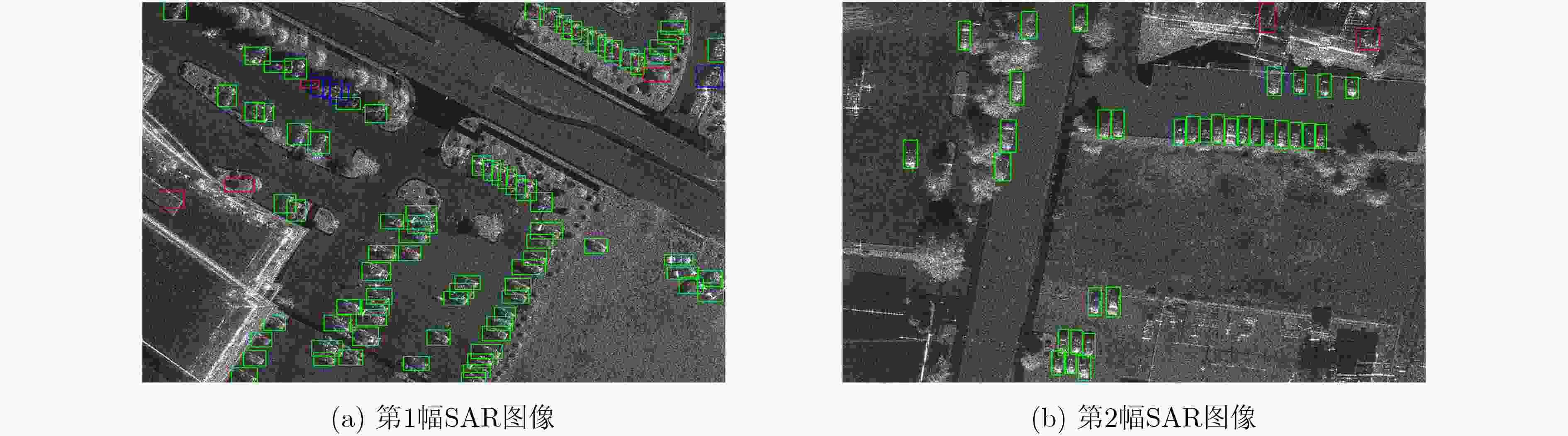

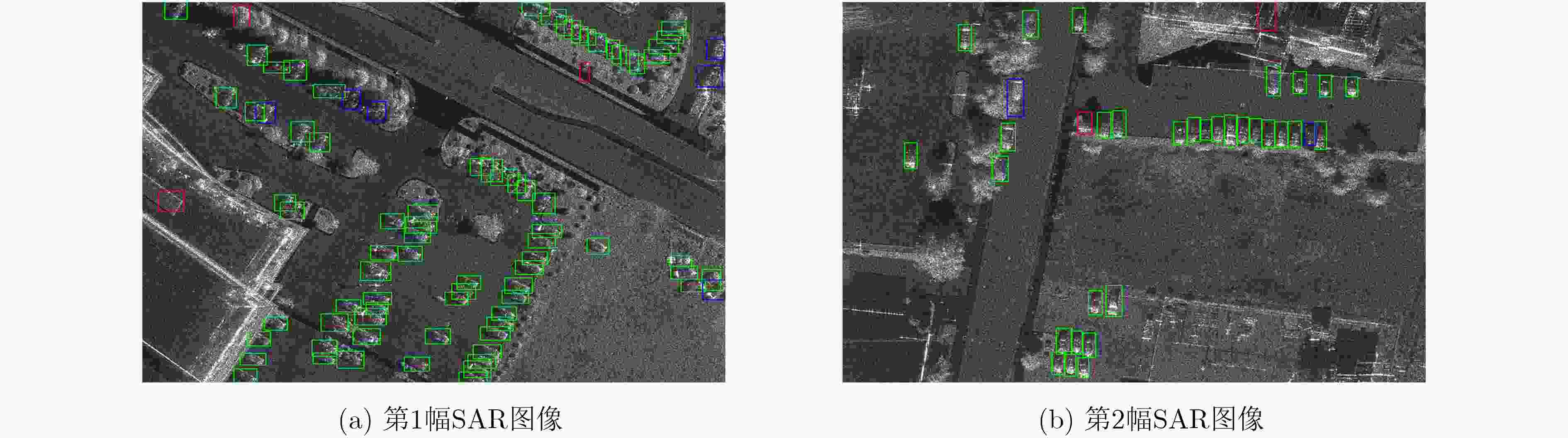

Fig. 13 FARADSAR dataset: detection results of the method in Ref. [14]

Fig. 14 FARADSAR dataset: detection results of the method in Ref. [15]

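Table 1 Experimental results of different schemes

| Number of negative bags | Selected slices | $P$ | $R$ | F1-score |
|---|---|---|---|---|
| 0 | Positive slices | 0.6397 | 0.7500 | 0.6905 |
| 0 | Negative slices | 0.8833 | 0.4569 | 0.6023 |
| 0 | Positive + negative slices | 0.7387 | 0.7069 | 0.7225 |
| 10 | Positive slices | 0.6797 | 0.7500 | 0.7131 |
| 10 | Negative slices | 0.7917 | 0.4914 | 0.6064 |
| 10 | Positive + negative slices | 0.7573 | 0.6724 | 0.7123 |
| 20 | Positive slices | 0.7658 | 0.7328 | 0.7489 |
| 20 | Negative slices | 0.8382 | 0.4914 | 0.6196 |
| 20 | Positive + negative slices | 0.8137 | 0.7155 | 0.7615 |
| 30 | Positive slices | 0.8202 | 0.6293 | 0.7122 |
| 30 | Negative slices | 0.8413 | 0.4569 | 0.5922 |
| 30 | Positive + negative slices | 0.8675 | 0.6207 | 0.7236 |
| 40 | Positive slices | 0.8111 | 0.6293 | 0.7087 |
| 40 | Negative slices | 0.8667 | 0.4483 | 0.5909 |
| 40 | Positive + negative slices | 0.8352 | 0.6552 | 0.7343 |

Table 2 Experimental results of different methods

| Method | MiniSAR $P$ | MiniSAR $R$ | MiniSAR F1-score | FARADSAR $P$ | FARADSAR $R$ | FARADSAR F1-score |
|---|---|---|---|---|---|---|
| Gaussian-CFAR | 0.3789 | 0.7966 | 0.5135 | 0.2813 | 0.4671 | 0.3512 |
| Faster R-CNN (small portion of samples slice-level labeled) | 0.6455 | 0.6121 | 0.6283 | 0.7370 | 0.8813 | 0.8027 |
| Faster R-CNN (all samples slice-level labeled) | 0.8073 | 0.7586 | 0.7822 | 0.7760 | 0.9479 | 0.8534 |
| Method of Ref. [14] | 0.5814 | 0.9806 | 0.7285 | 0.4506 | 0.7325 | 0.5580 |
| Method of Ref. [15] | 0.4699 | 0.7480 | 0.5772 | 0.3744 | 0.7945 | 0.5090 |
| Proposed method | 0.8137 | 0.7155 | 0.7615 | 0.8035 | 0.8813 | 0.8406 |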
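The F1-score column combines precision $P$ and recall $R$. Assuming the standard definition (the harmonic mean, $F_1 = 2PR/(P+R)$), which the page itself does not spell out, a few MiniSAR rows of Table 2 can be recomputed as a sanity check. The function name `f1_score` and the dictionary `minisar_rows` are illustrative only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F1) copied from the MiniSAR columns of Table 2.
minisar_rows = {
    "Gaussian-CFAR": (0.3789, 0.7966, 0.5135),
    "Faster R-CNN (all slice-level labeled)": (0.8073, 0.7586, 0.7822),
    "Proposed method": (0.8137, 0.7155, 0.7615),
}

for name, (p, r, reported) in minisar_rows.items():
    recomputed = f1_score(p, r)
    # P and R are rounded to four decimals in the table, so allow a small gap.
    print(f"{name}: recomputed {recomputed:.4f}, reported {reported:.4f}")
```

Because the tabulated $P$ and $R$ are already rounded, the recomputed value can differ from the reported one in the last digit.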

[1] NOVAK L M, BURL M C, and IRVING W W. Optimal polarimetric processing for enhanced target detection[J]. IEEE Transactions on Aerospace and Electronic Systems, 1993, 29(1): 234–244. doi: 10.1109/7.249129.

[2] XING X W, CHEN Z L, ZOU H X, et al. A fast algorithm based on two-stage CFAR for detecting ships in SAR images[C]. The 2nd Asian-Pacific Conference on Synthetic Aperture Radar, Xi'an, China, 2009: 506–509. doi: 10.1109/APSAR.2009.5374119.

[3] LENG Xiangguang, JI Kefeng, YANG Kai, et al. A bilateral CFAR algorithm for ship detection in SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2015, 12(7): 1536–1540. doi: 10.1109/LGRS.2015.2412174.

[4] LECUN Y, BOTTOU L, BENGIO Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278–2324. doi: 10.1109/5.726791.

[5] HINTON G E and SALAKHUTDINOV R R. Reducing the dimensionality of data with neural networks[J]. Science, 2006, 313(5786): 504–507. doi: 10.1126/science.1127647.

[6] KRIZHEVSKY A, SUTSKEVER I, and HINTON G E. ImageNet classification with deep convolutional neural networks[C]. The 25th International Conference on Neural Information Processing Systems, Lake Tahoe, USA, 2012: 1097–1105.

[7] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[J]. arXiv: 1409.1556, 2014.

[8] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]. 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: 1–9. doi: 10.1109/CVPR.2015.7298594.

[9] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[10] GIRSHICK R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 580–587. doi: 10.1109/CVPR.2014.81.

[11] GIRSHICK R. Fast R-CNN[C]. 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 1440–1448. doi: 10.1109/ICCV.2015.169.

[12] REN Shaoqing, HE Kaiming, GIRSHICK R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[C]. The 28th International Conference on Neural Information Processing Systems, Montréal, Canada, 2015: 91–99.

[13] DU Lan, LIU Bin, WANG Yan, et al. Target detection method based on convolutional neural network for SAR image[J]. Journal of Electronics & Information Technology, 2016, 38(12): 3018–3025. doi: 10.11999/JEIT161032. (in Chinese)

[14] ROSENBERG C, HEBERT M, and SCHNEIDERMAN H. Semi-supervised self-training of object detection models[C]. The 7th IEEE Workshops on Applications of Computer Vision, Breckenridge, USA, 2005: 29–36. doi: 10.1109/ACVMOT.2005.107.

[15] ZHANG Fan, DU Bo, ZHANG Liangpei, et al. Weakly supervised learning based on coupled convolutional neural networks for aircraft detection[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(9): 5553–5563. doi: 10.1109/TGRS.2016.2569141.

[16] IOFFE S and SZEGEDY C. Batch normalization: Accelerating deep network training by reducing internal covariate shift[C]. The 32nd International Conference on Machine Learning, Lille, France, 2015: 448–456.

[17] GLOROT X, BORDES A, and BENGIO Y. Deep sparse rectifier neural networks[C]. The 14th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, USA, 2011: 315–323.

[18] GUTIERREZ D. MiniSAR: A review of 4-inch and 1-foot resolution Ku-band imagery[EB/OL]. https://www.sandia.gov/radar/Web/images/SAND2005-3706P-miniSAR-flight-SAR-images.pdf, 2005.

[19] FARADSAR public Release Data[EB/OL]. https://www.sandia.gov/radar/complex_data/FARAD_KA_BAND.zip.
