A Fast Deep Q-learning Network Edge Cloud Migration Strategy for Vehicular Service


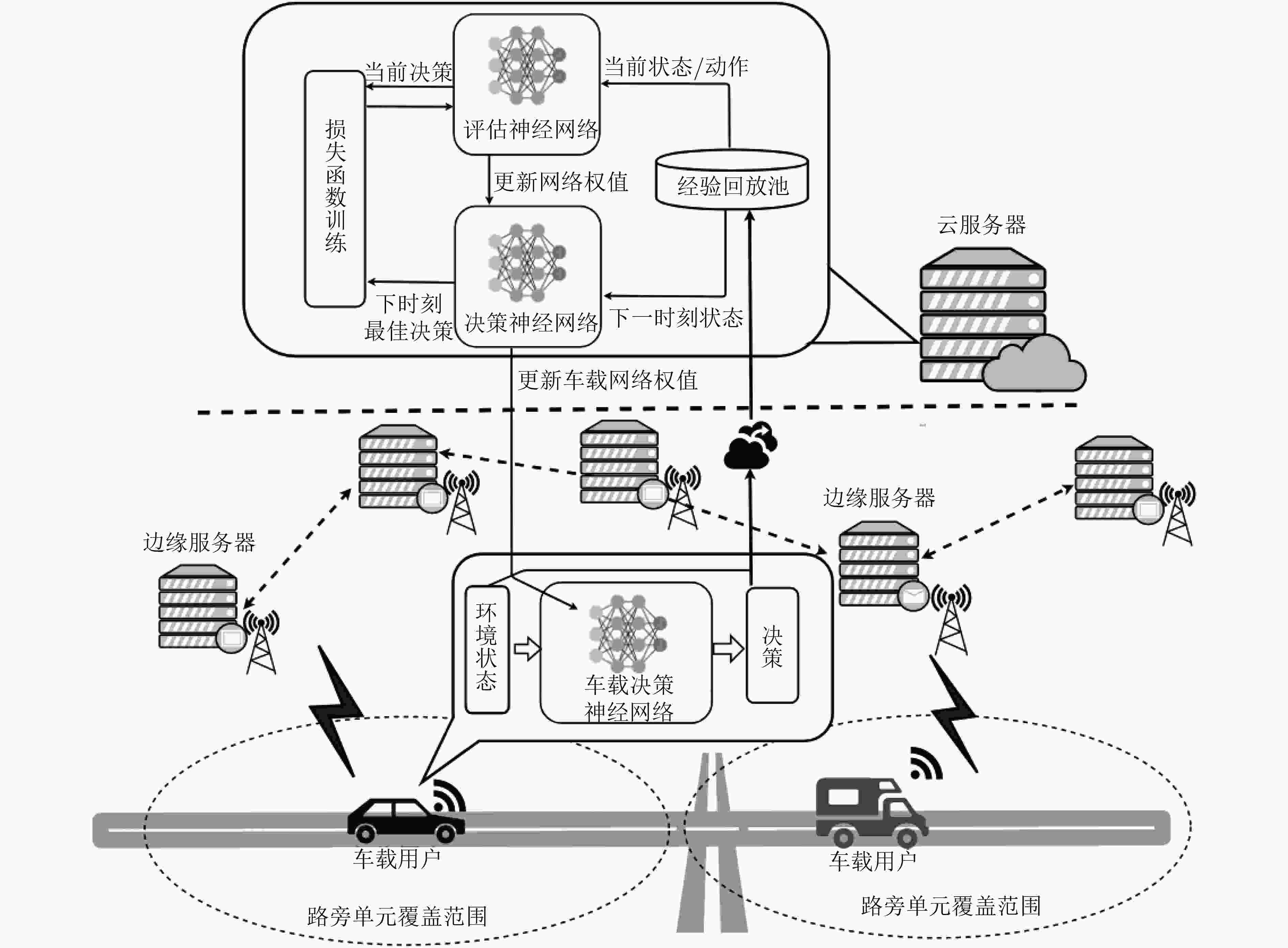

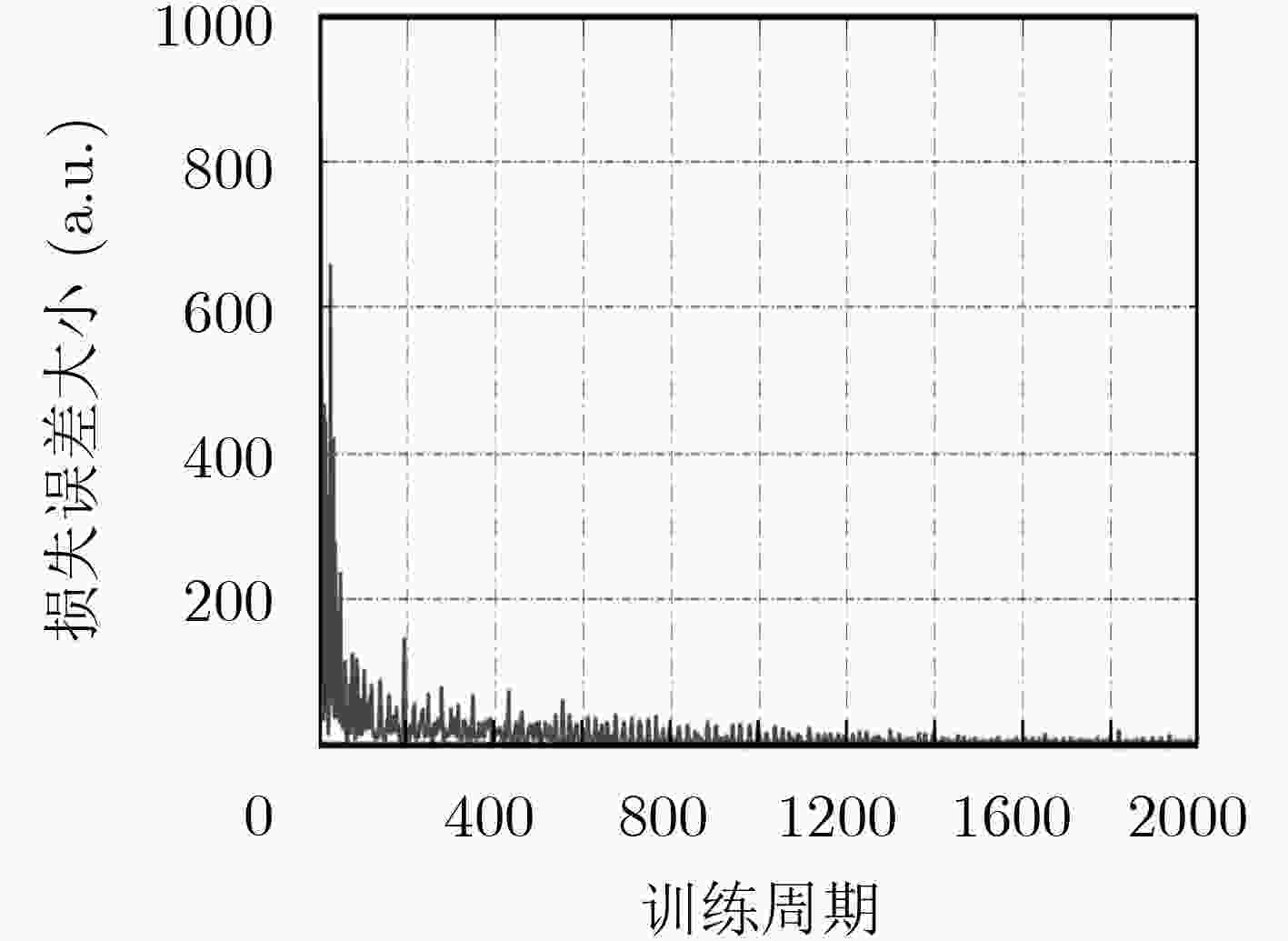

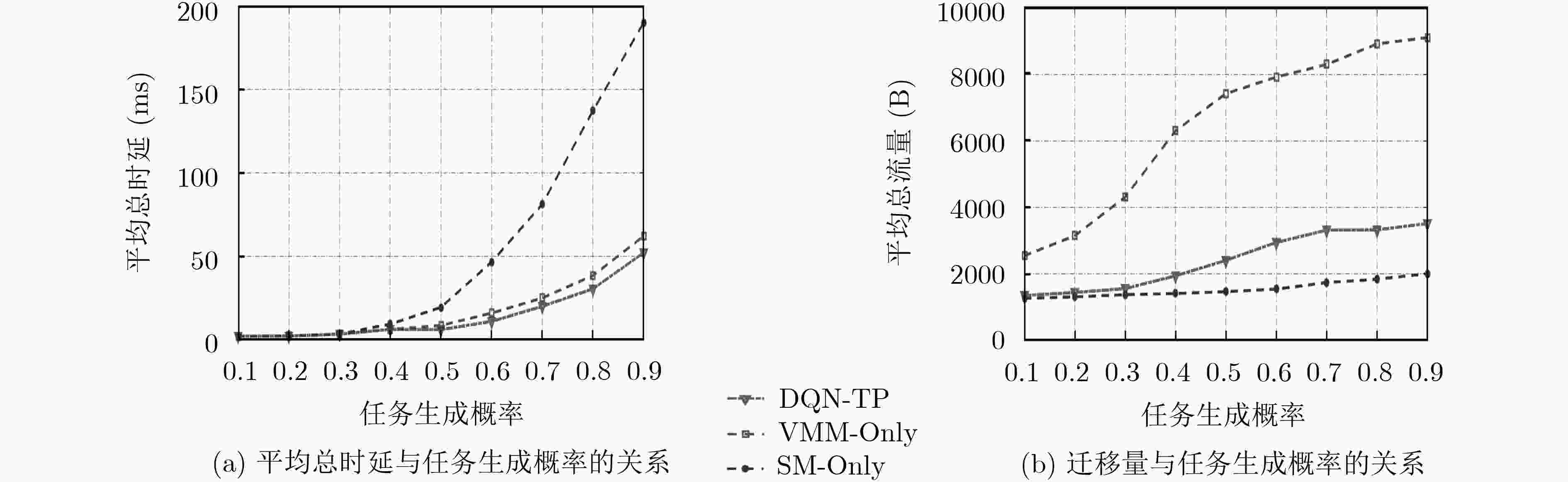

Abstract: In intelligent connected transportation systems, the high-speed movement of vehicular users inevitably causes frequent data migration between edge servers. This migration adds communication backhaul delay and poses a major challenge to the real-time computing services of edge servers. To address this problem, this paper proposes a fast Deep Q-learning Network edge-cloud migration strategy based on the vehicle motion Trajectory Process (DQN-TP), which supports offline evaluation and online decision-making for data migration. The algorithm separates decision-making from training by using two neural networks. The on-board decision neural network obtains, in real time, the network state of the edge server it is connected to and the communication backhaul delay, and, according to the vehicle's motion trajectory, chooses between virtual machine migration and task migration. At the same time, it uploads its real-time decision records and the observed edge-server network state to the experience replay pool in the cloud. The evaluation neural network in the cloud reads these records from the replay pool to train and optimize its parameters, and periodically updates the weights of the on-board decision network. In this way, training and decision-making run simultaneously and online decisions are continuously improved. Simulation results show that, compared with existing virtual machine migration and task migration algorithms, the proposed algorithm effectively reduces latency.
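The split between an on-board decision network and a cloud evaluation network hinges on a shared experience replay pool. The sketch below shows one way such a pool could behave, assuming a bounded FIFO buffer with uniform mini-batch sampling (the usual DQN choice). The class name `CloudReplayPool`, the list-of-floats state encoding, and the action encoding are hypothetical; only the default capacity ($o = 3000$) and mini-batch size ($n = 500$) come from the simulation settings in Table 3.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# One record uploaded by the vehicle: (X_t, a_t, U_t, X_{t+1}).
# States are plain feature lists here; the real state encoding is an assumption.
Experience = Tuple[List[float], int, float, List[float]]


class CloudReplayPool:
    """Hypothetical bounded FIFO experience pool held in the cloud."""

    def __init__(self, capacity: int = 3000, seed: int = 0) -> None:
        # Once `capacity` (o in Table 3) is reached, the oldest record is dropped.
        self._buffer: Deque[Experience] = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def upload(self, record: Experience) -> None:
        """Called by the on-board decision network after each decision period."""
        self._buffer.append(record)

    def sample(self, n: int = 500) -> List[Experience]:
        """Uniform mini-batch of n records, drawn without replacement, for cloud training."""
        if len(self._buffer) < n:
            raise ValueError(f"need {n} records, pool holds {len(self._buffer)}")
        return self._rng.sample(list(self._buffer), n)

    def __len__(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    pool = CloudReplayPool()
    for t in range(3500):
        # Dummy record; action 0 = VM migration, 1 = task migration (assumed encoding).
        pool.upload(([float(t)], t % 2, -1.0, [float(t + 1)]))
    print(len(pool), len(pool.sample()))  # 3000 500
```

In this sketch the vehicle only appends and the cloud only samples, so the two sides interact through the pool alone; that decoupling is what allows decisions to be made while training proceeds elsewhere.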


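Table 1 Variables

| Variable | Symbol |
|---|---|
| Decision period length | $\sigma$ |
| Decision period | $t$ |
| Number of edge servers | $i$ |
| Vehicular user position | $\nu_t$ |
| Edge server position | $\mu_m$ |
| Path loss parameter | $\delta$ |
| Roadside unit coverage radius | $r$ |
| Task size | $q_{\rm s}$ |
| Maximum tolerable task delay | $q_{\rm d}$ |
| Transmission power | $P_{\rm s}$ |
| Delay | $T$ |
| Virtual machine location | $D$ |

Table 2 DQN-TP algorithm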

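Algorithm 1: DQN-TP

1. Repeat:
2. The vehicular user uploads the experience $(X_t, a_t, U_t, X_{t+1})$ of the on-board decision neural network to the experience replay pool;
3. While $t \ne$ the last decision period do
4. Randomly draw $n$ experiences from the experience replay pool as a mini-batch;
5. Feed $X_t, a_t$ into the evaluation neural network to obtain $Q_{\pi}(X_t, a_t; \theta)$, and feed $X_{t+1}$ into the decision neural network to obtain $Q_{\pi}(X_{t+1}, a_{t+1}; \theta^-)$;
6. Train the neural network according to Eqs. (13) and (14);
7. End While
8. After every $c$ training iterations, copy the cloud neural network parameters to the on-board neural network, $\theta \to \theta^-$;
9. The vehicular user selects its action with the $\varepsilon$-greedy policy, executing the action with the highest action-value with probability $1 - \varepsilon$ and a random action otherwise;
10. End

Table 3 Simulation parameter settings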

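| Parameter | Symbol | Value |
|---|---|---|
| Decision period length | $\sigma$ | $10^{-3}$ s |
| Number of edge servers | $i$ | 10 |
| Path loss parameter | $\delta$ | 1.5 |
| Bandwidth | $W$ | 4 MHz |
| Roadside unit coverage radius | $r$ | 500 m |
| Utility function parameter | $k$ | 1.3 |
| Utility function parameter | $b$ | 0.1 |
| Maximum capacity of the experience replay pool | $o$ | 3000 |
| Mini-batch size | $n$ | 500 |
| Parameter update interval (training steps) | $c$ | 80 |
| Number of neural network layers | N/A | 4 |
| Total number of neurons | N/A | 100 |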
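Algorithm 1, together with the Table 3 settings, can be sketched compactly. This is a simplified illustration, not the authors' implementation: a linear Q-function stands in for the 4-layer, 100-neuron network; Eqs. (13) and (14) are not reproduced in this section, so the standard DQN squared TD-error loss with target $U_t + \gamma \max_{a'} Q(X_{t+1}, a'; \theta^-)$ is assumed; one training step is run per decision period instead of an inner While loop; and `gamma`, `lr`, `epsilon`, the two-feature state, and the `toy_utility` reward are all hypothetical. Because step (5) evaluates $X_{t+1}$ with the on-board decision network, the weights $\theta^-$ serve both as the vehicle's policy and as the DQN target network.

```python
import random
from collections import deque
from typing import Deque, List, Sequence, Tuple

Experience = Tuple[List[float], int, float, List[float]]  # (X_t, a_t, U_t, X_{t+1})
Weights = List[List[float]]
ACTIONS = (0, 1)  # assumed encoding: 0 = VM migration, 1 = task migration


def q_values(theta: Weights, x: Sequence[float]) -> List[float]:
    """Linear stand-in for the neural network: Q(x, a) = theta[a] . x."""
    return [sum(w * xi for w, xi in zip(theta[a], x)) for a in ACTIONS]


def epsilon_greedy(theta_minus: Weights, x: Sequence[float],
                   epsilon: float, rng: random.Random) -> int:
    """Step (9): the vehicle acts with the on-board weights theta^-."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)  # explore
    q = q_values(theta_minus, x)
    return max(ACTIONS, key=lambda a: q[a])  # exploit: highest action value


def train_step(theta: Weights, theta_minus: Weights, batch: List[Experience],
               gamma: float = 0.9, lr: float = 0.01) -> float:
    """Steps (5)-(6): SGD on (y - Q(X_t, a_t; theta))^2; returns mean squared TD error."""
    total = 0.0
    for x, a, u, x_next in batch:
        # Target uses the on-board decision weights theta^- (step 5); assumed DQN form.
        y = u + gamma * max(q_values(theta_minus, x_next))
        td = y - q_values(theta, x)[a]
        total += td * td
        theta[a] = [w + lr * td * xi for w, xi in zip(theta[a], x)]
    return total / len(batch)


def toy_utility(s: float, a: int) -> float:
    """Hypothetical utility: task migration pays off when the load feature s is high."""
    return s if a == 1 else 1.0 - s


def run(steps: int = 1500, n: int = 500, capacity: int = 3000, c: int = 80,
        epsilon: float = 0.1, seed: int = 0) -> Weights:
    rng = random.Random(seed)
    theta: Weights = [[0.0, 0.0] for _ in ACTIONS]    # cloud evaluation network
    theta_minus: Weights = [row[:] for row in theta]  # on-board decision network
    pool: Deque[Experience] = deque(maxlen=capacity)  # cloud replay pool (o)
    trainings = 0
    x = [1.0, rng.random()]  # state = [bias, load feature] (assumed encoding)
    for _ in range(steps):
        a = epsilon_greedy(theta_minus, x, epsilon, rng)
        x_next = [1.0, rng.random()]
        pool.append((x, a, toy_utility(x[1], a), x_next))  # step (2): upload
        x = x_next
        if len(pool) >= n:
            train_step(theta, theta_minus, rng.sample(list(pool), n))  # steps (4)-(6)
            trainings += 1
            if trainings % c == 0:
                theta_minus = [row[:] for row in theta]  # step (8): theta -> theta^-
    return theta_minus


if __name__ == "__main__":
    w = run()
    for s in (0.1, 0.9):
        q = q_values(w, [1.0, s])
        print(f"s={s}: Q(VM)={q[0]:.2f}, Q(task)={q[1]:.2f}")
```

Copying $\theta$ to $\theta^-$ only every `c` training steps keeps the vehicle's policy (and the target) fixed between pushes, which stabilizes training at the cost of the vehicle acting on slightly stale weights.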

