Recent Advances in Zero-Shot Learning


摘要:

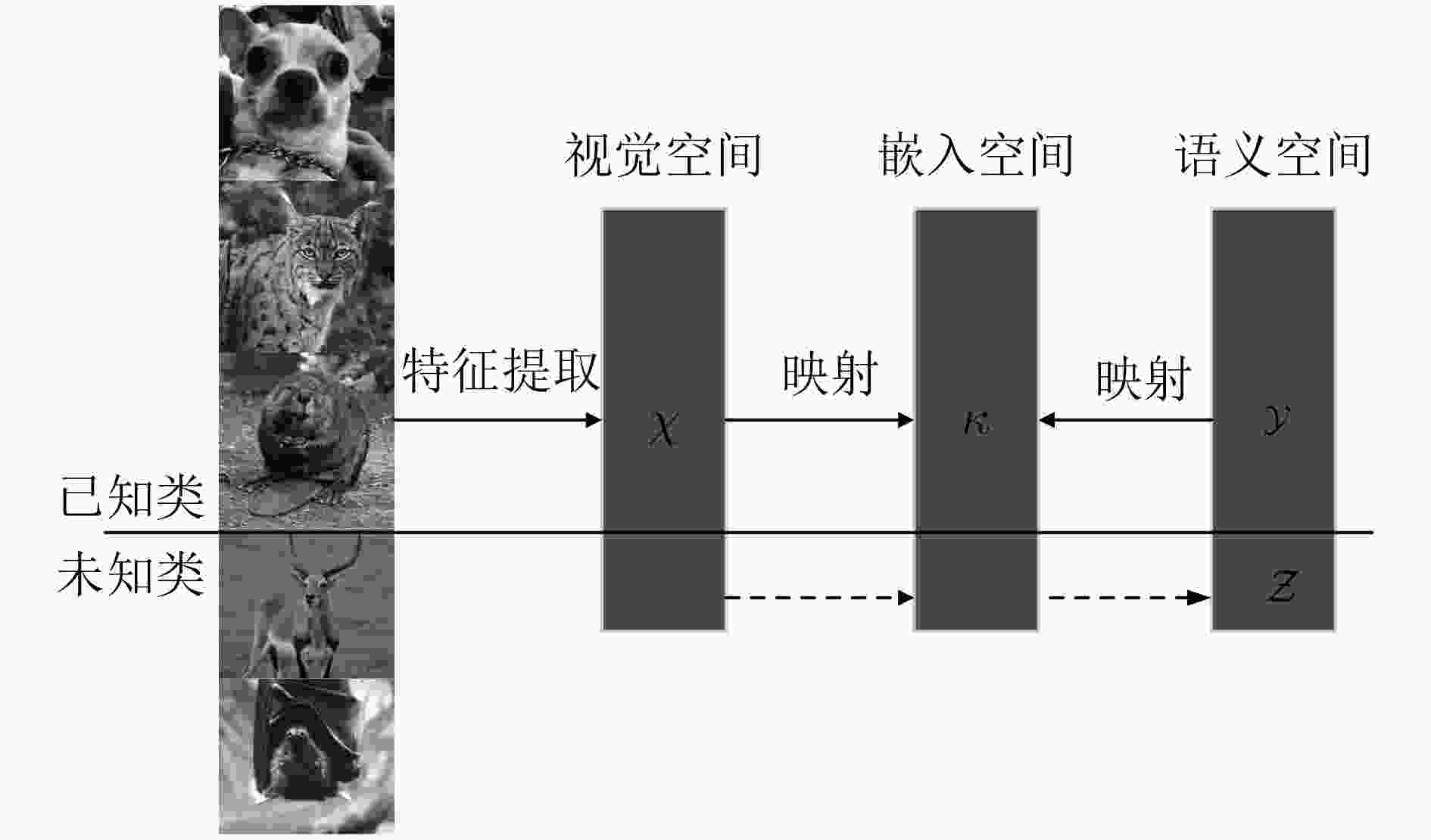

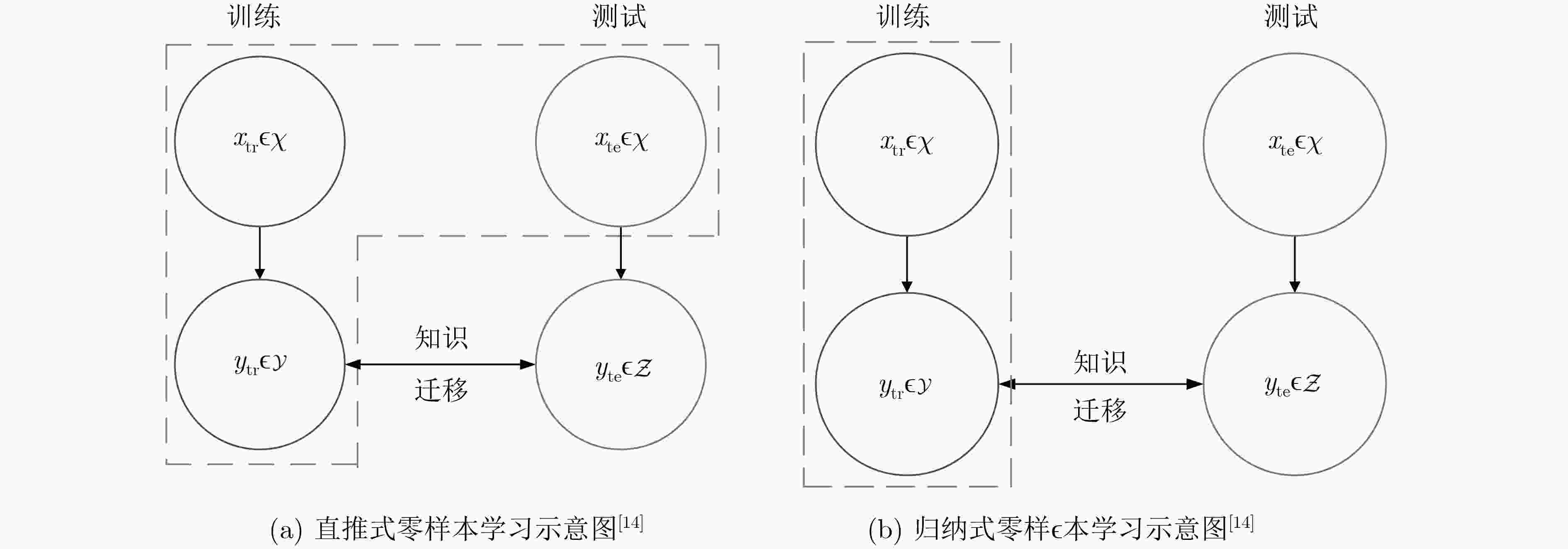

深度学习在人工智能领域已经取得了非常优秀的成就,在有监督识别任务中,使用深度学习算法训练海量的带标签数据,可以达到前所未有的识别精确度。但是,由于对海量数据的标注工作成本昂贵,对罕见类别获取海量数据难度较大,所以如何识别在训练过程中少见或从未见过的未知类仍然是一个严峻的问题。针对这个问题,该文回顾近年来的零样本图像识别技术研究,从研究背景、模型分析、数据集介绍、实验分析等方面全面阐释零样本图像识别技术。此外,该文还分析了当前研究存在的技术难题,并针对主流问题提出一些解决方案以及对未来研究的展望,为零样本学习的初学者或研究者提供一些参考。

Abstract:Deep learning has shown excellent performance in the field of artificial intelligence. In the supervised identification task, deep learning algorithms can achieve unprecedented recognition accuracy by training massive tagged data. However, owing to the high cost of labeling massive data and the difficulty of obtaining massive data of rare categories, it is still a serious problem how to identify unknown class that is rarely or never seen during training. In view of this problem, the researches of Zero-Shot Learning (ZSL) in recent years is reviewed and illustrated from the aspects of research background, model analysis, data set introduction and performance analysis in this article. Some solutions of mainstream problem and prospects of future research are provided. Meanwhile, the current technical problems of ZSL is analyzed, which can offer some references to beginners and researchers of ZSL.


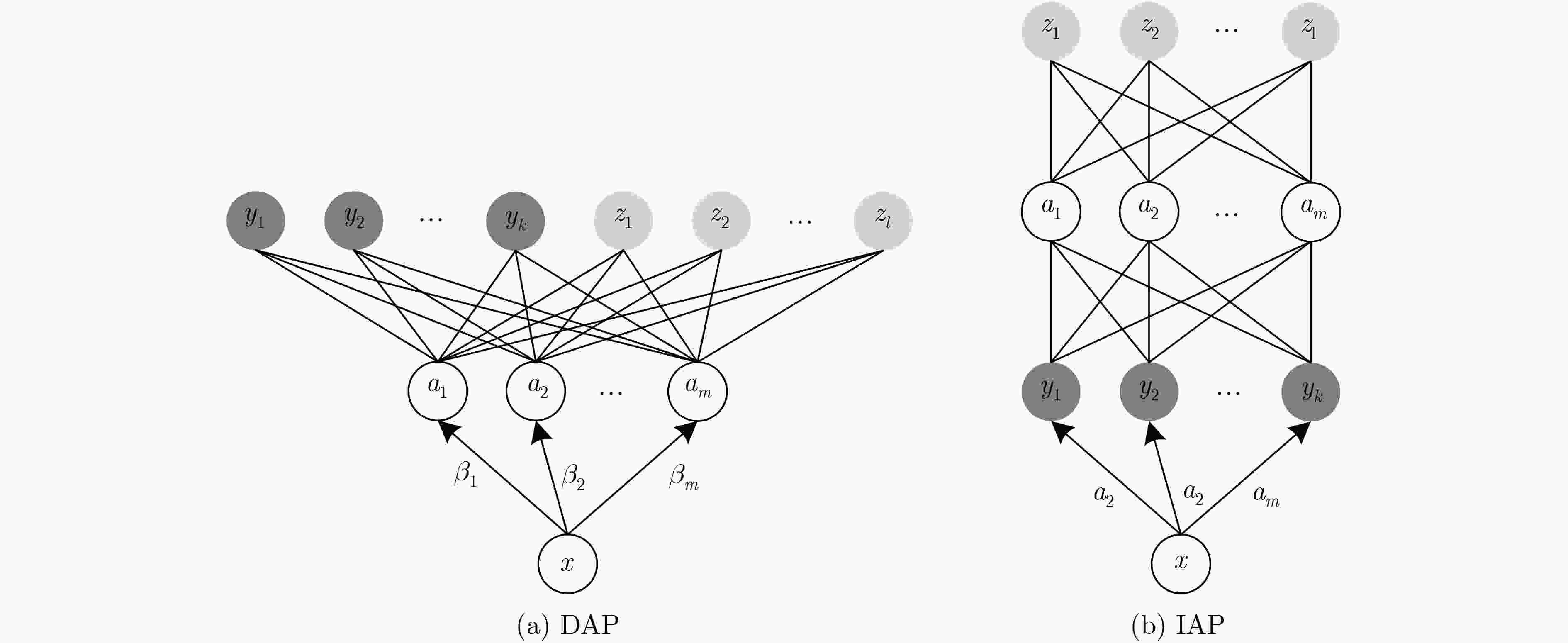

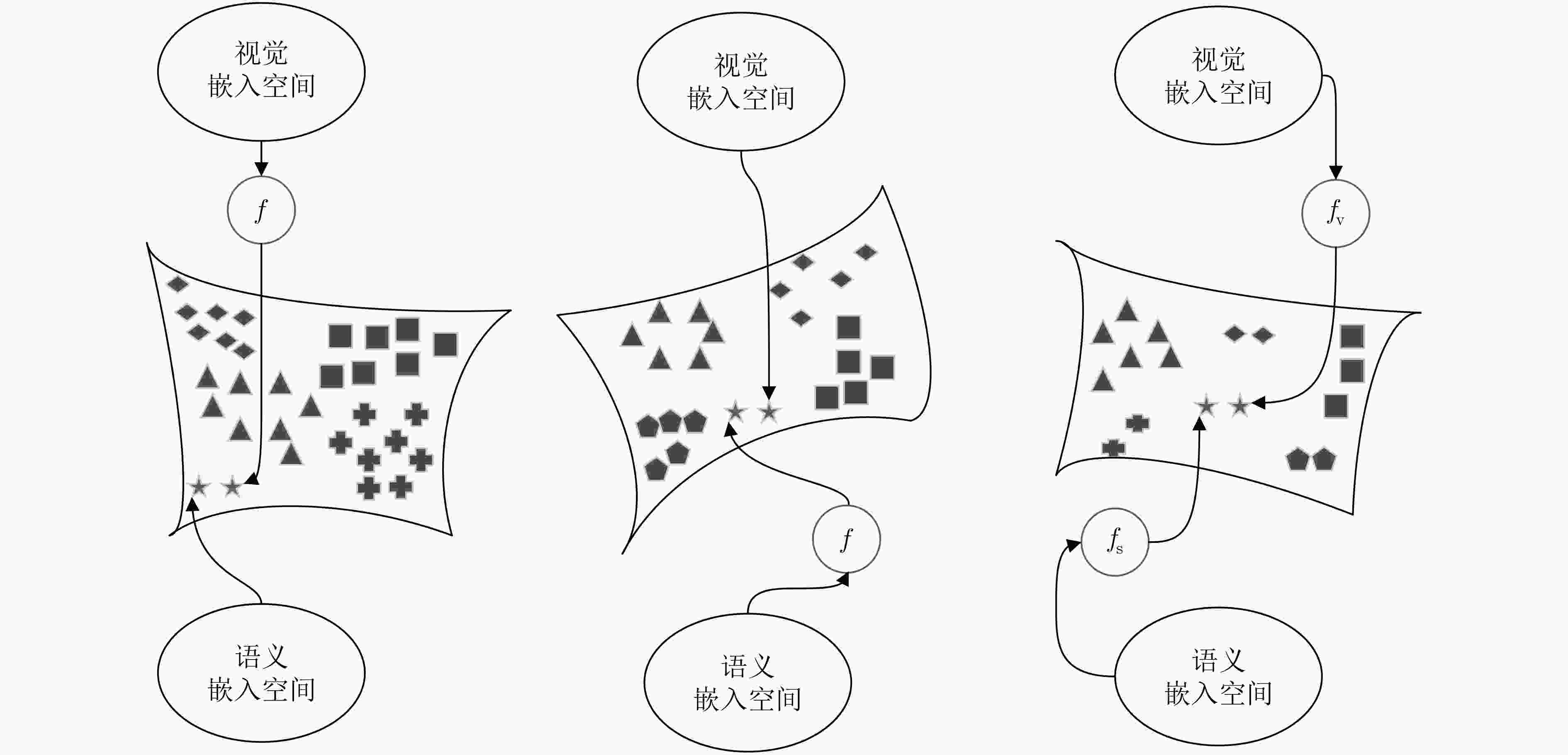

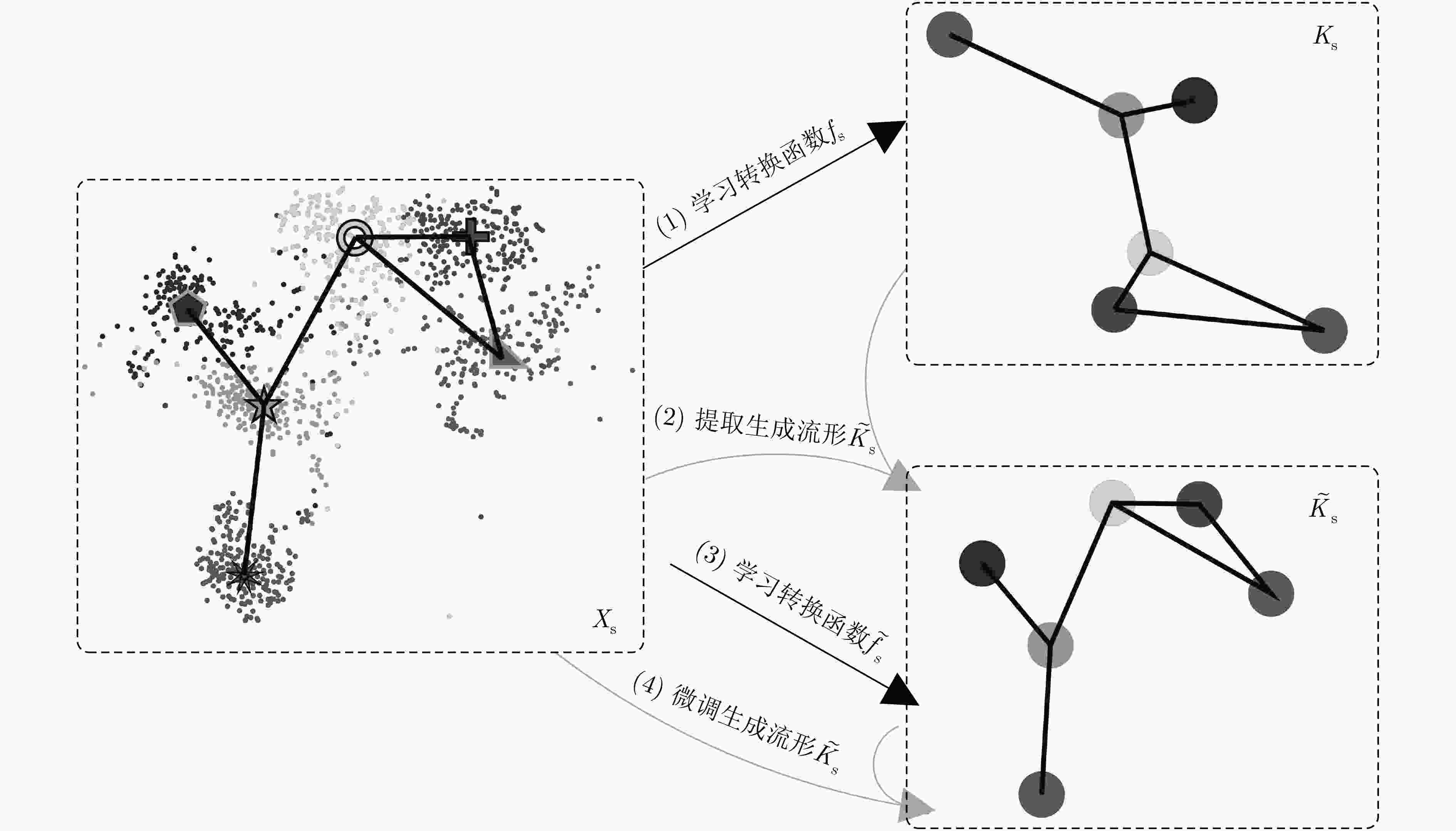

Figure 3. Schematic of the classic inductive zero-shot model [7]

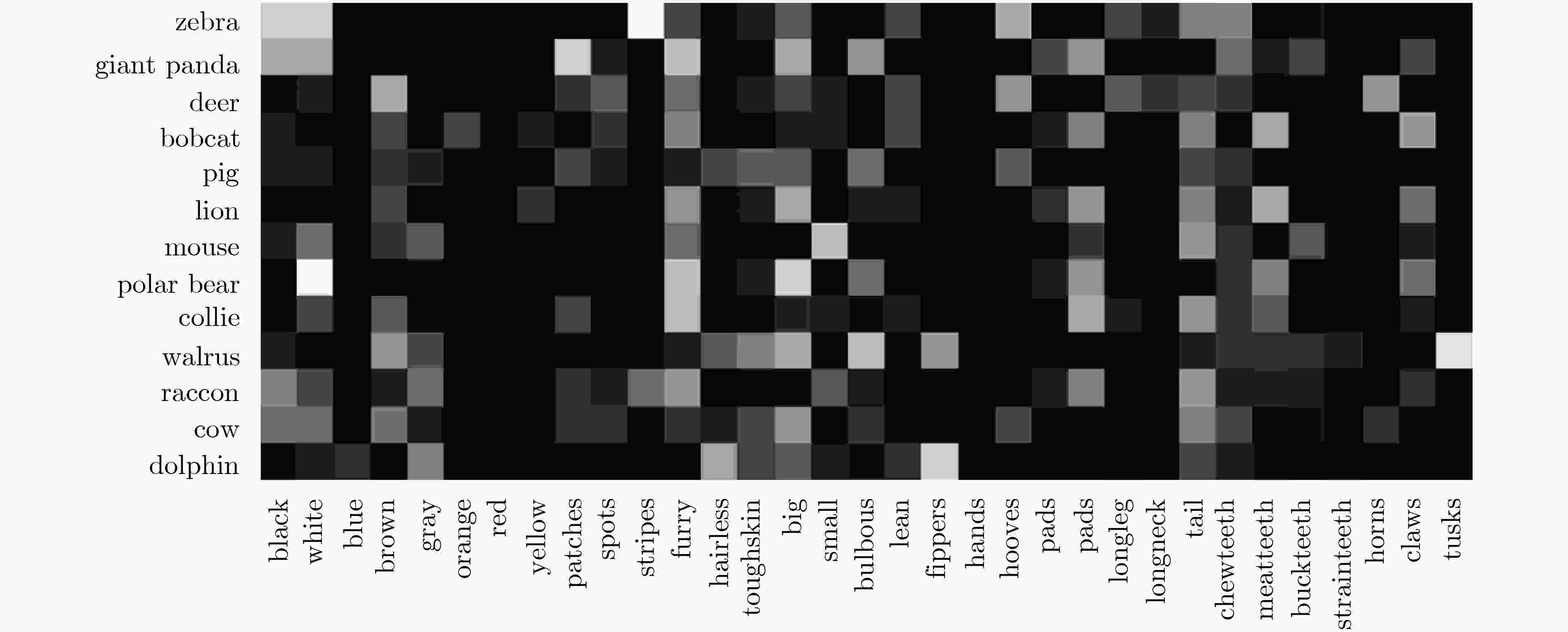

Figure 4. AwA class-attribute relation matrix [7]

Figure 6. Example of domain shift [55]
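A class-attribute matrix such as the one in Figure 4 is what allows a model to name classes it never saw during training: attributes are learned on the training classes and then matched against the attribute signatures of the disjoint test classes. The following is a minimal sketch of that idea, not the method of any particular model reviewed here. The attribute names, the class signatures, and the `scores` vector (a stand-in for the output of attribute predictors trained on seen classes) are all hypothetical, and cosine similarity is just one simple matching rule.

```python
from math import sqrt

# Hypothetical class-attribute matrix (illustrative values, not taken from AwA).
ATTRIBUTES = ["striped", "hooved", "aquatic", "furry"]
SEEN_CLASSES = {      # training classes Y
    "horse": [0, 1, 0, 1],
    "tiger": [1, 0, 0, 1],
    "dolphin": [0, 0, 1, 0],
}
UNSEEN_CLASSES = {    # test classes Z, disjoint from Y
    "zebra": [1, 1, 0, 1],
    "seal": [0, 0, 1, 1],
}

# Zero-shot setting: training and test classes do not overlap.
assert not set(SEEN_CLASSES) & set(UNSEEN_CLASSES)


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def classify_unseen(attribute_scores, class_signatures):
    """Return the class whose attribute signature best matches the scores."""
    return max(
        class_signatures,
        key=lambda name: cosine(attribute_scores, class_signatures[name]),
    )


# Stand-in for per-attribute scores predicted for one test image.
scores = [0.9, 0.8, 0.1, 0.7]
print(classify_unseen(scores, UNSEEN_CLASSES))
```

The classifier only ever compares against `UNSEEN_CLASSES`, which corresponds to the conventional zero-shot setting; searching over the union of both dictionaries would correspond to the generalized setting.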

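Table 1. Comparison of machine learning methods

| Method | Training set $\{\mathcal{X},\mathcal{Y}\}$ | Test set $\{\mathcal{X},\mathcal{Z}\}$ | Relation $R$ between training classes $\mathcal{Y}$ and test classes $\mathcal{Z}$ | Final classifier $C$ |
| --- | --- | --- | --- | --- |
| Unsupervised learning | Large number of unlabeled images | Images of known classes | $\mathcal{Y} = \mathcal{Z}$ | $C:\mathcal{X} \to \mathcal{Y}$ |
| Supervised learning | Large number of labeled images | Images of known classes | $\mathcal{Y} = \mathcal{Z}$ | $C:\mathcal{X} \to \mathcal{Y}$ |
| Semi-supervised learning | A few labeled images and a large number of unlabeled images | Images of known classes | $\mathcal{Y} = \mathcal{Z}$ | $C:\mathcal{X} \to \mathcal{Y}$ |
| Few-shot learning | Very few labeled images and a large number of unlabeled images | Images of known classes | $\mathcal{Y} = \mathcal{Z}$ | $C:\mathcal{X} \to \mathcal{Y}$ |
| Zero-shot learning | Large number of labeled images | Images of unknown classes | $\mathcal{Y} \cap \mathcal{Z} = \varnothing$ | $C:\mathcal{X} \to \mathcal{Z}$ |

Table 2. Usage of deep convolutional neural networks in zero-shot learning

| Network | Number of papers |
| --- | --- |
| VGG | 501 |
| GoogLeNet | 271 |
| ResNet | 397 |

Table 3. Performance comparison of zero-shot learning methods (%)

ZSL columns report conventional zero-shot learning; GZSL columns report generalized zero-shot learning.

| Method | ZSL AwA SS | ZSL AwA PS | ZSL CUB SS | ZSL CUB PS | ZSL SUN SS | ZSL SUN PS | GZSL AwA U→T | GZSL AwA S→T | GZSL AwA H | GZSL CUB U→T | GZSL CUB S→T | GZSL CUB H | GZSL SUN U→T | GZSL SUN S→T | GZSL SUN H |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| IAP | 46.9 | 35.9 | 27.1 | 24.0 | 17.4 | 19.4 | 0.9 | 87.6 | 1.8 | 0.2 | 72.8 | 0.4 | 1.0 | 37.8 | 1.8 |
| DAP | 58.7 | 46.1 | 37.5 | 40.0 | 38.9 | 39.9 | 0.0 | 84.7 | 0.0 | 1.7 | 67.9 | 3.3 | 4.2 | 25.1 | 7.2 |
| DeViSE | 68.6 | 59.7 | 53.2 | 52.0 | 57.5 | 56.5 | 17.1 | 74.7 | 27.8 | 23.8 | 53.0 | 32.8 | 16.9 | 27.4 | 20.9 |
| ConSE | 67.9 | 44.5 | 36.7 | 34.3 | 44.2 | 38.8 | 0.5 | 90.6 | 1.0 | 1.6 | 72.2 | 3.1 | 6.8 | 39.9 | 11.6 |
| SJE | 69.5 | 61.9 | 55.3 | 53.9 | 57.1 | 53.7 | 8.0 | 73.9 | 14.4 | 23.5 | 59.2 | 33.6 | 14.7 | 30.5 | 19.8 |
| SAE | 80.7 | 54.1 | 33.4 | 33.3 | 42.4 | 40.3 | 1.1 | 82.2 | 2.2 | 7.8 | 54.0 | 13.6 | 8.8 | 18.0 | 11.8 |
| SYNC | 71.2 | 46.6 | 54.1 | 55.6 | 59.1 | 56.3 | 10.0 | 90.5 | 18.0 | 11.5 | 70.9 | 19.8 | 7.9 | 43.3 | 13.4 |
| LDF | 83.4 | – | 70.4 | – | – | – | – | – | – | – | – | – | – | – | – |
| SP-AEN | – | 58.5 | – | 55.4 | – | 59.2 | 23.3 | 90.9 | 37.1 | 34.7 | 70.6 | 46.6 | 24.9 | 38.6 | 30.3 |
| QFSL | 84.8 | 79.7 | 69.7 | 72.1 | 61.7 | 58.3 | 66.2 | 93.1 | 77.4 | 71.5 | 74.9 | 73.2 | 51.3 | 31.2 | 38.8 |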
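The H columns of Table 3 can be checked from the U→T and S→T columns. The sketch below assumes that H denotes the harmonic mean of the unseen-class accuracy (U→T) and the seen-class accuracy (S→T), which is the usual convention in generalized zero-shot evaluation and is consistent with the reported values; the function name `harmonic_mean` is an illustrative choice.

```python
def harmonic_mean(unseen_acc, seen_acc):
    """Harmonic mean of unseen-class and seen-class accuracy (both in %)."""
    total = unseen_acc + seen_acc
    if total == 0:
        return 0.0
    return 2 * unseen_acc * seen_acc / total


# (U→T, S→T) pairs on AwA, copied from Table 3.
awa = {
    "IAP": (0.9, 87.6),
    "DeViSE": (17.1, 74.7),
    "QFSL": (66.2, 93.1),
}

for name, (unseen, seen) in awa.items():
    print(f"{name}: H = {harmonic_mean(unseen, seen):.1f}")
# IAP: H = 1.8
# DeViSE: H = 27.8
# QFSL: H = 77.4
```

Because the harmonic mean is dominated by the smaller of its two arguments, a method such as IAP that scores 87.6% on seen classes but 0.9% on unseen classes still receives an H of only 1.8.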

[1] SUN Yi, CHEN Yuheng, WANG Xiaogang, et al. Deep learning face representation by joint identification-verification[C]. The 27th International Conference on Neural Information Processing Systems, Montreal, Canada, 2014: 1988–1996.

[2] LIU Chenxi, ZOPH B, NEUMANN M, et al. Progressive neural architecture search[C]. The 15th European Conference on Computer Vision, Munich, Germany, 2018: 19–35.

[3] LEDIG C, THEIS L, HUSZÁR F, et al. Photo-realistic single image super-resolution using a generative adversarial network[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 105–114.

[4] BIEDERMAN I. Recognition-by-components: A theory of human image understanding[J]. Psychological Review, 1987, 94(2): 115–147. doi: 10.1037/0033-295X.94.2.115

[5] LAROCHELLE H, ERHAN D, and BENGIO Y. Zero-data learning of new tasks[C]. The 23rd National Conference on Artificial Intelligence, Chicago, USA, 2008: 646–651.

[6] PALATUCCI M, POMERLEAU D, HINTON G, et al. Zero-shot learning with semantic output codes[C]. The 22nd International Conference on Neural Information Processing Systems, Vancouver, Canada, 2009: 1410–1418.

[7] LAMPERT C H, NICKISCH H, and HARMELING S. Learning to detect unseen object classes by between-class attribute transfer[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Miami, USA, 2009: 951–958. doi: 10.1109/CVPR.2009.5206594

[8] HARRINGTON P. Machine Learning in Action[M]. Greenwich, CT, USA: Manning Publications Co, 2012: 5–14.

[9] ZHOU Dengyong, BOUSQUET O, LAL T N, et al. Learning with local and global consistency[C]. The 16th International Conference on Neural Information Processing Systems, Whistler, Canada, 2003: 321–328.

[10] LIU Jianwei, LIU Yuan, and LUO Xionglin. Semi-supervised learning methods[J]. Chinese Journal of Computers, 2015, 38(8): 1592–1617. doi: 10.11897/SP.J.1016.2015.01592

[11] SUNG F, YANG Yongxin, ZHANG Li, et al. Learning to compare: Relation network for few-shot learning[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1199–1208.

[12] FU Yanwei, XIANG Tao, JIANG Yugang, et al. Recent advances in zero-shot recognition: Toward data-efficient understanding of visual content[J]. IEEE Signal Processing Magazine, 2018, 35(1): 112–125. doi: 10.1109/MSP.2017.2763441

[13] XIAN Yongqin, LAMPERT C H, SCHIELE B, et al. Zero-shot learning—A comprehensive evaluation of the good, the bad and the ugly[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019, 41(9): 2251–2265. doi: 10.1109/TPAMI.2018.2857768

[14] WANG Wenlin, PU Yunchen, VERMA V K, et al. Zero-shot learning via class-conditioned deep generative models[C]. The 32nd AAAI Conference on Artificial Intelligence, New Orleans, USA, 2018: 4211–4218.

[15] FU Yanwei, HOSPEDALES T M, XIANG Tao, et al. Attribute learning for understanding unstructured social activity[C]. The 12th European Conference on Computer Vision, Florence, Italy, 2012: 530–543.

[16] ANTOL S, ZITNICK C L, and PARIKH D. Zero-shot learning via visual abstraction[C]. The 13th European Conference on Computer Vision, Zurich, Switzerland, 2014: 401–416.

[17] ROBYNS P, MARIN E, LAMOTTE W, et al. Physical-layer fingerprinting of LoRa devices using supervised and zero-shot learning[C]. The 10th ACM Conference on Security and Privacy in Wireless and Mobile Networks, Boston, USA, 2017: 58–63. doi: 10.1145/3098243.3098267

[18] YANG Yang, LUO Yadan, CHEN Weilun, et al. Zero-shot hashing via transferring supervised knowledge[C]. The 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 2016: 1286–1295. doi: 10.1145/2964284.2964319

[19] PACHORI S, DESHPANDE A, and RAMAN S. Hashing in the zero shot framework with domain adaptation[J]. Neurocomputing, 2018, 275: 2137–2149. doi: 10.1016/j.neucom.2017.10.061

[20] LIU Jingen, KUIPERS B, and SAVARESE S. Recognizing human actions by attributes[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Colorado, USA, 2011: 3337–3344.

[21] FU Yanwei, HOSPEDALES T M, XIANG Tao, et al. Learning multimodal latent attributes[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(2): 303–316. doi: 10.1109/TPAMI.2013.128

[22] JAIN M, VAN GEMERT J C, MENSINK T, et al. Objects2action: Classifying and localizing actions without any video example[C]. The IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4588–4596.

[23] XU Baohan, FU Yanwei, JIANG Yugang, et al. Video emotion recognition with transferred deep feature encodings[C]. The 2016 ACM on International Conference on Multimedia Retrieval, New York, USA, 2016: 15–22.

[24] JOHNSON M, SCHUSTER M, LE Q V, et al. Google’s multilingual neural machine translation system: Enabling zero-shot translation[J]. Transactions of the Association for Computational Linguistics, 2017, 5: 339–351. doi: 10.1162/tacl_a_00065

[25] PRATEEK VEERANNA S, JINSEOK N, ENELDO L M, et al. Using semantic similarity for multi-label zero-shot classification of text documents[C]. The 23rd European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 2016: 423–428.

[26] DALAL N and TRIGGS B. Histograms of oriented gradients for human detection[C]. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, USA, 2005: 886–893.

[27] LOWE D G. Distinctive image features from scale-invariant keypoints[J]. International Journal of Computer Vision, 2004, 60(2): 91–110. doi: 10.1023/B:VISI.0000029664.99615.94

[28] BAY H, ESS A, TUYTELAARS T, et al. Speeded-up robust features (SURF)[J]. Computer Vision and Image Understanding, 2008, 110(3): 346–359. doi: 10.1016/j.cviu.2007.09.014

[29] ROMERA-PAREDES B and TORR P H S. An embarrassingly simple approach to zero-shot learning[C]. The 32nd International Conference on Machine Learning, Lille, France, 2015: 2152–2161.

[30] ZHANG Li, XIANG Tao, and GONG Shaogang. Learning a deep embedding model for zero-shot learning[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 3010–3019.

[31] LI Yan, ZHANG Junge, ZHANG Jianguo, et al. Discriminative learning of latent features for zero-shot recognition[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 7463–7471.

[32] WANG Xiaolong, YE Yufei, and GUPTA A. Zero-shot recognition via semantic embeddings and knowledge graphs[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 6857–6866.

[33] WAH C, BRANSON S, WELINDER P, et al. The Caltech-UCSD Birds-200-2011 dataset[R]. Technical Report CNS-TR-2010-001, 2011.

[34] MIKOLOV T, SUTSKEVER I, CHEN Kai, et al. Distributed representations of words and phrases and their compositionality[C]. The 26th International Conference on Neural Information Processing Systems, Lake Tahoe, USA, 2013: 3111–3119.

[35] LEE C, FANG Wei, YEH C K, et al. Multi-label zero-shot learning with structured knowledge graphs[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1576–1585.

[36] JETLEY S, ROMERA-PAREDES B, JAYASUMANA S, et al. Prototypical priors: From improving classification to zero-shot learning[J]. arXiv: 1512.01192, 2015.

[37] KARESSLI N, AKATA Z, SCHIELE B, et al. Gaze embeddings for zero-shot image classification[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 6412–6421.

[38] REED S, AKATA Z, LEE H, et al. Learning deep representations of fine-grained visual descriptions[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 49–58.

[39] ELHOSEINY M, ZHU Yizhe, ZHANG Han, et al. Link the head to the "beak": Zero shot learning from noisy text description at part precision[C]. 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 6288–6297. doi: 10.1109/CVPR.2017.666

[40] LAZARIDOU A, DINU G, and BARONI M. Hubness and pollution: Delving into cross-space mapping for zero-shot learning[C]. The 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 2015: 270–280.

[41] WANG Xiaoyang and JI Qiang. A unified probabilistic approach modeling relationships between attributes and objects[C]. The IEEE International Conference on Computer Vision, Sydney, Australia, 2013: 2120–2127.

[42] AKATA Z, PERRONNIN F, HARCHAOUI Z, et al. Label-embedding for attribute-based classification[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Portland, USA, 2013: 819–826.

[43] JURIE F, BUCHER M, and HERBIN S. Generating visual representations for zero-shot classification[C]. The IEEE International Conference on Computer Vision Workshops, Venice, Italy, 2017: 2666–2673.

[44] FARHADI A, ENDRES I, HOIEM D, et al. Describing objects by their attributes[C]. 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, USA, 2009: 1778–1785. doi: 10.1109/CVPR.2009.5206772

[45] PATTERSON G, XU Chen, SU Hang, et al. The SUN attribute database: Beyond categories for deeper scene understanding[J]. International Journal of Computer Vision, 2014, 108(1/2): 59–81.

[46] XIAO Jianxiong, HAYS J, EHINGER K A, et al. SUN database: Large-scale scene recognition from abbey to zoo[C]. 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, USA, 2010: 3485–3492. doi: 10.1109/CVPR.2010.5539970

[47] NILSBACK M E and ZISSERMAN A. Delving deeper into the whorl of flower segmentation[J]. Image and Vision Computing, 2010, 28(6): 1049–1062. doi: 10.1016/j.imavis.2009.10.001

[48] NILSBACK M E and ZISSERMAN A. A visual vocabulary for flower classification[C]. 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, USA, 2006: 1447–1454. doi: 10.1109/CVPR.2006.42

[49] NILSBACK M E and ZISSERMAN A. Automated flower classification over a large number of classes[C]. The 6th Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 2008: 722–729. doi: 10.1109/ICVGIP.2008.47

[50] KHOSLA A, JAYADEVAPRAKASH N, YAO Bangpeng, et al. Novel dataset for fine-grained image categorization: Stanford dogs[C]. CVPR Workshop on Fine-Grained Visual Categorization, 2011.

[51] DENG Jia, DONG Wei, SOCHER R, et al. ImageNet: A large-scale hierarchical image database[C]. 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, USA, 2009: 248–255.

[52] CHAO Weilun, CHANGPINYO S, GONG Boqing, et al. An empirical study and analysis of generalized zero-shot learning for object recognition in the wild[C]. The 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 52–68.

[53] SONG Jie, SHEN Chengchao, YANG Yezhou, et al. Transductive unbiased embedding for zero-shot learning[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1024–1033.

[54] LI Yanan. Research on key technologies for zero-shot learning[D]. [Ph.D. dissertation], Zhejiang University, 2018: 40–43.

[55] FU Yanwei, HOSPEDALES T M, XIANG Tao, et al. Transductive multi-view zero-shot learning[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(11): 2332–2345. doi: 10.1109/TPAMI.2015.2408354

[56] KODIROV E, XIANG Tao, and GONG Shaogang. Semantic autoencoder for zero-shot learning[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4447–4456.

[57] STOCK M, PAHIKKALA T, AIROLA A, et al. A comparative study of pairwise learning methods based on kernel ridge regression[J]. Neural Computation, 2018, 30(8): 2245–2283. doi: 10.1162/neco_a_01096

[58] ANNADANI Y and BISWAS S. Preserving semantic relations for zero-shot learning[J]. arXiv: 1803.03049, 2018.

[59] LI Yanan, WANG Donghui, HU Huanhang, et al. Zero-shot recognition using dual visual-semantic mapping paths[C]. The IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 5207–5215.

[60] CHEN Long, ZHANG Hanwang, XIAO Jun, et al. Zero-shot visual recognition using semantics-preserving adversarial embedding networks[C]. The IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, USA, 2018: 1043–1052.
