Multichannel MI-EEG Feature Decoding Based on Deep Learning


摘要:

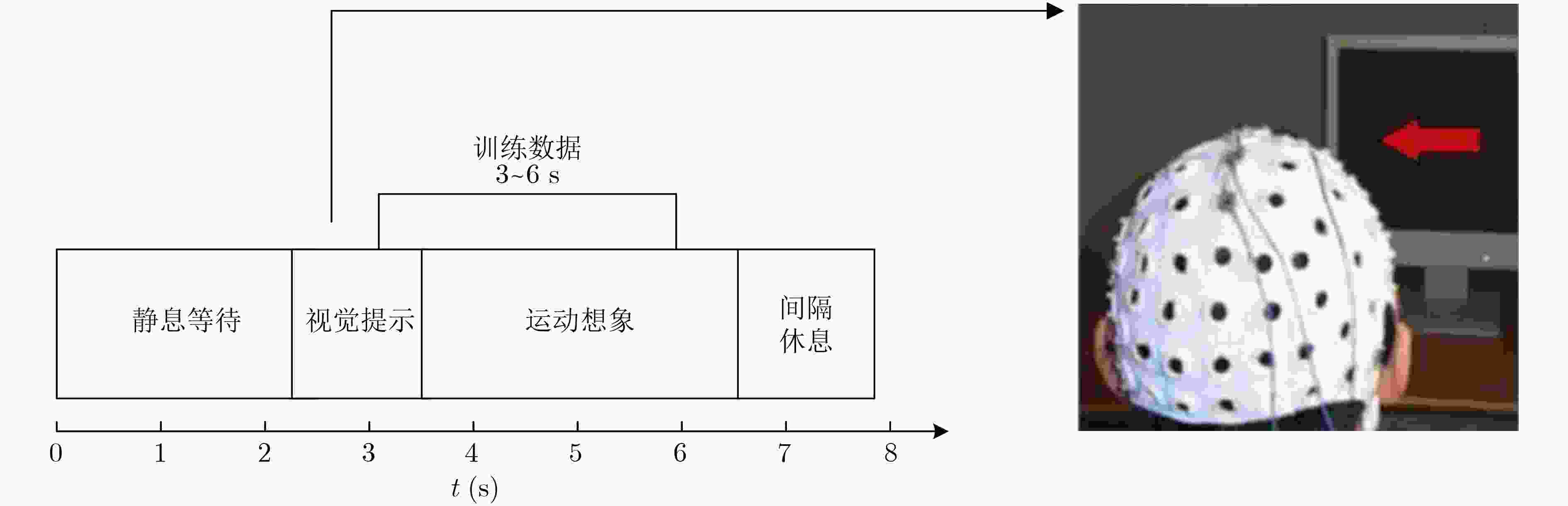

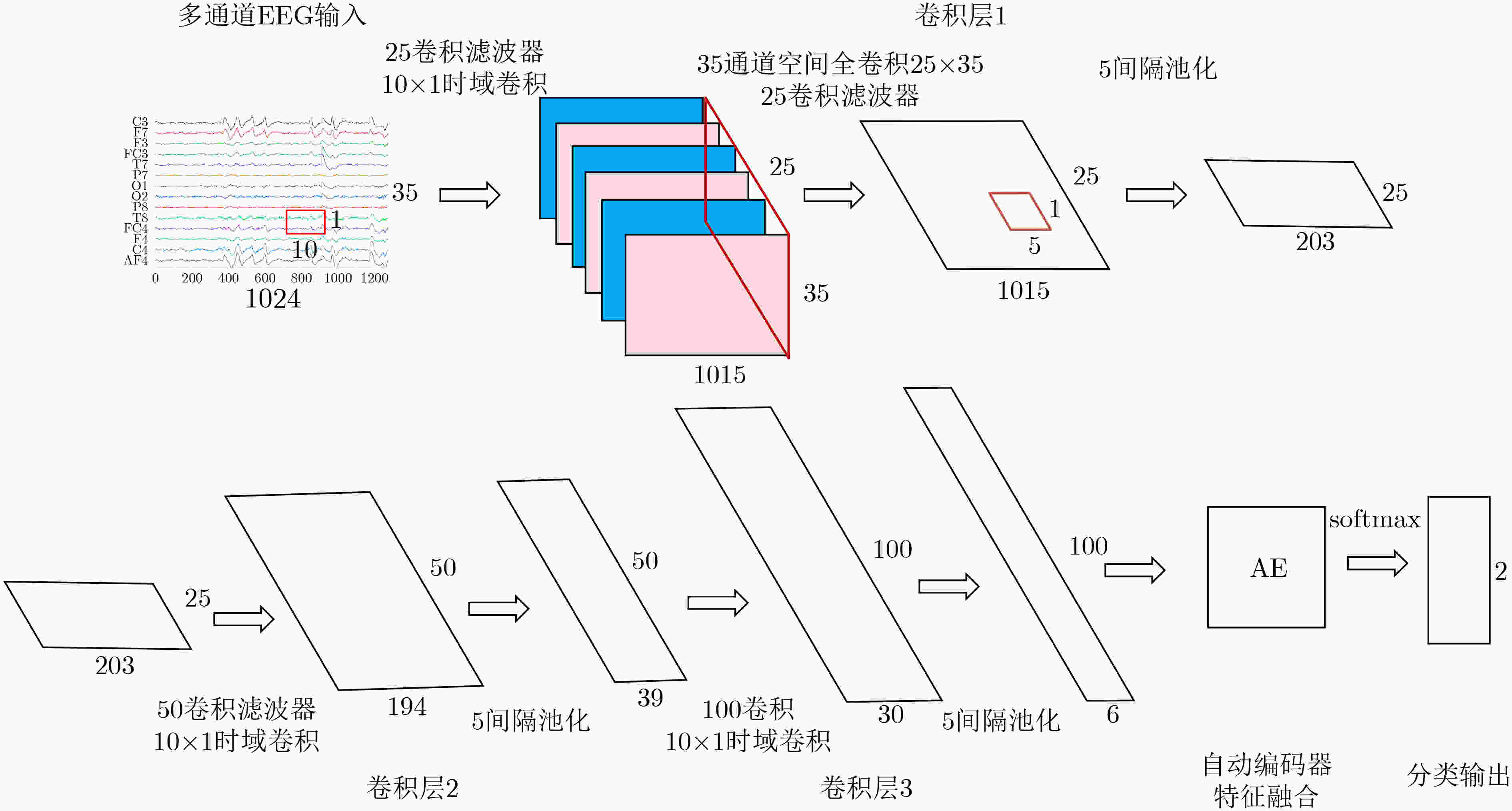

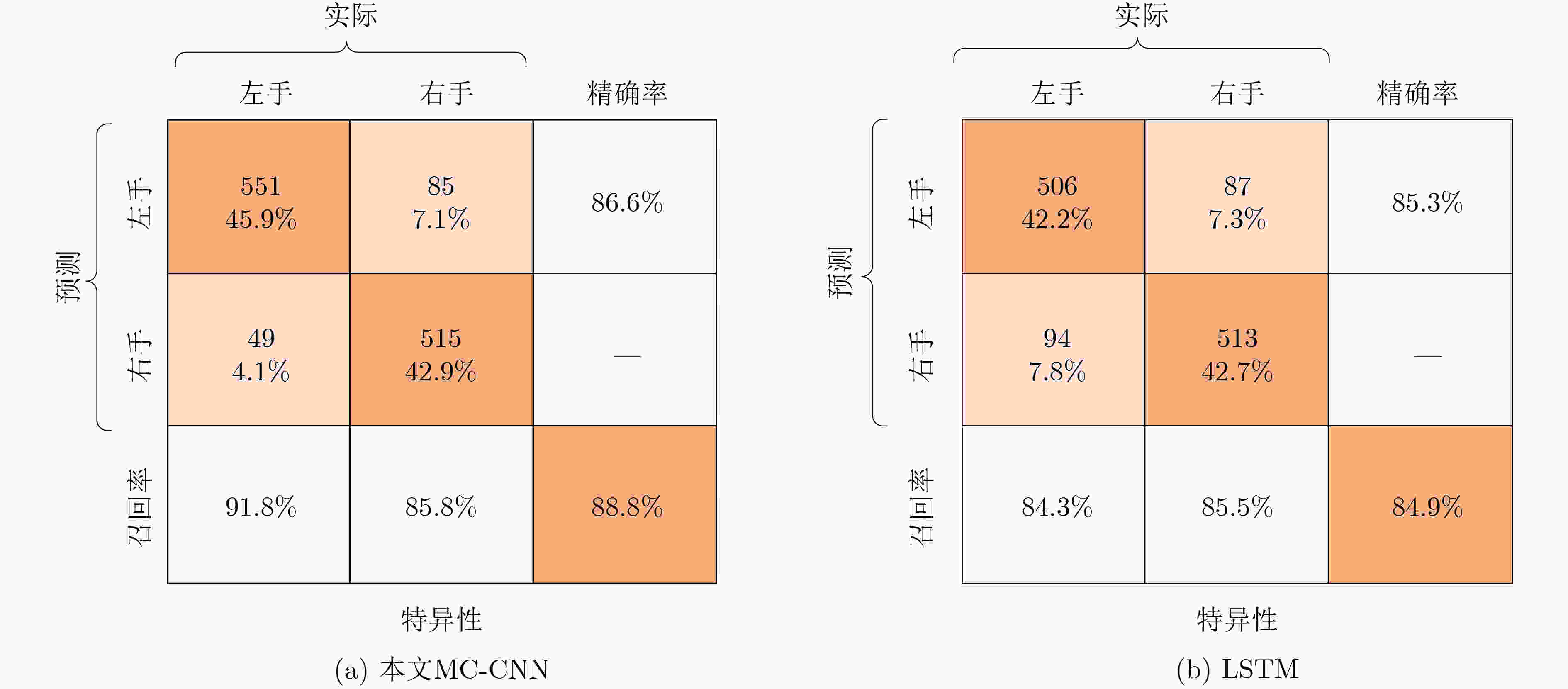

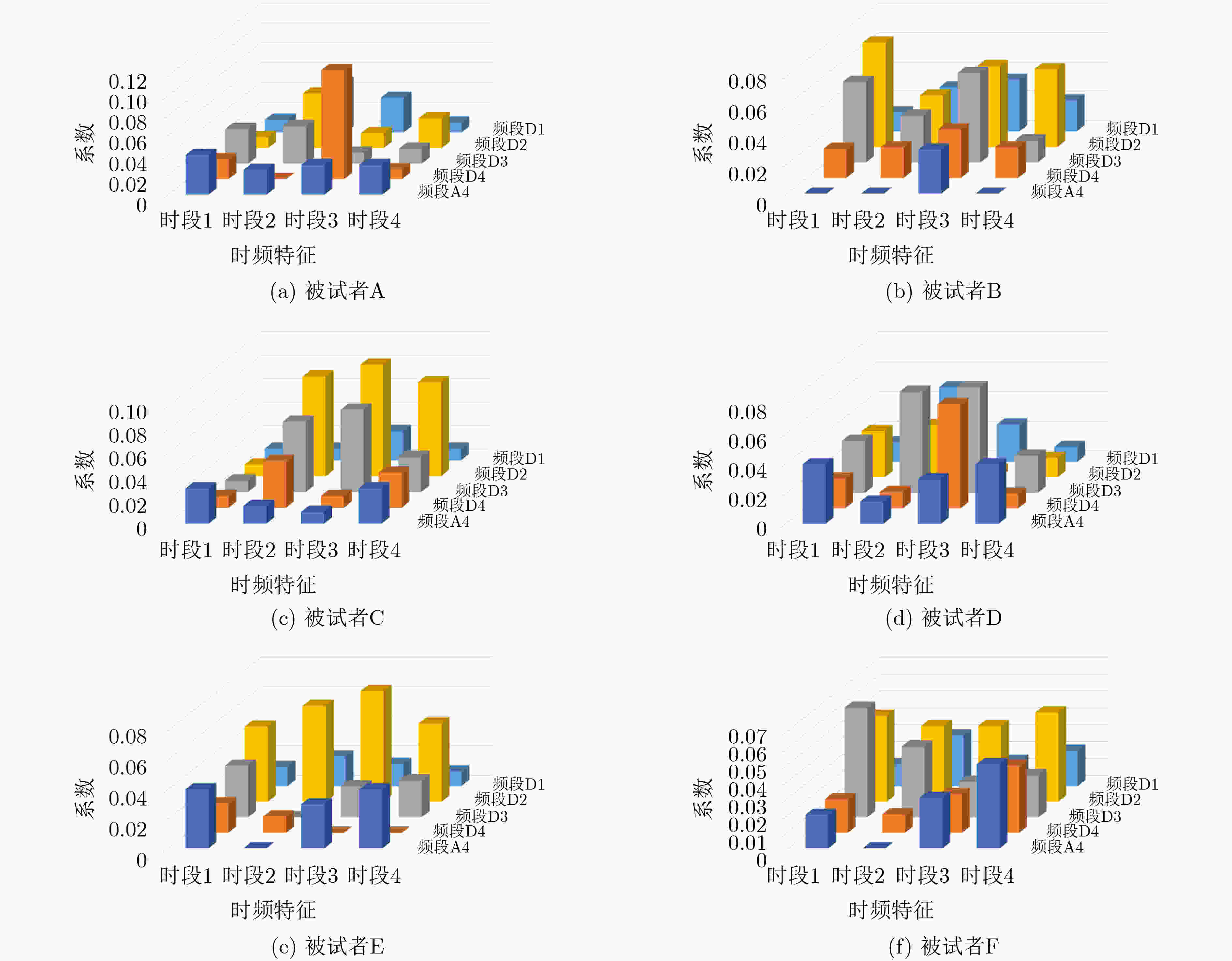

脑电(EEG)是一种在临床上广泛应用的脑信息记录形式,其反映了脑活动中神经细胞放电产生的电场变化情况。脑电广泛应用于脑-机接口(BCI)系统。然而,研究表明脑电信息空间分辨率较低,这种缺陷可以综合分析多通道电极的脑电数据来弥补。为了从多通道数据中高效地获取到与运动想象任务相关的辨识特征,该文提出一种针对多通道脑电信息的卷积神经网络(MC-CNN)解码方法,先对预先选取好的多通道数据预处理后送入2维卷积神经网络(CNN)进行时间-空间特征提取,然后利用自动编码(AE)器把这些特征映射为具有辨识度的特征子空间,最后指导识别网络进行分类识别。实验结果表明,该文所提多通道空间特征提取和构建方法在运动想象脑电任务识别性能和效率上都具有较大优势。

Abstract: ElectroEncephaloGraphy (EEG) measures the electrical fields produced by the active brain. It is a brain mapping and neuroimaging technique widely used both inside and outside the clinical domain, including in Brain–Computer Interfaces (BCI). However, research indicates that EEG has low spatial resolution, a deficiency that can be compensated for by jointly analyzing data from multiple channels. To efficiently obtain discriminative subspace features from multi-channel EEG information, a Multi-Channel Convolutional Neural Network (MC-CNN) model is proposed for MI-EEG decoding. First, the input data are pre-processed from pre-selected multi-channel signals, and temporal-spatial features are extracted with a novel 2D Convolutional Neural Network (CNN). Then, an Auto-Encoder (AE) maps these features into a discriminative feature subspace, which guides the identification network in classification. The experimental results show that the proposed multi-channel spatial feature extraction and construction method offers advantages in both recognition performance and efficiency on motor imagery EEG tasks.
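As a rough check on the architecture summarized above, the layer sizes listed in Table 1 can be reproduced with simple shape arithmetic. The sketch below assumes unpadded ("valid") convolutions with stride 1 and non-overlapping max pooling, neither of which is stated explicitly on this page. It tracks only the time and electrode axes and ignores the feature-map axis. The helper names (`conv_valid`, `pool`, `trace_shapes`) are illustrative and do not come from the paper.

```python
def conv_valid(size, kernel, stride=1):
    """Output length of an unpadded convolution along one axis (assumed)."""
    return (size - kernel) // stride + 1


def pool(size, window):
    """Output length of non-overlapping max pooling along one axis (assumed)."""
    return size // window


def trace_shapes(time_len=1024, n_channels=35):
    """Follow the (time, electrode) shape through the layers of Table 1."""
    shapes = []
    t, c = time_len, n_channels
    shapes.append(("input", (t, c)))

    # Convolutional layer 1: filter spans 10 time samples and 1 electrode.
    t, c = conv_valid(t, 10), conv_valid(c, 1)
    shapes.append(("conv1", (t, c)))

    # Max pooling 1: window of 1 along time and 5 along electrodes.
    t, c = pool(t, 1), pool(c, 5)
    shapes.append(("pool1", (t, c)))

    # Convolutional layer 2: filter spans 5 time samples and 2 columns.
    t, c = conv_valid(t, 5), conv_valid(c, 2)
    shapes.append(("conv2", (t, c)))

    # Max pooling 2: window of 10 along time and 1 along the other axis.
    t, c = pool(t, 10), pool(c, 1)
    shapes.append(("pool2", (t, c)))

    # Flattened vector passed to the fully connected layer.
    shapes.append(("flatten", (t * c,)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_shapes():
        print(f"{name:8s} {shape}")
```

Under these assumptions the time axis shrinks from 1024 to 1015, then 1011, then 101, and the electrode axis from 35 to 7, then 6, giving the 606-element vector that Table 1 lists for the fully connected layer.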


Key words:

- Decoding of MI-EEG

- Multi-channel feature fusion

- Subspace features


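Table 1 Changes in data shape after each convolutional and neural-network layer

| Layer | Filter | Number of filters | Input | Output | Number of parameters |
|---|---|---|---|---|---|
| Convolutional layer 1 | (10, 1, 1) | 50 | (1024, 35) | (1015, 35, 25) | 500 |
| Batch normalization | | | (1015, 35, 25) | (1015, 35, 25) | |
| Max pooling | (1, 5, 35) | – | (1015, 35, 25) | (1015, 7, 1) | |
| Convolutional layer 2 | (5, 2, 1) | 100 | (1015, 7, 1) | (1011, 6, 50) | 1000 |
| Batch normalization | | | (1011, 6, 50) | (1011, 6, 50) | |
| Max pooling | (10, 1, 50) | – | (1011, 6, 50) | (101, 6, 1) | |
| Fully connected layer | | | (101, 6, 1) | 606 | |

Table 2 MI-EEG experimental data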

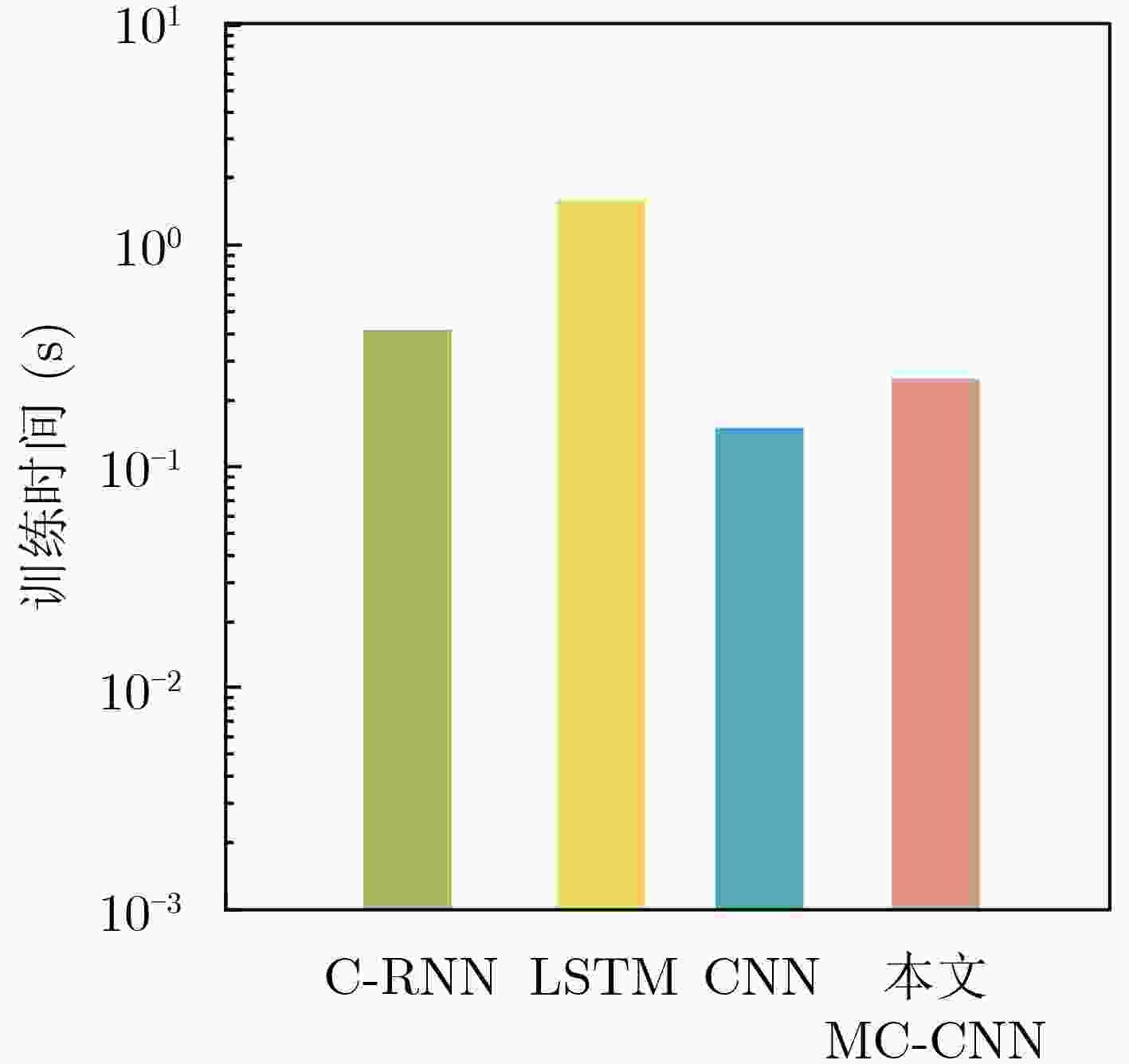

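| Dataset | Collected dataset (S1) | Public dataset (S2) | Public dataset (S3) |
|---|---|---|---|
| Number of subjects | 6 | 4 | 4 |
| Number of channels | 35 | 35 | 35 |
| Trials per subject | 100 | 300 | 280 |

Table 3 Accuracy for different subjects and methods (collected data D1)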

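| Method | Subject A | Subject B | Subject C | Subject D | Subject E | Subject F | Mean |
|---|---|---|---|---|---|---|---|
| CNN | 0.826 | 0.844 | 0.852 | 0.862 | 0.871 | 0.819 | 0.8457 |
| LSTM | 0.835 | 0.871 | 0.872 | 0.852 | 0.858 | 0.848 | 0.8560 |
| MC-CNN (proposed) | 0.851 | 0.879 | 0.922 | 0.863 | 0.900 | 0.868 | 0.8805 |

Table 4 Accuracy for different subjects and methods (public data D2, D3)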

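| Method | S2: A | S2: B | S2: C | S2: D | S3: E | S3: F | S3: G | S3: H |
|---|---|---|---|---|---|---|---|---|
| CNN | 0.814 | 0.825 | 0.829 | 0.874 | 0.803 | 0.825 | 0.843 | 0.804 |
| LSTM | 0.882 | 0.829 | 0.828 | 0.858 | 0.886 | 0.832 | 0.868 | 0.820 |
| MC-CNN (proposed) | 0.911 | 0.921 | 0.869 | 0.853 | 0.870 | 0.851 | 0.891 | 0.860 |

Table 5 Accuracy comparison of different multi-channel MI-EEG decoding methods (%)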

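| Method | Worst | Best | Mean accuracy |
|---|---|---|---|
| RC-SFS | 80.4 | 92.3 | 81.96 |
| RC-SBS | 60.7 | 91.7 | 79.52 |
| SVM-GA | 67.9 | 94.8 | 83.86 |
| RC-GA | 75.1 | 98.5 | 88.20 |
| MC-CNN | 86.8 | 91.9 | 89.15 |

Table 6 Distribution of the influence of different channels on the recognition results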

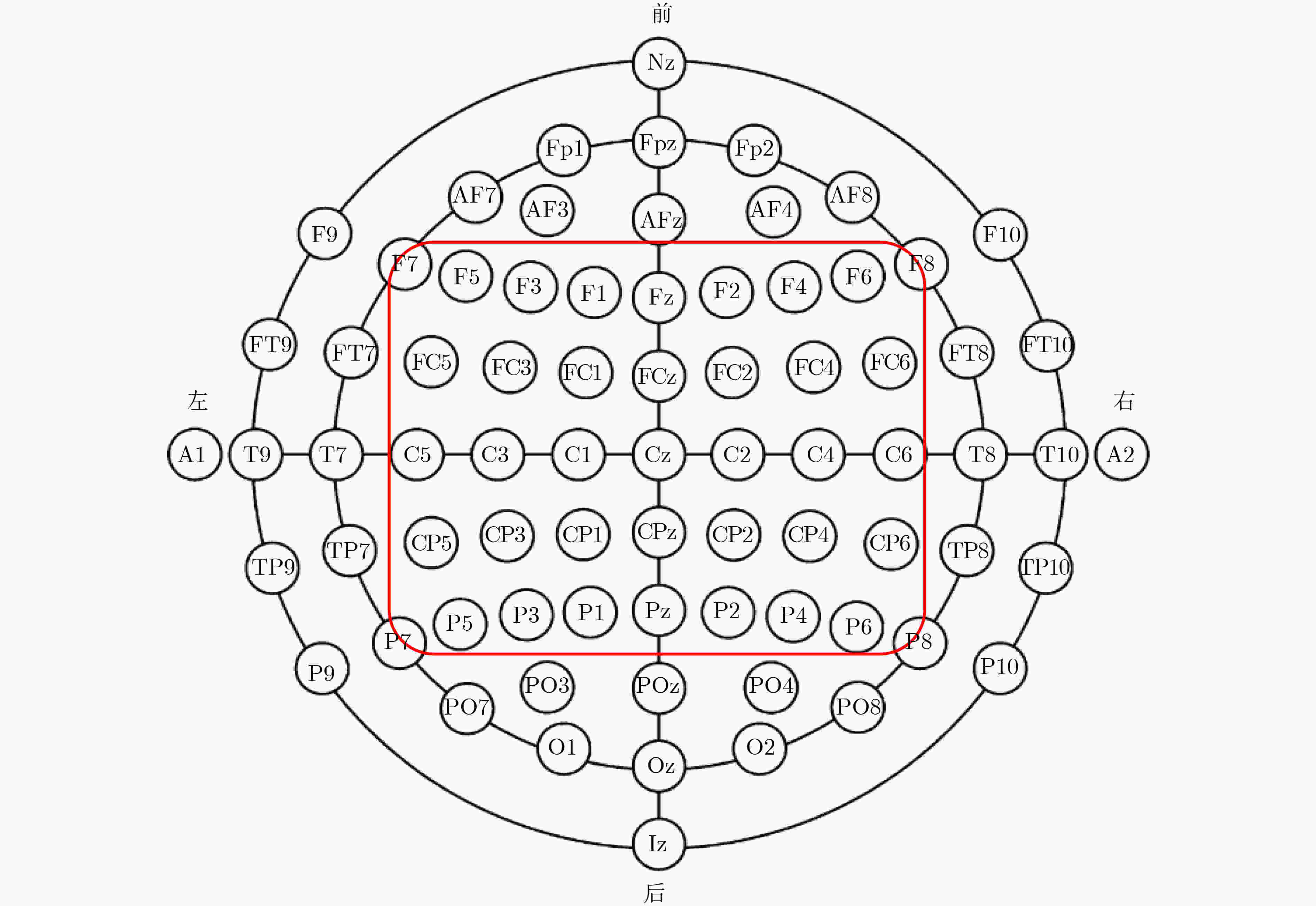

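| No. | Degree of influence | Channels |
|---|---|---|
| 1 | Very strong | C3, C4, Cz |
| 2 | Strong | CP3, FC3, FC4, FC5, CP4, F5 |
| 3 | Moderate | F3, CP5, FC6 |
| 4 | Weak | P3, P4, F4, F6, CP6 |
| 5 | Very weak | C5, C1, C2, C6, FC1, F1, F2, P1, P2, P5, P6, FC2, CP1, CP2, FCz, CPz, Pz, Fz |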
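One way to use the ranking in Table 6 is to keep only the channels at or above a chosen influence level. The sketch below is an illustration, not the paper's selection procedure: the dictionary transcribes the table, while the names `INFLUENCE_TIERS` and `channels_up_to` are hypothetical.

```python
# Channel groups transcribed from Table 6 (rank 1 = strongest influence).
INFLUENCE_TIERS = {
    1: ["C3", "C4", "Cz"],
    2: ["CP3", "FC3", "FC4", "FC5", "CP4", "F5"],
    3: ["F3", "CP5", "FC6"],
    4: ["P3", "P4", "F4", "F6", "CP6"],
    5: ["C5", "C1", "C2", "C6", "FC1", "F1", "F2", "P1", "P2", "P5",
        "P6", "FC2", "CP1", "CP2", "FCz", "CPz", "Pz", "Fz"],
}


def channels_up_to(max_rank):
    """Return the channels whose rank is at most max_rank, strongest first."""
    selected = []
    for rank in sorted(INFLUENCE_TIERS):
        if rank <= max_rank:
            selected.extend(INFLUENCE_TIERS[rank])
    return selected


if __name__ == "__main__":
    # Keep only the two most influential tiers.
    print(channels_up_to(2))
    # Sanity check: how many channels the five tiers cover in total.
    print(len(channels_up_to(5)))
```

The five tiers together cover 35 distinct channels, which matches the channel count given in Table 2.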

