Speaker Recognition Based on Multimodal Generative Adversarial Nets with Triplet-loss


摘要:

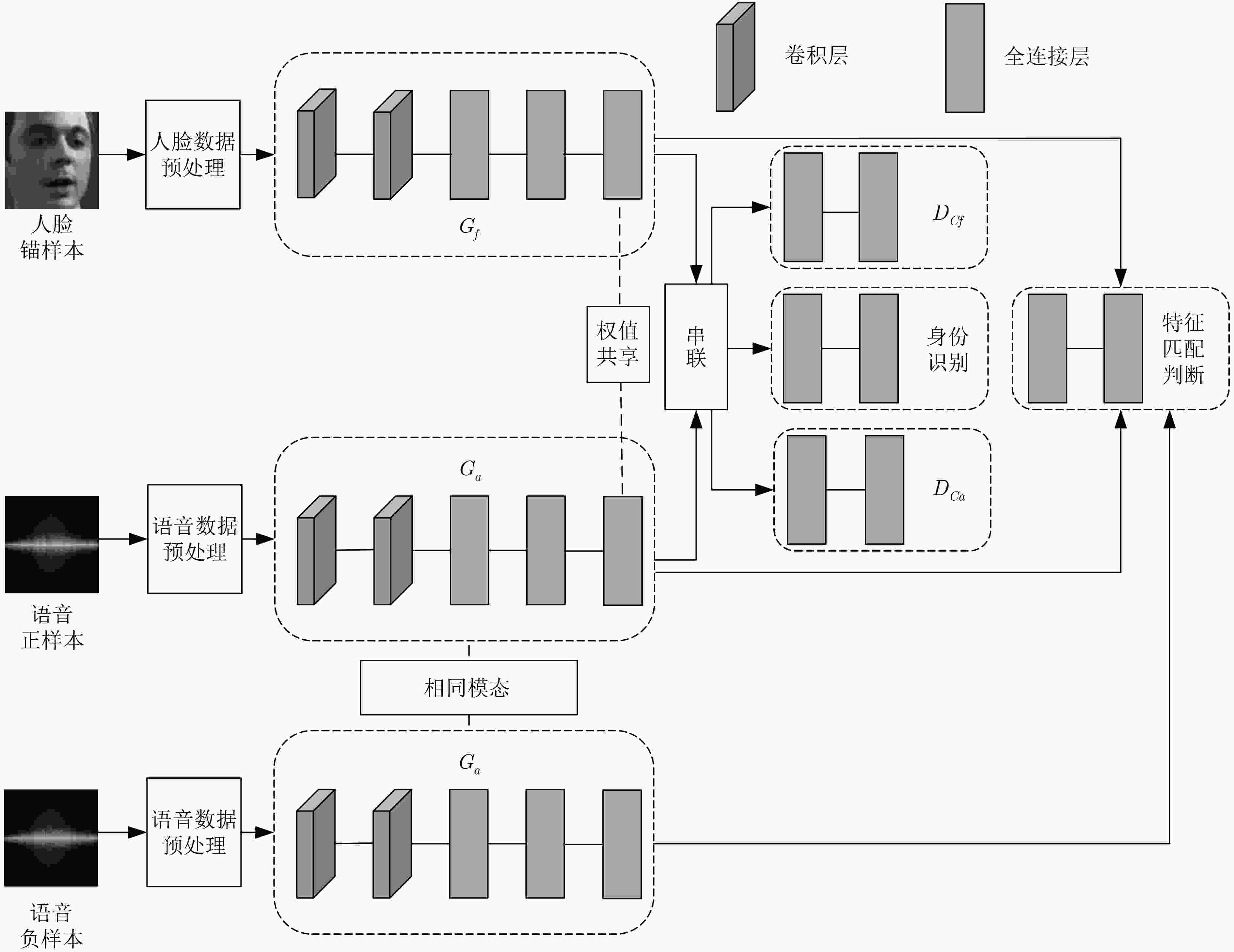

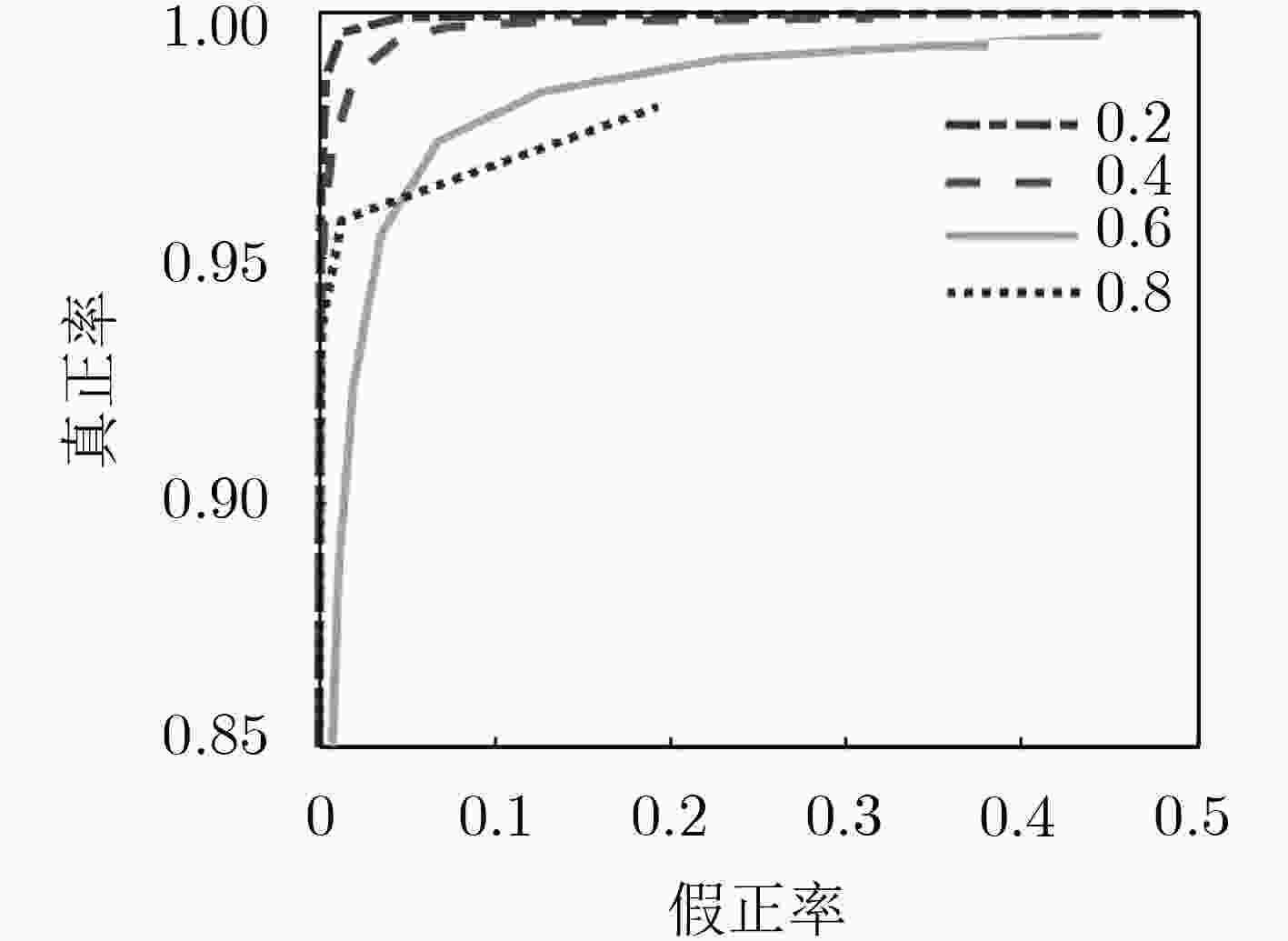

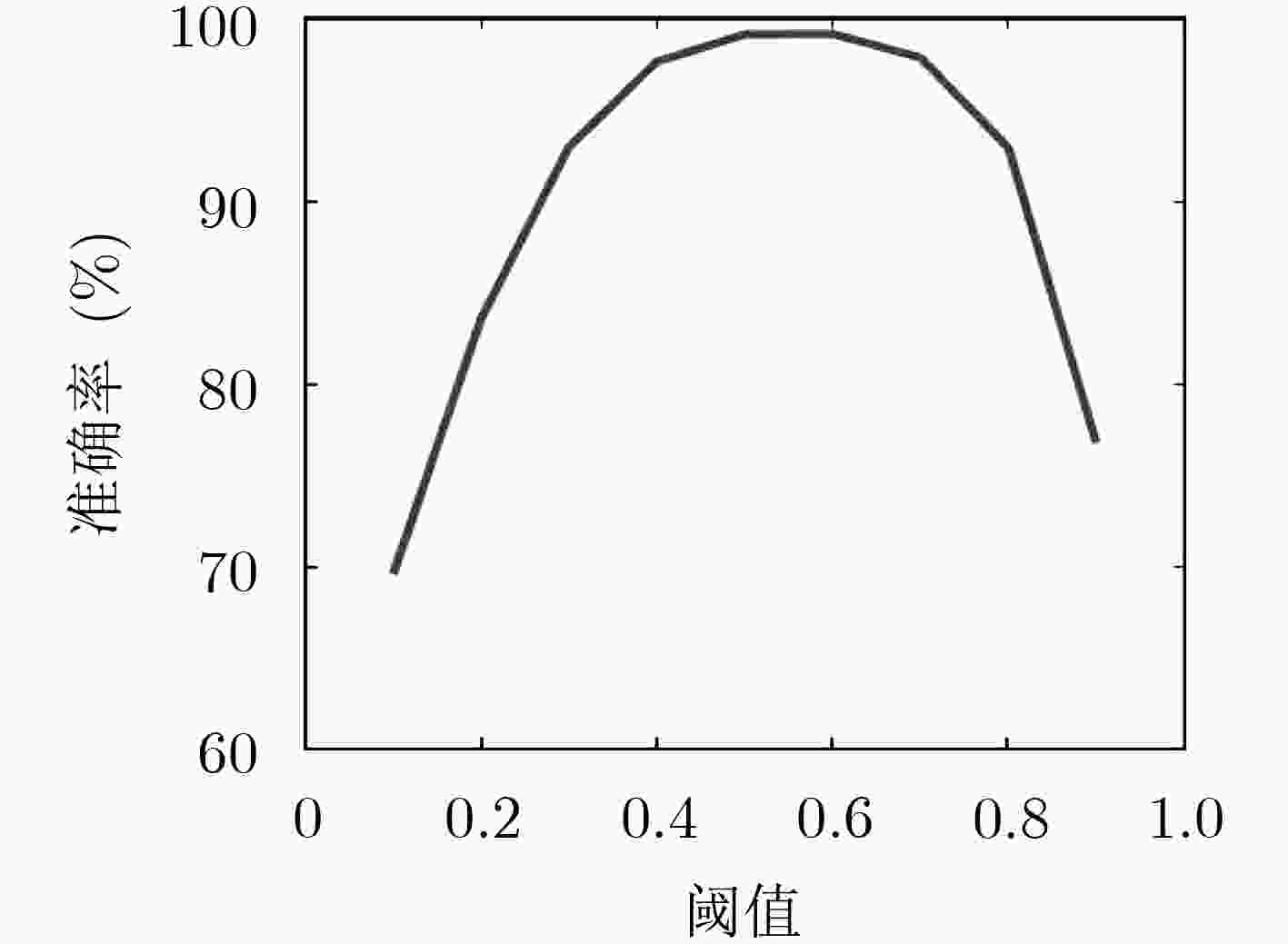

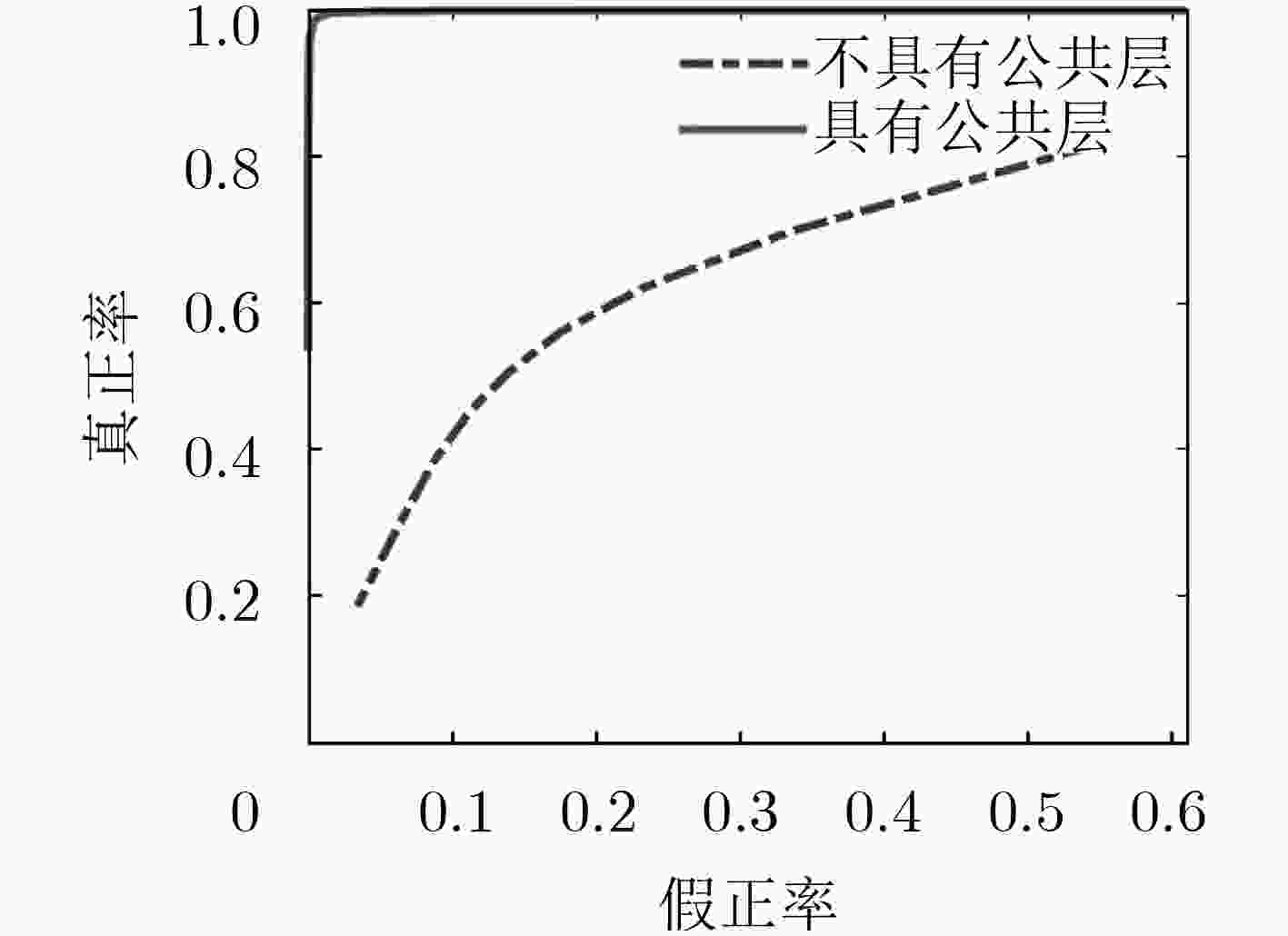

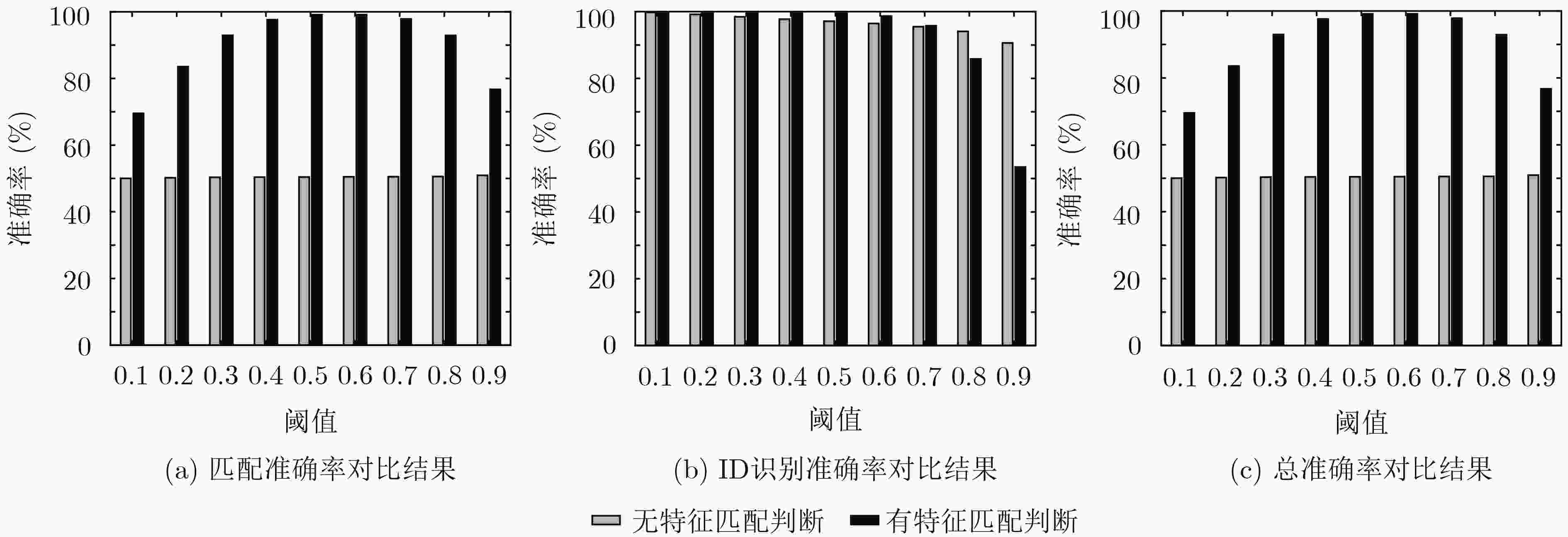

为了挖掘说话人识别领域中人脸和语音的相关性,该文设计多模态生成对抗网络(GAN),将人脸特征和语音特征映射到联系更加紧密的公共空间,随后利用3元组损失对两个模态的联系进一步约束,拉近相同个体跨模态样本的特征距离,拉远不同个体跨模态样本的特征距离。最后通过计算公共空间特征的跨模态余弦距离判断人脸和语音是否匹配,并使用Softmax识别说话人身份。实验结果表明,该方法能有效地提升说话人识别准确率。

Abstract: To exploit the correlation between face and voice in speaker recognition, a multimodal Generative Adversarial Network (GAN) is designed to map face features and audio features into a common space in which the two modalities are more closely related. A triplet loss then further constrains the relationship between the two modalities: it reduces the feature distance between cross-modal samples of the same individual and increases the feature distance between cross-modal samples of different individuals. Finally, the cross-modal cosine distance between the common-space features is computed to judge whether a face and a voice match, and Softmax is used to recognize the speaker's identity. Experimental results show that the method effectively improves speaker recognition accuracy.
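The triplet constraint and the cosine-distance matching step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the hinge form of the loss, the use of cosine distance inside the loss, the `margin` of 0.2, the matching `threshold` of 0.5, and the toy feature vectors are all assumptions made for illustration, and the GAN that would produce the common-space features is omitted.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def cross_modal_triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss (assumed form).

    The anchor comes from one modality; the positive (same person) and the
    negative (different person) come from the other modality.
    """
    d_pos = cosine_distance(anchor, positive)
    d_neg = cosine_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


def is_match(face_feat, voice_feat, threshold=0.5):
    """Declare a face/voice match when the cross-modal distance is small.

    The threshold is a hypothetical value, not taken from the paper.
    """
    return cosine_distance(face_feat, voice_feat) < threshold


# Toy common-space features (hypothetical values).
voice_a = [0.9, 0.1, 0.0]   # voice of person A
face_a = [0.8, 0.2, 0.1]    # face of person A
face_b = [0.0, 0.3, 0.9]    # face of person B

# Well-separated triplet: the loss is already zero.
loss_good = cross_modal_triplet_loss(voice_a, face_a, face_b)
# Swapping positive and negative violates the margin, so the loss is positive.
loss_bad = cross_modal_triplet_loss(voice_a, face_b, face_a)
```

In this toy setup `is_match(face_a, voice_a)` is true and `is_match(face_b, voice_a)` is false, which mirrors the intended effect of the constraint: same-identity pairs end up close across modalities, different-identity pairs end up far apart.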


Key words:

- Speaker recognition

- Cross-modal

- Generative Adversarial Network (GAN)

- Triplet-loss


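Table 1 Identity recognition accuracy of different features (%)

| Feature | ID recognition accuracy (%) |
| --- | --- |
| Audio common feature | 95.57 |
| Face common feature | 99.41 |
| Concatenated feature | 99.59 |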
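Table 1 includes a concatenated feature, and the abstract states that Softmax is used to recognize the speaker. A minimal sketch of that step follows, assuming a single linear layer before the Softmax. The function `identify_speaker`, the `weights`, the `biases`, and the toy inputs are hypothetical, and the paper's actual classifier may differ.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def identify_speaker(voice_feat, face_feat, weights, biases):
    """Concatenate both common-space features and classify with softmax.

    weights has one row per identity; each row has as many entries as the
    concatenated feature. Both weights and biases are hypothetical here.
    """
    fused = list(voice_feat) + list(face_feat)
    logits = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# Toy example: two identities, two dimensions per modality.
weights = [
    [1.0, 0.0, 1.0, 0.0],  # identity 0 responds to the first dimension
    [0.0, 1.0, 0.0, 1.0],  # identity 1 responds to the second dimension
]
biases = [0.0, 0.0]
speaker_id, probs = identify_speaker([0.9, 0.1], [0.8, 0.2], weights, biases)
```

Here the fused vector is `[0.9, 0.1, 0.8, 0.2]`, so identity 0 receives the larger logit and is selected.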

