Semi-Markov Decision Process-based Resource Allocation Strategy for Virtual Sensor Network


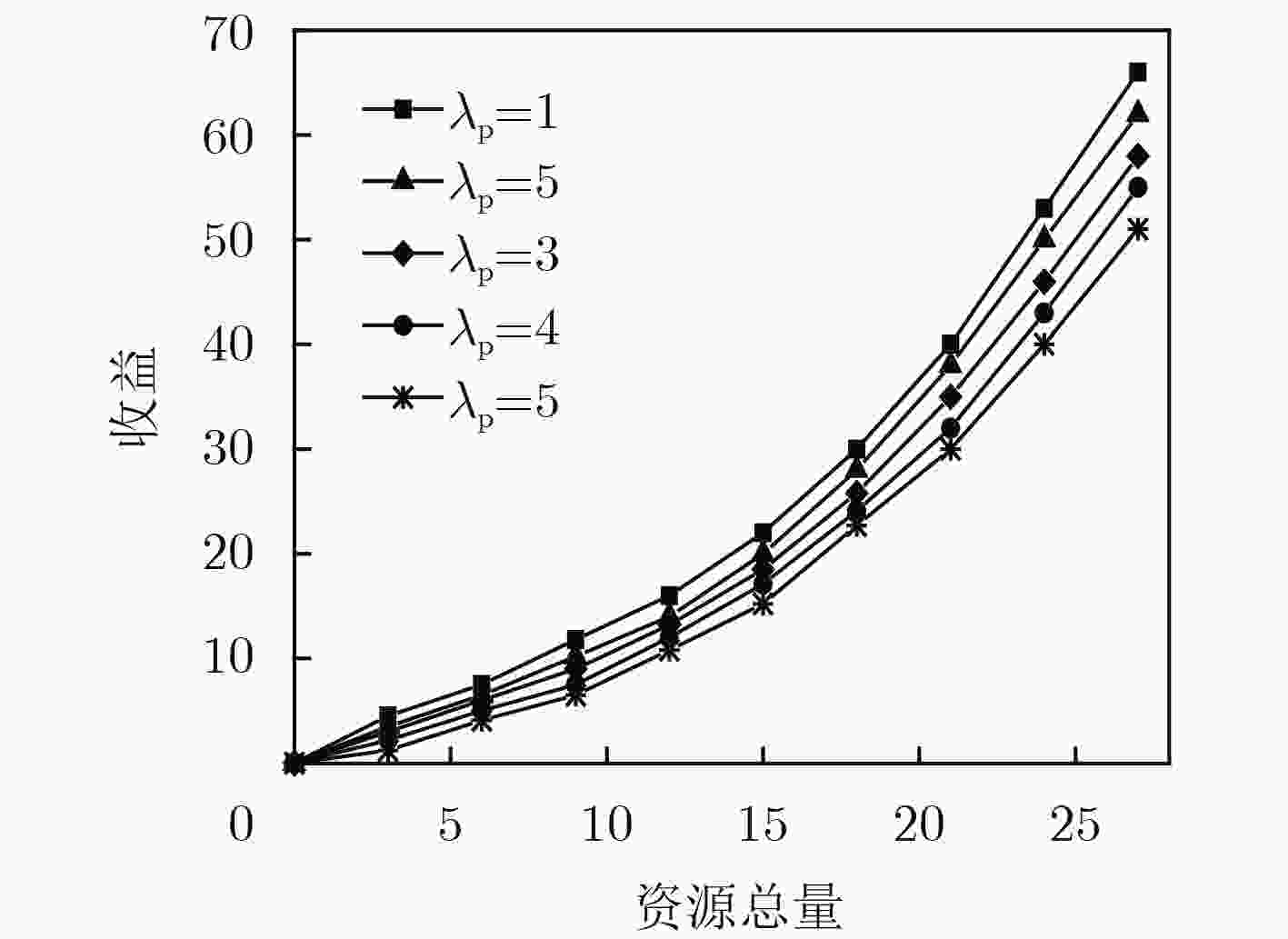

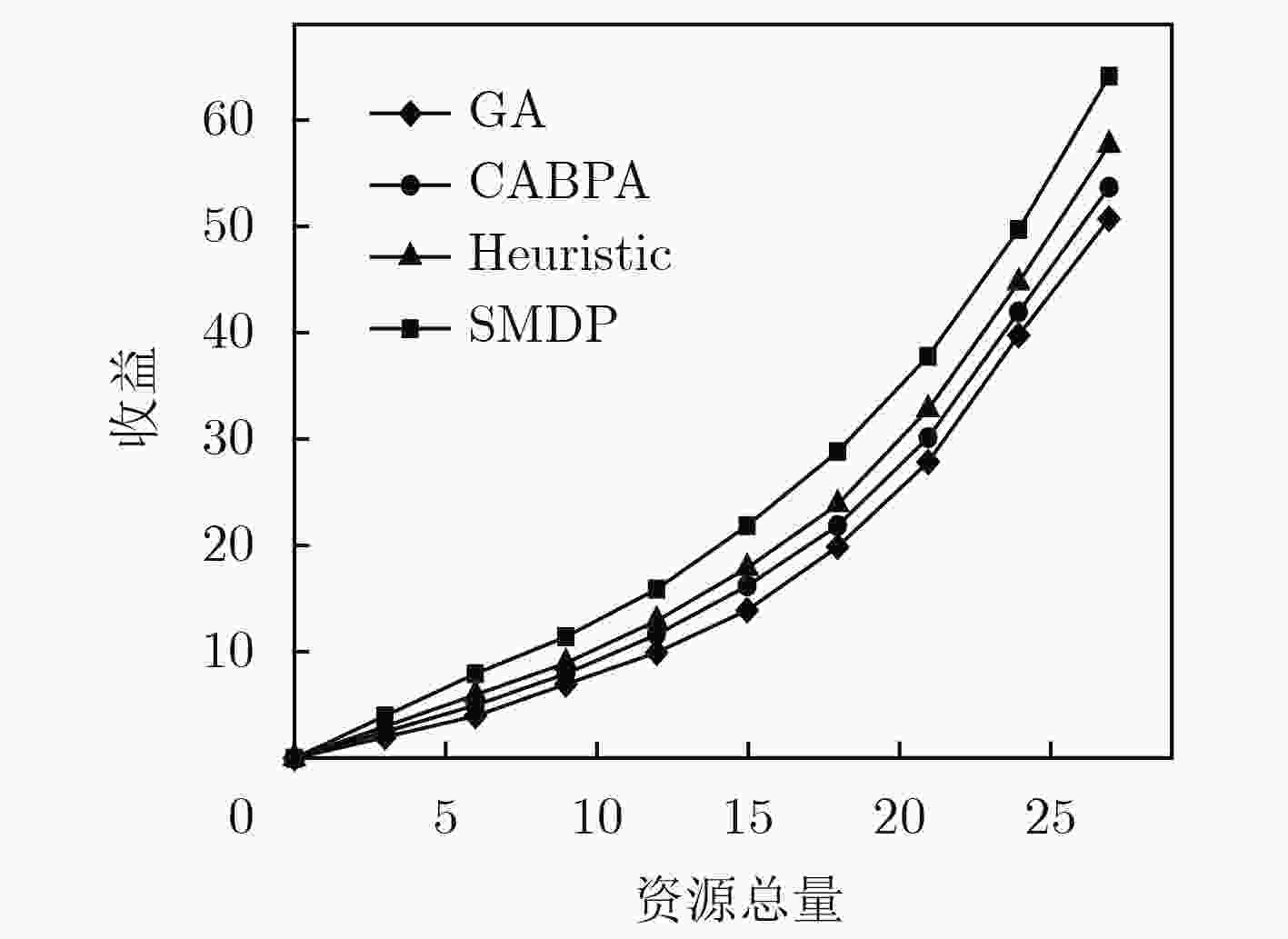

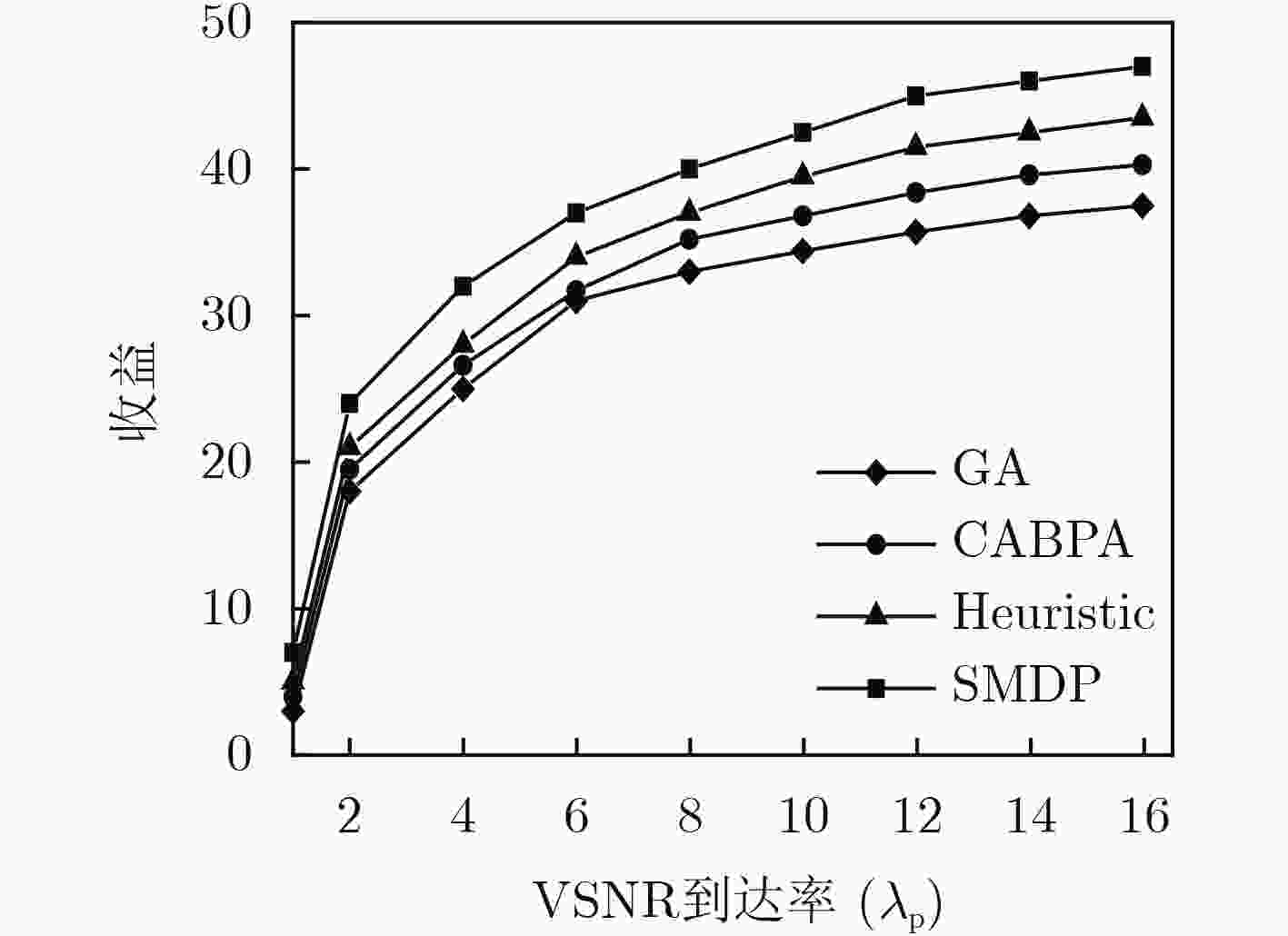

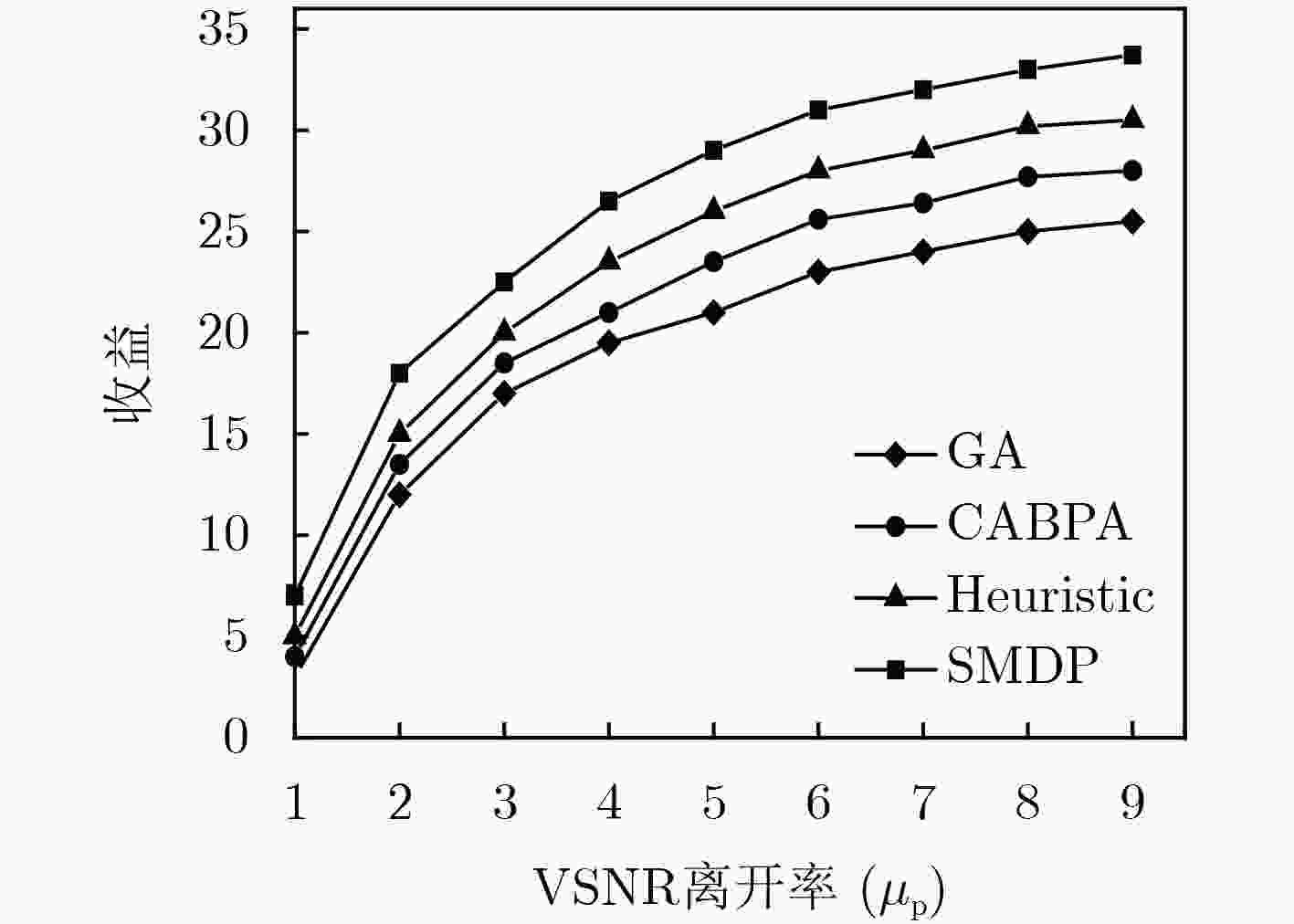

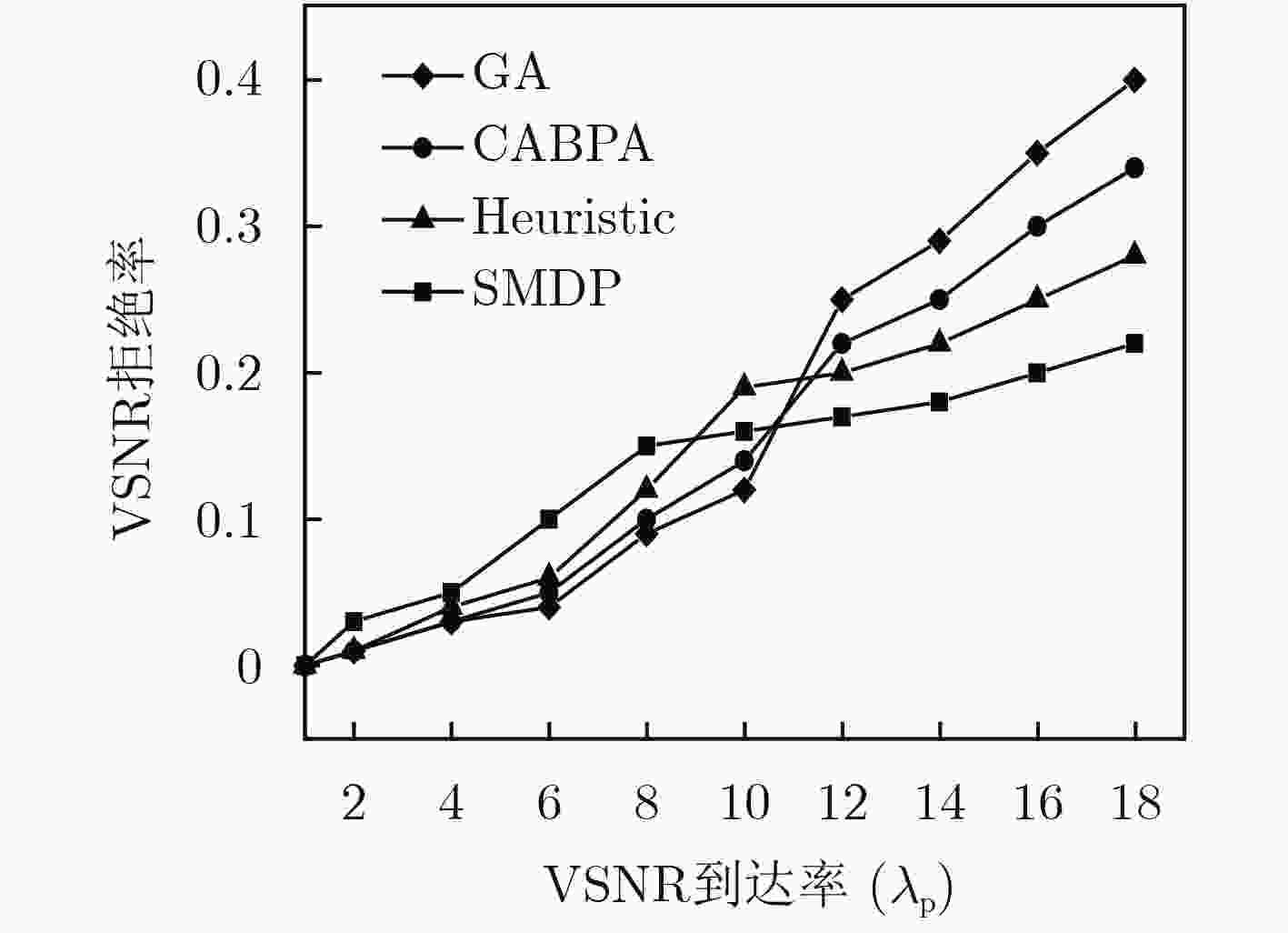

Abstract: In traditional Wireless Sensor Networks (WSN), resource deployment is tightly coupled to specific tasks. This coupling leads to low resource utilization and, in turn, low revenue for resource providers. Taking into account the dynamic changes of Virtual Sensor Network Requests (VSNR), this paper proposes a resource allocation strategy for Virtual Sensor Networks (VSN) based on a Semi-Markov Decision Process (SMDP). The state set, action set, and state transition probabilities of the VSN are defined. The expected reward function is then derived by considering the limited energy of the sensor network and the time required to complete a VSNR. A model-free reinforcement learning algorithm is used to determine the action in each state, so as to maximize the long-term revenue of the network resource provider. Numerical results show that the proposed strategy effectively improves the revenue of sensor network resource providers.
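The abstract describes the approach only at a high level. Below is a minimal Python sketch of the general idea: SMDP Q-learning for admitting VSNRs, under heavily simplified, hypothetical assumptions.

- The provider has `K` identical resource units.
- VSNRs arrive as a Poisson process with rate `LAM` and come in two classes that request 1 or 2 units.
- Holding times are exponential with rate `MU`.
- Accepting a request earns a lump-sum `PRICE`, and occupied units incur an energy cost at rate `ENERGY_RATE`.

All names and values are illustrative and not taken from the paper. The reward is only a stand-in for the paper's energy- and time-aware reward function. The update uses continuous-time discounting with rate `BETA`; the paper's actual model-free algorithm and optimality criterion may differ (for example, it may use an average-reward formulation).

```python
import math
import random
from collections import defaultdict

# Hypothetical model parameters (illustrative assumptions, not from the paper)
K = 20               # resource units the provider can allocate
LAM = 6.0            # Poisson arrival rate of VSNRs
MU = 1.0             # service rate of one active VSNR (exponential holding time)
SIZES = (1, 2)       # units requested by class-0 and class-1 VSNRs
PRICE = (3.0, 4.0)   # lump-sum revenue for accepting a VSNR of each class
ENERGY_RATE = 0.5    # energy cost per occupied unit per unit time
BETA = 0.1           # continuous-time discount rate
ALPHA = 0.1          # learning rate
EPS = 0.1            # epsilon-greedy exploration probability


def allowed_actions(state):
    """State = (n0, n1, event); event is 0/1 (arriving class) or "dep".
    Action 1 accepts the arriving VSNR; 0 rejects it (or is a no-op)."""
    n0, n1, ev = state
    if ev == "dep":
        return (0,)
    used = n0 * SIZES[0] + n1 * SIZES[1]
    return (0, 1) if used + SIZES[ev] <= K else (0,)


def step(state, action, rng):
    """Apply the action, then sample the sojourn time and the next event.
    Returns (discounted reward over the sojourn, sojourn time, next state)."""
    n = [state[0], state[1]]
    ev = state[2]
    reward = 0.0
    if ev != "dep" and action == 1:
        n[ev] += 1
        reward += PRICE[ev]
    dep_rates = (n[0] * MU, n[1] * MU)
    total = LAM + dep_rates[0] + dep_rates[1]
    tau = rng.expovariate(total)  # time until the next event
    used = n[0] * SIZES[0] + n[1] * SIZES[1]
    # Discounted integral of the energy-cost rate over [0, tau)
    reward -= ENERGY_RATE * used * (1.0 - math.exp(-BETA * tau)) / BETA
    u = rng.random() * total
    if u < LAM:
        return reward, tau, (n[0], n[1], rng.randrange(2))
    # Otherwise a departure: pick the departing class in proportion to its rate
    cls = 0 if (u - LAM < dep_rates[0] or n[1] == 0) else 1
    n[cls] -= 1
    return reward, tau, (n[0], n[1], "dep")


def greedy_action(q, state):
    return max(allowed_actions(state), key=lambda a: q[(state, a)])


def q_learning(steps=200_000, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, action) -> estimated discounted value
    state = (0, 0, rng.randrange(2))
    for _ in range(steps):
        acts = allowed_actions(state)
        a = rng.choice(acts) if rng.random() < EPS else greedy_action(q, state)
        r, tau, nxt = step(state, a, rng)
        # SMDP target: discount the next state's value by the random sojourn time
        target = r + math.exp(-BETA * tau) * max(q[(nxt, b)] for b in allowed_actions(nxt))
        q[(state, a)] += ALPHA * (target - q[(state, a)])
        state = nxt
    return q


if __name__ == "__main__":
    q = q_learning()
    for s in [(0, 0, 0), (0, 0, 1), (4, 2, 1), (12, 4, 1)]:
        print(s, "accept" if greedy_action(q, s) == 1 else "reject")
```

In this sketch every event (arrival or departure) is a decision epoch, and departures admit only a no-op. The factor `exp(-BETA * tau)` discounts the next state's value by the random sojourn time. This is what distinguishes SMDP Q-learning from its discrete-time counterpart.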


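Table 1 Simulation parameter settings

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| $K$ | 20–30 | ${\lambda _{\rm{p}}}$ | 1–18 |
| ${\omega _{\rm{e}}}$ | 0.5 | ${\omega _{\rm{d}}}$ | 0.5 |
| ${\beta _{\rm{e}}}$ | 2 | ${\beta _{\rm{d}}}$ | 2 |
| ${E_{\rm{l}}}$ | 20 | ${P_{\rm{l}}}$ | 5 |
| ${D_{\rm{l}}}$ | 20 | $\delta$ | 2 |
| $\gamma$ | 2 | $\alpha$ | 0.1 |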
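For readers reproducing the experiments, the settings in Table 1 can be captured as a small configuration object plus a sweep over the two ranged parameters. This is a hypothetical Python sketch. The class name `Table1Params`, the helper `sweep_grid`, and the assumption that $K$ and $\lambda_{\rm p}$ are swept in integer steps are illustrative only. The symbols are not interpreted beyond what the table states.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Table1Params:
    """Fixed values from Table 1; field names mirror the table's symbols."""
    omega_e: float = 0.5
    omega_d: float = 0.5
    beta_e: float = 2.0
    beta_d: float = 2.0
    E_l: float = 20.0
    P_l: float = 5.0
    D_l: float = 20.0
    delta: float = 2.0
    gamma: float = 2.0
    alpha: float = 0.1


# Swept parameters; integer steps are an assumption, Table 1 gives only the ranges
K_VALUES = range(20, 31)          # K: 20-30
LAMBDA_P_VALUES = range(1, 19)    # lambda_p: 1-18


def sweep_grid():
    """All (K, lambda_p) combinations covered by the ranges in Table 1."""
    return list(product(K_VALUES, LAMBDA_P_VALUES))


if __name__ == "__main__":
    params = Table1Params()
    grid = sweep_grid()
    print(len(grid), grid[0], grid[-1], params.alpha)  # 198 (20, 1) (30, 18) 0.1
```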
