A Method to Visualize Deep Convolutional Networks Based on Model Reconstruction


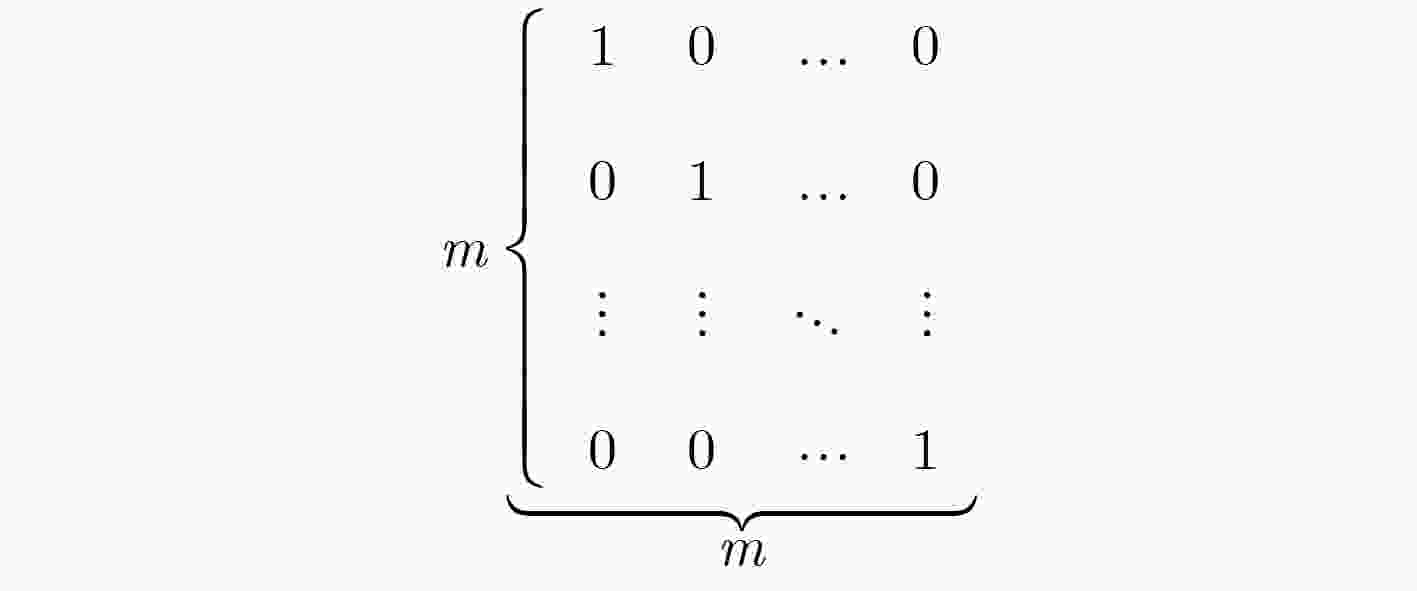

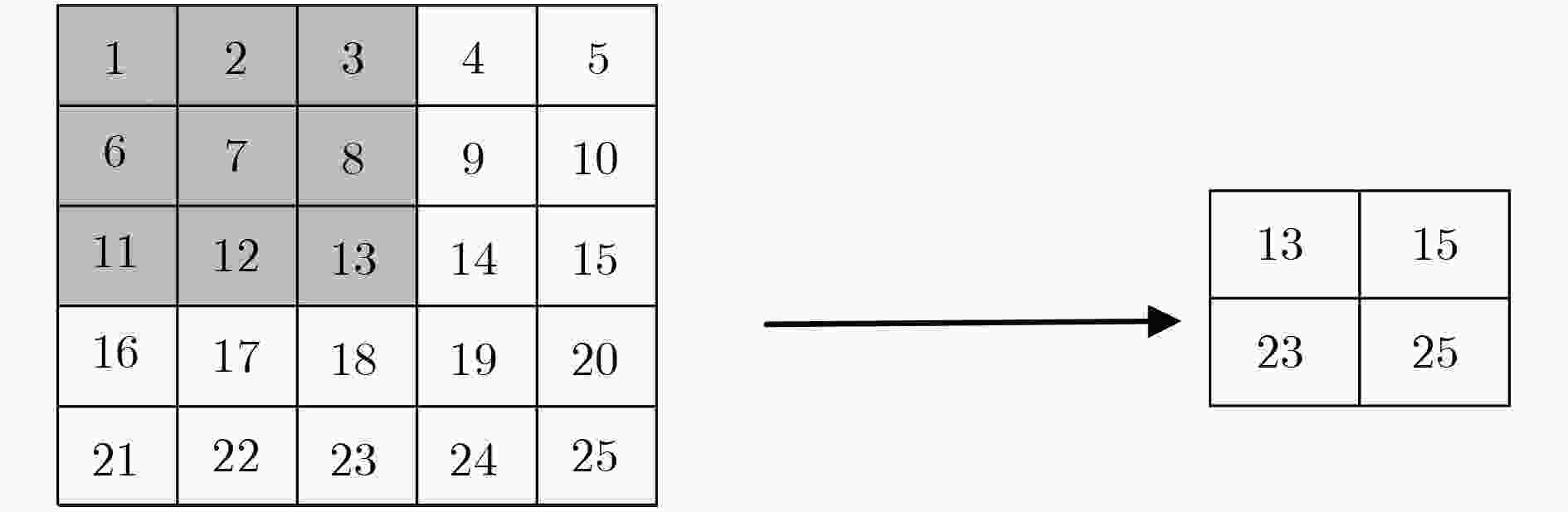

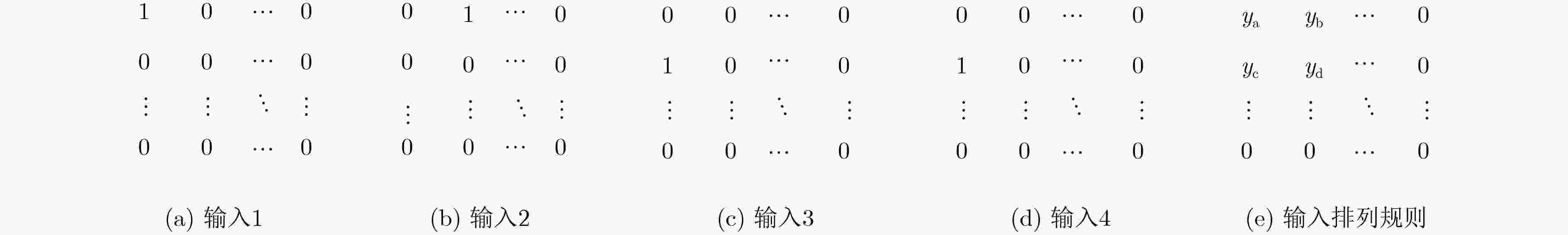

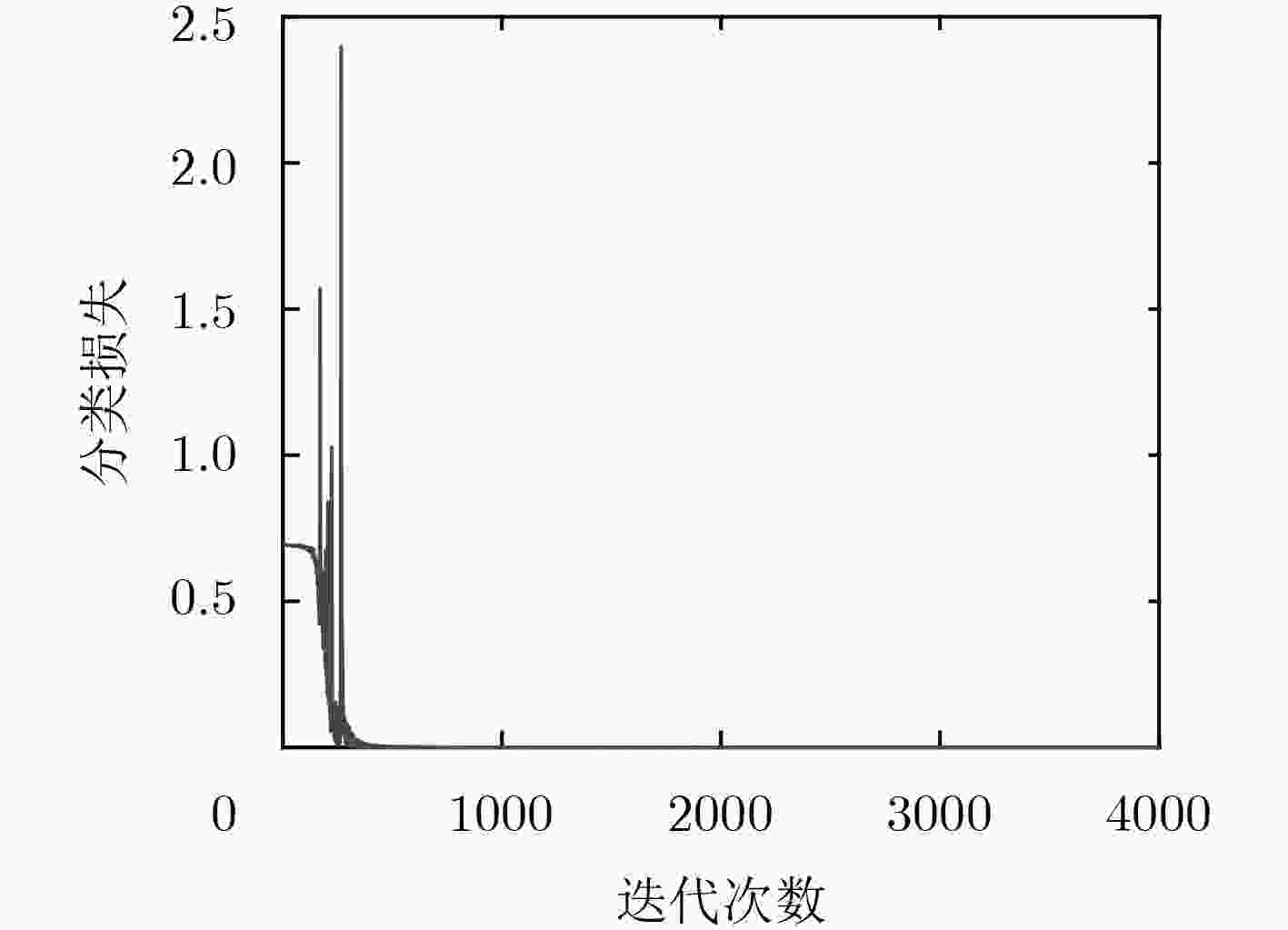

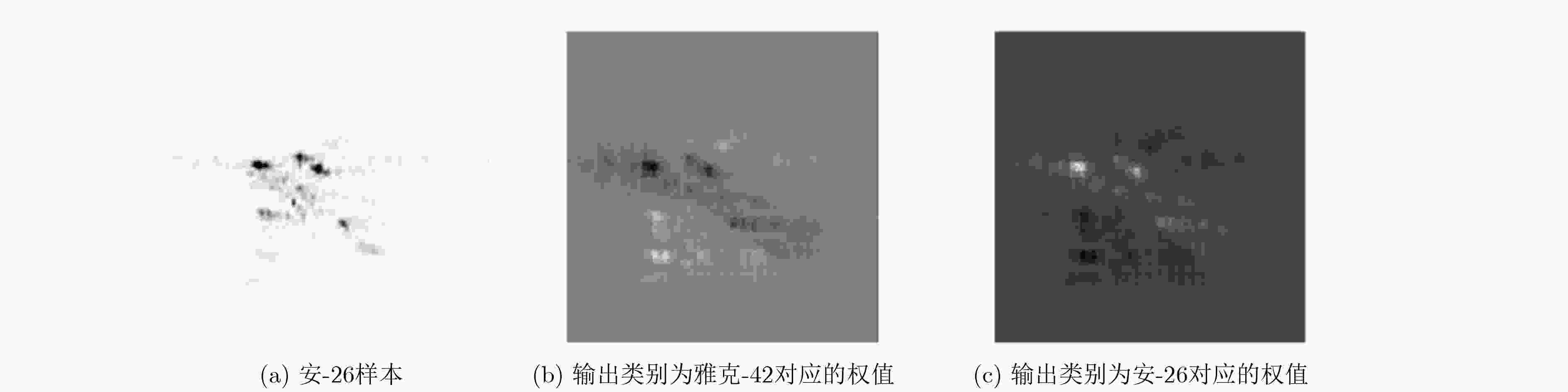

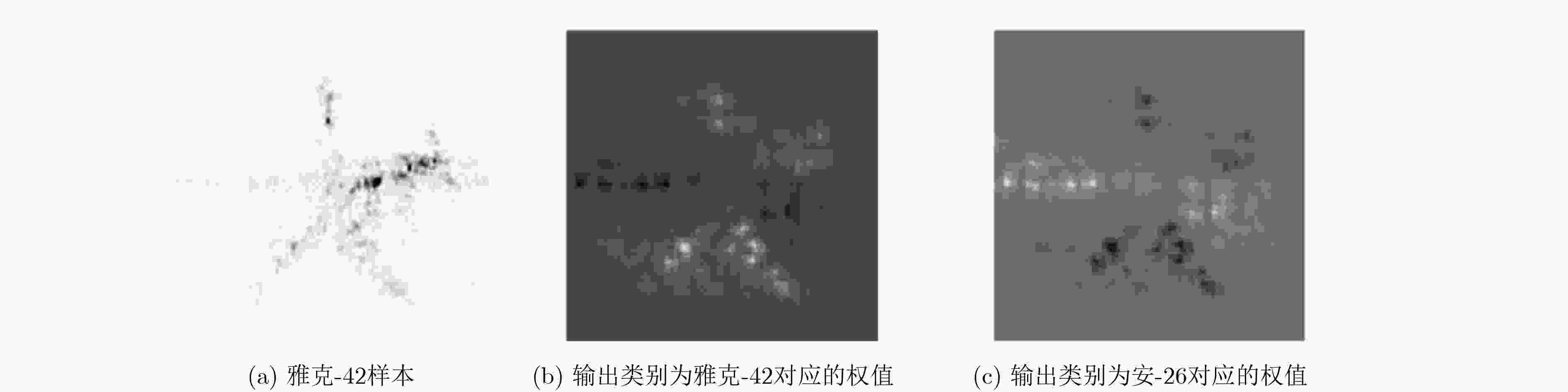

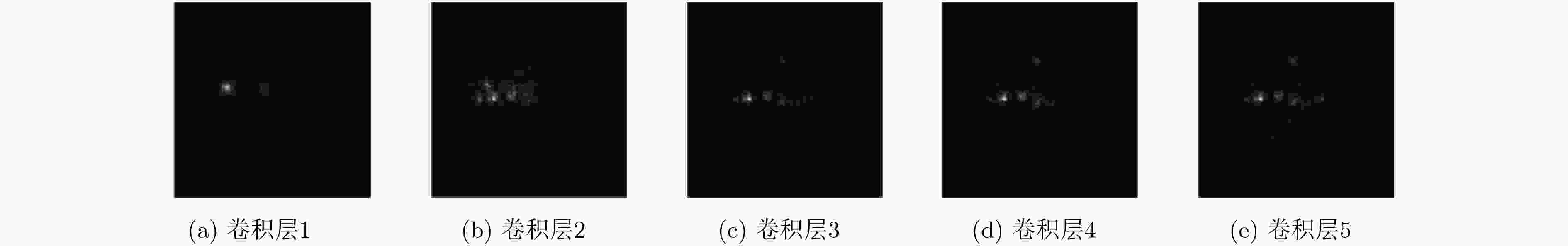

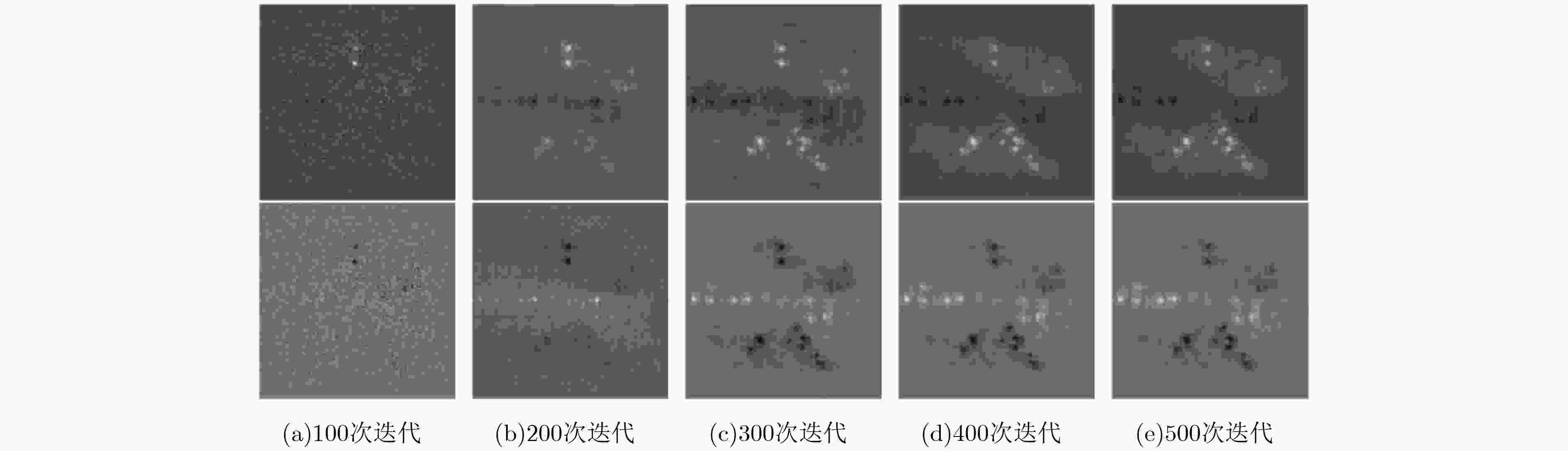

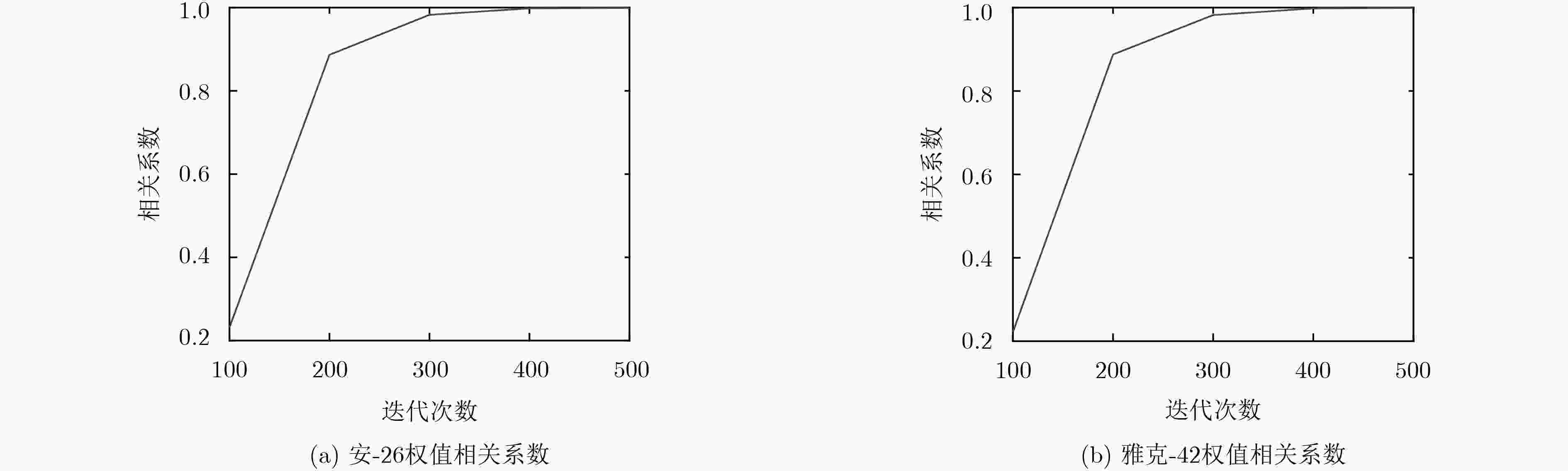

Abstract: To address the problem of analyzing how deep convolutional networks work, this paper proposes a weight visualization method based on model reconstruction. First, the original neural network performs forward propagation on test samples to obtain the prior information needed for model reconstruction. Then, part of the structure of the original network is modified to facilitate the subsequent parameter calculation. Next, the parameters of the reconstructed model are calculated one by one using a set of orthogonal vectors. Finally, the calculated parameters are rearranged in a specific order to visualize the weights. Experimental results show that, for deep convolutional networks satisfying certain conditions, the model reconstructed with the proposed method is exactly equivalent to the original model in the forward propagation of the classification process. The features of the weights of the reconstructed model can be clearly observed, which allows the principle by which the neural network classifies images to be analyzed.
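The four steps in the abstract can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the toy two-layer network, the weight names `W1` and `W2`, the bias-free layers, and the choice of the standard basis as the orthogonal vector set are all assumptions made for illustration. The idea shown is that once the ReLU on/off pattern recorded for a sample is frozen, the network becomes linear for that sample, so its equivalent weights can be read off one column at a time by probing with orthogonal vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy network (assumption): two bias-free dense layers with a ReLU between.
W1 = rng.standard_normal((8, 16))
W2 = rng.standard_normal((2, 8))

def forward(x, mask=None):
    """Forward pass. If no mask is given, use the ordinary ReLU and return its on/off pattern."""
    h = W1 @ x
    if mask is None:
        mask = (h > 0).astype(h.dtype)
    return W2 @ (h * mask), mask

# Step 1: forward-propagate a test sample and record the prior information (activation pattern).
x = rng.standard_normal(16)
y, mask = forward(x)

# Steps 2-3: with the ReLU replaced by the fixed mask, the network is linear,
# so probing it with each orthonormal basis vector yields one column of the equivalent weights.
basis = np.eye(16)
W_eq = np.stack([forward(e, mask)[0] for e in basis], axis=1)  # shape (2, 16)

# The reconstructed single-layer model reproduces the original output for this sample.
y_rec = W_eq @ x

# Step 4: rearrange each output's weight row into the input layout (here a 4x4 "image") for display.
weight_images = W_eq.reshape(2, 4, 4)
```

In this sketch the equivalence holds only for inputs that share the recorded activation pattern, which is one plausible reading of the abstract's "networks satisfying certain conditions"; the paper's exact conditions are not stated in this section.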


Key words:

- Deep convolutional networks

- Visualization

- Model reconstruction


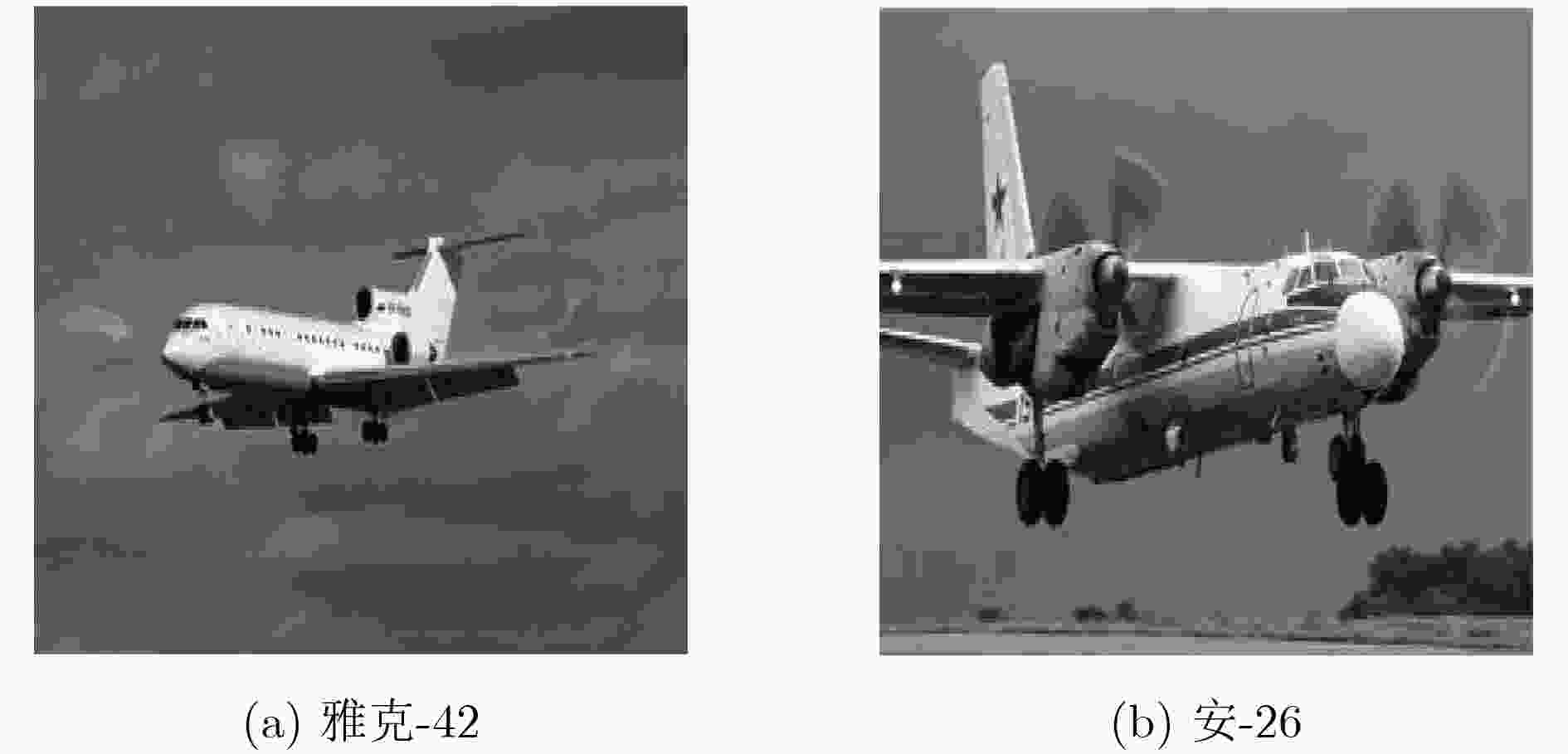

Table 1 Comparison of the classification outputs of the two models

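| Output | An-26 aircraft images | Yak-42 aircraft images |
| --- | --- | --- |
| Original model | –13.45, –9.22, –8.14, –6.75, –5.63 | 10.65, 11.01, 5.93, 9.12, 7.31 |
| | 13.42, 9.30, 8.23, 6.83, 5.69 | –10.81, –11.15, –6.01, –9.22, –7.38 |
| Reconstructed model | –13.45, –9.22, –8.14, –6.75, –5.63 | 10.65, 11.01, 5.93, 9.12, 7.31 |
| | 13.42, 9.30, 8.23, 6.83, 5.69 | –10.81, –11.15, –6.01, –9.22, –7.38 |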

