Robust Visual Tracking Based on Spatial Reliability Constraint

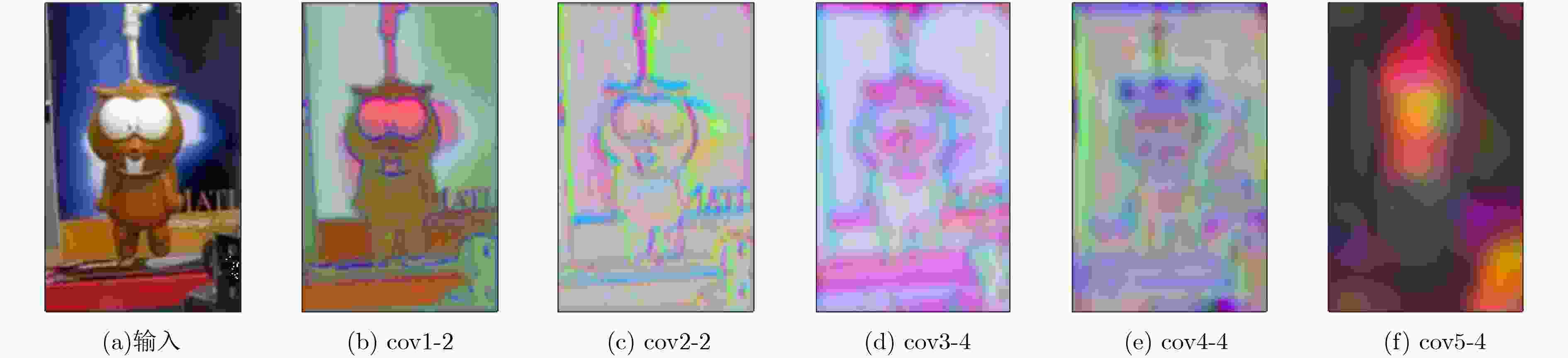

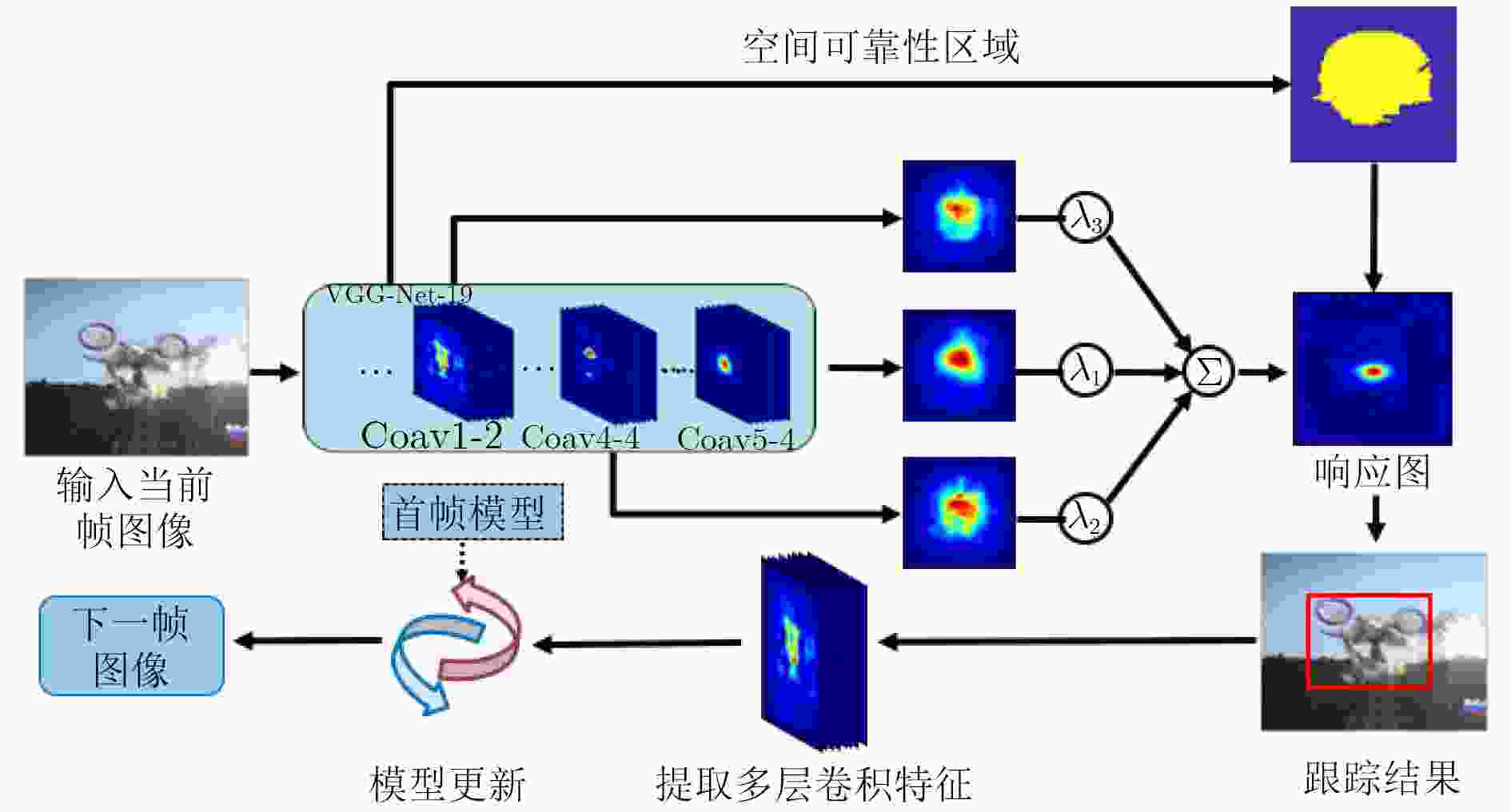

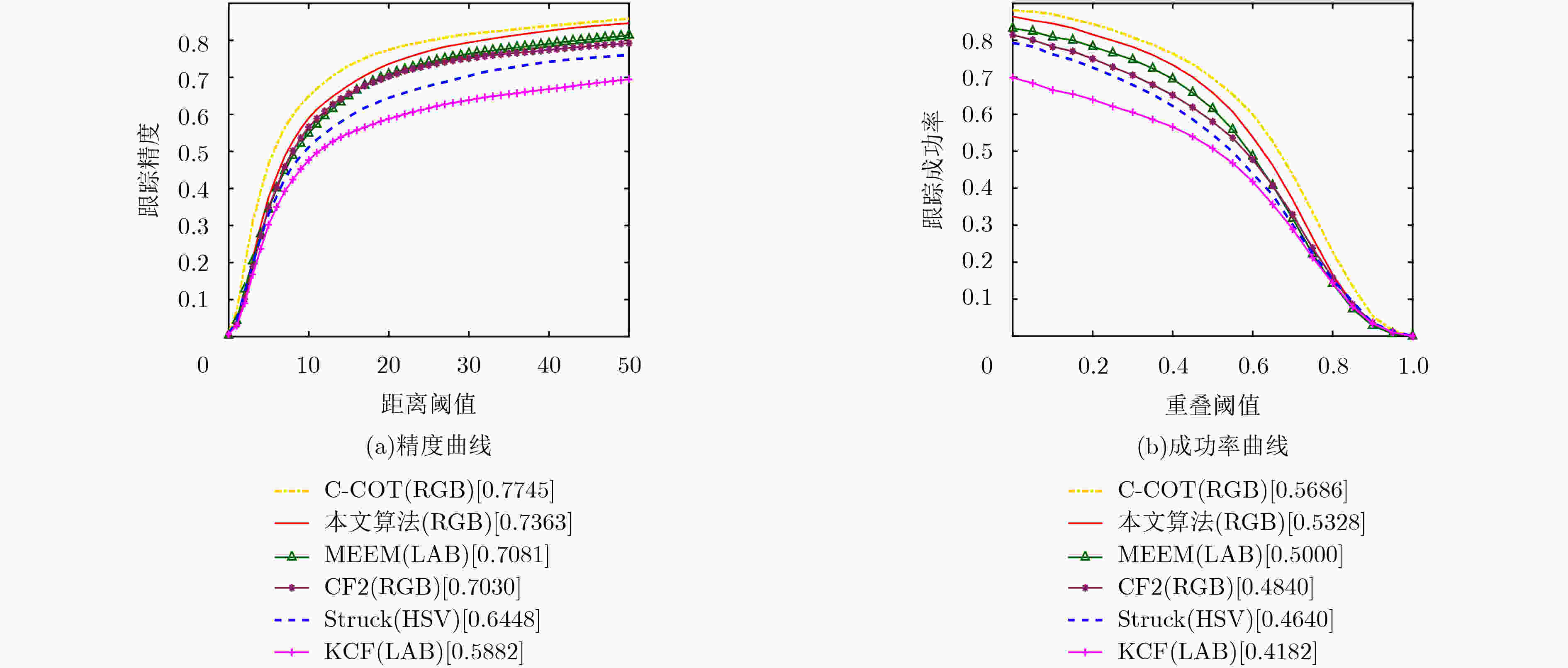

Abstract: To address target drift in complex backgrounds, this paper proposes a robust tracking algorithm based on a spatial reliability constraint. First, a pre-trained Convolutional Neural Network (CNN) model extracts multi-layer deep features of the target, a correlation filter is trained on each layer, and the resulting response maps are combined by weighted fusion. Next, reliability region information for the target is extracted from the high-level feature map, yielding a binary attention matrix. Finally, this binary matrix constrains the search range of the fused response map, and the location of the maximum response within that range is taken as the target center. To handle long-term occlusion, the paper also proposes a random-selection model update strategy that incorporates first-frame template information. Experimental results show that the algorithm performs well under similar-background interference, occlusion, out-of-view motion, and other challenging scenarios.
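The localization step summarized in the abstract (weighted fusion of per-layer response maps, then a mask-constrained peak search) can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the function names, the fusion weights, and the thresholding rule used to build the binary mask (`ratio` times the maximum channel-summed activation) are all hypothetical, since the paper's actual equations are not reproduced here. It also assumes the response maps and the high-level feature map have already been resized to the same spatial size and that activations are non-negative (for example, post-ReLU).

```python
import numpy as np


def fuse_responses(responses, weights):
    """Weighted sum of per-layer response maps (all with the same 2-D shape)."""
    stacked = np.stack(responses, axis=0)             # (layers, H, W)
    weights = np.asarray(weights, dtype=float)        # (layers,)
    return np.tensordot(weights, stacked, axes=1)     # (H, W)


def reliability_mask(high_level_feature, ratio=0.5):
    """Binary mask from a high-level feature map of shape (H, W, C).

    Assumed rule (hypothetical): a location is 'reliable' when its
    channel-summed activation is at least `ratio` times the maximum.
    """
    energy = high_level_feature.sum(axis=-1)
    return (energy >= ratio * energy.max()).astype(np.uint8)


def constrained_peak(response, mask):
    """(row, col) of the largest response among locations where mask == 1."""
    masked = np.where(mask.astype(bool), response, -np.inf)
    return np.unravel_index(np.argmax(masked), masked.shape)


# Toy example: a strong distractor at (0, 0) lies outside the reliable region.
low = np.zeros((4, 4))
low[0, 0] = 10.0
high = np.zeros((4, 4))
high[2, 2] = 3.0
fused = fuse_responses([low, high], weights=[1.0, 0.5])

feature = np.zeros((4, 4, 2))
feature[2, 2, :] = 1.0
feature[2, 3, :] = 0.6
mask = reliability_mask(feature, ratio=0.5)

peak = constrained_peak(fused, mask)
```

In this toy case the unconstrained maximum of `fused` sits on the distractor, while the constrained search returns the location inside the reliable region, which is the drift-suppression behaviour the abstract describes.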

Key words:

- Visual tracking
- Spatial reliability constraint
- Deep features
- Correlation filter
- Model update

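Table 1 Robust visual tracking algorithm based on spatial reliability constraint

Input: image sequence $I_1, I_2, \cdots, I_n$; initial target position $p_0 = (x_0, y_0)$; initial target scale $s_0 = (w_0, h_0)$.

Output: tracking result for each frame, $p_t = (x_t, y_t)$ and $s_t = (w_t, h_t)$.

For $t = 1, 2, \cdots, n$, do:

- (1) Locate the target center
  - (a) Use the previous frame's target position $p_{t-1}$ to determine the ROI in frame $t$, and extract its hierarchical convolutional features;
  - (b) For each layer of convolutional features, compute the correlation response map using Eq. (4) and Eq. (5);
  - (c) Fuse the per-layer correlation response maps using Eq. (6) to obtain the final correlation response map;
  - (d) Extract the spatial reliability region map using Eq. (7) and Eq. (8), and use it to constrain the search range of the response map;
  - (e) Determine the target center position $p_t$ in frame $t$ using Eq. (9).
- (2) Determine the optimal target scale
  - (a) Perform multi-scale sampling around $p_t$ using the previous frame's target scale $s_{t-1}$ to obtain the sampled image set $I_s = \{I_{s_1}, I_{s_2}, \cdots, I_{s_m}\}$;
  - (b) Determine the optimal target scale $s_t$ in frame $t$ using the scale estimation method of Ref. [14].
- (3) Update the model
  - (a) Compute the maximum response value from the obtained response map;
  - (b) Update the filters according to the response value and Eqs. (10) to (12).

End

Table 2 Tracking precision of the compared algorithms under different attributes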

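| Algorithm | SV(60) | OCC(45) | IV(34) | BC(27) | DEF(42) | MB(29) | FM(37) | IPR(46) | OPR(57) | OV(13) | LR(8) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Proposed | 0.827 | 0.799 | 0.855 | 0.872 | 0.801 | 0.813 | 0.800 | 0.879 | 0.844 | 0.756 | 0.870 |
| HDT | 0.811 | 0.753 | 0.803 | 0.855 | 0.817 | 0.764 | 0.800 | 0.851 | 0.804 | 0.663 | 0.749 |
| HCF | 0.800 | 0.748 | 0.805 | 0.857 | 0.788 | 0.772 | 0.788 | 0.863 | 0.807 | 0.680 | 0.778 |

Table 3 Tracking success rate of the compared algorithms under different attributes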

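| Algorithm | SV(60) | OCC(45) | IV(34) | BC(27) | DEF(42) | MB(29) | FM(37) | IPR(46) | OPR(57) | OV(13) | LR(8) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Proposed | 0.580 | 0.594 | 0.635 | 0.627 | 0.570 | 0.624 | 0.609 | 0.605 | 0.597 | 0.556 | 0.510 |
| HDT | 0.491 | 0.528 | 0.540 | 0.593 | 0.546 | 0.545 | 0.549 | 0.557 | 0.533 | 0.541 | 0.376 |
| HCF | 0.490 | 0.526 | 0.547 | 0.602 | 0.532 | 0.557 | 0.550 | 0.599 | 0.534 | 0.542 | 0.383 |

Table 4 Comparison of the effect of each algorithm component on tracking performance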

| Metric | SRCT | SRCT-S | SRCT-R | SRCT-S-R |
|---|---|---|---|---|
| Success rate | 0.624 | 0.618 | 0.610 | 0.603 |
| Tracking precision | 0.864 | 0.856 | 0.841 | 0.838 |
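The tables above report tracking precision and success rate, but this excerpt does not define either metric. The sketch below shows how such scores are commonly computed under the conventions of the object tracking benchmark of Wu et al. (listed in the references): precision as the fraction of frames whose center location error is within a pixel threshold, and success as the area under the overlap-threshold curve. The 20-pixel threshold, the 21 evenly spaced overlap thresholds, the `(x, y, w, h)` box layout, and all function names are assumptions, not details confirmed by the paper.

```python
import numpy as np


def center_errors(pred, gt):
    """Euclidean distance between box centers; boxes are (x, y, w, h) rows."""
    pred_centers = pred[:, :2] + pred[:, 2:] / 2.0
    gt_centers = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_centers - gt_centers, axis=1)


def overlaps(pred, gt):
    """Intersection-over-union for each pair of (x, y, w, h) boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union


def precision(pred, gt, threshold=20.0):
    """Fraction of frames with center error <= threshold (assumed 20 px)."""
    return float(np.mean(center_errors(pred, gt) <= threshold))


def success_auc(pred, gt, num_thresholds=21):
    """Mean success rate over overlap thresholds evenly spaced in [0, 1]."""
    iou = overlaps(pred, gt)
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    return float(np.mean([np.mean(iou > t) for t in thresholds]))


# Two-frame toy sequence: one perfect prediction, one complete miss.
gt_boxes = np.array([[0, 0, 10, 10], [0, 0, 10, 10]], dtype=float)
pred_boxes = np.array([[0, 0, 10, 10], [100, 100, 10, 10]], dtype=float)

toy_precision = precision(pred_boxes, gt_boxes)
toy_success = success_auc(pred_boxes, gt_boxes)
```

Per-attribute scores such as those in Tables 2 and 3 would then be averages of these values over the sequences annotated with each attribute.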

References:

[1] SMEULDERS A W M, CHU D M, CUCCHIARA R, et al. Visual tracking: An experimental survey[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2014, 36(7): 1442–1468. doi: 10.1109/TPAMI.2013.230.

[2] WANG Naiyan, SHI Jianping, YEUNG D Y, et al. Understanding and diagnosing visual tracking systems[C]. Proceedings of 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 3101–3109. doi: 10.1109/ICCV.2015.355.

[3] RAWAT W and WANG Zenghui. Deep convolutional neural networks for image classification: A comprehensive review[J]. Neural Computation, 2017, 29(9): 2352–2449. doi: 10.1162/neco_a_00990.

[4] GIRSHICK R, DONAHUE J, DARRELL T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]. Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 580–587.

[5] SHELHAMER E, LONG J, and DARRELL T. Fully convolutional networks for semantic segmentation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(4): 640–651. doi: 10.1109/TPAMI.2016.2572683.

[6] WANG Naiyan and YEUNG D Y. Learning a deep compact image representation for visual tracking[C]. Proceedings of the 26th International Conference on Neural Information Processing Systems, South Lake Tahoe, Nevada, USA, 2013: 809–817.

[7] HONG S, YOU T, KWAK S, et al. Online tracking by learning discriminative saliency map with convolutional neural network[C]. Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 2015: 597–606.

[8] NAM H and HAN B. Learning multi-domain convolutional neural networks for visual tracking[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 4293–4302.

[9] LI Huanyu, BI Duyan, YANG Yuan, et al. Research on visual tracking algorithm based on deep feature expression and learning[J]. Journal of Electronics & Information Technology, 2015, 37(9): 2033–2039. doi: 10.11999/JEIT150031.

[10] HOU Zhiqiang, DAI Bo, HU Dan, et al. Robust visual tracking via perceptive deep neural network[J]. Journal of Electronics & Information Technology, 2016, 38(7): 1616–1623. doi: 10.11999/JEIT151449.

[11] HENRIQUES J F, CASEIRO R, MARTINS P, et al. Exploiting the circulant structure of tracking-by-detection with kernels[C]. Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 2012: 702–715. doi: 10.1007/978-3-642-33765-9_50.

[12] DANELLJAN M, KHAN F S, FELSBERG M, et al. Adaptive color attributes for real-time visual tracking[C]. Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 1090–1097. doi: 10.1109/CVPR.2014.143.

[13] HENRIQUES J F, CASEIRO R, MARTINS P, et al. High-speed tracking with kernelized correlation filters[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(3): 583–596. doi: 10.1109/TPAMI.2014.2345390.

[14] DANELLJAN M, HÄGER G, KHAN F S, et al. Accurate scale estimation for robust visual tracking[C]. Proceedings of British Machine Vision Conference, Nottingham, UK, 2014: 65.1–65.11. doi: 10.5244/C.28.65.

[15] DANELLJAN M, HÄGER G, KHAN F S, et al. Learning spatially regularized correlation filters for visual tracking[C]. Proceedings of 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4310–4318. doi: 10.1109/ICCV.2015.490.

[16] DANELLJAN M, ROBINSON A, KHAN F S, et al. Beyond correlation filters: Learning continuous convolution operators for visual tracking[C]. Proceedings of the 14th European Conference on Computer Vision, Amsterdam, the Netherlands, 2016: 472–488. doi: 10.1007/978-3-319-46454-1_29.

[17] RUSSAKOVSKY O, DENG Jia, SU Hao, et al. ImageNet large scale visual recognition challenge[J]. International Journal of Computer Vision, 2015, 115(3): 211–252. doi: 10.1007/s11263-015-0816-y.

[18] KRIZHEVSKY A, SUTSKEVER I, and HINTON G E. ImageNet classification with deep convolutional neural networks[C]. Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, USA, 2012: 1097–1105. doi: 10.1145/3065386.

[19] SIMONYAN K and ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[C]. International Conference on Learning Representations, San Diego, USA, 2015.

[20] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770–778. doi: 10.1109/CVPR.2016.90.

[21] VEDALDI A and LENC K. MatConvNet: Convolutional neural networks for MATLAB[C]. Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 2015: 689–692. doi: 10.1145/2733373.2807412.

[22] WU Yi, LIM J, and YANG M H. Object tracking benchmark[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1834–1848. doi: 10.1109/TPAMI.2014.2388226.

[23] DANELLJAN M, HÄGER G, KHAN F S, et al. Convolutional features for correlation filter based visual tracking[C]. Proceedings of 2015 IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 2015: 58–66. doi: 10.1109/ICCVW.2015.84.

[24] QI Yuankai, ZHANG Shengping, QIN Lei, et al. Hedged deep tracking[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 4303–4311. doi: 10.1109/CVPR.2016.466.

[25] MA Chao, HUANG Jiabin, YANG Xiaokang, et al. Hierarchical convolutional features for visual tracking[C]. Proceedings of 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 3074–3082. doi: 10.1109/ICCV.2015.352.

[26] ZHANG Jianming, MA Shugao, and SCLAROFF S. MEEM: Robust tracking via multiple experts using entropy minimization[C]. Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 2014: 188–203.

[27] LIANG Pengpeng, BLASCH E, and LING Haibin. Encoding color information for visual tracking: Algorithms and benchmark[J]. IEEE Transactions on Image Processing, 2015, 24(12): 5630–5644. doi: 10.1109/TIP.2015.2482905.

[28] TAO Ran, GAVVES E, and SMEULDERS A W M. Siamese instance search for tracking[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 1420–1429. doi: 10.1109/CVPR.2016.158.

[29] BERTINETTO L, VALMADRE J, HENRIQUES J F, et al. Fully-convolutional siamese networks for object tracking[C]. European Conference on Computer Vision, Amsterdam, the Netherlands, 2016: 850–865.

[30] HOU Zhiqiang, ZHANG Lang, YU Wangsheng, et al. Local patch tracking algorithm based on fast Fourier transform[J]. Journal of Electronics & Information Technology, 2015, 37(10): 2397–2404. doi: 10.11999/JEIT150183.
