Online Learning-based Virtual Resource Allocation for Network Slicing in Virtualized Cloud Radio Access Network

-

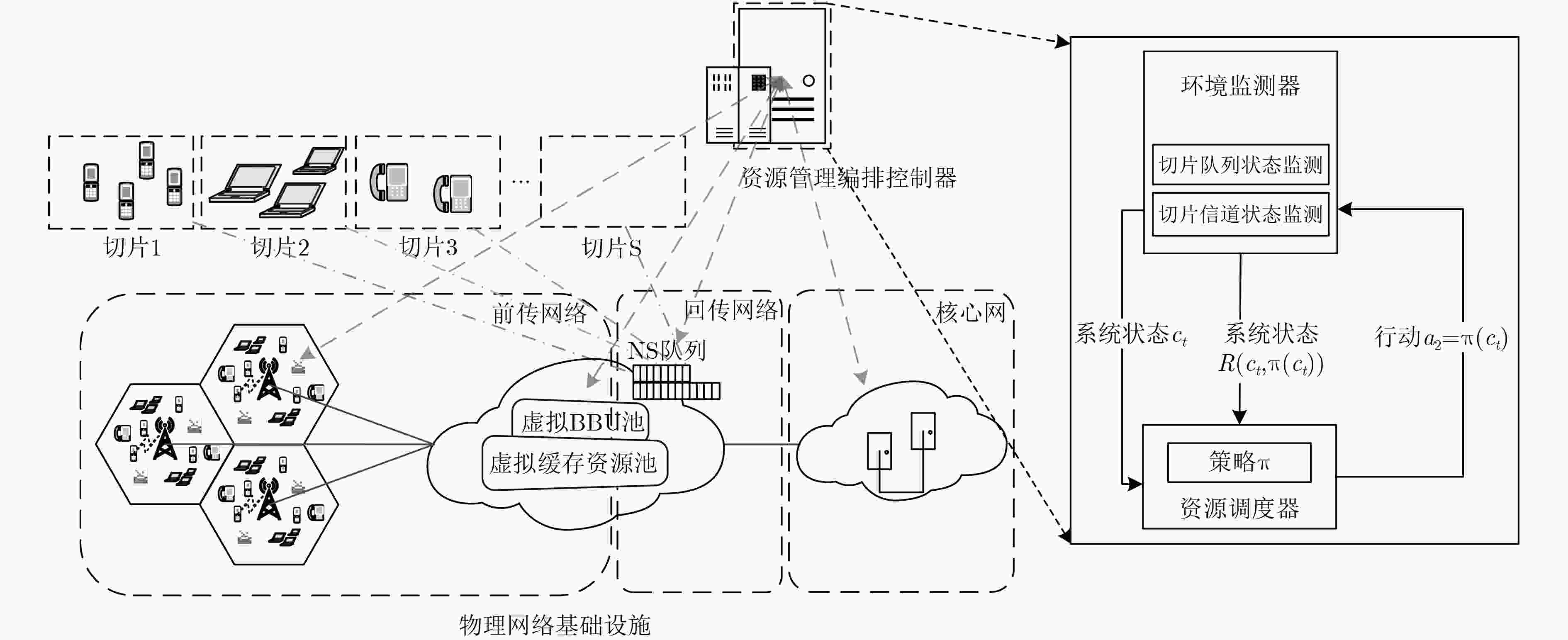

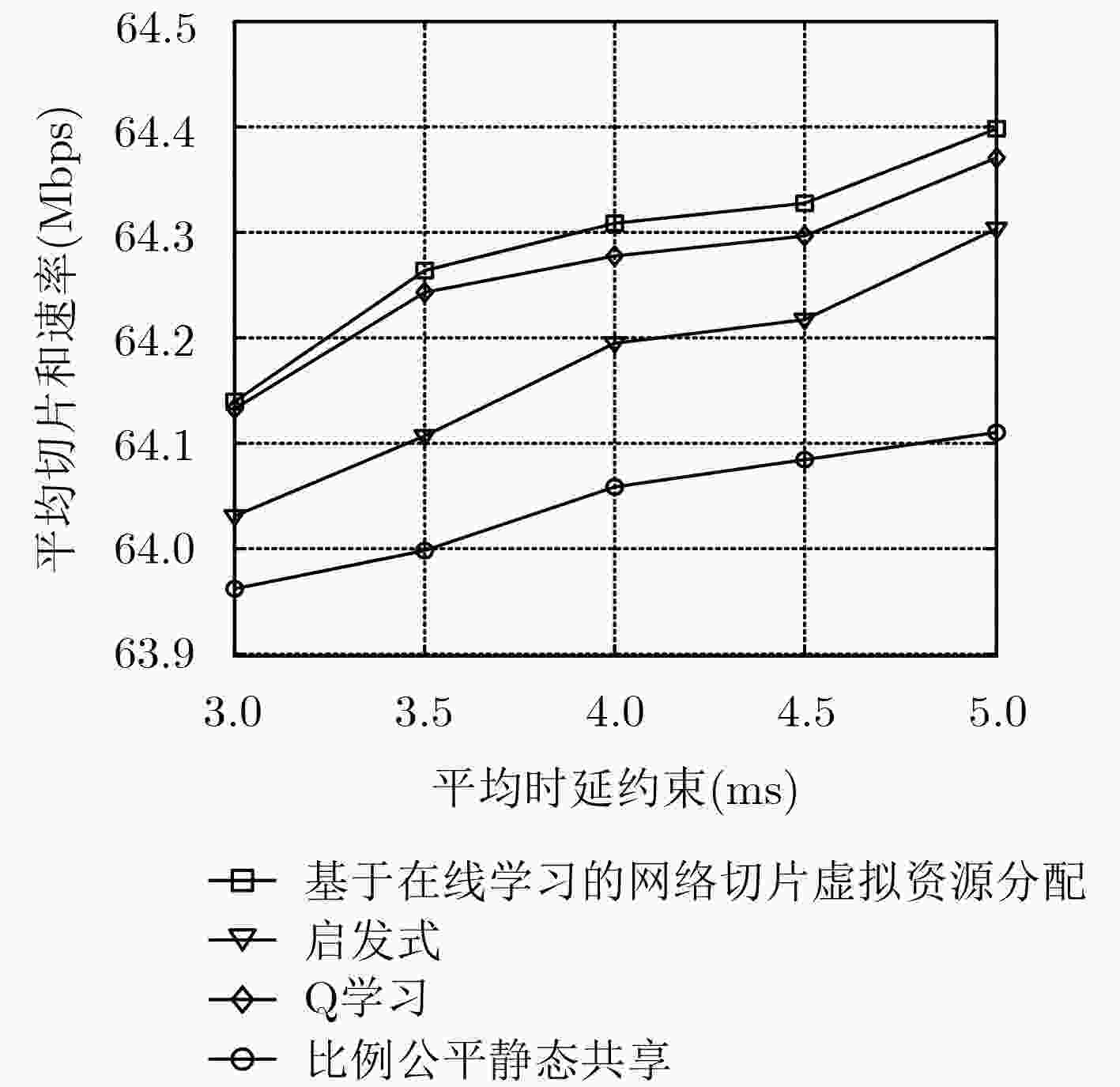

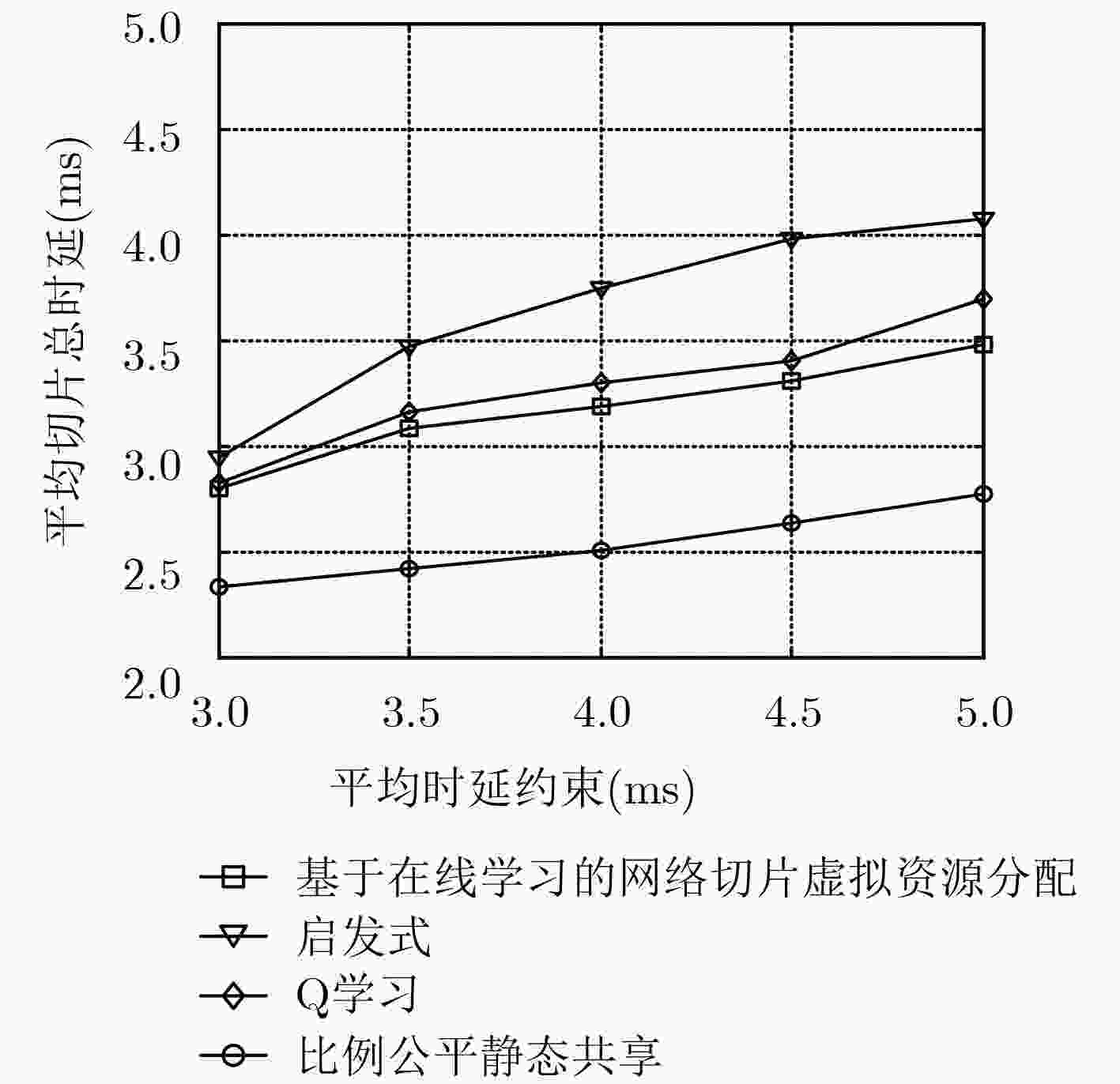

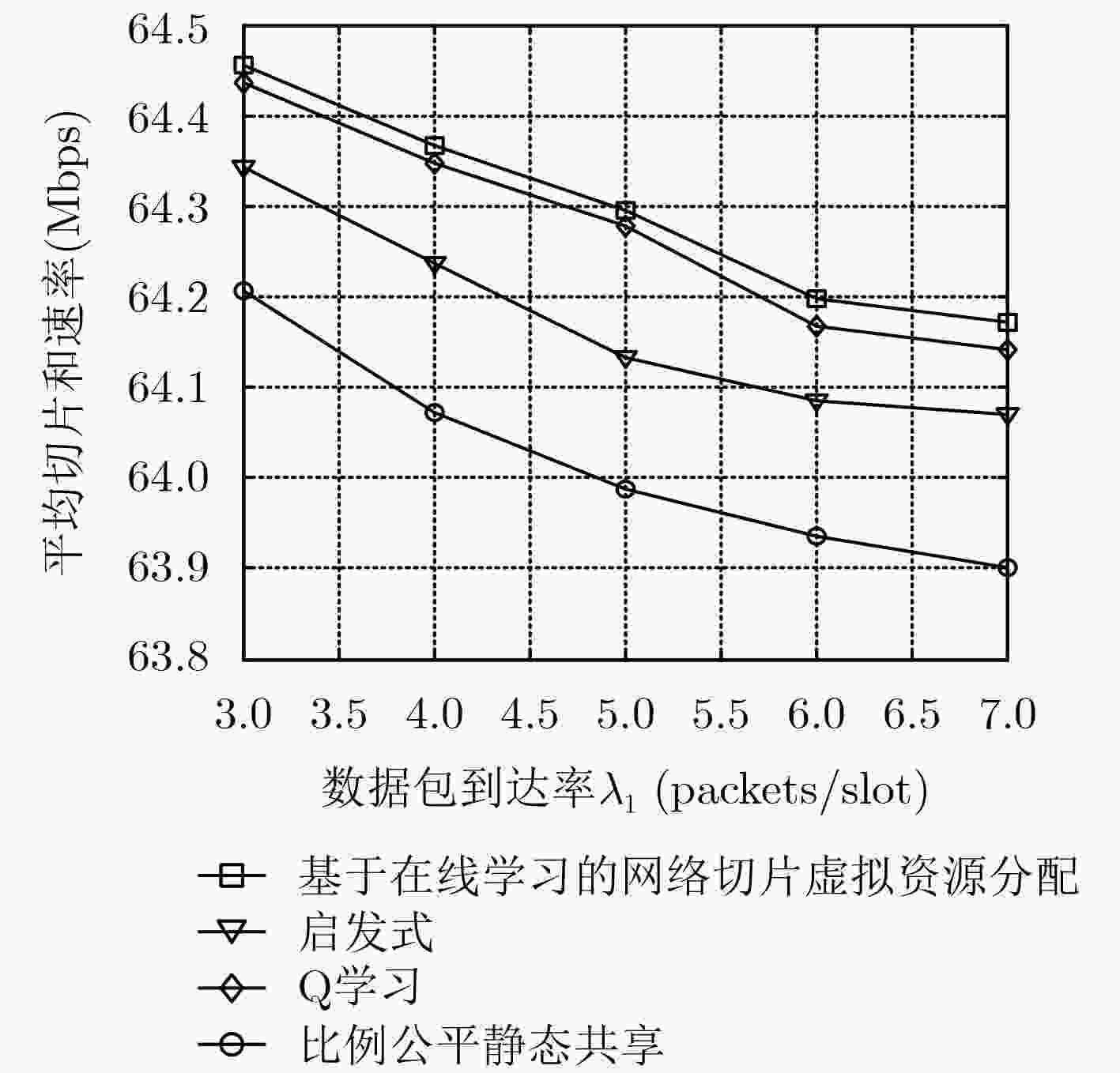

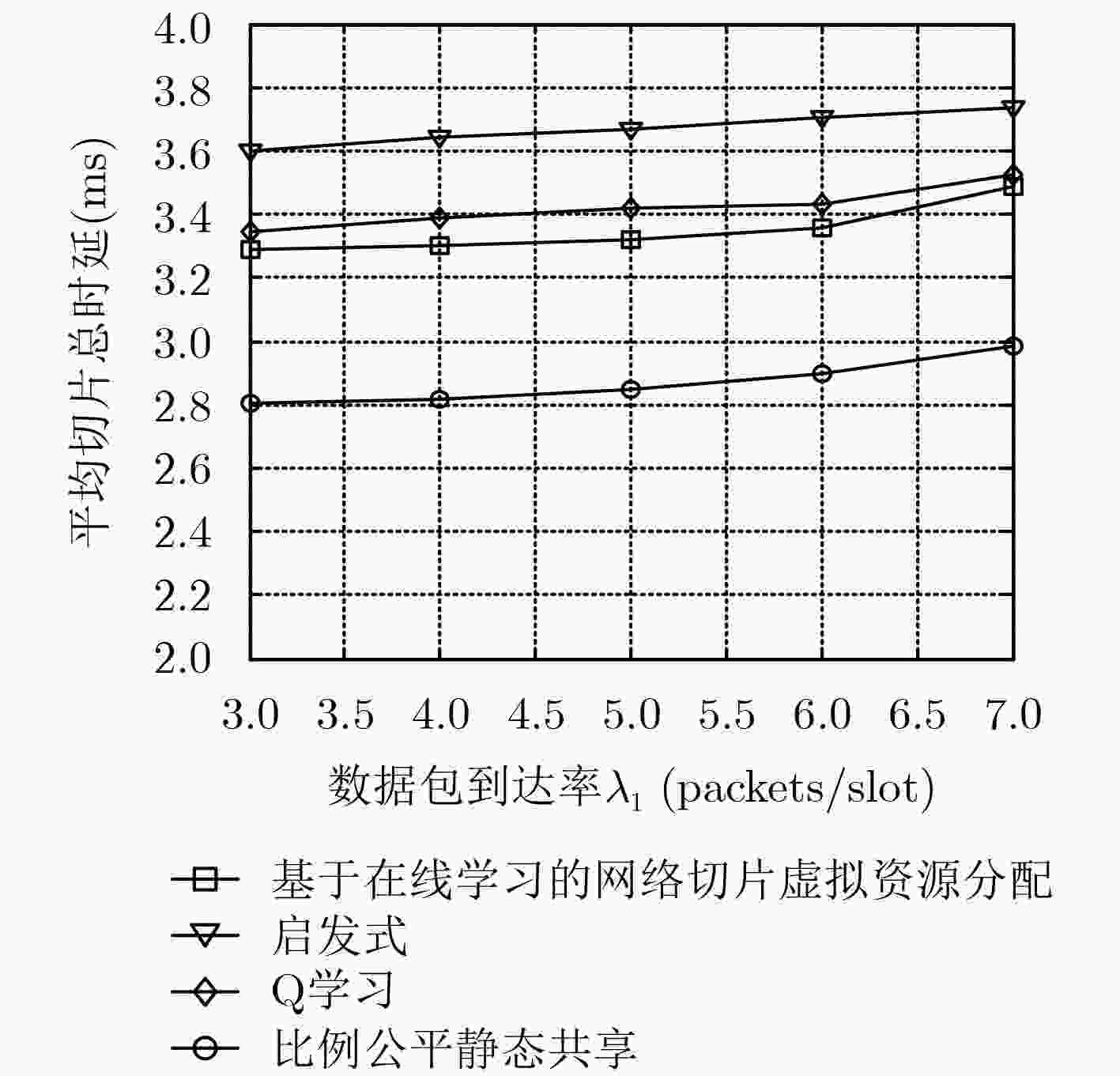

摘要: 针对现有研究中缺乏云无线接入网络(C-RAN)场景下对网络切片高效的动态资源分配方案的问题,该文提出一种虚拟化C-RAN网络下的网络切片虚拟资源分配算法。首先基于受限马尔可夫决策过程(CMDP)理论建立了一个虚拟化C-RAN场景下的随机优化模型,该模型以最大化平均切片和速率为目标,同时受限于各切片平均时延约束以及网络平均回传链路带宽消耗约束。其次,为了克服CMDP优化问题中难以准确掌握系统状态转移概率的问题,引入决策后状态(PDS)的概念,将其作为一种“中间状态”描述系统在已知动态发生后,但在未知动态发生前所处的状态,其包含了所有与系统状态转移有关的已知信息。最后,提出一种基于在线学习的网络切片虚拟资源分配算法,其在每个离散的资源调度时隙内会根据当前系统状态为每个网络切片分配合适的资源块数量以及缓存资源。仿真结果表明,该算法能有效地满足各切片的服务质量(QoS)需求,降低网络回传链路带宽消耗的压力并同时提升系统吞吐量。Abstract: To solve the problem of lacking efficient and dynamic resource allocation schemes for 5G Network Slicing (NS) in Cloud Radio Access Network (C-RAN) scenario in the existing researches, a virtual resource allocation algorithm for NS in virtualized C-RAN is proposed. Firstly, a stochastic optimization model in virtualized C-RAN network is established based on the Constrained Markov Decision Process (CMDP) theory, which maximizes the average sum rates of all slices as its objective, and is subject to the average delay constraint for each slice as well as the average network backhaul link bandwidth consumption constraint in the meantime. Secondly, in order to overcome the issue of having difficulties in acquiring the accurate transition probabilities of the system states in the proposed CMDP optimization problem, the concept of Post-Decision State (PDS) as an " intermediate state” is introduced, which is used to describe the state of the system after the known dynamics, but before the unknown dynamics occur, and it incorporates all of the known information about the system state transition. Finally, an online learning based virtual resource allocation algorithm is presented for NS in virtualized C-RAN, where in each discrete resource scheduling slot, it will allocate appropriate Resource Blocks (RBs) and caching resource for each network slice according to the observed current system state. The simulation results reveal that the proposed algorithm can effectively satisfy the Quality of Service (QoS) demand of each individual network slice, reduce the pressure of backhaul link on bandwidth consumption and improve the system throughput.

-

表 1 虚拟化C-RAN网络下基于在线学习的网络切片虚拟资源分配算法

输入 系统状态空间$C$,动作空间$A$,拉格朗日回报函数

$g({c_t}, {\text{π}} ({c_t}))$,有限信道状态集合${\text{H}}$。初始化:初始化决策后状态的状态值函数${\tilde V_0}(\tilde c) \in R, \forall \tilde c \in C\,$,令

$t \leftarrow 0$, ${c_t} \leftarrow c \in C\,$。学习阶段:

(1) 求解

${a_t} = \mathop {\arg \min }\limits_{a \in A} \left\{ {g({c_t}, a) + \gamma {{\tilde V}_t}({S^{M, a}}({c_t}, a))} \right\}$; (27)(2) 观察PDS状态${\tilde c_t}$和下一时隙状态${c_{t + 1}}$:${\tilde c_t} = {S^{M, a}}({c_t}, {a_t})$,

${c_{t + 1}} = {S^{M, W}}({\tilde c_t}, {{\text{A}}_t}, {{\text{H}}_{t + 1}})$;(3) 计算${c_{t + 1}}$的状态值函数:

${V_t}({c_{t + 1}}) = \mathop {\min }\limits_{a \in A} \left\{ {g({c_{t + 1}}, a) + \gamma {{\tilde V}_t}({S^{M, a}}({c_{t + 1}}, a))} \right\}$; (28)(4) 更新${\tilde V_{t + 1}}({\tilde c_t})$: ${\tilde V_{t + 1}}({\tilde c_t}) = (1 - {\alpha _t}){\tilde V_t}({\tilde c_t}) + {\alpha _t}{V_t}({c_{t + 1}})$; (29) (5) 利用随机次梯度法更新拉格朗日乘子${\text{β}} :{\beta _i} \ge 0$。 输出 最优策略${\text{π}} _{{\rm{PDS}}}^ * $。 表 2 仿真参数

仿真参数 值 远端射频头(RRH)最大发射功率 20 dBm 各切片最大队列长度${Q_{s, \max }}$ 20 packets 噪声功率谱密度 –174 dBm/Hz 数据包大小$L$ 4 kbit/packet 路径损耗模型 104.5+20lg(d) (d[km]) 时隙长度$\tau $ 1 ms -

HOSSAIN E and HASAN M. 5G cellular: Key enabling technologies and research challenges[J]. IEEE Instrumentation & Measurement Magazine, 2015, 18(3): 11–21. doi: 10.1109/MIM.2015.7108393 CHECKO A, CHRISTIANSEN H L, YAN Ying, et al. Cloud RAN for mobile networks-A technology overview[J]. IEEE Communications Surveys & Tutorials, 2015, 17(1): 405–426. doi: 10.1109/COMST.2014.2355255 NIU Binglai, ZHOU Yong, SHAH-MANSOURI H, et al. A dynamic resource sharing mechanism for cloud radio access networks[J]. IEEE Transactions on Wireless Communications, 2016, 15(12): 8325–8338. doi: 10.1109/TWC.2016.2613896 KALIL M, Al-DWEIK A, SHARKH M F A, et al. A framework for joint wireless network virtualization and cloud radio access networks for next generation wireless networks[J]. IEEE Access, 2017, 5: 20814–20827. doi: 10.1109/ACCESS.2017.2746666 BERTSEKAS D and GALLAGER R. Data Networks[M]. Englewood Cliffs: Prentice-Hall, 1991, 152–162. YANG Jian, ZHANG Shuben, WU Xiaomin, et al. Online learning-based server provisioning for electricity cost reduction in data center[J]. IEEE Transactions on Control Systems Technology, 2017, 25(3): 1044–1051. doi: 10.1109/TCST.2016.2575801 KALIL M, SHAMI A, and YE Yinghua. Wireless resources virtualization in LTE systems[C]. Proceedings of 2014 IEEE Conference on Computer Communications Workshops, Toronto, Canada, 2014: 363–368. doi: 10.1109/INFCOMW.2014.6849259. POWELL W B. Approximate Dynamic Programming: Solving the Curses of Dimensionality[M]. Hoboken, USA: Wiley, 2011, 289–388. LAKSHMINARAYANAN C and BHATNAGAR S. Approximate dynamic programming with (min, +) linear function approximation for Markov decision processes[J]. arXiv preprint arXiv: 1403.4179, 2014. LI Rongpeng, ZHAO Zhifeng, CHEN Xianfu, et al. TACT: A transfer actor-critic learning framework for energy saving in cellular radio access networks[J]. IEEE Transactions on Wireless Communications, 2014, 13(4): 2000–2011. doi: 10.1109/TWC.2014.022014.130840 HE Xiaoming, WANG Kun, HUANG Huawei, et al. Green resource allocation based on deep reinforcement learning in content-centric IoT[J]. IEEE Transactions on Emerging Topics in Computing, 2019. doi: 10.1109/TETC.2018.2805718 -

下载:

下载:

下载:

下载: