Visual Tracking Method Based on Reverse Sparse Representation under Illumination Variation


摘要:

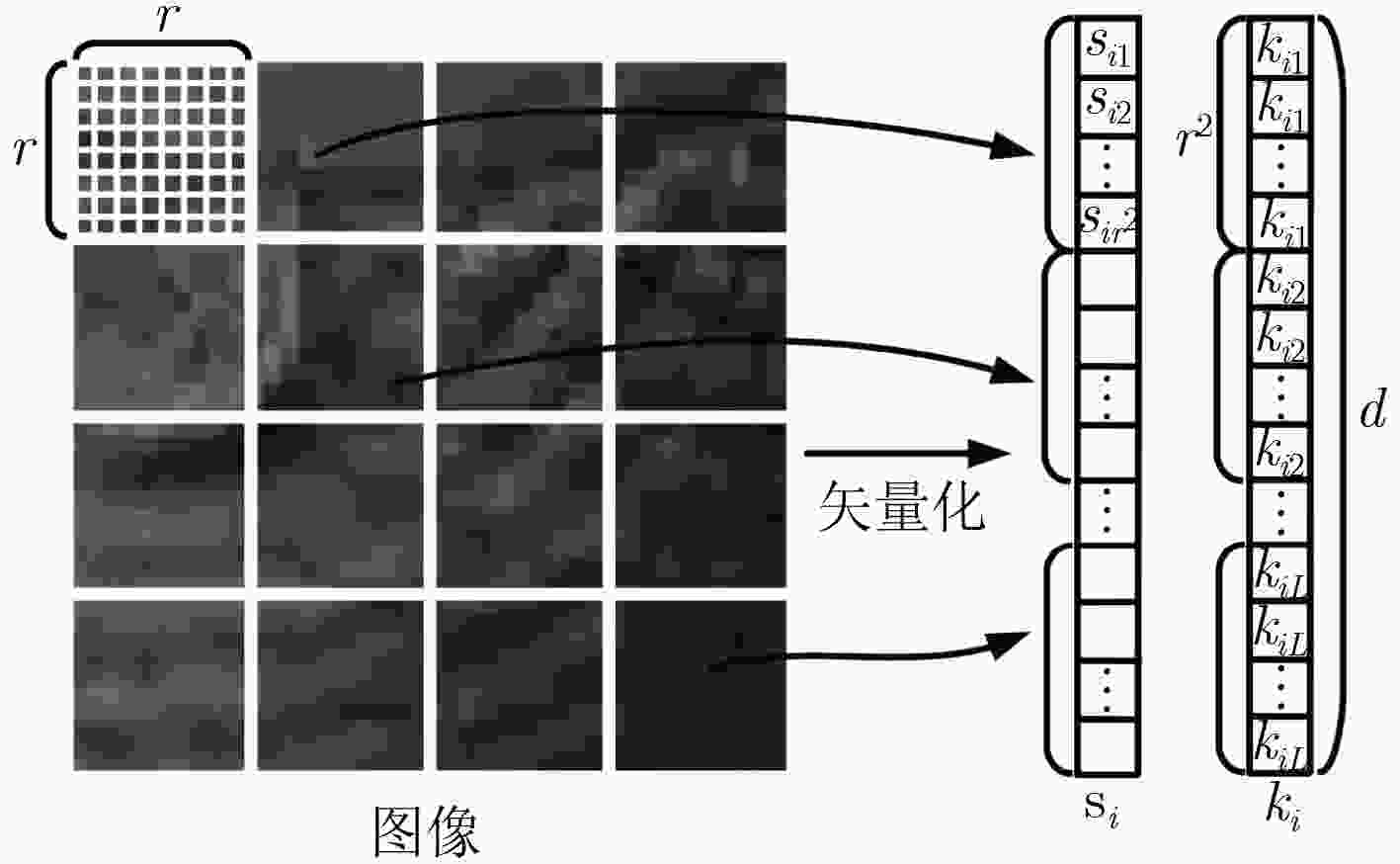

针对光照变化引起目标跟踪性能显著下降的问题,该文提出一种联合优化光照补偿和多任务逆向稀疏表示的视觉跟踪方法。首先基于模板与候选目标的平均亮度差异对模板实施光照补偿,并利用候选目标逆向稀疏表示光照补偿后的模板。而后将所得多个关于单模板的优化问题转化为一个关于多模板的多任务优化问题,并利用交替迭代方法求解此多任务优化问题以获得最优光照补偿系数矩阵以及稀疏编码矩阵。最后利用所得稀疏编码矩阵快速剔除无关候选目标,并采用局部结构化评估方法实现目标精确跟踪。仿真结果表明,与现有主流算法相比,剧烈光照变化情况下,所提方法可显著改善目标跟踪精度及稳健性。

Abstract:Focusing on the issue of heavy decrease of object tracking performance induced by illumination variation, a visual tracking method via jointly optimizing the illumination compensation and multi-task reverse sparse representation is proposed. The template illumination is firstly compensated by the developed algorithm, which is based on the average brightness difference between templates and candidates. In what follows, the candidate set is exploited to sparsely represent the templates after illumination compensation. Subsequently, the obtained multiple optimization issues associated with single template can be recast as a multi-task optimization one related to multiple templates, which can be solved by the alternative iteration approach to acquire the optimal illumination compensation coefficient and the sparse coding matrix. Finally, the obtained sparse coding matrix can be exploited to quickly eliminate the unrelated candidates, afterwards the local structured evaluation method is employed to achieve the accurate object tracking. As compared to the existing state-of-the-art algorithms, simulation results show that the proposed algorithm can improve the accuracy and robustness of the object tracking significantly in the presence of heavy illumination variation.
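The alternating scheme described in the abstract and summarized in Table 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation, because the paper's Eqs. (2) to (13) are not reproduced in this excerpt. The sketch makes three assumptions:

- Each template $t_i$ receives an additive brightness offset $k_i$, initialized as the mean candidate brightness minus the mean template brightness.
- The multi-task coupling is an $\ell_{2,1}$ row-sparsity penalty on $C$, as in multi-task sparse learning. A candidate whose row of $C$ is zero is then used by no template and can be discarded.
- The APG step is a standard FISTA-type accelerated proximal gradient loop.

Under these assumptions the sketch alternates on $\min_{k,C} \tfrac12\|T + \mathbf{1}k^\top - YC\|_F^2 + \lambda\|C\|_{2,1}$. The function names `joint_optimize`, `apg_sparse_coding`, `group_soft_threshold` and `screen_candidates`, and the parameters `lam`, `n_outer` and `tol`, are hypothetical.

```python
import numpy as np


def group_soft_threshold(C, tau):
    """Proximal operator of tau * ||C||_{2,1}: shrink each row of C toward zero."""
    norms = np.linalg.norm(C, axis=1, keepdims=True)
    scale = np.maximum(0.0, 1.0 - tau / np.maximum(norms, 1e-12))
    return C * scale


def apg_sparse_coding(Y, T_hat, lam, C0, n_iter=200):
    """FISTA-style APG for min_C 0.5*||T_hat - Y C||_F^2 + lam*||C||_{2,1}."""
    L = np.linalg.norm(Y, 2) ** 2  # Lipschitz constant of the data term's gradient
    C, Z, t = C0.copy(), C0.copy(), 1.0
    for _ in range(n_iter):
        grad = Y.T @ (Y @ Z - T_hat)  # gradient of the data term at the extrapolated point Z
        C_next = group_soft_threshold(Z - grad / L, lam / L)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        Z = C_next + ((t - 1.0) / t_next) * (C_next - C)  # momentum step
        C, t = C_next, t_next
    return C


def joint_optimize(T, Y, lam=0.05, n_outer=10, tol=1e-6):
    """Alternate between per-template brightness offsets k and the coding matrix C.

    T: d x m matrix of templates (one per column); Y: d x n matrix of candidates.
    Returns k (length m) and C (n x m), where column i of C codes template i.
    """
    m, n = T.shape[1], Y.shape[1]
    # Assumed initialization: mean candidate brightness minus each template's mean brightness.
    k = np.full(m, Y.mean()) - T.mean(axis=0)
    C = np.zeros((n, m))
    for _ in range(n_outer):
        T_hat = T + k  # broadcasting adds k[i] to every pixel of template column i
        C = apg_sparse_coding(Y, T_hat, lam, C)
        # Least-squares offset for fixed C: k_i = mean(Y c_i - t_i).
        k_new = (Y @ C - T).mean(axis=0)
        converged = np.max(np.abs(k_new - k)) < tol
        k = k_new
        if converged:
            break
    return k, C


def screen_candidates(C, eps=1e-8):
    """Indices of candidates whose row of C is non-zero, i.e. used by some template."""
    return np.flatnonzero(np.linalg.norm(C, axis=1) > eps)


if __name__ == "__main__":
    # Toy data: three zero-mean, orthonormal candidate columns.
    Y = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]) / 2.0
    T = Y[:, [0, 2]] - 0.3  # two templates: darkened copies of candidates 0 and 2
    k, C = joint_optimize(T, Y)
    print("offsets:", k)
    print("kept candidates:", screen_candidates(C))
```

On this toy input the sketch returns offsets of 0.3 and keeps only candidates 0 and 2. Because the candidates are zero-mean, the offset update and the coding update decouple, which keeps the example easy to check by hand. Real image patches would not have this property.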


Key words:

- Visual tracking
- Illumination compensation
- Sparse representation
- Particle filter


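Table 1 Joint optimization algorithm for illumination compensation and multi-task reverse sparse representation

Input: ${T}$, ${Y}$, $\beta$ and $\tilde \lambda$

(1) Initialize the sparse coding matrix ${C}$ according to Eq. (8);
(2) Obtain ${K}$ from Eqs. (12), (2), (4) and (6);
(3) Solve problem (13) with the APG (accelerated proximal gradient) method to obtain ${C}$;
(4) Repeat steps (2) and (3) until the convergence condition is satisfied.

Output: ${K}$ and ${C}$

Table 2 Video sequences and their main challenges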

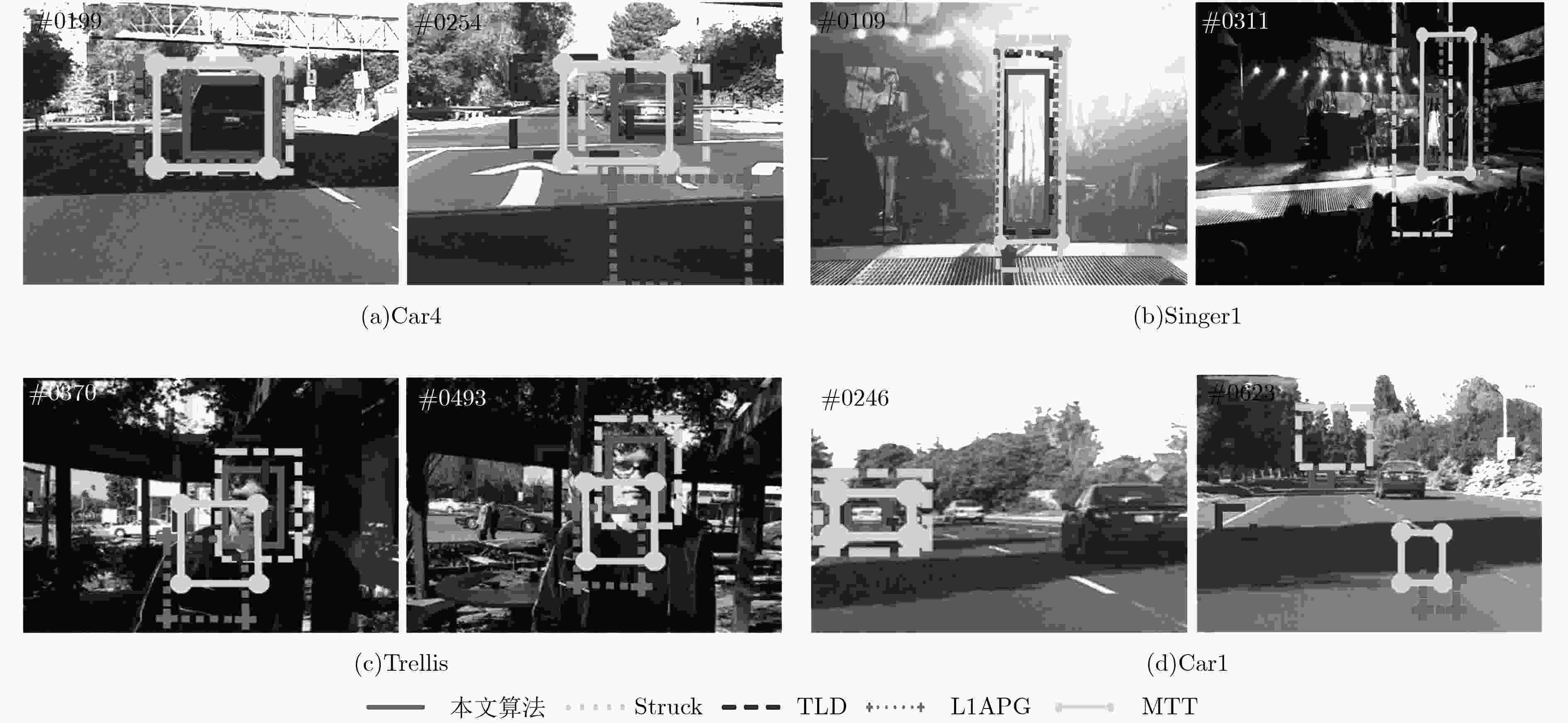

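| Test sequence | Main challenges |
|---|---|
| Car4 | Illumination variation, scale variation |
| Singer1 | Illumination variation, scale variation, occlusion, etc. |
| Trellis | Illumination variation, background clutter, scale variation, etc. |
| Car1 | Illumination variation, motion blur, scale variation, etc. |

Table 3 Average center location error and average overlap rate of different tracking methods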

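CLE: average center location error (pixels); Overlap: average tracking overlap rate.

| Test sequence | CLE: Proposed | CLE: TLD | CLE: Struck | CLE: L1APG | CLE: MTT | Overlap: Proposed | Overlap: TLD | Overlap: Struck | Overlap: L1APG | Overlap: MTT |
|---|---|---|---|---|---|---|---|---|---|---|
| Car4 | 3.47 | 12.84 | 8.69 | 77.00 | 22.34 | 0.84 | 0.63 | 0.49 | 0.25 | 0.45 |
| Singer1 | 2.88 | 7.99 | 14.51 | 53.35 | 36.17 | 0.86 | 0.73 | 0.36 | 0.28 | 0.34 |
| Trellis | 6.82 | 31.06 | 6.92 | 62.20 | 68.80 | 0.65 | 0.48 | 0.61 | 0.20 | 0.21 |
| Car1 | 1.18 | 85.15 | 51.73 | 93.93 | 101.81 | 0.83 | 0.26 | 0.11 | 0.17 | 0.15 |
| Average | 3.59 | 24.26 | 20.46 | 71.62 | 57.28 | 0.80 | 0.53 | 0.40 | 0.23 | 0.29 |

Table 4 Effect of the fast candidate screening scheme on running speed (FPS)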

| Test sequence | Car4 | Singer1 | Trellis | Car1 |
|---|---|---|---|---|
| Without screening (FPS) | 4.1 | 4.6 | 3.1 | 5.5 |
| With screening (FPS) | 10.5 | 8.7 | 10.4 | 8.4 |
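This excerpt does not define the metrics used in Table 3. The sketch below assumes the definitions commonly used with the object tracking benchmark of Wu et al. (listed in the references):

- **Center location error** is the Euclidean distance, in pixels, between the centers of the tracked box and the ground-truth box.
- **Overlap rate** is the intersection over union of the two boxes.

Boxes are assumed to be `(x, y, w, h)` tuples, and the function and variable names are hypothetical.

The script also recomputes the Table 3 column means and the Table 4 speed-ups. By these figures, candidate screening speeds up tracking by between about 1.5x and 3.4x. The column means reproduce the reported averages with one exception. The four TLD center location errors average 34.26 pixels rather than the listed 24.26, so either that average or one of the TLD per-sequence entries likely contains a typographical error.

```python
import math
from statistics import mean


def center_location_error(box_a, box_b):
    """Euclidean distance (pixels) between the centers of two (x, y, w, h) boxes."""
    ax, ay = box_a[0] + box_a[2] / 2, box_a[1] + box_a[3] / 2
    bx, by = box_b[0] + box_b[2] / 2, box_b[1] + box_b[3] / 2
    return math.hypot(ax - bx, ay - by)


def overlap_rate(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(box_a[0] + box_a[2], box_b[0] + box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[1] + box_a[3], box_b[1] + box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


# Per-sequence average center location errors from Table 3 (Car4, Singer1, Trellis, Car1).
CLE = {
    "Proposed": [3.47, 2.88, 6.82, 1.18],
    "TLD": [12.84, 7.99, 31.06, 85.15],
    "Struck": [8.69, 14.51, 6.92, 51.73],
    "L1APG": [77.00, 53.35, 62.20, 93.93],
    "MTT": [22.34, 36.17, 68.80, 101.81],
}
# Running speed (FPS) from Table 4, without and with candidate screening.
FPS_WITHOUT = {"Car4": 4.1, "Singer1": 4.6, "Trellis": 3.1, "Car1": 5.5}
FPS_WITH = {"Car4": 10.5, "Singer1": 8.7, "Trellis": 10.4, "Car1": 8.4}

if __name__ == "__main__":
    for name, errors in CLE.items():
        print(f"{name}: mean CLE = {mean(errors):.2f} px")
    for seq in FPS_WITHOUT:
        print(f"{seq}: speed-up x{FPS_WITH[seq] / FPS_WITHOUT[seq]:.2f}")
```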

References:

- FRADI H, LUVISON B, and PHAM Q C. Crowd behavior analysis using local mid-level visual descriptors[J]. IEEE Transactions on Circuits & Systems for Video Technology, 2017, 27(3): 589–602. doi: 10.1109/TCSVT.2016.2615443
- YU Gang, LI Chao, and SHANG Zeyuan. Video monitoring method, video monitoring system and computer program product[P]. USA Patent, 9792505, 2017.
- UENG S K and CHEN Guanzhi. Vision based multi-user human computer interaction[J]. Multimedia Tools & Applications, 2016, 75(16): 10059–10076. doi: 10.1007/s11042-015-3061-z
- WU Yi, LIM J, and YANG Minghsuan. Object tracking benchmark[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2015, 37(9): 1834–1848. doi: 10.1109/TPAMI.2014.2388226
- PAN Zheng, LIU Shuai, and FU Weina. A review of visual moving target tracking[J]. Multimedia Tools & Applications, 2017, 76(16): 16989–17018. doi: 10.1007/s11042-016-3647-0
- XUE Mogen, LIU Wenzhuo, YUAN Guanglin, et al. Fast robust visual tracking based on coding transfer[J]. Journal of Electronics & Information Technology, 2017, 39(7): 1571–1577. doi: 10.11999/JEIT160966
- YANG Feng and ZHANG Wanying. Multiple model Bernoulli particle filter for maneuvering target tracking[J]. Journal of Electronics & Information Technology, 2017, 39(3): 634–639. doi: 10.11999/JEIT160467
- BAIG M Z and GOKHALE A V. Object tracking using mean shift algorithm with illumination invariance[C]. Fifth International Conference on Communication Systems and Network Technologies, Gwalior, India, 2015: 550–553.
- NAYAK A and CHAUDHURI S. Automatic illumination correction for scene enhancement and object tracking[J]. Image & Vision Computing, 2006, 24(9): 949–959. doi: 10.1016/j.imavis.2006.02.017
- SILVEIRA G and MALIS E. Real-time visual tracking under arbitrary illumination changes[C]. IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, USA, 2007: 1–6.
- WANG Yuru, TANG Xianglong, CUI Qing, et al. Dynamic appearance model for particle filter based visual tracking[J]. Pattern Recognition, 2012, 45(12): 4510–4523. doi: 10.1016/j.patcog.2012.05.010
- BAO Chenglong, WU Yi, LING Haibin, et al. Real time robust L1 tracker using accelerated proximal gradient approach[C]. IEEE Conference on Computer Vision and Pattern Recognition, Providence, USA, 2012: 1830–1837.
- MA Bo, SHEN Jianbing, LIU Yangbiao, et al. Visual tracking using strong classifier and structural local sparse descriptors[J]. IEEE Transactions on Multimedia, 2015, 17(10): 1818–1828. doi: 10.1109/TMM.2015.2463221
- ZHUANG Bohan, LU Huchuan, XIAO Ziyang, et al. Visual tracking via discriminative sparse similarity map[J]. IEEE Transactions on Image Processing, 2014, 23(4): 1872–1881. doi: 10.1109/TIP.2014.2308414
- JIA Xu, LU Huchuan, and YANG Minghsuan. Visual tracking via coarse and fine structural local sparse appearance models[J]. IEEE Transactions on Image Processing, 2016, 25(10): 4555–4564. doi: 10.1109/TIP.2016.2592701
- SUI Yao and ZHANG Li. Robust tracking via locally structured representation[J]. International Journal of Computer Vision, 2016, 119(2): 110–144. doi: 10.1007/s11263-016-0881-x
- ZHANG Tianzhu, GHANEM B, LIU Si, et al. Robust visual tracking via multi-task sparse learning[C]. IEEE Conference on Computer Vision and Pattern Recognition, Providence, USA, 2012: 2042–2049.
- MA Bo, HUANG Lianghua, SHEN Jianbing, et al. Visual tracking under motion blur[J]. IEEE Transactions on Image Processing, 2016, 25(12): 5867–5876. doi: 10.1109/TIP.2016.2615812
- ROSS D A, LIM J, LIN R S, et al. Incremental learning for robust visual tracking[J]. International Journal of Computer Vision, 2008, 77(1): 125–141. doi: 10.1007/s11263-007-0075-7
- POLSON N and SOKOLOV V. Bayesian particle tracking of traffic flows[J]. IEEE Transactions on Intelligent Transportation Systems, 2018, 19(2): 345–356. doi: 10.1109/TITS.2017.2650947
- HE Zhenyu, YI Shuangyan, CHEUNG Y M, et al. Robust object tracking via key patch sparse representation[J]. IEEE Transactions on Cybernetics, 2017, 47(2): 354–364. doi: 10.1109/TCYB.2016.2514714
- ZHANG Kaihua, ZHANG Lei, and YANG Minghsuan. Real-time compressive tracking[C]. European Conference on Computer Vision, Florence, Italy, 2012: 864–877.
- KALAL Z, MATAS J, and MIKOLAJCZYK K. P-N learning: Bootstrapping binary classifiers by structural constraints[C]. IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, USA, 2010: 49–56.
- HARE S, SAFFARI A, and TORR P H S. Struck: Structured output tracking with kernels[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2016, 38(10): 2096–2109. doi: 10.1109/TPAMI.2015.2509974
