Night-vision Image Fusion Based on Intensity Transformation and Two-scale Decomposition



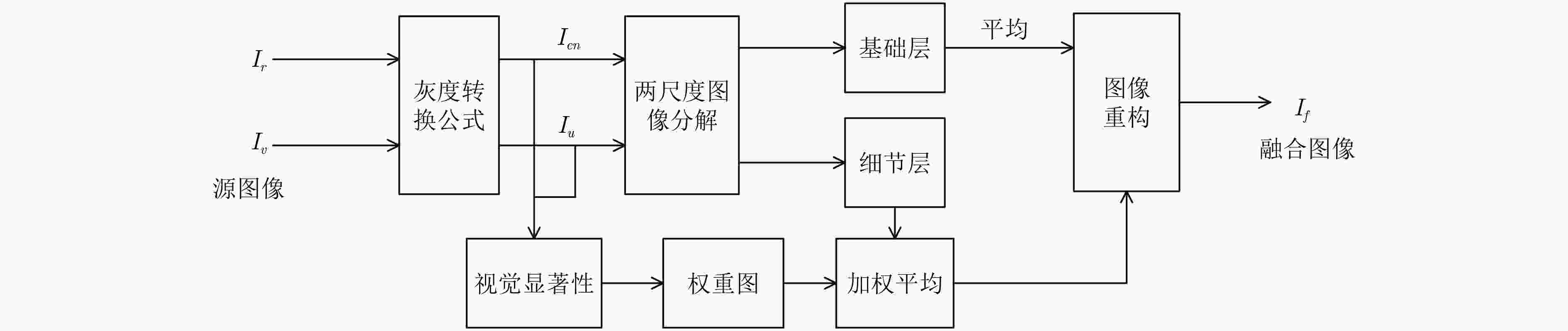

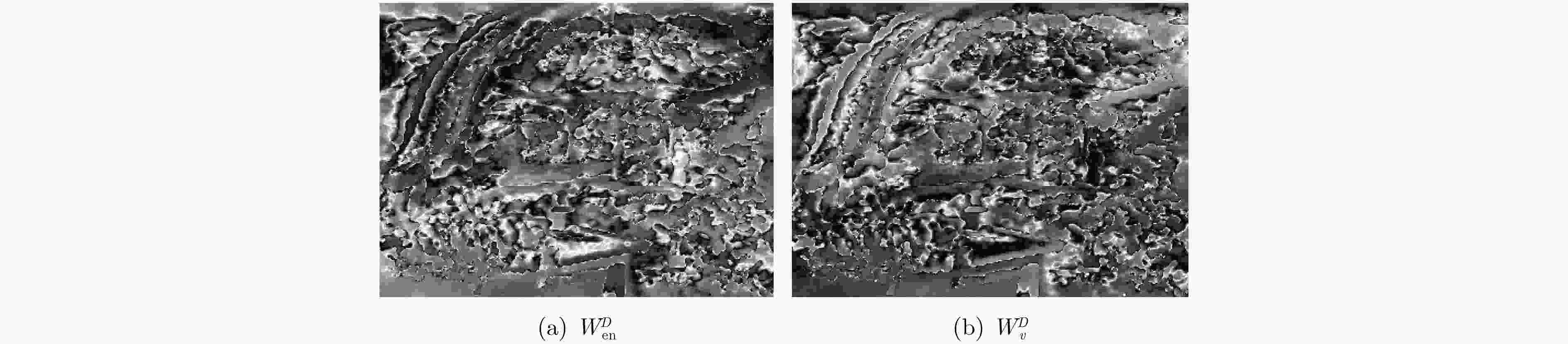

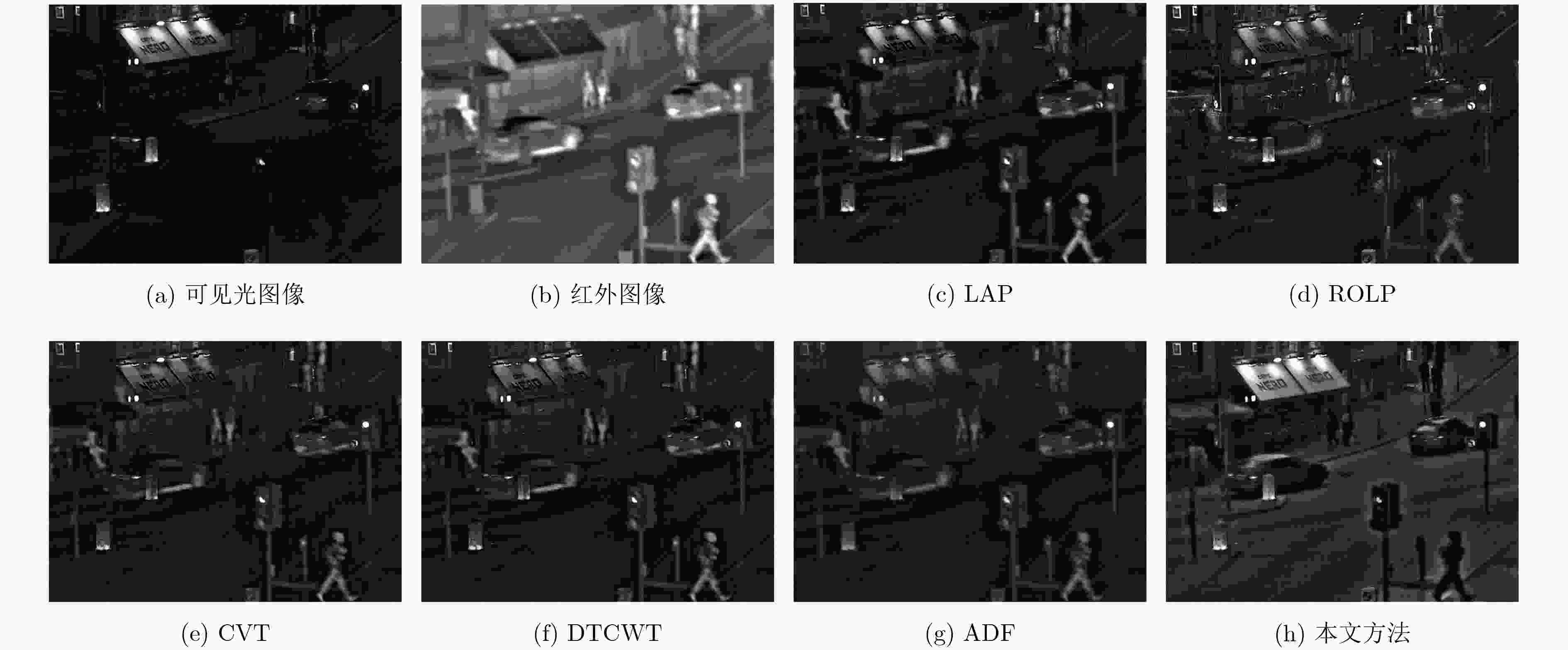

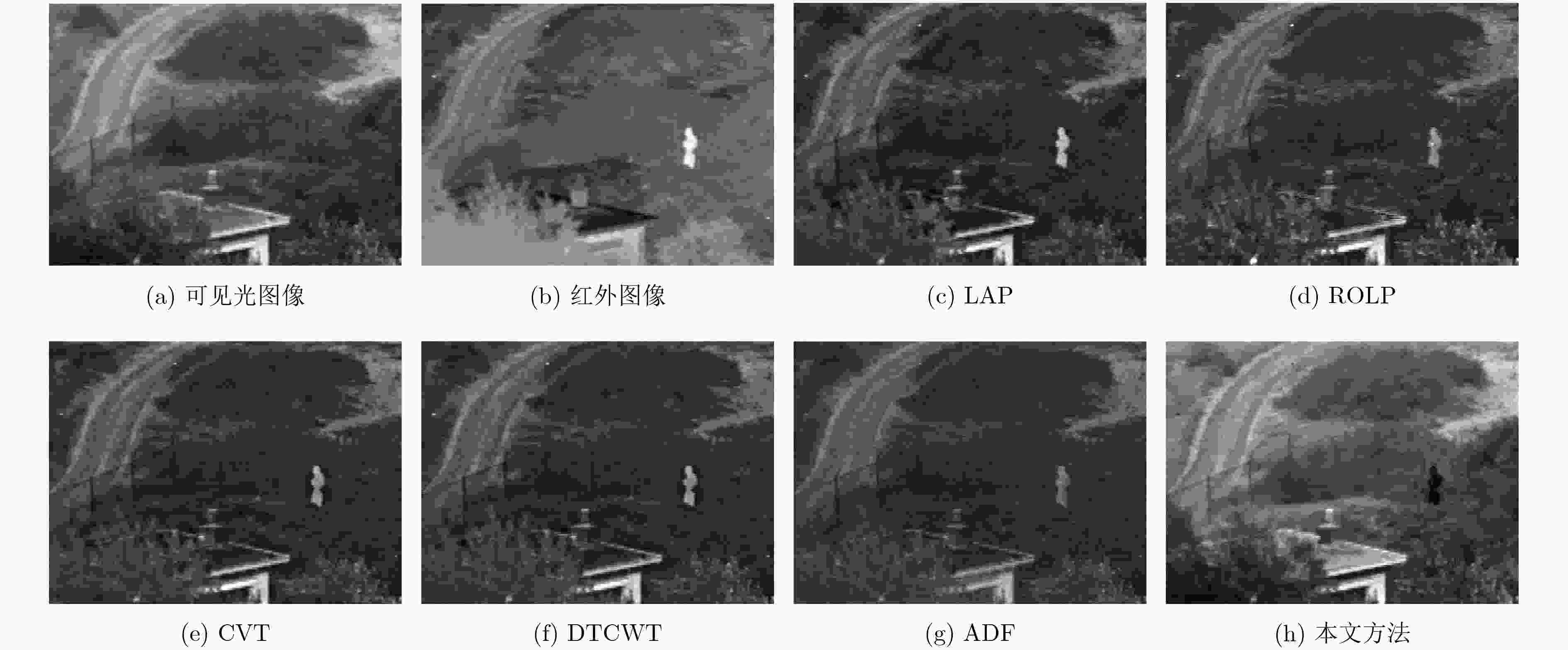

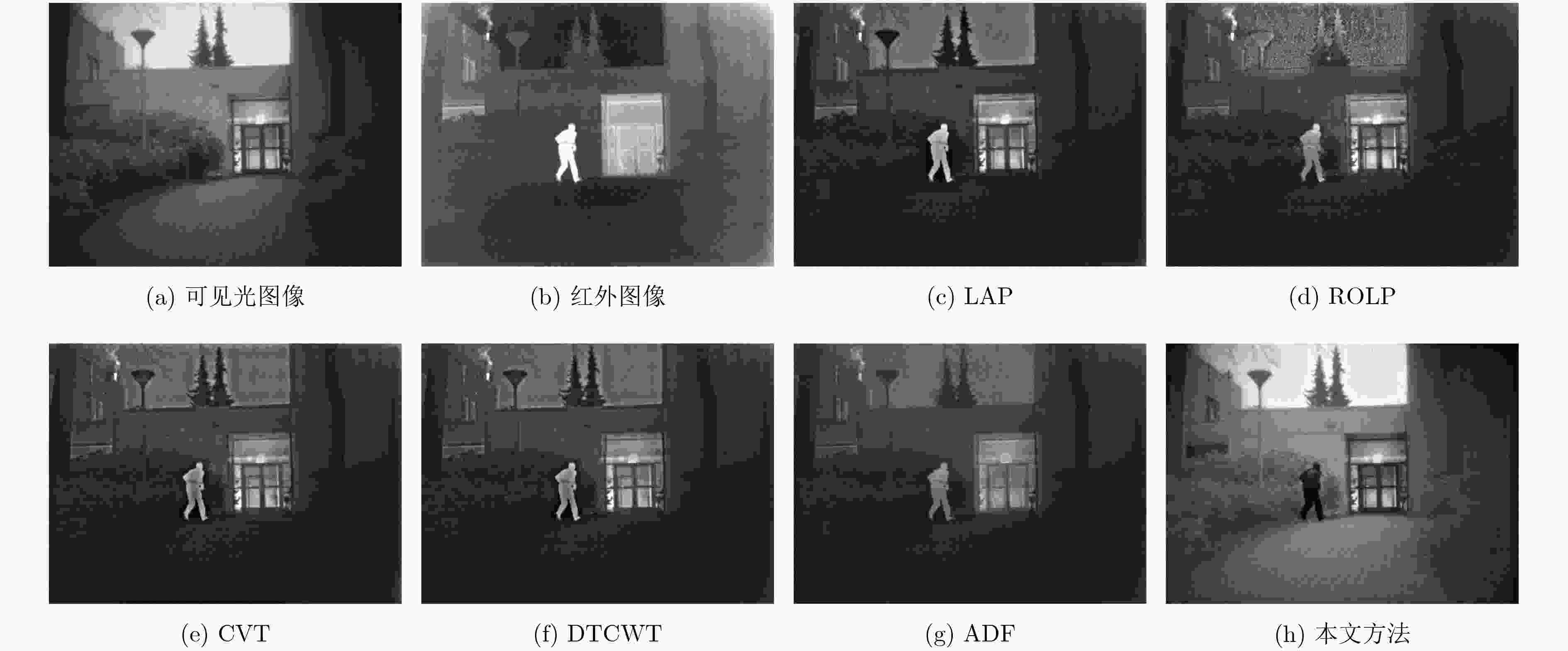

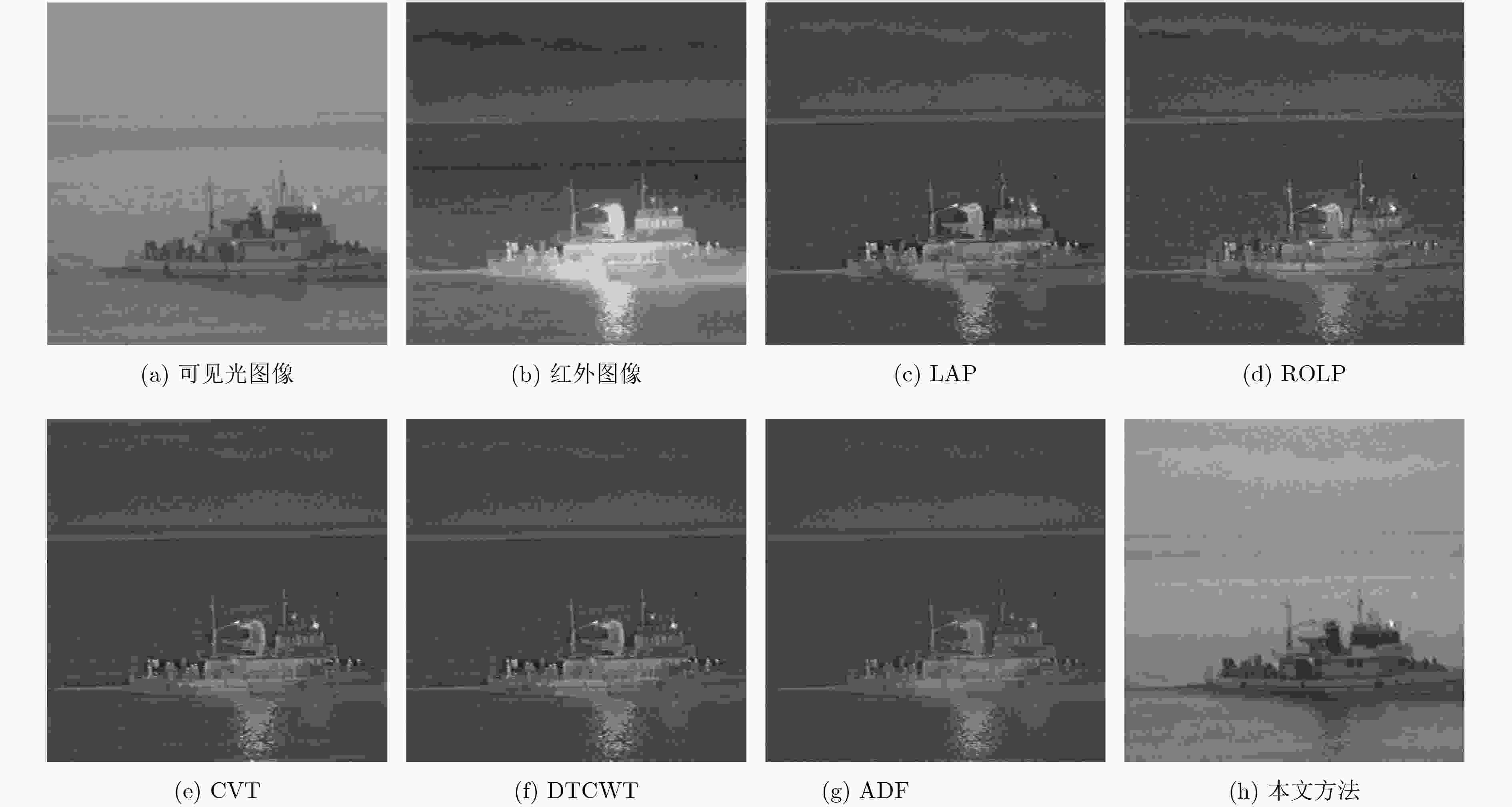


Abstract: To obtain night-vision fused images that are better suited to human perception, a night-vision image fusion algorithm based on intensity transformation and two-scale decomposition is proposed. Firstly, the pixel values of the infrared image are used as exponential factors in an intensity transformation of the visible image. This enhances the visible image and, at the same time, turns infrared-visible fusion into the fusion of homogeneous images. Secondly, the enhanced result and the original visible image are each decomposed into base and detail layers with a simple mean filter. Thirdly, the detail layers are fused using visual weight maps. Finally, the fused image is reconstructed by combining these results. Because the proposed method presents its result in the visible spectral band, the fused image is better suited to visual perception. Experimental results show that the proposed method outperforms five other methods in both visual quality and objective evaluation. In addition, its fusion time is less than 0.2 s, which meets real-time requirements. In the fused result, background details are clear and thermal targets are highlighted.
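The four steps above can be sketched in Python with NumPy. The abstract does not give several details:

- the exact intensity-transformation formula;
- the filter size;
- the definition of the visual weight maps;
- how the base layers are merged.

The sketch therefore fills each gap with a stand-in chosen purely for illustration:

- the mapping $V^{1-I}$, where $V$ and $I$ are the visible and infrared images normalized to [0, 1];
- the default `radius`;
- weights based on local detail activity;
- averaging of the base layers.

These stand-ins are hypothetical and are not the authors' method. The function names `box_filter`, `intensity_transform`, `two_scale` and `fuse` are also hypothetical.

```python
import numpy as np


def box_filter(img: np.ndarray, radius: int) -> np.ndarray:
    """Mean filter over a (2*radius+1) x (2*radius+1) window, computed with an
    integral image; borders are handled by replicating edge pixels."""
    k = 2 * radius + 1
    h, w = img.shape
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    return window_sum / (k * k)


def intensity_transform(vis: np.ndarray, ir: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL transform: raise each visible pixel to the power (1 - ir).

    Both inputs are assumed normalized to [0, 1]. Because vis <= 1, a smaller
    exponent gives a brighter pixel, so regions that are hot in the infrared
    image are brightened, while cold regions (ir = 0) are left unchanged.
    """
    return np.power(vis, 1.0 - ir)


def two_scale(img: np.ndarray, radius: int) -> tuple[np.ndarray, np.ndarray]:
    """Split an image into a base layer (mean-filtered) and a detail layer."""
    base = box_filter(img, radius)
    return base, img - base


def fuse(vis: np.ndarray, ir: np.ndarray, radius: int = 15,
         eps: float = 1e-12) -> np.ndarray:
    vis = np.clip(np.asarray(vis, dtype=np.float64), 0.0, 1.0)
    ir = np.clip(np.asarray(ir, dtype=np.float64), 0.0, 1.0)

    # Step 1: IR-driven intensity transformation of the visible image.
    enhanced = intensity_transform(vis, ir)

    # Step 2: two-scale decomposition of both visible-band images.
    base_vis, detail_vis = two_scale(vis, radius)
    base_enh, detail_enh = two_scale(enhanced, radius)

    # Step 3: HYPOTHETICAL visual weight maps: local mean of |detail| as an
    # activity measure, normalized so that the two weights sum to one.
    act_vis = box_filter(np.abs(detail_vis), radius)
    act_enh = box_filter(np.abs(detail_enh), radius)
    w_vis = act_vis / (act_vis + act_enh + eps)
    fused_detail = w_vis * detail_vis + (1.0 - w_vis) * detail_enh

    # ASSUMPTION: base layers are averaged (the abstract does not specify this).
    fused_base = 0.5 * (base_vis + base_enh)

    # Step 4: reconstruction, clipped back to the valid intensity range.
    return np.clip(fused_base + fused_detail, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vis = rng.random((64, 80))
    ir = rng.random((64, 80))
    print(fuse(vis, ir, radius=7).shape)  # (64, 80)
```

The integral-image box filter costs a constant number of operations per pixel regardless of window size. This fits the abstract's emphasis on a simple mean filter and sub-0.2 s fusion times.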


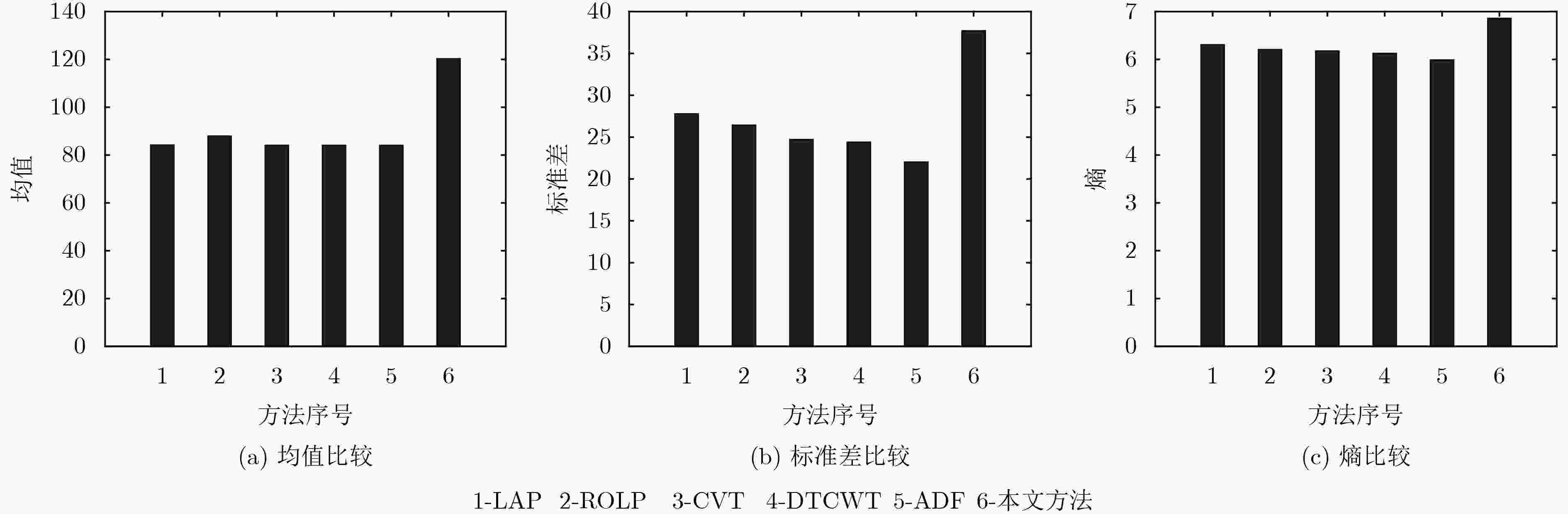

Table 1 Objective performance metrics of different fusion methods

| Image | Metric | LAP | ROLP | CVT | DTCWT | ADF | Proposed |
|---|---|---|---|---|---|---|---|
| Quad | $\hat{\mu}$ | 52.5067 | 55.5025 | 51.9005 | 51.8983 | 51.7756 | 70.1690 |
| | $\sigma$ | 31.5616 | 28.2624 | 25.1804 | 25.2682 | 21.9894 | 34.3756 |
| | $E_f$ | 6.4729 | 6.1093 | 6.1692 | 6.1586 | 6.0398 | 6.7689 |
| UNcamp | $\hat{\mu}$ | 90.8149 | 96.3052 | 91.0868 | 91.0788 | 91.1387 | 124.2739 |
| | $\sigma$ | 29.1292 | 27.7301 | 26.9391 | 26.2760 | 23.2265 | 38.3262 |
| | $E_f$ | 6.6550 | 6.5508 | 6.5310 | 6.4847 | 6.2865 | 7.2638 |
| Kaptein | $\hat{\mu}$ | 82.1788 | 86.1979 | 82.1010 | 82.0766 | 82.0353 | 122.6444 |
| | $\sigma$ | 36.2649 | 35.7918 | 34.1582 | 33.6152 | 31.6902 | 51.6181 |
| | $E_f$ | 6.7763 | 6.7911 | 6.7779 | 6.7054 | 6.6047 | 7.4176 |
| Steamboat | $\hat{\mu}$ | 110.9204 | 113.3709 | 110.9161 | 110.9148 | 110.9183 | 163.6281 |
| | $\sigma$ | 14.0743 | 13.8319 | 12.4700 | 12.3160 | 11.0786 | 26.4028 |
| | $E_f$ | 5.3071 | 5.3595 | 5.2087 | 5.1377 | 5.0049 | 5.9645 |

Table 2 Comparison of processing time (s)

| Image | Size | LAP | ROLP | CVT | DTCWT | ADF | Proposed |
|---|---|---|---|---|---|---|---|
| Quad | 496×632 | 0.0193 | 0.1931 | 1.9994 | 0.5288 | 0.9267 | 0.1681 |
| UNcamp | 270×360 | 0.0094 | 0.1076 | 1.2281 | 0.2480 | 0.3225 | 0.1021 |
| Kaptein | 450×620 | 0.0203 | 0.1919 | 1.8308 | 0.4891 | 0.8570 | 0.1341 |
| Steamboat | 510×505 | 0.0127 | 0.1771 | 1.7049 | 0.4434 | 0.8472 | 0.1192 |
| Average | | 0.0247 | 0.1674 | 1.6908 | 0.4273 | 0.7384 | 0.1309 |
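Table 1 compares the methods using three statistics of each fused image. This excerpt does not define them. The sketch below assumes the common reading of $\hat{\mu}$, $\sigma$ and $E_f$:

- $\hat{\mu}$ is the mean gray level;
- $\sigma$ is the standard deviation;
- $E_f = -\sum_{i=0}^{255} p_i \log_2 p_i$ is the information entropy, where $p_i$ is the fraction of pixels with gray level $i$.

It also assumes 8-bit grayscale input and the population standard deviation; the authors' exact conventions may differ. The function name `image_stats` is hypothetical.

```python
import numpy as np


def image_stats(img: np.ndarray) -> tuple[float, float, float]:
    """Return (mean, standard deviation, Shannon entropy in bits) of an 8-bit
    grayscale image, using a 256-bin gray-level histogram for the entropy."""
    img = np.asarray(img)
    if img.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) grayscale image")
    mean = float(img.mean())
    std = float(img.std())  # population standard deviation (ddof=0)
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute 0 * log 0 = 0 by convention
    entropy = float(-(p * np.log2(p)).sum())
    return mean, std, entropy


if __name__ == "__main__":
    half = np.zeros((4, 4), dtype=np.uint8)
    half[:, 2:] = 255
    print(image_stats(half))  # (127.5, 127.5, 1.0)
```

Under this reading, a larger $\sigma$ indicates higher contrast and a larger $E_f$ indicates more information content. The proposed method's scores in Table 1 are read in those terms.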

