Remote Sensing Image Fusion Based on Optimized Dictionary Learning


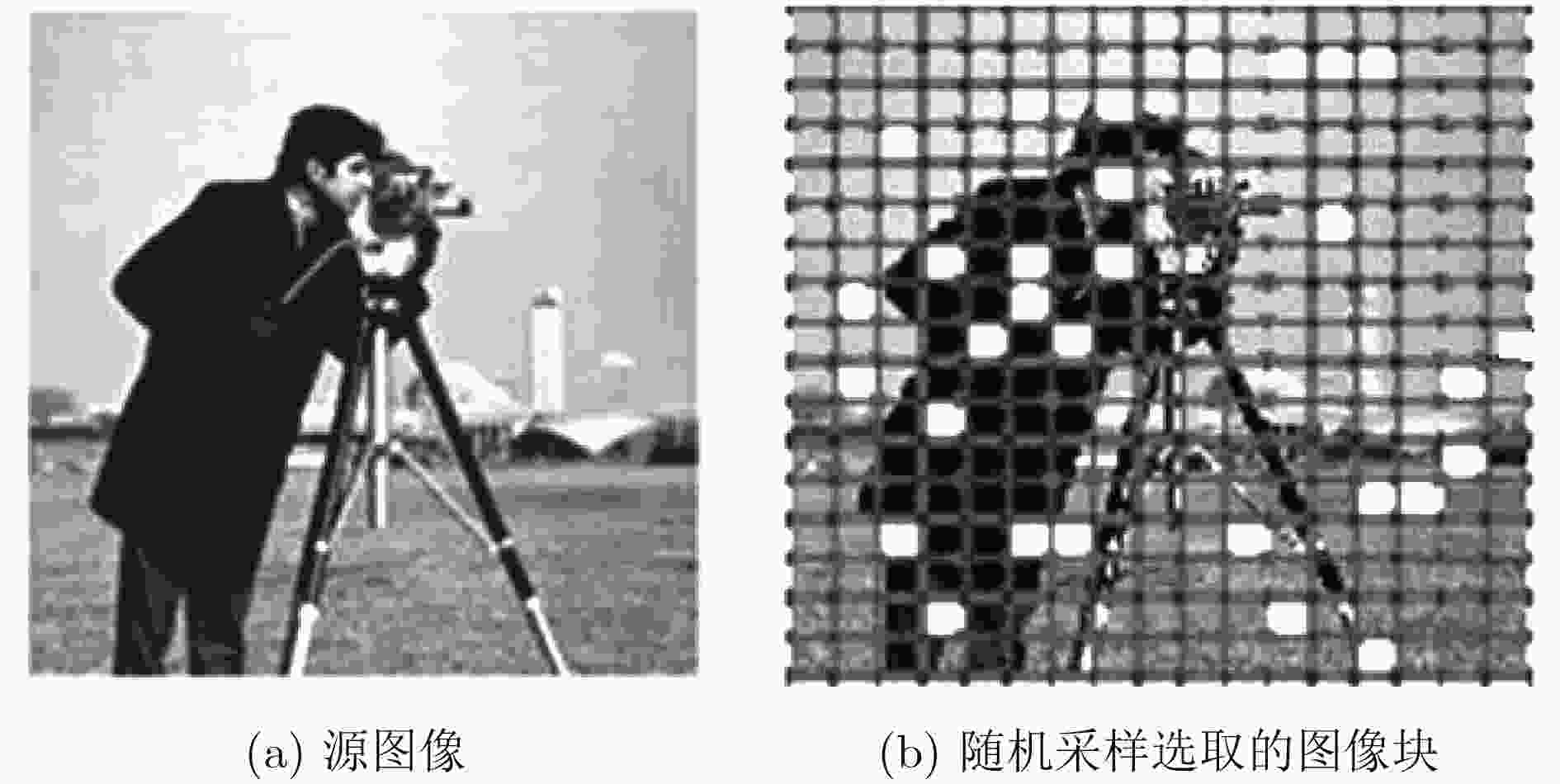

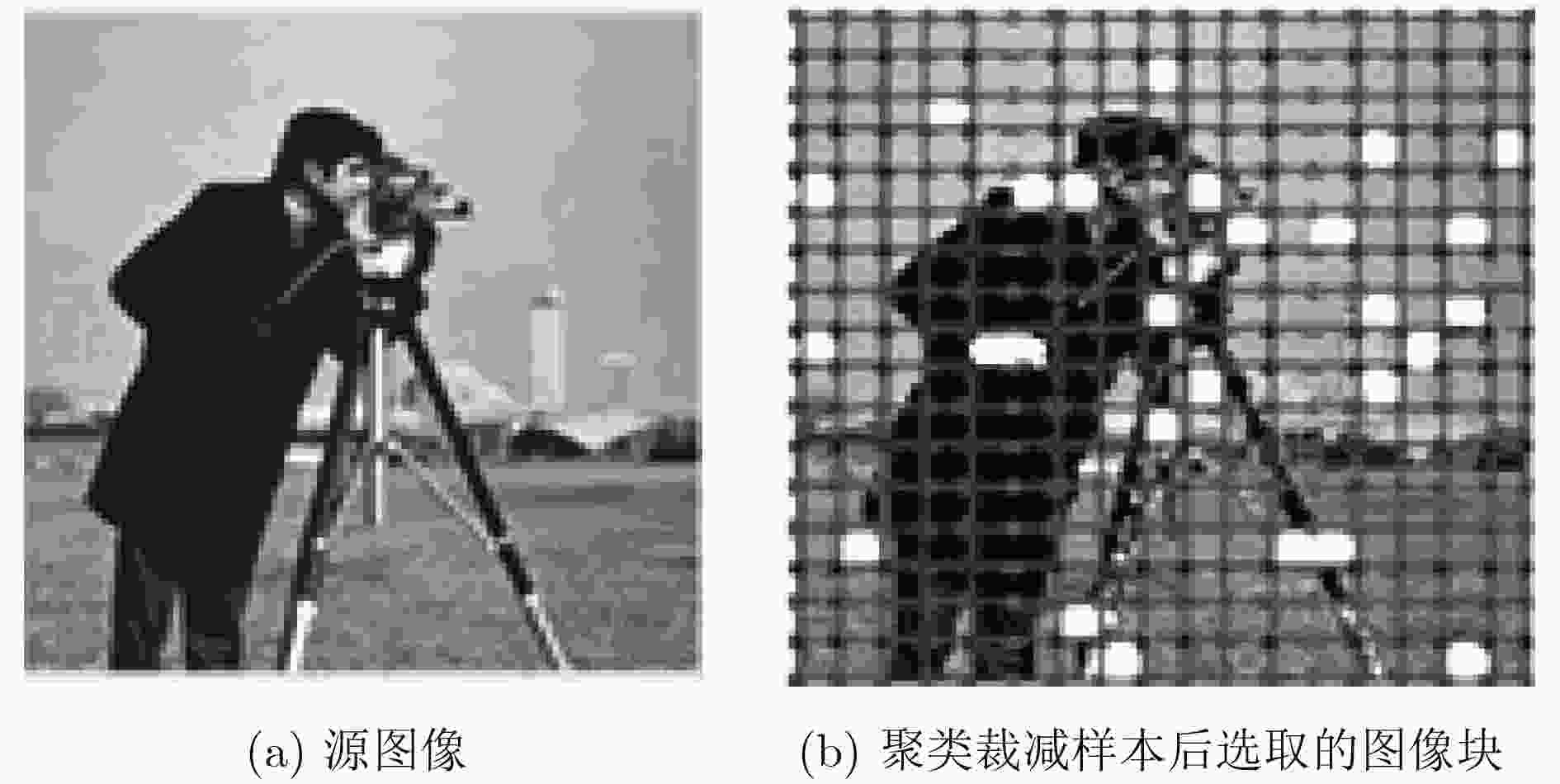

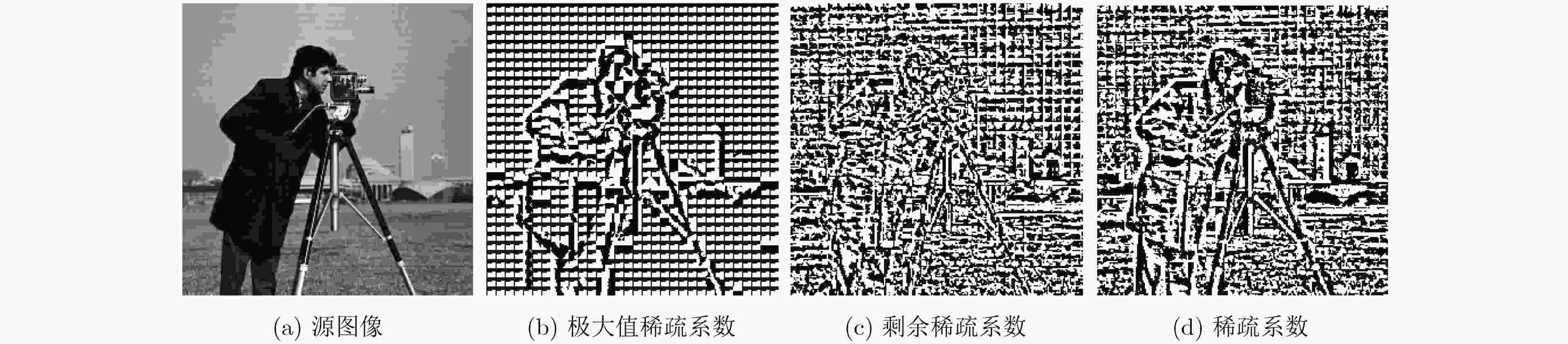

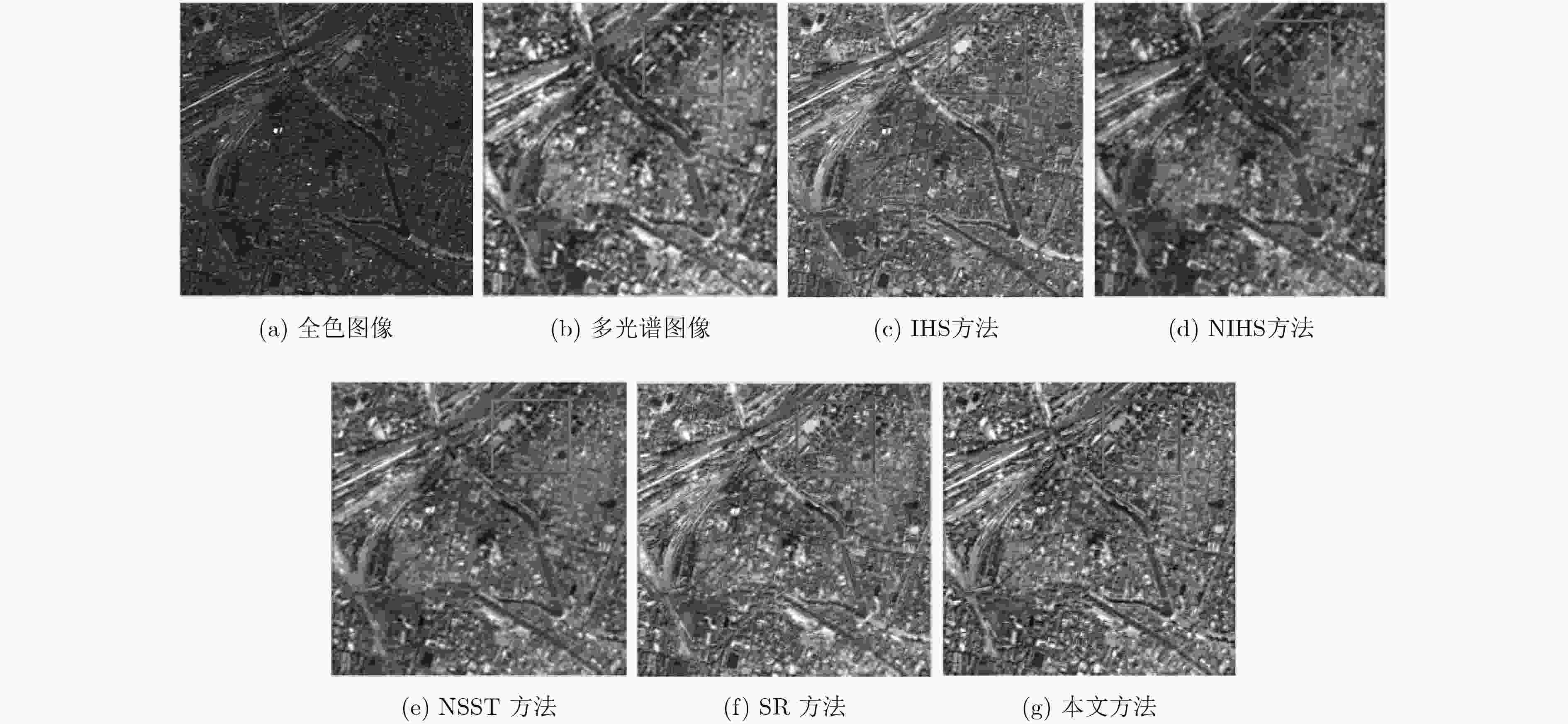

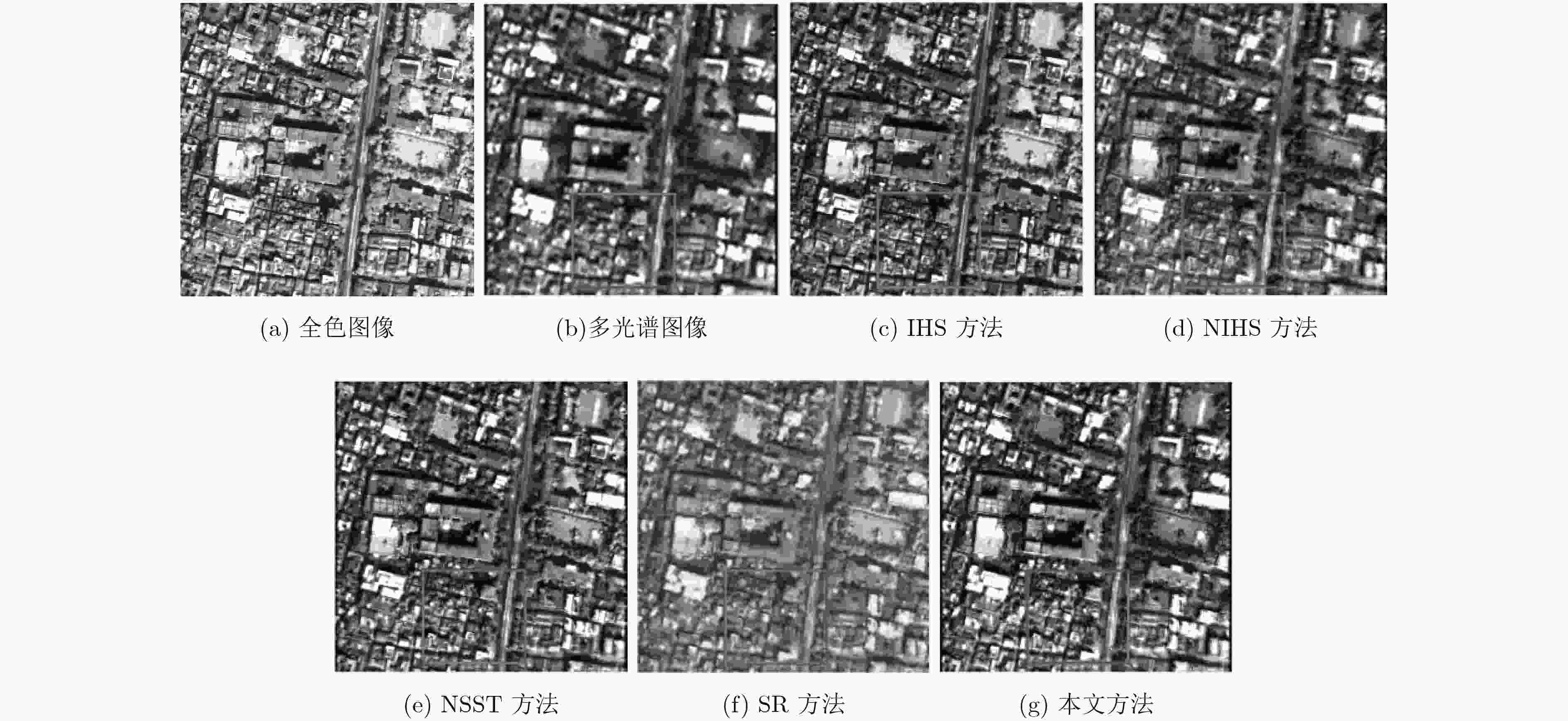

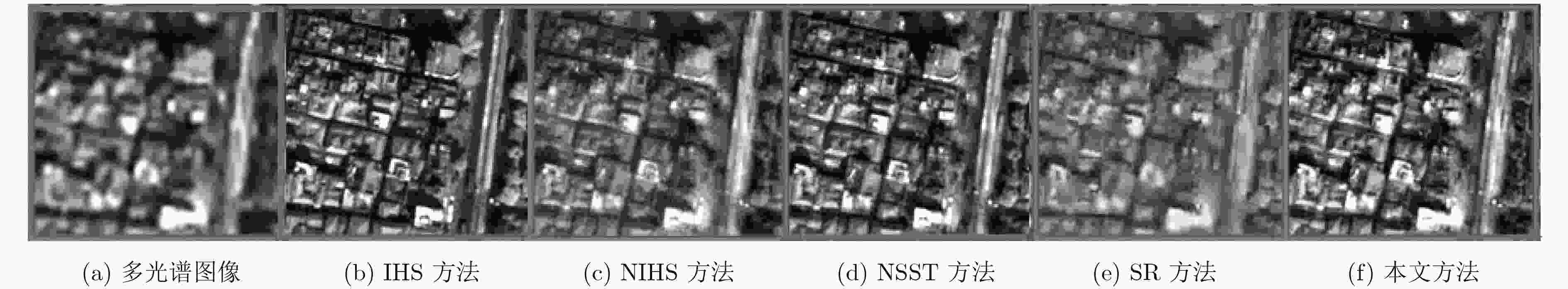

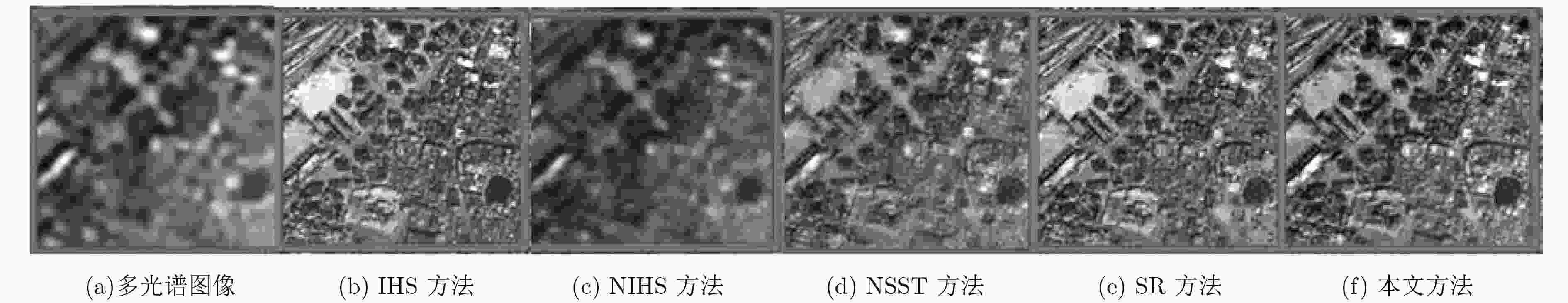

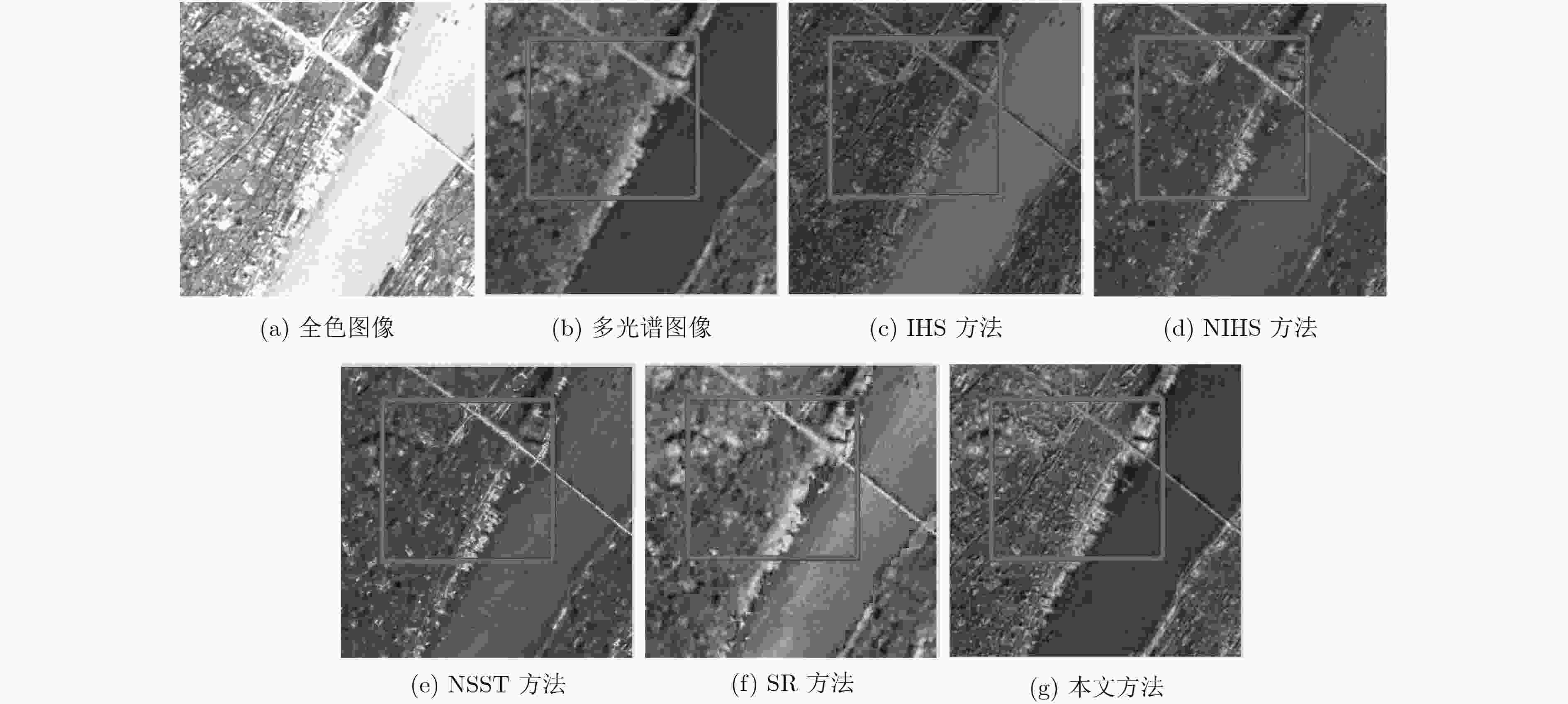

Abstract: To improve the fusion of panchromatic and multispectral images, this paper proposes a remote sensing image fusion method based on optimized dictionary learning. First, images from a classic image library are divided into blocks that serve as training samples, and K-means clustering is applied to them. Guided by the clustering result, image blocks that are numerous and highly similar are moderately pruned, which reduces the number of training samples. The pruned samples are then used to train a universal dictionary, in which the similar dictionary atoms and the rarely used dictionary atoms are marked. Next, the marked atoms are replaced by normalized panchromatic image blocks that differ most from the original sparse model, yielding an adaptive dictionary. The adaptive dictionary is used to sparsely represent both the intensity component obtained from the IHS transform of the multispectral image and the source panchromatic image. In the sparse coefficients of each image block, the modulus-maxima coefficients are separated out to form the maximal sparse coefficients, and the remaining coefficients are called the residual sparse coefficients. The two parts are fused with different fusion rules to preserve more spectral and spatial detail information, and the inverse IHS transform is finally applied to obtain the fused image. Experiments show that, compared with traditional methods, the proposed method produces fused images with better subjective visual quality and better objective evaluation indices.
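Two steps from the abstract can be sketched in a few lines of NumPy: splitting each block's sparse code into maximal and residual coefficients, and marking similar or rarely used dictionary atoms. This is a minimal illustration and not the authors' implementation. The function names, the one-maximum-per-block reading of "modulus maxima", the coherence threshold `sim_thresh`, and the usage threshold `min_uses` are all assumptions introduced here. Atoms are assumed to be non-zero columns.

```python
import numpy as np

def split_max_coefficients(coeffs):
    """Split sparse codes (atoms x blocks) into maximal and residual parts.

    Assumption: one modulus-maximum coefficient is kept per block (column).
    """
    coeffs = np.asarray(coeffs, dtype=float)
    rows = np.argmax(np.abs(coeffs), axis=0)   # row of the largest magnitude per block
    cols = np.arange(coeffs.shape[1])
    maximal = np.zeros_like(coeffs)
    maximal[rows, cols] = coeffs[rows, cols]   # keep only that single entry
    residual = coeffs - maximal                # everything else
    return maximal, residual

def mark_atoms(dictionary, coeffs, sim_thresh=0.95, min_uses=1):
    """Flag atoms that nearly duplicate an earlier atom, or that are rarely used.

    dictionary: (signal_dim x atoms); coeffs: (atoms x blocks).
    Both thresholds are hypothetical values chosen for illustration.
    """
    dictionary = np.asarray(dictionary, dtype=float)
    unit = dictionary / np.linalg.norm(dictionary, axis=0, keepdims=True)
    gram = np.abs(unit.T @ unit)               # pairwise |cosine similarity|
    upper = np.triu(gram, k=1)                 # compare each atom with earlier ones only
    similar = upper.max(axis=0) > sim_thresh
    usage = np.count_nonzero(np.asarray(coeffs), axis=1)
    rarely_used = usage < min_uses
    return similar, rarely_used
```

The flagged atoms are the candidates that the method replaces with normalized panchromatic blocks. The selection of those replacement blocks and the two fusion rules are not specified in the abstract, so they are left out of the sketch.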


Key words:

- Remote sensing image fusion

- K-means clustering

- Adaptive dictionary

- Sparse representation

- Fusion rule


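Table 1. Comparison of dictionary performance

| Dictionary | Similar atoms | Rarely used atoms | Total error |
| --- | --- | --- | --- |
| Dr | 13 | 11 | 0.614 |
| Dc | 2 | 13 | 0.024 |
| Da | 0 | 0 | 0.022 |

Table 2. Dictionary training efficiency at different sampling rates (s)

| Algorithm | 10% sampling | 30% sampling | 50% sampling | 70% sampling | 90% sampling |
| --- | --- | --- | --- | --- | --- |
| KSVD | 579.8 | 1280.5 | 1923.3 | 3235.4 | 6577.2 |
| Proposed algorithm | 1562.4 | 2281.7 | 2936.4 | 4240.6 | 7578.9 |

Table 3. Dictionary training error at different sampling rates

| Algorithm | 10% sampling | 30% sampling | 50% sampling | 70% sampling | 90% sampling |
| --- | --- | --- | --- | --- | --- |
| KSVD | 0.636 | 0.614 | 0.448 | 0.022 | 0.022 |
| Proposed algorithm | 0.036 | 0.022 | 0.021 | 0.019 | 0.018 |

Table 4. Performance comparison of fusion results for the Road source images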

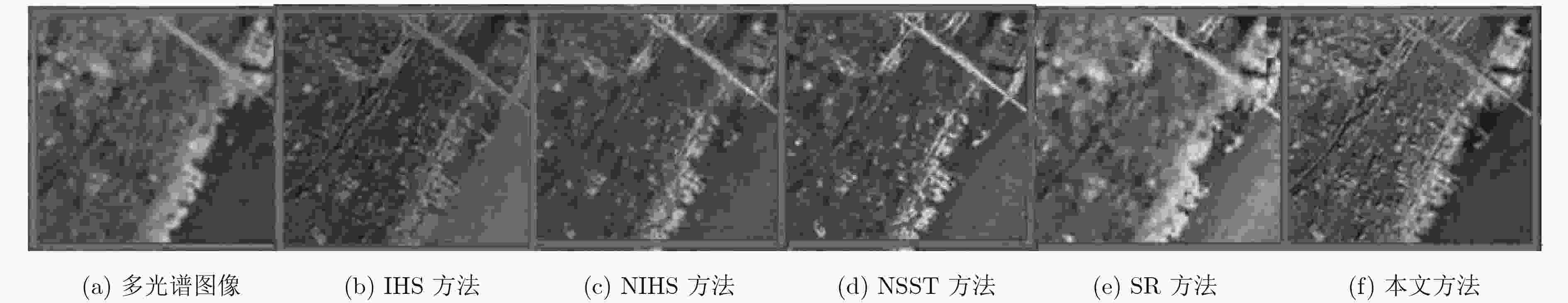

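| Fusion method | ERGAS | RASE | SAM | UIQI |
| --- | --- | --- | --- | --- |
| IHS | 9.7446 | 24.5692 | 1.1642 | 0.3469 |
| NIHS | 5.1226 | 33.7954 | 0.3466 | 0.8387 |
| NSST | 6.6566 | 16.6560 | 1.0277 | 0.7262 |
| SR | 8.2873 | 28.3787 | 1.1011 | 0.5979 |
| Proposed method | 5.0543 | 12.9461 | 0.6602 | 0.7925 |

Table 5. Performance comparison of fusion results for the City source images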

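| Fusion method | ERGAS | RASE | SAM | UIQI |
| --- | --- | --- | --- | --- |
| IHS | 13.1711 | 34.7595 | 2.7537 | 0.5705 |
| NIHS | 6.2005 | 16.9826 | 1.5020 | 0.8981 |
| NSST | 8.5364 | 23.4545 | 2.2660 | 0.8229 |
| SR | 9.1183 | 46.6352 | 1.7720 | 0.7655 |
| Proposed method | 6.0461 | 18.8073 | 1.4300 | 0.8673 |

Table 6. Performance comparison of fusion results for the River source images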

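| Fusion method | ERGAS | RASE | SAM | UIQI |
| --- | --- | --- | --- | --- |
| IHS | 8.1003 | 14.5947 | 4.9814 | 0.7037 |
| NIHS | 4.3391 | 12.7904 | 1.1056 | 0.9197 |
| NSST | 5.6170 | 17.5402 | 3.1750 | 0.8637 |
| SR | 9.3164 | 75.8740 | 4.6461 | 0.8020 |
| Proposed method | 4.2291 | 9.6131 | 2.9010 | 0.9307 |

Table 7. Performance comparison of fusion results for the Fighter source images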

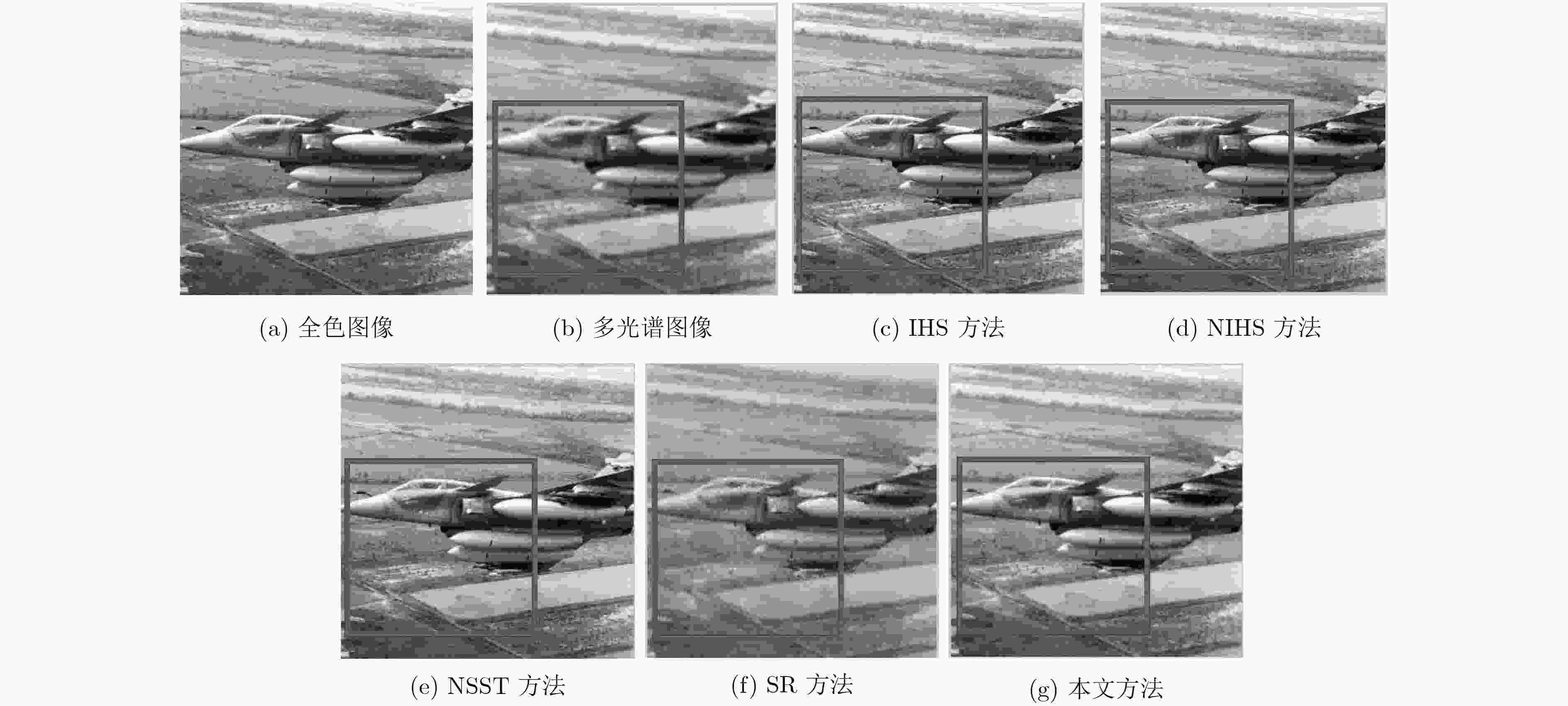

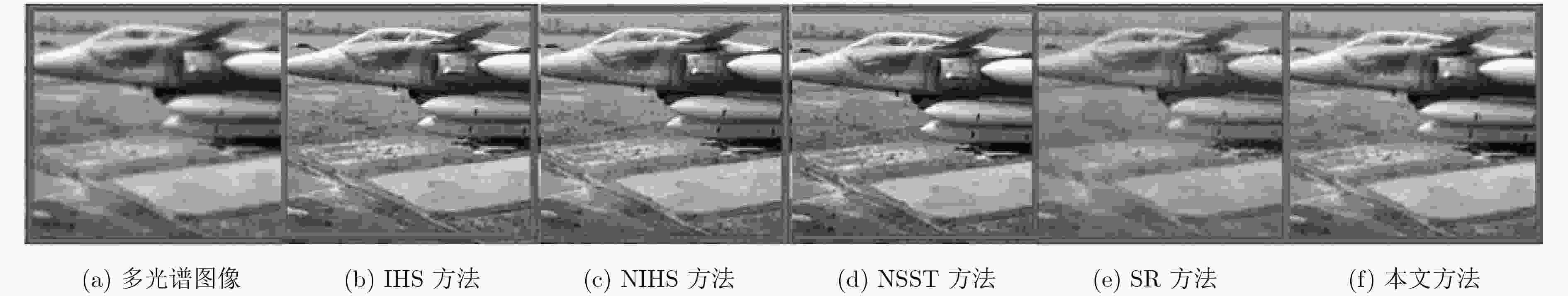

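| Fusion method | ERGAS | RASE | SAM | UIQI |
| --- | --- | --- | --- | --- |
| IHS | 1.4788 | 4.2170 | 0.2381 | 0.9830 |
| NIHS | 1.4199 | 4.0977 | 0.2392 | 0.9850 |
| NSST | 1.5954 | 6.0820 | 0.3091 | 0.9834 |
| SR | 2.3366 | 7.0861 | 0.3709 | 0.9508 |
| Proposed method | 0.9564 | 2.7291 | 0.1570 | 0.9929 |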
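For readers who want to reproduce indices of this kind, two of the four metrics can be sketched as follows, using the commonly cited definitions of SAM and ERGAS (lower is better for both). This page does not state the exact variants, units, or resolution ratio used in the experiments, so the function names, the degree unit for SAM, and the `ratio` argument are assumptions made for illustration.

```python
import numpy as np

def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle (degrees) between per-pixel spectra.

    ref, fused: arrays of shape (H, W, bands). eps guards against zero vectors.
    """
    r = np.asarray(ref, dtype=float).reshape(-1, ref.shape[-1])
    f = np.asarray(fused, dtype=float).reshape(-1, fused.shape[-1])
    cos = np.sum(r * f, axis=1) / (
        np.linalg.norm(r, axis=1) * np.linalg.norm(f, axis=1) + eps
    )
    angles = np.arccos(np.clip(cos, -1.0, 1.0))
    return float(np.degrees(angles.mean()))

def ergas(ref, fused, ratio):
    """ERGAS = 100 * ratio * sqrt(mean_k(RMSE_k^2 / mean_k^2)).

    ratio: panchromatic pixel size divided by multispectral pixel size
    (for example 0.25); the value used in the paper is not given here.
    """
    r = np.asarray(ref, dtype=float).reshape(-1, ref.shape[-1])
    f = np.asarray(fused, dtype=float).reshape(-1, fused.shape[-1])
    mse = np.mean((r - f) ** 2, axis=0)        # per-band squared RMSE
    mean_sq = np.mean(r, axis=0) ** 2          # per-band squared mean of the reference
    return float(100.0 * ratio * np.sqrt(np.mean(mse / mean_sq)))
```

Both functions compare a fused image against a reference multispectral image of the same shape. RASE and UIQI follow the same pattern but are omitted to keep the sketch short.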

