Aircraft Detection Method Based on Deep Convolutional Neural Network for Remote Sensing Images


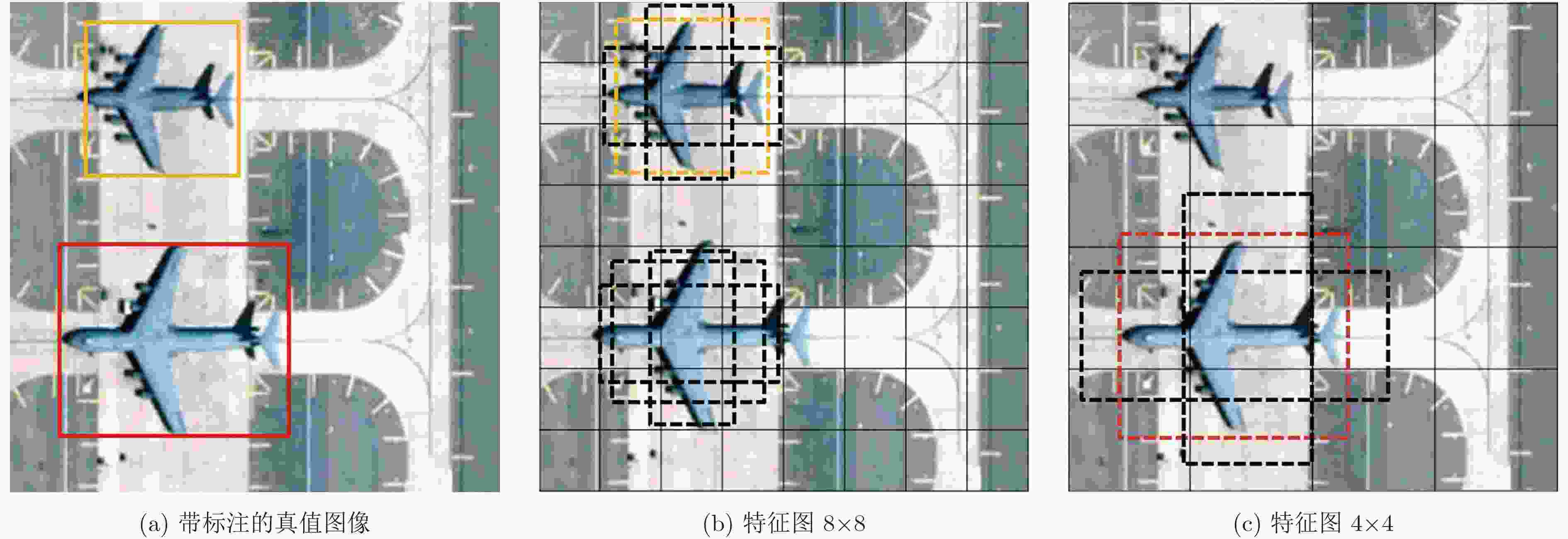

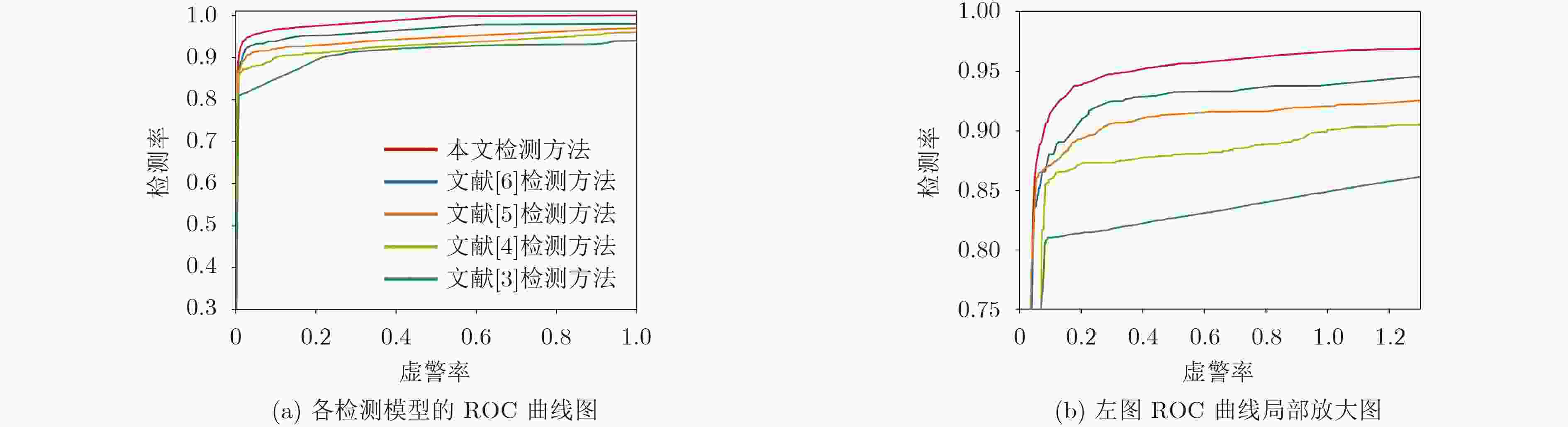

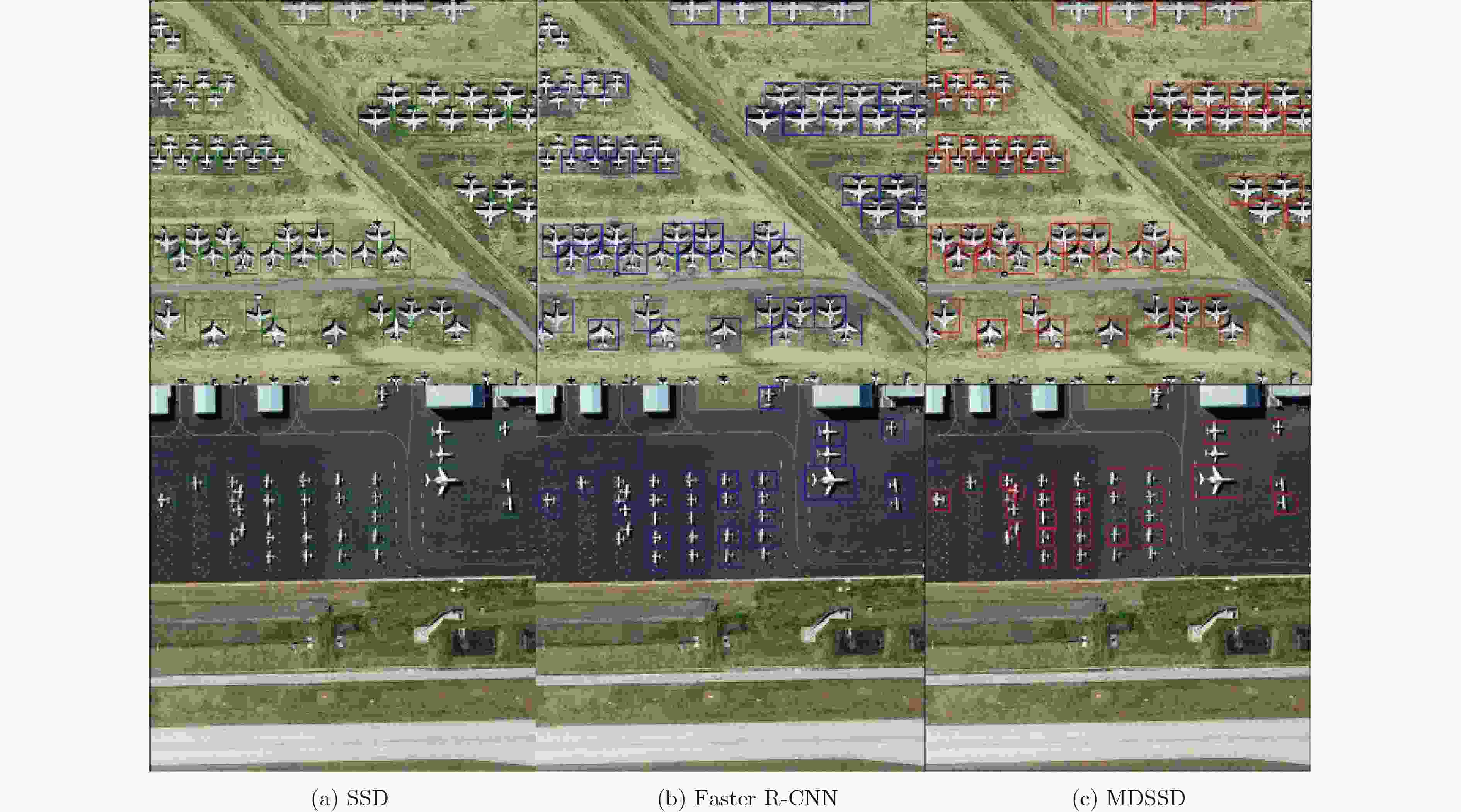

Abstract: Aircraft detection is an active research topic in remote sensing image analysis. Existing detection methods split detection into multiple stages, which makes the pipeline hard to optimize as a whole, and their accuracy is low in areas with densely packed aircraft or complex backgrounds. To address these problems, this paper proposes an end-to-end aircraft detection method named MDSSD. Built on the Single Shot MultiBox Detector (SSD) framework, it uses a Densely connected convolutional Network (DenseNet) as the base network to extract features, exploiting its strong feature extraction ability, and appends a sub-network of several convolutional layers to detect and locate aircraft. To locate aircraft of various scales more accurately, the method fuses multi-level feature information by combining predictions from feature maps of different layers, and it sets a series of default boxes with different aspect ratios to better match aircraft shapes. Because it eliminates the proposal generation stage entirely and encapsulates all computation in a single network, the method is simpler and more efficient than methods that require object proposals. Experiments show that the approach achieves high detection accuracy on remote sensing images of various complex scenes.
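The abstract describes tiling default boxes of several aspect ratios over feature maps of different layers. The sketch below shows how SSD-style default boxes could be generated for the 64×64 to 4×4 detection maps of Table 1, using the aspect ratios of Table 4. Several points are assumptions, not details confirmed by this section: that predictions come from exactly these five maps, that all four aspect-ratio sets are used, and that scales follow the linear rule of the original SSD paper with its defaults s_min = 0.2 and s_max = 0.9. The extra ratio-1 box that SSD adds at scale sqrt(s_k s_{k+1}) is omitted for brevity, and the function names are hypothetical.

```python
import math
from itertools import product

# Assumed detection feature map sizes (Dense block 3, Conv1_2 ... Conv4_2 in Table 1).
FEATURE_SIZES = [64, 32, 16, 8, 4]

# Aspect ratios (width / height) from Table 4; using all four sets is an assumption.
ASPECT_RATIOS = [1.0, 1 / 2, 2.0, 2 / 3, 3 / 2, 1 / 3, 3.0]


def layer_scales(num_layers, s_min=0.2, s_max=0.9):
    """Linearly spaced box scales per layer, following the SSD rule.
    s_min and s_max are the SSD paper defaults, assumed here."""
    if num_layers == 1:
        return [s_min]
    step = (s_max - s_min) / (num_layers - 1)
    return [s_min + step * k for k in range(num_layers)]


def default_boxes(feature_sizes=FEATURE_SIZES, ratios=ASPECT_RATIOS):
    """Return (cx, cy, w, h) default boxes in normalized [0, 1] image coordinates."""
    scales = layer_scales(len(feature_sizes))
    boxes = []
    for f, s in zip(feature_sizes, scales):
        # One set of boxes centered on every cell of the f x f feature map.
        for i, j in product(range(f), repeat=2):
            cx, cy = (j + 0.5) / f, (i + 0.5) / f
            for a in ratios:
                # w / h == a while the box area stays s * s.
                boxes.append((cx, cy, s * math.sqrt(a), s / math.sqrt(a)))
    return boxes


if __name__ == "__main__":
    boxes = default_boxes()
    print(len(boxes))  # 38192 = (64^2 + 32^2 + 16^2 + 8^2 + 4^2) * 7
```

Small maps with few cells get large boxes and large maps get small boxes, which is how a single network covers aircraft whose size ranges from roughly 10 m to roughly 90 m (Table 2).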


Table 1 Network parameters of the MDSSD detection model

| Layer | Parameters | Output feature map size |
|---|---|---|
| Convolution | $7 \times 7\;{\rm{conv}}$, stride 2 | $256 \times 256$ |
| Dense block 1 | $\left[ {1 \times 1\;{\rm{conv}},3 \times 3\;{\rm{conv}}} \right] \times 9$ | $256 \times 256$ |
| Transition layer 1 | $1 \times 1\;{\rm{conv}}$ | $256 \times 256$ |
| | $2 \times 2$ average pooling, stride 2 | $128 \times 128$ |
| Dense block 2 | $\left[ {1 \times 1\;{\rm{conv}},3 \times 3\;{\rm{conv}}} \right] \times 9$ | $128 \times 128$ |
| Transition layer 2 | $1 \times 1\;{\rm{conv}}$ | $128 \times 128$ |
| | $2 \times 2$ average pooling, stride 2 | $64 \times 64$ |
| Dense block 3 | $\left[ {1 \times 1\;{\rm{conv}},3 \times 3\;{\rm{conv}}} \right] \times 9$ | $64 \times 64$ |
| Convolution layer Conv1_1 | $1 \times 1\;{\rm{conv}}$ | $64 \times 64$ |
| Convolution layer Conv1_2 | $3 \times 3\;{\rm{conv}}$, stride 2 | $32 \times 32$ |
| Convolution layer Conv2_1 | $1 \times 1\;{\rm{conv}}$ | $32 \times 32$ |
| Convolution layer Conv2_2 | $3 \times 3\;{\rm{conv}}$, stride 2 | $16 \times 16$ |
| Convolution layer Conv3_1 | $1 \times 1\;{\rm{conv}}$ | $16 \times 16$ |
| Convolution layer Conv3_2 | $3 \times 3\;{\rm{conv}}$, stride 2 | $8 \times 8$ |
| Convolution layer Conv4_1 | $1 \times 1\;{\rm{conv}}$ | $8 \times 8$ |
| Convolution layer Conv4_2 | $3 \times 3\;{\rm{conv}}$, stride 2 | $4 \times 4$ |

Table 2 Parameters of common aircraft types

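| Aircraft type | Length (m) | Wingspan (m) | Length/wingspan range (m) |
|---|---|---|---|
| F-16 | 15.09 | 9.45 | 10–20 |
| MV-22 | 17.50 | 14.00 | 10–20 |
| AH-64 | 17.76 | 14.63 | 10–20 |
| F-22 | 18.90 | 13.56 | 10–20 |
| C-130H | 21.00 | 30.00 | 20–40 |
| P-3C | 35.57 | 30.36 | 20–40 |
| KC-135 | 41.51 | 39.87 | 40–60 |
| B-1B | 44.50 | 24.00 | 40–60 |
| B-52H | 49.05 | 56.40 | 40–60 |
| C-17 | 53.29 | 50.29 | 40–60 |
| C-5 | 75.54 | 67.88 | 60–80 |
| BOEING747-8 | 76.40 | 68.50 | 60–80 |
| Antonov An 225 | 84.00 | 88.40 | 80–100 |

Table 3 Effect of the sizes of the fused feature layers on detection performance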

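| $64 \times 64$ | $32 \times 32$ | $16 \times 16$ | $8 \times 8$ | $4 \times 4$ | $2 \times 2$ | AP (%) |
|---|---|---|---|---|---|---|
| √ | √ | √ | √ | √ | √ | 92.07 |
| √ | √ | √ | √ | √ | | 92.07 |
| √ | √ | √ | √ | | | 87.42 |
| √ | √ | √ | | | | 80.71 |

Table 4 Effect of the aspect ratios of default boxes on detection performance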

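| $\left\{ 1 \right\}$ | $\left\{ {\displaystyle\frac{1}{2},2} \right\}$ | $\left\{ {\displaystyle\frac{2}{3},\frac{3}{2}} \right\}$ | $\left\{ {\displaystyle\frac{1}{3},3} \right\}$ | AP (%) |
|---|---|---|---|---|
| √ | √ | √ | √ | 92.07 |
| √ | √ | √ | | 92.07 |
| √ | √ | | | 90.47 |
| √ | | | | 86.53 |

Table 5 Performance comparison between MDSSD and detection models from the remote sensing field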

Table 6 Performance comparison of three object detection models

| Detection model | SSD | Faster R-CNN | MDSSD |
|---|---|---|---|
| AP (%) | 87.64 | 89.73 | 92.07 |
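Tables 3 to 6 report detection accuracy as AP (%). This section does not spell out the evaluation protocol, so the sketch below assumes the PASCAL VOC convention: detections are ranked by confidence, each has already been labeled a true or false positive by IoU matching against the ground-truth aircraft (IoU ≥ 0.5 in VOC), and AP is the area under the monotone precision envelope with all-point interpolation. The function name and input format are hypothetical.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP, PASCAL VOC style (assumed protocol).

    detections: (confidence, is_true_positive) pairs; the IoU matching that
                decides is_true_positive is assumed to be done beforehand.
    num_gt:     total number of ground-truth aircraft.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    # Precision and recall after each ranked detection.
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Precision envelope: make precision non-increasing with recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Sum envelope precision over each recall increment (false positives add 0).
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

For example, `average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)` returns 5/6 ≈ 0.833: the first hit adds recall 0.5 at precision 1, and the second adds recall 0.5 at envelope precision 2/3. The older VOC 2007 11-point interpolation would give a slightly different value for the same detections.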

